Abstract

The assessment of loess slope stability is a highly complex nonlinear problem. There are many factors that influence the stability of loess slopes. Some of them have the characteristic of uncertainty. Meanwhile, the relationship between different factors may be complicated. The existence of multiple correlation will affect the objectivity of stability analysis and prevent the model from making correct judgments. In this paper, the main factors affecting the stability of loess slopes are analyzed by means of the partial least-squares regression (PLSR). After that, two new synthesis variables with better interpretation to the dependent variables are extracted. By this way, the multicollinearity among variables is overcome preferably. Moreover, the BP neural network is further used to determine the nonlinear relationship between the new components and the slope safety factor. Then, a new improved BP model based on the partial least-squares regression, which is initialized by the particle swarm optimization (PSO) algorithm, is developed, i.e., the PLSR-BP model. The network with global convergence capability is simplified and more efficient. The test results of the model show satisfactory precision, which indicates that the model is feasible and effective for stability evaluation of loess slopes.

Similar content being viewed by others

Introduction

The slope stability analysis can not only provide basis for economical and reasonable slope design, but also help to make judgments about the stability state and evolution trend of no matter artificial or natural slopes, prevent the potential risks and guide the slope treatment. Due to the economic development and population expansion, the safety and security of the transport networks and residential areas may be threatened by the potential slope instabilities in many countries. Slope instabilities are complex natural hazards that may result in disastrous consequences1. Therefore, the slope stability assessment is a critical research area in civil engineering2. In order to ensure the safety of economic construction and prevent the potential economic losses and casualties, slope stability analyses are required, and appropriate assessment methods are of practical need. Currently, the expert evaluation, analytical methods and machine learning are three common methods employed for the slope stability analysis3,4. The first method is mainly used to analyze the reasons and developing processes of slope deformation according to the expertise and engineering geological survey. The essence is to apply the previous practical experience into the similar slope engineering projects5. Based on the experts’ experiences and knowledge, the relative factors which may trigger the slope collapses can be identified and the safety and stability of a slope can be evaluated. However, the major disadvantage of the expert evaluation techniques is the subjectivity and the decisions may contain the bias of researchers6. The analytical methods are mainly used to analyze the slope system characteristics by establishing appropriate mathematical models. Based on this approach, the dangerous sliding surface and safety factor can be identified. However, it is actually difficult to determine the calculation parameters accurately, which may lead to misleading results. In fact, this kind of methods are only appropriate for evaluating slope stability in small areas7. Recently, based on the intelligent statistical learning theory, machine learning has been introduced into the slope collapse prediction. The machine learning models are generally established on the basis of the artificial intelligence techniques and historical data8. Yan and Li9 built a prediction model to evaluate the stability of an open pit slope based on the Bayes discriminant analysis (BDA). Samui and Kothari10 applied the least squares support vector machine (LSSVM) to explore the mapping function between the input pattern and the safety factor of slopes. Zhao et al.11 developed the nonlinear relationship between the slope stability and influence factors using the relevance vector machine (RVM). Wang et al.12 constructed a method to evaluate the stability of complex slope systems based on the projection pursuit algorithm. Liu et al.13 applied the improved particle swarm optimization (PSO) algorithm to analyze some critical factors affecting saturated rock slope slip in numerical simulation. Himanshu et al.14 used the unified particle swarm optimization (UPSO) to assess optimum location of non-circular failure surface in soil slope. And Moayedi et al.15 compared the feasibility of the artificial neural network (ANN), adaptive neuro-fuzzy inference system (ANFIS), and hybrid particle swarm optimization (HPSO) for assessing the safety factor of cohesive slopes.

In terms of loess slope stability evaluation, many internal and external factors should be considered. However, some of them show the obvious features of fuzziness, randomness and variability. Simultaneously, there is a complex nonlinear relationship between the evaluation indices and the influencing factors, which cannot be described by simple mathematical formula. Therefore, the stability assessment of loess slopes is a dynamic, nonlinear, uncertain and systematic problem. Besides, the correlation may exist between different parameters of geomaterials. Actually, it is impossible to take the effects of all the influencing factors into account fully and properly. However, a multi-variable system containing some main factors will be affected by the overlapping information inevitably because the multicollinearity among variables will exaggerate certain characteristics of the analysis system, which will definitely affect the objectivity and chock the decision process16.

The artificial neural network (ANN) has been successfully used to solve the slope stability problems by many scholars17,18,19,20 in recent years. Among them, the back propagation (BP) neural network is a kind of widely used neural networks. BP neural network shows good performance in knowledge learning, experience storage, computational efficiency and fault tolerance. It has the ability to extract features and acquire knowledge from the dynamic uncertain multi-factor systems, and also to approximate any complex nonlinear functional relationship. Meanwhile, in consideration of the explicit error back-propagation strategy and strict weight correction procedure from a mathematical point of view, it is reasonable to evaluate the slope stability with the BP neural network. Partial least-squares regression (PLSR) is a new multivariate statistical data-analysis method which can realize the multiple linear regression, canonical correlation analysis and principal component analysis. Through extracting the representative synthesis variables of a given system, PLSR can reduce the dimension of the independent variable system, simplify the network structure and improve the modeling efficiency. Meanwhile, the adverse effects of the variable multicollinearity can be overcome in this way. Moreover, many evolutionary algorithms were proposed for global optimization and employed to improve the performance of other algorithms, such as the dragonfly algorithm21, multi verse optimizer22, robust optimization23 and cooperative meta-heuristic algorithm24. As a population-based global optimization technique, the particle swarm optimization (PSO) has arisen extensive attention from the optimization community because of the simple structure, clear parameter meaning, high convergence rate and little manual intervention. Hence, PSO is adopted to solve the local convergence problem of BP neural network using its excellent global optimization capability, which means the satisfactory global optimal solution can be obtained with a relatively high speed. In this study, by taking advantage of the partial least-squares regression, particle swarm optimization and BP neural network, the PLSR-BP model for loess slope stability assessment is established. And the test results of the model show satisfactory precision and performance.

Partial least-squares regression

The partial least-squares regression (PLSR)25, as the second-generation regression analysis method, was developed for the global data treatment. PLSR can be employed to find the hidden structure of the dataset and extract the meaningful information based on the dimensional reduction of data and inverse calibration technology26. Through PLSR, several new representative synthesis variables can be extracted from the variable system by removing the redundant information. By this way, the adverse effects of multicollinearity among variables on the accuracy and reliability of modeling can be overcome effectively27.

Extract principal components using PLSR

Hypothetically, there are p independent variables r = {x1,x2,…,xp} and q dependent variables s = {y1,y2,…,yq} (where p and q are two positive integers). There are n groups of independent and dependent variable data, respectively (X=\({[{{\varvec{r}}}_{1},{{\varvec{r}}}_{2},\cdots ,{{\varvec{r}}}_{{\varvec{n}}}]}_{{\varvec{n}}\times {\varvec{p}}}^{{\varvec{T}}}\) and Y = \({[{{\varvec{s}}}_{1},{{\varvec{s}}}_{2},\cdots ,{{\varvec{s}}}_{{\varvec{n}}}]}_{{\varvec{n}}\times {\varvec{p}}}^{{\varvec{T}}}\)). n is the number of the selected samples. t1 and u1 are extracted from X and Y, respectively. Namely, t1 is a linear combination of x1, x2,…, xp and u1 is a linear combination of y1, y2, …, yq. Meanwhile, the following requirements must be met: (1) t1 and u1 should carry the variation information of their own data table as much as possible and (2) the correlation degree between t1 and u1 should be highest. After extracting the first principal components (t1 and u1), the partial least-squares regression will be carried out further to get the regression relations of X and t1 as well as Y and u1. The algorithm will be terminated when the precision of the regression equation is considered to be satisfactory by testing. Otherwise, the residual information of X and Y after being explained with t1 and u1 will be further used to extract the second components (t2 and u2). This process repeats until a satisfactory precision is reached.

Determine principal components using the cross validation (CV)

The principal components of a variable system can be determined by judging whether the prediction ability of the model will be improved significantly when adding the components. The cross-validation method is usually used for the determination of the principal components.

Hypothetically, yj represents the sample data and t1, t2, …, tA are the principal components extracted by PLSR. \({\widehat{y}}_{hji}\) is the fitted value of yj at the ith sample point using the regression model established with h principal components (t1, t2, …, th). These principal components are extracted using all the sample points. \({\widehat{y}}_{hj(-i)}\) is the fitted value of yj at the ith sample point using the regression model established with h principal components (t’1, t’2, …, t’h). These principal components are extracted using all the sample points except the ith sample point. Moreover, the sum of squared errors sshj of yj and the sum of squared prediction errors presshj of yj are defined as follows28:

Furthermore, the sum of squared errors ssh of Y which is described by h principal components extracted from all the sample points and the sum of squared prediction errors pressh of Y are defined as follows28:

In general, pressh > ssh and ssh < ssh−1. ssh−1 is the sum of squared errors of Y which is described by h−1 principal components extracted from all the sample points. Compared with ssh−1, pressh reflects not only the role of the principal component th, but also the disturbance error of the sample data. Hence, it is always expected that the value of pressh could be smaller than ssh−1 to a certain extent (i.e., the value of pressh/ssh−1 is considered to be the smaller the better). Thus, the cross validation of the principal component th can be defined as28:

Typically, when \({Q}_{h}^{2}\ge \) 0.0975, the addition of the principal component th will benefit the system; otherwise, there is no need to add the principal component th.

Extraction algorithm of PLSR

The extraction algorithm of PLSR can be summarized as follows:

(1) Complete the standardization process.

The standardization formula is shown as follows28:

where \(\widehat{{z}_{ij}}\) is the standardized value, zij is the real value, and \(\overline{{z }_{j}}\) and \({sd}_{j}\) are the arithmetic mean and standard deviation of the data in the jth column of the data matrix, respectively.

According to Eq. (6), the standardized data matrices of X and Y can be obtained and expressed as E0 = [E01,E02,…,E0p]n×p and F0 = [F01,F02,…,F0q]n×q. Hypothetically, t1 and u1 are the first principal components of E0 and F0, respectively.

(2) Extract the principal components.

Calculate the unit eigenvector w1 corresponding to the largest eigenvalue of the covariance matrix \(E_{0}^{T} F_{0} F_{0}^{T} E_{0}\). Note that w1 is the first axis of E0 and as a unit eigenvector, i.e., ‖w1‖ = 1. Simultaneously, calculate the unit eigenvector c1 corresponding to the largest eigenvalue of the covariance matrix \(F_{0}^{T} E_{0} E_{0}^{T} F_{0}\). Note that c1 is the first axis of F0 and as a unit eigenvector, i.e., ‖c1‖ = 1. After determining the vectors w1 and c1, the first principal components can be obtained as t1 = E0w1 and u1 = F0c1. After that, the two regression equations of E0 and F0 about t1 can be determined, respectively28.

where the regression coefficient vectors are as follows28:

(3) Test the cross validation.

If \({Q}_{h}^{2}\ge \) 0.0975, it means that the next principal components should be extracted. After replacing the residuals matrices E0 and F0 with E1 and F1, the second principal components t2 and u2 can be calculated in the same way. This process will repeat until \({Q}_{h}^{2}<\) 0.0975. If the rank of X is A, we have the following equations28:

BP neural network

Artificial neural network29 can be seen as a connected parallel architecture consisting of several layers of neurons. For ANN, the knowledge can be gained from the sample sets and be represented as ‘weights’ and ‘thresholds’ in the connections of the neural network. Through the weight and threshold matrices, the influence of the input variables on the output variables can be determined. On the other hand, the appropriate mathematical methods can be chosen to adjust the weights and thresholds to realize specific functions. The BP neural network is used in this study because of its reliability and applicability. According to the randomly initialized weight and threshold matrices, the error between the network output and the target values can be calculated. Then, based on a weight correction procedure, the error is propagated backward and used to update the weight and threshold matrices of the previous layers using the back-propagation algorithm30. In this way, the mapping function between the system input variables and output variables can be modelled by the BP neural network step by step.

The architecture of a standard BP neural network is shown in Fig. 1. Generally, it has one input layer composed of neurons corresponding to the input variables, no less than one hidden layer and one output layer composed of neurons corresponding to the output variables.

Typical architecture of BP neural network (MS Office 2019; www.microsoft.com/en-us/microsoft-365/get-started-with-office-2019).

In this study, the number of neurons (m) in the input layer is the same as the number of physical–mechanical parameters to be considered, and the number of neurons (n) in the output layer is the same as the number of evaluation indices. The evaluation index of the slope stability is the safety factor in this paper. Hence, the number n is 1. The number of neurons (p) in a hidden layer can be specified either manually or by an optimization method30. The training samples are used to update the weight and threshold matrices by making the summed squared error between the safety factor values and the output of the BP network a minimum using the back propagation algorithm.

The computing process of a three-layer BP neural network is shown in Fig. 2. W1 and b1 are the weight and threshold matrices between the input and hidden layers, respectively; W2 and b2 are the weight and threshold matrices between the hidden and output layers, respectively; f1 and f2 are the transfer functions between two adjacent layers. Tan-Sigmoid transfer function (tansig), Log-Sigmoid transfer function (logsig) and linear transfer function (purelin) are the three common transfer functions for multilayer artificial neural networks.

Flow chart of BP neural network (MS Office 2019; www.microsoft.com/en-us/microsoft-365/get-started-with-office-2019).

However, the summed squared error between the target values and the output values of the BP network depends on the randomly initialized weight and threshold matrices. The limitations such as slow convergence, local convergence and poor generalization ability hamper the performance of ANN seriously31. Therefore, an appropriate optimization algorithm with global optimization capability is necessary for the initial assignment of the weights and thresholds of BP neural network.

Particle swarm optimization

The particle swarm optimization algorithm (PSO) was proposed by Eberhart and Kennedy32. As a new intelligent swarm optimization algorithm, PSO is an important branch of the evolutionary algorithms. The velocity-position model is applied in PSO. The position of each particle represents a candidate solution in the solution space. The quality of the particle solution is measured by the previously defined fitness function.

The position and velocity of Particle i in the n-dimensional space can be set as oi = {oi1,oi2,…,oin} and vi = {vi1,vi2,…,vin}. PSO searches the optimal solution through iterating. Firstly, a group of particles are initialized randomly in the n-dimensional space. The velocity decides the displacement of a particle during one iteration in the solution space. Then, the particle velocity and position are adjusted dynamically according to the individual extremum pi = {pi1,pi2,…,pin} and global extremum g = {g1,g2,…,gn} using the following formulae32:

where w is the inertia weight coefficient, c1 and c2 are the learning factors, r1 and r2 are the random numbers between (0, 1), \({v}_{ij}^{k}\) and \({o}_{ij}^{k}\) are the jth components of the velocity vector and position vector of Particle i in the kth epoch, where j = 1,2,…,n (n is the dimension of the solution space). The first item of the velocity-updating formula reflects the inheritance of the previous velocity, which makes particles maintain inertial motion; the second item is usually called the cognition term. This item is only related to the particle's own experience and reflects the thinking on behalf of itself; the third item is usually called the social item, which reflects the information sharing and cooperation between particles. By learning from itself and other particles, the particle is targeted to obtain more effective information from its ancestors. This process ensures that the optimal solution can be obtained in a short time33.

The PLSR-BP model for loess slope stability assessment

The stability of loess slopes depends on a lot of internal and external factors involving the soil properties, structure characteristics, groundwater, climate change, weathering effects, seismicity, human activities and so on. The key of assessing the loess slope stability appropriately is to select the influence factors correctly. However, there are no widely accepted theories guiding the selection so far. A common approach is to analyze the practical conditions as well as refer to the experience of the geological experts and engineers. On the basis of analyzing the previous study34 and data availability comprehensively, seven parameters are determined as the independent variables affecting the loess slope stability in this study, i.e., density γ (x1), cohesion c (x2), internal friction angle ϕ (x3), slope height h (x4), slope ratio s (x5), pore water pressure coefficient γu (x6) and seismic intensity q (x7). Because the main means analyzing the slope stability quantitatively is to calculate the safety factor, the safety factor y1 is selected as the dependent variable. The 23 representative loess slopes in Northwest China described in the literature34 are selected as the analysis samples, as shown in Table 1.

Correlation between independent variables

The correlation analysis is carried out on MATLAB platform and the correlation coefficients between different independent variables are shown in Table 2.

It can be seen in Table 2 that the multicollinearity exists among independent variables. Especially, the linear correlations between some variables, such as x1, x2, x3, and x4 are significant. Thus, it is necessary to overcome the multicollinearity by extracting principal components using the partial least-squares regression.

Extract principal components by PLSR

The partial least-squares regression is implemented for the system y1 = f(x1, x2, x3, x4, x5, x6, x7) on MATLAB platform. The cross validation shown in Table 3 demonstrates that the first two principal components t1 and t2 are acceptable. The mathematical expression is shown as follows:

where \({[\widehat{{x}_{1}}\widehat{{,x}_{2},}\widehat{{x}_{3},}\widehat{{x}_{4},}\widehat{{x}_{5,}}\widehat{{x}_{6}},\widehat{{x}_{7}}]}^{T}\) is the standardized matrix of [x1, x2, x3, x4, x5, x6, x7]T.

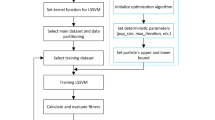

PLSR-BP model based on the particle swarm optimization algorithm

According to the cross-validation testing, a stability assessment model with good performance can be obtained by only choosing the first two principal components t1 and t2. Actually, if the following principal components could not offer more meaningful information for explaining the variable system Y, choosing too many principal components may mislead the understanding about the statistical trend and result in incorrect prediction conclusions.

In this study, the three-layer BP neural network is applied. The two principal components t1 and t2 are treated as the input of the neural network, and \(\widehat{Y}\) (the standardized value of Y) is treated as the output of the neural network, i.e., there are two neurons at the input layer and one neuron at the output layer. It has been proved that a three-layer BP neural network with M neurons at the input layer, 2M + 1 neurons at the hidden layer and N neurons at the hidden layer can express any continuous function accurately35. Hence, the structure of the neural network is set to be 2–5–1. Simultaneously, the logsig function is applied as the transfer function of the hidden layer and the purelin function is applied as the transfer function of the output layer.

Based on MATLAB platform, the neural network is established by learning knowledge from the 23 groups of sample data listed in Table 1. The initial weights and thresholds are optimized using PSO. Through trial calculation, the parameters of PSO are set as: the total number of particles n = 23, particle dimension d = 21, inertia weight coefficient w decreasing from 1.15 to 0.45 linearly, learning factors c1 = 2.2 & c2 = 2.0 and maximum evolution number m = 200. At the end of evolution, the mean square error (MSE) of the network drops to 0.0787. Then, the neural network is initialized with the optimal particle position and trained with the Levenberg-Marquard algorithm. During this process, the training target is 1 × 10–5, maximum iteration number is 1000, learning rate is 0.05, and display interval is 200. At the end of training, the final MSE of the neural network is 9.9667 × 10–5. The training process and results are displayed in Figs. 3 and 4. Figure 3 shows that the MSE gradually drops from around 0.8 × 10–2 to around 1 × 10–4 after 400 iterations and reaches 9.9667 × 10–5 after 1000 iterations, which indicates that the convergence is steady and fast. The total runtime is 30.50893 s on the computer with a i7-10510U CPU and 16 GB RAM. The time complexity of the code is O(m), in which m is the maximum evolution number of PSO. From Fig. 4, we can see that the simulated results of the trained BP neural network almost coincide with the real values, which indicates that the established analysis model can precisely describe the complex nonlinear relationship between the influencing factors and the safety factor and successfully capture the main features of the loess slope stability evaluation system.

Then, four new loess slope samples are used to verify the precision of the established neural network model and the related parameter values are listed in Table 4. Meanwhile, Table 5 shows the comparison of the calculated safety factors by the proposed PLSR-BP model, the PSO-BP model without the partial least-squares regression analysis and the traditional BP neural network. From the evaluation results shown in Table 5, it can be seen that the forecasting model has been significantly improved by extracting the new synthesis variables and overcoming the multicollinearity among variables, and the performance of the established PLSR-BP model is obviously superior to the other two models. Moreover, the maximum absolute error of the output safety factor of the PLSR-BP model is less than 0.040 and the relative error never exceeds 5.0%. The high precision further indicates that the established PLSR-BP model based on the partial least-squares regression is feasible and reliable. Simultaneously, from Table 5, we can see that the relative error of Sample 2 predicted by the PLSR-BP model is largest among all the test samples. That is because the real safety factor of this slope is only 0.790 which is a value lower than 95% of the training samples. Namely, such a low safety factor is not common, and the proposed model hasn’t learned much knowledge about low safety factor because of data availability.

Conclusion

The assessment of loess slope stability is a highly complex nonlinear problem. Some of the factors affecting the slope stability exhibit the characteristics of fuzziness, randomness and variability. Meanwhile, there is a complex nonlinear relationship between the influencing factors and the safety factor. In this study, by taking advantage of the artificial neural network and intelligent swarm optimization algorithm, the improved BP model for the stability assessment of loess slopes is developed based on the partial least-squares regression, i.e., the PLSR-BP model.

Although the stability assessment of loess slopes is a dynamic, nonlinear, uncertain and systematic problem, it has been proved that the BP neural network has the ability to approach the complex nonlinear relationship. It is appropriate and effective to evaluate the loess slope safety and stability using the BP neural network. Moreover, this study focuses on the multicollinearity problem. The correlation analysis indicates that the multicollinearity exists in the variable system. The existence of multiple correlation will affect the objectivity of stability analysis and prevent the model from making correct judgments. Therefore, the partial least-squares regression is carried out and two new synthesis variables with better interpretation to the dependent variables are extracted. In this way, the adverse effects of the variable multicollinearity are overcome. Simultaneously, the neurons at the input layer of BP neural network are also reduced to two, which simplifies the network structure and improves the modeling efficiency. Additionally, with the aim of converging to the global optimal solution more quickly, the BP neural network is initialized by PSO because of its global optimization ability. The test results show satisfactory precision, which indicates that the proposed model is feasible and reliable for the stability evaluation of loess slopes.

Combining the advantages of the particle swarm optimization, BP neural network and partial least-squares regression, the proposed assessment model can not only tackle with the variable correlation, local convergence and nonlinearity problems, but also present more extensive applicability. It can be used to determine the stability state and calculate the safety factor of loess slopes. Meanwhile, more influencing factors, such as rainfall density, groundwater level, weathering degree of geomaterials, etc., can be considered in the developed model based on data availability to conduct parameter sensitivity analysis and form a specific model reflecting the actual situation in a certain area. However, for such a specific model, the quality of the training samples may affect the effectiveness of the established model, i.e., the accurate parameter values should be ensured for the samples. Additionally, the developed model cannot give satisfactory results if the parameter values of an evaluated slope exceed the parameter ranges of the training sample. Although the loess slope stability assessment involves many aspects and a few challenges may be encountered, the proposed model has proved to be an effective and efficient approach for engineers in the field of loess slopes, and it shows potential in a variety of slope engineering applications.

Data availability

The datasets generated and/or analyzed during the current study are available from the corresponding author upon reasonable request.

References

Lu, P. & Rosenbaum, M. S. Artificial neural networks and grey systems for the prediction of slope stability. Nat. Hazards 30(3), 383–398 (2003).

Hoang, N. D. & Pham, A. D. Hybrid artificial intelligence approach based on metaheuristic and machine learning for slope stability assessment: A multinational data analysis. Expert Syst. Appl. 46, 60–68 (2016).

Ahangar-Asr, A., Faramarzi, A. & Javadi, A. A new approach for prediction of the stability of soil and rock slopes. Eng. Comput. 27(7), 878–893 (2010).

Leshchinsky, D. Slope stability analysis: Generalized approach. J. Geotech. Eng. 116(5), 851–867 (1990).

Raghuvanshi, T. K., Ibrahim, J. & Ayalew, D. Slope stability susceptibility evaluation parameter (SSEP) rating scheme—An approach for landslide hazard zonation. J. Afr. Earth Sci. 99, 595–612 (2014).

Fall, M., Azzam, R. & Noubactep, C. A multi-method approach to study the stability of natural slopes and landslide susceptibility mapping. Eng. Geol. 82(4), 241–263 (2006).

Song, Y. Q. et al. Susceptibility assessment of earthquake-induced landslides using Bayesian network: A case study in Beichuan. China. Comput. Geosci. 42, 189–199 (2012).

Esmaeili, M. et al. Multiple regression, ANN and ANFIS models for prediction of back break in the open pit blasting. Eng. Comput. 30(4), 549–558 (2014).

Yan, X. & Li, X. Bayes discriminant analysis method for predicting the stability of open pit slope. In Proceedings of the International Conference on Electric Technology and Civil Engineering (ICETCE), Lushan, China (2011).

Samui, P. & Kothari, D. P. Utilization of a least square support vector machine (LSSVM) for slope stability analysis. Sci. Iran. 18(1), 53–58 (2011).

Zhao, H., Yin, S. & Ru, Z. Relevance vector machine applied to slope stability analysis. Int. J. Numer. Anal. Methods Geomech. 36, 643–652 (2012).

Wang, K. & Xu, F. Slope stability evaluation based on PSO-PP. Appl. Mech. Mater. 580–583, 1708–1713 (2014).

Liu, B. W., Wang, Z. W. & Zhong, X. Y. Particle swarm optimization algorithm in numerical simulation of saturated rock slope slip. Math. Problems Eng. 2021, 6682659 (2021).

Himanshu, N., Burman, A. & Kumar, V. Assessment of optimum location of non-circular failure surface in soil slope using unified particle swarm optimization. Geotech. Geol. Eng. 38(2), 2061–2083 (2020).

Moayedi, H. et al. The feasibility of three prediction techniques of the artificial neural network, adaptive neuro-fuzzy inference system, and hybrid particle swarm optimization for assessing the safety factor of cohesive slopes. ISPRS Int. J. Geo-inf. 8(9), 391 (2019).

Wang, H. W. Elementary introduction to multiple correlation causing harm to principle component analysis. J. Beijing Univ. Aeronaut. Astronaut. 22(1), 60–65 (1996).

Wang, H. B., Xu, W. Y. & Xu, R. C. Slope stability evaluation using back propagation neural networks. Eng. Geol. 80, 302–315 (2005).

Zhou, K. P. & Chen, Z. Q. Stability prediction of tailing dam slope based on neural network pattern recognition. In Proceedings of the Second International Conference on Environmental and Computer Science ICECS’09 (IEEE Computer Society, 2009).

Das, S. K. et al. Classification of slopes and prediction of factor of safety using differential evolution neural networks. Environ. Earth Sci. 64(1), 201–210 (2011).

Jiang, J. P. BP neural networks for prediction of the factor of safety of slope stability. In Proceedings of International Conference on Computing, Control and Industrial Engineering (CCIE), Wuhan, China (2011).

Mirjalili, S. Dragonfly algorithm: A new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput. Appl. 27(4), 1053–1073 (2016).

Mirjalili, S., Mirjalili, S. M. & Hatamlou, A. Multi-verse optimizer: A nature-in-spired algorithm for global optimization. Neural Comput. Appl. 27(2), 495–513 (2016).

Mirjalili, S., Lewis, A. & Mostaghim, S. Confidence measure: A novel metric for robust meta-heuristic optimization algorithms. Inf. Sci. 317, 114–142 (2015).

Abd Elaziz, M. et al. Cooperative meta-heuristic algorithms for global optimization problems. Expert Syst. Appl. 176, 114788 (2021).

Geladi, P. & Kowalski, B. R. Partial least-squares regression: A tutorial. Anal. Chim. Acta 185, 1–17 (1986).

Romera-Fernández, M. et al. Feasibility study of FT-MIR spectroscopy and PLS-R for the fast determination of anthocyanins in wine. Talanta 88, 303–310 (2012).

Wang, H. W. & Zhu, Y. H. PLS regression in the function of eliminating multi-correlation. Appl. Stat. Manag. 15(6), 48–52 (1996).

Wang, H. W. The Method and Application of Partial Least Squares Regression (National Defense Industry Press, 1999).

Fausett, L. V. Fundamentals of Neural Networks 1st edn. (Prentice Hall, 1994).

Naghadehi, M. Z. et al. A new open-pit mine slope instability index defined using the improved rock engineering systems approach. Int. J. Rock Mech. Min. Sci. 61, 1–14 (2013).

Li, B. et al. Slope stability analysis based on quantum-behaved particle swarm optimization and least squares support vector machine. Appl. Math. Model. 39, 5253–5264 (2015).

Eberhart, R. C. & Kenned, Y. J. A new optimizer using particle swarm theory. In Proceedings of Sixth International Symposium on Micro Machine and Human Science. Nagoya, Japan, 66–73 (1995).

Zhang, Q. K. et al. Vector coevolving particle swarm optimization algorithm. Inf. Sci. 394–395, 273–298 (2017).

Gao, J. Y., Xing, Y. C. & Chen, Y. X. Prediction model for stability of high loess slopes. Chin. J. Geotech. Eng. 33(s1), 163–169 (2011).

Jiao, L. C. The Theory of Neural Network System (Xidian University Press, 1990).

Acknowledgements

This work is financially supported by the China Postdoctoral Science Foundation (Grant Number 2020M680950), for which the author is grateful.

Author information

Authors and Affiliations

Contributions

Bin Gong conducted the research and wrote the manuscript.

Corresponding author

Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gong, B. Study of PLSR-BP model for stability assessment of loess slope based on particle swarm optimization. Sci Rep 11, 17888 (2021). https://doi.org/10.1038/s41598-021-97484-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-021-97484-0

This article is cited by

-

A new, fast, and accurate algorithm for predicting soil slope stability based on sparrow search algorithm-back propagation

Natural Hazards (2024)

-

Prevention/mitigation of natural disasters in urban areas

Smart Construction and Sustainable Cities (2023)

-

Failure mechanism and sedimentary characteristics of a catastrophic rockslide avalanche induced by the 2008 Wenchuan earthquake

Landslides (2023)

-

Failure mechanism of boulder-embedded slope under excavation disturbance and rainfall

Bulletin of Engineering Geology and the Environment (2023)

-

Slope stability analysis based on convolutional neural network and digital twin

Natural Hazards (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.