Abstract

Exponential Random Graph Models (ERGMs) have gained increasing popularity over the years. Rooted into statistical physics, the ERGMs framework has been successfully employed for reconstructing networks, detecting statistically significant patterns in graphs, counting networked configurations with given properties. From a technical point of view, the ERGMs workflow is defined by two subsequent optimization steps: the first one concerns the maximization of Shannon entropy and leads to identify the functional form of the ensemble probability distribution that is maximally non-committal with respect to the missing information; the second one concerns the maximization of the likelihood function induced by this probability distribution and leads to its numerical determination. This second step translates into the resolution of a system of O(N) non-linear, coupled equations (with N being the total number of nodes of the network under analysis), a problem that is affected by three main issues, i.e. accuracy, speed and scalability. The present paper aims at addressing these problems by comparing the performance of three algorithms (i.e. Newton’s method, a quasi-Newton method and a recently-proposed fixed-point recipe) in solving several ERGMs, defined by binary and weighted constraints in both a directed and an undirected fashion. While Newton’s method performs best for relatively little networks, the fixed-point recipe is to be preferred when large configurations are considered, as it ensures convergence to the solution within seconds for networks with hundreds of thousands of nodes (e.g. the Internet, Bitcoin). We attach to the paper a Python code implementing the three aforementioned algorithms on all the ERGMs considered in the present work.

Similar content being viewed by others

Introduction

Over the last 20 years, network theory has emerged as a successful framework to address problems of scientific and societal relevance1: examples of processes that are affected by the structural details of the underlying network are provided by the spreading of infectious diseases2,3,4, opinion dynamics5, the propagation of losses during financial crises6, etc.

Within such a context, two needs have emerged quite naturally7: 1) detecting the topological properties of a real network that can be deemed as statistically significant - typically those higher-order properties (e.g. the assortativity, the clustering coefficient, etc.) that may be not explained by local features of the nodes such as the degrees, 2) inferring the relevant details of a given network structure in case only partial information is available. Both goals can be achieved by constructing a framework for defining benchmarks, i.e. synthetic configurations retaining only some of the properties of the original system - the so-called constraints - and, otherwise, being maximally random.

Two different kinds of approaches have been proposed so far, i.e. the microcanonical and the canonical ones. Microcanonical approaches8,9,10,11,12,13,14 artificially generate many randomized variants of the observed network by enforcing the constraints in a ‘hard’ fashion, i.e. by creating configurations on each of which the constrained properties are identical to the empirical ones. On the other hand, canonical approaches15,16,17,18,19 enforce constraints in a ‘soft’ fashion, by creating a set of configurations over which the constrained properties are on average identical to the empirical ones. Softening the requirement of matching the constraints has a clear advantage: allowing the mathematical expression for the probability of a generic configuration, \({\mathbf {G}}\), to be obtained analytically, as a function of the enforced constraints.

In this second case, a pivotal role is played by the formalism of the Exponential Random Graph Models (ERGMs)20 whose popularity has steadily increased over the years. The ERGMs mathematical framework dates back to Gibbs’ (re)formulation of statistical mechanics and is based upon the variational principle known as maximum entropy, stating that the probability distribution that is maximally non-committal with respect to the missing information is the one maximizing the Shannon entropy21. This allows self-consistent inference to be made, by assuming maximal ignorance about the unknown degrees of freedom of the system.

In the context of network theory, the ERGMs framework has found a natural application to the resolution of the two aforementioned problems, i.e. 1) that of defining null models of the original network, in order to assess the significance of empirical patterns - against the hypothesis that the network structure is solely determined by the constraints themselves and 2) that of deriving the most probable configurations that are compatible with the available details about a specific network.

In both cases, after the functional form of the probability distribution has been identified, via the maximum entropy principle, one also needs to numerically determine it: to this aim, the likelihood maximization principle can be invoked, stating to maximize the probability of observing the actual configuration. This prescription typically translates into the resolution of a system of O(N) non-linear, coupled equations - with N representing the number of nodes of the network under analysis.

Problems like these are usually affected by the issues of accuracy, speed and scalability: the present paper aims at addressing them at once, by comparing the performance of three algorithms, i.e. Newton’s method, a quasi-Newton method and a recently-proposed fixed-point recipe22,23, to solve a variety of ERGMs, defined by binary and weighted constraints in both a directed and an undirected fashion.

We would like to stress that, while the theoretical architecture of ERGMs is well established, an exhaustive study of the computational cost required for their numerical optimization, alongside with a comparison among the most popular algorithms designed for the task, is still missing. Our work aims at filling precisely this gap. Additionally, we provide a Python code implementing the three aforementioned recipes on all the ERGMs considered in the present work.

General theory

Canonical approaches aim at obtaining the mathematical expression for the probability of a generic configuration, \({\mathbf {G}}\), as a function of the observed constraints: ERGMs realize this by maximizing the Shannon entropy15,16.

The Maximum Entropy Principle

Generally speaking, the problem to be solved in order to find the functional form of the probability distribution to be employed as a benchmark reads

where Shannon entropy reads

and \(\vec {C}({\mathbf {G}})\) is the vector of constraints representing the information defining the benchmark itself (notice that \(C_0=\langle C_0\rangle =1\) sums up the normalization condition). The solution to the problem above can be found by maximizing the Lagrangian function

with respect to \(P({\mathbf {G}})\). As a result one obtains

with \({\mathcal {H}}({\mathbf {G}},\vec {\theta })=\vec {\theta }\cdot \vec {C}({\mathbf {G}})=\sum _{i=1}^M\theta _iC_i({\mathbf {G}})\) representing the Hamiltonian, i.e. the function summing up the proper, imposed constraints and \(Z(\vec {\theta })=\sum _{\mathbf {G}}P({\mathbf {G}})=\sum _{\mathbf {G}}e^{-{\mathcal {H}}({\mathbf {G}},\vec {\theta })}\) representing the partition function, ensuring that \(P({\mathbf {G}})\) is properly normalized. Constraints play a pivotal role, either representing the information to filter, in order to assess the significance of certain quantities, or the only available one, in order to reconstruct the inaccessible details of a given configuration.

The maximum likelihood principle

The formalism above is perfectly general; however, it can be instantiated to study an empirical network configuration, say \({\mathbf {G}}^*\). In this case, the Lagrange multipliers ‘acting’ as unknown parameters in Eq. (4) can be numerically estimated by maximizing the associated likelihood function15,24. The latter is defined as

and must be maximized with respect to the vector \(\vec {\theta }\). Remarkably, whenever the probability distribution is exponential (as the one deriving from the Shannon entropy maximization), the likelihood maximization problem

is characterized by first-order necessary conditions for optimality reading

and leading to the system of equations

to be solved. These conditions, however, are sufficient to characterize a maximum only if \({\mathscr {L}}(\vec {\theta })\) is concave. This is indeed the case, as we prove by noticing that

i.e. that the Hessian matrix, \({\mathbf {H}}\), of our likelihood function is ‘minus’ the covariance matrix of the constraints, hence negative semidefinite by definition. The fourth passage is an example of the well-known fluctuation-response relation20.

A graphical representation of how the two principles work is shown in Fig. 1.

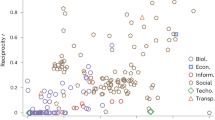

System diagram illustrating the models discussed in the present paper and implemented in the ‘NEMTROPY’ package, attached to it: it represents a sort of guide to individuate the best model for analysing the system at hand. Our package handles both monopartite and bipartite networks; while the latter ones have been considered only in their binary, undirected fashion, the former ones can be modeled either in a binary or a weighted fashion, allowing for both undirected and directed links.

Combining the MEP and the MLP principles

The Maximum Entropy Principle (MEP) and the Maximum Likelihood Principle (MLP) encode two different prescriptions aiming, respectively, at determining the functional form of a probability distribution and its numerical value. In optimization theory, the problem (the problem 1) is known as primal problem: upon noticing that the Shannon entropy is concave, while the imposed constraints are linear in \(P({\mathbf {G}})\), one concludes that the primal problem is convex (it is easy to see this, by rewriting it as a minimization problem for \(-S[P]\)).

As convexity implies strong duality, we can, equivalently, consider an alternative version of the problem to optimize, know as dual problem. In order to define it, let us consider the Lagrangian function

where, now, the generic expectation of the i-th constraint, \(\langle C_i\rangle\), has been replaced by the corresponding empirical value, \(C_i({\mathbf {G}}^*)\). As the dual function is given by

the dual problem reads

which is a convex problem by construction; this is readily seen by substituting Eq. (4) into Eq. (10), operation that leads to the expression

i.e. the likelihood function introduced in Eq. (5). In other words, Eq. (12) combines the MEP and the MLP into a unique optimization step whose score function becomes the Lagrangian function defined in Eq. (10).

Optimization algorithms for non-linear problems

In general, the optimization problem defined in Eq. (12) cannot be solved analytically, whence the need to resort to numerical methods. For an exhaustive review on numerical methods for optimization we refer the interested reader to25,26: in the following, we present only the concepts that are of some relevance for us. The problemis a Nonlinear Programming Problem (NLP). In order to solve it numerically, we will adopt a Sequential Quadratic Programming (SQP) approach. Starting from an initial guess \(\vec {\theta }^{(0)}\), SQP solves Eq. (14) by iteratively updating the vector of Lagrange multipliers

according to the rule

\(\forall \,i\), leading to the set of equations

which can be compactly rewritten as

The stepsize \(\alpha \in (0,1]\) is selected to ensure that \({\mathscr {L}}(\vec {\theta }^{(n+1)})>{\mathscr {L}}(\vec {\theta }^{(n)})\) via a backtracking, line search procedure: starting from \(\alpha =1\), if the Armijo condition

is violated, we set \(\alpha \leftarrow \beta \alpha\) (\(\gamma \in (0,0.5]\) and \(\beta \in (0,1)\) are the parameters of the algorithm). On the other hand, the term \({\mathbf {H}}^{(n)}\) can be selected according to a variety of methods. In the present contribution we focus on the following three ones.

Newton’s method. One speaks of Newton’s method in case \({\mathbf {H}}^{(n)}\) is chosen to be

where \(\nabla ^2{\mathscr {L}}(\vec {\theta })\) is the Hessian matrix of the likelihood function and the term \(\Delta {\mathbf {H}}^{(n)}\) is typically selected as small as possible in order to avoid slowing convergence and to ensure that \({\mathbf {H}}^{(n)}\) is negative definite (i.e. \(\nabla ^2{\mathscr {L}}(\vec {\theta }^{(n)})+\Delta {\mathbf {H}}^{(n)}\prec 0\)). This choice of \({\mathbf {H}}^{(n)}\) is also referred to as ‘exact Hessian’.

Quasi-Newton methods. Any Hessian approximation which is negative definite (i.e. satisfying \({\mathbf {H}}^{(n)}\prec 0\)) yields an ascent direction and guarantees convergence. Although one may choose to consider the simplest prescription \({\mathbf {H}}^{(n)}=-{\mathbf {I}}\), which yields the ‘steepest ascent’ algorithm, here we have opted for the following recipe, i.e. the purely diagonal version of Newton’s method: \(H_{ii}^{(n)}=\nabla ^2_{ii}{\mathscr {L}}(\vec {\theta }^{(n)})+\Delta H_{ii}^{(n)}<0\), \(\forall \,i\) and \(H_{ij}^{(n)}=0\), \(\forall \,i\ne j\).

Fixed-point iteration on modified KKT conditions. In addition to the (classes of) algorithms above, we will also consider an iterative recipe which is constructed as a fixed-point iteration on a modified version of the Karush-Kuhn-Tucker (KKT) conditions, i.e. \({\mathbf {F}}(\vec {\theta })=\vec {0}\) or, analogously, \(\vec {\theta }={\mathbf {G}}(\vec {\theta })\); the iterate can, then, be made explicit by rewriting the latter as

The condition above will be made explicit, for each network model, in the corresponding subsection. We also observe that this choice yields a non-standard SQP method as \({\mathbf {H}}^{(n)}\) is typically not symmetric, for our models.

A note on convergence. Provided that the Hessian approximation \({\mathbf {H}}^{(n)}\) is negative definite, the direction \(\Delta \vec {\theta }\) is an ascent one; as such, it is guaranteed to yield an improvement of the objective function, for a step size \(\alpha\) that is sufficiently small. The role of the backtracking line search is that of finding a step size \(\alpha\) that yields such an improvement, while making sufficient progress towards the solution. As discussed in25, Newton’s method has local quadratic convergence, while the quasi-Newton method and the fixed-point iteration algorithm have local linear convergence.

Applications

Let us now apply the algorithms described in the previous section to a number of specific cases of interest. The taxonomy of the models considered in the present paper is shown in Fig. 2 while the constraints defining each model are illustrated in Fig. 3.

UBCM: binary undirected graphs with given degree sequence

Let us start by considering binary, undirected networks (BUNs). The simplest, non-trivial set of constraints is represented by the degrees of nodes: the degree of node i, i.e. \(k_i({\mathbf {A}})=\sum _{j(\ne i)=1}^N a_{ij}\), counts the number of its neighbours and coincides with the total number of 1s along the i-th row (or, equivalently, along the i-th column) of the adjacency matrix \({\mathbf {A}}\equiv \{a_{ij}\}_{i,j=1}^N\). The benchmark defined by this set of constraints is known as Undirected Binary Configuration Model (UBCM) and its Hamiltonian reads

entropy maximization15,16 leads to the factorized graph probability

where \(p_{ij}=p_{ij}^\text {UBCM}\equiv \frac{e^{-\theta _i-\theta _j}}{1+e^{-\theta _i-\theta _j}}\). In this case, the canonical ensemble of BUNs is the set of networks with the same number of nodes, N, of the observed graph and a number of (undirected) links varying from zero to the maximum value \(\left( {\begin{array}{c}N\\ 2\end{array}}\right)\). The argument of the problem (6) for the specific network \({\mathbf {A}}^*\) becomes

whose first-order optimality conditions read

Resolution of the UBCM. Newton’s and the quasi-Newton method can be easily implemented via the recipe defined in Eq. (18) (see “Appendix A” for the definition of the UBCM Hessian).

The explicit definition of the fixed-point recipe, instead, requires a preliminary observation, i.e. that the system of equations embodying the UBCM first-order optimality conditions can be re-written as follows

i.e. as a set of consistency equations. The observation that the term \(e^{-\theta _i}\) appears on both sides of the equation corresponding to the i-th constraint suggests an iterative recipe to solve such a system, i.e.

originally proposed in22 and further refined in23. The identification \(p_{ij}^\text {UBCM}\equiv \frac{e^{-\theta _i^{(\infty )}-\theta _j^{(\infty )}}}{1+e^{-\theta _i^{(\infty )}-\theta _j^{(\infty )}}}\), \(\forall \,i<j\) allows the probability coefficients defining the UBCM to be numerically determined.

As any other iterative recipe, the one proposed above needs to be initialized as well. To this aim, we have tested three different sets of initial values: the first one is defined by the position \(\theta _i^{(0)}=-\ln \left[ \frac{k_i({\mathbf {A}}^*)}{\sqrt{2L}}\right]\), \(\forall \,i\) - usually, a good approximation of the solution of the system of Eq. (25), in the ‘sparse case’ (i.e. whenever \(p_{ij}^\text {UBCM}\simeq e^{-\theta _i-\theta _j}\) the second one is a variant of the position above, reading \(\theta _i^{(0)}=-\ln \left[ \frac{k_i({\mathbf {A}}^*)}{\sqrt{N}}\right]\), \(\forall \,i\); the third one, instead, prescribes to randomly draw the value of each parameter from a uniform distribution with support on the unit interval, i.e. \(\theta _i^{(0)}\sim U(0,1)\), \(\forall \,i\).

Reducing the dimensionality of the problem. The problem defining the UBCM can be further simplified by noticing that nodes with the same degree, say k, can be assigned the same value of the multiplier \(\theta\)24 - a result resting upon the observation that any value \(k_i({\mathbf {A}}^*)\) must match the sum of monotonic, increasing functions. This translates into the possibility of rewriting \({\mathscr {L}}_{\mathrm{UBCM}}(\vec {\theta })\) in a ‘reduced’ fashion, as

where the sums run over the distinct values of the degrees and f(k) counts the number of nodes whose degree is k. Rewriting the problem with respect to the set \(\{\theta _k\}_k\) leads one to recover simplified versions of the three algorithms considered here: Newton’s and the quasi-Newton methods can, now, be solved via a ‘reduced’ version of eq. (18) (since both the dimension of the gradient and the order of the Hessian matrix of the likelihood function are, now, less than N), while the iterative recipe defined in (26) can be rewritten in terms of the ‘non-degenerate’ degrees, as

\(\forall \,k\), where, at the denominator, the self-contribution (i.e. the probability that a node links to itself) has been explicitly excluded.

Performance testing. The performance of the three algorithms, considered in the present paper, to solve the reduced version of Eq. (25), has been tested on a bunch of real-world networks. The latter ones span a wide variety of systems, including natural, financial and technological ones. In particular, we have considered the synaptic network of the worm C. Elegans28, the network of the largest US airports29, the protein-protein interaction network of the bacterium H. Pylori30, Internet at the level of Autonomous Systems31 and eight daily snapshots of the so-called Bitcoin Lightning Network32, chosen throughout its entire history. Before commenting on the results of our numerical exercises, let us, first, describe how the latter ones have been carried out.

The accuracy of each algorithm in reproducing the constraints defining the UBCM has been quantified via the maximum absolute error metrics, defined, in a perfectly general fashion, as \(\max _i\{\left| C_i^*-\langle C_i \rangle \right| \}_{i=1}^N\) (where \(C_i^*\) is the empirical value of the i-th constraint, \(C_i\)). Naturally, in the UBCM case, \(C_i=k_i\), \(\forall \,i\) and the aforementioned error score becomes

(the acronym standing for Maximum Absolute Degree Error). Equivalently, it is the infinite norm of the difference between the vector of the empirical values of the constraints and that of their expected values.

For each algorithm, we have considered three different stopping criteria: the first one puts a condition on the Euclidean norm of the gradient of the likelihood function, i.e.

the second one puts a condition on the Euclidean norm of the vector of differences between the values of the parameters at subsequent iterations, i.e.

the third one concerns the maximum number of iterations: after 1000 steps, any of the three algorithms stops.

The results about the performance of our three algorithms are reported in Table 1. Overall, all recipes perform very satisfactorily, being accurate, fast and scalable; moreover, all algorithms stop either because the condition on the norm of the likelihood is satisfied or because the condition on the norm of the vector of parameters is satisfied.

For what concerns accuracy, the largest maximum error per method spans an interval (across all configurations) that amounts at \(10^{-10}\lesssim \text {MADE}_\text {Newton}^\text {reduced}\lesssim 10^{-6}\), \(10^{-6}\le \text {MADE}_\text {Quasi-Newton}^\text {reduced}\le 10^{-5}\) and \(10^{-8}\lesssim \text {MADE}_\text {fixed-point}^\text {reduced}\lesssim 10^{-6}\). By looking at each specific network, it is evident that the two most accurate methods are systematically Newton’s and the fixed-point ones.

For what concerns speed, the amount of time required by each method to achieve convergence spans an interval (across all configurations) that is \(0.005\le T_\text {Newton}^\text {reduced}\le 0.01\), \(0.014\le T_\text {Quasi-Newton}^\text {reduced}\le 0.15\) and \(0.002\le T_\text {fixed-point}^\text {reduced}\le 0.015\) (time is measured in seconds). The fastest method is the fixed-point one, although Newton’s method approximately requires the same amount of time, when compared to it on each specific configuration. Differences in the speed of convergence of any method, caused by the choice of a particular set of initial conditions, are indeed observable: the prescription reading \(\theta _i^{(0)}=-\ln \left[ \frac{k_i({\mathbf {A}}^*)}{\sqrt{N}}\right]\), \(\forall \,i\) outperforms the other ones.

Let us now comment on the scalability of our algorithms. What we learn from our exercise is that scalability is not related to the network size in a simple way: the factors seemingly playing a major role are the ones affecting the reducibility of the original system of equations, i.e. the ones ‘deciding’ the number of different equations that actually need to be solved.

While reducibility can be easily quantified a posteriori, e.g. by calculating the coefficient of reduction, \(c_r\), defined as the ratio between the number of equations that survive to reduction and the number of equations defining the original problem (hence, the smaller the better), providing an exhaustive list of the aforementioned factors a priori is much more difficult.

In the case of the UBCM, \(c_r\) is defined as the number of different degrees divided by the total number of nodes; one may, thus, argue that reducibility is affected by the heterogeneity of the degree distribution; upon considering that the latter can be quantified by computing the coefficient of variation (defined as \(c_v=s/m\), where s and m are, respectively, the standard deviation and the mean of the degree distribution of the network at hand), one may derive a simple rule of thumb: a larger coefficient of variation (pointing out a larger heterogeneity of the degree distribution) leads to a larger coefficient of reduction and a larger amount of time for convergence will be required. Notice that even if the degree distribution is narrow, outliers (e.g. hubs) may still play a role, forcing the corresponding parameters to assume either very large or very small values - hence, slowing down the entire convergence process.

In this sense, scalability is the result of a (non-trivial) interplay between size and reducibility. Let us take a look at Table 1: Internet is the most reducible network of our basket, although being the largest in size, while the neural network of C. Elegans is one of the least reducible networks of our basket, although being the second smallest one; as a consequence, the actual number of equations defining the UBCM on C. Elegans is \(\simeq 30\) while the actual number of equations defining the UBCM on Internet is \(\simeq 100\) - whence the larger amount of time to solve the latter. Remarkably, the time required by our recipes to ensure that the largest system of equations converges to the solution ranges from thousandths to tenths of seconds.

As a last comment, we would like to stress that, unlike several popular approximations as the Chung-Lu one27, the generic coefficient \(p_{ij}^\text {UBCM}\) always represents a proper probability, in turn implying that eq. (23) also provides us with a recipe to sample the canonical ensemble of BUNs, under the UBCM. Notice that the factorization of the graph probability \(P_\text {UBCM}({\mathbf {A}}|\vec {\theta })\) greatly simplifies the entire procedure, allowing a single graph to be sampled by implementing the Bernoulli trial

for each (undirected) pair of nodes, in either a sequential or a parallel fashion. The sampling process, whose computational complexity amounts at \(O(N^2)\), can be repeated to generate as many configurations as desired. The pseudo-code for explicitly sampling the UBCM ensemble is summed up by Algorithm 1.

We explicitly acknowledge the existence of the algorithm proposed in33 for sampling binary, undirected networks from the Chung-Lu model (i.e. the ‘sparse case’ approximation of the UBCM), a recipe that is applicable whenever the condition \(p_{ij}^\text {CL}=\frac{k_ik_j}{2L}<1\), \(\forall \,i<j\) is verified. As explicitly acknowledged by the authors of 33. However, it does not hold in several cases of interest: an example of paramount importance is provided by sparse networks whose degree distribution is scale-free. In such cases, \(k_{max}\sim N^{\frac{1}{\gamma -1}}\): hence, the hubs establish a connection with probability \(p_{ij}^\text {CL}\sim \frac{N^{\frac{2}{\gamma -1}}}{N-N^{\frac{1}{\gamma -1}}}\) that becomes larger than 1 when \(2<\gamma \le 3\) and diverges for \(\gamma \rightarrow 2\), thus leading to a strong violation of the requirement above.

DBCM: binary directed graphs with given in-degree and out-degree sequences

Let us now move to consider binary, directed networks (BDNs). In this case, the simplest, non-trivial set of constraints is represented by the in-degrees and the out-degrees of nodes, where \(k_i^{in}({\mathbf {A}})=\sum _{j(\ne i)=1}^Na_{ji}\) counts the number of nodes ‘pointing’ to node i and \(k_i^{out}({\mathbf {A}})=\sum _{j(\ne i)=1}^Na_{ij}\) counts the number of nodes i ‘points’ to. The benchmark defined by this set of constraints is known as Directed Binary Configuration Model (DBCM) whose Hamiltonian reads

as in the undirected case, entropy maximization15,16 leads to a factorized probability distribution, i.e.

where \(p_{ij}=p_{ij}^\text {DBCM}\equiv \frac{e^{-\alpha _i-\beta _j}}{1+e^{-\alpha _i-\beta _j}}\). The canonical ensemble of BDNs is, now, the set of networks with the same number of nodes, N, of the observed graph and a number of (directed) links varying from zero to the maximum value \(N(N-1)\). The argument of the problem (6) for the specific network \({\mathbf {A}}^*\) becomes

whose first-order optimality conditions read

and

Resolution of the DBCM. Newton’s and the quasi-Newton method can be easily implemented via the recipe defined in Eq. (18) (see “Appendix A” for the definition of the DBCM Hessian).

The fixed-point recipe for solving the system of equations embodying the DBCM first-order optimality conditions can, instead, be re-written in the usual iterative fashion as follows

Analogously to the undirected case, the initialization of this recipe has been implemented in three different ways. The first one reads \(\alpha _i^{(0)}=-\ln \left[ \frac{k_i^{out}({\mathbf {A}}^*)}{\sqrt{L}}\right]\), \(i=1\dots N\) and \(\beta _i^{(0)}=-\ln \left[ \frac{k_i^{in}({\mathbf {A}}^*)}{\sqrt{L}}\right]\), \(i=1\dots N\) and represents a good approximation to the solution of the system of equations defining the DBCM in the ‘sparse case’ (i.e. whenever \(p_{ij}^\text {DBCM}\simeq e^{-\alpha _i-\beta _j}\)); the second one is a variant of the position above, reading \(\alpha _i^{(0)}=-\ln \left[ \frac{k_i^{out}({\mathbf {A}}^*)}{\sqrt{N}}\right]\), \(i=1\dots N\) and \(\beta _i^{(0)}=-\ln \left[ \frac{k_i^{in}({\mathbf {A}}^*)}{\sqrt{N}}\right]\), \(i=1\ldots N\); the third one, instead, prescribes to randomly draw the value of each parameter from a uniform distribution defined on the unit interval, i.e. \(\alpha _i^{(0)}\sim U(0,1)\), \(\forall \,i\) and \(\beta _i^{(0)}\sim U(0,1)\), \(\forall \,i\). As for the UBCM, the identification \(p_{ij}^\text {DBCM}\equiv \frac{e^{-\alpha _i^{(\infty )}-\beta _j^{(\infty )}}}{1+e^{-\alpha _i^{(\infty )}-\beta _j^{(\infty )}}}\), \(\forall \,i\ne j\) allows the probability coefficients defining the DBCM to be numerically determined.

Reducing the dimensionality of the problem. As for the UBCM, we can define a ‘reduced’ version of the DBCM likelihood, accounting only for the distinct (pairs of) values of the degrees. By defining \(k^{out}\equiv k\) and \(k^{in}\equiv h\), in order to simplify the formalism, the reduced DBCM recipe reads

the implementation of the algorithms considered here must be modified in a way that is analogous to the one already described for the UBCM. In particular, the fixed-point recipe for the DBCM can be re-written by assigning to the nodes with the same out- and in-degrees (k, h) the same pair of values \((\alpha ,\beta )\), i.e. as

where the sums, now, run over the distinct values of the out- and in-degrees, n(k, h) is the number of nodes whose out-degree is k and whose in-degree is h and, as usual, the last term at the denominator excludes the self-contribution (i.e. the probability that a node links to itself).

Performance testing. As for the UBCM, the performance of the three algorithms in solving the reduced version of Eqs. (37) and (38) has been tested on a bunch of real-world networks. The latter ones span economic, financial and social networks. In particular, we have considered the World Trade Web (WTW) during the decade 1992–200234, a pair of snapshots of the Bitcoin User Network at the weekly time scale (the first day of those weeks being 13-02-12 and 27-04-15, respectively)35 and of the corresponding largest weakly connected component (whose size is, respectively, \(\simeq 65\%\) and \(\simeq 90\%\) of the full network size) and a snapshot of the semantic network concerning the Twitter discussion about the Covid-19 pandemics (more precisely: it is the network of re-tweets of the (online) Italian debate about Covid-19, collected in the period 21st February–20th April 2020)36. Before commenting on the results of our numerical exercises, let us, first, describe how the latter ones have been carried out.

The accuracy of each algorithm in reproducing the constraints defining the DBCM has been quantified via the maximum absolute error metrics that, in this case, reads

and accounts for the presence of two different degrees per node. As for the UBCM, it is the infinite norm of the difference between the vector of the empirical values of the constraints and that of their expected values.

The three different ‘stop criteria’ we have considered match the ones adopted for analysing the undirected case and consist in a condition on the Euclidean norm of the gradient of the likelihood function, i.e. \(||\nabla {\mathscr {L}}(\vec {\theta })||_2\le 10^{-8}\), in a condition on the Euclidean norm of the vector of differences between the values of the parameters at subsequent iterations, i.e. \(||\Delta \vec {\theta }||_2\le 10^{-8}\), and in a condition on the maximum number of iterations: after 10,000 steps, any of the three algorithms stops.

The results about the performance of our three algorithms are reported in Table 2. Overall, all recipes perform very satisfactorily, being accurate, fast and scalable; however, while Newton’s and the quasi-Newton methods stop because the condition on the norm of the likelihood is satisfied, the fixed-point recipe is always found to satisfy the limit condition on the number of steps (i.e. it runs for 10000 steps and, then, stops).

Let us start by commenting the results concerning the WTW. For what concerns accuracy, the largest maximum error per method spans an interval, across all configurations, that amounts at \(\text {MADE}_\text {Newton}^\text {reduced}\simeq 10^{-9}\), \(10^{-10}\lesssim \text {MADE}_\text {Quasi-Newton}^\text {reduced}\lesssim 10^{-6}\) and \(\text {MADE}_\text {fixed-point}^\text {reduced}\simeq 10^{-2}\). By looking at each specific network, it is evident that the most accurate method is systematically the quasi-Newton one.

For what concerns speed, the amount of time required by each method to achieve convergence spans an interval (across all configurations) that is \(0.5\le T_\text {Newton}^\text {reduced}\le 13\), \(0.21\le T_\text {Quasi-Newton}^\text {reduced}\le 0.33\) and \(2.5\le T_\text {fixed-point}^\text {reduced}\le 4.4\) (time is measured in seconds). The fastest method is the quasi-Newton one, followed by Newton’s method and the fixed-point recipe. The latter is the slowest method since it always reaches the limit of 10000 steps while the other two competing ones stop after few iterations. Appreciable differences in the speed of convergence of any method, caused by the choice of a particular set of initial conditions, are not observed.

The observations above hold true when the WTW is considered. The picture changes when very large networks, as Bitcoin and Twitter, are considered. First, let us notice that Bitcoin and Twitter ‘behave’ as the undirected version of Internet considered to solve the UBCM, i.e. they are very redundant, hosting many nodes with the same out- and in-degrees (in fact, the coefficient of reduction, \(c_r\), is, now, defined as the number of different ‘out-degree - in-degree’ pairs divided by twice the number of nodes). To provide a specific example, out of the original 676688 equations defining the DBCM for one of the two Bitcoin snapshots considered here, only \(\simeq 339\) equations survive the reduction; by converse, the WTW can be reduced to a much smaller extent (to be more specific, out of the original 324 equations defining the DBCM for the WTW in 1997, only \(\simeq 291\) equations survive the reduction). Interestingly, a good proxy of the reducibility of the directed configurations considered here is provided by their connectance (i.e. the denser the network, the less reducible it is).

On the one hand, this feature, common to the very large networks considered here, is what guarantees their resolution in a reasonable amount of time; on the other one, it seems not to be enough to let Newton’s and the quasi-Newton method be as fast as in the undirected case. For our binary, directed networks, in fact, the fastest (and, for some configurations, the most accurate) method becomes the fixed-point one. In order to understand this result we need to consider that both Newton’s and the quasi-Newton method require (some proxy of) the Hessian matrix of the DBCM to update the value of the parameters: since the order of the latter is \(O(N^2)\) for Newton’s method and O(N) for the quasi-Newton one, its calculation can be (very) time demanding - beside requiring a lot of memory for the step-wise update of the corresponding Hessian matrix. However, while this is compensated by a larger accuracy in the case of Newton’s method, this is no longer true when the quasi-Newton recipe is considered - the reason maybe lying in the poorer approximation provided by the diagonal of the Hessian matrix in case of systems like these.

As a last comment, we would like to stress that, as in the undirected case, the generic coefficient \(p_{ij}^\text {DBCM}\) represents a proper probability, in turn implying that Eq. (35) also provides us with a recipe to sample the canonical ensemble of BDNs, under the DBCM. Notice that the factorization of the graph probability \(P_\text {DBCM}({\mathbf {A}}|\vec {\theta })\) greatly simplifies the entire procedure, allowing a single graph to be sampled by implementing the Bernoulli trial

for each (directed) pair of nodes, in either a sequential or a parallel fashion. The sampling process, whose computational complexity amounts at \(O(N^2)\), can be repeated to generate as many configurations as desired. The pseudo-code for explicitly sampling the DBCM ensemble is summed up by Algorithm 2.

BiCM: bipartite binary undirected graphs with given degree sequences

So far, we have considered monopartite networks. However, the algorithm we have described for solving the DBCM can be adapted, with little effort, to solve a null model designed for bipartite, binary, undirected networks (BiBUNs), i.e. the so-called Bipartite Configuration Model (BiCM)37. These networks are defined by two distinct layers (say, \(\top\) and \(\bot\)) and obey the rule that links can exist only between (and not within) layers: for this reason, they can be compactly described via a biadjacency matrix \({\mathbf {B}}\equiv \{b_{i\alpha }\}_{i,\alpha }\) whose generic entry \(b_{i\alpha }\) is 1 if node i belonging to layer \(\bot\) is linked to node \(\alpha\) belonging to layer \(\top\) and 0 otherwise. The constraints defining the BiCM are represented by the degree sequences \(\{k_i\}_{i\in \bot }\) and \(\{d_\alpha \}_{\alpha \in \top }\) where \(k_i=\sum _{\alpha \in \top } b_{i\alpha }\) counts the neighbors of node i (belonging to layer \(\top\)) and \(d_\alpha =\sum _{i\in \bot }b_{i\alpha }\) counts the neighbors of node \(\alpha\) (belonging to layer \(\bot\)).

Analogously to the DBCM case,

where \(p_{i\alpha }=p_{i\alpha }^\text {BiCM}\equiv \frac{e^{-\gamma _i-\beta _{\alpha }}}{1+e^{-\gamma _i-\beta _{\alpha }}}\). The canonical ensemble of BiBUNs includes all networks with, say, N nodes on one layer, M nodes on the other layer and a number of links (connecting nodes of different layers) ranging from zero to the maximum value \(N\cdot M\).

The BiCM likelihood function reads

whose first-order optimality conditions read

Resolution of the BiCM. As for the DBCM case, Newton’s and the quasi-Newton methods can be implemented by adapting the recipe defined in Eq. (18) to the bipartite case (see “Appendix A” for the definition of the BiCM Hessian).

As for the DBCM, the fixed-point recipe for the BiCM can be re-written in the usual iterative fashion as follows

and the initialization is similar as well: in fact, we can employ the value of the solution of the BiCM in the sparse case, i.e. \(\gamma _i^{(0)}=-\ln \left[ \frac{k_i({\mathbf {B}}^*)}{\sqrt{L}}\right]\), \(\forall \,i\in \bot\) and \(\beta _\alpha ^{(0)}=-\ln \left[ \frac{d_\alpha ({\mathbf {B}}^*)}{\sqrt{L}}\right]\), \(\forall \,\alpha \in \top\) (only this set of initial conditions has been employed to analyse the bipartite case).

Reducing the dimensionality of the problem. Exactly as for the DBCM case, the presence of nodes with the same degree, on the same layer, leads to the appearance of identical equations in the system above; hence, the computation of the solutions can be sped up by writing

where f(k) is the number of nodes, belonging to layer \(\bot\), whose degree is k and g(d) is the number of nodes, belonging to layer \(\top\), whose degree is d.

Performance testing. The performance of the three algorithms in solving Eq. (49) has been tested on 16 snapshot of the bipartite, binary, undirected version of the WTW, gathering the country-product export relationships across the years 1995–201037. Before commenting on the results of our numerical exercises, let us, first, describe how the latter ones have been carried out.

The accuracy of each algorithm in reproducing the constraints defining the BiCM has been quantified via the maximum absolute error metrics that, now, reads

to account for the degrees of nodes on both layers.

The three different ‘stop criteria’ match the ones adopted for analysing the UBCM and the DBCM and consist in a condition on the Euclidean norm of the gradient of the likelihood function, i.e. \(||\nabla {\mathscr {L}}(\vec {\theta })||_2\le 10^{-10}\), in a condition on the Euclidean norm of the vector of differences between the values of the parameters at subsequent iterations, i.e. \(||\Delta \vec {\theta }||_2\le 10^{-10}\), and a condition on the maximum number of iterations: after 1000 steps, any of the three algorithms stops.

The results about the performance of our three algorithms are reported in Table 3. Overall, all recipes are accurate, fast and scalable; all methods stop because the condition on the norm of the likelihood is satisfied.

For what concerns accuracy, the largest maximum error per method spans an interval (across all configurations) that amounts at \(\text {MADE}_\text {Newton}^\text {reduced}\simeq 10^{-13}\), \(10^{-7}\lesssim \text {MADE}_\text {Quasi-Newton}^\text {reduced}\lesssim 10^{-5}\) and \(10^{-5}\lesssim \text {MADE}_\text {fixed-point}^\text {reduced}\lesssim 10^{-4}\). By looking at each specific network, it is evident that the most accurate method is systematically Newton’s one.

For what concerns speed, the amount of time required by each method to achieve convergence spans an interval (across all configurations) that is \(T_\text {Newton}^\text {reduced}\simeq 0.0023\) (on average), \(T_\text {Quasi-Newton}^\text {reduced}\simeq 0.016\) (on average) and \(T_\text {fixed-point}^\text {reduced}\simeq 0.018\) (on average) (time is measured in seconds). The fastest method is Newton’s one and is followed by the quasi-Newton and the fixed-point recipes. The gain in terms of speed due to the reducibility (now quantified by the ratio between the number of different pairs of degrees divided by the total number of nodes) of the system of equations defining the BiCM is also evident: while solving the original problem would have required handling a system of \(\simeq 10^3\) equations, the reduced one is defined by just \(\simeq 10^2\) distinct equations. Overall, a solution is always found within thousandths or hundredths of seconds.

As for the DBCM case, the ensemble of BiBUNs can be sampled by implementing a Bernoulli trial \(b_{i\alpha }\sim \text {Ber}[p_{i\alpha }]\) for any two nodes (belonging to different layers) in either a sequential or a parallel fashion. The computational complexity of the sampling process amounts at \(O(N\cdot M)\) and can be repeated to generate as many configurations as desired. The pseudo-code for explicitly sampling the BiCM ensemble is summed up by Algorithm 3.

UECM: weighted undirected graphs with given strengths and degrees

So far, we have considered purely binary models. Let us now focus on a class of ‘mixed’ null models for weighted networks, defined by constraining both binary and weighted quantities. Purely weighted models such as the Undirected and the Directed Weighted Configuration Model have not been considered since, as it has been proven elsewhere38, they perform quite poorly when employed to reconstruct networks. Let us start by the simplest model, i.e. the one constraining the degrees and the strengths in an undirected fashion. While \(k_i({\mathbf {A}})=\sum _{j(\ne i)=1}^Na_{ij}\) counts the number of neighbors of node i, \(s_i({\mathbf {W}})=\sum _{j(\ne i)=1}^Nw_{ij}\) defines the weighted equivalent of the degree of node i, i.e. its strength. For consistency, the binary adjacency matrix can be defined via the Heaviside step function, \(\Theta [.]\), as \({\mathbf {A}}\equiv \Theta [{\mathbf {W}}]\) a position indicating that \(a_{ij}=1\) if \(w_{ij}>0\), \(\forall \,i<j\) and zero otherwise. This particular model is known as Undirected Enhanced Configuration Model (UECM)38,39,40 and its Hamiltonian reads

it induces a probability distribution which is halfway between a Bernoulli and a geometric one39, i.e.

with

for any two nodes i and j such that \(i<j\) and \(p_{ij}^\text {UECM}=\frac{e^{-\alpha _i-\alpha _j-\beta _i-\beta _j}}{1-e^{-\beta _i-\beta _j}+e^{-\alpha _i-\alpha _j-\beta _i-\beta _j}}\). Notice that the functional form above is obtained upon requiring that the weights only assume (non-negative) integer values (i.e. \(w_{ij}\in [0,+\infty )\), \(\forall \,i<j\)): hence, the canonical ensemble is now constituted by the weighted configurations with N nodes and a number of (undirected) links ranging between zero and the maximum value \(\left( {\begin{array}{c}N\\ 2\end{array}}\right)\).

The argument of the problem (6) for the specific network \({\mathbf {W}}^*\) now becomes

whose first-order optimality conditions read

Resolution of the UECM Newton’s and the quasi-Newton methods can be easily implemented via the recipe defined in Eq. (18) (see “Appendix A” for the definition of the UECM Hessian).

As for the purely binary models, the fixed-point recipe for solving the UECM first-order optimality conditions transforms the following set of consistency equations

(with \(i=1\dots N\)) into the usual iterative fashion, by considering the parameters at the left hand side and at the right hand side, respectively at the n-th and at the \((n-1)\)-th iteration. It is important to remark that a reduced version of the iterative recipe above can indeed be written, by assigning the same pair of values \((\alpha ,\beta )\) to the nodes with the same pair of values (k, s): however, the larger heterogeneity of the strengths causes this event to happen more rarely than for purely binary models such as the UBCM and the DBCM.

As for the purely binary cases, three different sets of initial conditions have been considered, whose definition follows from the simplest conceivable generalization of the purely binary cases. In particular, the first set of values reads \(\alpha _i^{(0)}=-\ln \left[ \frac{k_i({\mathbf {A}}^*)}{\sqrt{2L}}\right]\), \(i=1\dots N\) and \(\beta _i^{(0)}=-\ln \left[ \frac{s_i({\mathbf {W}}^*)}{\sqrt{2W}}\right]\), \(i=1\dots N\); the second set is a variant of the first, reading \(\alpha _i^{(0)}=-\ln \left[ \frac{k_i({\mathbf {A}}^*)}{\sqrt{N}}\right]\), \(i=1\dots N\) and \(\beta _i^{(0)}=-\ln \left[ \frac{s_i({\mathbf {W}}^*)}{\sqrt{N}}\right]\), \(i=1\dots N\); the third recipe, instead, prescribes to randomly draw the value of each parameter from the uniform distribution defined on the unit interval, i.e. \(\alpha _i^{(0)}\sim U(0,1)\), \(\forall \,i\) and \(\beta _i^{(0)}\sim U(0,1)\), \(\forall \,i\).

Performance testing The performance of the three algorithms to solve the system of equations defining the UECM has been tested on a bunch of real-world networks. In particular, we have considered the WTW during the decade 1990–200041. Since the weights defining the configurations of the WTW are real numbers, we have rounded them to the nearest integer value, before running the UECM. Before commenting on the results of our numerical exercises, let us, first, describe how the latter ones have been carried out.

The accuracy of each algorithm in reproducing the constraints defining the UECM has been now quantified via the maximum relative error metrics, defined, in a perfectly general fashion, as \(\max _i\left\{ \frac{|C_i^*-\langle C_i\rangle |}{C_i}\right\} _{i=1}^N\) (where \(C_i^*\) is the empirical value of the i-th constraint, \(C_i\)). In the UECM case, we can define two variants of the aforementioned error, i.e.

(the acronyms standing for Maximum Relative Degree Error and Maximum Relative Strength Error). The reason driving this choice lies in the evidence that, in absolute terms, strengths are affected by a larger numerical error than degrees: this, however, doesn’t necessarily mean that a given algorithm performs poorly, as the magnitude of an error must be always compared with the numerical value of the quantity it refers to - whence the choice of considering relative scores.

The three different ‘stop criteria’ we have considered for each algorithm match the ones adopted for analysing the binary cases, consisting in a condition on the Euclidean norm of the gradient of the likelihood function, i.e. \(||\nabla {\mathscr {L}}(\vec {\theta })||_2\le 10^{-8}\), and in a condition on the Euclidean norm of the vector of differences between the values of the parameters at subsequent iterations, i.e. \(||\Delta \vec {\theta }||_2\le 10^{-8}\). The third condition concerns the maximum number of iterations: after 10000 steps, any of the three algorithms stops.

The results about the performance of our three algorithms are reported in Table 4. Overall, two out of three algorithms (i.e. Newton’s and the quasi-Newton methods) perform very satisfactorily, being accurate, fast and scalable; the third one (i.e. the fixed-point recipe), instead, performs very poorly. Moreover, while Newton’s method stops because the condition on the norm of the likelihood is satisfied, both the quasi-Newton and the fixed-point algorithms are always found to satisfy the limit condition on the number of steps (i.e. they run for 10000 steps and, then, stop).

For what concerns accuracy, the largest maximum error made by Newton’s method (across all configurations) amounts at \(10^{-10}\lesssim \text {MRDE}_\text {Newton}\lesssim 10^{-5}\) and \(\text {MRSE}_\text {Newton}\gtrsim 10^{-10}\); on the other hand, the largest maximum error made by the quasi-Newton method (across all configurations) amounts at \(10^{-5}\lesssim \text {MRDE}_\text {Quasi-Newton}\lesssim 10^{-1}\) and \(10^{-5}\le \text {MRSE}_\text {Quasi-Newton}\le 10^{-4}\). For what concerns speed, Newton’s method employs tenths of seconds to achieve convergence on each configuration while the quasi-Newton one always requires tens of seconds (specifically, almost thirty seconds for each considered configuration). The results above indicate that the fastest and two most accurate method is systematically Newton’s one, suggesting that the ‘complexity’ of the model is such that the information encoded into the Hessian matrix cannot be ignored without consequences on the quality of the solution. The fixed-point algorithm, instead, stops after seconds but is affected by errors whose order of systematically magnitude amounts at \(\text {MRDE}_\text {fixed-point}\simeq 10^2\) and \(1\lesssim \text {MRSE}_\text {fixed-point}\lesssim 10^2\).

We also explicitly notice that the MADE basically coincides with the MRDE for all considered configurations, meaning that the largest error, made by the algorithms considered here to solve the UECM, affects the nodes with the lowest degree (i.e. equal to one). On the other hand, strengths are affected by a larger absolute error (i.e. the MASE, defined as \(\text {MASE}=\max _i\{\left| s_i^*-\langle s_i \rangle \right| \}_{i=1}^N\)) than the degrees: if we calculate the MRSE, however, we realize that the largest errors affect very large strengths - hence being perfectly acceptable. For example, let us consider the WTW in 1993: the MASE amounts at 0.1 but, as the MRSE reveals, it affects a strength of the order of \(10^3\).

Lastly, differences in the speed of convergence of the two methods discussed in this section, caused by the choice of a particular set of initial conditions, are observable: the ‘uniform’ prescription outperforms the other ones.

Finally, let us comment on the algorithm to sample the UECM ensemble and that can be compactly achieved by implementing a two-step procedure. Let us look back at the formal expression for the pair-specific probability distribution characterizing the UECM: it induces coefficients reading

in turn suggesting that, for a specific pair of vertices i, j (with \(i<j\)), the appearance of the first link is ruled by a Bernoulli distribution with probability \(p_{ij}^\text {UECM}\) while the remaining \((w-1)\) ones can be drawn from a geometric distribution whose parameter reads \(e^{-\beta _i-\beta _j}\); in other words, the weight \((w-1)\) is drawn conditionally on the presence of a connection between the two considered nodes. The computational complexity of the sampling process is, again, \(O(N^2)\). The pseudo-code for explicitly sampling the DBCM ensemble is summed up by Algorithm 4. Notice that the way our sampling procedure is written requires the support of the geometric distribution to coincide with the positive integers.

DECM: weighted directed graphs with given strengths and degrees

Let us now extend the ‘mixed’ model introduced in the previous section to the case of directed networks. Constraints are, now, represented by four sequences of values, i.e. \(\{k_i^{out}\}_{i=1}^N\), \(\{k_i^{in}\}_{i=1}^N\), \(\{s_i^{out}\}_{i=1}^N\), \(\{s_i^{in}\}_{i=1}^N\) where the generic out-degree and in-degree are, respectively, defined as \(k_i^{out}({\mathbf {A}})=\sum _{j(\ne i)=1}^Na_{ij}\) and \(k_i^{in}({\mathbf {A}})=\sum _{j(\ne i)=1}^Na_{ji}\) and analogously for the generic out-strength and in-strength, reading \(s_i^{out}({\mathbf {W}})=\sum _{j(\ne i)=1}^Nw_{ij}\) and \(s_i^{in}({\mathbf {W}})=\sum _{j(\ne i)=1}^Nw_{ji}\). Consistency requires that \({\mathbf {A}}\equiv \Theta [{\mathbf {W}}]\) as for the UECM case. This model is known as Directed Enhanced Configuration Model (DECM) and its Hamiltonian reads

in turn, inducing the directed counterpart of the UECM distribution, i.e.

with

for any two nodes i and j such that \(i\ne j\) and \(p_{ij}^\text {DECM}=\frac{e^{-\alpha _i-\beta _j-\gamma _i-\delta _j}}{1-e^{-\gamma _i-\delta _j}+e^{-\alpha _i-\beta _j-\gamma _i-\delta _j}}\). As for the undirected case, weights are required to assume only (non-negative) integer values (i.e. \(w_{ij}\in [0,+\infty )\), \(\forall \,i\ne j\)): hence, the canonical ensemble is constituted by the weighted configurations with N nodes and a number of (directed) links ranging between zero and the maximum value \(N(N-1)\).

The argument of the problem (14) for the specific network \({\mathbf {W}}^*\) becomes

where \(z_{ij}=\left[ 1+e^{-\alpha _i-\beta _j}\left( \frac{e^{-\gamma _i-\delta _j}}{1-e^{-\gamma _i-\delta _j}}\right) \right]\), \(\,\forall \,i\ne j\) and whose first-order optimality conditions read

Resolution of the DECM. Newton’s and the quasi-Newton methods can be easily implemented via the recipe defined in Eq. (18) (see “Appendix A” for the definition of the DECM Hessian).

As for the UECM, the fixed-point recipe for solving the DECM first-order optimality conditions transforms the following set of consistency equations

(with \(i=1\dots N\)) into the usual iterative fashion, by considering the parameters at the left hand side and at the right hand side, respectively at the n-th and at the \((n-1)\)-th iteration. The reduced version of such a recipe would assign the same set of values \((\alpha ,\beta ,\gamma ,\delta )\) to the nodes for which the quantities \((k^{out},k^{in},s^{out},s^{in})\) have the same value: however, the larger heterogeneity of the strengths causes the DECM to be much less reducible than the purely binary models we have considered in the present contribution.

The three different sets of initial conditions that have been considered generalize the UECM ones: in particular, the first set of values reads \(\alpha _i^{(0)}=-\ln \left[ \frac{k_i^{out}({\mathbf {A}}^*)}{\sqrt{L}}\right]\), \(i=1\dots N\), \(\beta _i^{(0)}=-\ln \left[ \frac{k_i^{in}({\mathbf {A}}^*)}{\sqrt{L}}\right]\), \(i=1\dots N\), \(\gamma _i^{(0)}=-\ln \left[ \frac{s_i^{out}({\mathbf {W}}^*)}{\sqrt{W}}\right]\), \(i=1\dots N\) and \(\delta _i^{(0)}=-\ln \left[ \frac{s_i^{in}({\mathbf {W}}^*)}{\sqrt{W}}\right]\), \(i=1\dots N\); the second set of initial conditions can be obtained by simply replacing L with N; the third recipe, as usual, prescribes to randomly draw the value of each parameter from the uniform distribution defined on the unit interval.

Performance testing The performance of the three algorithms to solve the system of equations defining the DECM has been tested on a bunch of real-world networks. In particular, we have considered the Electronic Italian Interbank Market (e-MID) during the decade 2000–201042. Since e-MID weights are real numbers, we have rounded them to the nearest integer value, before running the DECM. Before commenting on the results of our numerical exercises, let us, first, describe how the latter ones have been carried out

The accuracy of each algorithm in reproducing the constraints defining the DECM has been quantified via the maximum relative error metrics, now reading

(the acronyms standing for for Maximum Relative Degree Error and Maximum Relative Strength Error) where we have defined \(k^{out}\equiv k\), \(k^{in}\equiv h\), \(s^{out}\equiv s\) and \(s^{in}\equiv t\) in order to simplify the formalism.

The three different ‘stop criteria’ we have adopted are the same ones we have considered for both the binary and the undirected, ‘mixed’ model, i.e. the condition on the Euclidean norm of the gradient of the likelihood function, i.e. \(||\nabla {\mathscr {L}}(\vec {\theta })||_2\le 10^{-8}\)), the condition on the Euclidean norm of the vector of differences between the values of the parameters at subsequent iterations (i.e. \(||\Delta \vec {\theta }||_2\le 10^{-8}\)) and the condition on the maximum number of iterations (i.e. after 10000 steps, any of the three algorithms stops).

The results about the performance of our three algorithms are reported in Table 5. Overall, Newton’s method performs very satisfactorily, being accurate, fast and scalable; the quasi-Newton method is accurate as well although (in some cases, much) slower. The fixed-point recipe, instead, performs very poorly, as for the undirected case. Moreover, while Newton’s method stops because the condition on the norm of the likelihood is satisfied, both the quasi-Newton and the fixed-point algorithms are always found to satisfy the limit condition on the number of steps (i.e. they run for 10,000 steps and, then, stop).

For what concerns accuracy, the largest maximum error made by Newton’s method (across all configurations) amounts at \(10^{-14}\lesssim \text {MRDE}_\text {Newton}\lesssim 10^{-7}\) and \(10^{-12}\lesssim \text {MRSE}_\text {Newton}\lesssim 10^{-5}\); on the other hand, the largest maximum error made by the quasi-Newton method (across all configurations) amounts at \(10^{-8}\lesssim \text {MRDE}_\text {Quasi-Newton}\lesssim 10^{-7}\) and \(10^{-4}\lesssim \text {MRSE}_\text {Quasi-Newton}\lesssim 10^{-3}\). For what concerns speed, Newton’s method employs tens of seconds to achieve convergence on each configuration; the time required by the quasi-Newton method is of the same order of magnitude, although it is systematically larger than the time required by Newton’s one. Overall, these results indicate that the fastest and two most accurate method is Newton’s one. As in the undirected case, the fixed-point algorithm, instead, stops after seconds but is affected by errors whose order of systematically magnitude amounts at \(10\lesssim \text {MRDE}_\text {fixed-point}\lesssim 10^2\) and \(1\lesssim \text {MRSE}_\text {fixed-point}\lesssim 10^2\).

As for the UECM, the MADE basically coincides with the MRDE, for all considered configurations, while strengths are affected by a larger absolute error than the degrees: still, upon calculating the MRSE, we realize that the largest errors affect very large strengths - hence being perfectly acceptable.

Lastly, differences in the speed of convergence of the two methods discussed in this section, caused by the choice of a particular set of initial conditions, are observable: the ‘uniform’ prescription outperforms the other ones.

Finally, let us comment on the algorithm to sample the DECM ensemble: as for the UECM, it can be compactly achieved by implementing the directed counterpart of the two-step procedure described above. Given a specific pair of vertices i, j (with \(i\ne j\)), the first link can be drawn by sampling a Bernoulli distribution with probability \(p^\text {DECM}_{ij}\) while the remaining \((w-1)\) ones can be drawn from a geometric distribution whose parameter reads \(e^{-\gamma _i-\delta _j}\). The computational complexity of the sampling process is, again, \(O(N^2)\) and the pseudo-code for explicitly sampling the DECM ensemble is summed up by Algorithm 5. Notice that the way our sampling procedure is written requires the support of the geometric distribution to coincide with the positive integers.

Two-step models for undirected and directed networks

The need of considering network models defined in a two-step fashion arises from a number of considerations. First, the amount of information concerning binary and weighted quantities is often asymmetric: as it has been pointed out in43, information concerning a given network structure ranges from the knowledge of just a single, aggregated piece of information (e.g. the link density) to that of entire subgraphs. Indeed, models exist that take as input any binary, either probabilistic or deterministic, network model (i.e. any \(P({\mathbf {A}})\)) while placing link weights optimally, conditionally on the input configurations19,43.

Second, recipes like the UECM and the DECM are, generally speaking, difficult to solve; as we have already observed, only Newton’s method performs in a satisfactory way, both for what concerns accuracy and speed: hence, easier-to-solve recipes are welcome.

In what follows, we will consider the conditional reconstruction method (hereby, CReM) induced by the Hamiltonian

in case of undirected networks, it induces a conditional probability distribution reading

where, for consistency, \(q_{ij}(w_{ij}=0|a_{ij}=0)=1\) and \(q_{ij}(w_{ij}=0|a_{ij}=1)=0\). The meaning of these relationships is the following: given any two nodes i and j, the absence of a link, i.e. \(a_{ij}=0\), admits the only possibility \(w_{ij}=0\); on the other hand, the presence of a link, i.e. \(a_{ij}=1\), rules out the possibility that a null weight among the same vertices is observed.

In general, the functional form of \(q_{ij}(w_{ij}|a_{ij}=1)\) depends on the domain of the weights. In all cases considered in19,43, weights are assumed to be continuous; since the continuous distribution that maximizes Shannon entropy while constrained to reproduce first-order moments is the exponential one, the following functional form

(for any undirected pair of nodes) remains naturally induced. As shown in43, the problem (14) has to be slightly generalized; still, its argument for the specific network \({\mathbf {W}}^*\) becomes

where the quantity \(f_{ij}=\sum _{\mathbf {A}}P({\mathbf {A}})a_{ij}\) represents the expected value of \(a_{ij}\) over the ensemble of binary configurations defining the binary model taken as input (i.e. the marginal probability of an edge existing between nodes i and j). It follows that the CReM first-order optimality conditions read

Resolution of the CReM Newton’s and the quasi-Newton method can still be implemented via the recipe defined in Eq. (18) (see “Appendix A” for the definition of the CReM Hessian).

As for the UECM and the DECM, the fixed-point recipe for solving the system of equations embodying the CReM transforms the set of consistency equations

into an iterative recipe of the usual form, i.e. by considering the parameters at the left hand side and at the right hand side, respectively at the n-th and at the \((n-1)\)-th iteration. Although a reduced recipe can, in principle, be defined, an analogous observation to the one concerning the UECM and the DECM holds: the mathematical nature of the strengths (now, real numbers) increases their heterogeneity, in turn causing the CReM algorithm to be reducible even less than the ‘mixed’ models defined by discrete weights.

The initialization of the iterative recipe for solving the CReM has been implemented in the usual threefold way. The first set of initial values reads \(\theta _i^{(0)}=-\ln \left[ \frac{s_i({\mathbf {W}}^*)}{\sqrt{2W}}\right]\), \(i=1\dots N\); the second one is a variant of the position above, reading \(\theta _i^{(0)}=-\ln \left[ \frac{s_i({\mathbf {W}}^*)}{\sqrt{N}}\right]\); the third one, instead, prescribes to randomly draw the value of each parameter from the uniform distribution defined on the unit interval, i.e. \(\theta _i^{(0)}\sim U(0,1)\), \(\forall \,i\).

When considering directed networks, the conditional probability distribution defining the CReM reads

for any two nodes i and j such that \(i\ne j\); the set of Eq. (73) can be generalized as follows

and analogously for the sets of values initializing them.

Rescaling the CReM algorithm. Although the equations defining the CReM algorithm cannot be effectively reduced, they can be opportunely rescaled. To this aim, let us consider directed configurations and the system

where the sufficient statistics has been divided by an opportunely defined factor (in this case, \(\kappa\)) and the symbols \(\alpha _i(\kappa )\), \(\alpha _j(\kappa )\), \(\beta _i(\kappa )\) and \(\beta _j(\kappa )\) stress that the solution we are searching for is a function of the parameter \(\kappa\) itself. In fact, a solution of the system above reads

as it can be proven upon substituting it back into Eq. (76) and noticing that \(\{\alpha ^*_i\}_{i=1}^N\) and \(\{\beta ^*_i\}_{i=1}^N\) are solutions of the system of equations defined in (76). As our likelihood maximization problem admits a unique, global maximum, the prescription above allows us to easily identify it. Rescaling will be tested in order to find out if our algorithms are enhanced by it under some respect (e.g. accuracy or speed).

Performance testing Before commenting on the performance of the three algorithms in solving the system of equations defining the CReM, let us stress once more that the formulas presented so far are perfectly general, working for any binary recipe one may want to employ. In what follows, we will test the CReM by posing \(f_{ij}\equiv p_{ij}^\text {UBCM}\) and \(f_{ij}\equiv p_{ij}^\text {DBCM}\).

To test the effectiveness of our algorithms in solving the CReM on undirected networks we have considered the synaptic network of the worm C. Elegans28 and the eight daily snapshots of the Bitcoin Lightning Network32; the directed version of the CReM has, instead, been solved on the Electronic Italian Interbank Market (e-MID) during the decade 2000-201042. Before commenting on the results of our numerical exercises, let us, first, describe how the latter ones have been carried out.

As for the discrete ‘mixed’ models, the accuracy of each algorithm in reproducing the constraints defining the CReM has been quantified via the Maximum Relative Degree Error and the Maximum Relative Strength Error metrics, whose definition is provided by Eqs. (57), (58) and (66), (67) for the undirected and the directed case, respectively. Analogously, the three ‘stop criteria’ for each algorithm are the same ones that we have adopted for the other models (and consist in a condition on the Euclidean norm of the gradient of the likelihood function, i.e. \(||\nabla {\mathscr {L}}(\vec {\theta })||_2\le 10^{-8}\), a condition on the Euclidean norm of the vector of differences between the values of the parameters at subsequent iterations, i.e. \(||\Delta \vec {\theta }||_2\le 10^{-8}\), and a condition on the maximum number of iterations, i.e. 10,000 steps).

The results about the performance of our three algorithms are reported in Tables 6 and 7. Let us start by commenting the results reported in Table 6 and concerning undirected networks. Generally speaking, Newton’s method is the most accurate one (its largest maximum errors span intervals, across all configurations, that amount at \(10^{-11}\lesssim \text {MRDE}_\text {Newton}\lesssim 10^{-8}\) and \(10^{-5}\lesssim \text {MRSE}_\text {Newton}\lesssim 10^{-4}\)) although it scales very badly with the size of the network on which it is tested (the amount of time, measured in seconds, required by it to achieve convergence spans an interval, across all configurations, that amounts at \(0.08\le T_\text {Newton}^\text {reduced}\le 1188)\).

The quasi-Newton method, on the other hand, is very accurate on the degrees (as already observed in the UBCM case) but not so accurate in reproducing the weighted constraints (its largest maximum errors span intervals, across all configurations, that amount at \(\text {MRDE}_\text {Quasi-Newton}\simeq 10^{-7}\) and \(10^{-6}\lesssim \text {MRSE}_\text {Quasi-Newton}\lesssim 6\)). Moreover, it scales even worse than Newton’s method with the size of the network on which it is tested (the amount of time, measured in seconds, required by it to achieve convergence spans an interval, across all configurations, that amounts at \(5\le T_\text {Quasi-Newton}\le 15888\)).

The performance of the fixed-point recipe is, somehow, intermediate between that of Newton’s and the quasi-Newton method. For what concerns accuracy, it is more accurate in reproducing the binary constraints than in reproducing the weighted ones (its largest maximum errors span intervals, across all configurations, that amount at \(\text {MRDE}_\text {fixed-point}\simeq 10^{-9}\) and \(10^{-8}\lesssim \text {MRSE}_\text {fixed-point}\lesssim 10^{-1}\)) although it outperforms Newton’s method, sometimes. For what concerns scalability, the fixed-point method is the less sensitive one to the growing size of the considered configurations: hence, it is also the fastest one (the amount of time, measured in seconds, required by it to achieve convergence spans an interval, across all configurations, that amounts at \(0.01\le T_\text {fixed-point}\le 550\)).

Moreover, while Newton’s and the fixed-point method stop because the condition on the norm of the likelihood is satisfied, the quasi-Newton method is often found to satisfy the limit condition on the number of steps (i.e. it runs for 10000 steps and, then, stops).

Interestingly, the fact that the CReM cannot be reduced (at least not to a comparable extent with the one characterizing purely binary models) reveals a dependence on the network size of Newton’s and the quasi-Newton algorithms. The reason may lie in the evidence that both Newton’s and the quasi-Newton method require (some proxy of) the Hessian matrix of the system of equations defining the CReM to update the value of the parameters: as already observed, the order of the latter - which is \(O(N^2)\) for Newton’s method and O(N) for the quasi-Newton one - can make its calculation (very) time demanding.

Let us now move to comment on the performance of our algorithms when applied to solve the directed version of the CReM (see Table 7). Overall, all methods perform much better than in the undirected case, stopping because the condition on the norm of the likelihood is satisfied.

In fact, all of them are very accurate in reproducing the purely binary constraints, their largest maximum errors spanning intervals, across all configurations, that amount at \(10^{-12}\lesssim \text {MRDE}_\text {Newton}\lesssim 10^{-6}\), \(10^{-14}\lesssim \text {MRDE}_\text {Quasi-Newton}\lesssim 10^{-6}\) and \(10^{-15}\lesssim \text {MRDE}_\text {fixed-point}\lesssim 10^{-8}\); for what concerns the weighted constraints, instead, the two most accurate methods are Newton’s and the quasi-Newton one, their largest maximum errors spanning intervals, across all configurations, that amount at \(10^{-13}\lesssim \text {MRSE}_\text {Newton}\lesssim 10^{-7}\) and \(10^{-6}\lesssim \text {MRSE}_\text {Quasi-Newton}\lesssim 10^{-3}\) (the fixed-point method performs worse than them, since \(10^{-3}\lesssim \text {MRSE}_\text {fixed-point}\lesssim 10^{-1}\)).

For what concerns speed, the amount of time, measured in seconds, required by Newton’s, the quasi-Newton and the fixed-point algorithms to achieve convergence spans an interval, across all configurations, that amounts at \(0.6\le T_\text {Newton}^\text {reduced}\le 1\), \(0.5\le T_\text {Quasi-Newton}^\text {reduced}\le 1.2\) and \(0.05\le T_\text {fixed-point}^\text {reduced}\le 0.2\), respectively. Hence, all methods are also very fast - the fixed-point being systematically the fastest one.

As already stressed above, the fact that the e-MID number of nodes remains approximately constant throughout the considered time interval masks the strong dependence of the performance of Newton’s and the quasi-Newton method on the network size.

Lastly, while rescaling the system of equations defining the CReM improves neither the accuracy nor the speed of any of the three algorithms considered here, differences in their speed of convergence, caused by the choice of a particular set of initial conditions, are observable: the ‘uniform’ prescription outperforms the other ones (for both the undirected and the directed version of the CReM).

As usual, let us comment on the algorithm to sample the CReM ensemble - for the sake of simplicity, on the undirected cae. As for the UECM it can be compactly achieved by implementing a two-step procedure, the only difference lying in the functional form of the distribution from which weights are sampled. Given a specific pair of vertices i, j (with \(i<j\)), the first link can be drawn from a Bernoulli distribution with probability \(p^\text {UBCM}_{ij}\) while the remaining \((w-1)\) ones can be drawn from an exponential distribution whose parameter reads \(\theta _i+\theta _j\). The computational complexity of the sampling process is, again, \(O(N^2)\) and the pseudo-code for explicitly sampling the CReM ensemble is summed up by Algorithm 6.

Discussion

The exercises carried out so far have highlighted a number of (stylized) facts concerning the performance of the three algorithms tested: in what follows, we will briefly sum them up.

Newton’s method Overall, Newton’s method is very accurate - often, the most accurate one - in reproducing both the binary and the weighted constraints; moreover, it represent the only viable alternative when the most complicated models are considered (i.e. the UECM and the DECM, respectively defined by a system of 2N and 4N coupled, non-linear equations). However, the time required to run Newton’s method on a given model seems to be quite dependent on the network size, especially whenever the corresponding system of equations cannot be reduced - see the case of the undirected CReM, run on the Bitcoin Lightning Network. Since one of the reasons affecting the bad scaling of Newton’s method with the network size is the evaluation of the Hessian matrix defining a given model, this algorithm has to be preferred for largely reducible networks.

Quasi-Newton method For all the networks considered here, the quasi-Newton method we have implemented is nothing else than the diagonal version of the traditional Newton’s method. Even if this choice greatly reduces the number of entries of the Hessian matrix which are needed (i.e. just N elements for the undirected version of the CReM, 2N elements for the UECM and the directed version of the CReM and 4N elements for the DECM) dimensionality may still represent an issue to achieve fast convergence. Moreover, since the diagonal approximation of the Hessian matrix is not necessarily always a good one, the quasi-Newton method may require more time than Newton’s one to achieve the same level of accuracy in reproducing the constraints. However, when such an approximation is a good one, the ‘regime’ in which the quasi-Newton method outperforms the competitors seems to be the one of small, non-reducible networks (e.g. see the results concerning the DBCM run on the WTW) - althogh, in cases like these, Newton’s method may still be a strong competitor.

Fixed-point method From a purely theoretical point of view, the fixed-point recipe is the fastest one, since the time required to evaluate the generic n-th step is (only) due to the evaluation of the model-specific map at the \((n-1)\)-th iteration. Strictly speaking, however, this holds true for a single step: if the number of steps required for convergence is large, in fact, the total amount of time required by the fixed-point method can be large as well. Overall, however, this algorithm has to be preferred for large, non-reducible networks: this is the case of the (undirected version of the) CReM, run on the 8-th snapshot of the Bitcoin Lightning Network (i.e. day 17-07-19) and requiring a little more than one minute to achieve an accuracy of \(\text {MRDE}_\text {fixed-point}\gtrsim 10^{-10}\) and \(\text {MRSE}_\text {fixed-point}\simeq 10^{-1}\); naturally, the method is not as accurate as Newton’s one, for which \(\text {MRDE}_\text {Newton}\gtrsim 10^{-12}\) and \(\text {MRSE}_\text {Newton}\simeq 10^{-6}\) but is much faster as Newton’s algorithm requires \(\simeq 1188\) seconds to converge.

The ‘NEMTROPY’ Python package As an additional result, we release a comprehensive package, coded in Python, that implements the three aforementioned algorithms on all the ERGMs considered in the present work. Its name is ‘NEMTROPY’, an acronym standing for ‘Network Entropy Maximization: a Toolbox Running On Python’, and is freely downloadable at the following URL: https://pypi.org/project/NEMtropy/.

Alternative techniques to improve accuracy and speed have been tested as well, as the one of coupling two of the algorithms considered above. In particular, we have tried to solve the (undirected version of the) CReM by running the fixed-point algorithm and using the solution of the latter as input for the quasi-Newton method. The results are reported in Table 8: as they clearly show, the coupled algorithm is indeed more accurate that the single methods composing it and much faster than the quasi-Newton one (for some snapshots, more accurate and even faster than Newton’s method). Techniques like these are, in general, useful to individuate better initial conditions than the completely random ones: a first run of the fastest method may be, in fact, useful to direct the most accurate algorithm towards the (best) solution. This is indeed the case, upon considering that the quasi-Newton method, now, stops because the condition \(||\nabla {\mathscr {L}}(\vec {\theta })||_2\le 10^{-8}\) is satisfied - and not for having reached the limit of 10000 steps.