Abstract

Given the capacity of Optical Coherence Tomography (OCT) imaging to display structural changes in a wide variety of eye diseases and neurological disorders, the need for OCT image segmentation and the corresponding data interpretation is now felt more than ever. In this paper, we address this need by designing a semi-automatic software program for reliable segmentation of 8 different macular layers as well as for outlining retinal pathologies such as diabetic macular edema. The software accommodates a novel graph-based semi-automatic method, called “Livelayer”, which is designed for straightforward segmentation of retinal layers and fluids. This method is chiefly based on Dijkstra’s Shortest Path First (SPF) algorithm and the Live-wire function, together with some preprocessing operations on the to-be-segmented images. The software is suitable for obtaining detailed segmentation of layers, exact localization of clear or unclear fluid objects and the ground truth, demanding far less effort than a common manual segmentation method. It is also valuable as a tool for calculating the irregularity index in deformed OCT images. The time Livelayer required for segmentation of the Inner Limiting Membrane (ILM), Inner Plexiform Layer–Inner Nuclear Layer (IPL–INL) and Outer Plexiform Layer–Outer Nuclear Layer (OPL–ONL) was much less than that for manual segmentation: 5 s for the ILM (minimum) and 15.57 s for the OPL–ONL (maximum). The unsigned errors (pixels) between the semi-automatically labeled and gold standard data were on average 2.7, 1.9 and 2.1 for ILM, IPL–INL and OPL–ONL, respectively. The Bland–Altman plots indicated good concordance between the Livelayer and the manual algorithm and that they could be used interchangeably. The repeatability error was around one pixel for the OPL–ONL and < 1 pixel for the other two.
The unsigned errors between the Livelayer and the manual algorithm were 1.33 for ILM and 1.53 for Nerve Fiber Layer–Ganglion Cell Layer (NFL–GCL) in peripapillary B-Scans. The Dice scores for comparing the two algorithms and for obtaining the repeatability on segmentation of fluid objects were at acceptable levels.

Introduction

Optical Coherence Tomography (OCT) is a non-invasive, relatively inexpensive imaging technique that is based on low-coherence interferometry and captures high-resolution multi-dimensional images of biological tissue, especially the retina1. Macular OCT images are widely used to assist ophthalmologists in diagnosing ocular deformities such as Diabetic Macular Edema (DME), Age-Related Macular Degeneration, glaucoma and retinal vascular accidents2,3. In addition, their applications in the diagnosis and effective treatment of neurodegenerative diseases like Multiple Sclerosis and Neuromyelitis Optica have attracted the attention of neurologists4.

Ocular OCT images provide cross-sectional data from intra-retinal layers, which are distinguishable by their contrasting intensities. These layers typically lose their standard features (like texture, thickness and location) with the occurrence of different diseases, and quantifying their structural change provides instructive information about the type and severity of the disease and the treatment procedure it requires5. Furthermore, macular OCT is the standard test for detection of intra-retinal (IRF) and sub-retinal (SRF) fluid. It is also an essential modality for evaluating the subsequent resolution of accumulated fluids as a response to treatment6.

OCT data may be acquired in different anatomical locations in the eye. In the macular OCTs, a set of 2-dimensional cross-sectional B-Scans are acquired and stacked to generate a 3-dimensional macular cube. Besides, a 2-dimensional circumpapillary-retinal nerve fiber layer (cp-RNFL) data may be taken from the area surrounding the bundle of nerve fibers at the back of the eye, called optic nerve head (ONH)2.

Macular OCT images carry a great deal of data which needs to be quantified to provide interpretable values. The main issue in this regard is segmentation of retinal layers and localization of abnormalities like intra-retinal or sub-retinal fluid accumulations. For segmentation of intra-retinal layers and fluids in each B-Scan, assorted manual, semi-automatic and full-automatic approaches have been suggested7,8,9,10,11. The manual segmentation method is both time-consuming and exposed to probable observer errors and therefore, automatic and semi-automatic algorithms have been introduced to solve these problems. Full-automatic methods for segmentation of retinal OCT images are prone to unavoidable errors in the presence of less predictable matters such as noisy, low-quality images and in B-Scans of patients with marked macular or retinal nerve fiber layer changes12,13,14,15. Accordingly, a semi-automatic approach is considered as an in-between method to overcome the disadvantages of manual and automatic methods and to take advantage of an expert’s knowledge of a correct delineation. Table 1 summarizes a list of previous works on semi-automatic OCT segmentation. However, available semi-automatic algorithms suffer from noticeable constraints because they mostly support only one data format, are unable to suggest alternative segmentation methods to the users, lack an effective implementation of a detailed process on the input data like denoising or filtering and finally, are not integrated in an open-source software environment to serve facilitated segmentation of OCT images.

The purpose of our study is to design a piece of software to provide semi-automatic segmentation of layers and fluids in macular OCTs. Our proposed software considerably resolves the above-mentioned difficulties that other similar algorithms have faced. By performing an accurate and user-friendly segmentation, it helps clinicians to evaluate structural changes, especially at inner macular and cp-RNFL, with more reliable data. It is also practical in supplying the gold standard data set needed for design and evaluation of full-automatic methods21,22.

To validate this software, we tested its performance on macular OCT B-Scans of eyes from healthy controls as well as from patients diagnosed with DME by calculating the agreement (to assess inter-rater variability and to decide whether the proposed technique could substitute for manual segmentation) and the repeatability of the proposed method23. Moreover, to demonstrate one important clinical application of the proposed method, we designed a dedicated section for computing the irregularity index in deformed OCT images, which could be used to assess irregularities frequently seen in many layered ocular structures (e.g. retina, iris, cornea) under pathologic circumstances such as DME, Age-Related Macular Degeneration, Epiretinal Membrane (ERM)24, Vitreoretinal Interface abnormalities, Fuchs uveitis in Anterior Segment OCT images25, and corneal dystrophies. Because manual algorithms yield erroneous and unreliable segmentation of the retinal layers in macular OCT images, they can face major problems in gauging this parameter, and this is another situation where semi-automatic and full-automatic segmentation algorithms are deemed extremely helpful.

In what follows, we explain about our main method and the overall structure of our designed software. Two disparate datasets (normal and DME) are introduced and the outcomes produced by applying our algorithm over the layers and fluid objects of these datasets are investigated by assessing the agreement and repeatability of the proposed method. Subsequently, the tables indicating validation results are provided in detail and an in-depth discussion of this research’s outcomes, performance and efficiency in comparison to other heretofore suggested methods is summed up in the closing section.

Materials and methods

Study population

To demonstrate the clinical performance of the proposed method on the retinal layers of a normal dataset and to calculate the agreement and repeatability of our algorithm’s layer segmentation section, 50 macular OCT B-Scans from 16 normal eyes were selected in this study. To assess the clinical performance of this method on the fluid objects appearing in abnormal datasets, we enrolled 20 eyes from 19 patients with the diagnosis of diabetic macular edema (DME)26. All patients had a clinical and OCT-based diagnosis of DME. OCT examinations were performed using Spectralis Enhanced Depth Imaging-OCT and Spectralis Spectral Domain-OCT for the normal and DME participants, respectively. Both datasets were gathered using Heidelberg Eye Explorer (HEYEX) version 5.1 (Heidelberg Engineering, Heidelberg, Germany) by a trained technician with the automatic real-time (ART) function for image averaging and an activated eye tracker in a room with normal light. For macular volumes, 61 horizontal B-Scans (each with 512 A-Scans, with ART of 9 frames, axial resolution of 3.8 µm) with a scanning area of 6 mm \(\times\) 6 mm centered on the fovea were taken. The normal population’s age ranged between 21 and 46 (Mean (± SD) = 30.81 (± 7.04)) and 62.5 per cent of them were female. The DME group’s age ranged between 57 and 84 (Mean (± SD) = 68.11 (± 8.58)) and 74 per cent of them were female. Protocols of the current investigation were approved by the Ethics Committee at Isfahan University of Medical Sciences (IR.MUI.RESEARCH.REC.1398.155). This study was carried out in accordance with the tenets of the Declaration of Helsinki and informed consent was obtained from all the patients.

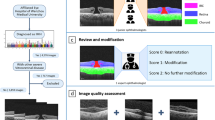

An overview of the proposed software

Two primary goals of this study are elaborated in the next sections. We first give an overview of the proposed software and the embedded semi-automatic segmentation algorithm and then describe the agreement and repeatability of the segmentation process using the supporting dataset of OCTs from healthy controls and DME patients. Our proposed software consists of five independent tabs, each responsible for a specific function. First, in the “File” tab, the user can open the desired data format (i.e. .mat, .vol or .octbin), which is then converted to a .mat file in order to be compatible with this software and any other MATLAB27 code. The next three tabs are designed for layer and fluid segmentation of macular B-Scans. The “Manual Layer Segmentation” tab provides a complete manual segmentation of retinal layers using the interactive freehand tool over each B-Scan, and the resulting curves can be used mainly for construction of gold standards. The “Auto Layer Segmentation” tab is responsible for the software’s main algorithm, a graph-based semi-automatic segmentation termed “Livelayer”. Two other options embedded in this tab are a layer correction procedure used for correcting faulty boundaries, and a grid-based segmentation method which works by dividing the whole B-Scan with an arbitrary number of vertical lines and asking the user to click on the point of each line where the intended boundary is located. This section then reconstructs the boundary from the acquired points, which are very few in most cases. Identification and localization of fluids is the duty of the “Fluid Segmentation” tab, which adopts both manual (interactive freehand object) and semi-automatic (Livelayer) techniques. The last tab, named “Peripapillary”, is created for analysis of circumpapillary scans.
Our suggested method removes the curvature, localizes the vessel shadows, eliminates the shadows, and finally provides Livelayer semi-automatic segmentation of the peripapillary boundaries. The extracted information from above-mentioned tabs is finally saved in a MATLAB “.mat” file to be readable in next applications. Figure 1 represents our proposed software’s main tabs. A brief illustration of conducted operations in our software for macular and peripapillary images from accepting the input file with a .mat, .vol or .octbin format to outputting both the segmented B-Scan and the complementary information with respect to it is shown in Fig. 2.
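
The grid-based option described above can be illustrated with a short sketch. The reconstruction rule used by the software is not specified here, so the piecewise-linear interpolation below is an assumption, and `interpolate_boundary` is a hypothetical name chosen for illustration.

```python
def interpolate_boundary(clicks, width):
    """Return one boundary row per column from a few (column, row) clicks.

    Assumption: points between clicks are joined by straight lines and the
    boundary is held constant outside the first and last click.
    """
    pts = sorted(clicks)
    boundary = []
    for x in range(width):
        if x <= pts[0][0]:
            boundary.append(float(pts[0][1]))
        elif x >= pts[-1][0]:
            boundary.append(float(pts[-1][1]))
        else:
            # Find the pair of clicks that brackets this column.
            for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
                if x0 <= x <= x1:
                    t = (x - x0) / (x1 - x0)
                    boundary.append(y0 + t * (y1 - y0))
                    break
    return boundary


# Three clicks on vertical grid lines at columns 0, 4 and 8.
rows = interpolate_boundary([(0, 10), (4, 14), (8, 10)], width=9)
```

A smoother interpolant (for example a spline) could be substituted without changing the calling code.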

Livelayer software’s scheme (a) Macular layer and fluid segmentation sections—after loading a proper data set, the software’s pre-processing block finds an appropriate background image on which the Livelayer could be applied and then, outputs the segmented image. (b) Peripapillary layer segmentation section—after loading a proper peripapillary data, the software aligns the B-Scan and then, tries to omit its blood vessels as much as possible. An appropriate background image for applying the Livelayer is detected and the corresponding segmented B-Scan is outputted.

The devised Livelayer algorithm

Our suggested algorithm is a graph-based semi-automatic method, called “Livelayer”, designed for straightforward segmentation of retinal layers and fluids. This method is chiefly based on Dijkstra’s Shortest Path First (SPF) algorithm28 and the Live-wire function29, together with some preprocessing operations on the to-be-segmented images. Dijkstra’s algorithm and its interactive variant, the live-wire, find the shortest path between a pair of nodes (pixels) in a graph that is constructed from the original image. The conventional Live-wire applied to the original B-Scan cannot follow the OCT boundaries because of their weak and vague appearance, so it is essential to isolate and strengthen each individual boundary before feeding it into the live-wire algorithm. The proposed Livelayer is built on the original live-wire and is applied to different processed versions of the original B-Scan in which the desired boundary is sharpened. We obtain these sharpened boundaries by utilizing diverse methods of edge detection and morphological operations30,31,32,33,34. Livelayer can also be applied to B-Scans containing fluid objects and to circumpapillary scans. To align the boundaries in the peripapillary section, we first took ONL–IS/OS as our reference boundary and produced a shift vector by subtracting each Y-coordinate of the reference boundary from its maximum value. We then circularly shifted each column of the image by the corresponding entry of this shift vector, whose length equals the image width. To detect blood vessels, the mean value of each column of the flattened image from IPL–INL to the bottom is computed. Local minima of these mean values mark the vessels’ locations, since vessel shadows appear dark in ocular images. Following the vessel detection process, the right (left) half of each vessel is replaced with its adjacent right (left) columns and the blood vessels are eliminated.
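
The alignment and vessel-detection steps just described can be sketched as follows. This is a minimal illustration on nested lists, not the software’s code: the function names are hypothetical, dark vessel shadows are assumed to show up as strict local minima of the column means, and no smoothing or thresholding is applied, although real data would likely need both.

```python
def flatten(image, ref):
    """Circularly shift each column so the reference boundary becomes flat.

    image: list of rows (each a list of intensities); ref: boundary row per column.
    """
    h, w = len(image), len(image[0])
    top = max(ref)
    shifts = [top - r for r in ref]  # shift vector, one entry per column
    flat = [[0] * w for _ in range(h)]
    for x in range(w):
        for y in range(h):
            flat[(y + shifts[x]) % h][x] = image[y][x]
    return flat, shifts


def vessel_columns(flat, row_start):
    """Columns whose mean intensity below row_start is a strict local minimum."""
    rows = flat[row_start:]
    w = len(flat[0])
    means = [sum(r[x] for r in rows) / len(rows) for x in range(w)]
    return [x for x in range(1, w - 1)
            if means[x] < means[x - 1] and means[x] < means[x + 1]]


# Toy 4 x 3 image whose reference boundary (value 9) sits at rows 1, 2, 1.
image = [[0, 0, 0], [9, 0, 9], [0, 9, 0], [0, 0, 0]]
flat, shifts = flatten(image, ref=[1, 2, 1])
```

After flattening, the reference boundary lies on a single row (row 2 in the toy image), which is what makes a per-column mean over a fixed row range meaningful.
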
Figure 3 goes into details about the pre-processing block exploited in the Livelayer. As can be seen, depending on each boundary’s brightness and location in the B-Scan, an apt background image for applying the Livelayer is designed using different image processing techniques. Additionally, the background image for fluid segmentation is acquired through implementation of an edge detection function followed by a morphological operation.
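
The shortest-path step underlying a live-wire style tool can be sketched with Dijkstra’s algorithm on a pixel grid. The code below is an illustration under stated assumptions rather than the Livelayer implementation: `shortest_path` is a hypothetical name, pixels are 8-connected, and `cost` stands in for a pre-processed background image in which the desired boundary has low, non-negative values.

```python
import heapq


def shortest_path(cost, start, goal):
    """Dijkstra on an 8-connected pixel grid; entering a pixel costs cost[y][x]."""
    h, w = len(cost), len(cost[0])
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, (y, x) = heapq.heappop(heap)
        if (y, x) == goal:
            break
        if d > dist[(y, x)]:
            continue  # stale queue entry
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if (dy or dx) and 0 <= ny < h and 0 <= nx < w:
                    nd = d + cost[ny][nx]
                    if nd < dist.get((ny, nx), float("inf")):
                        dist[(ny, nx)] = nd
                        prev[(ny, nx)] = (y, x)
                        heapq.heappush(heap, (nd, (ny, nx)))
    # Walk back from the goal to recover the path.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]


# Low-cost pixels (1) trace a boundary between two user clicks.
cost = [[9, 9, 9, 9],
        [1, 9, 9, 1],
        [9, 1, 1, 9]]
path = shortest_path(cost, start=(1, 0), goal=(1, 3))
```

In an interactive setting, each user click would become the `start` of the next segment, so the number of clicks depends on how well the cost image isolates the boundary.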

Validation of the proposed software

Our dataset in this study generally consists of the normal OCT B-Scans acquired from healthy controls and abnormal OCT B-Scans that are related to DME patients. The former group of data is used for validation of our suggested method’s layer segmentation section and the latter is for the algorithm’s fluid segmentation part. In the following paragraphs, we go into concise explanations for the procedure adopted to assess our algorithm’s performance and how it can be utilized as a useful tool for clinical purposes.

Layer segmentation section

The retinal boundaries in macular OCT scans that our software is able to segment include: inner limiting membrane (ILM), boundary between retinal nerve fiber layer (RNFL) and ganglion cell layer (GCL), boundary between GCL and inner plexiform layer (IPL), boundary between IPL and inner nuclear layer (INL), boundary between INL and outer plexiform layer (OPL), boundary between OPL and outer nuclear layer (ONL), boundary between ONL and photoreceptor layer, boundary between photoreceptor layer and retinal pigmented epithelium (RPE) and finally, outer level of RPE. The peripapillary section could segment all these boundaries except for the GCL–IPL.

To validate our suggested method, two ophthalmologists and an engineer familiar with OCT images delineated the three most clinically important retinal boundaries (ILM, IPL–INL, OPL–ONL) on the healthy controls’ dataset using two distinct methods. In the first stage, we trained these three independent individuals to segment the intended retinal boundaries grid-manually, which is considered a less capable segmentation procedure than the Livelayer algorithm. Following that, the three graders were also asked to segment the same B-Scans with the Livelayer. The main manual method in this software, which was created using the freehand function, was intolerably time-consuming and susceptible to noticeable errors, which is why it was replaced with our grid-based algorithm.

To properly quantify the agreement and repeatability of the proposed semi-automatic method, we first need to define a basis for comparison, namely the unsigned boundary error. The unsigned boundary error for retinal boundaries is calculated by:

$$\text{Unsigned error} = \frac{1}{w}\sum_{i = 1}^{w} \left| x_{i} - y_{i} \right|$$

where \(w\) denotes the B-Scan’s width in pixels and \(x_{i} , y_{i}\) represent the ith point of the two acquired boundaries. The lower the value of the unsigned boundary error, the closer the two boundaries are.
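
As a small worked example, the unsigned boundary error can be computed as below, assuming it is the mean absolute difference between the two boundaries’ row positions over the \(w\) columns of the B-Scan. The function name is illustrative.

```python
def unsigned_boundary_error(x, y):
    """Mean absolute row difference (pixels) between two boundaries of equal width."""
    if len(x) != len(y) or not x:
        raise ValueError("boundaries must be non-empty and of equal length")
    return sum(abs(a - b) for a, b in zip(x, y)) / len(x)


# Two boundaries over a 3-column toy B-Scan: (1 + 0 + 3) / 3 pixels.
err = unsigned_boundary_error([10, 12, 14], [11, 12, 11])
```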

Therefore, we found the average location of each boundary grid-manually outlined by the three graders on each OCT B-Scan, and assumed it to be the ground truth in our study. Next, we calculated the unsigned error between the ground truth and each of the three grid-manual delineations to obtain the inter-rater variability for this method. The ground truth data was then compared to each of the three semi-automatic delineations, both to obtain the inter-rater variability of our algorithm and to determine whether Livelayer could substitute for the manual segmentation approach. To quantify our measurements, Bland–Altman plots were drawn for each boundary, giving the level of agreement between each grader’s semi-automatic segmentation and the gold standard data. To determine the repeatability of the Livelayer, one of our observers segmented the 50 normal B-Scans a second time a week later and the unsigned errors between these two measurements were computed.
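
The quantities behind a Bland–Altman plot can be sketched as follows. The text does not state how each point on the plots or the “total agreement” percentage was defined, so this sketch assumes one paired measurement per B-Scan (for example the mean boundary position from each method), the conventional limits of agreement at bias ± 1.96 SD, and agreement reported as the fraction of differences inside those limits.

```python
from statistics import mean, stdev


def bland_altman(a, b):
    """Return bias, lower and upper limits of agreement, and fraction inside them."""
    diffs = [p - q for p, q in zip(a, b)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    lower, upper = bias - 1.96 * sd, bias + 1.96 * sd
    inside = sum(lower <= d <= upper for d in diffs) / len(diffs)
    return bias, lower, upper, inside


# Toy paired measurements (pixels) from two methods on four B-Scans.
bias, lower, upper, inside = bland_altman([10, 12, 11, 13], [9, 12, 12, 12])
```

The plot itself places the mean of each pair on the horizontal axis and the difference on the vertical axis, with horizontal lines at `bias`, `lower` and `upper`.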

To indicate the timesaving capability of the software, the required time for segmenting each boundary was measured by utilizing both the semi-automatic Livelayer and the grid-manual methods. Additionally, the mean number of needed clicks by the user in Livelayer method were figured out. It should be noted that the number of clicks in Livelayer depends both on the image quality and the intrinsic characteristics of that boundary on the image.

This section also assigns a particular field for computation of the irregularity index for IPL and OPL within the semi-automatic division. This index is set for quantitative smoothness evaluation of a specific layer in macular OCT images and is defined according to this equation:

where \(L_{r}\) is the Euclidean distance between the beginning and the end of that boundary and \(L_{b}\) is the length of the intended detached boundary.
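
The two lengths defined above can be computed from a segmented boundary as sketched below. The sketch assumes the boundary is given as one row position per column and that pixels are isotropic; in practice the lengths would need scaling by the physical pixel spacing. It stops at the two lengths and does not assume how they are combined into the index.

```python
import math


def boundary_lengths(ys):
    """Return (L_r, L_b) for a boundary given as one row position per column.

    L_r: straight-line distance between the first and last boundary points.
    L_b: length of the boundary polyline through all points.
    """
    l_r = math.hypot(len(ys) - 1, ys[-1] - ys[0])
    l_b = sum(math.hypot(1, ys[i + 1] - ys[i]) for i in range(len(ys) - 1))
    return l_r, l_b


flat_lr, flat_lb = boundary_lengths([0, 0, 0])    # perfectly smooth boundary
bumpy_lr, bumpy_lb = boundary_lengths([0, 1, 0])  # one bump in the middle
```

For a perfectly straight boundary the two lengths coincide, and any irregularity makes \(L_{b}\) exceed \(L_{r}\).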

Fluid segmentation section

This method is not primarily designed to compete with other full-automatic fluid segmentation available methods, but to be served as a handy tool for production of an outlined dataset which could be later required for training the automatic algorithms. For obtaining the agreement and repeatability of this division, two independent persons, an ophthalmologist and a senior ophthalmology resident were trained to both manually and semi-automatically localize the intra-retinal and sub-retinal fluid objects on the DME dataset.

Here, we took the Dice coefficient as the basis for comparison of the located fluid objects. For retinal fluids, the Dice coefficient35, which gauges the similarity between two mask images in which the fluid objects’ regions of interest (ROIs) are marked, is calculated by:

$$\text{Dice} = \frac{2\left| X \cap Y \right|}{\left| X \right| + \left| Y \right|}$$

where \(X\) and \(Y\) are the two intended mask images. Smaller values of the Dice coefficient indicate less similarity between the two sets of identified fluids.
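
A minimal sketch of the Dice coefficient on two binary masks is given below, using the standard definition of twice the overlap divided by the total number of marked pixels. Returning 1.0 when both masks are empty is a convention chosen for this sketch.

```python
def dice(x, y):
    """Dice coefficient between two binary masks given as lists of rows."""
    inter = sum(1 for rx, ry in zip(x, y) for a, b in zip(rx, ry) if a and b)
    total = sum(map(sum, x)) + sum(map(sum, y))
    return 1.0 if total == 0 else 2 * inter / total


# Two toy fluid masks sharing one of their two marked pixels each.
score = dice([[1, 1, 0], [0, 0, 0]],
             [[1, 0, 0], [0, 1, 0]])
```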

This coefficient was computed for the two observers’ manually segmented fluids as well as for one observer’s manual and semi-automatic results, to represent the inter-observer error and the method’s performance, respectively. Because the two graders did not agree on the overall number of fluids on each B-Scan, a threshold was defined and marginally small fluid objects were eliminated according to that threshold. To evaluate the repeatability of this section, one of the examiners delineated the fluid objects on the DME dataset a second time 6 weeks later and the Dice coefficients for these assessments were measured.

Results

Efficiency in time and the required number of clicks

For assessment of our recommended method’s efficiency in the matter of time and the needed number of clicks, all the 50 normal macular B-Scans that were segmented semi-automatically and 20 of grid-manually segmented B-Scans were involved. Table 2 demonstrates a precise comparison between the semi-automatic and the grid-manual segmentation methods’ acquisition time and depicts the average number of clicks required for both methods. The grid-manual segmentation in this part was conducted by splitting each B-Scan into 10 portions and using the layer correction functionality of the software around the fovea. Hence, the grid-manual algorithm needed at least 10 clicks in the best case.

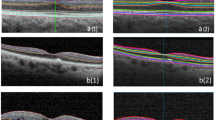

Feasibility on the DME dataset

As mentioned earlier, Livelayer also performs well on B-Scans obtained from patients with DME, and despite the existence of large cysts in these images, it can segment the relatively disorganized retinal layers generally caused by retinal disorders such as Diabetic Retinopathy. In our experiments, segmenting a DME B-Scan with Livelayer required 5, 9 and 16 clicks for ILM, IPL–INL and OPL–ONL, respectively, while the grid-manual method demanded 25 clicks for each boundary. In addition, the unsigned errors between the two methods for these layers were 1.18, 1.5 and 2.79, which supports the feasibility of employing our software on specific kinds of datasets that could not be automatically segmented by the Heidelberg system. Figure 4 presents three B-Scan samples segmented by our introduced algorithm.

Agreement and repeatability analyses

Analysis of agreement and repeatability for the Livelayer was performed using 50 normal macular B-Scans. In Fig. 5 the three boundaries are marked by our observers in both modes. In Table 3, the unsigned errors between the gold standard segmentation and each examiner’s grid-manually labeled data, the gold standard and each examiner’s semi-automatically labeled data and the two independent semi-automatic segmentation data for one grader is represented.

Bland–Altman plots for each pair of semi-automatic segmentations listed in Table 3 are drawn in Fig. 6. As can be seen, delineation of the ILM showed 96% total agreement for graders 1 and 2 and 94% for grader 3. The total agreement between the Livelayer and the gold standard data for IPL–INL was also acceptable, being 98% for grader 1 and 96% for graders 2 and 3. Among these three boundaries, the least agreement was expected for OPL–ONL because of a peculiar structure that frequently appears under this boundary, called Henle’s fiber layer. However, despite these irregularities, good agreement between the two methods was observed for this boundary, comparable to the other two: 94% for graders 1 and 3 and 92% for grader 2. In general, the Bland–Altman plots demonstrated no significant discrepancy between the two segmentation methods, and our suggested semi-automatic algorithm could reliably substitute for typical manual segmentation methods.

The Bland–Altman plots for correlating our proposed semi-automatic algorithm with the gold standard data (average of grid-manually delineated B-Scans by three examiners)—each row presents the B&A plots for each specific boundary and each column is assigned to the boundaries segmented by each examiner.

The software’s fluid segmentation performance was evaluated on 20 B-Scans of DME patients. The Dice score between one grader’s manual (freehand function) and semi-automatic fluid (IRF and SRF) localization was 0.854, higher than the 0.743 obtained between two graders’ manual localizations. Moreover, the fluid objects that were delineated twice by one of our graders, 6 weeks apart, showed 89.3% overlap according to the calculated Dice scores. The proposed semi-automatic software therefore worked with good precision on the fluid objects with respect to both its inter-rater variability and its repeatability. Table 4 contains Dice coefficients for our experiments on IRF and SRF fluid segmentation.

One of our graders also segmented the ILM and NFL–GCL of 5 peripapillary B-Scans with both the semi-automatic and grid-manual methods. The mean unsigned errors between the two algorithms were 1.53 for ILM and 1.33 for NFL–GCL. There is thus only a minor difference between the Livelayer and the grid-manual method for peripapillary images, yet the Livelayer performs more efficiently, requiring fewer clicks and less time.

Conclusion and discussion

In this paper, we introduced a semi-automatic segmentation software tool which can be used for segmentation of layers and fluids in OCTs of both normal participants and patients with DME. Then, as well as introducing a clinical application of this program, namely the calculation of the irregularity index of a specific boundary, we evaluated three of the software’s performance qualities: its segmentation agreement, repeatability and efficiency (in terms of time and complexity). To achieve a reliable estimate of the software’s layer segmentation agreement, three independent individuals segmented the layers in both grid-manual and semi-automatic modes. The agreement was found by defining the gold standard data (the average of the grid-manual measurements among the three graders) and then comparing this data with the grid-manual segmentations and with the semi-automatic results. The agreement between the grid-manual and gold standard data was measured using the unsigned errors. The agreement between the gold standard data and Livelayer was quantified using both the unsigned errors and the Bland–Altman plots. In the next stage, the inter-rater variability of the algorithm’s fluid segmentation section was computed with the aid of Dice coefficients. Using this value, we determined how much the mask images that were manually and semi-automatically segmented by one individual overlapped with each other, and compared the resulting Dice coefficient with the one obtained from the fluids manually detected by two graders. In addition, we asked one observer to segment the layers and fluid objects twice, a fixed time interval apart, to obtain the repeatability of both sections, assuming that other conditions remained constant during this study.

Many ocular structures like retina and retinal pigment epithelium have layered constructions and this may open the door to quantitative evaluation of the abnormalities that commonly occur in these layers under pathologic conditions (e.g. Diabetes, Age-Related Macular Degeneration, Vitreoretinal Interface Abnormalities, Epiretinal Membrane). Due to the hyperreflective nature of IPL and OPL in OCT images when compared to their surrounding layers, these two layers can be used to calculate the smoothness or irregularity of the inner and outer retinal layers, respectively. Cho et al.24, in a retrospective cohort study, assessed correlations between the inner-retinal irregularity index (which was defined as the length ratio between the inner plexiform layer (IPL) and retinal pigment epithelium (RPE)) and visual outcomes before and after the ERM surgery. They deduced that the inner-retinal irregularity index was strongly associated with visual outcomes before and after the ERM surgery and this index had the potential to be a new alternative predictor for inner-retinal damages as well as a prognostic indicator in ERM. Inspection of the smoothness or irregularity of the outer layers of retina can also be potentially used in various retinal diseases, and OPL is suitable for this particular purpose owing to its hyperreflective nature36,37,38.

Examination of the software’s efficiency, conducted on 50 normal macular B-Scans, indicated that the proposed Livelayer algorithm, integrated into the Livelayer software, was far less complicated than a common grid-manual segmentation method and thus required only a short period of time for delineation of layers in macular OCT images. Furthermore, the proposed algorithm’s performance level was evaluated by applying the Livelayer to the same set of B-Scans, showing that even though the algorithm might vary slightly in its performance (i.e. the required number of clicks) depending on the image’s innate qualities, it worked more efficiently than a typical grid-manual method and almost always needed far fewer clicks. Testing the algorithm on OCTs of patients diagnosed with DME showed that it can segment unclear and detached retinal layers with reasonably high precision in comparison to the segmented data collected from the Heidelberg Eye Explorer (HEYEX) system.

Segmentation of retinal OCT images is an essential technique used in a variety of applications ranging from clinical to research studies. Due to the inherently layered structure of retina and changes in these layers in the presence of mild to severe retinal diseases such as diabetic retinopathy, studying these variations will help to understand the pathogenesis of various retinal disorders. Morphological analysis of each of these layers has yet to be comprehensively done except in a limited number of studies because of the large amount of time it takes to segment each layer manually. Therefore, designing a fast and reliable method that could overcome this obstacle should be of considerable importance. Manual segmentation methods are indeed tedious and complex, and full-automatic segmentation is actually deficient in its adaptability to human error correction. Semi-automatic algorithms are, however, capable of being the most preferred kind of ocular image segmentation methods among researchers and clinicians.

In Table 5, a set of selected automatic and semi-automatic segmentation methods for retinal OCT data are compared with our suggested method in terms of “simplicity and availability”, “processing time”, “capability in handling errors” and “capability in handling huge amount of data”. Lee et al. suggested a full-automatic software package, OCT Explorer (the Iowa Reference Algorithm)39,40,41, for segmentation of 12 retinal boundaries, which is 3 more boundaries than our software is capable of identifying. In contrast to our software’s error correction functionality, which works independently of other segmented boundaries, in OCT Explorer refining a single boundary strongly affects other segmented boundaries on both the corresponding B-Scan and the other B-Scans in the dataset. Furthermore, OCT Explorer fails to precisely find all boundaries of cystic B-Scans because of their obscure appearance on the image, whereas the Livelayer’s ability to segment different types of OCTs was discussed in the previous sections of this study. OCTMarker20 is an integrated software package that can only detect up to 3 retinal boundaries automatically. EdgeSelect16 and SAMIRIX20 are two semi-automatic methods for OCTA segmentation which are not available for free, so their functionality is inferred from what their papers report. Unlike EdgeSelect, which only segments 4 retinal boundaries, SAMIRIX is capable of segmenting 9 retinal boundaries, but accepts just one file format (.vol). The Livelayer software, however, reads 3 distinct input file formats, so the user has no need for volume converter software tools.

The most notable advantage of our software over previously presented methods is twofold. First, it accommodates the different methods of layer and fluid segmentation needed by a user who may not be an expert in image processing, such as an ophthalmologist or a neurologist. Second, it generates its output either as a .jpg image file or as a .xlsx Excel file, which is especially advantageous when working with bulk data. Another major capability of Livelayer is that its filtering is adapted to the properties of each layer. Other proposed algorithms generally apply one fixed set of filters to the to-be-segmented images, regardless of the resolution of the input data or the quality of the retinal layers. Livelayer, on the other hand, takes these factors into account at its filtering stage by applying independent filters to each boundary and by including an editable file in which the filtering parameters can be adjusted. Figure 7 displays the hierarchy of folders that the software creates for each section and shows how the software’s output information is saved. In addition, whereas the Heidelberg system from which our peripapillary data were acquired cannot segment any peripapillary layer other than the pRNFL, our software could accurately segment 8 peripapillary boundaries after omitting their blood vessels and applying a set of pre-processing operations. The HEYEX system is also unable to pinpoint exactly the detached retinal layers that appear on OCT B-Scans related to DME or similar conditions, yet Livelayer could handle this problem with slightly more clicks than average. 
Because it is an open-source product, it can be upgraded to newer versions, either by adding further tabs or by improving the current ones, to achieve a specific performance goal such as better denoising or to be tailored to other types of OCTs (e.g. rat retinal OCTs), and thus to become even more efficient.

As is evident from the results section, the proposed software is time-effective and straightforward to use. The semi-automatic segmentation time and the number of required clicks for each of the studied retinal boundaries were far lower than for the manual-grid method. In this analysis, ILM was the most rapidly and easily segmented layer, with the largest disparity between the two methods, while OPL–ONL needed considerably more time and a greater number of clicks. This is because of the irregular Henle’s fiber layer appearing under the OPL, especially in its foveal portion, which prevents Livelayer from discriminating correctly between this layer and the ONL. The software also showed a good level of agreement between each of the manual-grid and semi-automatic methods and the gold standard data. Comparing the gold standard data with each grader’s manual-grid delineation yielded unsigned errors of less than 1 pixel for ILM, gradually increasing to nearly 2 pixels for OPL–ONL. On the other hand, since our proposed method locates ILM slightly higher than its actual location, the unsigned error between the gold standard data and the semi-automatic segmentation of ILM was larger than that of the other boundaries. The error for the other two boundaries fluctuated around 2 pixels, with generally smaller values for IPL–INL. The repeatability analysis, also shown in Table 3, yielded errors below 1 pixel for ILM and IPL–INL and an error of about 1 pixel for OPL–ONL. The Bland–Altman plots, which were used to quantify the level of agreement, showed total agreement for all boundaries, with quite similar values for ILM and IPL–INL and an approximately 2–4 per cent decline in these values for OPL–ONL. 
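For readers who wish to reproduce this kind of boundary comparison, the following is a minimal Python sketch of the two agreement measures discussed above. It is not taken from the Livelayer code: the function names `unsigned_error` and `bland_altman_limits` are hypothetical, each boundary is assumed to be stored as one row position (in pixels) per image column, and the limits of agreement are assumed to follow the conventional mean difference ± 1.96 standard deviations.

```python
from statistics import mean, stdev


def unsigned_error(boundary_a, boundary_b):
    """Mean absolute difference, in pixels, between two boundaries.

    Each boundary is a sequence holding one row position per image column.
    """
    if len(boundary_a) != len(boundary_b):
        raise ValueError("boundaries must cover the same columns")
    return mean([abs(float(a) - float(b)) for a, b in zip(boundary_a, boundary_b)])


def bland_altman_limits(method_a, method_b):
    """Return (bias, lower limit, upper limit) of agreement.

    Assumes the conventional limits: mean difference +/- 1.96 sample SD.
    """
    if len(method_a) != len(method_b):
        raise ValueError("both methods must provide the same number of values")
    diffs = [float(a) - float(b) for a, b in zip(method_a, method_b)]
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)
    return bias, bias - spread, bias + spread


# Illustrative values only (not data from the study).
gold_standard = [10, 12, 14, 16]
semi_automatic = [11, 12, 13, 18]

error_px = unsigned_error(semi_automatic, gold_standard)
bias, lower, upper = bland_altman_limits(semi_automatic, gold_standard)
print(f"unsigned error: {error_px:.2f} px")
print(f"bias: {bias:.2f} px, limits of agreement: [{lower:.2f}, {upper:.2f}] px")
```

The unsigned error discards the sign of each difference, so it cannot reveal a systematic shift such as the ILM being placed slightly too high; the signed bias from the Bland–Altman calculation is the quantity that would expose such an offset.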
Concerning fluid segmentation, the Dice coefficients for both the inter-rater variability and the repeatability studies were at an acceptable level, indicating that this algorithm could be used as a substitute for manual methods. The software’s main code and the necessary functions can be found on GitHub: https://github.com/MansoorehMontazerin/Livelayer-Software (Supplementary Notes).
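The Dice coefficient referred to above can be illustrated with a short Python sketch. This is an illustrative implementation rather than the code used in the study: the function name `dice_score` is hypothetical, fluid masks are assumed to be binary 2-D grids of equal size, and returning 1.0 when both masks are empty is a convention chosen here, not something stated in the paper.

```python
def dice_score(mask_a, mask_b):
    """Dice coefficient between two binary masks given as nested sequences.

    Dice = 2 * |A intersect B| / (|A| + |B|), ranging from 0 (no overlap)
    to 1 (identical masks).
    """
    flat_a = [bool(v) for row in mask_a for v in row]
    flat_b = [bool(v) for row in mask_b for v in row]
    if len(flat_a) != len(flat_b):
        raise ValueError("masks must have the same number of pixels")

    overlap = sum(a and b for a, b in zip(flat_a, flat_b))
    total = sum(flat_a) + sum(flat_b)
    if total == 0:
        # Assumption: two empty masks are treated as perfect agreement.
        return 1.0
    return 2.0 * overlap / total


# Illustrative masks only (not data from the study).
manual_mask = [[1, 1, 0],
               [0, 1, 0]]
semi_automatic_mask = [[1, 0, 0],
                       [0, 1, 1]]

print(f"Dice: {dice_score(manual_mask, semi_automatic_mask):.3f}")
```

The same function serves both comparisons mentioned in the text: applied to masks from two different graders or methods it measures inter-rater agreement, and applied to two sessions by the same grader it measures repeatability.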

References

Podoleanu, A. G. Optical coherence tomography. Br. J. Radiol. 78(935), 976–988 (2005).

Hajizadeh, F. & Kafieh, R. Introduction to optical coherence tomography. In Atlas of Ocular Optical Coherence Tomography (ed. Hajizadeh, F.) 1–25 (Springer, 2018).

Mohammadzadeh, V. et al. Macular imaging with optical coherence tomography in glaucoma. Surv. Ophthalmol. 65, 597 (2020).

Schneider, E. et al. Optical coherence tomography reveals distinct patterns of retinal damage in neuromyelitis optica and multiple sclerosis. PLoS ONE 8(6), e66151 (2013).

Hee, M. R. et al. Optical coherence tomography of the human retina. Arch. Ophthalmol. 113(3), 325–332 (1995).

Wu, M. et al. Automatic subretinal fluid segmentation of retinal SD-OCT images with neurosensory retinal detachment guided by enface fundus imaging. IEEE Trans. Biomed. Eng. 65(1), 87–95 (2017).

Kafieh, R. et al. Automatic multifaceted matlab package for analysis of ocular images (AMPAO). SoftwareX 10, 100339 (2019).

Kafieh, R. et al. Intra-retinal layer segmentation of 3D optical coherence tomography using coarse grained diffusion map. Med. Image Anal. 17(8), 907–928 (2013).

Kafieh, R., Rabbani, H. & Kermani, S. A review of algorithms for segmentation of optical coherence tomography from retina. J. Med. Signals Sens. 3(1), 45 (2013).

Montuoro, A. et al. Joint retinal layer and fluid segmentation in OCT scans of eyes with severe macular edema using unsupervised representation and auto-context. Biomed. Opt. Express 8(3), 1874–1888 (2017).

González-López, A. et al. Robust segmentation of retinal layers in optical coherence tomography images based on a multistage active contour model. Heliyon 5(2), e01271 (2019).

de Azevedo, A. G. B. et al. Impact of manual correction over automated segmentation of spectral domain optical coherence tomography. Int. J. Retina Vitr. 6(1), 1–7 (2020).

Patel, P. J. et al. Segmentation error in Stratus optical coherence tomography for neovascular age-related macular degeneration. Investig. Ophthalmol. Vis. Sci. 50(1), 399–404 (2009).

Mansberger, S. L. et al. Automated segmentation errors when using optical coherence tomography to measure retinal nerve fiber layer thickness in glaucoma. Am. J. Ophthalmol. 174, 1–8 (2017).

Almobarak, F. A. et al. Automated segmentation of optic nerve head structures with optical coherence tomography. Investig. Ophthalmol. Vis. Sci. 55(2), 1161–1168 (2014).

Huang, Y. et al. Development of a semi-automatic segmentation method for retinal OCT images tested in patients with diabetic macular edema. PLoS ONE 8(12), e82922 (2013).

Sonoda, S. et al. Kago-Eye2 software for semi-automated segmentation of subfoveal choroid of optical coherence tomographic images. Jpn. J. Ophthalmol. 63(1), 82–89 (2019).

Zhao, L. et al. Semi-automatic OCT segmentation of nine retinal layers. Investig. Ophthalmol. Vis. Sci. 53(14), 4092–4092 (2012).

Liu, X. et al. Semi-supervised automatic segmentation of layer and fluid region in retinal optical coherence tomography images using adversarial learning. IEEE Access 7, 3046–3061 (2018).

Motamedi, S. et al. Normative data and minimally detectable change for inner retinal layer thicknesses using a semi-automated OCT image segmentation pipeline. Front. Neurol. 10, 1117 (2019).

Mehta, N. et al. Model-to-data approach for deep learning in optical coherence tomography intraretinal fluid segmentation. JAMA Ophthalmol. 138(10), 1017–1024 (2020).

Mishra, Z. et al. Automated retinal layer segmentation using graph-based algorithm incorporating deep-learning-derived information. Sci. Rep. 10(1), 1–8 (2020).

Ranganathan, P., Pramesh, C. & Aggarwal, R. Common pitfalls in statistical analysis: Measures of agreement. Perspect. Clin. Res. 8(4), 187 (2017).

Cho, K. H. et al. Inner-retinal irregularity index predicts postoperative visual prognosis in idiopathic epiretinal membrane. Am. J. Ophthalmol. 168, 139–149 (2016).

Zarei, M. et al. Quantitative analysis of the iris surface smoothness by anterior segment optical coherence tomography in Fuchs uveitis. Ocular Immunol. Inflamm. https://doi.org/10.1080/09273948.2020.1823424 (2020).

Tso, M. O. Pathology of cystoid macular edema. Ophthalmology 89(8), 902–915 (1982).

MATLAB, version 9.4.0 (R2018a). The MathWorks Inc. (2018).

Dijkstra, E. W. A note on two problems in connexion with graphs. Numer. Math. 1(1), 269–271 (1959).

Chodorowski, A. et al. Color lesion boundary detection using live wire. In Medical Imaging 2005: Image Processing (eds Michael Fitzpatrick, J. & Reinhardt, J. M.) (International Society for Optics and Photonics, 2005).

Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 6, 679–698 (1986).

Sobel, I. History and Definition of the Sobel Operator, Vol. 1505. Retrieved from the World Wide Web (2014).

Sobel, I. & Feldman, G. A 3x3 isotropic gradient operator for image processing. In A Talk at the Stanford Artificial Project 271–272 (1968).

Serra, J. Image Analysis and Mathematical Morphology (Academic Press, 1983).

Matheron, G. & Serra, J. The birth of mathematical morphology. In Proc. 6th Intl. Symp. Mathematical Morphology (2002).

Bertels, J. et al. Optimizing the dice score and Jaccard index for medical image segmentation: Theory and practice. In International Conference on Medical Image Computing and Computer-Assisted Intervention (eds Shen, D. et al.) (Springer, 2019).

Berry, D. et al. Association of disorganization of retinal inner layers with ischemic index and visual acuity in central retinal vein occlusion. Ophthalmol. Retina 2(11), 1125–1132 (2018).

Grewal, D. S. et al. Association of disorganization of retinal inner layers with visual acuity in eyes with uveitic cystoid macular edema. Am. J. Ophthalmol. 177, 116–125 (2017).

Ishibashi, T. et al. Association between disorganization of retinal inner layers and visual acuity after proliferative diabetic retinopathy surgery. Sci. Rep. 9(1), 1–6 (2019).

Garvin, M. K. et al. Automated 3-D intraretinal layer segmentation of macular spectral-domain optical coherence tomography images. IEEE Trans. Med. Imaging 28(9), 1436–1447 (2009).

Lee, K. et al. Automated segmentability index for layer segmentation of macular SD-OCT images. Transl. Vis. Sci. Technol. 5(2), 14–14 (2016).

Sonka, M. & Abràmoff, M. D. Quantitative Analysis of Retinal OCT (Elsevier, 2016).

Acknowledgements

The authors of this manuscript are truly appreciative of Dr. Vahid Mohammadzadeh at the University of California, Los Angeles for his great support while writing this paper’s initial draft and his invaluable suggestions on conceptualizing the clinical terms that were used in this study. This work was supported in part by the National Institute for Medical Research Development (NIMAD) under Grant 964582, and the Vice-Chancellery for Research and Technology of Isfahan University of Medical Sciences under Grants 398849 and 399416.

Author information

Contributions

M.M.: Conceptualization, Methodology, Software Design, Validation, Formal Analysis, Investigation, Visualization, Writing-Original Draft; Z.S.: Software Design, Formal Analysis, Visualization; E.K.: Methodology (Supporting Contribution), Data Segmentation, Labeled Data Checking, Writing-Review and Editing; H.R.E.: Data Segmentation, Labeled Data Checking, Writing-Review and Editing; T.M.: Formal Analysis, Data Segmentation; H.R.: Conceptualization, Writing-Review and Editing (Supporting Contribution); H.M.: Data Segmentation (Supporting Contribution); A.D. and M.A.: Labeled Data Checking (Supporting Contribution); R.K.: Conceptualization, Methodology, Validation, Investigation, Writing-Review and Editing, Supervision.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Supplementary Video 1.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Montazerin, M., Sajjadifar, Z., Khalili Pour, E. et al. Livelayer: a semi-automatic software program for segmentation of layers and diabetic macular edema in optical coherence tomography images. Sci Rep 11, 13794 (2021). https://doi.org/10.1038/s41598-021-92713-y

DOI: https://doi.org/10.1038/s41598-021-92713-y
