Abstract

Machine learning (ML) has been suggested to improve the performance of prediction models. Nevertheless, research on predicting the risk in patients with acute myocardial infarction (AMI) has been limited and showed inconsistency in the performance of ML models versus traditional models (TMs). This study developed ML-based models (logistic regression with regularization, random forest, support vector machine, and extreme gradient boosting) and compared their performance in predicting the short- and long-term mortality of patients with AMI with those of TMs with comparable predictors. The endpoints were the in-hospital mortality of 14,183 participants and the three- and 12-month mortality in patients who survived at discharge. The performance of the ML models in predicting the mortality of patients with an ST-segment elevation myocardial infarction (STEMI) was comparable to the TMs. In contrast, the areas under the curves (AUC) of the ML models for non-STEMI (NSTEMI) in predicting the in-hospital, 3-month, and 12-month mortality were 0.889, 0.849, and 0.860, respectively, which were superior to the TMs, which had corresponding AUCs of 0.873, 0.795, and 0.808. Overall, the performance of the predictive model could be improved, particularly for long-term mortality in NSTEMI, from the ML algorithm rather than using more clinical predictors.

Similar content being viewed by others

Introduction

Acute myocardial infarction (AMI) is a leading cause of mortality despite recent advances in percutaneous coronary intervention (PCI) based on the use of drug-eluting stents and pharmacotherapy, including beta-blockers and the renin-angiotensin system blocker1,2. A prediction of the severity and prognosis is vital for identifying patients at high risk and providing intensive treatment and monitoring3. Traditional risk stratification was based on risk score systems, such as the thrombolysis in myocardial infarction (TIMI), global registry of acute coronary events (GRACE), and acute coronary treatment and intervention outcomes network—Get With The Guidelines (ACTION-GWTG), which extracts the weight from the regression model3,4,5,6,7,8,9,10. GRACE and ACTION-GWTG presented a common model for ST-segment elevation myocardial infarction (STEMI) and non-ST-segment elevation myocardial infarction (NSTEMI), whereas TIMI suggested two distinct risk stratifications. Although these models were validated and are commonly accepted tools, concerns have been raised recently because most traditional risk stratifications were developed 20 years ago using randomized controlled trial (RCT) data before the introduction of drug-eluting stents and newer generation antiplatelets11. Moreover, the outcomes of the prediction models were limited to short-term mortality, such as in-hospital, 14-day, and 30-day mortality3,12,13. Therefore, one review study on conventional risk stratification models suggested that future models would permit more accurate risk stratification3.

Recently, machine learning (ML) was suggested to improve the performance of the prediction model because it could overcome the limitations of a regression-based risk score system, including parametric assumption, primary reliance on linearity, and limited capability in examining higher-order interactions14. Few attempts have been made to apply ML to risk prediction in patients with AMI, but the attempts made were inconsistent15,16. Recent research reported the possibility of performance enhancement using deep learning11,17. On the other hand, a direct comparison was not possible because far more predictors were included in the ML models than the traditional methods. Therefore, it is unclear if the performance improvement comes from the machine learning algorithms or the inclusion of more predictors in the ML models. Furthermore, the high computation power and many clinical predictors, which are difficult to extract from the electronic medical records, limit the use of prediction models using deep learning algorithms in clinical practice.

This study compared the performance of ML models in predicting the short- and long-term mortality using comparable predictors in AMI patients with the traditional risk score methods. Furthermore, this study also examined whether adding more predictors to the ML models would improve the performance of the prediction models.

Results

Patient enrollment and characteristics

Patients diagnosed with AMI were classified into STEMI and NSTEMI. Of the 5557 patients with STEMI, 273 patients (4.9%) died during the hospital stay (Supplementary Table 1). After excluding those with missing information on the variables during hospital admission, the final dataset for the three- and 12-month mortality contained 4911 survivors at hospital discharge. Among the survivors, 68 and 120 patients died within 3 and 12 months after hospital discharge, giving a mortality rate of 1.4% and 2.4%, respectively. For NSTEMI, 281 patients (3.3%) died after ED arrival among the 8626 patients examined. Of the 7716 survivors, 142 and 306 patients died within three and 12 months after hospital discharge, giving a mortality rate of 1.8% and 4.0%, respectively.

Table 1 lists the demographic characteristics, according to mortality, of the patients before excluding those with missing information during hospital admission. The cumulative 12-month mortality of the study participants was 7.2% and 7.1% in STEMI and NSTEMI, respectively. The differences in the patients’ characteristics according to survival in the STEMI group were similar to those of the NSTEMI group. Patients who survived at the 12-month follow up were younger than those who did not (62.4 vs. 73.9 years for STEMI, 66.3 vs. 76.5 years for NSTEMI). The proportion of female participants in the survival group was lower than those in the death group in both STEMI and NSTEMI. Moreover, those who survived at the 12-month follow up were less likely to have hypertension, diabetes, atrial fibrillation, and a history of MI, PCI, and stroke than those who expired during the 12 months after AMI. On the other hand, they were more likely to have dyslipidemia and be current smokers. Furthermore, those who survived at the 12-month follow up were likely to experience chest pain with sweating, have higher blood pressure at presentation, and lower troponin levels than those who had died by the 12-month follow up. The survival group had a lower proportion of heart failure, cardiogenic shock, left main disease, and three-vessel diseases. The survivors were more likely to take aspirin, beta-blockers, angiotensin-converting enzyme inhibitors, and statin than those who died by the 12-month follow up. In contrast, they were less likely to take oral hypoglycemic agents, warfarin, and non-vitamin K antagonist oral anticoagulants.

Performance of the predictive models in STEMI

When the prediction models were built by the ML algorithm using traditional variables in STEMI, the performance was enhanced marginally compared to the best performance among the traditional models (Fig. 1). An evaluation of the performance by the area under the receiver operating characteristic curve (AUC) revealed extreme gradient boosting (XGBoost) to be the best performing model, with an AUC of 0.912 in the ML models, followed by the modified GRACE in the original and modified traditional models (0.901) (Table 2). On the other hand, the other models using the ML algorithms except for the Support Vector Machine (SVM) showed excellent performance over or near the AUC of 0.9. The other traditional models had a lower AUC but were close to 0.9. Regarding the three-month mortality after discharge, the best performing models were XGBoost and GRACE with an AUC of 0.784 and 0.766, respectively, in the ML and traditional models. This was followed in descending order of the AUC by logistic regression regularized with an L2 penalty (Ridge regression), logistic regression regularized with an L1 penalty (Lasso regression), logistic regression regularized with an elastic net penalty (Elastic net), and a Random Forest (RF). For the 12-month mortality, the best performing models were Ridge regression and GRACE in the ML and traditional models, having AUCs of 0.840 and 0.826, respectively. This was followed in descending order of the AUC by Lasso regression and elastic net regression, RF, modified TIMI, and XGBoost. According to the F1-score, the best performing ML model had a score of 0.388, 0.107, and 0.179, respectively, for the in-hospital, three- and 12-month mortality; those were similar or slightly higher than the F1-score of the corresponding traditional models. The highest F1-scores of the modified traditional models were 0.345, 0.075, and 0.170 in predicting the in-hospital, three- and 12-month mortality, respectively.

ROC curves of the in-hospital, three-month, and 12-month mortality prediction models in acute myocardial infarction with the traditional predictors. (a) In-hospital mortality in STEMI, (b) In-hospital mortality in NSTEMI, (c) three-month mortality in STEMI, (d) three-month mortality in NSTEMI, (e) 12-month mortality in STEMI, (f) 12-month mortality in NSTEMI.

Performance of the predictive models in NSTEMI

The ML models in NSTEMI outperformed the traditional models in predicting the three and 12-month mortality when the ML algorithm was applied to the prediction models, including traditional variables (Table 3). The highest AUCs of the in-hospital mortality prediction models were 0.889 and 0.888 in RF and XGBoost, respectively, which were superior to TIMI (AUC: 0.669) but similar to the modified ACTION-GWTG (AUC: 0.884). For the three-month mortality, the best performing models were Lasso regression (AUC: 0.849) and elastic net regression (AUC: 0.849), which were superior to GRACE (AUC: 0.777) and ACTION-GWTG (AUC: 0.795). The ML models, except for SVM, maintained an AUC > 0.8 for the 12-month mortality, while the AUCs were 0.675 and 0.790 in TIMI and ACTION-GWTG, respectively. The modified GRACE and ACTION-GWTG maintained good performance in predicting the 12-month mortality in addition to GRACE. Based on the F1-score, the best performing ML models were Lasso regression, elastic net, and XGBoost with a score of 0.236, 0.130, and 0.225 for the in-hospital, three- and 12-month mortality, respectively, while the highest figures were 0.224, 0.114, and 0.196, respectively in the traditional models. For the modified traditional models, the highest F1-scores were 0.243, 0.110, and 0.206 in predicting the in-hospital, three- and 12-month mortality, respectively.

Comparison of the performance between the ML and traditional models

A comparison of all the ML models with three conventional models according to the statistical significance revealed the ML models to be superior to the traditional models in predicting the long-term mortality in NSTEMI (Supplementary Table 2). The ML models outperformed TIMI in predicting the in-hospital mortality among the NSTEMI patients, while they were similar to GRACE and ACTION-GWTG. On the other hand, Lasso and elastic net regression were superior to all three traditional models in predicting the three-month mortality for those who survived to discharge. Moreover, Lasso, Ridge, and elastic net regression, and XGBoost had significantly higher AUCs in predicting the 12-month mortality than TIMI, GRACE, and ACTION-GWTG. In contrast, with STEMI, RF and XGBoost were the only ML models that significantly outperformed TIMI in predicting the in-hospital mortality. Otherwise, the difference between the traditional and all the ML models was not statistically significant. A comparison of the ML models with the modified traditional models revealed consistent findings (Supplementary Table 3). The differences between the ML models and the modified traditional models were statistically significant among AMI patients, particularly in predicting long-term mortality.

Effect of optional clinical features and medication at discharge

The performance was not enhanced by including the optional predictors in the models (Fig. 2). The highest AUC was 0.911 for XGBoost in the ML model, including the optional predictors in STEMI, which was similar to the 0.912 for XGBoost, including the traditional predictors only (Supplementary Table 4). In the case of the three-month mortality in STEMI, the highest AUC was 0.813 for RF, including all predictors, which was similar to the 0.784 for XGBoost, including the traditional predictors only. For the 12-month mortality, the figures were 0.835 in Lasso regression, including all the predictors, and 0.840 in Ridge regression, including the traditional predictors only. With NSTEMI (Supplementary Table 5), the best performing ML models reached 0.887, 0.855, and 0.865 for in-hospital, three-month, and 12-month mortality, respectively, in the ML model including all the predictors, whereas the corresponding numbers were 0.889, 0.849, and 0.860 in the ML model including the traditional predictors. None of the ML models, except for the SVM, showed a significant difference in the AUCs when the performance of the models with the traditional predictors only was compared with the model applying all the predictors. Moreover, a comparison of the ML models, including the traditional and optional predictors, and the corresponding models, including medication at discharge, showed no significant difference in both STEMI and NSTEMI (Supplementary Table 6).

ROC curves of the in-hospital, three-month, and 12-month mortality prediction models in acute myocardial infarction with traditional and optional predictors. (a) In-hospital mortality in STEMI, (b) In-hospital mortality in NSTEMI, (c) 3-month mortality in STEMI, (d) 3-month mortality in NSTEMI, (e) 12-month mortality in STEMI, (f) 12-month mortality in NSTEMI.

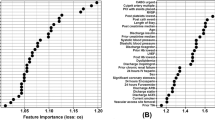

Supplementary Tables 7 and 8 list the importance of the variables. The variable importance was different for each prediction model. Some variables in the traditional predictors in the ML models were excluded, whereas some of the optional variables were included. Furthermore, the performance of the predictive models did not change significantly in both STEMI and NSTEMI when the problem of class imbalance was addressed (Supplementary Table 9 and 10). The highest AUC of the ML models was similar to that of the models using up-sampling, down-sampling, and SMOTE. Only the SVM benefited from balancing the classification using re-balancing methods.

Performance in external validation

The performance of the ML-based models was validated externally using the Korean Acute Myocardial Infarction Registry-National Institutes of Health (KAMIR-NIH) database, which is an independent prospective multicenter registry (Table 4). The AUCs exceeded 0.9 except for the SVM for in-hospital mortality among the patients with STEMI and NSTEMI, but those were close to 0.8 for the 12-month mortality. The ML models were superior to the traditional model in predicting the 12-month mortality in NSTEMI, which is similar to the finding using the test data. On the other hand, the F1 scores in the KAMIR-NIH registry were lower than those in the internal validation.

Discussion

Mortality prediction models were developed using several ML algorithms (Lasso regression, Ridge regression, elastic net, RF, SVM, and XGBoost). Their performance was comparable in predicting the short- and long-term mortality of patients with STEMI with those of traditional risk stratification with comparable predictors. On the other hand, the discrimination improved the existing the prognosis prediction tools in NSTEMI, particularly in predicting long-term mortality. Furthermore, adding more clinical variables to the models did not enhance the performance of the predictive models for mortality in AMI.

The ML algorithms outperformed the traditional risk score methods when the predictors were the same, but the difference was similar in STEMI, and the best working algorithms varied according to the predictors and outcomes. Some studies suggested applying ML algorithms to enhance the performance of the prognosis prediction model for patients with AMI11,17. A recent study reported that deep learning (AUC: 0.905) could outperform the GRACE score (AUC: 0.851) in predicting the in-hospital mortality of AMI patients11. The other study suggested that when predicting cardiac and sudden death during a one-year follow-up, the AUC in the ML models was improved by 0.08 compared to that in GRACE17. Another study reported AUCs of 0.828, 0.895, 0.810, and 0.882 in an artificial neural network (ANN), decision tree (DT), naïve Bayes (NB), and SVM, respectively, for the 30-day mortality, which were slightly higher than or similar to the values (0.83) from the GRACE risk score methods suggested in the validation study3,18. On the other hand, the previous study did not compare the performance between the conventional models and the ML models in the research data, so that it could only be inferred indirectly18. Although the above three studies showed that ML algorithms could enhance discrimination, other researchers proposed that the ML models were not always preferable to the traditional model. Some studies on the prognosis of AMI patients suggested that ML models were not superior but showed comparable performance to the regression-based approach19,20,21. One study using the administrative database of the National Inpatient Sample showed that RF (AUC: 0.85) was comparable to the traditional LR (AUC: 0.84) in predicting the in-hospital mortality among women with STEMI19. Another study showed that the best performance of ML models was similar to that of the GRACE score (AUC: 0.91 vs. 0.87)20. Austen et al. reported that when the cubic spline was included in the LR, it outperformed the ML models of the RF, regression trees (RT), bagged RT, and boosted RT21.

This study showed that ML models were better than the traditional models in NSTEMI but could not reach statistical significance in STEMI. The different superiority of the ML models compared to the traditional models in STEMI and NSTEMI may partially explain the inconsistency of the literature11,17,18,19,20. Two of the three studies showing comparable performance between the traditional and ML models included patients with STEMI only19,20. In contrast, all three studies showing superior performance of the ML models included all patients with STEMI and NSTEMI11,17,18. Although this could not explain all the inconsistency because subgroup analysis showed that ML also outperformed GRACE in STEMI in a previous study11, the different performances of the ML models between the STEMI and NSTEMI groups may have contributed to the inconsistent findings. The ML models may have higher discrimination in the NSTEMI group than the traditional model because NSTEMI has more heterogeneous clinical and pathological features than STEMI22,23. STEMI results from a complete thrombotic occlusion of the infarct-related artery, while NSTEMI occurs in more heterogeneous conditions, such as incomplete coronary occlusion, coronary artery spasm, coronary embolism, myocarditis, and others24. Moreover, ML-based models could outperform the traditional models when analyzing complex data because of the non-parametric assumption, non-linearity, and higher-order interaction. Furthermore, the inconsistency appears to be due to the relatively small difference in the AUC between the ML model and GRACE because the GRACE risk score was updated in 2014, and the continuous variables were divided into many categories to reflect the non-linear relationship8. The ML-based models also require tuning parameters that may influence the model performance, which may fit and perform differently in different datasets14.

Traditional risk stratification focused on predicting the short-term mortality, while only a few suggested the one-year mortality. The CADILLAC risk score developed in 2005 showed good performance for the one-year mortality (c-statistic of 0.79). Moreover, GRACE 2.0, which was updated in 2014 considering the non-linear relationship between mortality and continuous variables, showed an AUC of 0.823,8,25. After introducing the ML algorithms, some studies suggested that discrimination could be improved to predict the long-term mortality15,17. One recent study on the one-year mortality showed that the AUC of the prediction model could be up to 0.901 among patients admitted to the ICU with AMI, which was achieved using the Logistic Model Trees15. Another study also showed good discriminative power for the one-year mortality with an AUC of 0.898, which was achieved using either the Deep Neural Network or Gradient Boosting Machine17. The present study suggested that ML models maintained good discrimination for the 12-month mortality, but the AUC value was lower than those of the two previous studies15,17. This might be because the one-year mortality was defined not as the cumulative mortality, including in-hospital mortality, as in other studies, but as the mortality of those who survived at hospital discharge during the one-year follow-up. The current study aimed to help cardiologists make a treatment and management plan considering the risk of mortality when a patient is discharged.

This study showed that the performance of the prediction model was not increased significantly by adding the optional variables. This might be because the optional variables used in this study could not add more information to the ML models in predicting the mortality of patients with AMI. Only a few studies revealed the influence of features on the performance of prediction models. One study on the prediction model of the 30-day mortality after STEMI showed that the performance of most ML algorithms plateaued when the models introduced the highest 15 ranked variables among 54 variables20. Another study on the one-year mortality of patients with anterior STEMI showed a change in the performance of the prediction model when the top 20 ranked variables were selected instead of all 59 variables26. For RF, the AUC barely changed from 0.932 in the full model to 0.944 with the 20 features, while the changes depended on the model. The AUC decreased from 0.931 to 0.864 in LR, while it increased from 0.772 to 0.852 in the decision tree. The top 20 variables listed in their study were as follows: New York Heart Association Classification at discharge, heart failure at admission, heart rate, age, left ventricular ejection fraction, serum cystatin, initial BNP, platelet count, fibrinogen, serum creatinine, blood glucose, systolic blood pressure, diastolic blood pressure, total bilirubin, blood urea nitrogen, and revascularization type. Only five variables overlapped with the traditional variables in the present study. The predictive models using the ML algorithm appeared to be less dependent on the specific predictors because many clinical predictors influenced and reflected one another. ML algorithms, which allow non-linearity, higher-order effects, and interactions, may not depend on specific predictors as much as the traditional risk stratification methods.

This study suggested that the ML algorithm could enhance the performance of predictive models in AMI and pointed out the particular area where the predictive models could benefit from applying ML algorithms in AMI. Hence, clinicians can identify better those at high risk of mortality in NSTEMI using ML prediction models and focus on the high-risk group at admission and discharge. The ML-based prediction model could be integrated into the electronic medical records as a part of clinical decision support and be utilized in clinical practice. This model will inform clinicians of those who require close monitoring and intensive care during the hospital stay and require frequent follow-up and high medication adherence at discharge.

This study had some limitations. First, the ML algorithm is less intuitive than the risk scoring system developed using traditional statistical analysis. The prediction model developed using the ML algorithm. The importance of predictors in the model is more challenging to interpret because they could contain non-linear models and ensemble methods. Moreover, the proposed prediction model may be specific to the study population, Korean patients with AMI. A previous study reported different risk factors and responses to medical and interventional treatments between Korean and Western AMI patients. Hence, predictive models could show different performance measures in other populations, and ML algorithms should be compared to confirm which is best27,28. Despite the improvement of AUC, the F1 scores were low in both the ML and traditional models, and the difference in the F1 scores between the ML and traditional models was small. Moreover, the statistical difference in the F1 scores could not be evaluated. Ranganathan and Aggarwal demonstrated it with an example that a test with good sensitivity and specificity could have low precision when applied to a disease with a low pretest probability29. The low F1 score in the current study may be due to the low precision and low mortality rate. They suggested that it would be prudent to apply a diagnostic test only in those with a high pretest probability of the disease29, and it could be interpreted that the F1-score would increase if it is applied to patients with moderate to high severity. Future research should set a proper indication of the mortality prediction model or enhance the precision and F1 score for all patients with AMI.

Conclusion

A prediction model for short- and long-term mortality was generated in patients admitted with AMI using multicenter registries and validated using independent cohort data. The ML-based approach increased the discriminative performance of the patients with NSTEMI in predicting mortality compared to the traditional risk scoring method. On the other hand, the performance did not depend on the inclusion of more predictors.

Methods

Data source

A retrospective cohort study was conducted using the data from the Korean Registry of Acute Myocardial Infarction for Regional Cardiocerebrovascular Centers (KRAMI-RCC) registry. The KRAMI-RCC is a prospective multicenter registry of AMI in Korea. The data were collected from all 14 Regional Cardiocerebrovascular Centers (RCCVCs) established by the Ministry of Health and Welfare for the prevention and treatment of cardiovascular disease in Korea since 2008. The purpose and impact of RCCs on AMI are published elsewhere30,31. KRAMI-RCC is a web-based registry of consecutive AMI cases reflecting real-world information on the clinical practice in RCCs and consists of pre-hospital, hospital, and post-hospital data. The institutional review board of Inha University Hospital approved this study protocol, and the need for informed consent was waived because of the retrospective nature of the study using anonymized data with minimal potential for harm (IRB number: 2020–05-035). All methods were carried out in accordance with the relevant guidelines and regulations, and the data were obtained with the approval of the committee of RCCVCs after anonymization.

Study participants

All enrolled participants were patients diagnosed with AMI and admitted to the RCCs through the emergency department (ED). This study included 15,247 patients with AMI in KRAMI-RCC from July 2016 to July 2019 who finished the 12-month follow up in this research. The exclusion criteria were (1) less than 18 years of age, (2) chest pain onset more than 24 h in STEMI, and (3) missing data to calculate the traditional risk score: TIMI, GRACE, and ACTION-GWTG. Of the 15,247 patients enrolled in the KRAMI registry, 6177 and 9070 patients were diagnosed with STEMI and NSTEMI, respectively (Fig. 3). After excluding patients with missing data on the predictors at the emergency department (ED) or before ED arrival and those who visited the hospital 24 h after symptom onset, 5557 patients with STEMI were eligible for the final analysis of in-hospital mortality. Furthermore, patients who survived upon discharge were included in the final analysis of the three- and 12-month mortality. This study excluded missing data on the clinical predictors during hospital admission and rare categorical responses among the survivors at hospital discharge. Therefore, the number of patients with STEMI was 4911 for the final analysis of the three and 12-month mortality. For NSTEMI, the number of patients was 8626 for a final analysis of the in-hospital mortality after excluding missing data at the pre-ED or ED level. Regarding the three and 12-month mortality, the number of patients with NSTEMI was 7716 after excluding missing data during the hospital stay and in-hospital deaths.

Predictors

The possible predictors for mortality were extracted from the database based on previous studies, including demographic information, past medical history, initial symptoms, laboratory findings, events before ED arrival and during the hospital stay, and coronary angiographic findings3,4,6,8,9,10. The predictors were classified according to the time frame (pre-ED, ED, and hospital admission). The predictors used in the traditional risk stratification model were selected as the traditional variables3; the other predictors were categorized as optional variables, as described in Supplementary Table 11. The predictors for in-hospital mortality were limited to the variables available in the pre-ED and ED stage. In contrast, those for the three-month and 12-month mortality included all the variables in the pre-ED, ED, and hospital admission stage. Furthermore, medication at discharge was also included in the model for predicting the three- and 12-month mortality.

Outcomes

The outcomes of interest in this study were in-hospital, three-month, and 12-month mortality. The patients who survived to discharge were followed up by telephone at three and 12 months. The follow-up information was collected through contact with the patients or their families. If unavailable, a follow-up visit or death certificate on the electronic medical records was also checked to determine death.

Predictive models

ML algorithms, such as RF, SMV, XGBoost, Lasso, Ridge regression, and Elastic net, were applied to develop a mortality prediction model. RF builds multiple decision trees and merges them to make a more accurate and stable prediction, while XGBoost provides a parallel tree boosting with a gradient descent that solves many data science problems in a fast and accurate manner. SVM constructs a hyperplane or a set of hyperplanes in high- or infinite-dimensional space for classification.

For each prediction model, tenfold cross-validation was used to tune the hyperparameters, with the AUC as the evaluation standard. The hyperparameters in RF were tuned by searching for all the combinations of the number of trees (500, 1000, and 2000) and the number of variables (2, 4, 6, and 8). For XGboost, this study searched for all the combinations of the number of boosting iterations (25, 50, 75, 100, 125, and 150), learning rate (0.05, 0.1, and 0.3), minimum loss reduction (0 and 5), and the maximum depth of the tree (4, 6, and 8). Regarding SVM, the hyperparameters were optimized with combinations of the cost of constraints violation (0.0039, 0.0625, 1.0000, and 2.0000) and bandwidth of the radial kernel (0.0039, 0.0625, 1.0000, and 2.0000). For Lasso, Ridge regression, and elastic net, the default setting of ‘glmnet’ package in R was used to select the hyperparameters32.

Three different sampling methods were also considered to adjust the highly imbalanced classes: up-sampling, down-sampling, and synthetic minority oversampling technique (SMOTE). The number of study participants in the training set changed from 4443 to 8464, 422, and 1477 when up-sampling, down-sampling, and SMOTE, respectively, were applied to the in-hospital mortality data of STEMI. The number of participants was 13,422, 430, and 1505 in the datasets of up-sampling, down-sampling, and SMOTE for the in-hospital mortality data of NSTEMI.

Traditional and modified traditional models

TIMI and the updated version of GRACE and ACTION-GWTG were used as the references of the traditional models to compare with ML3,4,8,13,33. The TIMI risk scores for STEMI and NSTEMI were used in this study4,33. The TIMI for STEMI and NSTEMI was developed to predict the 30-day and 14-day mortality, respectively, whereas the prognostic capacity of TIMI for STEMI was stable over multiple time points from 24 h to one year after hospital admission4. GRACE v2.0, in which Anderson et al. updated the initial GRACE risk score in 2014, used non-linear functions to enhance discrimination8. Although it was developed to predict the six-month mortality, it was validated externally over the longer term with an AUC of 0.82 at one and three-year mortality. In another validation study, GRACE v2.0 also showed excellent discrimination with an AUC of 0.91 for predicting the in-hospital mortality34. The updated ACTION-GWTG developed in 2016 had high discrimination with an AUC of 0.88 to predict in-hospital mortality13.

These traditional models were fitted to the training data and modified by recalculating the model parameters. In addition to the original traditional models, the modified traditional model was compared with the ML models.

Analysis and Performance measures

The continuous variables, such as age and weight, are represented as the mean and standard deviation in statistical analysis, while the categorical variables are the frequency and proportion. After standardization, the data were split by random sampling into a training set (80%) for developing the ML-based models and a test set (20%) for internal validation. The performance of the mortality prediction model was evaluated using the test data, and was described by the sensitivity, specificity, accuracy, F1-score, and area under the receiver operating characteristic curves (AUC) in the tables and the receiver operating characteristics (ROC) curve in the plots. The AUC of the ML algorithms was suggested with a 95% confidence interval and was compared with traditional risk stratification (TIMI, GRACE, and ACTION-GWTG) using a DeLong Test35. All analyses were implemented using R software version 4.0.0 (R Development Core Team, Vienna, Austria)36.

Validation

In addition to internal validation using a test set, external validation was performed using the KAMIR-NIH registry, which is a prospective multicenter registry in Korea. The registry enrolled patients diagnosed with AMI at 20 tertiary university hospitals who were eligible for primary PCI from November 2011 to December 2015. The detailed study protocols are published elsewhere37. The performance of the ACTION-GWTG was not estimated because prior peripheral arterial disease was not collected in the KAMIR-NIH registry. Moreover, the three-month mortality was not available due to different follow-up schedules in the registry. The ML models were validated for the in-hospital and 12-month mortality after matching the operational definition of the pre-ED cardiac arrest and abnormal cardiac biomarkers.

Data availability

The data that support the findings of this study are available from KRAMI-RCC, but restrictions apply to the availability of these data. Data are available from the authors upon reasonable request and with permission of KRAMI-RCC.

Abbreviations

- AMI:

-

Acute myocardial infarction

- STEMI:

-

ST-segment elevation myocardial infarction

- NSTEMI:

-

Non-ST segment elevation myocardial infarction

- ML:

-

Machine learning

- TM:

-

Traditional model

- AUC:

-

Areas under the receiver operating characteristic curve

- RF:

-

Random forest

- SVM:

-

Support vector machine

- XGBoost:

-

Extreme Gradient Boosting

- Lasso:

-

Logistic regression regularized with L1 penalty

- Ridge regression:

-

Logistic regression regularized with L2 penalty

- Elastic net:

-

Logistic regression regularized with an elastic net penalty

- RCCVC:

-

Regional Cardiocerebrovascular Center

- KRAMI-RCC:

-

The Korean Registry of Acute Myocardial Infarction for Regional Cardiocerebrovascular Centers

- KAMIR-NIH:

-

Korean Acute Myocardial Infarction Registry-National Institutes of Health

References

Reddy, K., Khaliq, A. & Henning, R. J. Recent advances in the diagnosis and treatment of acute myocardial infarction. World J. Cardiol. 7, 243–276. https://doi.org/10.4330/wjc.v7.i5.243 (2015).

World_Health_Organization. The top 10 causes of death, https://www.who.int/news-room/fact-sheets/detail/the-top-10-causes-of-death

Castro-Dominguez, Y., Dharmarajan, K. & McNamara, R. L. Predicting death after acute myocardial infarction. Trends Cardiovasc. Med. 28, 102–109. https://doi.org/10.1016/j.tcm.2017.07.011 (2018).

Morrow, D. A. et al. TIMI risk score for ST-elevation myocardial infarction: A convenient, bedside, clinical score for risk assessment at presentation: An intravenous nPA for treatment of infarcting myocardium early II trial substudy. Circulation 102, 2031–2037. https://doi.org/10.1161/01.cir.102.17.2031 (2000).

Morrow, D. A. et al. Application of the TIMI risk score for ST-elevation MI in the National Registry of Myocardial Infarction 3. JAMA 286, 1356–1359. https://doi.org/10.1001/jama.286.11.1356 (2001).

Morrow, D. A. et al. An integrated clinical approach to predicting the benefit of tirofiban in non-ST elevation acute coronary syndromes. Application of the TIMI Risk Score for UA/NSTEMI in PRISM-PLUS. Eur. Heart J. 23, 223–229. https://doi.org/10.1053/euhj.2001.2738 (2002).

Fox, K. A. et al. Prediction of risk of death and myocardial infarction in the six months after presentation with acute coronary syndrome: Prospective multinational observational study (GRACE). BMJ 333, 1091. https://doi.org/10.1136/bmj.38985.646481.55 (2006).

Fox, K. A. et al. Should patients with acute coronary disease be stratified for management according to their risk? Derivation, external validation and outcomes using the updated GRACE risk score. BMJ Open 4, e004425. https://doi.org/10.1136/bmjopen-2013-004425 (2014).

Elbarouni, B. et al. Validation of the Global Registry of Acute Coronary Event (GRACE) risk score for in-hospital mortality in patients with acute coronary syndrome in Canada. Am. Heart J. 158, 392–399. https://doi.org/10.1016/j.ahj.2009.06.010 (2009).

Chin, C. T. et al. Risk adjustment for in-hospital mortality of contemporary patients with acute myocardial infarction: The acute coronary treatment and intervention outcomes network (ACTION) registry-get with the guidelines (GWTG) acute myocardial infarction mortality model and risk score. Am. Heart J. 161, 113–122. https://doi.org/10.1016/j.ahj.2010.10.004 (2011).

Kwon, J. M. et al. Deep-learning-based risk stratification for mortality of patients with acute myocardial infarction. PLoS ONE 14, e0224502. https://doi.org/10.1371/journal.pone.0224502 (2019).

McNamara, R. L. et al. Development of a hospital outcome measure intended for use with electronic health records: 30-Day risk-standardized mortality after acute myocardial infarction. Med. Care 53, 818–826. https://doi.org/10.1097/MLR.0000000000000402 (2015).

McNamara, R. L. et al. Predicting in-hospital mortality in patients with acute myocardial infarction. J. Am Coll. Cardiol. 68, 626–635. https://doi.org/10.1016/j.jacc.2016.05.049 (2016).

Gibson, W. J. et al. Machine learning versus traditional risk stratification methods in acute coronary syndrome: A pooled randomized clinical trial analysis. J. Thromb. Thrombolysis 49, 1–9. https://doi.org/10.1007/s11239-019-01940-8 (2020).

Barrett, L. A., Payrovnaziri, S. N., Bian, J. & He, Z. Building computational models to predict one-year mortality in ICU patients with acute myocardial infarction and post myocardial infarction syndrome. AMIA Jt. Summits Transl. Sci. Proc. 2019, 407–416 (2019).

Austin, P. C. & Lee, D. S. Boosted classification trees result in minor to modest improvement in the accuracy in classifying cardiovascular outcomes compared to conventional classification trees. Am. J. Cardiovasc. Dis. 1, 1–15 (2011).

Sherazi, S. W. A., Jeong, Y. J., Jae, M. H., Bae, J. W. & Lee, J. Y. A machine learning-based 1-year mortality prediction model after hospital discharge for clinical patients with acute coronary syndrome. Health Inform. J. 26, 1289–1304. https://doi.org/10.1177/1460458219871780 (2020).

Hsieh, M. H. et al. A fitting machine learning prediction model for short-term mortality following percutaneous catheterization intervention: A nationwide population-based study. Ann. Transl. Med. 7, 732. https://doi.org/10.21037/atm.2019.12.21 (2019).

Mansoor, H., Elgendy, I. Y., Segal, R., Bavry, A. A. & Bian, J. Risk prediction model for in-hospital mortality in women with ST-elevation myocardial infarction: A machine learning approach. Heart Lung. 46, 405–411. https://doi.org/10.1016/j.hrtlng.2017.09.003 (2017).

Shouval, R. et al. Machine learning for prediction of 30-day mortality after ST elevation myocardial infraction: An Acute Coronary Syndrome Israeli Survey data mining study. Int. J. Cardiol. 246, 7–13. https://doi.org/10.1016/j.ijcard.2017.05.067 (2017).

Austin, P. C., Lee, D. S., Steyerberg, E. W. & Tu, J. V. Regression trees for predicting mortality in patients with cardiovascular disease: What improvement is achieved by using ensemble-based methods?. Biom. J. 54, 657–673. https://doi.org/10.1002/bimj.201100251 (2012).

Rott, D. & Leibowitz, D. STEMI and NSTEMI are two distinct pathophysiological entities. Eur. Heart J. 28, 2685; author reply 2685, https://doi.org/10.1093/eurheartj/ehm368 (2007).

Cohen, M. & Visveswaran, G. Defining and managing patients with non-ST-elevation myocardial infarction: Sorting through type 1 vs other types. Clin. Cardiol. 43, 242–250. https://doi.org/10.1002/clc.23308 (2020).

Kingma, J. G. Myocardial infarction: An overview of STEMI and NSTEMI physiopathology and treatment. World J. Cardiovasc. Dis. 08, 498–517. https://doi.org/10.4236/wjcd.2018.811049 (2018).

Halkin, A. et al. Prediction of mortality after primary percutaneous coronary intervention for acute myocardial infarction: The CADILLAC risk score. J. Am. Coll. Cardiol. 45, 1397–1405. https://doi.org/10.1016/j.jacc.2005.01.041 (2005).

Li, Y. M. et al. Machine learning to predict the 1-year mortality rate after acute anterior myocardial infarction in Chinese patients. Ther. Clin. Risk Manag. 16, 1–6. https://doi.org/10.2147/TCRM.S236498 (2020).

Sim, D. S. & Jeong, M. H. Differences in the Korea acute myocardial infarction registry compared with western registries. Korean Circ. J. 47, 811–822. https://doi.org/10.4070/kcj.2017.0027 (2017).

Kim, Y. et al. Current status of acute myocardial infarction in Korea. Korean J. Intern. Med. 34, 1–10. https://doi.org/10.3904/kjim.2018.381 (2019).

Ranganathan, P. & Aggarwal, R. Common pitfalls in statistical analysis: Understanding the properties of diagnostic tests—Part 1. Perspect. Clin. Res. 9, 40–43. https://doi.org/10.4103/picr.PICR_170_17 (2018).

Kim, A., Yoon, S. J., Kim, Y. A. & Kim, E. J. The burden of acute myocardial infarction after a regional cardiovascular center project in Korea. Int. J. Qual. Health Care 27, 349–355. https://doi.org/10.1093/intqhc/mzv064 (2015).

Cho, S. G., Kim, Y., Choi, Y. & Chung, W. Impact of regional cardiocerebrovascular centers on myocardial infarction patients in Korea: A fixed-effects model. J. Prev. Med. Public Health 52, 21–29. https://doi.org/10.3961/jpmph.18.154 (2019).

Friedman, J., Hastie, T. & Tibshirani, R. Regularization paths for generalized linear models via coordinate descent. J. Stat. Softw. 33, 1–22 (2010).

Antman, E. M. et al. The TIMI risk score for unstable angina/non-ST elevation MI: A method for prognostication and therapeutic decision making. JAMA 284, 835–842. https://doi.org/10.1001/jama.284.7.835 (2000).

Firdous, S., Mehmood, M. A. & Malik, U. Validity of GRACE risk score as a prognostic marker of in-hospital mortality after acute coronary syndrome. J. Coll. Physicians Surg. Pak. 27, 597–601 (2017).

DeLong, E. R., DeLong, D. M. & Clarke-Pearson, D. L. Comparing the areas under two or more correlated receiver operating characteristic curves: A nonparametric approach. Biometrics 44, 837–845 (1988).

R Core Team (2020). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/.

Kim, J. H. et al. Multicenter cohort study of acute myocardial infarction in Korea-interim analysis of the Korea acute myocardial infarction registry-national institutes of health registry. Circ. J. 80, 1427–1436. https://doi.org/10.1253/circj.CJ-16-0061 (2016).

Funding

WKL received funding for this work from the Bio & Medical Technology Development Program of the National Research Foundation funded by the Korean government (MSIT) (2019M3E5D1A0206962012). This research was supported by a fund (2016-ER6304-02) by Research of Korea Centers for Disease Control and Prevention. The funders had no role in study design, data collection, analysis, decision to publish, or manuscript preparation.

Author information

Authors and Affiliations

Contributions

W.L. and S.J. performed data analysis. J.L and J.W.B contributed to conceptualization and study idea. S.H.C and S.I.W contributed to the data collection and wrote the first draft. W.K.L is the principal investigator, contributed to the study idea and design and wrote the first draft. M.H.J provided the KAMIR-NIH data and contributed to the revision. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lee, W., Lee, J., Woo, SI. et al. Machine learning enhances the performance of short and long-term mortality prediction model in non-ST-segment elevation myocardial infarction. Sci Rep 11, 12886 (2021). https://doi.org/10.1038/s41598-021-92362-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-021-92362-1

This article is cited by

-

Machine learning prediction of mortality in Acute Myocardial Infarction

BMC Medical Informatics and Decision Making (2023)

-

The predictive value of machine learning for mortality risk in patients with acute coronary syndromes: a systematic review and meta-analysis

European Journal of Medical Research (2023)

-

A simple APACHE IV risk dynamic nomogram that incorporates early admitted lactate for the initial assessment of 28-day mortality in critically ill patients with acute myocardial infarction

BMC Cardiovascular Disorders (2022)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.