Abstract

In recent years the emergence of high-performance virtual reality (VR) technology has opened up new possibilities for examining context effects in psychological studies. The opportunity to create ecologically valid stimulation in a highly controlled lab environment is especially relevant for studies of psychiatric disorders, where it can be problematic to confront participants with certain stimuli in real life. However, before VR can be applied widely and with confidence, it is important to establish that commonly used behavioral tasks generate reliable data in a VR setting. One field of research that could benefit greatly from VR-applications is the study of reactivity to addiction-related cues (cue-reactivity) in participants suffering from gambling disorder. Here we tested the reliability of a commonly used temporal discounting task in a novel VR set-up designed for the concurrent assessment of behavioral and psychophysiological cue-reactivity in gambling disorder. On two days, thirty-four healthy non-gambling participants explored two rich and navigable VR-environments (neutral: café vs. gambling-related: casino and sports-betting facility), while their electrodermal activity was measured using remote sensors. In addition, participants completed the temporal discounting task implemented in each VR-environment. On a third day, participants performed the task in a standard lab testing context. We then used comprehensive computational modeling with both standard softmax and drift diffusion model (DDM) choice rules to assess the reliability of discounting model parameters obtained in VR. Test–retest reliability estimates were good to excellent for the discount rate log(k), whereas they were poor to moderate for additional DDM parameters. Differences in model parameters between standard lab testing and VR, reflecting reactivity to the different environments, were mostly numerically small and of inconclusive directionality.
Finally, while exposure to VR generally increased tonic skin conductance, this effect was not modulated by the neutral versus gambling-related VR-environment. Taken together, this proof-of-concept study in non-gambling participants demonstrates that temporal discounting measures obtained in VR are reliable, suggesting that VR is a promising tool for applications in computational psychiatry, including studies on cue-reactivity in addiction.


Introduction

Recent research has exploited the development of high-performance virtual reality (VR) technology to increase the ecological validity of stimuli presented in studies of cue-exposure1,2,3, counterconditioning4, equilibrium training5, social gazing6 and gambling behavior in healthy control participants7. VR has furthermore been shown to increase immersion and arousal during gambling games8. However, before VR can be widely applied with confidence, it is important to establish that commonly applied behavioral tasks still yield reliable data in a VR context. Research on psychiatric disorders, where one goal is to develop reliable diagnostic markers based on behavioral tasks and model-based computational approaches, would benefit from behavioral tasks that produce reliable parameters at the single-participant level in VR.

A core characteristic of many psychiatric and neurological disorders is a detrimental change in decision-making processes. This is especially evident in addiction-related disorders such as substance abuse9,10,11 or gambling disorder12,13,14. One approach to study such changes in decision making is computational psychiatry15, which employs theoretically grounded mathematical models to examine cognitive performance in relation to psychiatric disorders. Such a model-based approach allows for a better quantification of the underlying latent processes16.

One process that has been implicated in a range of psychiatric disorders is the discounting of reward value over time (temporal discounting): both steep and shallow discounting are associated with different psychiatric conditions9. In temporal discounting tasks, participants make repeated choices between a fixed immediate reward and larger but temporally delayed rewards17. Based on binary choices and/or response time (RT) distributions, the degree to which participants discount the value of future rewards as a function of delay provides a measure of individual impulsivity. Increased temporal discounting is thought to be a trans-diagnostic marker with relevance for a range of psychiatric disorders9, with addictions and related disorders being prominent examples18,19.

There is preliminary evidence that temporal discounting might be more pronounced when addiction-related cues are present. Participants who suffer from gambling disorder, for instance, tend to exhibit steeper discounting12,20 and increased risk-taking21 in the presence of gambling-related stimuli or environments. These findings resonate with theories of drug addiction such as incentive sensitization theory22, which emphasize a prominent role for addiction-related cues in the maintenance of drug addiction (see below). Identifying the mechanisms underlying such behavioral patterns and how they are modulated by addiction-related cues is essential to the planning and execution of successful interventions that aim to reverse these changes in decision-making23,24.

Accordingly, the concept of cue-reactivity plays a prominent role in research on substance use disorders25, but has more recently also been investigated in behavioral addictions such as gambling disorder26. Cue-reactivity refers to conditioned responses to addiction-related cues in the environment and is thought to play a major role in the maintenance of addiction. Cue-reactivity can manifest in behavioral measures, as described above for temporal discounting and risk-taking, but also in subjective reports and/or in physiological measures25. Incentive-Sensitization Theory22,27 states that neural circuits mediating the incentive motivation to obtain a reward become over-sensitized to addiction-related cues, giving rise to craving. These motivational changes are thought to be mediated by dopaminergic pathways of the mesocorticolimbic system28,29,30. In line with this, craving following cue exposure correlates with a modulation of striatal value signals during temporal discounting12, and exposure to drug-related cues increases dopamine release in striatal circuits in humans30. While studying these mechanisms in substance use disorders is certainly of value, it is also problematic because substances might have direct effects on the underlying neural substrates. Behavioral addictions, such as gambling disorder, however, might offer a somewhat less perturbed view on the underlying mechanisms.

Studies probing cue-reactivity in participants suffering from gambling disorder have typically used either picture stimuli12,13,21,31,32,33,34,35,36,37,38 or real-life gambling environments (i.e. gambling facilities)20. Both methods come with advantages and disadvantages. While presenting pictures in a controlled lab environment enables researchers to minimize the influence of noise factors and simplifies the assessment of physiological variables, it lacks the ecological validity of real-life environments. Conversely, a field study in a real gambling outlet arguably has high ecological validity but lacks control over confounding factors and makes it difficult to obtain physiological measures.

By equipping participants with head-mounted VR-glasses and sufficient space to navigate within the VR-environment, a strong sense of immersion can be created, which in turn generates more realistic stimulation. In this way VR also offers a potential solution for the problem of ecologically valid addiction-related stimuli for studies in the field of cue-reactivity7,8. For example, Bouchard et al.2 developed a VR-design that is built to provide ecologically valid stimuli for participants suffering from gambling disorder by placing them in a virtual casino. The design can be used in treatment in order to test reactions and learned cognitive strategies in a secure environment. The present study builds upon this idea to create a design that allows assessment of behavioral, subjective and physiological cue-reactivity in VR-environments. Participants are immersed in two rich and navigable VR environments that either represent a (neutral) café environment or a gambling-related casino environment. Within these environments, behavioral cue-reactivity can be measured via behavioral tasks implemented in VR. Given that immersion in the virtual environment takes place in a controlled lab setting, the measurement of physiological variables like electrodermal activity39 and heart rate, as indicators of physiological cue-reactivity25,26, is also easily accommodated.

Studies using computational modeling to assess latent processes underlying learning and decision-making increasingly include not only binary decisions, but also the response times (RTs) associated with these decisions, e.g. via sequential sampling models such as the drift diffusion model (DDM)40. This approach has several potential advantages. First, leveraging the information contained in the full RT distributions can improve the stability of parameter estimates41,42. Second, by conceiving decision making as a dynamic diffusion process, a more detailed picture of the underlying latent processes emerges43,44,45,46,47. Recent studies, for instance, applied these techniques to temporal discounting, where they revealed novel insights into the effects of pharmacological manipulation of the dopamine system on choice dynamics46. Likewise, we applied these techniques to examine the processes underlying reinforcement learning impairments in gambling disorder48 and decision-making alterations following medial orbitofrontal cortex lesions45. Importantly, most standard lab-based testing settings use keyboards, button boxes and computer screens to record responses and display stimuli during behavioral tasks. In contrast, in the present study we used VR-controllers in a 3D virtual space. This represents a fundamentally different response mode: in VR, participants have to physically move the controller to the location of the chosen option and then press a button to indicate their choice, which adds motor complexity. Particularly for RT-based modeling, a crucial question is therefore whether responses obtained via VR-controllers support comprehensive RT-based computational modeling, as previously done with standard response devices. We therefore also explored the applicability of drift diffusion modeling to behavioral data obtained in VR.

Besides validating our VR-design with a healthy cohort of participants, the present study investigated the stability of parameters derived from temporal discounting tasks, in particular the discount rate log(k). Recently, the reliability of behavioral tasks as trait indicators of impulsivity and cognitive control has been called into question49,50, in particular when compared to questionnaire-based measures of self-control49. It has been argued that the very property that makes behavioral tasks attractive for group-based comparisons renders them less reliable as trait markers51. Specifically, Hedge et al.51 argue that tasks with low between-participant variability produce robust group effects in experimental studies and are therefore employed frequently. However, precisely because of this low between-participant variability, some of these tasks show reduced test–retest reliability for individual participants. Notably, Enkavi et al.49 reported a reliability of 0.65 for the discount rate k, the highest of all behavioral tasks examined in that study and comparable to the reliability estimates of the questionnaire-based measures. This is in line with previous studies on the reliability of k, which provided estimates ranging from 0.7 to 0.77 (refs. 52,53). Importantly, as outlined above, both the response mode and the contextual setting of VR-based experiments differ substantially from the standard lab-based testing situations employed in previous reliability studies of temporal discounting49,52,53,54,55. It is therefore an open question whether temporal discounting measures obtained in VR exhibit a reliability comparable to that of the standard lab-based tests typically used in psychology.

Taken together, by examining healthy non-gambling participants on different days and under different conditions (neutral vs. gambling-related VR-environment, standard lab-based testing situation), we addressed the issue of reliability of temporal discounting in virtual versus standard lab environments. We furthermore explored the feasibility of applying the drift diffusion model to RTs obtained via VR-compatible controllers. Finally, we also examined physiological reactivity during exploration of the different virtual environments. The specific virtual environments employed here are ultimately intended for examining these processes in gambling disorder (e.g. the setup includes a gambling-related and a neutral café environment). However, by examining the reliability of model-based analyses of decision-making in lab-based testing versus testing in different VR-environments in a group of young non-gambling controls, the present study has more general implications for the application of behavioral and psychophysiological testing in virtual environments.

We hypothesized that the data obtained on different days and under different conditions would yield little evidence for systematic shifts in temporal discounting behavior within a group of healthy non-gambling participants, which would suggest that the different environments in our VR-design have negligible effects. Furthermore, we hypothesized that temporal discounting would show high reliability, strengthening the case that temporal discounting is stable over time and can be applied in VR. Finally, we hypothesized that latent decision variables could be captured with the DDM in a VR context.

Methods

Participants

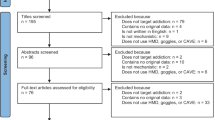

Thirty-four healthy participants (25 female) aged between 18 and 44 (mean = 26.41, std = 6.44) were invited to the lab on three different occasions. Participants were recruited via flyers at the University of Cologne and via postings in local internet forums. No participant indicated a history of traumatic brain injury, psychiatric or neurological disorders or severe motion sickness. Participants were additionally screened for gambling behavior using the questionnaire Kurzfragebogen zum Glücksspielverhalten (KFG)56, which meets the psychometric criteria for a reliable and valid screening instrument. No participant showed a high level of gambling affinity (> 15 points on the KFG; mean = 1.56, std = 2.61, range: 0 to 13).

Participants provided informed written consent prior to their participation, and the study procedure was approved by the Ethics Board of the German Psychological Society. The procedure was in accordance with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

VR-setup

The VR-environments were presented using a wireless HTC VIVE head-mounted display (HMD). The setup provided a 110° field of view, a 90 Hz refresh rate and a resolution of 1440 × 1600 pixels per eye. Participants had about 6 m² of open space to navigate the virtual environment. For the execution of the behavioral tasks and additional movement control, participants held one VR-controller in their dominant hand. The VR-software was run on a PC with the following specifications: CPU: Intel Core i7-3600, Memory: 32.0 GB RAM, Windows 10, GPU: NVIDIA GeForce GTX 1080 (Ti). The VR-environments themselves were designed in Unity. Auditory stimuli were presented using on-ear headphones.

VR-environments

The two VR-environments both consisted of a starting area and an experimental area. The starting area was the same for both VR-environments. It consisted of a small rural shopping street and a small park, accompanied by low street noise. This area was designed for familiarization with the VR-setup and for the initial exploration phase. The experimental areas differed between the two environments. In the VRneutral environment, the experimental area contained a small café with a buffet (Fig. 1a–c), and participants could hear low conversations and music. In the gambling-related environment (VRgambling), it contained a small casino with slot machines and a sports betting area (Fig. 1d–f), with the sounds of slot machines and sports as the audio backdrop. The floorplans of both experimental areas were identical but mirrored for the café (Fig. 1a, d). Both experimental areas additionally included eight animated human avatars. These avatars performed steady and non-repetitive behaviors such as gambling (casino) and ordering food (café). The entrances to both experimental areas (café and casino) were located at the same position within the starting area of the VR-environments and were marked by corresponding signs.

Experimental areas of the VR-environments. (a) Floorplan of the café within the VR-neutral environment. (b) View of the main room of the café. (c) View of the buffet area of the café. (d) Floorplan of the casino within the VR-gambling environment. (e) View of the main room of the casino. (f) View of the sports bar within the casino.

Experimental procedure

Participants were invited to the VR lab for three sessions on three different days. The time between sessions ranged from one to nineteen days (mean = 3.85, std = 3.36). During the three sessions participants either explored one of two different VR-environments (VR-sessions) followed by the completion of two behavioral tasks, or simply performed the same two behavioral tasks in a standard lab-testing context (Lab-session). In VR-sessions, electrodermal activity (EDA)39 was measured during a non-VR baseline period and during the exploration of the VR-environments. The order of the sessions was pseudorandomized. At the first session, regardless of whether it was a VR-session, participants arrived at the lab and the behavioral tasks were explained in detail. If the session was a Lab-session, participants proceeded with the two behavioral tasks. If the session was the first of the VR-sessions, participants were subsequently familiarized with the VR-equipment and its handling. Participants were seated and a five-minute EDA baseline was measured (baseline phase). For both VR-sessions participants were then helped to put on the VR-equipment and entered the VR-environments. Within the VR-environments participants first explored the starting area for 5 min (first exploration phase). After these five minutes participants were asked to enter the experimental area of the environment (either the café or the casino) (Fig. 1). Participants were instructed to explore the interior of the experimental area for five minutes (second exploration phase). Each of the three phases was later binned into five one-minute intervals, labeled B1 to B5 for the baseline phase, F1 to F5 for the first exploration phase and S1 to S5 for the second exploration phase. During the exploration the experimenter closely monitored the participants and alerted them if they were about to leave the designated physical VR-space.
After the second exploration phase participants were asked to proceed to a terminal within the VR-environment on which the behavioral tasks were presented.

Physiological measurements

EDA was measured using a BioNomadix-PPGED wireless remote sensor together with a Biopac MP160 data acquisition system (Biopac Systems, Santa Barbara, CA, USA). A GSR100C amplifier module with a gain of 5 V, a 10 Hz low-pass filter and the high-pass filter set to DC was included in the recording system. The system was connected to the acquisition computer running the AcqKnowledge software. Triggers for events within the VR-environments were sent from the VR-PC to the acquisition PC via digital channels. Disposable Ag/AgCl electrodes were attached to the thenar and hypothenar eminences of the non-dominant palm. Isotonic paste (Biopac Gel 101) was used to ensure optimal signal transmission. The signal was measured in microsiemens (µS).

Behavioral tasks

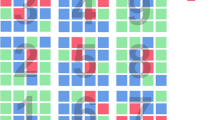

Participants performed the same two behavioral tasks, with slightly varied rewards and choices, in each of the three sessions: a temporal discounting task17 and a 2-step sequential decision-making task57,58. Results from the 2-step task will be reported separately. In the temporal discounting task participants repeatedly chose between an immediately available (smaller-but-sooner, SS) monetary reward of 20 Euros and larger-but-later (LL) temporally delayed monetary rewards. The LL options were multiples of the SS option (range 1.025 to 3.85) combined with different temporal delays (range 1 to 122 days). We constructed three sets of six delays and 16 LL options. Each set had the same mean delay and the same mean LL option. Combining each delay with every LL option within a set resulted in three sets of 96 trials (6 × 16). The order of presentation of the trial sets was counterbalanced across participants and sessions. All temporal discounting decisions were hypothetical59,60. In the VR-version of the task two yellow squares were presented to the participants (Fig. 2). One depicted the smaller offer of 20 Euros now, while the other depicted the delayed larger offer. In the lab-based testing session, the offers were presented in the same way, except that the color scheme was white text on a black background. Offers were randomly assigned to the left/right side of the display and presented until a decision was made. The next trial started 0.5 to 1 s after the decision. Participants indicated their choice either by aiming the VR-controller at the preferred option and pulling the trigger (VR-sessions) or by pressing the corresponding arrow key on the keyboard (Lab-session).

Presentation of the temporal discounting task in VR. Participants had to repeatedly decide between a small but immediate reward (SS) and larger but temporally delayed rewards (LL). Amounts and delays were presented in yellow squares. During the inter-trial intervals (0.5–1 s) these squares contained only question marks. Participants indicated their choice by pointing the VR-controller at one of the yellow squares and pulling the trigger.
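
To make the factorial trial structure concrete, the following sketch crosses six delays with 16 LL multipliers to produce one 96-trial set and randomizes the side on which the LL option appears. The delay and multiplier values are hypothetical placeholders that merely fall within the reported ranges (1–122 days; 1.025–3.85 × SS); they are not the study's sets, and the matching of mean delay and mean LL amount across the three sets is not reproduced here.

```python
import itertools
import random

SS_AMOUNT = 20.0  # immediate (SS) reward in Euros, as in the task

# Hypothetical example values within the reported ranges; NOT the study's sets.
DELAYS = [1, 7, 21, 45, 80, 122]  # days
MULTIPLIERS = [1.025, 1.05, 1.10, 1.15, 1.25, 1.35, 1.50, 1.65,
               1.80, 2.00, 2.25, 2.50, 2.85, 3.20, 3.50, 3.85]  # x SS amount


def build_trial_set(delays, multipliers, seed=None):
    """Cross every delay with every LL multiplier, then shuffle trial order."""
    rng = random.Random(seed)
    trials = []
    for delay, mult in itertools.product(delays, multipliers):
        trials.append({
            "delay": delay,
            "ll_amount": round(SS_AMOUNT * mult, 2),
            "ll_on_left": rng.random() < 0.5,  # random left/right placement
        })
    rng.shuffle(trials)
    return trials


trial_set = build_trial_set(DELAYS, MULTIPLIERS, seed=1)
print(len(trial_set))  # 6 delays x 16 LL options = 96 trials
```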

Model-free discounting data analysis

The behavioral data from the temporal discounting task were analyzed using several complementary approaches. First, we used a model-free approach that involved no a priori assumptions about the mathematical shape of the discounting function. For each delay, we estimated the LL reward magnitude at which the subjective value of the LL reward equaled that of the SS reward (indifference point). This was done by fitting logistic functions to the participants' choices, separately for each delay. Subsequently, these indifference points were plotted against the corresponding delays, and the area under the resulting curve (AUC) was calculated using standard procedures61. AUC values were derived for each participant and testing session, and further analyzed with the intra-class correlation (ICC) and the Friedman test, a non-parametric equivalent of the repeated-measures ANOVA.
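
The model-free pipeline can be sketched as follows. It assumes one logistic fit of P(choose LL) against LL amount per delay, with the indifference point taken as the amount at which this probability equals 0.5. For the AUC step it assumes a common normalization adapted to this design: each indifference point is converted into a discount fraction SS / indifference point, delays are scaled by the longest delay, the point (delay 0, value 1) is prepended, and the trapezoidal rule is applied. Function names and the small ridge penalty are illustrative choices, not the authors' code.

```python
import numpy as np


def indifference_point(ll_amounts, chose_ll, ridge=1e-3, n_iter=100):
    """Fit P(choose LL) = logistic(b0 + b1 * (amount - mean)) for ONE delay and
    return the LL amount at which P = 0.5 (nan if b1 <= 0)."""
    x = np.asarray(ll_amounts, dtype=float)
    y = np.asarray(chose_ll, dtype=float)
    x_mean = x.mean()
    X = np.column_stack([np.ones_like(x), x - x_mean])  # centered for stability
    penalty = np.diag([1e-6, ridge])  # small ridge keeps the Hessian invertible

    def loglik(b):
        eta = X @ b
        return np.sum(y * eta - np.logaddexp(0.0, eta)) - 0.5 * b @ penalty @ b

    b = np.zeros(2)
    for _ in range(n_iter):
        p = 0.5 * (1.0 + np.tanh(0.5 * (X @ b)))  # numerically stable logistic
        grad = X.T @ (y - p) - penalty @ b
        hess = X.T @ (X * (p * (1.0 - p))[:, None]) + penalty
        step = np.linalg.solve(hess, grad)  # Newton (IRLS) direction
        t = 1.0
        while loglik(b + t * step) < loglik(b) and t > 1e-8:
            t *= 0.5  # backtrack so every update increases the log-likelihood
        b = b + t * step
    b0, b1 = b
    return x_mean - b0 / b1 if b1 > 0 else float("nan")


def discount_auc(delays, indiff_points, ss_amount=20.0):
    """Normalized AUC: delays (ascending) scaled to [0, 1], values = SS / indifference
    point, with the point (delay 0, value 1) prepended; trapezoidal rule."""
    d = np.concatenate([[0.0], np.asarray(delays, dtype=float)])
    v = np.concatenate([[1.0], ss_amount / np.asarray(indiff_points, dtype=float)])
    d = d / d.max()
    return float(np.sum(np.diff(d) * (v[1:] + v[:-1]) / 2.0))
```

An AUC of 1 corresponds to no discounting (every indifference point equals the SS amount); steeper discounting pushes indifference points up and the AUC towards 0.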

Computational modeling

Previous research on how delay affects the valuation of a reward has proposed that value declines hyperbolically with delay62,63. Therefore, the rate of discounting for each participant was also determined using a cognitive modeling approach based on hierarchical Bayesian estimation16. A hierarchical model was fit to the data of all participants, separately for each session (see below). We applied a hyperbolic discounting model (Eq. 1):

\[ \mathrm{SV}(LL) = \frac{A}{1 + kD} \qquad (1) \]

Here, SV(LL) denotes the subjective (discounted) value of the LL. A and D represent the amount and the delay of the LL, respectively. The parameter k governs the steepness of the value decay over time, with higher values of k indicating steeper discounting of value over time. As the distribution of the discount rate k is highly skewed, we estimated the parameter in log-space (log[k]), which avoids numerical instability in estimates close to 0.
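
A minimal sketch of Eq. 1, assuming log(k) denotes the natural logarithm (the base is not specified in the text). Estimating in log-space means that any real-valued log_k maps to a strictly positive k. The helper indifference_k is a hypothetical illustration that returns the k at which a given LL option is worth exactly the SS amount, obtained by solving A / (1 + kD) = SS for k.

```python
import math


def hyperbolic_sv(amount, delay, log_k):
    """Eq. 1: SV = A / (1 + k * D), with k = exp(log_k) (natural log assumed)."""
    k = math.exp(log_k)  # any real log_k gives a strictly positive k
    return amount / (1.0 + k * delay)


def indifference_k(ss_amount, ll_amount, delay):
    """Hypothetical helper: the k at which A / (1 + k * D) equals the SS amount."""
    return (ll_amount / ss_amount - 1.0) / delay


# 40 Euros in 30 days versus 20 Euros now:
k_star = indifference_k(20.0, 40.0, 30.0)           # 1/30 per day
print(hyperbolic_sv(40.0, 30.0, math.log(k_star)))  # approximately 20.0
print(hyperbolic_sv(40.0, 30.0, math.log(0.01)))    # shallower discounting: about 30.77
```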

The hyperbolic model was then combined with two different choice rules, a softmax action selection rule64 and the drift diffusion model44. For softmax action selection, the probability of choosing the LL option on trial t is given by Eq. (2).
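
\[ P(LL)_t = \frac{\exp\left(\beta \cdot \mathrm{SV}(LL_t)\right)}{\exp\left(\beta \cdot \mathrm{SV}(SS_t)\right) + \exp\left(\beta \cdot \mathrm{SV}(LL_t)\right)} \qquad (2) \]
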
Here, the \(\beta\)-parameter determines the stochasticity of choices with respect to a given valuation model. A \(\beta\) of 0 indicates that choices are random, whereas higher \(\beta\) values indicate a stronger dependency of choices on option values. The resulting best-fitting parameter estimates were used to compute ICCs and to test for systematic session effects via comparison of the posterior distributions of group-level parameters.
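
The hyperbolic model and the softmax rule combine into a per-trial choice likelihood. The sketch below assumes the SS option is undiscounted (SV(SS) = 20 Euros at delay 0) and writes the two-option softmax in its algebraically equivalent logistic form in β · (SV(LL) − SV(SS)), which avoids overflow for large values. It is a single-participant likelihood for illustration only; the study estimated parameters hierarchically in JAGS rather than with this code.

```python
import math


def p_choose_ll(sv_ll, sv_ss, beta):
    """Two-option softmax, written as a logistic function of the value difference."""
    z = beta * (sv_ll - sv_ss)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # branch for negative z avoids overflow in exp(-z)
    return ez / (1.0 + ez)


def neg_log_lik(log_k, beta, trials, ss_amount=20.0):
    """trials: iterable of (ll_amount, delay, chose_ll) tuples for one participant."""
    k = math.exp(log_k)
    nll = 0.0
    for amount, delay, chose_ll in trials:
        p = p_choose_ll(amount / (1.0 + k * delay), ss_amount, beta)
        p = min(max(p, 1e-12), 1.0 - 1e-12)  # guard against log(0)
        nll -= math.log(p if chose_ll else 1.0 - p)
    return nll
```

With β = 0 every choice has probability 0.5 regardless of log(k), which is why β is needed to link valuations to observed choice consistency.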

Next, we incorporated response times (RTs) into the model by replacing the softmax choice rule with the drift diffusion model (DDM)43,44,45,46. The DDM models choices between two options as a noisy evidence accumulation process that terminates as soon as the accumulated evidence exceeds one of two boundaries. In this analysis the upper boundary was set to represent LL choices, and the lower boundary SS choices. RTs for choices of the immediate reward were multiplied by −1 prior to model estimation. To prevent outliers in the RT data from negatively impacting model fit, the 2.5% slowest and fastest trials of each participant were excluded from the analysis44,45. In the DDM the RT on trial t is distributed according to the Wiener first passage time (wfpt) distribution (Eq. 3).

\[ \mathrm{RT}_t \sim \mathrm{wfpt}(\alpha, \tau, z, v_t) \qquad (3) \]

Here α represents the boundary separation, which models the tradeoff between speed and accuracy. τ represents the non-decision time, reflecting perception and response preparation times. The starting point of the diffusion process is given by z, which models a potential bias towards one of the boundaries. Finally, the rate of evidence accumulation is given by the drift rate \(v\).
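
To illustrate what α, τ, z and v do, the following sketch simulates choices and RTs from a Wiener diffusion process by Euler discretization (step dt, unit noise), with z expressed as a relative starting point between 0 (lower, SS boundary) and 1 (upper, LL boundary). It then applies the preprocessing described above: SS RTs are sign-flipped and the 2.5% fastest and slowest trials are dropped. The simulator is only an approximation for intuition; model fitting in the study used the JAGS Wiener module, which evaluates the wfpt likelihood directly rather than by simulation.

```python
import numpy as np


def simulate_ddm(v, alpha, tau, z, n_trials, dt=0.001, max_t=10.0, seed=None):
    """Euler simulation of a Wiener diffusion process with unit noise.

    v: drift rate (scalar or one value per trial); alpha: boundary separation;
    tau: non-decision time (s); z: relative starting point in (0, 1).
    Returns choices (1 = upper/LL, 0 = lower/SS) and RTs in seconds
    (nan for trials that had not terminated by max_t).
    """
    rng = np.random.default_rng(seed)
    v = np.broadcast_to(np.asarray(v, dtype=float), (n_trials,))
    x = np.full(n_trials, z * alpha)  # evidence starts at z * alpha
    rt = np.full(n_trials, np.nan)
    active = np.ones(n_trials, dtype=bool)
    t = 0.0
    while active.any() and t < max_t:
        t += dt
        x[active] += v[active] * dt + np.sqrt(dt) * rng.standard_normal(int(active.sum()))
        hit = active & ((x >= alpha) | (x <= 0.0))  # boundary crossings this step
        rt[hit] = t
        active &= ~hit
    choices = (x >= alpha).astype(int)
    return choices, rt + tau


def signed_trimmed_rts(choices, rts, trim=0.025):
    """Sign-code RTs (SS choices negative) and drop the fastest and slowest
    `trim` proportion of a participant's trials."""
    keep = ~np.isnan(rts)
    choices, rts = choices[keep], rts[keep]
    lo, hi = np.quantile(rts, [trim, 1.0 - trim])
    inside = (rts >= lo) & (rts <= hi)
    signed = np.where(choices == 1, rts, -rts)
    return signed[inside]


choices, rts = simulate_ddm(v=1.0, alpha=2.0, tau=0.3, z=0.5, n_trials=2000, seed=0)
signed = signed_trimmed_rts(choices, rts)
```

With a positive drift and an unbiased start, most simulated choices terminate at the upper (LL) boundary; lowering z or v shifts choices towards SS.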

We first fit a null model (DDM0), in which the value difference between the two options was not included, such that DDM parameters were constant across trials45,46. We then used two different temporal discounting DDMs, in which the value difference between options modulated trial-wise drift rates. This was done using either a linear (DDML) or a non-linear sigmoid (DDMS) linking function47. In the DDML, the drift rate \(v\) in each trial depends linearly on the trial-wise scaled value difference between the LL and the SS options (Eq. 4)44. The parameter \(v_{{{\text{coeff}}}}\) maps the value differences onto \(v\) and scales them to the DDM:

\[ v_t = v_{\text{coeff}} \cdot \left(\mathrm{SV}(LL_t) - \mathrm{SV}(SS_t)\right) \qquad (4) \]

One drawback of a linear representation of the relationship between the drift-rate \(v\) and trial-wise value differences is that \(v\) might increase infinitely with high value differences, which can lead the model to under-predict RTs for high value differences45. In line with previous work45,46 we thus included a third version of the DDM, that assumes a non-linear sigmoidal mapping from trial-wise value differences to drift rates (Eqs. 5 and 6)43:
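
\[ m_t = v_{\text{coeff}} \cdot \left(\mathrm{SV}(LL_t) - \mathrm{SV}(SS_t)\right) \qquad (5) \]

\[ v_t = S(m_t) = \frac{2 \cdot v_{\max}}{1 + \exp(-m_t)} - v_{\max} \qquad (6) \]
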
Here, the linear mapping function from the DDML is additionally passed through a sigmoid function S with the asymptote \(v\)max, causing the relationship between \(v\) and the scaled trial-wise value difference m to asymptote at \(v\)max.
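
The difference between the linear and sigmoid linking functions can be checked numerically. This sketch assumes the sigmoid S takes the symmetric form 2·v_max / (1 + exp(−m)) − v_max used in previous DDM work on temporal discounting, and evaluates it via the algebraically identical expression v_max · tanh(m / 2), which is numerically safer for large |m|. Function and argument names are illustrative.

```python
import math


def drift_linear(sv_ll, sv_ss, v_coeff):
    """DDM_L: drift rate proportional to the value difference (unbounded)."""
    return v_coeff * (sv_ll - sv_ss)


def drift_sigmoid(sv_ll, sv_ss, v_coeff, v_max):
    """DDM_S: the linear term m is squashed into (-v_max, v_max).
    2 * v_max / (1 + exp(-m)) - v_max == v_max * tanh(m / 2)."""
    m = v_coeff * (sv_ll - sv_ss)
    return v_max * math.tanh(m / 2.0)


for diff in (1.0, 10.0, 100.0):
    print(diff, drift_linear(diff, 0.0, 0.1), drift_sigmoid(diff, 0.0, 0.1, 2.0))
```

For large value differences the linear drift keeps growing, whereas the sigmoid drift saturates near v_max, which is what prevents the model from predicting implausibly fast RTs for very easy choices.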

We have previously reported detailed parameter recovery analyses for the DDMS in the context of value-based decision-making tasks such as temporal discounting45, which revealed that both subject-level and group-level parameters recovered well.

Hierarchical Bayesian models

All models were fit to the data of all participants in a hierarchical Bayesian estimation scheme, separately for each session, resulting in independent estimates for each participant per session. Participant-level parameters were assumed to be drawn from group-level Gaussian distributions, the means and precisions of which were again estimated from the data. Posterior distributions were estimated via Markov Chain Monte Carlo in the R programming language65 using the JAGS software package66. For the DDMs, the Wiener module for JAGS was used67. For the group-level means, uniform priors over numerically plausible parameter ranges were chosen (Table 1). Priors for the precision of the group-level distributions were Gamma(0.001, 0.001). Convergence of the chains was assessed with the R-hat statistic68, and values between 1 and 1.01 were considered acceptable. Relative model fit was compared using the Deviance Information Criterion (DIC), where lower values reflect a better model fit69.
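
As a rough illustration of the convergence criterion, the classic Gelman–Rubin potential scale reduction factor compares between-chain and within-chain variance for one parameter. This sketch implements the basic, non-split version; the R package coda's gelman.diag(), commonly applied to JAGS output, additionally applies a degrees-of-freedom correction, so its values can differ slightly. Which implementation was used in the study is not stated.

```python
import numpy as np


def gelman_rubin_rhat(chains):
    """Basic (non-split) potential scale reduction factor for one parameter.
    chains: array of shape (n_chains, n_samples)."""
    chains = np.asarray(chains, dtype=float)
    m, n = chains.shape
    b = n * chains.mean(axis=1).var(ddof=1)  # between-chain variance
    w = chains.var(axis=1, ddof=1).mean()    # mean within-chain variance
    var_hat = (n - 1) / n * w + b / n        # pooled estimate of posterior variance
    return float(np.sqrt(var_hat / w))
```

Values close to 1 indicate that the chains sample the same distribution; a chain stuck in a different region inflates the between-chain term and pushes R-hat above the 1.01 cutoff.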

Systematic session effects on model parameters

Potential systematic session effects on the group-level posterior distributions of parameters of interest were analyzed by overlaying the posterior distributions of each group-level parameter for the different sessions. Here we report the means of the posteriors of the estimated group-level parameters and of the difference distributions between them, the 95% highest density intervals (HDI) for both, as well as directional Bayes Factors (dBF), which quantify the degree of evidence for reductions versus increases in a parameter. Because the priors for the group effects are symmetric, this dBF can simply be defined as the ratio of the posterior mass of the difference distribution above zero to the posterior mass below zero70. Directional Bayes Factors above 3 are interpreted as moderate evidence in favor of a positive effect, while Bayes Factors above 12 are interpreted as strong evidence for a positive effect71. Specifically, a dBF of 3 implies that a positive directional effect is three times more likely than a negative directional effect. Bayes Factors below 0.33 are likewise interpreted as moderate evidence in favor of the alternative model with reverse directionality. A dBF above 100 is considered extreme evidence71. The cutoffs used here are liberal in this context, because they are usually applied when testing against a null hypothesis (H0) of an effect of exactly 0. In addition, we report the effect size (Cohen’s d) based on the means of the posterior distributions of the session means, the pooled standard deviations across sessions and the correlation between sessions.
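
Given MCMC samples of a group-level difference distribution (for example, the posterior of the log(k) group mean in one session minus that in another), the dBF defined above is simply the ratio of samples above zero to samples below zero, and the 95% HDI is the narrowest interval containing 95% of the samples. This is a minimal sketch assuming the samples have already been pooled across chains; the HDI routine is one common sample-based estimator, not necessarily the one used in the study.

```python
import numpy as np


def directional_bf(diff_samples):
    """Posterior mass above zero divided by posterior mass below zero."""
    d = np.asarray(diff_samples, dtype=float)
    above, below = int(np.sum(d > 0)), int(np.sum(d < 0))
    return float("inf") if below == 0 else above / below


def hdi(samples, mass=0.95):
    """Narrowest interval containing `mass` of the samples."""
    x = np.sort(np.asarray(samples, dtype=float))
    n = len(x)
    width = int(np.ceil(mass * n))  # number of samples the interval must contain
    spans = x[width - 1:] - x[: n - width + 1]
    i = int(np.argmin(spans))
    return float(x[i]), float(x[i + width - 1])
```

Under this definition a dBF of 0.01 means that a decrease is 100 times more likely than an increase, mirroring a dBF of 100 in the opposite direction.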

ICC analysis

The test–retest reliability of the best-fitting parameter values across the three sessions was analyzed using the intra-class correlation coefficient (ICC). The ICC analysis was performed in the R programming language65 and was based on the mean rating of three raters (here, the three sessions), absolute agreement and a two-way mixed model. ICC values below 0.5 indicate poor test–retest reliability, whereas values between 0.5 and 0.75 indicate moderate reliability72. Values between 0.75 and 0.9 indicate good reliability, while values above 0.9 suggest excellent test–retest reliability.
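
An average-measures, absolute-agreement ICC (ICC(A,k) in McGraw and Wong's notation) can be computed from a two-way ANOVA decomposition of an n × k matrix of participants by sessions. For absolute agreement, the two-way mixed and two-way random models yield the same numerical coefficient and differ only in interpretation. This is a minimal sketch; it is intended to correspond to routines such as icc() in the R package irr with model = "twoway", type = "agreement", unit = "average", but we have not verified that this is the routine the authors used.

```python
import numpy as np


def icc_a_k(data):
    """ICC(A,k): two-way model, absolute agreement, mean of k measurements.
    data: array of shape (n_participants, k_sessions)."""
    x = np.asarray(data, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_rows = k * np.sum((x.mean(axis=1) - grand) ** 2)  # between participants
    ss_cols = n * np.sum((x.mean(axis=0) - grand) ** 2)  # between sessions
    ss_err = np.sum((x - grand) ** 2) - ss_rows - ss_cols
    ms_r = ss_rows / (n - 1)
    ms_c = ss_cols / (k - 1)
    ms_e = ss_err / ((n - 1) * (k - 1))
    return float((ms_r - ms_e) / (ms_r + (ms_c - ms_e) / n))
```

Because the session variance term (ms_c) enters the denominator, a systematic shift of one session lowers this coefficient even when participants keep their rank order, which is what distinguishes absolute agreement from consistency.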

Analysis of physiological data

A frequently used index of sympathetic activity is electrodermal activity, i.e. changes in skin conductance (SC)73. Here, physiological reactivity to the VR-environments was measured as the slowly varying skin conductance level (SCL)39. The SCL was extracted from the EDA signal using continuous decomposition analysis (CDA) via the Ledalab toolbox74 for Matlab (MathWorks), with default settings for the deconvolution. The resulting signal was then transformed into percentage change from the mean signal of the five-minute baseline phase at the beginning of the experiment. Subsequently, five one-minute bins were constructed for each phase of the VR-session (baseline phase, first exploration phase and second exploration phase). An alternative index of tonic sympathetic arousal is the number of spontaneous phasic skin conductance responses (SCRs) in the EDA signal74. Again, the signal was divided into one-minute bins, and the number of spontaneous SCRs during each bin was calculated from the phasic component of the deconvolved EDA signal using the Ledalab toolbox. The resulting values were likewise transformed into percentage change from the mean number of SCRs during the five baseline bins. To test whether entering the VR-environments had a general effect on sympathetic arousal, we compared the values for the last time point of the baseline phase (B5) with the first time point of the first exploration phase (F1) for both sessions using a non-parametric Wilcoxon signed-rank test. To test whether entering the different experimental areas of the VR-environments had a differential effect on sympathetic arousal, the differences between the last time point of the first exploration phase (F5) and the first time point of the second exploration phase (S1) were compared across VR-sessions for both measures, again using a non-parametric Wilcoxon signed-rank test75. Effect sizes are given as r76, computed as the statistic Z divided by the square root of N.
Effect sizes between 0 and 0.3 are considered small and effect sizes between 0.3 and 0.5 are considered medium and r values > 0.5 are considered large effects.
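The preprocessing and test described above can be sketched in plain Python. This is a minimal illustration, not the authors' Ledalab/Matlab pipeline: it assumes that the tonic SCL (or the per-bin SCR counts) has already been extracted, and the function names, the sampling-rate argument `fs_hz` and the normal-approximation form of the Wilcoxon statistic (without tie correction of the variance) are illustrative choices.

```python
import math
from statistics import mean


def percent_change_from_baseline(values, baseline_values):
    """Express each value as percent change from the mean of the baseline values.
    Undefined if the baseline mean is 0 (e.g. no SCRs during baseline)."""
    base = mean(baseline_values)
    return [100.0 * (v - base) / base for v in values]


def one_minute_bins(signal, fs_hz, n_bins=5):
    """Average a regularly sampled signal into consecutive one-minute bins."""
    per_bin = int(60 * fs_hz)  # samples per minute
    return [mean(signal[i * per_bin:(i + 1) * per_bin]) for i in range(n_bins)]


def wilcoxon_signed_rank_z(before, after):
    """Normal-approximation Z and two-sided p for the Wilcoxon signed-rank test.

    Zero differences are dropped and tied |differences| receive average ranks;
    the variance is not corrected for ties. Positive Z means after > before
    (the Results report negative Z for increases, i.e. the opposite sign)."""
    d = [a - b for b, a in zip(before, after) if a != b]
    n = len(d)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average 1-based rank of the tie group
        i = j + 1
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    mu = n * (n + 1) / 4
    sigma = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return z, p
```

For the B5 vs. F1 comparison, `before` would hold each participant's B5 value and `after` the corresponding F1 value.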

Data and code availability

Raw behavioral and physiological data as well as JAGS model code are available on the Open Science Framework (https://osf.io/xkp7c/files/).

Results

Temporal discounting AUC

The analysis of the AUC values revealed no significant session effect across participants (Friedman test: χ² = 1.235, df = 2, p = 0.539). Furthermore, the ICC was 0.93 (95% confidence interval (CI): 0.89–0.96, p < 0.001), indicating excellent test–retest reliability of temporal discounting AUC values across the three sessions (Table 2). Pairwise correlations between all sessions can be found in the supplementary materials (Supplementary Fig. S1).
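For readers less familiar with the model-free measure, a common way to compute the discounting AUC (following Myerson and colleagues) normalises delays and indifference points to [0, 1] and sums trapezoids. The sketch below assumes that one indifference point per delay is available and that an immediate reward is undiscounted (the prepended point (0, 1)); how indifference points were derived in the present task is not described in this section, and the function name is hypothetical.

```python
def discounting_auc(delays, indifference_points, delayed_amount):
    """Normalised area under the empirical discounting curve (trapezoidal rule).

    Delays are scaled by the longest delay and indifference points by the
    delayed amount, so the AUC lies in [0, 1] (1 = no discounting).
    Assumes an undiscounted immediate reward, i.e. the point (0, 1) is prepended."""
    pts = sorted(zip(delays, indifference_points))
    max_delay = pts[-1][0]
    xs = [0.0] + [d / max_delay for d, _ in pts]
    ys = [1.0] + [v / delayed_amount for _, v in pts]
    return sum((x1 - x0) * (y0 + y1) / 2
               for x0, x1, y0, y1 in zip(xs, xs[1:], ys, ys[1:]))
```

Smaller AUC values indicate steeper discounting, so the measure runs in the opposite direction to log(k).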

Softmax choice rule

For the hyperbolic model with softmax choice rule, the group-level posteriors showed little evidence for systematic effects of session on log(k) (all 0.33 < dBF < 3) (Fig. 3a, c and Table 2). In contrast, the softmax \(\beta\) parameter was higher (reflecting greater choice consistency) in the VRneutral session than in the other sessions (vs. Lab: dBF = 0.01; vs. VRgambling: dBF = 0.048) (Fig. 3b, d and Table 2). Thus, a higher \(\beta\) in the VRneutral session was approximately 100 (Lab) or 20 (VRgambling) times more likely than a lower \(\beta\). There was little evidence for a systematic difference between the Lab and VRgambling sessions (dBF = 0.446).
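A minimal sketch of the hyperbolic model with softmax choice rule makes the roles of log(k) and \(\beta\) concrete. It assumes the standard hyperbolic form SV = A / (1 + kD) with k = exp(log(k)) and, by default, a smaller-sooner option at delay 0; the function names and the trial tuple layout are illustrative and not taken from the authors' JAGS code.

```python
import math


def hyperbolic_sv(amount, delay, log_k):
    """Subjective value under hyperbolic discounting: SV = A / (1 + k * D)."""
    return amount / (1.0 + math.exp(log_k) * delay)


def p_choose_ll(ss_amount, ll_amount, ll_delay, log_k, beta, ss_delay=0.0):
    """Softmax (logistic) probability of choosing the larger-later (LL) option.
    Higher beta means choices follow the value difference more consistently."""
    dv = hyperbolic_sv(ll_amount, ll_delay, log_k) - hyperbolic_sv(ss_amount, ss_delay, log_k)
    x = beta * dv
    if x >= 0:  # numerically stable logistic function
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def log_likelihood(trials, log_k, beta, eps=1e-12):
    """Summed log-probability of the observed choices.
    trials: iterable of (ss_amount, ll_amount, ll_delay, chose_ll) tuples."""
    total = 0.0
    for ss, ll, delay, chose_ll in trials:
        p = p_choose_ll(ss, ll, delay, log_k, beta)
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += math.log(p if chose_ll else 1.0 - p)
    return total
```

In a hierarchical Bayesian fit such as the one reported here, this likelihood would be combined with priors over participant- and group-level parameters rather than maximised directly.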

Posterior distributions of the parameters of the hyperbolic discounting model. Colored bars represent the corresponding 95% HDIs. (a) Posterior distribution of the log(k) parameter (reflecting the degree of temporal discounting) for all three sessions. (b) Posterior distribution of the \(\beta\) or inverse temperature parameter (reflecting decision noise). (c) Pairwise difference distributions between the posteriors of the log(k) parameters of all three sessions. (d) Pairwise difference distributions between the posteriors of the \(\beta\) parameters of all three sessions.

The ICC for the log(k) parameter was 0.91 (CI: 0.86–0.96, p < 0.001), indicating excellent test–retest reliability (Table 3). For the \(\beta\) parameter of the softmax choice rule, the ICC was 0.34 (CI: 0.17–0.53, p < 0.001), indicating poor test–retest reliability (Table 3). Pairwise correlations between all sessions for both parameters can be found in the supplementary materials (Supplementary Figs. S2 and S3).
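The exact ICC form is not specified in this section, so the sketch below computes two common Shrout–Fleiss variants from a two-way ANOVA decomposition of a participants × sessions matrix: ICC(3,1) (consistency) and ICC(2,1) (absolute agreement). The qualitative labels use widely applied cut-offs (< 0.5 poor, 0.5–0.75 moderate, 0.75–0.9 good, > 0.9 excellent), which are an assumption here; the function names are illustrative.

```python
def icc_two_way(data):
    """ICC(3,1) and ICC(2,1) for a list of rows (participants) x columns (sessions)."""
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    row_means = [sum(row) / k for row in data]
    col_means = [sum(row[j] for row in data) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between participants
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between sessions
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_err = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    icc_consistency = (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err)
    icc_agreement = (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )
    return icc_consistency, icc_agreement


def icc_label(icc):
    """Qualitative label using assumed cut-offs (0.5 / 0.75 / 0.9)."""
    if icc < 0.5:
        return "poor"
    if icc < 0.75:
        return "moderate"
    if icc < 0.9:
        return "good"
    return "excellent"
```

The two variants differ only in whether systematic session shifts (the column mean square) count against reliability: a constant offset between sessions leaves ICC(3,1) at 1 but lowers ICC(2,1).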

Drift diffusion model choice rule

Model comparison revealed that the DDMS had the lowest DIC in all conditions (Table 4), replicating previous work45,46,48. Consequently, further analyses of session effects and reliability focused on this model. For the log(k) parameter, the 95% HDIs overlapped strongly between all sessions, indicating no systematic session effects; however, the dBFs showed moderate evidence for a reduced log(k) in the VRneutral-session (Fig. 4a, d and Table 5). A lower value in the VRneutral-session was about seven (vs. Lab-session: dBF = 6.756) or four times (vs. VRgambling: dBF = 3.86) more likely than a higher value. Similarly, the posterior distributions of \(v_{\max}\), \(v_{\mathrm{coeff}}\) and α overlapped strongly, whereas some of the dBFs gave moderate evidence for systematic directional effects within these parameters (Figs. 4b, c, e, f, and 5b, e, Table 5). \(v_{\mathrm{coeff}}\), which maps the trial-wise value difference onto the drift rate, was lowest in the Lab-session and highest in VRneutral (Lab-VRneutral dBF = 0.074, Lab-VRgambling dBF = 0.2, VRgambling-VRneutral dBF = 0.228). Thus, an increase in \(v_{\mathrm{coeff}}\) in VRneutral compared to the Lab-session was approximately thirteen times more likely than a decrease. Likewise, an increase in VRneutral compared to the VRgambling-session was approximately four times more likely than a decrease. For \(v_{\max}\), the upper bound on the influence of the value difference on the drift rate, the dBFs indicated that a positive shift from VRgambling to VRneutral was five times more likely than a negative shift (dBF = 0.203), but there was very little indication of a systematic difference between either of these sessions and the Lab-session. Finally, when comparing the VRneutral to the Lab-session, a reduction of the boundary separation parameter α was about four times more likely than an increase (dBF = 0.255). There was little evidence for any other systematic differences.
The bias parameter z showed strongly overlapping HDIs and little evidence for any systematic effects between sessions (all 0.33 < dBF < 3) (Fig. 5c, f and Table 5). For the non-decision time parameter τ, there was extreme evidence for an increase in the VR-sessions compared to the Lab-session (both dBFs > 100): a prolongation of the perceptual and/or motor components of the RT was more than 100 times more likely than a shortening of these components (Fig. 5a, d and Table 5).
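The directional Bayes factors and HDIs used above can both be read off posterior samples. The sketch assumes \(\mathrm{dBF} = P(\delta > 0)/P(\delta < 0)\) for a between-session difference \(\delta = \theta_A - \theta_B\), which is consistent with how the values are interpreted in the text (e.g. dBF = 0.074 for Lab − VRneutral means that \(\delta < 0\), i.e. a higher value in VRneutral, is about 1/0.074 ≈ 13.5 times more likely than \(\delta > 0\)). The function names and the simulated samples are illustrative.

```python
import math
import random


def directional_bf(delta_samples):
    """dBF = P(delta > 0) / P(delta < 0), estimated from posterior samples of
    delta = theta_A - theta_B. Values > 1 favour A > B, values < 1 favour A < B."""
    pos = sum(1 for d in delta_samples if d > 0)
    neg = sum(1 for d in delta_samples if d < 0)
    return math.inf if neg == 0 else pos / neg


def hdi(samples, mass=0.95):
    """Highest-density interval: narrowest window containing `mass` of the sorted samples."""
    s = sorted(samples)
    n_in = math.ceil(mass * len(s))
    best = min(range(len(s) - n_in + 1), key=lambda i: s[i + n_in - 1] - s[i])
    return s[best], s[best + n_in - 1]


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical posterior draws (not the study's data). With real MCMC output,
    # delta should be computed draw by draw from jointly sampled parameters.
    theta_a = [rng.gauss(0.0, 1.0) for _ in range(5000)]
    theta_b = [rng.gauss(0.5, 1.0) for _ in range(5000)]
    delta = [a - b for a, b in zip(theta_a, theta_b)]
    print("dBF:", directional_bf(delta), "95% HDI of delta:", hdi(delta))
```

A dBF below 1 is read as 1/dBF in favour of the opposite direction, which is how "thirteen times more likely" follows from dBF = 0.074.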

Posterior distributions of the parameters of the DDMS model. Colored bars represent the corresponding 95% HDIs. (a) Posterior distributions of the log(k) parameter for all three sessions. (b) Posterior distributions of the \(v_{\mathrm{coeff}}\) parameter (mapping the trial-wise value difference onto the drift rate). (c) Posterior distributions of the \(v_{\max}\) parameter (setting an asymptote for the relation between the trial-wise value difference and the drift rate). (d) Pairwise difference distributions between the posterior distributions of the log(k) parameters of the three sessions. (e) Pairwise difference distributions between the posterior distributions of the \(v_{\mathrm{coeff}}\) parameters of the three sessions. (f) Pairwise difference distributions between the posterior distributions of the \(v_{\max}\) parameters of the three sessions.

Posterior distributions of the remaining parameters of the DDMS model. Colored bars represent the corresponding 95% HDIs. (a) Posterior distributions of the τ parameter (non-decision time) for all three sessions. (b) Posterior distributions of the α parameter (separation between decision boundaries). (c) Posterior distributions of the z parameter (bias towards one decision option). (d) Pairwise difference distributions between the posterior distributions of the τ parameters of the three sessions. (e) Pairwise difference distributions between the posterior distributions of the α parameters of the three sessions. (f) Pairwise difference distributions between the posterior distributions of the z parameters of the three sessions.
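To make the parameter roles in these figures concrete, the sketch below simulates single trials of a drift diffusion process. It assumes the drift-rate mappings used in earlier work on value-based DDMs: a linear mapping \(v = v_{\mathrm{coeff}}\,\Delta V\) for the DDML and a sigmoid mapping bounded by \(\pm v_{\max}\) for the DDMS, \(v = v_{\max}\left(\frac{2}{1+\exp(-v_{\mathrm{coeff}}\,\Delta V)} - 1\right)\), where \(\Delta V\) is the trial-wise difference in discounted value. It further assumes that the upper boundary corresponds to the larger-later option, unit diffusion noise and a simple Euler scheme; the function names are illustrative.

```python
import math
import random


def drift_linear(dv, v_coeff):
    """DDML-style drift rate: linear in the value difference dv."""
    return v_coeff * dv


def drift_sigmoid(dv, v_coeff, v_max):
    """DDMS-style drift rate: sigmoid in dv, saturating at +/- v_max.
    Uses the identity 2 / (1 + exp(-u)) - 1 == tanh(u / 2) to avoid overflow."""
    return v_max * math.tanh(v_coeff * dv / 2.0)


def simulate_ddm_trial(v, alpha, z, tau, dt=0.001, rng=random):
    """Simulate one trial and return (upper_boundary_hit, rt_in_seconds).

    Evidence starts at z * alpha (z in (0, 1) is the starting-point bias),
    drifts with rate v plus unit Gaussian noise, and stops when it leaves
    (0, alpha); tau (non-decision time) is added to the decision time."""
    x = z * alpha
    t = 0.0
    sqrt_dt = math.sqrt(dt)
    while 0.0 < x < alpha:
        x += v * dt + sqrt_dt * rng.gauss(0.0, 1.0)
        t += dt
    return x >= alpha, t + tau
```

Because τ is simply added to every simulated RT, slower motor execution with a VR controller would shift τ without affecting choices, in line with the session effect reported for τ.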

The ICC for the log(k) parameter was 0.7 (CI: 0.56–0.8), indicating moderate test–retest reliability (Table 5). For the other DDMS parameters, ICC values were substantially lower (Table 6). Pairwise correlations between all sessions for all parameters can be found in the supplementary materials (Supplementary Figs. S4–S9).

Split-half reliability control analyses for DDM parameters

Given the lower ICC values for the DDMS parameters beyond log(k), we ran additional analyses. Specifically, we hypothesized that these lower ICC values might be attributable to fluctuations in state factors (e.g., mood, fatigue or motivation) between sessions. We therefore explored the within-session reliability of these parameters separately for each session. Trials were split into odd and even trials, which were modelled separately using the DDMS as described above. In general, within-session split-half reliability was substantially greater than test–retest reliability, and mostly in the good to excellent range (range: −0.1 for \(v_{\mathrm{coeff}}\) in VRgambling to 0.94 for τ in VRneutral). The lower test–retest reliabilities of some of the DDMS parameters are therefore unlikely to be due to the specifics of the parameter estimation procedure. Rather, these findings are compatible with the view that the parameters underlying the evidence accumulation process might be more sensitive to state-dependent changes in mood, fatigue or motivation. Full results of the split-half reliability analyses can be found in the supplementary materials (Supplementary Tables S3–S5).
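The odd/even split can be sketched as follows. The model-fitting step itself (the DDMS in JAGS) is replaced by a hypothetical `fit_parameter` callable, and agreement between halves is summarised with a Pearson correlation purely for illustration; which agreement index underlies the reported split-half values is not stated in this section.

```python
import math


def odd_even_split(trials):
    """Split one session's trials into odd- and even-numbered trials (1-based)."""
    return trials[0::2], trials[1::2]


def pearson_r(x, y):
    """Pearson correlation between two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def split_half_reliability(trials_by_participant, fit_parameter):
    """Fit a parameter separately to the odd and even trials of each participant
    and correlate the two sets of estimates across participants.
    fit_parameter: hypothetical callable mapping a trial list to one estimate."""
    odd_est, even_est = [], []
    for trials in trials_by_participant:
        odd, even = odd_even_split(trials)
        odd_est.append(fit_parameter(odd))
        even_est.append(fit_parameter(even))
    return pearson_r(odd_est, even_est)
```

Interleaving odd and even trials, rather than splitting into first and second halves, keeps slow within-session drifts (e.g. fatigue) from being confounded with the split itself.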

Electrodermal activity (EDA)

The data of 8 of the 34 participants had to be excluded from the EDA analysis due to technical problems or missing data in one of the testing sessions. Physiological reactivity in the remaining 26 (18 female) participants was analyzed by converting the SCL signal as well as the nSCRs into percent change from the mean level during the baseline phase. Both signals were then binned into five one-minute intervals for each of the three phases (baseline, first exploration and second exploration phase). All comparisons were tested with the Wilcoxon signed-rank test. Entering the VR-environments (comparing bin B5 to bin F1 for each environment individually) resulted in a significant increase in SCL for both VR-environments (VRneutral: Z = −3.67, p < 0.001, r = 0.72; VRgambling: Z = −3.543, p = 0.002, r = 0.695) (Fig. 6c, d). The effect was large in both sessions (r > 0.5). However, for the number of spontaneous SCRs (nSCRs), this effect was only significant in the neutral VR-environment (neutral: Z = −2.623, p = 0.009, r = 0.515; gambling: Z = −0.013, p = 0.99, r = 0.002). The difference between the two sessions was not significant, but the effect was of medium size (Z = −1.7652, p = 0.078, r = 0.346) (Fig. 6a, b). To test whether entering the specific experimental areas of the two VR-environments (virtual café vs. virtual casino) had differential effects on physiological responses, the change in sympathetic arousal from the end of the first exploration phase to the start of the second exploration phase was examined (comparing bin F5 to bin S1, see Fig. 6b, d). Neither the SCL (neutral: Z = −0.7238, p = 0.469, r = 0.142; gambling: Z = −0.089, p = 0.929, r = 0.017) nor the nSCRs (neutral: Z = −1.943, p = 0.052, r = 0.381; gambling: Z = 0.982, p = 0.326, r = 0.193) showed a significant effect when assessed for each session individually.
The effect size was medium (r = 0.381) for the nSCRs of the VRneutral-session and small for all other comparisons (r < 0.3). Furthermore, the Wilcoxon signed-rank test indicated no significant differences between the two experimental areas on either sympathetic arousal measure (SCL: Z = −0.572, p = 0.381, r = 0.11; nSCRs: Z = −1.7652, p = 0.078, r = 0.346) (Fig. 6b, d), although for the nSCRs the effect was of medium size.
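As an arithmetic check of the effect sizes reported above, r = |Z| / √N with N = 26 participants closely reproduces the reported values (e.g. 3.67 / √26 ≈ 0.72), and the thresholds from the Methods assign the labels used in the text. The function names are illustrative.

```python
import math


def effect_size_r(z, n):
    """Effect size r = |Z| / sqrt(N) for a Wilcoxon signed-rank test."""
    return abs(z) / math.sqrt(n)


def effect_size_label(r):
    """Labels from the Methods: < 0.3 small, 0.3 to 0.5 medium, > 0.5 large."""
    if r < 0.3:
        return "small"
    if r <= 0.5:
        return "medium"
    return "large"


N_PARTICIPANTS = 26  # participants retained for the EDA analysis
for label, z in [("SCL, VRneutral, B5 vs F1", -3.67),
                 ("nSCRs, VRneutral, F5 vs S1", -1.943),
                 ("SCL, VRneutral, F5 vs S1", -0.7238)]:
    r = effect_size_r(z, N_PARTICIPANTS)
    print(f"{label}: r = {r:.3f} ({effect_size_label(r)})")
```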

Results of the EDA measurements divided into 15 time points over the course of the baseline phase, recorded before participants entered the VR-environments, and the first and second exploration phases. Each of the three phases is divided into five one-minute bins (B1–5: pre-VR baseline, F1–5: first exploration phase in VR, S1–5: second exploration phase in VR). (a) Median percent change from baseline mean for the number of spontaneous SCRs across all participants. (b) Boxplots of percent change from baseline mean for the number of spontaneous SCRs across all participants. (c) Median percent change from baseline mean of SCL across all participants. (d) Boxplots of percent change from baseline mean of SCL across all participants.

Discussion

Here we carried out an extensive investigation into the reliability of temporal discounting measures obtained in different virtual reality environments as well as in standard lab-based testing. This design allowed us to jointly assess physiological arousal and decision-making, an approach with potential applications to cue-reactivity studies in substance use disorders or behavioral addictions such as gambling disorder. Participants performed a temporal discounting task within two different VR-environments (a café environment and a casino/sports betting environment: VRneutral vs. VRgambling) as well as in a standard computer-based lab testing session. Exposure to VR generally increased sympathetic arousal as assessed via electrodermal activity (EDA), but these effects were not differentially modulated by the different VR-environments. Results revealed good to excellent test–retest reliability of model-based (log(k)) and model-free (AUC) measures of temporal discounting across all testing environments. However, the DDMS parameters modelling latent decision processes showed substantially lower test–retest reliabilities across the three sessions. The split-half reliability within each session was mostly good to excellent, suggesting that the lower test–retest reliability was more likely caused by the participants' current state than by factors within the modelling process itself.

To test how well temporal discounting, as a measure of choice impulsivity, performs in virtual environments, we implemented a VR-design built for future application in a cue-reactivity context. Healthy controls displayed little evidence for systematic differences in choice preferences between the Lab-session and the VR-sessions. This was observed for the model-free measure (AUC) as well as for the log(k) parameter of both the hyperbolic discounting model with softmax choice rule and the drift diffusion model with non-linear drift rate scaling (DDMS). Model comparison revealed that the DDMS accounted best for the data, confirming previous findings43,45,46,48. Although discount rates assessed in the three sessions were generally of similar magnitude, the DDMS showed moderate evidence for reduced discounting (i.e., smaller values of log(k)) in the VRneutral session. There are several possible reasons for this. One possibility is that environmental novelty plays a role, such that the perceived novelty of the VRneutral session might have been lower than that of the VRgambling and Lab-sessions. Exposure to novelty can stimulate dopamine release77, which is known to impact temporal discounting78. Nonetheless, effect sizes were medium (0.37 and 0.29) and the dBFs revealed only moderate evidence. Numerically, the mean log(k) values of the softmax model showed the same tendency, but here effects were less pronounced. One possibility is that the inclusion of additional latent variables in the DDMS increased sensitivity to detect this effect. There was also evidence for a session effect on the scaling parameter (\(v_{\mathrm{coeff}}\)). Here, the impact of trial-wise value differences on the drift rate was attenuated in the Lab-session, with dBFs revealing strong (VRneutral) or moderate (VRgambling) evidence for a reduction in \(v_{\mathrm{coeff}}\) in the Lab-session. Again, effect sizes were medium. Nevertheless, the data suggest increased sensitivity to value differences in VR.
This effect might be due to the way options were presented in the Lab-session compared to the VR-sessions. The presentation of options within VR might have been somewhat more salient, which might have increased the attention allocated to value differences within the VR-sessions. However, this remains speculative until further research reproduces and further assesses these specific effects on the DDM parameters. Boundary separation (α), drift rate asymptote (\(v_{\max}\)) and starting point (z) showed little evidence for systematic differences between sessions. The only DDMS parameter showing extreme evidence for a systematic difference between the Lab- and VR-sessions was the non-decision time (τ). This effect is unsurprising, as τ captures RT components attributable to perception and/or motor execution. Because indicating a response with a controller in three-dimensional space takes longer than a simple button press, τ would be expected to increase substantially during VR testing. Finally, the good test–retest reliability of log(k) from the DDMS indicates that RTs obtained in VR can be meaningfully modeled using the DDM. The potential utility of this modeling approach in the context of gambling disorder is illustrated by a recent study that reported reduced boundary separation (α) in participants suffering from gambling disorder compared to healthy controls in a reinforcement learning task48. Given that results on the effect of addiction-related cues on RTs are mixed79,80,81, the effects of these cues on the latent decision variables of the DDM could provide additional insights. Taken together, these results suggest that VR immersion in general does not systematically influence participants' intertemporal preferences and might open up a route to more ecologically valid lab experiments, e.g., focusing on behavioral cue-reactivity in addiction.
This is in line with other results showing stronger responses in VR than in classical laboratory experiments6.

The present data add to the discussion concerning the reliability of behavioral tasks9,50,51,52,53,55, in particular in the context of computational psychiatry15,82. To examine test–retest reliability, the three sessions were performed on different days, with a mean interval of 3.85 days between sessions. Test–retest reliability was excellent for both the AUC and the log(k) parameter of the hyperbolic discounting model with softmax choice rule. For log(k) of the DDMS, the ICC was good, but lower than for AUC and softmax. Nevertheless, the discount rate log(k) was overall stable regardless of the analytical approach. The ICC of 0.7 observed for the DDMS was comparable to earlier studies on temporal discounting reliability52,53. Kirby and colleagues52, for instance, demonstrated a reliability of 0.77 for a 5-week interval and 0.71 for 1 year. This shows that, at least over shorter periods from days to weeks, temporal discounting performed in VR has a reliability comparable to standard lab-based testing. Enkavi and colleagues49 stress that difference scores between conditions in particular (e.g., in Stroop or Go/NoGo tasks) show unsatisfactory reliability due to the low between-participant variability produced by commonly used behavioral tasks. Assessment of difference scores was not applicable in the present study. Nevertheless, there was no positive evidence for systematic effects on log(k) (with the exception of the potential novelty effects discussed above), and test–retest reliability across all conditions was at least good across analysis schemes, indicating short-term stability of temporal discounting measured in VR. It is worth noting, however, that temporal discounting shares some similarities with questionnaire-based measures: as in questionnaires, participants in temporal discounting tasks are explicitly instructed to indicate their preferences.
This might be one reason why the reliability of temporal discounting is often substantially higher than that of other behavioral tasks49,52,53,55. Other parameters of the DDMS showed lower levels of test–retest reliability. In particular, the \(v_{\mathrm{coeff}}\) parameters were less reliable, at least when estimated jointly with \(v_{\max}\). In the DDML, which does not suffer from potential trade-offs between these different drift rate components, the ICC of \(v_{\mathrm{coeff}}\) was good (Supplementary Table S2), and log(k) likewise showed an excellent ICC.

The substantially lower test–retest reliability of the DDMS parameters that represent latent decision processes, compared to log(k) or AUC, warrants further discussion. Prior publications from our lab24,41 have extensively reported parameter recovery for the DDMS and revealed good recovery performance. The low test–retest reliability is therefore unlikely to be due to poor identifiability of model parameters. One possible reason for this discrepancy between log(k)/AUC and the other parameters is that the tendency to discount value over time might be a stable trait-like factor, while the latent decision processes reflected in the other DDMS parameters might be more strongly influenced by state effects. While this could explain the low test–retest reliability, it predicts that these parameters should nonetheless be stable within sessions. We addressed this issue in a further analysis of within-session split-half reliability (see Supplementary Tables S3–S5). The results showed good-to-excellent within-session stability for most parameters, with the drift rate coefficient \(v_{\mathrm{coeff}}\) being a notable exception. This is compatible with the idea that latent decision processes reflected in the DDMS parameters might be affected by factors that differ across testing days but are largely stable within sessions, such as mood, fatigue or motivation.

VR has previously been used to study cue-reactivity in participants suffering from gambling disorder2,3,83, but also in participants experiencing nicotine84 and alcohol1 use disorders. Our experimental set-up extends these previous approaches in several ways. First, we included both a neutral and a gambling-related environment. This allows us to disentangle general VR effects from specific contextual effects. Second, our reliability checks for temporal discounting show that model-based constructs with clinical relevance for addiction18,23 can be reliably assessed when behavioral testing is implemented directly in the VR environment. Together, these advances might yield additional insights into the mechanisms underlying cue-reactivity in addiction, and contextual effects in psychiatric disorders more generally.

Characterizing how addictions manifest at a computational and physiological level is important for understanding the mechanisms underlying maladaptive decision-making. Although alterations in neural reward circuits, in particular in the ventral striatum and ventromedial prefrontal cortex, are frequently observed in gambling disorder, there is considerable heterogeneity in the directionality of these effects85. Gambling-related visual cues interfere with striatal valuation signals in participants suffering from gambling disorder and might thereby increase temporal discounting12. In the present work, assessment of physiological reactivity to VR was limited to electrodermal activity (EDA). EDA is an index of autonomic sympathetic arousal, which is in turn related to the emotional response to addiction-related cues39,86,87,88. The skin conductance level (SCL) is increased in participants with substance use disorders in response to drug-related cues86. Additionally, it has been shown that addiction-related cues in VR can elicit SCRs in teen87 and adult88 participants with nicotine addiction. In our study, we mainly used this physiological marker to assess how healthy participants react to VR exposure. For the number of spontaneous responses in the EDA signal (nSCRs), the increase upon exposure to VR (B5 vs. F1) was only significant in the VRneutral environment, and the effect size for the difference between the two environments was medium. Given that the starting areas of the two VR-environments were identical, this difference might have been caused by random fluctuations. However, an increase in the number of spontaneous SCRs during VR immersion has been reported previously5 and thus warrants further investigation. The SCL, on the other hand, increased substantially upon exposure to VR, as indicated by a significant increase between the last minute of baseline recording (B5) and the first minute of the first exploration phase (F1).
The effect was large in both environments. SCL then remained elevated throughout both exploration phases (F1 to S5) but did not increase further when the virtual café/casino area was entered. These results suggest that exposure to VR increases sympathetic arousal, as measured with SCL, in healthy control participants independent of the presented VR-environment.

There are several limitations that need to be acknowledged. First, there was considerable variability in test–retest intervals across participants. While most sessions were conducted within a week, for some participants this interval was up to 3 weeks, reducing the precision of conclusions regarding the temporal stability of discounting in VR. Other studies, however, have used intervals ranging from 5 to 57 weeks52 or three months53 and have reported comparable reliabilities. Moreover, there is evidence for a heritability of temporal discounting of around 30 and 50 percent at the ages of 12 and 14 years, respectively89, consistent with a substantial trait component. This increases confidence in the results obtained here. Nevertheless, a more systematic assessment of how long these trait indicators remain stable in VR would be desirable and could be addressed by future research. Second, the sample size was smaller than in larger studies conducted online49, and the majority of participants were female. Both factors limit the generalizability of our results. However, large-scale online studies have shortcomings of their own, including test batteries that take multiple hours and/or multiple sessions to complete49,50, potentially increasing participants' fatigue, which might have detrimental effects on data quality. We also note that the present sample size was sufficiently large to yield stable parameter estimates, indicating that participants performed the task adequately in our design. Third, immersion in VR might have been reduced by the limited physical lab space. To ensure safety, the experimenter at times had to instruct participants to stay within the designated VR-zone. This distraction might have reduced the effects of the VR-environments, because participants were not able to fully ignore their actual physical surroundings. Additionally, it might have influenced the EDA measurements in unpredictable ways.
Future research would benefit from implementing markers within the VR-environments to ensure safety without breaking immersion. Moreover, participants had to spend about thirty minutes in the full VR-setup. The behavioral tasks were presented after the exploration phase, such that participants might have been fatigued or experienced discomfort during task completion. Finally, the present study did not include participants who gamble frequently or suffer from gambling disorder and is therefore not itself a cue-reactivity study, but rather a methodological validation for future studies using this and similar designs. Because participants here were expected to be fairly unfamiliar with gambling environments, this study could not determine how ecologically valid the gambling environment actually is. This needs to be addressed in future research. Relatedly, cue-reactivity in gambling disorder is determined by many individual factors37. The VR-design presented here was developed for slot machine and sports betting players and is thus not applicable to other forms of gambling.

Overall, our results demonstrate the methodological feasibility of a VR-based approach to joint behavioral and physiological testing, with potential applications to cue-reactivity in addiction. Healthy non-gambling control participants showed few systematic behavioral and physiological effects of the two VR-environments. Moreover, our data show that temporal discounting is a reliable behavioral marker, even when tested in very different experimental settings (e.g., standard lab testing vs. VR). It remains to be seen whether such gambling-related environments produce cue-reactivity in participants suffering from gambling disorder. However, results from similar applications have been encouraging2,3. These results show the promise of VR applications that jointly assess behavioral and physiological cue-reactivity in addiction science.

References

Ghiţă, A. et al. Cue-elicited anxiety and alcohol craving as indicators of the validity of ALCO-VR software: a virtual reality study. J. Clin. Med. 8, 1153 (2019).

Bouchard, S. et al. Using virtual reality in the treatment of gambling disorder: the development of a new tool for cognitive behavior therapy. Front. Psychiatry 8, 27 (2017).

Giroux, I. et al. Gambling exposure in virtual reality and modification of urge to gamble. Cyberpsychol. Behav. Soc. Netw. 16, 224–231 (2013).

Wang, Y. G., Liu, M. H. & Shen, Z. H. A virtual reality counterconditioning procedure to reduce methamphetamine cue-induced craving. J. Psychiatr. Res. 116, 88–94 (2019).

Peterson, S. M., Furuichi, E. & Ferris, D. P. Effects of virtual reality high heights exposure during beam-walking on physiological stress and cognitive loading. PLoS ONE 13, 1–17 (2018).

Rubo, M. & Gamer, M. Stronger reactivity to social gaze in virtual reality compared to a classical laboratory environment. Br. J. Psychol. https://doi.org/10.1111/bjop.12453 (2020).

Detez, L. et al. A psychophysiological and behavioural study of slot machine near-misses using immersive virtual reality. J. Gambl. Stud. 35, 929–944 (2019).

Dickinson, P., Gerling, K., Wilson, L. & Parke, A. Virtual reality as a platform for research in gambling behaviour. Comput. Hum. Behav. 107, 106293 (2020).

Amlung, M. et al. Delay discounting as a transdiagnostic process in psychiatric disorders: a meta-analysis. JAMA Psychiatry https://doi.org/10.1001/jamapsychiatry.2019.2102 (2019).

Kirby, K. N. & Petry, N. M. Heroin and cocaine abusers have higher discount rates for delayed rewards than alcoholics or non-drug-using controls. Addiction 99, 461–471 (2004).

Peters, J. et al. Lower ventral striatal activation during reward anticipation in adolescent smokers. Am. J. Psychiatry 168, 540–549 (2011).

Miedl, S. F., Büchel, C. & Peters, J. Cue-induced craving increases impulsivity via changes in striatal value signals in problem gamblers. J. Neurosci. 34, 4750–4755 (2014).

Potenza, M. N. Review. The neurobiology of pathological gambling and drug addiction: an overview and new findings. Philos. Trans. R. Soc. Lond. B Biol. Sci. 363, 3181–3189 (2008).

Wiehler, A. & Peters, J. Reward-based decision making in pathological gambling: the roles of risk and delay. Neurosci. Res. 90, 3–14 (2015).

Huys, Q. J. M., Maia, T. V. & Frank, M. J. Computational psychiatry as a bridge from neuroscience to clinical applications. Nat. Neurosci. 19, 404–413 (2016).

Farrell, S. & Lewandowsky, S. Computational Modeling of Cognition and Behaviour (Cambridge University Press, 2018). https://doi.org/10.1017/CBO9781316272503

Miedl, S. F., Peters, J. & Büchel, C. Altered neural reward representations in pathological gamblers revealed by delay and probability discounting. Arch. Gen. Psychiatry 69, 177–186 (2012).

Bickel, W. K., Koffarnus, M. N., Moody, L. & Wilson, A. G. The behavioral- and neuro-economic process of temporal discounting: a candidate behavioral marker of addiction. Neuropharmacology 76(Pt B), 518–527 (2014).

Lempert, K. M., Steinglass, J. E., Pinto, A., Kable, J. W. & Simpson, H. B. Can delay discounting deliver on the promise of RDoC? Psychol. Med. 49, 190–199 (2019).

Dixon, M. R., Jacobs, E. A., Sanders, S. & Carr, J. E. Contextual control of delay discounting by pathological gamblers. J. Appl. Behav. Anal. 39, 413–422 (2006).

Genauck, A. et al. Cue-induced effects on decision-making distinguish subjects with gambling disorder from healthy controls. Addict. Biol. 25, 1–10 (2020).

Robinson, T. E. & Berridge, K. C. The neural basis of drug craving: an incentive-sensitization theory of addiction. Brain Res. Rev. 18, 247–291 (1993).

Bickel, W. K., Yi, R., Landes, R. D., Hill, P. F. & Baxter, C. Remember the future: Working memory training decreases delay discounting among stimulant addicts. Biol. Psychiatry 69, 260–265 (2011).

Bickel, W. K., Moody, L. & Quisenberry, A. Computerized working-memory training as a candidate adjunctive treatment for addiction. Alcohol Res. Curr. Rev. 36, 123 (2014).

Carter, B. L. & Tiffany, S. T. Meta-analysis of cue–reactivity in addiction research. Addiction 94, 327–340 (1999).

Starcke, K., Antons, S., Trotzke, P. & Brand, M. Cue-reactivity in behavioral addictions: a meta-analysis and methodological considerations. J. Behav. Addict. 7, 227–238 (2018).

Berridge, K. C. & Robinson, T. E. Liking, wanting, and the incentive-sensitization theory of addiction. Am. Psychol. 71, 670–679 (2016).

Anselme, P. Motivational control of sign-tracking behaviour: a theoretical framework. Neurosci. Biobehav. Rev. 65, 1–20 (2016).

Berridge, K. C. From prediction error to incentive salience: mesolimbic computation of reward motivation. Eur. J. Neurosci. 35, 1124–1143 (2012).

Volkow, N. D. et al. Cocaine cues and dopamine in dorsal striatum: mechanism of craving in cocaine addiction. J. Neurosci. 26, 6583–6588 (2006).

van Holst, R. J., van Holstein, M., van den Brink, W., Veltman, D. J. & Goudriaan, A. E. Response inhibition during cue reactivity in problem gamblers: an fMRI study. PLoS ONE 7, 1–10 (2012).

Brevers, D., He, Q., Keller, B., Noël, X. & Bechara, A. Neural correlates of proactive and reactive motor response inhibition of gambling stimuli in frequent gamblers. Sci. Rep. 7, 1–11 (2017).

Brevers, D., Sescousse, G., Maurage, P. & Billieux, J. Examining neural reactivity to gambling cues in the age of online betting. Curr. Behav. Neurosci. Rep. 6, 59–71 (2019).

Crockford, D. N., Goodyear, B., Edwards, J., Quickfall, J. & El-Guebaly, N. Cue-induced brain activity in pathological gamblers. Biol. Psychiatry 58, 787–795 (2005).

Goudriaan, A. E., De Ruiter, M. B., Van Den Brink, W., Oosterlaan, J. & Veltman, D. J. Brain activation patterns associated with cue reactivity and craving in abstinent problem gamblers, heavy smokers and healthy controls: an fMRI study. Addict. Biol. 15, 491–503 (2010).

Kober, H. et al. Brain activity during cocaine craving and gambling urges: an fMRI study. Neuropsychopharmacology 41, 628–637 (2016).

Limbrick-Oldfield, E. H. et al. Neural substrates of cue reactivity and craving in gambling disorder. Transl. Psychiatry 7, e992 (2017).

Potenza, M. N. et al. Gambling urges in pathological gambling. Arch. Gen. Psychiatry 60, 828 (2003).

Braithwaite, J. J., Watson, D. G., Jones, R. & Rowe, M. A guide for analysing electrodermal activity (EDA) and skin conductance responses (SCRs) for psychological experiments. Psychophysiology 49, 1017–1034 (2013).

Forstmann, B. U., Ratcliff, R. & Wagenmakers, E.-J. Sequential sampling models in cognitive neuroscience: advantages, applications, and extensions. Annu. Rev. Psychol. 67, 641–666 (2016).

Ballard, I. C. & McClure, S. M. Joint modeling of reaction times and choice improves parameter identifiability in reinforcement learning models. J. Neurosci. Methods 317, 37–44 (2019).

Shahar, N. et al. Improving the reliability of model-based decision-making estimates in the two-stage decision task with reaction-times and drift-diffusion modeling. PLoS Comput. Biol. 15, e1006803 (2019).

Fontanesi, L., Gluth, S., Spektor, M. S. & Rieskamp, J. A reinforcement learning diffusion decision model for value-based decisions. Psychon. Bull. Rev. 26, 1099–1121 (2019).

Pedersen, M. L., Frank, M. J. & Biele, G. The drift diffusion model as the choice rule in reinforcement learning. Psychon. Bull. Rev. 24, 1234–1251 (2017).

Peters, J. & D’Esposito, M. The drift diffusion model as the choice rule in inter-temporal and risky choice: a case study in medial orbitofrontal cortex lesion patients and controls. PLoS Comput. Biol. 16, 1–26 (2020).

Wagner, B., Clos, M., Sommer, T. & Peters, J. Dopaminergic modulation of human inter-temporal choice: a diffusion model analysis using the D2-receptor-antagonist haloperidol. bioRxiv (2020).

Miletić, S., Boag, R. J. & Forstmann, B. U. Mutual benefits: combining reinforcement learning with sequential sampling models. Neuropsychologia 136, 107261 (2020).

Wiehler, A. & Peters, J. Diffusion modeling reveals reinforcement learning impairments in gambling disorder that are linked to attenuated ventromedial prefrontal cortex value representations. bioRxiv 2020.06.03.131359 (2020). https://doi.org/10.1101/2020.06.03.131359.

Enkavi, A. Z. et al. Large-scale analysis of test-retest reliabilities of self-regulation measures. Proc. Natl. Acad. Sci. U. S. A. 116, 5472–5477 (2019).

Eisenberg, I. W. et al. Applying novel technologies and methods to inform the ontology of self-regulation. Behav. Res. Ther. 101, 46–57 (2018).

Hedge, C., Powell, G. & Sumner, P. The reliability paradox: why robust cognitive tasks do not produce reliable individual differences. Behav. Res. Methods 50, 1166–1186 (2018).

Kirby, K. N. One-year temporal stability of delay-discount rates. Psychon. Bull. Rev. 16, 457–462 (2009).

Ohmura, Y., Takahashi, T., Kitamura, N. & Wehr, P. Three-month stability of delay and probability discounting measures. Exp. Clin. Psychopharmacol. 14, 318–328 (2006).

Peters, J. & Büchel, C. Overlapping and distinct neural systems code for subjective value during intertemporal and risky decision making. J. Neurosci. 29, 15727–15734 (2009).

Odum, A. L. Delay discounting: trait variable? Behav. Processes 87, 1–9 (2011).

Petry, J. Psychotherapie der Glücksspielsucht (Psychologie Verlags Union, 1996).

Daw, N. D., Gershman, S. J., Seymour, B., Dayan, P. & Dolan, R. J. Model-based influences on humans’ choices and striatal prediction errors. Neuron 69, 1204–1215 (2011).

Kool, W., Cushman, F. A. & Gershman, S. J. When does model-based control pay off? PLoS Comput. Biol. 12, e1005090 (2016).

Johnson, M. W. & Bickel, W. K. Within-subject comparison of real and hypothetical money rewards in delay discounting. J. Exp. Anal. Behav. 77, 129–146 (2002).

Bickel, W. K., Pitcock, J. A., Yi, R. & Angtuaco, E. J. C. Congruence of BOLD response across intertemporal choice conditions: fictive and real money gains and losses. J. Neurosci. 29, 8839–8846 (2009).

Myerson, J., Green, L. & Warusawitharana, M. Area under the curve as a measure of discounting. J. Exp. Anal. Behav. 76, 235–243 (2001).

Green, L., Myerson, J. & Macaux, E. W. Temporal discounting when the choice is between two delayed rewards. J. Exp. Psychol. Learn. Mem. Cogn. 31, 1121–1133 (2005).

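Mazur, J. E. An adjusting procedure for studying delayed reinforcement. In Quantitative Analyses of Behavior Vol. 5 (eds Commons, M. L., Mazur, J. E., Nevin, J. A. & Rachlin, H.) 55–73 (Erlbaum, 1987).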

Sutton, R. S. & Barto, A. G. Reinforcement Learning: An Introduction (MIT Press, 1998).

R Core Team. R: A Language and Environment for Statistical Computing (R Foundation for Statistical Computing, 2013).

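Plummer, M. JAGS: a program for analysis of Bayesian graphical models using Gibbs sampling. In Proc. 3rd International Workshop on Distributed Statistical Computing (DSC 2003) 124, 1–10 (2003).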

Wabersich, D. & Vandekerckhove, J. Extending JAGS: a tutorial on adding custom distributions to JAGS (with a diffusion model example). Behav. Res. Methods 46, 15–28 (2014).

Gelman, A. & Rubin, D. B. Inference from iterative simulation using multiple sequences. Stat. Sci. 7, 457–472 (1992).

Spiegelhalter, D. J., Best, N. G., Carlin, B. P. & Van Der Linde, A. Bayesian measures of model complexity and fit. J. R. Stat. Soc. Ser. B Stat. Methodol. 64, 583–639 (2002).

Marsman, M. & Wagenmakers, E. J. Three insights from a Bayesian interpretation of the one-sided p value. Educ. Psychol. Meas. 77, 529–539 (2017).

Beard, E., Dienes, Z., Muirhead, C. & West, R. Using Bayes factors for testing hypotheses about intervention effectiveness in addictions research. Addiction 111, 2230–2247 (2016).

Koo, T. K. & Li, M. Y. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J. Chiropr. Med. 15, 155–163 (2016).

Bach, D. R., Friston, K. J. & Dolan, R. J. Analytic measures for quantification of arousal from spontaneous skin conductance fluctuations. Int. J. Psychophysiol. 76, 52–55 (2010).

Benedek, M. & Kaernbach, C. A continuous measure of phasic electrodermal activity. J. Neurosci. Methods 190, 80–91 (2010).

Kerby, D. The simple difference formula: an approach to teaching nonparametric correlation. Compr. Psychol. 3, 11 (2014).

Rosenthal, R. Parametric measures of effect size. In The Handbook of Research Synthesis (eds Cooper, H. & Hedges, L. V.) 231–244 (Russell Sage Foundation, 1994).

Duszkiewicz, A. J., McNamara, C. G., Takeuchi, T. & Genzel, L. Novelty and dopaminergic modulation of memory persistence: a tale of two systems. Trends Neurosci. 42, 102–114 (2019).

D’Amour-Horvat, V. & Leyton, M. Impulsive actions and choices in laboratory animals and humans: effects of high vs. low dopamine states produced by systemic treatments given to neurologically intact subjects. Front. Behav. Neurosci. 8, 1–20 (2014).

Juliano, L. M. & Brandon, T. H. Reactivity to instructed smoking availability and environmental cues: evidence with urge and reaction time. Exp. Clin. Psychopharmacol. 6(1), 45 (1998).

Sayette, M. A. et al. The effects of cue exposure on reaction time in male alcoholics. J. Stud. Alcohol 55, 629–633 (1994).

Vollstädt-Klein, S. et al. Validating incentive salience with functional magnetic resonance imaging: association between mesolimbic cue reactivity and attentional bias in alcohol-dependent patients. Addict. Biol. 17, 807–816 (2012).

Hedge, C., Bompas, A. & Sumner, P. Task reliability considerations in computational psychiatry. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 5, 837–839 (2020).

Bouchard, S., Loranger, C., Giroux, I., Jacques, C. & Robillard, G. Using virtual reality to provide a naturalistic setting for the treatment of pathological gambling. In The Thousand Faces of Virtual Reality (ed. Sik-Lanyi, C.) (InTech, 2014). https://doi.org/10.5772/59240.

Gamito, P. et al. Eliciting nicotine craving with virtual smoking cues. Cyberpsychol. Behav. Soc. Netw. 17, 556–561 (2014).

Clark, L., Boileau, I. & Zack, M. Neuroimaging of reward mechanisms in gambling disorder: an integrative review. Mol. Psychiatry 24, 674–693 (2019).

Havermans, R. C., Mulkens, S., Nederkoorn, C. & Jansen, A. The efficacy of cue exposure with response prevention in extinguishing drug and alcohol cue reactivity. Behav. Interv. 22, 121–135 (2007).

Bordnick, P. S., Traylor, A. C., Graap, K. M., Copp, H. L. & Brooks, J. Virtual reality cue reactivity assessment: a case study in a teen smoker. Appl. Psychophysiol. Biofeedback 30, 187–193 (2005).

Choi, J. S. et al. The effect of repeated virtual nicotine cue exposure therapy on the psychophysiological responses: a preliminary study. Psychiatry Investig. 8, 155–160 (2011).

Anokhin, A. P., Golosheykin, S., Grant, J. D. & Heath, A. C. Heritability of delay discounting in adolescence: a longitudinal twin study. Behav. Genet. 41, 175–183 (2011).

Acknowledgements

We thank Mohsen Shaverdy and Diego Saldivar for implementing the VR environments and programming the task, and all members of the Peters Lab at the University of Cologne for helpful discussions. This work was supported by the Deutsche Forschungsgemeinschaft (DFG, Grant PE1627/5-1 to J.P.).

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Contributions

Conceptualization: J.P., L.B.; Data curation: L.B.; Formal analysis: L.B.; Funding acquisition: J.P.; Investigation: L.B., L.S.; Methodology: J.P., L.B.; Project administration: L.B.; Writing – original draft: L.B.; Writing – review & editing: J.P., L.B., L.S.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bruder, L.R., Scharer, L. & Peters, J. Reliability assessment of temporal discounting measures in virtual reality environments. Sci Rep 11, 7015 (2021). https://doi.org/10.1038/s41598-021-86388-8

DOI: https://doi.org/10.1038/s41598-021-86388-8