Abstract

High-quality reconstruction at a low sampling rate is essential for ghost imaging, and obtaining good images from few measurements has become a research focus in the field. In this paper, inspired by matrix optimization in compressed sensing, we introduce a scheme that optimizes the speckle patterns within a measurement-driven framework to improve the reconstruction quality of ghost imaging. In this framework, the sampling matrix and the sparse basis are optimized alternately using the sparse coefficient matrix obtained from a low-dimensional pseudo-measurement process, and each update has an analytical solution. The optimized sampling matrix is then subjected to a non-negative constraint and binary quantization. Simulation results show that, compared with existing speckle-pattern optimization schemes, the proposed scheme achieves better reconstruction quality at low sampling rates in terms of peak signal-to-noise ratio (PSNR) and mean structural similarity index (MSSIM). In particular, the lowest sampling rate at which we obtain good performance is about 6.5%; at this sampling rate, the MSSIM and PSNR of the proposed scheme reach 0.787 and 17.078 dB, respectively.

Introduction

As a novel, non-local imaging technique, ghost imaging (GI) was first demonstrated experimentally using an entangled photon source generated by spontaneous parametric down-conversion (SPDC)1. Because entangled sources are difficult to generate, it was subsequently shown in theory and experiment that GI can also be implemented with thermal and pseudo-thermal light2,3,4,5,6,7,8. In recent years, with the proposal of various GI schemes6,9,10,11, GI has shown promise in many related fields, such as remote sensing12,13, imaging through scattering media14,15,16,17, optical encryption18,19 and terahertz imaging20,21,22,23,24.

In a typical GI system, the target image is obtained by correlating the measurements of two spatially separated light beams1,2. Because of background noise, the signal-to-noise ratio (SNR) and visibility of the reconstructed image are lower than expected25,26. Various schemes have been proposed and verified to address these issues: differential GI (DGI)27 and normalized GI (NGI)28 significantly improve the SNR, though at the cost of more measurements, while intensity-threshold29 and time–space-average domain30 schemes remarkably enhance the visibility of the restored image. Combining compressed sensing (CS)31 theory with GI9,32,33 not only improves reconstruction performance but also reduces the number of measurements, which raises the sampling efficiency and helps promote practical applications of GI. In general, CS-based GI methods outperform traditional GI based on second-order correlation when sparsity is exploited during image restoration34. Deep learning-based GI schemes10,11,17,35 have also proved effective in improving sampling efficiency and imaging quality, and they reconstruct images faster than conventional GI methods.

Although many schemes have been proposed to improve the performance of GI from different perspectives, the problem of sampling efficiency still cannot be ignored36,37. High sampling efficiency means that enough information about the target is obtained from a few samplings to achieve a high-quality reconstruction. How to improve the sampling efficiency therefore deserves further study in GI. In fact, enhancing the sampling efficiency can be recast as a speckle pattern optimization problem, that is, a sampling matrix optimization problem, which can draw on the matrix design methods in CS36,37,38,39,40. So far, various speckle patterns have been designed to optimize the light fields of GI, such as multi-scale speckle patterns36, sinusoidal patterns37 and Hadamard patterns41. Mutual coherence minimization39 and dictionary learning40 schemes have also proved effective. The dictionary learning scheme can be regarded as using a data-driven or task-driven framework42, in which the sparse coefficient matrix is obtained from high-dimensional training data and updated along with the sparse basis. Unlike the dictionary learning scheme, which optimizes the speckle patterns through a sparse basis or dictionary learned from a given training set40, in this paper we propose a novel scheme that optimizes both the sampling matrix and the sparse basis based on the measurement-driven framework (MDF)43. Under the MDF, the sparse coefficient matrix is obtained from a low-dimensional pseudo-measurement process and updated separately from the sparse basis.

In this work, we formulate the optimization of the sampling matrix and sparse basis in GI as a Frobenius-norm minimization problem, and then propose an alternating optimization algorithm that solves this design problem with analytical updates. The proposed scheme also introduces a new binary quantization threshold for the optimized sampling matrix, which effectively reduces the influence of quantization error. Compared with grayscale speckle patterns, binary speckle patterns refresh faster on the digital micro-mirror device (DMD)44, which can benefit some GI applications. We perform simulations on given datasets to test the feasibility of the proposed method and evaluate its performance under different sampling rates and noise levels. The results show that our proposal achieves high imaging quality at low sampling rates.

Methods

Problem formulation

Mathematically, the sampling process in GI can be expressed as45

$$\begin{aligned} {{y}} = {\Phi } {{x}} + {\varepsilon }, \end{aligned}$$(1)

where \({{y}}\) is an M-dimensional column vector denoting the signal measured by the bucket detector in the signal arm, \({\Phi }\) is the \(M \times N\) sampling matrix, which is recorded by the detector in the reference arm and preserves the light-field intensity information, \({{x}}\) is an N-dimensional column vector representing the object to be reconstructed, and \({\varepsilon }\) is the additive noise.

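According to CS theory, the object \({{x}}\) can be approximately described in the form

$$\begin{aligned} {{x}} = {\Psi } {{z}} + {{e}}, \end{aligned}$$(2)

where \({\Psi }\) is an overcomplete sparse basis, \({{z}}\) is the sparse coefficient vector, and \({{e}}\) is the sparse representation error (SRE). Denote the training dataset and the sparse coefficient matrix by \({{X}}\) and \({{Z}}\), respectively, with

$$\begin{aligned} {{X}} = [{{x}}_{1}, {{x}}_{2}, \ldots , {{x}}_{P}], \quad {{Z}} = [{{z}}_{1}, {{z}}_{2}, \ldots , {{z}}_{P}], \end{aligned}$$(3)

where P is the number of training samples. The total SRE is then given by

$$\begin{aligned} {{E}} = {{X}} - {\Psi } {{Z}}. \end{aligned}$$(4)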

Recent works in CS have shown that the accuracy of image reconstruction can be improved by designing the sensing matrix with the SRE taken into account43,46,47. Motivated by these works, we introduce the SRE into the optimization of speckle patterns in GI with the MDF. We now present our scheme in detail.

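Let the sampling matrix \({\Phi }\) be constrained to the form43

$$\begin{aligned} {\Phi } = {{U}} \left[ {{I}}_{M} \;\; {{0}} \right] {{V}}^{T}, \end{aligned}$$(5)

where \({{I}}_{M}\) is the M-dimensional identity matrix, \({{0}}\) is an \(M \times (N-M)\) zero matrix, and \({{U}}\) and \({{V}}\) are two arbitrary orthonormal matrices of dimensions \(M \times M\) and \(N \times N\), respectively. A matrix restricted by Eq. (5) is a tight frame, which has good expected-case performance in CS applications48. Additional non-negative constraints are then imposed on the elements of the sampling matrix to meet the requirement that light-field intensities be non-negative, namely,

$$\begin{aligned} {\Phi }_{m,n} \ge 0, \quad \forall \, m, n. \end{aligned}$$(6)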

Inspired by the problem formulation in Ref. 43, we combine the SRE with the above constraints on the sampling matrix. We propose the following objective function, to be minimized over the sampling matrix and sparse basis:

where \({{I}}_{N}\) is the N-dimensional identity matrix, \(\Vert \cdot \Vert _{F}\) is the Frobenius norm, and \(\omega \in (0,1)\) is a trade-off factor.
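The tight-frame constraint on the sampling matrix can be checked numerically. The sketch below is illustrative only: it assumes the constrained form is \({\Phi } = {{U}}[{{I}}_{M}\;{{0}}]{{V}}^{T}\) with random orthonormal factors drawn by QR decomposition, and the function name `tight_frame_sampling_matrix` is hypothetical. It also shows why the separate non-negative constraint is needed, since such a matrix generally contains negative entries.

```python
import numpy as np

def tight_frame_sampling_matrix(m, n, seed=0):
    """Build Phi = U [I_M 0] V^T from random orthonormal U (m x m) and V (n x n)."""
    rng = np.random.default_rng(seed)
    # The Q factor of a Gaussian matrix is an orthonormal matrix.
    u, _ = np.linalg.qr(rng.standard_normal((m, m)))
    v, _ = np.linalg.qr(rng.standard_normal((n, n)))
    selector = np.hstack([np.eye(m), np.zeros((m, n - m))])
    return u @ selector @ v.T

# Illustrative sizes: 28 x 28 pixels and 51 patterns (assumed values).
phi = tight_frame_sampling_matrix(m=51, n=784)

# Rows are orthonormal, so Phi Phi^T equals the M-dimensional identity.
print(np.allclose(phi @ phi.T, np.eye(51)))
# The matrix has negative entries, which a DMD cannot display directly.
print(bool((phi < 0).any()))
```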

Optimization of sampling matrix and sparse basis based on MDF

We propose an alternating optimization algorithm via MDF to solve the optimization problem in Eq. (7). It consists of two processes: the pseudo-measurement process and the alternating optimization process. We call the measurement of the training samples the pseudo-measurement process to distinguish it from the measurement of the target images or test set. Given the training samples, sampling matrix and sparse basis, we first acquire pseudo-measurements of the training samples through a randomly initialized sampling matrix. Then, from the pseudo-measurement results, sampling matrix and sparse basis, a reconstruction algorithm such as orthogonal matching pursuit (OMP)51 is used to obtain the sparse coefficient matrix. The actual measurement process, by contrast, senses the specific targets with the optimized sampling matrix. After the sparse coefficient matrix is obtained, the sampling matrix and sparse basis are updated alternately. The two processes are detailed as follows.

The inputs are the training dataset \({{X}}\), the initial sampling matrix \({\Phi }_{0}\), the initial sparse basis \({\Psi }_{0}\), the sparsity level K, the number of iterations Iter and the trade-off factor \(\omega \). The first step is the pseudo-measurement process. With the pseudo-measurement result \({{Y}} = {\Phi }_{0} {{X}}\), solve the following problem:

where \(\Vert \cdot \Vert _{0}\) is the \(\ell _{0}\)-norm. The sparse coefficient matrix \(\widehat{{{Z}}}\) is then obtained by the OMP algorithm51. The second step is the alternating optimization process. For i from 1 to Iter, with \({\Phi }_{i-1}\) fixed, update the sparse basis \({\Psi }_{i}\) by:

then, with \({\Psi }_{i}\) fixed, update sampling matrix \({\Phi }_{i}\) by:

With the OMP algorithm, \(\widehat{{{Z}}}\) is obtained from the low-dimensional pseudo-measurement \({{Y}}\) rather than from the high-dimensional training dataset \({{X}}\), which distinguishes the MDF from the data-driven or task-driven frameworks adopted in dictionary learning problems42. The detailed update procedures for the sparse basis and sampling matrix are given in Supplementary S1 and S2, respectively.
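The pseudo-measurement step can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' implementation: it assumes the sparse coding problem is the usual one of fitting each column of \({{Y}}\) with at most K atoms of the equivalent dictionary \({\Phi }_{0}{\Psi }_{0}\), with K at least 1, and the function names `omp` and `pseudo_measurement_coefficients` are hypothetical.

```python
import numpy as np

def omp(dictionary, signal, sparsity):
    """Greedy OMP: approximate `signal` with at most `sparsity` (>= 1) atoms."""
    norms = np.linalg.norm(dictionary, axis=0)
    norms[norms == 0] = 1.0  # avoid division by zero for empty atoms
    residual = signal.astype(float).copy()
    support = []
    coeffs = np.zeros(dictionary.shape[1])
    for _ in range(sparsity):
        # Select the atom most correlated with the current residual.
        correlations = np.abs(dictionary.T @ residual) / norms
        if support:
            correlations[support] = 0.0
        support.append(int(np.argmax(correlations)))
        # Least-squares fit of the signal on all atoms selected so far.
        sub = dictionary[:, support]
        sol, *_ = np.linalg.lstsq(sub, signal, rcond=None)
        residual = signal - sub @ sol
        coeffs[support] = sol
    return coeffs

def pseudo_measurement_coefficients(phi0, psi0, x_train, sparsity):
    """Sparse-code the pseudo-measurements Y = phi0 @ x_train column by column."""
    y = phi0 @ x_train   # M x P pseudo-measurements
    d = phi0 @ psi0      # M x L equivalent dictionary
    return np.column_stack([omp(d, y[:, p], sparsity) for p in range(y.shape[1])])
```

Note that only the M-dimensional columns of `y` enter the sparse coding step; the N-dimensional training images are used solely to generate them.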

Constraints on the optimized sampling matrix

After obtaining the optimized sampling matrix \({\widehat{{\Phi }}}\), we apply the following two steps to it:

- Non-negative constraint. Because the speckle patterns displayed on the DMD must be non-negative, we impose the following constraint on the sampling matrix:

$$\begin{aligned} \widehat{{\Phi }}_{m,n} = 0 \quad \text{ if } \widehat{{\Phi }}_{m,n} < 0. \end{aligned}$$(11)

- Binarization threshold optimization. Compared with grayscale patterns, binary patterns have significant advantages in data storage and transmission. Moreover, binary patterns refresh faster on the DMD, which is extremely important in real-time ghost imaging applications44. In contrast to previous binary threshold selection strategies49,50, we quantize the sampling matrix with the following threshold. Let \(\alpha > 0\) be the binarization threshold, defined as the average of all non-zero elements of the optimized sampling matrix; then

$$\begin{aligned} \widehat{{\Phi }}_{m,n}=\left\{ \begin{array}{ll} 1, &{} \text{ if } \widehat{{\Phi }}_{m,n} \ge \alpha , \\ 0, &{} \text{ if } \widehat{{\Phi }}_{m,n} < \alpha . \end{array}\right. \end{aligned}$$(12)
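The two steps in Eqs. (11) and (12) amount to a clip followed by a threshold. A minimal NumPy sketch, assuming the matrix has at least one positive entry and using the hypothetical name `binarize_sampling_matrix` and made-up example values:

```python
import numpy as np

def binarize_sampling_matrix(phi_opt):
    """Apply Eq. (11), then Eq. (12) with alpha = mean of the non-zero entries."""
    # Eq. (11): set negative entries to zero.
    phi_nonneg = np.where(phi_opt < 0, 0.0, phi_opt)
    # Threshold: average of all non-zero elements (assumes at least one exists).
    alpha = phi_nonneg[phi_nonneg != 0].mean()
    # Eq. (12): 1 where the entry reaches the threshold, 0 otherwise.
    phi_bin = (phi_nonneg >= alpha).astype(np.uint8)
    return phi_bin, alpha

phi_opt = np.array([[0.25, -0.5, 0.75],
                    [-0.125, 1.0, 0.0]])
phi_bin, alpha = binarize_sampling_matrix(phi_opt)
print(alpha)    # mean of 0.25, 0.75 and 1.0
print(phi_bin)
```

Zeros produced by the clipping step are excluded from the average, so the threshold is not pulled towards zero by the entries that were negative before Eq. (11).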

We note that (1, −1) patterns have also been used to generate measurement results17. Compared with (1, −1) patterns, the optimized non-negative speckle patterns significantly reduce the memory requirement. Moreover, they can be loaded directly onto the DMD, whereas (1, −1) patterns can only be displayed indirectly. More importantly, they may be used to generate (1, −1) patterns and to produce better recovery quality than the (1, −1) patterns.

Results

Imaging schematic diagram

The schematic diagram of the ghost imaging system is shown in Fig. 1. Through the Köhler illumination system, the light generated by a light-emitting diode (LED) is projected uniformly onto the DMD. After being modulated by the optimized speckle patterns preloaded on the DMD, the light reflected from the DMD is projected through a lens onto the object to be measured. The light reflected from the target is then collected by the lens and recorded by the bucket detector.

Imaging reconstruction

The validity of the proposed MDF-based scheme is verified by simulations, all performed in MATLAB R2016b. The MNIST database53 is used to generate the training data, with the pixel values of each \(28 \times 28\) digit image normalized to the range 0 to 1. We first randomly select 5000 digit images from the training set to optimize the sampling matrix and sparse basis. Next, the optimized sampling matrix is constrained by Eq. (11) and Eq. (12). Each row of the sampling matrix is then reshaped into a speckle pattern of the same size as the imaging object. Finally, the speckle pattern on the DMD is taken as an approximation of the light-field intensity distribution on the object plane. In each sampling, the element-wise product of the speckle pattern and the imaging object is computed, and all of its intensity values are summed to give the bucket-detector measurement. We compare the proposed method with the unoptimized case and Xu’s method39. For convenience, we denote the optimized ghost imaging scheme based on the measurement-driven framework as OGIMDF and the corresponding unoptimized case without MDF as UGI. The characteristics of these methods are summarized in Table 1.
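The simulated bucket measurement described above can be sketched as follows. The example is in Python rather than the MATLAB used for the paper's simulations, uses random stand-ins for the digit image and the binary patterns, and the function name `bucket_measurements` is hypothetical.

```python
import numpy as np

def bucket_measurements(phi_bin, image):
    """One bucket value per pattern: sum of the element-wise product pattern * object."""
    height, width = image.shape
    # Each row of the sampling matrix becomes one speckle pattern.
    patterns = phi_bin.reshape(-1, height, width)
    return (patterns * image).sum(axis=(1, 2))

rng = np.random.default_rng(0)
image = rng.random((28, 28))                 # stand-in for a normalized digit image
sampling_rate = 0.5
m = int(round(sampling_rate * image.size))   # number of patterns
phi_bin = rng.integers(0, 2, size=(m, image.size))
y = bucket_measurements(phi_bin, image)

# Equivalent to the matrix form y = Phi x with x the flattened image.
print(np.allclose(y, phi_bin @ image.ravel()))
```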

For Xu’s method39, the discrete cosine transform (DCT) basis is used as the sparse representation basis, while random Gaussian matrices with zero mean and unit variance are chosen as the sparse basis for the UGI method. The sampling matrices of all tested methods are subjected to the non-negative constraint in Eq. (11) and the binary threshold quantization in Eq. (12). For the UGI and OGIMDF methods, the images are reconstructed by solving the following problem:

via the fast iterative shrinkage-thresholding algorithm (FISTA)52, where \(\lambda > 0\) is a regularization parameter, \(\Vert \cdot \Vert _{1}\) is the \(\ell _{1}\)-norm, and \({{X}}_{test}\) is chosen from the MNIST test set, each column of which can be reshaped into a target image. The reconstruction algorithm for Xu’s scheme is selected according to the corresponding reference.
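For readers unfamiliar with FISTA, a compact sketch is given below. It assumes the common \(\ell _{1}\)-regularized least-squares objective \(\tfrac{1}{2}\Vert y - Az\Vert _{2}^{2} + \lambda \Vert z\Vert _{1}\) for a single measurement vector, with \(A\) standing for the product of the sampling matrix and the sparse basis; the exact scaling of the objective, the constant step size and the iteration count are assumptions, not details taken from the paper.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def fista(a, y, lam, n_iter=200):
    """Minimize 0.5 * ||y - a z||_2^2 + lam * ||z||_1 with a constant step 1/L."""
    lipschitz = np.linalg.norm(a, 2) ** 2   # largest eigenvalue of a^T a
    z = np.zeros(a.shape[1])
    w, t = z.copy(), 1.0
    for _ in range(n_iter):
        grad = a.T @ (a @ w - y)
        z_next = soft_threshold(w - grad / lipschitz, lam / lipschitz)
        # Momentum update that distinguishes FISTA from plain ISTA.
        t_next = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        w = z_next + ((t - 1.0) / t_next) * (z_next - z)
        z, t = z_next, t_next
    return z
```

Under these assumptions the image estimate would be the sparse basis applied to the returned coefficients, reshaped to the image size.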

Simulation results

Figure 2 presents the simulation results of the various schemes. The corresponding time costs of matrix optimization and reconstruction are recorded in Table 2. The reconstruction time of each method is the average reconstruction time over 500 test images at the corresponding sampling rate. The reconstructed images at the given sampling rates are shown in Fig. 2a. The sampling rate (SR) is defined as the ratio of the number of samplings to the number of image pixels. Figure 2b,c show the peak signal-to-noise ratio (PSNR) and mean structural similarity (MSSIM) index curves of the restored images with respect to SR, respectively. As quantitative metrics of the reconstructed image quality, PSNR and MSSIM54 are defined as follows:

$$\begin{aligned} \text{PSNR} = 10 \log _{10} \left( \frac{H^{2}}{\frac{1}{N} \sum _{i=1}^{N} \left( F_{i} - G_{i} \right) ^{2}} \right) , \end{aligned}$$(14)

$$\begin{aligned} \text{MSSIM} = \frac{1}{W} \sum _{j=1}^{W} \text{SSIM}(f_{j}, g_{j}), \end{aligned}$$(15)

where F and G are the original image and reconstructed image, respectively, with i-th pixels \(F_{i}\) and \(G_{i}\), H is the maximum value of the image pixels (\(H = 255\) in this paper), N is the total number of image pixels, W is the number of local windows in the image, and \(f_{j}\) and \(g_{j}\) are the corresponding windows with a size of \(14 \times 14\) pixels. The structural similarity (SSIM) is given by

$$\begin{aligned} \text{SSIM}(f_{j}, g_{j}) = \frac{(2 \mu _{fj} \mu _{gj} + c_{1})(2 \sigma _{fgj} + c_{2})}{(\mu _{fj}^{2} + \mu _{gj}^{2} + c_{1})(\sigma _{fj}^{2} + \sigma _{gj}^{2} + c_{2})}, \end{aligned}$$(16)

where \((\mu _{fj}, \sigma _{fj}^{2})\) and \((\mu _{gj}, \sigma _{gj}^{2})\) are the means and variances of \(f_{j}\) and \(g_{j}\), respectively, \(\sigma _{fgj}\) is the covariance of \(f_{j}\) and \(g_{j}\), \(c_{1} = (0.01 H)^{2}\) and \(c_{2} = (0.03H)^{2}\). We average the PSNR and MSSIM values over 500 reconstructed images and plot the corresponding curves.
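The two metrics can be computed along the following lines. This is a sketch under stated assumptions: the \(14 \times 14\) windows are taken to be non-overlapping, population (not sample) variances are used, and images are assumed to be scaled so that the peak value is \(H = 255\); none of these choices is confirmed by the text, and the function names are hypothetical.

```python
import numpy as np

def psnr(f, g, peak=255.0):
    """Peak signal-to-noise ratio in dB between original f and reconstruction g."""
    mse = np.mean((f.astype(float) - g.astype(float)) ** 2)
    return np.inf if mse == 0 else 10.0 * np.log10(peak ** 2 / mse)

def ssim_window(f, g, peak=255.0):
    """SSIM of one pair of windows with c1 = (0.01 H)^2 and c2 = (0.03 H)^2."""
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2
    mu_f, mu_g = f.mean(), g.mean()
    var_f, var_g = f.var(), g.var()
    cov = ((f - mu_f) * (g - mu_g)).mean()
    num = (2 * mu_f * mu_g + c1) * (2 * cov + c2)
    den = (mu_f ** 2 + mu_g ** 2 + c1) * (var_f + var_g + c2)
    return num / den

def mssim(f, g, window=14, peak=255.0):
    """Mean SSIM over non-overlapping window x window blocks (assumed tiling)."""
    f, g = f.astype(float), g.astype(float)
    scores = [
        ssim_window(f[r:r + window, c:c + window], g[r:r + window, c:c + window], peak)
        for r in range(0, f.shape[0] - window + 1, window)
        for c in range(0, f.shape[1] - window + 1, window)
    ]
    return float(np.mean(scores))
```

With this tiling a \(28 \times 28\) image yields W = 4 windows.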

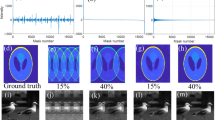

Figure 2. Simulation results of three schemes under different SRs. (a) The images in the first row are reconstructed using Xu’s method, the second row shows the images reconstructed using UGI, the third row shows the objects restored using OGIMDF, and the images in the last row are the ground truth. (b) and (c) show the PSNR and MSSIM curves of the images reconstructed using Xu’s method, UGI and OGIMDF under different SRs, respectively.

As seen from Fig. 2, the recovery performance of the proposed OGIMDF is better overall than that of the other schemes. Compared with the UGI method, the proposed scheme significantly improves the PSNR and MSSIM, especially at low SRs, mainly owing to the optimization of the sampling matrix. The MSSIM of Xu’s method is comparable to that of the UGI scheme across SRs, but its PSNR falls below that of the UGI scheme when the SR exceeds 0.775, mainly because of the relatively large reconstruction error of Xu’s method at high SRs. Fig. 2a also shows that the images reconstructed by the proposed scheme are clearer than those of the other two schemes at the given SRs.

Figure 3. Simulation results of the OGIMDF method at low SRs, between 0.025 and 0.15. (a) The images in the first row are reconstructed using the OGIMDF method, and the images in the second row are the ground truth. (b) and (c) show the PSNR and MSSIM curves of the images reconstructed using OGIMDF under different SRs, respectively.

We next study the performance of the proposed scheme at low SRs, which is the regime of most practical interest. The results for SRs between 0.025 and 0.15 are shown in Fig. 3. Fig. 3a displays the reconstructed images at the given SRs, and Fig. 3b,c show the PSNR and MSSIM averaged over 500 restored images as functions of SR. As shown in Fig. 3, when the SR is less than 0.065, the image quality of the OGIMDF method is poor and the recovered images are blurred and damaged. As the SR increases, the imaging quality improves and the recovered images become recognizable. We therefore regard 0.065 as approximately the lowest SR at which the OGIMDF method still achieves good imaging quality.
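As a worked example of what this SR means in terms of measurements, assume that the number of patterns M is the SR times the pixel count N, rounded to the nearest integer (the rounding rule is an assumption, not stated in the text). For a \(28 \times 28\) MNIST image:

$$\begin{aligned} N = 28 \times 28 = 784, \qquad M \approx 0.065 \times 784 = 50.96 \approx 51. \end{aligned}$$

In the same way, the two ends of the range studied here, SR = 0.025 and SR = 0.15, correspond to about 20 and 118 patterns, respectively.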

We note that the PSNR of the proposed scheme decreases slightly when the SR is between 0.80 and 0.90 in Fig. 2b. To examine this phenomenon, we plot the SNR curves of the reconstructed images for the OGIMDF and UGI schemes at different SRs, as shown in Fig. 4. For the OGIMDF scheme, the SNR shows a downward trend similar to that of the PSNR in Fig. 2b when the SR ranges from 0.80 to 0.90, which means that the noise in the reconstructed images increases in this SR region. However, the PSNR and SNR of the proposed scheme remain higher than those of the UGI scheme in this SR range and begin to increase again when the SR exceeds 0.90, both of which support the superiority of our proposal.

Figure 5. Simulation results of three schemes under different SNRs with SR = 0.50. (a) The images in the first row are reconstructed using Xu’s method, the second row shows the images reconstructed using UGI, the third row shows the objects restored using OGIMDF, and the images in the last row are the ground truth. (b) and (c) show the PSNR and MSSIM curves of the images reconstructed using Xu’s method, UGI and OGIMDF under different SNRs, respectively.

The above simulations do not take into account the impact of additional noise on reconstruction performance, whereas noise is inevitable in practice. To evaluate the influence of noise on the three schemes, we add additive white Gaussian noise (AWGN) to the bucket values. Fig. 5 presents the results of the three schemes when the SR is 0.50. Fig. 5a displays the reconstructed images at the given SNRs, and Fig. 5b,c show the PSNR and MSSIM averaged over 500 restored images as functions of SNR. From Fig. 5b,c, when the SNR does not exceed 20 dB, the PSNR values of all three methods are relatively low and UGI has a slight advantage in MSSIM. Accordingly, in Fig. 5a the images reconstructed by the UGI, OGIMDF and Xu’s methods are all blurred to some extent when the SNR is 20 dB. At low SNR the useful information is corrupted by noise, so it is difficult to restore the original image clearly. As the SNR grows, however, both the PSNR and MSSIM of the OGIMDF increase rapidly. When the SNR exceeds 30 dB, the OGIMDF begins to show its superiority; for example, when the SNR is 40 dB, the PSNR values of the UGI, OGIMDF and Xu’s methods are 18.83 dB, 30.50 dB and 20.04 dB, respectively. Moreover, the MSSIM of the OGIMDF is 7.30\(\%\) higher than that of Xu’s method at this SNR. Fig. 5a likewise shows that the reconstruction results of the OGIMDF are remarkably clearer than the others. Therefore, in practical applications, the noise acting on the bucket detector should be reduced as much as possible to obtain high-quality reconstructions with the OGIMDF.
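Adding AWGN to the bucket values at a prescribed SNR can be sketched as below. The text does not define how the SNR of the bucket signal is computed; this example assumes the ratio of mean signal power to noise power, and the function name `add_awgn` and the test values are hypothetical.

```python
import numpy as np

def add_awgn(bucket, snr_db, rng):
    """Add zero-mean white Gaussian noise at the requested SNR (in dB)."""
    signal_power = np.mean(bucket.astype(float) ** 2)
    # SNR_dB = 10 log10(signal_power / noise_power)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=bucket.shape)
    return bucket + noise

rng = np.random.default_rng(0)
bucket = np.full(20000, 10.0)              # stand-in bucket values
noisy = add_awgn(bucket, snr_db=20.0, rng=rng)

# Empirical SNR of the noisy signal, which should be close to 20 dB.
empirical = 10.0 * np.log10(np.mean(bucket ** 2) / np.mean((noisy - bucket) ** 2))
print(round(float(empirical), 1))
```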

We further study the reconstruction quality of grayscale targets under different sampling numbers. The Frey faces database55, which includes 1965 face images with a size of \(28\times 20\), is used to generate the training and test data. In our simulation, 1915 faces randomly selected from the database are used as training samples, and the remaining faces are used for testing. Figure 6 presents the simulation results of the UGI and OGIMDF schemes for sampling numbers of 476, 504 and 560. As shown in Fig. 6, the quality of the reconstructed image improves as the sampling number increases. When the sampling number is 560, both methods reconstruct the image clearly. This verifies that the proposed scheme is also capable of reconstructing grayscale images.
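For comparison with the SR values used for MNIST, the three sampling numbers can be converted to sampling rates using the definition of SR as the number of samplings divided by the number of pixels, with \(N = 28 \times 20 = 560\) pixels per face:

$$\begin{aligned} \frac{476}{560} = 0.85, \qquad \frac{504}{560} = 0.90, \qquad \frac{560}{560} = 1.00. \end{aligned}$$

The largest sampling number therefore corresponds to full sampling.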

We also verify the extensibility of the proposed method. The training data contain 5000 images randomly selected from the MNIST database53. We test the proposed scheme on English letters and double-slit patterns and give the reconstruction results in Fig. 7. The scheme still reconstructs the English letters and double-slit patterns well when the SR is 0.15, where the PSNR and MSSIM are 17.47 dB and 0.85, respectively. When the SR increases to 0.65, the reconstructed images of the English letters and double-slit patterns become clearer. These results demonstrate the extensibility of the proposed scheme.

Conclusion

In this paper, we have proposed a scheme that optimizes speckle patterns via a measurement-driven framework to improve the reconstruction quality of GI. We have analyzed the performance of the proposed scheme under different SRs using the PSNR and MSSIM metrics and compared it with the unoptimized case and Xu’s method. Simulation results show that it yields better recovery quality than the other two methods, especially at low SRs. We have also verified the extensibility of the proposed scheme and its ability to reconstruct grayscale images. We believe that the proposed scheme can promote the development and applications of GI, for example binary sampling GI.

References

Pittman, T. B., Shih, Y. H., Strekalov, D. V. & Sergienko, A. V. Optical imaging by means of two-photon quantum entanglement. Phys. Rev. A 52, R3429–R3432. https://doi.org/10.1103/PhysRevA.52.R3429 (1995).

Bennink, R. S., Bentley, S. J. & Boyd, R. W. “Two-photon” coincidence imaging with a classical source. Phys. Rev. Lett. 89, 113601. https://doi.org/10.1103/PhysRevLett.89.113601 (2002).

Gatti, A., Brambilla, E., Bache, M. & Lugiato, L. A. Ghost imaging with thermal light: Comparing entanglement and classical correlation. Phys. Rev. Lett. 93, 093602. https://doi.org/10.1103/PhysRevLett.93.093602 (2004).

Gatti, A., Brambilla, E., Bache, M. & Lugiato, L. A. Correlated imaging, quantum and classical. Phys. Rev. A 70, 013802. https://doi.org/10.1103/PhysRevA.70.013802 (2004).

Ferri, F. et al. High-resolution ghost image and ghost diffraction experiments with thermal light. Phys. Rev. Lett. 94, 183602 (2005).

Shapiro, J. H. Computational ghost imaging. Phys. Rev. A 78, 061802. https://doi.org/10.1103/PhysRevA.78.061802 (2008).

Valencia, A., Scarcelli, G., D’Angelo, M. & Shih, Y. Two-photon imaging with thermal light. Phys. Rev. Lett. 94, 063601. https://doi.org/10.1103/PhysRevLett.94.063601 (2005).

Zhang, D., Zhai, Y. H., Wu, L. A. & Chen, X. H. Correlated two-photon imaging with true thermal light. Opt. Lett. 30, 2354–2356. https://doi.org/10.1364/OL.30.002354 (2005).

Katz, O., Bromberg, Y. & Silberberg, Y. Compressive ghost imaging. Appl. Phys. Lett. 95, 131110. https://doi.org/10.1063/1.3238296 (2009).

Lyu, M. et al. Deep-learning-based ghost imaging. Sci. Rep. 7, 17865. https://doi.org/10.1038/s41598-017-18171-7 (2017).

He, Y. et al. Ghost imaging based on deep learning. Sci. Rep. 8, 6469. https://doi.org/10.1038/s41598-018-24731-2 (2018).

Erkmen, B. I. Computational ghost imaging for remote sensing. J. Opt. Soc. Am. A 29, 782–789. https://doi.org/10.1364/JOSAA.29.000782 (2012).

Gong, W. et al. Three-dimensional ghost imaging lidar via sparsity constraint. Sci. Rep. 6, 26133. https://doi.org/10.1038/srep26133 (2016).

Gong, W. & Han, S. Correlated imaging in scattering media. Opt. Lett. 36, 394–396. https://doi.org/10.1364/OL.36.000394 (2011).

Meyers, R. E., Deacon, K. S. & Shih, Y. Turbulence-free ghost imaging. Appl. Phys. Lett. 98, 111115. https://doi.org/10.1063/1.3567931 (2011).

Bina, M. et al. Backscattering differential ghost imaging in turbid media. Phys. Rev. Lett. 110, 083901. https://doi.org/10.1103/PhysRevLett.110.083901 (2013).

Li, F., Zhao, M., Tian, Z., Willomitzer, F. & Cossairt, O. Compressive ghost imaging through scattering media with deep learning. Opt. Express 28, 17395–17408. https://doi.org/10.1364/OE.394639 (2020).

Clemente, P., Durán, V., Torres-Company, V., Tajahuerce, E. & Lancis, J. Optical encryption based on computational ghost imaging. Opt. Lett. 35, 2391–2393. https://doi.org/10.1364/OL.35.002391 (2010).

Sun, M., Shi, J., Li, H. & Zeng, G. A simple optical encryption based on shape merging technique in periodic diffraction correlation imaging. Opt. Express 21, 19395–19400. https://doi.org/10.1364/OE.21.019395 (2013).

Stantchev, R. I. et al. Compressed sensing with near-field THz radiation. Optica 4, 989–992. https://doi.org/10.1364/OPTICA.4.000989 (2017).

Olivieri, L., Totero Gongora, J. S., Pasquazi, A. & Peccianti, M. Time-resolved nonlinear ghost imaging. ACS Photonics 5, 3379–3388. https://doi.org/10.1021/acsphotonics.8b00653 (2018).

Stantchev, R. I., Yu, X., Blu, T. & Pickwell-MacPherson, E. Real-time terahertz imaging with a single-pixel detector. Nat. Commun. 11, 1–8. https://doi.org/10.1038/s41467-020-16370-x (2020).

Totero Gongora, J. S. et al. Route to intelligent imaging reconstruction via terahertz nonlinear ghost imaging. Micromachines 11, 521. https://doi.org/10.3390/mi11050521 (2020).

Olivieri, L. et al. Hyperspectral terahertz microscopy via nonlinear ghost imaging. Optica 7, 186–191. https://doi.org/10.1364/OPTICA.381035 (2020).

Chan, K. W. C., O’Sullivan, M. N. & Boyd, R. W. Optimization of thermal ghost imaging: High-order correlations vs. background subtraction. Opt. Express 18, 5562–5573. https://doi.org/10.1364/OE.18.005562 (2010).

Yao, X. R. et al. Iterative denoising of ghost imaging. Opt. Express 22, 24268–24275. https://doi.org/10.1364/OE.22.024268 (2014).

Ferri, F., Magatti, D., Lugiato, L. & Gatti, A. Differential ghost imaging. Phys. Rev. Lett. 104, 253603. https://doi.org/10.1103/PhysRevLett.104.253603 (2010).

Sun, B., Welsh, S. S., Edgar, M. P., Shapiro, J. H. & Padgett, M. J. Normalized ghost imaging. Opt. Express 20, 16892–16901. https://doi.org/10.1364/OE.20.016892 (2012).

Basano, L. & Ottonello, P. Use of an intensity threshold to improve the visibility of ghost images produced by incoherent light. Appl. Opt. 46, 6291–6296. https://doi.org/10.1364/AO.46.006291 (2007).

Gong, W. & Han, S. A method to improve the visibility of ghost images obtained by thermal light. Phys. Lett. A 374, 1005–1008. https://doi.org/10.1016/j.physleta.2009.12.030 (2010).

Donoho, D. L. Compressed sensing. IEEE Trans. Inform. Theory 52, 1289–1306. https://doi.org/10.1109/TIT.2006.871582 (2006).

Katkovnik, V. & Astola, J. Compressive sensing computational ghost imaging. J. Opt. Soc. Am. A 29, 1556–1567. https://doi.org/10.1364/JOSAA.29.001556 (2012).

Yu, W. K. et al. Adaptive compressive ghost imaging based on wavelet trees and sparse representation. Opt. Express 22, 7133. https://doi.org/10.1364/OE.22.007133 (2014).

Du, J., Gong, W. & Han, S. The influence of sparsity property of images on ghost imaging with thermal light. Opt. Lett. 37, 1067–1069. https://doi.org/10.1364/OL.37.001067 (2012).

Higham, C. F., Murray-Smith, R., Padgett, M. J. & Edgar, M. P. Deep learning for real-time single-pixel video. Sci. Rep. 8, 1–9 (2018).

Chen, M., Li, E. & Han, S. Application of multi-correlation-scale measurement matrices in ghost imaging via sparsity constraints. Appl. Opt. 53, 2924–2928 (2014).

Khamoushi, S. M., Nosrati, Y. & Tavassoli, S. H. Sinusoidal ghost imaging. Opt. Lett. 40, 3452–3455. https://doi.org/10.1364/OL.40.003452 (2015).

Li, E., Chen, M., Gong, W., Yu, H. & Han, S. Mutual information of ghost imaging systems. Acta Opt. Sin. 33, 93–98. https://doi.org/10.3788/AOS201333.1211003 (2013).

Xu, X., Li, E., Shen, X. & Han, S. Optimization of speckle patterns in ghost imaging via sparse constraints by mutual coherence minimization. Chin. Opt. Lett. 13, 071101. https://doi.org/10.3788/COL201513.071101 (2015).

Hu, C. et al. Optimization of light fields in ghost imaging using dictionary learning. Opt. Express 27, 28734–28749. https://doi.org/10.1364/OE.27.028734 (2019).

Wang, L. & Zhao, S. Fast reconstructed and high-quality ghost imaging with fast Walsh-Hadamard transform. Photonics Res. 4, 240–244. https://doi.org/10.1364/PRJ.4.000240 (2016).

Mairal, J., Bach, F. & Ponce, J. Task-driven dictionary learning. IEEE Trans. Pattern Anal. Mach. Intell. 34, 791–804. https://doi.org/10.1109/TPAMI.2011.156 (2012).

Bai, H. & Li, X. Measurement-driven framework with simultaneous sensing matrix and dictionary optimization for compressed sensing. IEEE Access 8, 35950–35963. https://doi.org/10.1109/ACCESS.2020.2974927 (2020).

Zhang, Z., Wang, X., Zheng, G. & Zhong, J. Fast Fourier single-pixel imaging via binary illumination. Sci. Rep. 7, 12029. https://doi.org/10.1038/s41598-017-12228-3 (2017).

Bian, L., Suo, J., Dai, Q. & Chen, F. Experimental comparison of single-pixel imaging algorithms. J. Opt. Soc. Am. A 35, 78–87. https://doi.org/10.1364/JOSAA.35.000078 (2018).

Hong, T., Bai, H., Li, S. & Zhu, Z. An efficient algorithm for designing projection matrix in compressive sensing based on alternating optimization. Signal Process. 125, 9–20. https://doi.org/10.1016/j.sigpro.2015.12.015 (2016).

Hong, T. & Zhu, Z. An efficient method for robust projection matrix design. Signal Process. 143, 200–210. https://doi.org/10.1016/j.sigpro.2017.09.007 (2018).

Chen, W., Rodrigues, M. R. D. & Wassell, I. J. On the use of unit-norm tight frames to improve the average MSE performance in compressive sensing applications. IEEE Signal Process. Lett. 19, 8–11. https://doi.org/10.1109/LSP.2011.2173675 (2012).

Chen, W. & Chen, X. Grayscale object authentication based on ghost imaging using binary signals. EPL 110, 44002. https://doi.org/10.1209/0295-5075/110/44002 (2015).

Zou, X. P. F. et al. Imaging quality enhancement in binary ghost imaging using the Otsu algorithm. J. Opt. 22, 095201. https://doi.org/10.1088/2040-8986/aba22e (2020).

Pati, Y. C., Rezaiifar, R. & Krishnaprasad, P. S. Orthogonal matching pursuit: recursive function approximation with applications to wavelet decomposition. In Proceedings of 27th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 40–44. https://doi.org/10.1109/ACSSC.1993.342465 (IEEE, 1993).

Beck, A. & Teboulle, M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2, 183–202. https://doi.org/10.1137/080716542 (2009).

Deng, L. The MNIST database of handwritten digit images for machine learning research [best of the web]. IEEE Signal Process. Mag. 29, 141–142. https://doi.org/10.1109/MSP.2012.2211477 (2012).

Wang, Z., Bovik, A. C., Sheikh, H. R. & Simoncelli, E. P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 13, 600–612. https://doi.org/10.1109/TIP.2003.819861 (2004).

Roweis, S. https://cs.nyu.edu/~roweis/data.html.

Acknowledgements

This research was funded by the National Natural Science Foundation of China (Grant Nos. 11904410 and 61801522) and the National Natural Science Foundation of Hunan Province, China (Grant No. 2019JJ40352).

Author information

Contributions

H.K. performed the simulations and wrote the manuscript, Y.W. and L.Z. supervised the project, H.K. and D.H. designed the work, D.H. reviewed the manuscript.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kang, H., Wang, Y., Zhang, L. et al. Improving the performance of ghost imaging via measurement-driven framework. Sci Rep 11, 6776 (2021). https://doi.org/10.1038/s41598-021-86275-2

DOI: https://doi.org/10.1038/s41598-021-86275-2
