Abstract

Among population-based metaheuristics, both Differential Evolution (DE) and Covariance Matrix Adaptation Evolution Strategy (CMA-ES) perform outstandingly in real parameter single objective optimization. Compared with DE, however, CMA-ES often stagnates much earlier. In this paper, we propose CMA-ES with individuals redistribution based on DE, IR-CMA-ES, to address stagnation in CMA-ES. We conduct experiments on two benchmark test suites to compare our algorithm with nine peers. Experimental results show that IR-CMA-ES is competitive in real parameter single objective optimization.

Introduction

The aim of real parameter single objective optimization is to find the decision vector that minimizes (or maximizes) an objective function over the solution space. For years, real parameter single objective optimization has been a hot topic in Artificial Intelligence (AI), and a variety of population-based metaheuristics have been proposed in the literature for this purpose.

Among the population-based metaheuristics for real parameter single objective optimization, both Differential Evolution (DE)1 and Covariance Matrix Adaptation Evolution Strategy (CMA-ES)2 perform outstandingly. The six competitions on real parameter single objective optimization among population-based metaheuristics held by the Congress on Evolutionary Computation (CEC) produced seven winners, including two joint winners in 2016. Among the seven winners, NBIPOP-aCMA-ES3 (2013) and HS-ES4 (2018) are based on CMA-ES, while L-SHADE5 (2014), L-SHADE-EpSin6 (2016), and IMODE? (2020) are based on DE. Moreover, UMOEAs-II7 (2016) is an ensemble of CMA-ES and DE.

When population-based metaheuristics are executed, two phenomena that both prevent any further improvement of the solution are very common: early convergence and stagnation. Early convergence means that all individuals in the population become identical before a global optimum is found. Stagnation means that the difference between individuals is too small for the operators of the algorithm to obtain a better solution, although a global optimum has still not been found. For non-trivial instances of real parameter single objective optimization, stagnation occurs much more often than early convergence in population-based metaheuristics, including DE and CMA-ES.

The motivation of this paper is as follows. Compared with DE, CMA-ES often stagnates much earlier. Therefore, measures such as niching and restart strategies have been used for years to help CMA-ES resist stagnation. Of the two, restart strategies have produced the better-known CMA-ES variants. For example, NBIPOP-aCMA-ES, the winner of the CEC 2013 competition, and HS-ES, the winner of the CEC 2018 competition, are both CMA-ES variants with restart. Besides restart, an improved version of univariate sampling is employed in HS-ES. A further comparison8 shows that HS-ES is one of the top performers among the six winners of the five competitions held in 2013, 2014, 2016, 2017, and 2018. Thus, with the help of methods for resisting stagnation, CMA-ES performs better in real parameter single objective optimization than before. These methods are, in fact, very simple in idea. Since such simple ideas are effective for improving CMA-ES, a more sophisticated strategy may be even more promising. For example, DE may be a good choice for helping CMA-ES resist stagnation.

Hybridisation techniques, such as memetic computing, have attracted wide attention in the field of AI9. Furthermore, hybridisations of CMA-ES with another metaheuristic exist for different purposes. Examples are listed below. In CMA-ES/HDE10, CMA-ES and hybrid DE each occupy a subpopulation, and migration occurs between the two subpopulations. In DCMA-EA11,12, operators of CMA-ES and those of DE are both used to produce new individuals. In UMOEAs-II7, CMA-ES and a variant of DE each occupy a subpopulation. Only one of the constituent algorithms is executed in some generations, while both are executed in the others. In the above algorithms, CMA-ES works together with another constituent algorithm for search. By contrast, a super-fit scheme based on CMA-ES has been used to provide initialization for re-sampled inheritance search13 and for DE14. There, CMA-ES replaces random initialization.

In this paper, we propose CMA-ES with individuals redistribution based on DE, IR-CMA-ES. Once stagnation is detected, DE is executed to redistribute individuals. Stagnation is confirmed if the improvement ratio of the average fitness from the previous generation to the current one stays below a threshold for a given number of successive generations. In each DE generation, mutation and crossover of the original version of DE are executed and followed by offspring-surviving selection, which means that all offspring produced by mutation and crossover are selected, while all parents are eliminated. If CMA-ES is still caught in stagnation after a round of DE, a new round of DE with more generations is executed.
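The stagnation test described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the exact formula of the improvement ratio is not given in the text, so the relative decrease `(previous - current) / |previous|` is an assumption, and the names `improvement_ratio`, `first_stagnation`, `threshold`, and `patience` are hypothetical.

```python
from typing import Iterable, List


def improvement_ratio(prev_avg: float, curr_avg: float, eps: float = 1e-12) -> float:
    """Relative improvement of the average fitness (minimization).

    Assumption: ratio = (previous - current) / |previous|; the text does
    not spell out the exact formula.
    """
    return (prev_avg - curr_avg) / max(abs(prev_avg), eps)


def first_stagnation(avg_fitness: Iterable[float], threshold: float, patience: int) -> int:
    """Return the generation index at which stagnation is confirmed, or -1.

    Stagnation is confirmed once the improvement ratio has stayed below
    `threshold` for `patience` successive generations.
    """
    history: List[float] = list(avg_fitness)
    low_streak = 0
    for g in range(1, len(history)):
        if improvement_ratio(history[g - 1], history[g]) < threshold:
            low_streak += 1
        else:
            low_streak = 0  # a sufficiently improving generation resets the count
        if low_streak >= patience:
            return g
    return -1


# Average fitness drops quickly at first, then flattens out.
trace = [100.0, 50.0, 25.0, 24.999, 24.998, 24.997, 24.996]
print(first_stagnation(trace, threshold=1e-3, patience=3))  # prints 5
```

Counting successive low-improvement generations, rather than reacting to a single one, keeps one unlucky generation from triggering the redistribution.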

Compared with the existing hybridisations with CMA-ES, our algorithm is based on a different idea. Here, CMA-ES is used for search, while DE is used to redistribute individuals when CMA-ES runs into stagnation. In our experiments based on the CEC 2014 and 2017 benchmark test suites, we compare IR-CMA-ES with nine population-based metaheuristics. The experimental results show that our algorithm is competitive in real parameter single objective optimization.

DE and CMA-ES for real parameter single objective optimization

In this section, the most popular methods for real parameter single objective optimization, CMA-ES and DE, are further introduced. Then, our idea is analyzed based on the features of CMA-ES and DE.

In a DE population, operators such as mutation, crossover, and selection are applied to individuals, i.e., target vectors. In the initial generation, the target vectors \(\vec x_{i,0} =(x_{1,i,0},x_{2,i,0},\ldots ,x_{D,i,0})\), where \(i = 1, \ldots, NP\), NP denotes the population size, and D denotes the dimensionality, are produced randomly. In a given generation g, mutant vectors \(\vec v_{i,g}\) are produced from target vectors \(\vec x_{i,g}\) by mutation. DE algorithms are compatible with different mutation strategies. Two of the popular ones, DE/rand/1 and DE/best/1, are presented in Eqs. (1) and (2), respectively:

\[ \vec v_{i,g} = \vec x_{r_1,g} + F \cdot (\vec x_{r_2,g} - \vec x_{r_3,g}) \tag{1} \]

\[ \vec v_{i,g} = \vec x_{best,g} + F \cdot (\vec x_{r_1,g} - \vec x_{r_2,g}) \tag{2} \]

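In the equations, \(r_1\), \(r_2\) and \(r_3\) are distinct integers randomly chosen from the range [1, NP] and different from i. F is the scaling factor. \(\vec {x}_{best,g}\) denotes the individual with the best fitness in generation g. After mutation, trial vectors \(\vec u_{i,g}=(u_{1,i,g}, u_{2,i,g},\ldots ,u_{D,i,g})\) are generated from \(\vec x_{i,g}\) and \(\vec v_{i,g}\) by crossover. With \(rand_j(0,1)\) denoting a uniform random number drawn anew for each component j, a widely used crossover strategy, binomial crossover, is

\[ u_{j,i,g} = \begin{cases} v_{j,i,g}, & \text{if } rand_j(0,1) \le Cr \text{ or } j = randn(i), \\ x_{j,i,g}, & \text{otherwise}, \end{cases} \tag{3} \]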

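where \(Cr\in [0,1]\) is the crossover rate, and randn(i) is an integer randomly generated from the range [1, D] to ensure that \(\vec {u}_{i,g}\) has at least one component from \(\vec {v}_{i,g}\). In DE, mutation and crossover together are referred to as the trial vector generation strategy. For selection, the operation is

\[ \vec x_{i,g+1} = \begin{cases} \vec u_{i,g}, & \text{if } f(\vec u_{i,g}) \le f(\vec x_{i,g}), \\ \vec x_{i,g}, & \text{otherwise}, \end{cases} \tag{4} \]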

where \(f(\vec {u}_{i,g})\) and \(f(\vec {x}_{i,g})\) represent fitness of \(\vec {u}_{i,g}\) and \(\vec {x}_{i,g}\), respectively.
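The three DE operators described in this section can be sketched in plain Python as below. This is an illustrative sketch for minimization rather than the code used in the experiments: the function names, the `sphere` test function, and the values of `F` and `Cr` in the usage lines are assumptions, indices are 0-based, and bound handling is omitted.

```python
import random
from typing import Callable, List, Sequence

Vector = List[float]


def mutate_rand_1(pop: Sequence[Vector], i: int, F: float, rng: random.Random) -> Vector:
    """DE/rand/1: x_r1 + F * (x_r2 - x_r3), with r1, r2, r3 distinct and != i."""
    r1, r2, r3 = rng.sample([k for k in range(len(pop)) if k != i], 3)
    return [a + F * (b - c) for a, b, c in zip(pop[r1], pop[r2], pop[r3])]


def mutate_best_1(pop: Sequence[Vector], i: int, F: float, best: int,
                  rng: random.Random) -> Vector:
    """DE/best/1: x_best + F * (x_r1 - x_r2), with r1, r2 distinct and != i."""
    r1, r2 = rng.sample([k for k in range(len(pop)) if k != i], 2)
    return [xb + F * (a - b) for xb, a, b in zip(pop[best], pop[r1], pop[r2])]


def binomial_crossover(x: Vector, v: Vector, Cr: float, rng: random.Random) -> Vector:
    """Take each component from v with probability Cr; index j_rand always comes from v."""
    D = len(x)
    j_rand = rng.randrange(D)  # guarantees at least one mutant component
    return [v[j] if (rng.random() <= Cr or j == j_rand) else x[j] for j in range(D)]


def de_generation(pop: Sequence[Vector], f: Callable[[Vector], float],
                  F: float, Cr: float, rng: random.Random) -> List[Vector]:
    """One generation: DE/rand/1 mutation, binomial crossover, greedy selection."""
    new_pop = []
    for i, x in enumerate(pop):
        u = binomial_crossover(x, mutate_rand_1(pop, i, F, rng), Cr, rng)
        new_pop.append(u if f(u) <= f(x) else x)  # the better of trial and target survives
    return new_pop


def sphere(x: Vector) -> float:
    return sum(c * c for c in x)


rng = random.Random(0)
population = [[rng.uniform(-5.0, 5.0) for _ in range(3)] for _ in range(8)]
next_population = de_generation(population, sphere, F=0.5, Cr=0.9, rng=rng)
```

Because selection is greedy, no individual in `next_population` is worse than the target vector it replaces, which is the property that offspring-surviving selection later gives up on purpose.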

In a CMA-ES population, the \((g+1)\)th generation is obtained from the gth generation as follows,

\[ \varvec{x}_{k}^{(g+1)} = \langle \varvec{x}\rangle _{\mu }^{(g)} + \sigma ^{(g)} \varvec{B}^{(g)} \varvec{D}^{(g)} \varvec{z}_{k}^{(g+1)}, \quad k = 1, \ldots , \lambda , \tag{5} \]

where

\[ \langle \varvec{x}\rangle _{\mu }^{(g)} = \frac{1}{\mu } \sum _{i \in I_{sel}^{(g)}} \varvec{x}_{i}^{(g)} \]

represents the center of mass of the selected individuals in the gth generation, \(\lambda\) is the number of offspring, and \(I_{sel}^{(g)}\) is the set of indices of the selected individuals with \(\left| I_{sel}^{(g)}\right| =\mu\). \(\sigma ^{(g)}\) is the global step size. The random vectors \(\varvec{z}_{k}\) in Eq. (5) are \(\mathcal {N}(\mathbf {0}, \varvec{I})\) distributed (n-dimensional normally distributed with expectation zero and the identity covariance matrix) and serve to generate offspring. We can calculate their center of mass as

\[ \langle \varvec{z}\rangle _{\mu }^{(g+1)} = \frac{1}{\mu } \sum _{i \in I_{sel}^{(g+1)}} \varvec{z}_{i}^{(g+1)}. \]

The covariance matrix \(\varvec{C}^{(g)}\) of the random vectors \(\varvec{B}^{(g)} \varvec{D}^{(g)} \varvec{z}_{k}^{(g+1)}\) is a symmetric positive definite \(n \times n\) matrix. The columns of the orthogonal matrix \(\varvec{B}^{(g)}\) represent normalized eigenvectors of the covariance matrix. \(\varvec{D}^{(g)}\) is a diagonal matrix whose elements are the square roots of the eigenvalues of \(\varvec{C}^{(g)}\). Hence, the relation of \(\varvec{B}^{(g)}\) and \(\varvec{D}^{(g)}\) to \(\varvec{C}^{(g)}\) can be expressed by

\[ \varvec{C}^{(g)} = \varvec{B}^{(g)} \varvec{D}^{(g)} \left( \varvec{B}^{(g)} \varvec{D}^{(g)}\right) ^{T} \quad \text{and} \quad \varvec{C}^{(g)} \varvec{b}_{i}^{(g)} = \left( d_{ii}^{(g)}\right) ^{2} \varvec{b}_{i}^{(g)}, \]

where \(\varvec{b}_{i}^{(g)}\) represents the i-th column of \(\varvec{B}^{(g)}\) with \(\left\| \varvec{b}_{i}^{(g)}\right\| =1\), and \(d_{ii}^{(g)}\) are the diagonal elements of \(\varvec{D}^{(g)}\). Surfaces of equal probability density of the random vectors \(\varvec{B}^{(g)} \varvec{D}^{(g)} \varvec{z}_{k}^{(g+1)} \sim \mathcal {N}\left( \mathbf {0}, \varvec{C}^{(g)}\right)\) are (hyper-)ellipsoids whose main axes correspond to the eigenvectors of the covariance matrix. The squared lengths of the axes are equal to the eigenvalues of the covariance matrix.
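A small two-dimensional example may make the roles of B, D, and C more concrete. The sketch below builds B as a rotation matrix and D as a diagonal matrix, forms C = B D (B D)^T, checks numerically that each column of B is an eigenvector of C with eigenvalue equal to the squared diagonal entry of D, and then samples offspring as mean + sigma * B D z with z drawn from N(0, I). All concrete numbers (rotation angle, axis lengths, mean, step size, number of offspring) and all function names are assumptions chosen for illustration, and the adaptation of C and sigma is not shown.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A: Matrix) -> Matrix:
    return [list(row) for row in zip(*A)]


def covariance_from(B: Matrix, D: Matrix) -> Matrix:
    """C = B D (B D)^T."""
    BD = matmul(B, D)
    return matmul(BD, transpose(BD))


def sample_offspring(mean: List[float], sigma: float, B: Matrix, D: Matrix,
                     lam: int, rng: random.Random) -> List[List[float]]:
    """Draw lam offspring x_k = mean + sigma * B D z_k with z_k ~ N(0, I)."""
    BD = matmul(B, D)
    n = len(mean)
    offspring = []
    for _ in range(lam):
        z = [rng.gauss(0.0, 1.0) for _ in range(n)]
        step = [sum(BD[i][j] * z[j] for j in range(n)) for i in range(n)]
        offspring.append([mean[i] + sigma * step[i] for i in range(n)])
    return offspring


theta = math.pi / 6  # hypothetical orientation of the search ellipsoid
B = [[math.cos(theta), -math.sin(theta)],
     [math.sin(theta), math.cos(theta)]]
D = [[2.0, 0.0], [0.0, 0.5]]  # square roots of the eigenvalues of C
C = covariance_from(B, D)

# Each column b_i of B satisfies C b_i = d_ii^2 * b_i.
for i in range(2):
    b = [B[0][i], B[1][i]]
    Cb = [C[r][0] * b[0] + C[r][1] * b[1] for r in range(2)]
    assert all(abs(Cb[r] - D[i][i] ** 2 * b[r]) < 1e-12 for r in range(2))

xs = sample_offspring([1.0, -1.0], sigma=0.3, B=B, D=D, lam=8, rng=random.Random(0))
```

In a full implementation B and D would be obtained from an eigendecomposition of the adapted C instead of being fixed by hand as they are here.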

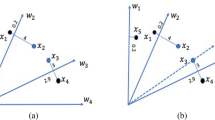

Both CMA-ES and DE are population-based. DE, however, consists of much simpler steps than CMA-ES. Hence, to resist stagnation, a modification based on DE is easier than one based on CMA-ES. According to our idea, when CMA-ES is trapped in stagnation, DE takes over the population. As a result, individuals are produced in a new manner. If the new individuals survive selection, the state of stagnation may be broken because the distribution of the population changes significantly. Details of our method are given in “Methods”.

Results and discussion

In our experiments, IR-CMA-ES is compared with nine population-based metaheuristics: L-SHADE5, UMOEAs-II7, jSO15, L-PalmDE16, HS-ES4, HARDDE17, NDE18, PaDE19, and CSDE20. Among these competitors, UMOEAs-II is based on both CMA-ES and DE, while HS-ES is based on CMA-ES. The other algorithms are all DE variants, because many more DE variants than CMA-ES variants have been proposed recently. Our experiments are based on the CEC 2014 and 2017 benchmark testing suites. The settings of the peers, taken from the literature, are shown in Table 1, where D denotes the dimensionality.

The termination criterion, the maximum number of fitness evaluations, is set to \(10{,}000 \cdot D\) in the experiments.
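As a quick worked example of this budget, the snippet below evaluates it for the three dimensionalities used in the experiments (30, 50, and 100). The function name is hypothetical.

```python
def max_fitness_evaluations(dimension: int, per_dimension: int = 10_000) -> int:
    """Evaluation budget that grows linearly with the dimensionality D."""
    return per_dimension * dimension


for D in (30, 50, 100):
    print(D, max_fitness_evaluations(D))
# prints:
# 30 300000
# 50 500000
# 100 1000000
```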

Results for the CEC 2014 benchmark testing suite

For \(D=30\), 50, and 100, the peers and our algorithm are each executed 30 times per function. The results are given in Tables 2, 3 and 4. When the dimensionality is 30, our algorithm loses to no peer on F9, F11, F14, and F24 of the CEC 2014 suite. We therefore give the convergence graphs of all the algorithms on these functions with 30 dimensions in Fig. 1.

It can be seen from Table 2 that, for functions with 30 dimensions, our algorithm performs significantly better than L-SHADE, UMOEAs-II, jSO, L-PalmDE, HS-ES, HARDDE, NDE, PaDE and CSDE in 12, 10, 10, 13, 13, 13, 19, 11, and 12 cases, respectively. Meanwhile, IR-CMA-ES statistically loses to these peers in 8, 10, 8, 6, 5, 8, 2, 8, and 8 cases, respectively. There is no significant difference in the other cases. In short, our algorithm shows better performance than L-SHADE, jSO, L-PalmDE, HS-ES, HARDDE, NDE, PaDE and CSDE, and is comparable to UMOEAs-II.

As shown in Table 3, for functions with 50 dimensions, our algorithm performs significantly better than L-SHADE, UMOEAs-II, jSO, L-PalmDE, HS-ES, HARDDE, NDE, PaDE and CSDE in 16, 13, 15, 17, 11, 16, 22, 16, and 15 cases, respectively. Meanwhile, IR-CMA-ES statistically loses to these peers in 8, 10, 11, 6, 5, 9, 5, 7, and 7 cases, respectively. There is no significant difference in the other cases. In short, our algorithm shows better performance than all the peers.

According to Table 4, for functions with 100 dimensions, our algorithm performs significantly better than L-SHADE, UMOEAs-II, jSO, L-PalmDE, HS-ES, HARDDE, NDE, PaDE and CSDE in 16, 10, 12, 17, 11, 15, 20, 17, and 14 cases, respectively. Meanwhile, IR-CMA-ES statistically loses to these peers in 8, 9, 10, 5, 6, 5, 3, 4, and 5 cases, respectively. There is no significant difference in the other cases. In short, our algorithm shows better performance than all the peers.

It can be seen from Fig. 1 that HS-ES, the algorithm mainly based on CMA-ES, stagnates very early. In contrast, the convergence curves of IR-CMA-ES look quite different from those of HS-ES.

Experiment based on the CEC 2017 benchmark testing suite

When \(D=30\), 50, and 100, the peers and our algorithm are executed 30 times for each function, respectively. The results are given in Tables 5, 6 and 7.

It can be seen from Table 5 that, for functions with 30 dimensions, our algorithm performs significantly better than L-SHADE, UMOEAs-II, jSO, L-PalmDE, HS-ES, HARDDE, NDE, PaDE and CSDE in 9, 8, 11, 11, 9, 13, 16, 7, and 9 cases, respectively. Meanwhile, IR-CMA-ES statistically loses to these peers in 6, 14, 9, 5, 6, 7, 4, 6, and 5 cases, respectively. There is no significant difference in the other cases. In short, our algorithm shows better performance than L-SHADE, jSO, L-PalmDE, HS-ES, HARDDE, NDE, PaDE and CSDE, but is defeated by UMOEAs-II.

As shown in Table 6, for functions with 50 dimensions, our algorithm performs significantly better than L-SHADE, UMOEAs-II, jSO, L-PalmDE, HS-ES, HARDDE, NDE, PaDE and CSDE in 14, 9, 14, 16, 8, 15, 21, 14, and 15 cases, respectively. Meanwhile, IR-CMA-ES statistically loses to these peers in 7, 9, 10, 5, 7, 8, 6, 5, and 7 cases, respectively. In short, our algorithm shows better performance than L-SHADE, jSO, L-PalmDE, HS-ES, HARDDE, NDE, PaDE and CSDE, and is comparable to UMOEAs-II.

According to Table 7, for functions with 100 dimensions, our algorithm performs significantly better than L-SHADE, UMOEAs-II, jSO, L-PalmDE, HS-ES, HARDDE, NDE, PaDE and CSDE in 16, 10, 13, 17, 10, 15, 21, 16, and 14 cases, respectively. Meanwhile, IR-CMA-ES statistically loses to these peers in 9, 9, 11, 7, 6, 6, 4, 5, and 5 cases, respectively. There is no significant difference in the other cases. In short, our algorithm shows better performance than all the peers.

Discussion

In IR-CMA-ES, DE with offspring-surviving selection is employed when stagnation is detected. In this way, the execution may escape from stagnation at the cost of a temporary deterioration in fitness, after which the population can be optimized further. As a result, among the peers that use CMA-ES, IR-CMA-ES performs better than HS-ES in all tested settings and is at least comparable to UMOEAs-II in all settings except the 30-dimensional CEC 2017 functions. Meanwhile, our algorithm performs better than the other peers.

Conclusion

In the field of real parameter single objective optimization, algorithms based on CMA-ES, such as UMOEAs-II and HS-ES, are competitive. Nevertheless, algorithms of this type stagnate much earlier than DE and need further improvement. If a measure to resist stagnation is applied in an algorithm based on CMA-ES, better performance can be obtained. In this paper, DE is used to resolve stagnation in CMA-ES. In IR-CMA-ES, stagnation is confirmed if the improvement ratio of the average fitness stays low for a number of successive generations. Then, DE with offspring-surviving selection is called. If a round of DE cannot resolve stagnation, one more round with more generations is executed. Our experiments are based on two CEC benchmark testing suites, in which our algorithm is compared with nine algorithms. Experimental results show that IR-CMA-ES performs competitively.

Our study shows that CMA-ES deserves more study for real parameter single objective optimization. In the future, we will try to propose more schemes to enhance CMA-ES. If stagnation in CMA-ES can be resisted better, great progress in real parameter single objective optimization may be made.

Methods

Population-based metaheuristics for real parameter single objective optimization, including DE and CMA-ES, tend to stagnate. Furthermore, compared with DE, CMA-ES often stagnates even earlier. In fact, the tendency towards stagnation in CMA-ES can be reversed by making changes to the operators. Here, we choose to resist stagnation in CMA-ES by implementing DE with offspring-surviving selection, because the operators of DE are much simpler to adapt than those of CMA-ES. In detail, we use Eq. (1) for mutation and Eq. (3) for crossover. More importantly, offspring-surviving selection

\[ \vec x_{i,g+1} = \vec u_{i,g} \]

is employed. That is, offspring are always selected, while parents are always eliminated from the population. In this way, the distribution of the population changes significantly. Although fitness may become worse after the change of distribution, the stagnation is broken. Then, CMA-ES is called again to search in a different region.
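One round of DE with offspring-surviving selection can be sketched as follows. The sketch assumes DE/rand/1 mutation and binomial crossover as named in this section, uses F = 1 and Cr = 0.5 as default arguments, and leaves out bound handling and the hand-over back to CMA-ES. The function name `redistribute` and the `seed` argument are hypothetical, and the population must hold at least four individuals.

```python
import random
from typing import List, Sequence

Vector = List[float]


def redistribute(pop: Sequence[Vector], generations: int, F: float = 1.0,
                 Cr: float = 0.5, seed: int = 0) -> List[Vector]:
    """Run DE for `generations` generations with offspring-surviving selection.

    No fitness function is needed: every trial vector replaces its parent
    unconditionally, so the population is redistributed, not improved.
    """
    rng = random.Random(seed)
    current = [list(x) for x in pop]  # work on a copy, leave the input intact
    NP, D = len(current), len(current[0])
    for _ in range(generations):
        offspring = []
        for i in range(NP):
            r1, r2, r3 = rng.sample([k for k in range(NP) if k != i], 3)
            j_rand = rng.randrange(D)  # at least one mutant component
            u = []
            for j in range(D):
                if rng.random() <= Cr or j == j_rand:
                    u.append(current[r1][j] + F * (current[r2][j] - current[r3][j]))
                else:
                    u.append(current[i][j])
            offspring.append(u)
        current = offspring  # parents are discarded without any comparison
    return current


stagnated = [[1.0 + 0.01 * i, 2.0 - 0.01 * i] for i in range(6)]
spread_out = redistribute(stagnated, generations=3)
```

Dropping the fitness comparison is what distinguishes this step from ordinary DE: the population is allowed to get worse so that its distribution changes before the search resumes.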

Our IR-CMA-ES is described in Algorithm 1.

Algorithm 1 follows the style used in ref. 21. The parameters of IR-CMA-ES are as follows. First, the parameters for CMA-ES are set according to ref. 2 and omitted here. Second, for DE with offspring-surviving selection, \(F=1\) and \(Cr=0.5\). Finally, the suggested values of the parameters related to our scheme are given in Table 8.

References

1. Storn, R. & Price, K. Differential evolution – a simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 11, 341–359 (1997).

2. Hansen, N., Müller, S. D. & Koumoutsakos, P. Reducing the time complexity of the derandomized evolution strategy with covariance matrix adaptation (CMA-ES). Evol. Comput. 11, 1–18 (2003).

3. Loshchilov, I. CMA-ES with restarts for solving CEC 2013 benchmark problems. In Proceedings of CEC, 369–376 (IEEE, 2013).

4. Zhang, G. & Shi, Y. Hybrid sampling evolution strategy for solving single objective bound constrained problems. In Proceedings of CEC, 1–7 (IEEE, 2018).

5. Tanabe, R. & Fukunaga, A. S. Improving the search performance of SHADE using linear population size reduction. In Proceedings of CEC, 1658–1665 (IEEE, 2014).

6. Awad, N. H., Ali, M. Z., Suganthan, P. N. & Reynolds, R. G. An ensemble sinusoidal parameter adaptation incorporated with L-SHADE for solving CEC2014 benchmark problems. In Proceedings of CEC, 2958–2965 (IEEE, 2016).

7. Elsayed, S., Hamza, N. & Sarker, R. Testing united multi-operator evolutionary algorithms-II on single objective optimization problems. In Proceedings of CEC, 2966–2973 (IEEE, 2016).

8. Škvorc, U., Eftimov, T. & Korošec, P. CEC real-parameter optimization competitions: Progress from 2013 to 2018. In Proceedings of CEC, 3126–3133 (IEEE, 2019).

9. Neri, F. & Cotta, C. Memetic algorithms and memetic computing optimization: A literature review. Swarm Evol. Comput. 2, 1–14 (2012).

10. Kämpf, J. H. & Robinson, D. A hybrid CMA-ES and HDE optimisation algorithm with application to solar energy potential. Appl. Soft Comput. 9, 738–745 (2009).

11. Ghosh, S., Roy, S., Islam, S. M., Das, S. & Suganthan, P. N. A differential covariance matrix adaptation evolutionary algorithm for global optimization. In 2011 IEEE Symposium on Differential Evolution (SDE), 1–8 (IEEE, 2011).

12. Ghosh, S., Das, S., Roy, S., Islam, S. M. & Suganthan, P. N. A differential covariance matrix adaptation evolutionary algorithm for real parameter optimization. Inf. Sci. 182, 199–219 (2012).

13. Caraffini, F., Iacca, G., Neri, F., Picinali, L. & Mininno, E. A CMA-ES super-fit scheme for the re-sampled inheritance search. In 2013 IEEE Congress on Evolutionary Computation, 1123–1130 (IEEE, 2013).

14. Caraffini, F. et al. Super-fit multicriteria adaptive differential evolution. In 2013 IEEE Congress on Evolutionary Computation, 1678–1685 (IEEE, 2013).

15. Brest, J., Maučec, M. S. & Bošković, B. Single objective real-parameter optimization: Algorithm jSO. In Proceedings of CEC, 1311–1318 (IEEE, 2017).

16. Meng, Z., Pan, J.-S. & Kong, L. Parameters with adaptive learning mechanism (PALM) for the enhancement of differential evolution. Knowl.-Based Syst. 141, 92–112 (2018).

17. Meng, Z. & Pan, J.-S. HARD-DE: Hierarchical archive based mutation strategy with depth information of evolution for the enhancement of differential evolution on numerical optimization. IEEE Access 7, 12832–12854 (2019).

18. Tian, M. & Gao, X. Differential evolution with neighborhood-based adaptive evolution mechanism for numerical optimization. Inf. Sci. 478, 422–448 (2019).

19. Meng, Z., Pan, J.-S. & Tseng, K.-K. PaDE: An enhanced differential evolution algorithm with novel control parameter adaptation schemes for numerical optimization. Knowl.-Based Syst. 168, 80–99 (2019).

20. Meng, Z., Zhong, Y. & Yang, C. CS-DE: Cooperative strategy based differential evolution with population diversity enhancement. Inf. Sci. 577, 663–696 (2021).

21. Wang, X., Li, C., Zhu, J. & Meng, Q. L-SHADE-E: Ensemble of two differential evolution algorithms originating from L-SHADE. Inf. Sci. 552, 201–219 (2021).

Acknowledgements

This work is supported by the Hubei Key Laboratory of Intelligent Geo-Information Processing.

Author information

Contributions

Z.C. conceived the experiments and conducted the experiments. Y.L. analysed the results. Both authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chen, Z., Liu, Y. Individuals redistribution based on differential evolution for covariance matrix adaptation evolution strategy. Sci Rep 12, 986 (2022). https://doi.org/10.1038/s41598-021-04549-1
