Abstract

Motivated by the problem of detection of cascades of defaults in economy, we developed a detection framework for an endogenous spreading based on causal motifs we define in this paper. We assume that the change of state of a vertex can be triggered either by an endogenous (related to the network) or an exogenous (unrelated to the network) event, that the underlying network is directed and that times when vertices changed their states are available. After simulating default cascades driven by different stochastic processes on different synthetic networks, we show that some of the smallest causal motifs can robustly detect endogenous spreading events. Finally, we apply the method to the data of defaults of Croatian companies and observe the time window in which an endogenous cascade was likely happening.

Similar content being viewed by others

Introduction

Dynamical processes in complex systems are most ubiquitously modeled using stochastic cellular automata and their continuous time analogue, interacting particle systems. These stochastic models are employed in descriptions of epidemics1,2,3, systemic risk in financial industry4,5, propagation of information in society6, queuing7 etc. In some of these systems, dynamics can lead to the formation of cascades like, for example, a fast evolving contagion or a series of company defaults. Detecting and modeling such events can be very important, as in the case of a new, previously unknown pathogen or for understanding what drives some default avalanche in the economy.

However, the possibility that a network related contact process seems relevant for the description of a certain complex system does not imply that the temporal data collected from such system supports its usage in modeling. For example—the lack of contact data, necessary to reconstruct the network supporting the spreading processes (pathways a new pathogen takes8,9 or the reasons for companies default10); the lack of some layers in a multiplex network that conduct the spreading process in the real system11,12,13, or simply no network contribution in the spreading—are some of the instances in which the modeling of the process is best done as a non-network field effect.

Therefore, the question we are addressing in this work is, whether the observed dynamics in the data can, in a justified manner, be explained through stochastic cellular automata on the available network—hence being endogenous with respect to the data, or it would be more appropriate to model it as a purely exogenous (field effect) influence.

We define an endogenous event (endogenous to the available data) to be the change of state of an object (vertex), which was caused by a change of the state of another object (vertex), through its directed relation (direct edge) to the first object. A cascade is then loosely defined as a series of connected endogenous events.

An exogenous event is defined to be a change of state of an object (vertex) that happened unrelated to the change of state of any other object (vertex) with some directed relation to the first object in the data.

Since the relations in the data are directed, we can use event times to distinguish between these two types of events; it is for certain that if the time \(t_1\) on the beginning point of the edge is larger than time \(t_2\) on the ending point \(t_1 > t_2\), there was no causal relationship between the two events. However, the opposite time ordering is a necessary but not a sufficient condition to prove causality. There we turn to a statistical verification: we define causal motifs that contain only potentially causal edges (\(t_1 < t_2\)) (fully listed in the Supplement) and compare their frequency with an ensemble of networks with randomized event times. A presence of an endogenous component of the process is expected to show different causal motif frequencies compared to a randomized case, unlike a process that is completely exogenous.

Therefore, we take the statistically significant difference between the motif frequency in the original process and in the randomized ensemble as the indication of the presence of an endogenous part of the dynamical process in the data.

The motivation for this research is directly related to economics, more concretely—a problem of default cascades14,15. Knowing what causes companies to fail to repay its debt, i.e. go into default, is crucial for every economy. Sometimes it can be related mainly to exogenous events like for instance loss of market access, loss of access to finance, monetary shocks etc. In other cases the defaults in the system (network) are mostly endogenous and are related to inability of different actors (vertices) to fulfill their obligation to their creditors. This type of events is common in financial systems that are not well regulated and it is believed to be a significant contributor to largest systemic events14,15,16 even in the well regulated systems.

Financial contagion on networks is usually modeled using balance sheets of nodes5, where different categories in the balance sheet insulate against financial distress. Kobayashi et al. in17 illustrates the equivalence of the balance sheet approach with the threshold model of cascades presented in18. In19 Acemoglu et al. explore network architecture contributing to or insulating against financial contagion between banks. On the other hand, very few papers exist that study transmission of financial shock between firms that utilize entire networks. Contagion is mostly studied through the lens of credit chains firstly outlined in20 as important channels for propagation of financial distress. Defaults on trade credit have been identified as a major cause of firms distress, as explicitly modelled in both21 on US data and22 on Swedish data. Acemoglu et.al. in23, as well as24 and25 aim to develop a network theory of production which would meaningfully model links between firms. Others, such as26 construct a business network from supply chain data. Contagion within production network is explored in27 where two types of bankruptcies exist: either by a random shock to revenues or costs, or when a creditor is not paid by debtor. However, a way to discern between these two types of defaults has not yet been developed. The economic motivation for this paper is to develop a basis for an empirical strategy which would allow us to distinguish from the data the existence of shocks transmitted from other nodes as opposed to field effects that affect node failure.

Here we investigate the methodology for the detection of endogenous propagation in financial networks, which was previously used in the investigation of Croatian company defaults without theoretical justification28. There, the problem was treated using randomized reference models (RRM)29 of a directed temporal network with the companies and their times of default on vertices, and their mutual debts on edges, pointed from debtor to creditor. In addition to single causal edges used there, we introduce causal motifs with two and three edges and the largest component of causal edges as variables we use for the test statistics, as we expect them to provide more information of the existence of cascades. We omit any other data like debt (edge weights) in order to make the method usable in as many situations as possible. This methodology should in principle be applicable to any spreading phenomena (with two states) on any directed network.

Network motifs have a long tradition of being used as a tool for inference in complex networks30. They are defined as small subgraphs that can be observed with different frequencies in the data. They were previously used to understand metabolic and other biological networks30,31,32, the properties of ecological system through food webs33, in economical setting to understand corporate governance34,35, and organization of knowledge in Wikipedia36. Motif analysis was also efficiently extended to temporal networks37.

RRM were also used in the temporal embedding38 and the inference of structures in communication networks39. For analysing collective behaviour in social networks40, the author used two-event motifs without causal ordering and also benchmarked the results to the RRM.

Another line of research relevant for this work is the inference of external and internal influence on the propagation of information. Previous works were mainly embedded in social networks and questioned if the influence of peers in a network is dominant to outside influences or not41,42,43. The direction of this line of research is mostly related to the estimation of parameters to proposed models of information spreading.

In order to validate our methodology, besides using the original data of company defaults, we create artificial data with parameters that control the exogenous and endogenous components. Using Kolmogorov-Smirnov test, z-score and Mahalanobis distance, we determine which type of motifs is the most successful test statistic. All the scripts used in this paper and the company default data are available in our Git-Hub repository or in the Supplementary information44. Since the parameter space needed for the evaluation of all the possible processes and networks is enormous we focus on two types of processes on relatively small networks for which we expected the results to be the least conclusive, therefore providing a lower bound for the significance of the results.

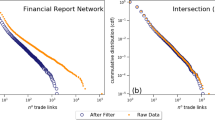

Data on defaults

The data is collected from the Croatian Financial Agency website, which publicly discloses all documents related to the Chapter 11 type bankruptcies, which involved debt renegotiation and restructuring in the Republic of Croatia. The orderly data consists of a list of debtors, an exhaustive list of their creditors, the starting time and the length of the pre-bankruptcy settlement and the amount of debt per creditor. It is turned into a temporal network representation where the institutions (creditors and debtors) are vertices, and the debts between debtors and creditors are edges. The starting times of the pre-bankruptcy settlements are attributed to the vertices that entered the settlement as debtors and are regarded as the time of default. The amount of debt and the length of the pre-bankruptcy settlement are not included, as our aim is to use the minimal possible information. A thorough description of data collection and network formation is provided in the Supplementary Information.

KS-test results for SI and VM processes on a network of \(N=1000\) vertices. The significant statistic \((p < 0.01)\) for a given percentage of default and \(\zeta\) is marked with the corresponding pattern. On x-axis we show the percentage of defaults in the network and on y-axis the average degree of the network.

Results

The final form of the results, after generating \(N_{graph}=100\) different graphs, simulating \(N_{process}=10\) processes on every graph, and creating \(N_{shuffle}=100\) randomized reference models for each process, are two distributions of the statistic counts. One of them is the distribution of a statistic recorded on the original process, and the other the distribution of the statistic on the networks that result from time-shuffling.

To quantify the difference between the distributions, we use the two-sample Kolmogorov-Smirnov test. For a more precise comparison between one instance of a process and its null distribution, we employ the z-score. Finally, to distinguish between the contributions of individual motifs within a class of motifs, we use the Mahalanobis distance, which is a generalization of the z-score.

SI process results. In panels (a) and (b) we present the fraction of one sided z-score results of one-edge motifs significant with \(p< 0.1\) (dark blue), depending on the percentage of defaults in the network (y-axis) and average degree of the network (x-axis), for an SI process with \(\zeta =1\) and \(\zeta =4\), respectively. In panels (c) and (d) we present in the same form the results of the Mahalanobis distance of two-edge motifs. For these figures, the number of vertices equals \(N=1000\), on each of 100 realized networks, 10 processes are simulated and 100 random shuffles are created. In panels (e) and (f) we fix the network default at \(25 \%\) and present the percentage of significant tests depending on the size of the network for (e) z-score and (f) Mahalanobis distance with \(\langle k\rangle =4\) and \(\zeta =10\). Horizontal lines represent the case in which \(75\%\) of simulations exhibit significant difference from the RRM-model.

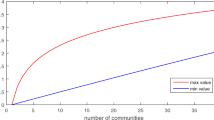

VM process results. In panels (a) and (b) we present the fraction of one sided z-score results of one-edge motifs significant with \(p< 0.1\) (dark blue), depending on the percentage of defaults in the network (y-axis) and average degree of the network (x-axis), for a VM process with \(\zeta =1\) and \(\zeta =4\), respectively. In panel (c) we present the results in the same form the results of the Mahalanobis distance of two-edge motifs with \(\zeta =1\). For these figures, the number of vertices equals \(N=1000\), on each of 100 realized networks, 10 processes are simulated and 100 random shuffles are created. In panel (d) we present the ratio of the number of nodes that defaulted through the exogenous process \(n_{\alpha }\) and the endogenous process \(n_{\beta }\), for different default percentages (\(\langle k\rangle =4\) and , \(\zeta =1\)). In panels (e) and (f) we fix the network default at \(25 \%\) and present the percentage of significant tests depending on the size of the network for (e) z-score and (f) Mahalanobis distance with \(\langle k\rangle =4\) and \(\zeta =10\). Horizontal lines represent the case in which \(75\%\) of simulations exhibit significant difference from the RRM-model44.

In the simulated processes, we quantify the exogenous component of the process with the parameter \(\alpha\)—which is equal to the probability per unit time that the vertex changes a state without any contributing effect from the network, and the endogenous component with the parameter \(\beta\)—which is related to probability that the change of state came from an immediate neighbour. The interpretation of the parameter \(\beta\) is process dependent and details are given in the “Methods” section. The ratio \(\zeta =\frac{\alpha }{\beta }\) between the rates of the two components is a control parameter that we use to quantify the endogeneity of a process, and we use it to present the results and interpret the validity of the method. For a single exogenous and a single endogenous process, it would represent the ratio of the changes of exogenously and endogenously defaulted vertices. However, as the exogenous default of every vertex is modeled with its own Poisson process with rate \(\alpha\), and the endogenous default of vertices with defaulted neighbours can be caused by transmissions through those edges, each with rate \(\beta\), the actual ratio (\(\Delta n_{\alpha }/\Delta n_{\beta }\)) will in general be different from \(\zeta\). As can be seen in the Supplement, it is related to the parameter \(\zeta\), but also to the total number of vertices, the mean degree of the network and the percentage of the network default.

We focus on small networks consisting of \(N=1000\) nodes (typical size of financial networks), they are small enough to allow for a comprehensive research and we later show that increasing the network size raises the significance of the detection substantially.

In the last section we show the results of applying the methodology to the real data of Croatian companies’ defaults.

Defaults of Croatian companies results. In (a) we present the frequency of one-edge motifs in all the data, compared to a RRM (1000 shuffled instances) histogram of the same statistic. In panel (b) we present the count of three-edge motifs (red points) compared to RRM realizations represented with blue points (mean) and a blue line (standard deviation) for different percentages of network default. In panels (c) (one-edge) (d) (two edge) and (f) (three edge) are the z-scores of the test statistics on data presented in previous figure. In panel (f) we show the two-edge Mahalanobis distance. Significant cases (\(p < 0.1\)) in the last four figures are colored dark blue.

SI model

The first model of a spreading process we investigate, is a variant of an SI model. As described in Methods, the results are interpreted using the two sample Kolmogorov–Smirnov test (Fig. 1), the z-score (Fig. 2) and the Mahalanobis distance (Fig. 3).

The distribution of simulated processes is compared to the respective randomized distribution, using KS-test, to infer if the distributions of realizations are different. If they are not, the individual realization can not be expected to give meaningful statistically significant signal. From Fig. 1, four left panels, we can see that the distributions of all investigated motifs are significantly different from our null model, except for the cases when almost the entire network is defaulted and the cases when the number of defaults is low. The samples with the largest values of \(\zeta\) (\(\zeta = 100\)) are significantly different for larger mean degrees and an intermediate range of the network default. For the largest values of \(\zeta\), one, two and three edge motifs provide significant difference in a bit larger part of the parameter space than the largest component.

Addressing the one-sided z-score results Fig. 2, one-edge motif statistics prevails for all simulated \(\zeta\) parameters if the network degree \(\langle k \rangle\) is 4 or less. Causal motif statistics always outperforms largest component statistics, by a large margin. Surprisingly, causal motifs of higher order with two and three edges show less significant results than one edge statistics, because even though they separate first moments of the two distributions better, they also introduce a larger variance. In Fig. 2a,b we present a fraction of simulated processes that were significantly different (\(p<0.1\)) from the null-model, and one can see how the increase of \(\zeta\) shrinks the area of satisfactory results. Unsurprisingly, with the increase of the system size we are able to find that our method can distinguish the existence of an endogenous process with statistical significance even in these extreme cases Fig. 2e.

The causal motif test statistics on both the original and randomized networks converge on average in time to a constant fraction of the total number of possible motifs. For one-edge motifs that fraction is 1/2 and for two-edge motifs 1/4 of their total number. On randomized networks, where time ordering of the process is completely destroyed, that kind of convergence is expected. Nevertheless, even with a strong endogenous driving, average numbers of causal motifs formed by an SI process converge in time to the same limits. If we observe a completely endogenous process of defaults until all vertices on the Erdős–Rényi network default, we can calculate the final number of causal one-edge motifs by summing the expected number of causal one-edge motifs in each step. The probability that one of the \(\langle k_{out} \rangle\) outgoing edges reaches a susceptible vertex equals to the fraction of susceptible vertices \(1-t/N\), since the Erdős–Rényi network is homogeneous and the SI process treats all out-neighbours as equal. We see then that the number of causal one edges is:

Therefore, the statistics of motif count at the end of process in the network is always doomed to fail for the SI process.

Furthermore, we use Mahalanobis distance to address the contributions of individual submotifs to the detection of the endogeneity of the process and present the results in Fig. 2c,d. The square of the Mahalanobis distance follows a chi-squared distribution, with the dimension of the submotifs being equal to the degrees of freedom. Thus, the Mahalanobis distance is in general greater or equal than the z-score of a given motif. For one-edge statistic it is equal to the absolute value of the two sided z-score, and it increases as the number of submotifs increases. In general we find that the one-edge z-score is a better statistic for low percentage of defaults in a network and for lower average degree, while two-edge Mahalanobis vastly outperforms for larger percentages of defaults and higher average degrees of the network. As we have shown previously the number of causal edges converges for SI model to the random limit, but the pattern of higher order causal motifs does not, and this is the case for using Mahalanobis distance for large defaults of the network. As before in Fig. 2f, one can see that the increase of the network size enables the detection of cascades even for extreme \(\zeta\) values.

Voter model

In the previous subsection we concluded that the properties of the Erdős–Rényi network and SI process make causal motif test statistics from both original and randomized process converge to the same limit. Therefore, in addition to the SI variant of the endogenous component of the process, we consider the voter model variant, which introduces scaling the number of incoming defaulted vertices with the number of all incoming vertices per vertex. It adds inhomogeneity in the defaulting process which allows the test statistic for the original process to have a different limit than for the random process, making the two more distinguishable.

KS-test results in Fig. 1 equivocally state that distribution of causal one-edge motifs in the case of endogenous voter model process significantly differs from purely exogenous distribution for broader range of parameters than the higher order motifs. In particular one edge statistics is superior for smaller percentages of defaults. Since the causal motif distributions do not converge to the same 100% default limit, as in the case of SI process, one-edge dominates for all the simulated mean degrees \(\langle k \rangle\).

Z-score results in Fig. 3a,b show a similar picture as for the SI endogenous component, except that for larger values of \(\zeta\) the results become less significant faster. For \(\zeta >1\) the voter model type of an endogenous process is harder to detect in the data compared to the SI type.

We conclude that the three-edge test statistic proves most robust at lower percentages of default for highly endogenous processes, while for all the other processes one-edge is the most successful test statistic, if we use the z-score.

The reason for this is again that the variance of the higher order motifs grows faster than the separation between the centers of the distributions.

We inspect the Mahalanobis distance and show the results of the comparison in Fig. 3c. We see that for \(\zeta = 1\) one-edge z-score statistic is better at lower percentages of default, while two-edge Mahalanobis statistic takes over for higher percentages, just like in the case of the SI type process. Larger values of \(\zeta\) do not show significant results.

The reason why the voter model process in the example above is harder to detect than SI is depicted in Fig. 3d. For the same values of \(\alpha\) and \(\beta\), less vertices defaulted endogenously for the VM model than for the SI model. Furthermore, because \(\beta\) is the rate of transition per individual edge connected to a defaulted vertex, and \(\alpha\) the rate of transition of each individual vertex, the actual ratio \(n_{\alpha }/n_{\beta }\) is in general different than the corresponding \(\zeta\), with their relation depending on the other parameters of the process and the network (Supplement). Towards the end of the process, for an SI process the value of the ratio is approximately \(n_{\alpha }/n_{\beta }\approx 2\) and, for a VM process \(n_{\alpha }/n_{\beta }\approx 3\), for the parameters reported in Fig. 3d. Therefore in the case of \(\zeta =1\), the number of actual causal defaults in the network is 2-3 times lower than the defaults related to field effect.

Defaults of Croatian companies

As we are trying to detect default cascades, we filter only the firms that can both have their own default caused endogenously, and spread the default into the network, that is, they have to be both debtors and creditors. Under that condition we are left with 549 firms, forming a network with 1198 edges (debts), a mean degree of 4.36 (mean out and in degree are 2.18), and maximal degree 60. Our method is then applied to the temporal network and the results are shown in Fig. 4.

Results for the completely defaulted network are shown in the form of histograms like in Fig. 4a, where the red line represents the one-edge causal statistic count on the real process, and the blue histogram is the null distribution created by RRM. Also the temporal evolution of the default process is shown in Fig. 4b for the example of three-edge causal motifs. We used all the available statistics and in Fig. 4c–f we show the significance of the scores for different default percentages in the network of companies. The method points to a possible default cascade happening in the early interval when \(10-15\%\) of the companies in data set defaulted and, much more significantly, to the later period when \(70-85\%\) of the network defaulted.

We can conclude that an endogenous propagation of defaults is borderline significant in this regions and this gives us confidence that a detailed analysis of balance sheets of companies as well as interviews with executives of companies that defaulted in that time period could reveal that there was a strong endogenous component of the defaults.

Discussion

Our aim was, given a set of data, to be able to determine from it whether the explanation of the observed default process requires the endogenous, network structure, or it is enough to model the dynamics as subject only to a field effect. We have developed a methodology which infers, from the data represented as a temporal network, whether there was a significant probability of a endogenous propagation of the default (contagion) or not, and we extensively tested limits of applicability of the method.

We tested the methodology on synthetic data, and were able to distinguish whether an endogenous component was present in the simulated process up to the value \(\zeta \approx 1\), for small networks of 1000 vertices. Using KS-test we have detected cases in which the distributions of statistics counts were significantly different and used z-score and Malanobis distance to distinguish between purely exogenous and exogenous-endogenous cases. In borderline cases, which are generally around \(\zeta \approx 1\) we show that power to distinguish processes depends not only on \(\zeta\) but also on percentage of defaults, average degree and size of the network. The border between the distinguishable and non-distinguishable range of parameters is complex and different for z-score and Mahalanobis distance. For large enough networks it is possible to distinguish processes even for a very large \(\zeta\). It is also important to stress that Mahalanobis in general performs either equivalent or better to z-score, in the case of SI, while z-score outperforms Mahalanobis distance in the case of VM.

Finally, we applied our method on the pre-bankruptcy settlement data of Croatian companies. Based on our analysis we can conclude that there probably was an endogenous propagation of defaults (prebankruptcy settlements) in the network, which is in agreement with previous research28 which used a more extensive dataset including the values of debts, assets of companies etc. Unfortunately, default cascades in economy are very different from other more studied cascade processes, like meme propagation, where it is easy to see who copied the meme from whom. The ground truth in economy necessary involves detective work of checking the numerous court filings, financial reports of the companies and interviews with employees. Our method points to which companies such endeavour should be focused to prove without any doubt that an endogenous cascade was really present.

We demonstrated that we can distinguish potential endogenous spreading even when using a minimal possible information, which was the aim of this paper.

A natural extension for the future work with respect to economic cascades would be adding the amounts of debt as weights on edges, as it is reasonable to assume that larger debts are more probable to serve for propagation of default than smaller debts. Also, the next refinement of the method could be a limit on the time that passes between the default of a debtor and the default of a creditor, since the probability that the debtor’s default was the cause of the creditor’s default decays with time that passes. Systematic inclusion of more and more information to distinguish between mechanisms of endogenous propagation up to a point of purely data driven mechanisms is a research direction for which the presented research is a good starting point.

In this paper we also did not enter into the question of different network classes like for instance correlated or scale-free networks45. Understanding the difference of detection in different classes would surely be of interest, especially since it is well known that they can have significant effects on contact processes45,46,47.

Furthermore as it is seen in this paper, sometimes it is impossible to recognize whether the endogenous process exists if the “noise” of the exogenous process is too strong. There should exist a fundamental phase transition between the detectable and the undetectable phase of epidemics, similar to the community detection detectability limit48. An analytical understanding of this limit is still challenging and would be of great importance for the researchers interested in the exogenous—endogenous interplay of interacting particle systems on complex networks.

Methods

Rationale

We devise our methodology to show the existence of a contact process component in a network process. In order to be able to observe a contact process, in data consisting of creditors and debtors there must exist debtors which are also creditors. Then we can assume two possible scenarios. In the first one, the default of that vertex is completely related to exogenous causes and the connection of the creditor and its debtor is irrelevant for the default of the creditor—perhaps the credit was too small to significantly influence the financial stability of the creditor, or there exists an institution that will repay the debtor’s obligations to the creditor. In the second scenario, the default of the debtor at time \(t_1\) causes the default of at least one of its creditors. This creditor is then present in the data as defaulted at the later time \(t_2\). One can observe that in the first scenario the default of the creditor and debtor can happen in any order, while in the second the order is clear \(t_1\) has to happen before \(t_2\).

Notice that the unknown default mechanisms for any of the cases can not change this very basic causal relationship. Whichever the true mechanism that causes vertices in the data to default, we expect that the pattern of timestamps on the network in which defaults are driven by a purely exogenous process is very different from the timestamp pattern generated by the default process that has an endogenous component. More precisely, the purely exogenous process should not depend on the timestamps that are present in the data, because in principle any vertex could have defaulted at any time compared to any other vertex. That means that permuting the timestamps of defaults creates a set of default events just as likely as the original set.

Counting causal motifs

Based on this observation we count the motifs30 with a purely causal structure on the network to verify if their count can be explained by a solely exogenous process. A directed edge pointing from i to j is associated with timestamps of defaults \(t_i\) and \(t_j\) of the vertices and we call this edge a causal edge if \(t_i<t_j\). We also define a causal motif as any motif with all causal edges. Examples of such causal motifs are presented in Supplementary Table S1. Our assumption is that such causal motifs are less common if the process is purely exogenous as compared with the mixed case in which both exogenous and endogenous dynamics exist. Therefore, our null-hypothesis states that the frequency of observed causal motifs is completely consistent with the purely exogenous default mechanism.

Ordered motifs49 were previously introduced in the case of food webs, and, unlike causal motifs, allow edges in the opposite direction of vertex ordering. Another recent related work uses process motifs50 as building blocks of dynamical systems operating on network. They are defined as small graphs composed of walks on them. Yet another usage of temporal motifs can be found in51, in which authors use similar concept to ours to detect anomalies in time series on networks.

In previous works motifs are classified by the number of vertices that comprise them. As every contact process uses edges for propagation, we choose a convention where causal motifs are ordered by the number of edges they consist of. All the causal motifs up to and including order 3 are depicted in Supplementary Table S1 of supplementary information.

Our methodology is based on counting the number of causal motifs of the given kind in the process data and comparing it to the count in the permuted data. A microcanonical RRM29 is used to create the permuted data, by shuffling the times on the defaulted vertices and keeping all the other network properties constrained. A distribution of motif counts obtained from the ensemble of realizations is then compared to the distribution one obtains from the real and simulated data.

As an alternative to motifs we also investigate the size of the largest causal component and compare it to the largest causal components drawn from RRM. The definition of this component is equivalent to the definition of the largest weakly connected component45 in the network in which all the edges except causal ones are deleted. While finding larger motifs than the one we investigate here is extremely tedious computational task, finding the largest component is easy. One can also think of the largest causal component as the largest causal motif one can find in the network and is therefore a limiting case for large causal motifs.

Measures used to compare data and RRM

In order to verify that there exists a possibility to distinguish between RRM and data, we perform a Kolmogorov-Smirnov test, which shows whether two distributions differ significantly or not. Only if we can find a significant difference in distributions, we could expect that the number of motifs generated by some individual process might be significantly different from the number of motifs generated by RRM.

The first measure we use is a usual z-score. The distribution of motif counts is very similar to a Gaussian distribution, thus making the z-score a good candidate for comparing the motif counts in data and RRM. In rare cases, where the number of motifs is very low, as in the beginning of the default process with extremely large \(\zeta\) and a low average degree, z-score is not applicable. For those parameter values, KS test also does not exhibit a significant difference between the distributions. Considering that the z-score statistic relies on the normality of distributions, we have performed a Shapiro-Wilk test on all the created distributions to check if they significantly differ from the normal distribution. We have found that for almost all parameters distributions the assumption of normality is not rejected, except for the case of very small default percentage (5%-10%) and for the case of largest component which is often non-normal, where it is rejected with \(p < 0.01\).

Furthermore, we separate the motifs within each order and compute the Mahalanobis distance as a generalization of the z-score, to test which motifs are better to be used for the endogenous process detection. In Supplementary Table S1, we can observe that the “train” motif (a sequence of causal edges) is the least probable to be created by chance (exogenous process) compared to other submotifs of the same order. We expect that this difference within the motifs of given order is more sensitive to the possible existence of endogenous process.

Therefore, for each count of causal motifs we create a vector \({\mathbf {C}}^{(i)}\) whose components represent the count of every type of submotifs of order i found in the network. The dimension of this vector is 1 for 1 edge motifs, 3 for two edge motifs and 9 for three edge motifs. After the reshuffling of times in the network, we compute Mahalanobis distance \(D^{(i)}\) of the causal motifs of order i given as:

where \(\mathbf {\mu }^{(i)}\) is average motif vector obtained in time reshuffled networks, and \(\Sigma _i\) is a covariance matrix of the vector of counts in reshuffled networks. In the case of diagonal covariance matrix, Mahalanobis distance is the square root of the sum of squares of z-scores of each vector component (individual causal motif). This property guarantees that Mahalanobis distance is always greater or equal to each of individual absolute z-scores, and it makes it more sensitive for the detection of endogenous propagation. The p value of Mahalanobis distance is computed using the method presented in52.

Simulating stochastic processes of defaults

We validate the methodology on data created by simulating default processes, with predetermined endogenous and exogenous contributions, on synthetic networks. Erdős-Rényi graphs are used as the underlying synthetic networks. We set the number of vertices N and the expected degrees \(\langle k_{in} \rangle\) and \(\langle k_{out} \rangle\) to generate an ensemble of \(N_{graph}\) directed networks. On each network a process of companies’ defaults, with exogenous and endogenous contributions determined by parameters \(\alpha\) and \(\beta\), is simulated \(N_{process}\) times, and a RRM containing \(N_{random}\) shuffled instances is created.

The simulated process is composed of two Poisson processes, used for sampling exogenous and endogenous default interevent times, with rates \(\alpha\) and \({\beta }_i = x_i \beta\), respectively. The endogenous rate is defined as a value common to the entire network \(\beta\), weighted by some inherent vertex property \(x_i\). Event times are therefore drawn from the exponential distribution with CDF:

The weight \(x_i\) will be set depending on the class of contact process used; SI type of process, as the simplest propagation process has \(x_i = 1, \forall i\), while voter model weighs the rate of a vertex i as \(x_i = 1/k_i^{in}\).

Therefore, the default times for both types of endogenous process components are obtained as:

and the simulation is performed using the event-driven algorithm3.

By changing the ratio \(\zeta := \alpha /\beta\) we control the endogenous component of the simulated process we use for the validation of our method. We stop the process after every 5 % of the original network’s vertices default and record the size of the largest component and the count of each motif. Then, we create an ensemble of time-shuffled networks and obtain distributions of the count of motifs and the size of the largest component.

Data availability

Data are available on Git-Hub which is listed in references44.

References

Anderson, R. M. & May, R. M. Population biology of infectious diseases: Part I. Nature 280, 361–367 (1979).

Anderson, R. M. & May, R. M. Infectious Diseases of Humans: Dynamics and Control (Oxford University Press, 1992).

Kiss, I. Z. et al. Mathematics of Epidemics on Networks Vol. 598 (Springer, 2017).

Battiston, S., Puliga, M., Kaushik, R., Tasca, P. & Caldarelli, G. Debtrank: Too central to fail? Financial networks, the fed and systemic risk. Sci. Rep. 2, 541 (2012).

Gai, P. & Kapadia, S. Contagion in financial networks. Proc. R. Soc. A Math. Phys. Eng. Sci. 466, 2401–2423 (2010).

Weng, L., Flammini, A., Vespignani, A. & Menczer, F. Competition among memes in a world with limited attention. Sci. Rep. 2, 335 (2012).

Kendall, D. G. Stochastic processes occurring in the theory of queues and their analysis by the method of the imbedded Markov chain. Ann. Math. Stat. 24(3), 338–354 (1953).

Volz, E. & Meyers, L. A. Susceptible-infected-recovered epidemics in dynamic contact networks. Proc. R. Soc. B Biol. Sci. 274, 2925–2934 (2007).

Blower, S. & Go, M.-H. The importance of including dynamic social networks when modeling epidemics of airborne infections: Does increasing complexity increase accuracy?. BMC Med. 9, 1–3 (2011).

Vodenska, I. & Becker, A. P. Interdependence, vulnerability and contagion in financial and economic networks. In New Perspectives and Challenges in Econophysics and Sociophysics (ed. Bask, M.) 101–116 (Springer, 2019).

Mucha, P. J., Richardson, T., Macon, K., Porter, M. A. & Onnela, J.-P. Community structure in time-dependent, multiscale, and multiplex networks. Science 328, 876–878 (2010).

Boccaletti, S. et al. The structure and dynamics of multilayer networks. Phys. Rep. 544, 1–122 (2014).

Cozzo, E., Ferraz de Arruda, G., Aparecido Rodrigues, F. & Moreno, Y. Multiplex Networks. SpringerBriefs in Complexity 1st edn. (Springer International Publishing, 2018).

Battiston, S., Gatti, D. D., Gallegati, M., Greenwald, B. & Stiglitz, J. E. Default cascades: When does risk diversification increase stability?. J. Financ. Stab. 8, 138–149 (2012).

Brummitt, C. D. & Kobayashi, T. Cascades in multiplex financial networks with debts of different seniority. Phys. Rev. E 91, 062813 (2015).

Zlatić, V., Gabbi, G. & Abraham, H. Reduction of systemic risk by means of Pigouvian taxation. PLoS One 10, e0114928 (2015).

Kobayashi, T. & Hasui, K. Efficient immunization strategies to prevent financial contagion. Sci. Rep. 4, 1–7 (2014).

Watts, D. J. A simple model of global cascades on random networks. Proc. Natl. Acad. Sci. 99, 5766–5771 (2002).

Acemoglu, D., Ozdaglar, A. & Tahbaz-Salehi, A. Systemic risk and stability in financial networks. Am. Econ. Rev. 105, 564–608 (2015).

Kiyotaki, N. & Moore, J. Credit Chains. Unpublished paper (London School of Economics, 1998).

Boissay, F. Credit Chains and the Propagation of Financial Distress (Technical Report, 2006).

Jacobson, T. & Von Schedvin, E. Trade credit and the propagation of corporate failure: An empirical analysis. Econometrica 83, 1315–1371 (2015).

Acemoglu, D., Carvalho, V. M., Ozdaglar, A. & Tahbaz-Salehi, A. The network origins of aggregate fluctuations. Econometrica 80, 1977–2016 (2012).

Hertzel, M. G., Li, Z., Officer, M. S. & Rodgers, K. J. Inter-firm linkages and the wealth effects of financial distress along the supply chain. J. Financ. Econ. 87, 374–387 (2008).

Oberfield, E. A theory of input–output architecture. Econometrica 86, 559–589 (2018).

Gao, J. The effects of firm network on banks’ portfolio consideration. Technical Report, Mimeo. Kelly School of Business, Indiana University (2015).

Battiston, S., Gatti, D. D., Gallegati, M., Greenwald, B. & Stiglitz, J. E. Credit chains and bankruptcy propagation in production networks. J. Econ. Dyn. Control 31, 2061–2084 (2007).

Košćak, S. et al. Hub think tank broj 3: Arhitektura mreže dugovanja hrvatskih tvrtki. https://www.hub.hr/hr/hub-think-tank-broj-3-arhitektura-mreze-dugovanja-hrvatskih-tvrtki (2015). https://www.hub.hr/hr/hub-think-tank-broj-3-arhitektura-mreze-dugovanja-hrvatskih-tvrtki. (Text is in Croatian).

Gauvin, L. et al. Randomized reference models for temporal networks. arXiv preprint arXiv:1806.04032 (2018).

Milo, R. et al. Network motifs: Simple building blocks of complex networks. Science 298, 824–827 (2002).

Shen-Orr, S. S., Milo, R., Mangan, S. & Alon, U. Network motifs in the transcriptional regulation network of Escherichia coli. Nat. Genet. 31, 64–68 (2002).

Alon, U. Network motifs: Theory and experimental approaches. Nat. Rev. Genet. 8, 450–461 (2007).

Ristl, K., Plitzko, S. J. & Drossel, B. Complex response of a food-web module to symmetric and asymmetric migration between several patches. J. Theor. Biol. 354, 54–59 (2014).

Ohnishi, T., Takayasu, H. & Takayasu, M. Network motifs in an inter-firm network. J. Econ. Interact. Coord. 5, 171–180 https://doi.org/10.1007/s11403-010-0066-6 (2010).

Takes, F. W., Kosters, W. A., Witte, B. & Heemskerk, E. M. Multiplex network motifs as building blocks of corporate networks. Appl. Netw. Sci. 3, 39 (2018).

Zlatić, V., Božičević, M., Štefančić, H. & Domazet, M. Wikipedias: Collaborative web-based encyclopedias as complex networks. Phys. Rev. E 74, 016115 (2006).

Paranjape, A., Benson, A. R. & Leskovec, J. Motifs in temporal networks. In Proceedings of the Tenth ACM International Conference on Web Search and Data Mining, 601–610 (2017).

Torricelli, M., Karsai, M. & Gauvin, L. weg2vec: Event embedding for temporal networks. Sci. Rep. 10, 1–11 (2020).

Lehmann, S. Fundamental structures in temporal communication networks. In Temporal Network Theory, 25–48 (Springer, 2019).

Mellor, A. Analysing collective behaviour in temporal networks using event graphs and temporal motifs. arXiv preprint arXiv:1801.10527 (2018).

Anagnostopoulos, A., Kumar, R. & Mahdian, M. Influence and correlation in social networks. In Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 7–15 (2008).

Piškorec, M., Antulov-Fantulin, N., Miholić, I., Šmuc, T. & Šikić, M. Modeling peer and external influence in online social networks: Case of 2013 referendum in croatia. In International Conference on Complex Networks and Their Applications, 1015–1027 (Springer, 2017).

Myers, S. A., Zhu, C. & Leskovec, J. Information diffusion and external influence in networks. In Proceedings of the 18th ACM SIGKDD international conference on Knowledge discovery and data mining, 33–41 (2012).

Barjašić, I., Štefančić, H., Pribičević, V. & Zlatić, V. Supplementary information. https://github.com/ibarjasi94/default_process. https://github.com/ibarjasi94/default_process.

Newman, M. E., Barabási, A. -L. E. & Watts, D. J. The Structure and Dynamics of Networks. (Princeton University Press, 2006).

Pastor-Satorras, R. & Vespignani, A. Epidemic spreading in scale-free networks. Phys. Rev. Lett. 86, 3200 (2001).

D’Agostino, G., Scala, A., Zlatić, V. & Caldarelli, G. Robustness and assortativity for diffusion-like processes in scale-free networks. EPL Europhys. Lett. 97, 68006 (2012).

Nadakuditi, R. R. & Newman, M. E. Graph spectra and the detectability of community structure in networks. Phys. Rev. Lett. 108, 188701 (2012).

Paulau, P. V., Feenders, C. & Blasius, B. Motif analysis in directed ordered networks and applications to food webs. Sci. Rep. 5, 11926 (2015).

Schwarze, A. C. & Porter, M. A. Motifs for processes on networks. arXiv preprint arXiv:2007.07447 (2020).

LaRock, T. et al. Hypa: Efficient detection of path anomalies in time series data on networks. In Proceedings of the 2020 SIAM International Conference on Data Mining, 460–468 (SIAM, 2020).

Elfadaly, F. G., Garthwaite, P. H. & Crawford, J. R. On point estimation of the abnormality of a Mahalanobis index. Comput. Stat. Data Anal. 99, 115–130 (2016).

Acknowledgements

VZ and IB acknowledge partial support form QuantiXLie Centre of Excellence, a Project co-financed by the Croatian Government and European Union through the European Regional Development Fund - the Competitiveness and Cohesion Operational Program (Grant KK.01.1.1.01.0004, element leader N.P.). HS and VZ had their research supported by the European Regional Development Fund under the Grant KK.01.1.1.01.0009 (DATACROSS). VZ also acknowledges the Croatian Science Foundation (HrZZ) Projects No. IP-2016-6-3347 and IP-2019-4-3321. Authors also want to express gratitude to Andrea Gabrielli and Ingo Scholtes for helpful discussions.

Author information

Authors and Affiliations

Contributions

V.Z. and H.S. conceived the problem, V.Z., H.S. and V.P. gathered the data, I.B. made all the simulations, All authors analysed the results. and reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Barjašić, I., Štefančić, H., Pribičević, V. et al. Causal motifs and existence of endogenous cascades in directed networks with application to company defaults. Sci Rep 11, 24028 (2021). https://doi.org/10.1038/s41598-021-02976-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-021-02976-8

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.