Abstract

A high-precision method for calibrating camera intrinsic parameters based on concentric circles was proposed. Unlike Zhang's method, it uses the centers of concentric circles as feature points. First, it was proved that the projection of the center of the concentric circles is collinear with the centers of the two ellipses imaged from the concentric circles. Subsequently, a straight line passing through the projected center was determined from four tangent lines of the concentric circles. Finally, the projection of the center of the concentric circles was extracted as the intersection of this line and the line through the two ellipse centers. Simulation and physical experiments were carried out to analyze the factors affecting the accuracy of circle center extraction, and the results show that the proposed method is more accurate than the compared methods. On this basis, several key parameters of the calibration target design were determined through simulation experiments, and the calibration target was then printed and used to calibrate a binocular system. Compared with Zhang's method, the total reprojection error is reduced by 17.66% for the left camera and by 21.58% for the right camera. Therefore, the proposed calibration method has higher accuracy.


Introduction

Accurate calibration of the intrinsic and extrinsic parameters of cameras is a principal problem in machine vision and has been widely explored in the field of industrial inspection. In camera calibration, it is of the highest importance to find a sufficiently large number of known 3D points in world coordinates and their projections in 2D images1. The accuracy of camera calibration depends largely on the localization of the image points and is usually evaluated by the reprojection error2,3,4, i.e., the distance between the observed image coordinates of a point and the coordinates obtained by reprojecting its known 3D position with the calibrated parameters.
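As a concrete illustration of how a reprojection error is typically summarized, the following minimal NumPy sketch assumes an ideal pinhole model without lens distortion; the function names are ours, and reporting the RMS over all points (plus per-axis RMS values) is one common convention rather than necessarily the one used in the tables below.

```python
import numpy as np

def project_points(K, R, t, X):
    """Project Nx3 world points to Nx2 pixel coordinates (ideal pinhole, no distortion)."""
    Xc = X @ R.T + t                  # world -> camera coordinates
    x = Xc @ K.T                      # camera -> homogeneous pixel coordinates
    return x[:, :2] / x[:, 2:3]

def rms_reprojection_error(observed, reprojected):
    """Root-mean-square Euclidean distance (pixels) between observed and reprojected points."""
    d = np.asarray(observed, float) - np.asarray(reprojected, float)
    return float(np.sqrt(np.mean(np.sum(d ** 2, axis=1))))

def per_axis_rms(observed, reprojected):
    """RMS error along the u- and v-axes separately."""
    d = np.asarray(observed, float) - np.asarray(reprojected, float)
    return np.sqrt(np.mean(d ** 2, axis=0))
```

In practice, `reprojected` comes from projecting the target points with the calibrated K, R and t, and `observed` holds the extracted feature coordinates.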

Most calibration approaches rely on high-precision or specially structured targets that are difficult to manufacture. According to the targets employed, calibration methods can be divided into 1D methods5,6,7,8,9, 2D methods10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26, 3D methods2,3,27,28,29,30,31 and approximate 3D methods32,33,34,35,36,37,38,39,40. The targets in 1D methods are usually calibration rods composed of several collinear points. Because such rods are easily captured by multiple cameras at the same time, 1D methods are often used for multi-camera calibration. However, the number of collinear points is small, so the calibration accuracy is usually not high. The targets in 2D methods are usually calibration boards with given patterns, such as circles16,17,18,19,20,21,22,23,24,25, checkerboards10,11,12,13,14,15 or other star-shaped patterns26. The targets in 3D methods are usually composed of two or more planes with known relative positions, which may help to obtain higher calibration accuracy but also leads to high manufacturing cost and a complex calibration process. Compared with 1D and 3D targets, 2D patterns are easy to design and provide enough feature points. Therefore, 2D methods are more accurate than 1D methods and simpler than 3D methods.

The checkerboard calibration method proposed by Zhang10 is one of the most representative 2D calibration methods; it requires at least four corners per image and at least three poses of the target. The initial values of the camera parameters are obtained in closed form from a linear model. Then, an objective function that accounts for nonlinear lens distortion is established, and maximum likelihood estimation is employed to refine the camera parameters. Zhang's method has high accuracy, and its results are often used as the ground truth. However, Zhang's method is very time-consuming for multi-camera systems, and its robustness is easily affected by the pose of the calibration plate.
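The closed-form (linear) step of Zhang's method can be sketched as follows. Each plane-to-image homography H = [h1 h2 h3] gives two linear constraints on the symmetric matrix B = K^{-T}K^{-1}; stacking them and taking the SVD null vector yields B. To keep the sketch short, K is then recovered from B by a Cholesky factorization instead of Zhang's explicit formulas (an equivalent shortcut that assumes the estimated B is positive definite). Homography estimation, lens distortion and the maximum likelihood refinement are omitted, and the function names are ours.

```python
import numpy as np

def _v(H, i, j):
    """Zhang's vector v_ij such that h_i^T B h_j = v_ij^T b (columns i, j of H)."""
    hi, hj = H[:, i], H[:, j]
    return np.array([hi[0] * hj[0],
                     hi[0] * hj[1] + hi[1] * hj[0],
                     hi[1] * hj[1],
                     hi[2] * hj[0] + hi[0] * hj[2],
                     hi[2] * hj[1] + hi[1] * hj[2],
                     hi[2] * hj[2]])

def intrinsics_from_homographies(Hs):
    """Linear (closed-form) estimate of K from >= 3 plane-to-image homographies."""
    rows = []
    for H in Hs:
        rows.append(_v(H, 0, 1))                  # h1^T B h2 = 0
        rows.append(_v(H, 0, 0) - _v(H, 1, 1))    # h1^T B h1 = h2^T B h2
    b = np.linalg.svd(np.array(rows))[2][-1]      # b = (B11, B12, B22, B13, B23, B33)
    B = np.array([[b[0], b[1], b[3]],
                  [b[1], b[2], b[4]],
                  [b[3], b[4], b[5]]])
    if B[0, 0] < 0:                               # b is only defined up to sign
        B = -B
    L = np.linalg.cholesky(B)                     # B = L L^T, and L^T is proportional to K^{-1}
    K = np.linalg.inv(L.T)
    return K / K[2, 2]
```

With noisy homographies this linear estimate is only an initial value; Zhang's method then refines it by nonlinear minimization of the reprojection error.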

To address these problems, several improvements to Zhang's method have been proposed. Qi et al.12 proposed an adaptive extraction and matching algorithm for checkerboard inner corners to avoid the manual operation and heavy time consumption of traditional calibration methods. Chung introduced a neural-network model into the checkerboard-based calibration method to compensate for lens distortion13. An illumination-robust subpixel Harris corner algorithm was proposed and used to improve the checkerboard-based calibration method, achieving high-precision calibration under complicated illumination conditions14. Liu et al.15 improved the checkerboard-based calibration method by adding more constraints, such as the adjacent distance constraint, the collinearity constraint and the right-angle constraint, to an objective function established in the 3D coordinate system. Corner detection is a crucial step for Zhang's method and its variants, and most of the above methods are devoted to improving the accuracy and robustness of corner extraction. However, corner detection still leaves room for improvement.

Because circles are common geometric features in industrial scenes, and circle detection algorithms are more robust than corner detection algorithms for slightly defocused images or images captured in low-light environments, circle patterns are commonly used in calibration targets. The camera parameters are calibrated by using the geometric properties and projective invariants of the circle. A calibration target may contain a single circle or multiple circles; in the latter case, the circles can have various positional relationships, such as separation, concentricity and tangency.

Zhao and Liu21 proposed a circle center extraction method based on the geometric characteristics of dual conics. Pairs of end points of diameters can then be determined and used to obtain pairs of vanishing points of orthogonal directions, from which the intrinsic parameters are calibrated on the basis of projective geometry. Liu et al. proposed an intrinsic parameter calibration algorithm using the conjugate imaginary intersection points of two ellipses projected from two coplanar intersecting circles22. Zhao et al.23 developed a plane target that consists of two concentric circles and straight lines passing through the center of the circles. Based on the invariance of the cross-ratio41, the coordinates of the center and of the vanishing points were obtained, and the camera intrinsic parameters were then calibrated according to the constraints between the image of the absolute conic and the vanishing points. Finally, the lens distortions were corrected by minimizing an objective function established mainly from collinearity constraints. Shao et al. proposed a concentric circle-based calibration method24 whose key step is to obtain the three eigenvectors of the concentric circle projection matrix. The eigenvectors corresponding to the two identical eigenvalues represent points on the line at infinity, and the remaining eigenvector is the circle center. The vanishing line of the light plane is then obtained from the image of the concentric circles. Wang et al. extended Pascal's theorem to the complex number field and accordingly calculated a pair of conjugate complex points, which are the images of the circular points. The equations relating the camera intrinsic parameters and the conjugate complex points were established on the basis of projective principles, and the camera intrinsic parameters were then calibrated directly25.

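
The cross-ratio idea mentioned above can be illustrated with a minimal sketch (our own construction, not necessarily the procedure of ref. 23). Along one diameter, the four intersections with c1 and c2 lie at known signed distances −r1, −r2, r2, r1 from the center. Because a 1D projective map preserves cross ratios, it can be fitted from these four correspondences and evaluated at 0 to obtain the image of the center. The function name and the assumed ordering of the input points are hypothetical.

```python
import numpy as np

def center_by_cross_ratio(img_pts, r1, r2):
    """Image of the common center from the four image points of one diameter.

    img_pts: 4x2 array, the images of the world points at signed distances
    (-r1, -r2, r2, r1) from the center, in that order (an assumed convention).
    """
    img_pts = np.asarray(img_pts, float)
    p0 = img_pts[0]
    d = img_pts[-1] - p0
    d = d / np.linalg.norm(d)                   # unit direction of the image line
    t = (img_pts - p0) @ d                      # 1D coordinates along the image line
    s = np.array([-r1, -r2, r2, r1], float)     # 1D coordinates along the diameter
    # 1D homography t = (a s + b) / (c s + e), i.e. a s + b - c s t - e t = 0
    A = np.column_stack([s, np.ones(4), -s * t, -t])
    _, b, _, e = np.linalg.svd(A)[2][-1]        # null vector (least squares if noisy)
    return p0 + (b / e) * d                     # map s = 0 (the center) back to the image
```

With exact data the four equations have a one-dimensional null space; with noisy points the SVD gives a least-squares fit of the 1D homography.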
Most of the above methods are based on projective geometry and use circular points, vanishing points and/or vanishing lines. Meng and Hu19 were the first to calibrate the camera intrinsic parameters using circular points, with a hub-and-spoke plane target containing six spokes. However, Li et al.26 pointed out that Meng and Hu's method has two major shortcomings. In these methods, a numerical method, such as Newton iteration25, must be employed to solve for the conjugate complex points. However, Newton iteration is strongly influenced by the initial value, and its computational burden is relatively large.

Different from the above circle-based calibration methods, Zhang et al.42 designed a 2.5D calibration target, which is a pyramid containing four coded calibration plates with circular features. Their method does not need complicated numerical calculations to extract the circle center coordinates: the coordinates of the ellipse center, computed as the mean of the coordinates of the pixels on the ellipse edge, are used directly as the coordinates of the image of the circle center. However, as proven by Ahn et al.43, using the ellipse center in place of the projected circle center introduces a systematic error. Liu et al.44 therefore proposed a circle center extraction method that uses a total of nine points to compensate for this error. However, the residual error is still large.

In this paper, we study a concentric circle center extraction method based on projective properties and geometric constraints, which needs only linear operations and no complex numerical calculation, in particular no nonlinear iteration. On this basis, a camera intrinsic parameter calibration method based on a concentric circle array is proposed.

The rest of this paper is organized as follows. The “Mathematical model for extracting center coordinates of concentric circles” section introduces a mathematical model for extracting the center coordinates of concentric circles. The “Circle center extraction experiment and result analysis” section presents the circle center extraction experiments and analyzes the results. Calibration target design, factor analysis, experimental results, comparisons and discussions are given in the “Concentric calibration template and its application” section, and conclusions are summarized in the “Conclusion” section.

Mathematical model for extracting center coordinates of concentric circles

Imaging model of circles

The general algebraic equation of an ellipse is

\[ ax^{2} + bxy + cy^{2} + dx + ey + f = \vec{X}\mathbf{Q}\vec{X}^{T} = 0, \tag{1} \]

where \(\mathbf{Q} = \begin{bmatrix} a & b/2 & d/2 \\ b/2 & c & e/2 \\ d/2 & e/2 & f \end{bmatrix}\) and \(\vec{X} = \begin{bmatrix} x & y & 1 \end{bmatrix}\).

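Then, the coordinates \((x_{e}, y_{e})\) of the center of the ellipse are

\[ x_{e} = \frac{be - 2cd}{4ac - b^{2}}, \qquad y_{e} = \frac{bd - 2ae}{4ac - b^{2}}. \tag{2} \]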

We establish a world coordinate system whose z-axis is perpendicular to the circle plane and whose origin is at the circle center; then, in the plane z = 0, with \(\vec{X} = \begin{bmatrix} x & y & 1 \end{bmatrix}\) now denoting world-plane coordinates, the circle equation is

\[ x^{2} + y^{2} - r^{2} = \vec{X}\mathbf{C}\vec{X}^{T} = 0, \tag{3} \]

where r is the radius of the circle and \(\mathbf{C} = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & -r^{2} \end{bmatrix}\).

We suppose the homography matrix and its inverse are H and Ω, respectively.

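According to the principle of projective geometry, Q and Ω satisfy the following relation:

\[ \mathbf{Q} = \lambda\,\boldsymbol{\Omega}^{T}\mathbf{C}\boldsymbol{\Omega}, \tag{5} \]

where λ is a nonzero scale factor.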

According to Eq. (5), we can rewrite Eq. (2) as follows.

where A, B, C, D, E and F are six quantities that depend only on the elements of matrix H and not on the radius r.

Two concentric circles with radii r1 and r2 are projected into two ellipses whose centers are (x1, y1) and (x2, y2), respectively. These two points determine a straight line in the image plane, whose slope k is

Because k does not depend on the radii, the centers of all ellipses projected from the concentric circles lie on the same straight line, regardless of the radii of the circles.

If r1 > r2 and r2 = 0, the smaller circle degenerates to its center, and Eq. (6) can be rewritten as

where (xc, yc) are the pixel coordinates of the projected circle center.

The slope k of the straight line determined by (x1, y1) and (xc, yc) is

This slope is the same as the one obtained above for (x1, y1) and (x2, y2), so the projected circle center lies on the line determined by (x1, y1) and (x2, y2), and this constraint can be used to extract its pixel coordinates.
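The independence from the radii can also be seen without the six quantities A to F. The short derivation below is our own and is written in column-vector form, with \(\mathbf{h}_{1}, \mathbf{h}_{2}, \mathbf{h}_{3}\) denoting the columns of H and \(h_{31}, h_{32}, h_{33}\) its last row (notation introduced here). It uses two standard facts: the center of a conic is the pole of the line at infinity, \(\tilde{\mathbf{x}}_{e} \simeq \mathbf{Q}^{-1}(0, 0, 1)^{T}\), and the image conic satisfies \(\mathbf{Q} \simeq \boldsymbol{\Omega}^{T}\mathbf{C}\boldsymbol{\Omega}\) with \(\boldsymbol{\Omega} = \mathbf{H}^{-1}\), so that \(\mathbf{Q}^{-1} \simeq \mathbf{H}\mathbf{C}^{-1}\mathbf{H}^{T}\) and \(\mathbf{C}^{-1} \simeq \operatorname{diag}(r^{2}, r^{2}, -1)\).

```latex
\begin{aligned}
\tilde{\mathbf{x}}_{e}(r)
  &\simeq \mathbf{H}\,\mathbf{C}^{-1}\mathbf{H}^{T}
     \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix}
   \simeq \mathbf{H}\,\operatorname{diag}\!\left(r^{2}, r^{2}, -1\right)
     \begin{bmatrix} h_{31} \\ h_{32} \\ h_{33} \end{bmatrix} \\
  &= r^{2}\,\underbrace{\left(h_{31}\mathbf{h}_{1} + h_{32}\mathbf{h}_{2}\right)}_{\mathbf{u}}
     \;-\; \underbrace{h_{33}\,\mathbf{h}_{3}}_{\mathbf{v}}
\end{aligned}
```

Since u and v do not depend on r, every ellipse center \(r^{2}\mathbf{u} - \mathbf{v}\) lies on the image line through u and v. Setting r = 0 leaves \(-\mathbf{v} \simeq \mathbf{h}_{3} = \mathbf{H}(0, 0, 1)^{T}\), the image of the circle center, which therefore lies on the same line. The sketch assumes \(h_{33} \neq 0\), i.e., the circle center does not project onto the vanishing line.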

Error of circle center extraction

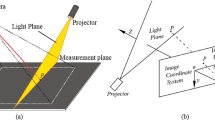

As shown in Fig. 1, o–xyz is the camera coordinate system, π1 is the space plane, and π2 is the image plane. A circle on π1 is imaged on π2 as an ellipse. AB is a diameter of the circle, and Aʹ and Bʹ are the images of A and B, respectively. The midpoint C of AB is the circle center, whose image is Cʹ, and the midpoint E of AʹBʹ is the ellipse center. When π1 and π2 are parallel, E and Cʹ coincide; otherwise, they generally do not, because perspective projection does not preserve midpoints, and using E in place of Cʹ leads to error.

A simulation is carried out to further clarify the error of circle center extraction. The camera intrinsic parameters are fx = fy = 3000, u0 = 320 and v0 = 240. The camera extrinsic parameters are α = 20°, β = 15°, γ = 5°, x0 = 20 mm, y0 = 20 mm and z0 = 600 mm. The radii of the circles range from 1 to 10 mm. For each circle, 120 equally spaced points are taken, and an ellipse is fitted to the projections of each group of 120 points, giving 10 ellipses, as shown in Fig. 2.

As shown in Fig. 3, the horizontal axis is δx = xc − xce and the vertical axis is δy = yc − yce, where (xce, yce) is the center of a fitted ellipse. The results confirm that (1) the ellipse centers are collinear and (2) as the radius increases, the offset between the ellipse center and the projection of the circle center also increases.
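The simulation above can be reproduced in outline with the following NumPy sketch. It is an illustration under assumptions, not the authors' code: the Euler-angle convention R = Rz(γ)Ry(β)Rx(α) is our guess, the points are noise-free, and each ellipse is fitted with a plain algebraic least-squares conic fit on normalized coordinates, followed by the standard conic-center formula. It returns the offsets (δx, δy) = (xc − xce, yc − yce) for each radius.

```python
import numpy as np

def rotation_xyz(alpha, beta, gamma):
    """R = Rz(gamma) @ Ry(beta) @ Rx(alpha); this Euler-angle convention is an assumption."""
    ca, sa = np.cos(alpha), np.sin(alpha)
    cb, sb = np.cos(beta), np.sin(beta)
    cg, sg = np.cos(gamma), np.sin(gamma)
    Rx = np.array([[1, 0, 0], [0, ca, -sa], [0, sa, ca]])
    Ry = np.array([[cb, 0, sb], [0, 1, 0], [-sb, 0, cb]])
    Rz = np.array([[cg, -sg, 0], [sg, cg, 0], [0, 0, 1]])
    return Rz @ Ry @ Rx

def project(K, R, t, X):
    """Ideal pinhole projection of Nx3 world points to Nx2 pixel coordinates."""
    x = (X @ R.T + t) @ K.T
    return x[:, :2] / x[:, 2:3]

def fitted_ellipse_center(uv):
    """Algebraic conic fit on normalized points, then the conic center."""
    m = uv.mean(axis=0)
    s = np.sqrt(np.mean(np.sum((uv - m) ** 2, axis=1)))   # RMS distance to the centroid
    x, y = ((uv - m) / s).T                               # normalization improves conditioning
    D = np.column_stack([x * x, x * y, y * y, x, y, np.ones_like(x)])
    a, b, c, d, e, _ = np.linalg.svd(D)[2][-1]            # a x^2 + b xy + c y^2 + d x + e y + f
    den = 4 * a * c - b * b
    center = np.array([b * e - 2 * c * d, b * d - 2 * a * e]) / den
    return m + s * center                                 # back to pixel coordinates

def center_offsets(K, R, t, radii, n_pts=120):
    """(delta_x, delta_y) = projected circle center minus fitted ellipse center, per radius."""
    theta = np.linspace(0.0, 2 * np.pi, n_pts, endpoint=False)
    projected_center = project(K, R, t, np.zeros((1, 3)))[0]
    offsets = []
    for r in radii:
        X = np.column_stack([r * np.cos(theta), r * np.sin(theta), np.zeros(n_pts)])
        offsets.append(projected_center - fitted_ellipse_center(project(K, R, t, X)))
    return np.array(offsets)
```

With the parameters listed above, the offset magnitude grows with the radius and all offset vectors are parallel, which is the collinearity visible in Fig. 3.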

Circle center extraction based on projective invariance

In projective geometry, a straight line remains a straight line after projection, and a point on a line projects onto the projected line. Tangency is also preserved: if a line is tangent to a circle, its projection is tangent to the projection of the circle. As shown in Fig. 4, line l6 intersects c1 at points p3 and p4 and c2 at points p5 and p6. l1 and l2 are the tangents of c1 at p3 and p4, and their intersection is p1; l4 and l5 are the tangents of c2 at p5 and p6, and their intersection is p2. l3 is the straight line through p1 and p2. By symmetry about the diameter perpendicular to l6, both p1 and p2 lie on that diameter, so l3 passes through the common center of c1 and c2.

The equations of c1 and c2 are

Let the homogeneous coordinates of \(p^{\prime}_{i}\) be \(\tilde{\mathbf{x}}_{i}\) (i = 3, 4, 5, 6). Then the equations of the tangent lines \(l^{\prime}_{1}\), \(l^{\prime}_{2}\), \(l^{\prime}_{4}\) and \(l^{\prime}_{5}\) are

\(p^{\prime}_{1}\) is the intersection of \(l^{\prime}_{1}\) and \(l^{\prime}_{2}\) and \(p^{\prime}_{2}\) is the intersection of \(l^{\prime}_{4}\) and \(l^{\prime}_{5}\). The homogeneous coordinates of \(p^{\prime}_{1}\) and \(p^{\prime}_{2}\) are

The linear equation through \(p^{\prime}_{1}\) and \(p^{\prime}_{2}\) is

The homogeneous coordinate of the projection of the circle center \(p^{\prime}_{8}\) is

where \(l^{\prime}_{7}\) is the straight line through the centers of ellipses e1 and e2, the images of c1 and c2.

Repeating this construction with several lines \(l^{\prime}_{6}\) gives multiple estimates of \(p^{\prime}_{8}\); the mean of their coordinates is taken as the projection of the circle center.
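The construction above can be sketched in NumPy as follows. This is our illustration under stated assumptions, not the authors' implementation: the image conics Q1 and Q2 of c1 and c2 are assumed to be already fitted and given in the symmetric matrix form Q defined earlier, lines are homogeneous 3-vectors (a, b, c) with ax + by + c = 0, and the helper names are hypothetical. Each line l'6 must cut both ellipses, must not pass through the projected center (otherwise p'1 and p'2 coincide), and should not make l'3 coincide with l'7.

```python
import numpy as np

def tangent_intersection(Q, line):
    """Cut conic Q with `line` (a, b, c) and intersect the tangents at the two cut points."""
    a, b, c = line
    n2 = a * a + b * b
    P = np.array([-a * c / n2, -b * c / n2, 1.0])    # point of the line closest to the origin
    D = np.array([-b, a, 0.0])                       # line direction (its point at infinity)
    # Points P + sD lie on the conic when A s^2 + B s + C = 0
    A, B, C = D @ Q @ D, 2.0 * (D @ Q @ P), P @ Q @ P
    disc = B * B - 4.0 * A * C
    if disc <= 0:
        raise ValueError("the line must cut the ellipse in two points")
    roots = (-B + np.array([1.0, -1.0]) * np.sqrt(disc)) / (2.0 * A)
    t1, t2 = (Q @ (P + s * D) for s in roots)        # tangent at a conic point x is Q x
    return np.cross(t1, t2)                          # homogeneous intersection of the tangents

def concentric_center(Q1, Q2, lines):
    """Projected center of two concentric circles imaged as conics Q1 (c1) and Q2 (c2)."""
    e1 = np.linalg.solve(Q1, [0.0, 0.0, 1.0])         # homogeneous ellipse centers
    e2 = np.linalg.solve(Q2, [0.0, 0.0, 1.0])
    c1, c2 = e1[:2] / e1[2], e2[:2] / e2[2]
    if np.allclose(c1, c2, rtol=0.0, atol=1e-9):      # fronto-parallel view: centers coincide
        return c1
    l7 = np.cross(e1, e2)                             # line l'7 through both ellipse centers
    estimates = []
    for l6 in lines:
        p1 = tangent_intersection(Q1, l6)             # p'1 from tangents l'1 and l'2
        p2 = tangent_intersection(Q2, l6)             # p'2 from tangents l'4 and l'5
        l3 = np.cross(p1, p2)                         # l'3 passes through the projected center
        p8 = np.cross(l3, l7)                         # p'8 = intersection of l'3 and l'7
        estimates.append(p8[:2] / p8[2])
    return np.mean(estimates, axis=0)                 # average over several lines l'6
```

Because the tangents at the two ends of a chord meet at the pole of that chord, `tangent_intersection(Q, l)` equals \(\mathbf{Q}^{-1}\mathbf{l}\) up to scale; the sketch keeps the explicit line-ellipse intersection only to mirror the steps in the text.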

Circle center extraction experiment and result analysis

Simulation experiment using equal-space points of circles

The camera intrinsic parameters remain unchanged. The camera extrinsic parameters are α = 0 rad, β = π/3 rad, γ = 0 rad, x0 = 20 mm, y0 = 20 mm and z0 = 600 mm. The radius of c2 ranges from 1 to 10 mm, and the radius of c1 is twice that of c2. A total of 120 equally spaced points are selected on each circle, and 20 ellipses are fitted. The pixel coordinates of the projection of the concentric circle center are extracted by the large-ellipse method, the small-ellipse method, the cross ratio method41, the nine-point method44 and our method.

As shown in Fig. 5, the errors of the above methods increase with increasing radius of the circle. The error of our method is the smallest, which is 0.135 pixels, and the error of the cross ratio method is the second smallest, which is 0.453 pixels. The error of the large ellipse method is the largest, which is 4.824 pixels. Compared with the errors of the cross ratio method and the large ellipse method, those of the proposed method are 48.12% and 71.72% smaller, respectively.

With the radius of c2 fixed at 1 mm and that of c1 at 2 mm, α = γ = 0 rad, and β varied from π/12 rad to π/3 rad, the influence of the camera extrinsic parameters on the extraction accuracy of the circle center pixel coordinates is shown in Fig. 6. The influence of Gaussian noise with μ = 0 and σ ranging from 0.1 to 1.0 is shown in Fig. 7.

According to Fig. 6, only the error of the nine-point method increases with β. When β = π/12 rad, the error of our method is the smallest, which is only 0.007 pixels, while the error of the cross ratio method is 0.168 pixels. The error of our method is approximately 94.64% lower than that of cross ratio method. When β = π/3 rad, the error of our method is still the smallest, which is 0.282 pixels, while the error of the cross ratio method is 0.168 pixels. Compared with the cross ratio invariant method, the error of our method is 37.74% smaller.

According to Fig. 7, when σ = 0.9, the error of our method is 0.065 pixels larger than that of the cross ratio method. Overall, however, our method is the least affected by noise, and its minimum error is 0.066 pixels, which is 67.60% less than that of the cross ratio method.

Projective transformation-based simulations

A concentric circle template was drawn with CAD software and then subjected to projective transformations, as shown in Fig. 8a. To simulate the gradual intensity transition at contour edges, Gaussian noise with μ = 0 and σ = 0.8 was added to the transformed image, which was then smoothed, as shown in Fig. 8b.

The radius of c2 is 1 mm, and that of c1 is 2 mm. The coordinates extracted by the cross ratio method, the nine-point method and our method are compared with the corner detection results in Table 1.

According to Table 1, the maximum error of the nine-point method is 36.9471 pixels, the minimum error is 0.3297 pixels, the average is 11.6565 pixels and the standard deviation is 10.9788 pixels. The maximum and minimum errors of the cross ratio method are 0.3385 pixels and 0.2113 pixels, and its average error and standard deviation are 0.3039 pixels and 0.0355 pixels, respectively. The maximum and minimum errors of our method are 0.3946 pixels and 0.0355 pixels, and its average error and standard deviation are 0.188 pixels and 0.107 pixels, respectively. Our method has the smallest average error, whereas the cross ratio method is more stable, with the smallest standard deviation.

Circular imaging experiment and results

A concentric circle template is printed to further verify the accuracy of coordinate extraction of our method, as shown in Fig. 9.

Because of its large error, the nine-point method is not considered here. Instead, the corner detection method, the cross ratio method, the small-ellipse method and our method are studied, and the results are shown in Fig. 10.

According to Fig. 10, compared with the corner method, the maximum error of the small-ellipse method is 28.211 pixels, the minimum error is 2.7074 pixels, the average error is 10.9562 pixels and the standard deviation is 7.5066 pixels. The maximum error of the cross ratio method is 4.8021 pixels, the minimum error is 0.7616 pixels, the average error is 2.2626 pixels and the standard deviation is 1.4764 pixels. The maximum and minimum errors of our method are 3.4 pixels and 0.5657 pixels, and the average error and standard deviation are 1.7491 pixels and 1.0533 pixels, respectively.

Therefore, the accuracy of our method is the highest. Compared with those of the cross ratio method, the maximum error, minimum error, average error and standard deviation of our method are 29.20%, 25.72%, 22.70% and 28.66% less, respectively. Compared with the small ellipse method, the accuracy of our method is higher and the maximum error, minimum error, average error and standard deviation are 87.95%, 79.11%, 84.04% and 85.97% less, respectively.
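The percentages above follow from the relative reduction (baseline − ours) / baseline × 100. A small check with the error statistics quoted in this subsection (the variable names are ours):

```python
def relative_reduction(baseline, ours):
    """Percentage by which `ours` is smaller than `baseline`, relative to the baseline."""
    return 100.0 * (baseline - ours) / baseline

# Error statistics in pixels, taken from the text (corner detection as the reference)
cross_ratio = {"max": 4.8021, "min": 0.7616, "mean": 2.2626, "std": 1.4764}
small_ellipse = {"max": 28.211, "min": 2.7074, "mean": 10.9562, "std": 7.5066}
proposed = {"max": 3.4, "min": 0.5657, "mean": 1.7491, "std": 1.0533}

vs_cross_ratio = {k: round(relative_reduction(cross_ratio[k], v), 2) for k, v in proposed.items()}
vs_small_ellipse = {k: round(relative_reduction(small_ellipse[k], v), 2) for k, v in proposed.items()}
```

The same function also reproduces the 17.66% and 21.58% reductions of the total reprojection error reported for the binocular experiment.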

To further examine its feature extraction performance, our method was compared with a geometric-based algorithm45 and an algebraic-based algorithm46, both of which have been used in recently published articles. The geometric-based algorithm uses the property that the polar lines intersect at the center of the circles to establish an objective function for extracting the center coordinates of the concentric circles. The algebraic-based algorithm obtains the center coordinates of the concentric circles by solving for an eigenvector of the ellipse coefficient matrices. The validation experiments were carried out on a set of ten images, and each algorithm was run five times on the images. The mean and standard deviation of the center coordinate extraction errors are listed in Table 2.
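The eigenvector idea behind such algebraic algorithms can be sketched as follows (our own minimal version for illustration, not necessarily the algorithm of ref. 46). If Q1 and Q2 are the image conics of two concentric circles, then \(\mathbf{Q}_1^{-1}\mathbf{Q}_2 = \mathbf{H}\operatorname{diag}(1, 1, r_2^2/r_1^2)\mathbf{H}^{-1}\) up to scale, so the eigenvector belonging to the non-repeated eigenvalue is \(\mathbf{H}(0, 0, 1)^{T}\), the projected center. With noisy conics the two repeated eigenvalues split slightly, so picking the most isolated eigenvalue is a heuristic assumption of this sketch.

```python
import numpy as np

def center_by_eigenvector(Q1, Q2):
    """Projected center of concentric circles from the eigenvectors of Q1^{-1} Q2."""
    w, V = np.linalg.eig(np.linalg.solve(Q1, Q2))
    w = w.real                                      # tiny imaginary parts may appear
    # The non-repeated eigenvalue is the one farthest from the other two
    gaps = [min(abs(w[i] - w[j]) for j in range(3) if j != i) for i in range(3)]
    x = V[:, int(np.argmax(gaps))].real
    return x[:2] / x[2]                             # dehomogenize to pixel coordinates
```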

According to Table 2, the feature extraction accuracy of the proposed method is higher than that of the other two methods. For the u-coordinates, the mean circle center extraction errors of the geometric-based algorithm, the algebraic-based algorithm and the proposed method are 5.5970, 1.4556 and 1.0957 pixels, respectively, i.e., 4.50 and 0.36 pixels larger for the two compared algorithms than for the proposed method; the standard deviations are 2.0573, 0.8268 and 0.6839 pixels, respectively, i.e., 1.37 and 0.14 pixels larger than that of the proposed method. For the v-coordinates, the means are 1.4809, 0.9615 and 0.9424 pixels, respectively, i.e., 0.54 and 0.02 pixels larger than that of the proposed method; the standard deviations are 1.1301, 0.6650 and 0.6260 pixels, respectively, i.e., 0.50 and 0.04 pixels larger than that of the proposed method.

Although the accuracy of our method is high, its accuracy and robustness can be further improved by better edge detection and by eliminating outliers to reduce the ellipse fitting error.

Concentric calibration template and its application

Simulation image generation and experiment

Simulation image generation

A rectangular calibration template consists of an array of concentric circles with m rows and n columns, with the origin located in the upper left corner. The center of the first concentric circle in the upper left corner is (x0, y0), and the center spacings along the x-axis and y-axis are Δx and Δy, respectively. Then, the center coordinates of the concentric circle in the ith column and jth row are

\[ x_{i} = x_{0} + (i - 1)\Delta x, \qquad y_{j} = y_{0} + (j - 1)\Delta y, \]

where \(2 \le i \le n\) and \(2 \le j \le m\).

Here, r1 is the radius of c1 and r2 is the radius of c2. The interval [r2, r1] is divided into m equal parts, and the interval [0, 2π] is divided into n equal parts. According to the pinhole camera model, the noninteger pixel coordinates of the projections of all discrete points are obtained by projective transformation; these coordinates are multiplied by K to create a K-times magnified image of the template. The magnified image is then downsampled by a factor of 1/K and smoothed with a mean filter to create the simulation image.
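A possible NumPy rendering of this procedure is sketched below. It is a hypothetical illustration, not the authors' generator: the annulus r2 ≤ r ≤ r1 is drawn dark on a white background, lens distortion is ignored, `mag` stands for the magnification K, and the downsampling and mean filtering are combined into a single K × K block average. `template_centers` implements the grid layout x0 + (i − 1)Δx, y0 + (j − 1)Δy. The sampling counts `n_r` and `n_theta` must be large enough that neighbouring projected points fall into adjacent magnified pixels; otherwise the rendered rings contain holes.

```python
import numpy as np

def template_centers(x0, y0, step_x, step_y, rows, cols):
    """Centers (x0 + (i - 1) * step_x, y0 + (j - 1) * step_y), listed row by row."""
    i, j = np.meshgrid(np.arange(cols), np.arange(rows))
    return np.column_stack([x0 + i.ravel() * step_x, y0 + j.ravel() * step_y])

def render_template(K_cam, R, t, centers, r1, r2, size_hw, mag=8, n_r=200, n_theta=2000):
    """Render dark annuli r2 <= r <= r1 around each center, supersampled by `mag`."""
    h, w = size_hw
    canvas = np.ones((h * mag, w * mag))                  # white magnified image
    rr, tt = np.meshgrid(np.linspace(r2, r1, n_r),
                         np.linspace(0.0, 2 * np.pi, n_theta, endpoint=False))
    for cx, cy in centers:
        X = np.column_stack([cx + (rr * np.cos(tt)).ravel(),
                             cy + (rr * np.sin(tt)).ravel(),
                             np.zeros(rr.size)])           # points on the template plane z = 0
        x = (X @ R.T + t) @ K_cam.T
        uv = x[:, :2] / x[:, 2:3]                         # noninteger pixel coordinates
        cols = np.floor(uv[:, 0] * mag).astype(int)       # cell in the magnified image
        rows = np.floor(uv[:, 1] * mag).astype(int)
        ok = (rows >= 0) & (rows < h * mag) & (cols >= 0) & (cols < w * mag)
        canvas[rows[ok], cols[ok]] = 0.0
    # Downsample by 1/mag: each output pixel is the mean of a mag x mag block
    return canvas.reshape(h, mag, w, mag).mean(axis=(1, 3))
```

For the camera used in the following simulations, fx = fy = f/dx = 8/0.00345 ≈ 2318.84 pixels, which can be placed in `K_cam` together with u0 and v0.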

Simulation experiment scheme

Here, the influences of the circle radius, the feature point interval, the number of images and the number of feature points on the calibration accuracy are analyzed in four groups of simulation experiments, with camera parameters f = 8 mm, dx = dy = 0.00345 mm (i.e., fx = fy = f/dx ≈ 2318.84 pixels), u0 = 1228.3554 pixels, v0 = 1028.2165 pixels, s = 0, k1 = 0.0823 and k2 = −0.02.

1. Circle radius: the calibration plate is 160 mm × 160 mm, the feature point interval is 14 mm, the number of images is 21, the radius ratio of c1 to c2 is 2, and the radius of c2 ranges from 1 to 5 mm.

2. Feature point interval: the radius of c2 is 3 mm, the interval ranges from 4 to 22 mm, and the other parameters remain unchanged.

3. Number of images: the number of images ranges from 3 to 39 in steps of 3, and the other parameters remain unchanged.

4. Number of feature points: the number of feature points ranges from 44 to 154 in steps of 22, and the other parameters remain unchanged.

Result of the simulation experiment

According to Fig. 11, when the radius of c2 is larger than 2 mm, the errors of the intrinsic parameters are more stable. The error of fx decreases with increasing radius; the minimum error of our method is 0.0047 and that of Zhang's method is 0.0042, so the two are basically the same. The maximum error of our method is 2.045 and that of Zhang's method is 2.164, so the error of our method is 5.50% less. The error of fy also decreases with increasing radius; the minimum error of our method is 0.0047 and that of Zhang's method is 0.0055, so the error of our method is 12.96% less. The maximum error of our method is 2.069 and that of Zhang's method is 2.166, so the error of our method is 4.48% less. For u0, the minimum error of our method is 0.115 pixels and that of Zhang's method is 0.055 pixels, which is 52.17% smaller; the maximum error of our method is 0.324 pixels and that of Zhang's method is 0.396 pixels, so the error of our method is 18.18% smaller. For v0, the minimum error of our method is 0.246 pixels and that of Zhang's method is 0.279 pixels, so the error of our method is 11.83% less; the maximum error of our method is 0.294 pixels and that of Zhang's method is 0.716 pixels, so the error of our method is 0.422 pixels less.

According to Fig. 12, the errors decrease as the interval between feature points increases. The minimum error of fx is 1.738 for our method and 0.094 for Zhang's method. The maximum error is 19.336 for our method and 30.882 for Zhang's method, so the error of our method is 37.39% smaller. The minimum error of fy is 1.783 for our method and 0.0069 for Zhang's method. The maximum error is 19.374 for our method and 30.262 for Zhang's method, so the error of our method is 35.98% smaller. The minimum error of u0 is 0.113 pixels for our method and 0.284 pixels for Zhang's method, so the minimum error of our method is about 40% of that of Zhang's method. The maximum error is 4.996 pixels for our method and 2.519 pixels for Zhang's method, so here the error of our method is larger. The minimum error of v0 is 0.048 pixels for our method and 0.217 pixels for Zhang's method, so the error of our method is 77.88% less. The maximum error is 1.177 pixels for our method and 5.939 pixels for Zhang's method, so the error of our method is 4.762 pixels less. In general, the errors of the camera intrinsic parameters are small when the interval exceeds 14 mm; therefore, an interval of 14 mm may be appropriate.

According to Fig. 13, when the number of images is between 12 and 30, the error of our method is less than that of Zhang's method. The error in fx decreases as the number of images increases. The minimum error of fx is 0.0052 for our method and 0.011 for Zhang's method, i.e., approximately half. The maximum error is 0.082 for our method and 0.101 for Zhang's method, so the error of our method is 18.81% less. The minimum error of fy is 0.0044 for our method and 0.0107 for Zhang's method, so the error of our method is 58.88% less. The maximum error is 0.087 for our method and 0.103 for Zhang's method, so the error of our method is 15.53% less, and the error is small when the number of images is between 18 and 30. The minimum error of u0 is 0.292 pixels for our method and 0.287 pixels for Zhang's method, so the error of our method is 1.74% larger. The maximum error is 0.322 pixels for our method and 0.311 pixels for Zhang's method, so the error of Zhang's method is 3.42% smaller. The minimum error of v0 is 0.285 pixels for our method and 0.265 pixels for Zhang's method, so the error of Zhang's method is 7.02% smaller. The maximum error is 0.312 pixels for our method and 0.302 pixels for Zhang's method, so the error of Zhang's method is 3.21% smaller. In general, the calibration error is small when the number of images is between 18 and 27; therefore, 21 images may be appropriate.

According to Fig. 14, the calibration error is small when the number of feature points is 88. The error of fx is 0.01 for our method and 0.02 for Zhang's method, i.e., approximately half. The error in fy is 0.0092 for our method and 0.0217 for Zhang's method, so the error of our method is 57.6% smaller. The error in u0 is 0.292 pixels for our method and 0.287 pixels for Zhang's method, so the result of our method is 1.7% larger. The error in v0 is 0.294 pixels for our method and 0.279 pixels for Zhang's method, so the error of Zhang's method is 5.1% smaller. Although the errors in u0 and v0 of our method are slightly larger than those of Zhang's method, the difference is at most 0.015 pixels. Therefore, we tentatively conclude that a better calibration result is achieved when the radius is 3 mm, the number of images is 21, the interval of feature points is 14 mm and the number of feature points is 88.

Reprojection error

Table 3 lists the reprojection errors of the two methods in the pixel coordinate system. According to Table 3, the reprojection error of our method is 0.26% less than that of Zhang's method along the u-axis and 16.41% less along the v-axis. This indicates that the calibration accuracy of our method is slightly higher than that of Zhang's method.

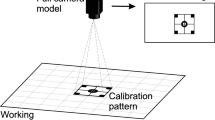

Binocular system calibration experiment

Based on the simulation results, a calibration board was made and used to calibrate a binocular vision system built with MV-EM510M/C cameras. The images of the calibration board captured by the left and right cameras are shown in Fig. 15.

The calibration results of the camera intrinsic parameters, including the distortion parameters, are shown in Table 4. The results of our method are basically consistent with those of Zhang's method, indicating that our method is stable and reliable. Although the two cameras are of the same model, their intrinsic parameters differ, so the parameters must be calibrated separately to ensure the measurement accuracy of the binocular system.

The reprojection errors of the two groups of experiments are calculated and shown in Table 5. In general, the proposed method achieves a lower reprojection error. The minimum reprojection error is 0.17913 pixels for the u-axis and 0.23204 pixels for the v-axis. However, in the second experiment, the u-axis reprojection error of the left camera obtained by Zhang's method is 2.55% lower than that of our method. The total reprojection errors of the left and right cameras are also calculated. For the left camera, the total reprojection error is 0.4037 pixels for our method and 0.4903 pixels for Zhang's method, a reduction of 17.66%. For the right camera, it is 0.3136 pixels for our method and 0.3999 pixels for Zhang's method, a reduction of 21.58%. These results show that the overall accuracy of the proposed method is higher; however, a few results show large deviations and scatter, which may have two causes:

1. Manufacturing errors in the printed calibration plate are unavoidable, such as the flatness error of the plate and the roundness error of the concentric circles.
2. Uniform illumination is difficult to guarantee, which reduces the consistency of image quality; the ellipse-fitting error therefore fluctuates, degrading the accuracy of circle center extraction.
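The second cause can be illustrated numerically. The sketch below fits a general conic to noisy points sampled from an ellipse by plain algebraic least squares (SVD of the design matrix after centering and scaling the points) and reports how far the fitted center drifts from the true center as edge noise grows. The fitting method, ellipse size, and noise levels are assumptions chosen for illustration; they are not the authors’ fitting procedure or measured noise, and the function names are hypothetical.

```python
import numpy as np


def fit_ellipse_center(x, y):
    """Fit a*u^2 + b*u*v + c*v^2 + d*u + e*v + f = 0 by algebraic least squares
    (an assumed method, not necessarily the authors') and return the center in pixels."""
    # Center and scale the points to keep the design matrix well conditioned.
    mx, my = x.mean(), y.mean()
    s = np.sqrt(np.mean((x - mx) ** 2 + (y - my) ** 2))
    u, v = (x - mx) / s, (y - my) / s
    D = np.column_stack([u * u, u * v, v * v, u, v, np.ones_like(u)])
    # Conic coefficients = right singular vector of the smallest singular value.
    a, b, c, d, e, f = np.linalg.svd(D, full_matrices=False)[2][-1]
    C = np.array([[a, b / 2, d / 2], [b / 2, c, e / 2], [d / 2, e / 2, f]])
    # The center is the pole of the line at infinity: C @ p is proportional to (0, 0, 1).
    p = np.linalg.solve(C, np.array([0.0, 0.0, 1.0]))
    return np.array([mx + s * p[0] / p[2], my + s * p[1] / p[2]])


def sample_ellipse(cx, cy, a, b, theta, n, sigma, rng):
    """n points on a rotated ellipse plus isotropic Gaussian noise (std sigma, pixels)."""
    t = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
    ex, ey = a * np.cos(t), b * np.sin(t)
    x = cx + np.cos(theta) * ex - np.sin(theta) * ey + rng.normal(0.0, sigma, n)
    y = cy + np.sin(theta) * ex + np.cos(theta) * ey + rng.normal(0.0, sigma, n)
    return x, y


rng = np.random.default_rng(0)
true_center = np.array([320.0, 240.0])
for sigma in (0.0, 0.1, 0.5, 1.0):
    errors = [
        np.linalg.norm(
            fit_ellipse_center(*sample_ellipse(320.0, 240.0, 60.0, 40.0, 0.3, 100, sigma, rng))
            - true_center
        )
        for _ in range(200)
    ]
    print(f"noise {sigma:.1f} px -> mean center error {np.mean(errors):.4f} px")
```

Because the center is derived from all fitted coefficients, any variation in edge quality (for example from uneven illumination) propagates directly into the extracted center.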

Conclusion

In this paper, a camera intrinsic parameter calibration method based on a concentric circle array was proposed that does not require corner extraction. The main work included the following: a high-precision method for extracting concentric circle centers based on projective properties and geometric constraints was proposed, methods for generating simulated images were explored, and corresponding simulation and physical experiments were carried out. The experimental results showed that, compared with Zhang’s method, the total reprojection errors of the left and right cameras were reduced by 17.66% and 21.58%, respectively. Therefore, the proposed calibration method achieves higher accuracy. The calibration accuracy is expected to improve further with more accurate edge detection and a precision-oriented design of the calibration target.
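The projective property behind the center-extraction method (stated in the Abstract) can be checked numerically. The sketch below images two concentric circles through a homography, computes the two ellipse centers as poles of the line at infinity, verifies that they are collinear with the true projected center, and then recovers that center as the intersection of the line through the ellipse centers with a second line through the center. The homography and radii are hypothetical, and the second line is simply the image of a known diameter rather than being constructed from four tangent lines as in the paper, so this illustrates the geometry only, not the authors’ full procedure.

```python
import numpy as np


def circle_conic(r):
    """Circle x^2 + y^2 = r^2 centered at the origin, as a 3x3 conic matrix."""
    return np.diag([1.0, 1.0, -r * r])


def image_conic(C, H):
    """Under the point mapping x' = H x, a conic C maps to H^{-T} C H^{-1}."""
    H_inv = np.linalg.inv(H)
    return H_inv.T @ C @ H_inv


def conic_center(C):
    """Center of a central conic: the pole of the line at infinity (0, 0, 1)."""
    p = np.linalg.solve(C, np.array([0.0, 0.0, 1.0]))
    return p / p[2]


# Hypothetical target-plane-to-image homography (not a calibrated camera from the paper).
H = np.array([[900.0, 40.0, 320.0],
              [-25.0, 880.0, 240.0],
              [0.8, 0.5, 1.0]])

c_true = H @ np.array([0.0, 0.0, 1.0])                # projection of the common center
c_true = c_true / c_true[2]
e1 = conic_center(image_conic(circle_conic(0.1), H))  # center of the inner ellipse
e2 = conic_center(image_conic(circle_conic(0.2), H))  # center of the outer ellipse

# Three homogeneous points are collinear iff the determinant of their stacked rows is zero.
collinear = np.linalg.det(np.vstack([c_true, e1, e2]))

# Line through the two ellipse centers; second line = image of the target's x-axis (y = 0),
# which also passes through the circle center (illustrative stand-in). Lines map as l' = H^{-T} l.
l_centers = np.cross(e1, e2)
l_axis = np.linalg.inv(H).T @ np.array([0.0, 1.0, 0.0])
c_est = np.cross(l_centers, l_axis)
c_est = c_est / c_est[2]

print("ellipse-center offsets (px):", e1[:2] - c_true[:2], e2[:2] - c_true[:2])
print("collinearity determinant:", collinear)
print("recovered center:", c_est[:2], "true:", c_true[:2])
```

The offsets show that neither ellipse center coincides with the projected circle center under perspective, while their connecting line still passes through it; this is why a second line through the center is needed to pin it down.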

Data availability

All data generated or analysed during this study are included in this published article.

References

Steger, C., Ulrich, M. & Wiedemann, C. Machine Vision Algorithms and Applications (Chinese translation, Tsinghua University Press, 2008).

Tsai, R. Y. A versatile camera calibration technique for high-accuracy 3D machine vision metrology using off-the-shelf TV cameras and lenses. IEEE J. Robot. Autom. 3(4), 323–344. https://doi.org/10.1109/JRA.1987.1087109 (1987).

Heikkila, J. Geometric camera calibration using circular control points. IEEE Trans. Pattern Anal. Mach. Intell. 22(10), 1066–1077. https://doi.org/10.1109/34.879788 (2000).

Lepetit, V., Moreno-Noguer, F. & Fua, P. EPnP: An accurate O(n) solution to the PnP problem. Int. J. Comput. Vis. 81(2), 155–166. https://doi.org/10.1007/s11263-008-0152-6 (2008).

Zhang, Z. Camera calibration with one-dimensional objects. IEEE Trans. Pattern Anal. Mach. Intell. 26(7), 892–899. https://doi.org/10.1109/TPAMI.2004.21 (2004).

Wu, F., Hu, Z. & Zhu, H. Camera calibration with moving one-dimensional objects. Pattern Recogn. 38(5), 755–765. https://doi.org/10.1016/j.patcog.2004.11.005 (2005).

Qi, F., Li, Q., Luo, Y. & Hu, D. Camera calibration with one-dimensional objects moving under gravity. Pattern Recogn. 40(1), 343–345. https://doi.org/10.1016/j.patcog.2006.06.029 (2007).

Geyer, C. & Daniilidis, K. Catadioptric camera calibration. In 7th IEEE International Conference on Computer Vision, Kerkyra, Greece, Vol. 1, 398–404 (1999). https://doi.org/10.1109/ICCV.1999.791248.

Barreto, J. P. & Araujo, H. Geometric properties of central catadioptric line images and their application in calibration. IEEE Trans. Pattern Anal. Mach. Intell. 27(8), 1327–1333. https://doi.org/10.1109/TPAMI.2005.163 (2005).

Zhang, Z. Flexible camera calibration by viewing a plane from unknown orientations. In Proceedings of the 7th IEEE International Conference on Computer Vision, Kerkyra, Greece, Vol. 1, 666–673 (1999). https://doi.org/10.1109/ICCV.1999.791289.

Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 22(11), 1330–1334. https://doi.org/10.1109/34.888718 (2000).

Bi, Q. et al. An automatic camera calibration method based on checkerboard. Trait. Signal 34(3–4), 209–226. https://doi.org/10.3166/ts.34.209-226 (2017).

Chung, B. M. Neural-network model for compensation of lens distortion in camera calibration. Int. J. Precis. Eng. Manuf. 19(7), 959–966. https://doi.org/10.1007/s12541-018-0113-0 (2018).

Li, J. P., Yang, Z. W., Huo, H. & Fang, T. Camera calibration method with checkerboard pattern under complicated illumination. J. Electron. Imaging 27(4), 11. https://doi.org/10.1117/1.jei.27.4.043038 (2018).

Liu, X. et al. Precise and robust binocular camera calibration based on multiple constraints. Appl. Opt. 57(18), 5130–5140. https://doi.org/10.1364/ao.57.005130 (2018).

Kim, J.-S., Gurdjos, P. & Kweon, I.-S. Geometric and algebraic constraints of projected concentric circles and their applications to camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 27(4), 637–642. https://doi.org/10.1109/TPAMI.2005.80 (2005).

Feng, G. Plane rectification using a circle and points from a single view. In 18th International Conference on Pattern Recognition, Hong Kong, China, Vol. 2, 9–12 (2006). https://doi.org/10.1109/ICPR.2006.936.

Ying, X. & Zha, H. Camera calibration from a circle and a coplanar point at infinity with applications to sports scenes analyses. In 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 220–225 (2007). https://doi.org/10.1109/IROS.2007.4399329.

Meng, X. & Hu, Z. A new easy camera calibration technique based on circular points. Pattern Recogn. 36(5), 1155–1164. https://doi.org/10.1016/S0031-3203(02)00225-X (2003).

Jiang, G. & Quan, L. Detection of concentric circles for camera calibration. In 10th IEEE International Conference on Computer Vision, Beijing, China, Vol. 1, 333–340 (2005). https://doi.org/10.1109/ICCV.2005.73.

Zhao, Z. & Liu, Y. Applications of projected circle centers in camera calibration. Mach. Vis. Appl. 21(3), 301–307. https://doi.org/10.1007/s00138-008-0162-y (2010).

Liu, Y., Liu, G., Gao, L., Chu, X. & Liu, C. Binocular vision calibration method based on coplanar intersecting circles. In 2017 10th International Congress on Image and Signal Processing, Biomedical Engineering and Informatics, Shanghai, China, 1–5 (2017).

Zhao, Y., Wang, X. C. & Yang, F. L. Method of camera calibration using concentric circles and lines through their centres. Adv. Multimed. https://doi.org/10.1155/2018/6182953 (2018).

Shao, M. W., Dong, J. Y. & Madessa, A. H. A new calibration method for line-structured light vision sensors based on concentric circle feature. J. Eur. Opt. Soc. Rapid Publ. 15, 11. https://doi.org/10.1186/s41476-019-0097-0 (2019).

Wang, X. C., Zhao, Y. & Yang, F. L. Camera calibration method based on Pascal’s theorem. Int. J. Adv. Robot. Syst. 16(3), 10. https://doi.org/10.1177/1729881419846406 (2019).

Li, G., Huang, X. & Li, S. G. A novel circular points-based self-calibration method for a camera’s intrinsic parameters using RANSAC. Meas. Sci. Technol. 30(5), 10. https://doi.org/10.1088/1361-6501/ab09c0 (2019).

Zhang, Z., Zhao, R. J., Liu, E. H., Yan, K. & Ma, Y. B. A single-image linear calibration method for camera. Measurement 130, 298–305. https://doi.org/10.1016/j.measurement.2018.07.085 (2018).

Liu, Z., Wu, S. N., Yin, Y. & Wu, J. B. Calibration of binocular vision sensors based on unknown-sized elliptical stripe images. Sensors 17(12), 17. https://doi.org/10.3390/s17122873 (2017).

Weng, J., Cohen, P. & Herniou, M. Camera calibration with distortion models and accuracy evaluation. IEEE Trans. Pattern Anal. Mach. Intell. 14(10), 965–980. https://doi.org/10.1109/34.159901 (1992).

Li, W. M., Shan, S. Y. & Liu, H. High-precision method of binocular camera calibration with a distortion model. Appl. Opt. 56(8), 2368–2377. https://doi.org/10.1364/ao.56.002368 (2017).

Zhao, Z. Q., Ye, D., Zhang, X., Chen, G. & Zhang, B. Improved direct linear transformation for parameter decoupling in camera calibration. Algorithms 9(2), 15. https://doi.org/10.3390/a9020031 (2016).

Teramoto, H. & Xu, G. Camera calibration by a single image of balls: From conics to the absolute conic. In 5th Asian Conference on Computer Vision, Melbourne, Australia, 499–506 (2002).

Ying, X. & Zha, H. Geometric interpretations of the relation between the image of the absolute conic and sphere images. IEEE Trans. Pattern Anal. Mach. Intell. 28(12), 2031–2036. https://doi.org/10.1109/TPAMI.2006.245 (2006).

Agrawal, M. & Davis, L. S. Complete camera calibration using spheres: A dual-space approach. Anal. Int. Math. J. Anal. Appl. 34(3), 257–282 (2007).

Zhang, H., Wong, K.-Y.K. & Zhang, G. Camera calibration from images of spheres. IEEE Trans. Pattern Anal. Mach. Intell. 29(3), 499–502. https://doi.org/10.1109/TPAMI.2007.45 (2007).

Wong, K.-Y.K., Zhang, G. & Chen, Z. A stratified approach for camera calibration using spheres. IEEE Trans. Image Process. 20(2), 305–316. https://doi.org/10.1109/TIP.2010.2063035 (2011).

Ruan, M. & Huber, D. Calibration of 3D sensors using a spherical target. In 2nd International Conference on 3D Vision, Tokyo, Japan, Vol. 1, 187–193 (2014). https://doi.org/10.1109/3DV.2014.100.

Sun, J., He, H. & Zeng, D. Global calibration of multiple cameras based on sphere targets. Sensors 16(1), 77–90. https://doi.org/10.3390/s16010077 (2016).

Liu, Z., Wu, Q., Wu, S. N. & Pan, X. Flexible and accurate camera calibration using grid spherical images. Opt. Express 25(13), 15268–15284. https://doi.org/10.1364/oe.25.015269 (2017).

Yang, F. L., Zhao, Y. & Wang, X. C. Calibration of camera intrinsic parameters based on the properties of the polar of circular points. Appl. Opt. 58(22), 5901–5909. https://doi.org/10.1364/ao.58.005901 (2019).

Xing, D., Da, F. & Zhang, H. Research and application of locating of circular target with high accuracy. Yi Qi Yi Biao Xue Bao/Chin. J. Sci. Instrum. 30(12), 2593–2598 (2009).

Zhang, J. et al. A robust and rapid camera calibration method by one captured image. IEEE Trans. Instrum. Meas. 68(10), 4112–4121. https://doi.org/10.1109/tim.2018.2884583 (2019).

Ahn, S. J., Warnecke, H. J. & Kotowski, R. Systematic geometric image measurement errors of circular object targets: Mathematical formulation and correction. Photogrammetric Record 16(93), 485–502. https://doi.org/10.1111/0031-868X.00138 (1999).

Liu, Z., Bai, R. & Wang, X. Accurate location of projected circular center in visual calibration. Laser Optoelectron. Prog. 52(9), 110–115. https://doi.org/10.3788/LOP52.091001 (2015).

Li, Y. & Yan, Y. A novel calibration method for active vision system based on array of concentric circles. Acta Electron. Sin. 49(3), 536–541. https://doi.org/10.12263/DZXB.20191033 (2021).

Bu, L., Huo, H., Liu, X. & Bu, F. Concentric circle grids for camera calibration with considering lens distortion. Opt. Lasers Eng. 140, 1–9. https://doi.org/10.1016/j.optlaseng.2020.106527 (2021).

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China (No. 51705238) and the Practice and Innovation Training Program for College Students of Jiangsu Province (No. 202011276003Z).

Author information

Contributions

Conceptualization, J.S. and F.H.; methodology, J.S. and J.S.; software, Y.H. and C.Z.; validation, J.S., J.S., Y.H. and C.Z.; data curation, C.Z.; writing—original draft preparation, J.S.; writing—review and editing, F.H.; supervision, F.H.; project administration, F.H.; funding acquisition, F.H. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hao, F., Su, J., Shi, J. et al. Conic tangents based high precision extraction method of concentric circle centers and its application in camera parameters calibration. Sci Rep 11, 20686 (2021). https://doi.org/10.1038/s41598-021-00300-y

DOI: https://doi.org/10.1038/s41598-021-00300-y
