Abstract

As the role played by statistical and computational sciences in climate and environmental modelling and prediction becomes more important, Machine Learning researchers are becoming more aware of the relevance of their work to help tackle the climate crisis. Indeed, being universal nonlinear function approximation tools, Machine Learning algorithms are efficient in analysing and modelling spatially and temporally variable environmental data. While Deep Learning models have proved to be able to capture spatial, temporal, and spatio-temporal dependencies through their automatic feature representation learning, the problem of the interpolation of continuous spatio-temporal fields measured on a set of irregular points in space is still under-investigated. To fill this gap, we introduce here a framework for spatio-temporal prediction of climate and environmental data using deep learning. Specifically, we show how spatio-temporal processes can be decomposed in terms of a sum of products of temporally referenced basis functions, and of stochastic spatial coefficients which can be spatially modelled and mapped on a regular grid, allowing the reconstruction of the complete spatio-temporal signal. Applications on two case studies based on simulated and real-world data will show the effectiveness of the proposed framework in modelling coherent spatio-temporal fields.

Similar content being viewed by others

Introduction

Data science plays a primary role in tackling climate and environmental challenges1,2. While the amount of observations from earth-observing satellites and in-situ weather monitoring stations keeps growing, climate modelling projects are generating huge quantities of simulated data as well. Analysing these data with physically-based models can be an extremely difficult task. Consequently, climate change researchers have become particularly interested in the role played by Machine Learning (ML) towards the advances of the state-of-the-art in climate modelling and prediction3. In parallel, ML researchers are becoming aware of the relevance of their work to help tackle the environmental and climate crisis4.

Environmental spatio-temporal data are usually characterized by spatial, temporal, and spatio-temporal correlations. Capturing these dependencies is an extremely important task. Deep Learning (DL) is a promising approach to tackle this challenge, especially because of its capability to automatically extract features both in the spatial domain (with Convolution Neural Networks (CNNs)) and in the temporal domain (with the recurrent structure of Recurrent Neural Networks (RNNs)). However, significant efforts must still be spent to adapt traditional DL techniques to solve environmental and climate-related spatio-temporal problems5.

Recent years have seen numerous attempts to use DL to solve environmental problems, such as precipitation modelling6,7 or extreme weather events8 and wind speed forecasting9. However, until now most of the efforts have been focused on transposing methodologies from computer vision to the study of climate or environmental raster data—i.e. measurements of continuous or discrete spatio-temporal fields at regularly fixed locations10. These data are generally coming from satellites for earth observation or from climate models outputs. Nevertheless, especially at the local or regional scales, there could be several reasons to prefer to work with direct ground measurements—i.e. measurements of continuous spatio-temporal fields on a set of irregular points in space—rather than with raster data11. Indeed, ground data are more precise than both satellite products and climate models output, generally with a higher temporal sampling frequency of phenomena and without missing data due to e.g. clouds. On the other hand, the direct adaptation of models coming from traditional DL is more complex in the case of spatio-temporal data collected over sparsely distributed measurement stations. In this case, the approaches discussed in literature to date only permit to perform modelling at the locations of the measurement stations and, up to our knowledge, no ML-based methodology has been proposed to solve spatio-temporal interpolation problems having as input spatially irregular ground measurements.

To tackle this gap, we propose an advanced methodological framework to reconstruct a spatio-temporal field on a regular grid using spatially irregularly distributed time series data. The spatio-temporal process of interest is described in terms of a sum of products between temporally referenced basis functions and corresponding spatially distributed coefficients. The latter are considered as stochastic and the problem of the estimation of the spatial coefficients is reformulated in terms of a set of regression problems based on spatial covariates, which are then learned jointly using a multiple output deep feedforward neural network. The proposed methodology permits to model non-stationary spatio-temporal processes.

Across two different experimental settings using both simulated and real-world data, we show that the proposed framework allows to reconstruct coherent spatio-temporal fields. The results have practical implications, as the methodology can be used to interpolate ground measurements of climate and environmental variables keeping into account the spatio-temporal dependencies present in the data.

The remainder of this paper is structured as follows. First, the literature related to our study is reviewed. Subsequently, the framework for the decomposition of spatio-temporal data and for the modelling of the obtained random spatial coefficients is introduced, elaborating on the technical details with respect to the regression models used in the experiments. The proposed methodology is then tested on synthetic and real-world case studies. Finally, results are discussed and future research directions are drawn.

Related works

In this section, we contextualize our methodological framework in relation to existing work in the field. Classical methods for spatio-temporal modelling include state-space models12 and Gaussian Processes based on spatio-temporal kernels13, including their applications in geostatistics14. The latter includes the widely adopted kriging models, which can encounter issues in correctly reproducing non-linear spatio-temporal behaviours, as in the case of complex climatic fields. Moreover, the use of kriging models assumes a deep knowledge of the dependence structure present in the data, to be applied to correctly specify its representation via the covariance function used while modelling15. Finally, in kriging the use of high number of covariates, despite being possible in what is generally referred to as co-kriging, is often complicated and has some very restrictive constraints. Traditional ML algorithms have also been used to solve spatio-temporal problems16. However, these techniques typically require human-engineered spatio-temporal features, while DL can automatically learn feature representations from the raw spatio-temporal data17.

The motivation for this study originates from recently proposed DL approaches to analyse spatio-temporal patterns. Geospatial problems have been studied with non-Euclideian spatial graphs via graph-CNNs18. Generative adversarial networks (GAN) have also been used together with local autocorrelation measures to improve the representation of spatial patterns19. Image inpainting techniques have been adapted to the imputation of missing values in gridded spatial climate datasets by reconstructing missing values using stacked partial convolutions and an automatic mask updating mechanism via transfer learning20. Still, this approaches did not consider the temporal dimension of the studied phenomena.

When the temporal dimension is considered and the data are collected as raster, the underlying spatio-temporal field can be modelled with techniques which already proved their effectiveness in extracting features from images and videos, like CNNs21, RNNs22 or with mixed approaches like in Convolutional Long Short-Term Memory networks7. Bayesian methods have also been proposed together with RNNs to quantify prediction uncertainties23.

Differently, few approaches have been proposed to model data collected at spatially irregular locations. Several studies investigated the ability of ML techniques to solve the problem of imputation of missing data in environmental time series24,25. Concerning forecasting problems, a common approach to take into account the correlation among the different measurement locations is to consider them as nodes in a graph, which can then be modelled using specific DL architectures26,27,28. The main limitation of such methodology is that prediction is only possible at the spatial locations of the measurement stations and not at any spatial location of potential interest.

Nonetheless, to the best of our knowledge, no study has been conducted on the possibility of performing interpolation of spatio-temporal data at any spatial location using DL, highlighting the novelty of the framework proposed in this paper.

Methods

As already discussed, when working with spatio-temporal phenomena it is difficult to realistically reproduce the spatial, temporal and spatio-temporal dependencies in the data. One way of keeping into account these dependencies is to adopt a basis function representation29. Numerous basis functions can be used to decompose spatio-temporal data, including complete global functions as with Fourier analysis, and non-orthogonal bases as with wavelets. Here we use the reduced-rank basis obtained through a principal component analysis (PCA), also known as an Empirical Orthogonal Functions (EOFs) decomposition in the fields of climatology, meteorology and oceanography30.

While in ML PCA is generally applied to reduce the dimensionality of the input space, here we use it on the output space—i.e. the spatio-temporal target variable we want to interpolate—in order to decompose the data into fixed temporal bases and their corresponding spatial coefficients. The latter are then considered as the target variable in a spatial regression problem, which can, in principle, be solved using any ML technique. More specifically, the framework that we propose for the interpolation of continuous spatio-temporal fields starting from measurements on a set of irregular points in space consist of the following steps. First, a basis function representation is used to extract fixed temporal bases from the spatio-temporal observations. Then, the stochastic spatial coefficients corresponding to each basis functions are modelled jointly at any desired spatial location with a DL regression technique. Finally, the spatio-temporal signal is recomposed, returning a spatio-temporal interpolation of the field.

In the following, we discuss details about the decomposition of the spatio-temporal signal using EOFs and about the structure of the regression problem to be solved to perform the spatial interpolation of the coefficients. We also provide the mathematical formulation of spatio-temporal semivariograms, which will be later used to empirically evaluate the quality of the prediction models.

Decomposition of spatio-temporal data using EOFs

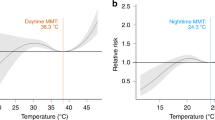

The architecture of the proposed framework. The temporal bases are extracted from a decomposition of the spatio-temporal signal using EOFs. Then, a fully connected neural network is used to learn the corresponding spatial coefficients. The full spatio-temporal field is recomposed following Eq. (1) and used for loss minimization.

Let us suppose that we have spatio-temporal observations \(\{ Z({\mathbf {s}}_i, t_j)\}\) at S spatial locations \(\{ {\mathbf {s}}_i : 1\le i \le S\}\) and T time-indices \(\{t_j : 1\le j \le T\}\). Let \({\tilde{Z}}({\mathbf {s}}_i, t_j)\) be the spatially centered data,

where

is the global mean at time \(t_j\).

The centred data \({\tilde{Z}}({\mathbf {s}}_i, t_j)\) can be represented with a discrete temporal orthonormal basis \(\{ \phi _k (t_j)\}_{k=1}^K\), i.e.

such that

where \(\alpha _k({\mathbf {s}}_i)\) is the coefficient with respect to the k-th basis function \(\phi _k\) at spatial location \({\mathbf {s}}_i\), and \(K = \min \{ T, S-1 \}\). Notice that the scalar coefficient \(\alpha _k({\mathbf {s}}_i)\) only depends on the location and not on time, while the temporal basis function \(\phi _k(t_j)\) is independent of space. The decomposition (1) is theoretically justified by the Karhunen-Loève expansion31, which is based on Mercer’s theorem32.

The basis computation is related to the spectral decomposition of empirical temporal covariance matrix. However, a singular value decomposition (SVD) is more efficient in practice. Furthermore, the SVD performed on \((S-1)^{-\frac{1}{2}} \cdot {\tilde{Z}}({\mathbf {s}}_i, t_j)\) yields directly the normalized spatial coefficients29.

Practically, EOFs computation is equivalent to a PCA33,34,35, where time-indices are considered as variables and the realizations of those variables correspond to the spatial realizations of the phenomenon. As in classical PCA, the relative variance of each basis is given by the square of the SVD singular values. Therefore, in case of a reconstruction with a truncated number \({\tilde{K}} \le K\) of components, the decomposition (1) compresses the spatio-temporal data and reduces its noise.

Modeling of the coefficients

The—potentially truncated—EOFs decomposition returns for each spatial location \({\mathbf {s}}_i\), corresponding to the original observations, \({\tilde{K}}\) random coefficients \(\alpha _k({\mathbf {s}}_i)\). These coefficients can be spatially modelled and mapped on a regular grid solving an interpolation/regression task.

To demonstrate the effectiveness of the proposed approach, we will model the coefficients using a deep feedforward fully connected neural network36. The structure of the network is described in Fig.1. Spatial covariates are used as inputs for the neural network having a first auxiliary output layer where the spatial coefficients are modelled. A recomposition layer will then use the \({\tilde{K}}\) modelled coefficients and the temporal bases \(\phi _k\) resulting from the EOFs decomposition in order to reconstruct the final output—i.e. the spatio-temporal field—following Eq. (1).

The described network has multiple inputs, namely the spatial covariates—which flow through the full stack of layers—and the temporal bases directly connected to the output layer. It also has multiple outputs, namely the spatial coefficients for each basis, all modelled jointly, and the output signal. While the network is trained by minimizing the loss function on the final output, having as auxiliary output the spatial coefficients maps ensures a better explainability of the model, which is of primary importance in earth and climate sciences.

It has to be highlighted that instead of the proposed structure, any other traditional ML algorithm could have been used to model the spatial coefficients as a standard regression problem, indicating a rather interesting flexibility of the proposed framework. However, the proposed DL approach has two main advantages. The first is that most classical ML regression algorithms cannot handle multiple outputs, and thus one would have to fit separate models for each coefficient map without being able to take advantage of the similarities between the tasks. The second advantage is that our proposed DL approach minimizes the loss directly on the final prediction target, i.e. the reconstructed spatio-temporal field of interest. Differently, when using single output models, each of these is separately trained to minimize a loss computed on the spatial coefficients. This should, in principle, lead to a lower performance than with the proposed DL approach minimizing directly the error computed on the full reconstructed spatio-temporal signal.

Spatio-temporal semivariograms

Semivariograms can be used to describe the spatio-temporal correlation structures of a dataset. The (isotropic) empirical semivariogram is given by29,37

where \(N_s(h)\) is the set of all location pairs separated by a Euclidean distance of h within some tolerance, \(N_t(\tau )\) is the set of all time points separated by a temporal lag of \(\tau\) within some tolerance and \(\#\) denotes the cardinality of a set.

In this study, variography is used to understand the quality and the quantity of spatio-temporal dependences extracted by the model from the original data through a residuals analysis38. The semivariograms are numerically computed with the gstat R library39,40.

Results

The proposed methodology has been applied on two different datasets: a simulated spatio-temporal field, and a real-world dataset of temperature measurements. For both applications we will first introduce the dataset. Data generation/collection as well as the approaches used to allocate training, testing and validation sets are described. Then, the main results from the spatio-temporal prediction approach are presented and discussed.

The spatial coefficient maps are modelled using a fully connected feedforward neural network. The network has been implemented in Tensorflow41. For both experiments the following configuration has been adopted. Kernel initializer: He initialization; Activation function: ELU; Regularization: Early Stopping. Optimizer: Nadam; Learning Rate scheduler: 1Cycle scheduling; Number of Hidden Layers: 6; Neurons per layer: 100; Loss function: Mean Absolute Error. Batch Normalization has been used in the real world case study.

Experiment on simulated dataset

To produce realistic 2-dimensional spatial patterns, 20 Gaussian random fields with Gaussian kernel are simulated from the RandomFields R package42 and noted \(X_k({\mathbf {s}}), k = 1, \dots , 20\). Time series \(Y_k(t_j)\) of length \(T = 1080\), for \(k = 1, \dots , 20\), are generated using an order 1 autoregressive model. Then, the simulated spatio-temporal dataset is obtained as a linear combination of the spatial random fields \(X_k({\mathbf {s}})\), where \(Y_k(t_j)\) plays the role of the coefficients at time \(t_j\), i.e.

where \(\varepsilon\) is a noise term generated from a Gaussian distribution having zero mean and standard deviation equal to the 10% of the standard deviation of the noise-free field. Spatial points \({\mathbf {s}}_i\), \(i=1, \dots , S=2000\) are sampled uniformly on a regular 2-dimensional spatial grid of size \(139\times 88\), which will constitute the training locations. The spatio-temporal training set \(\{ Z({\mathbf {s}}_i, t_j)\}\) is generated by evaluating the sequence of fields (2) at the training locations. Spatio-temporal validation and testing sets are generated analogously from 1000 randomly selected locations each.

The training and validation sets are decomposed following the methodology described in the previous section. The cumulative percentage of the relative variance for the first 50 components is represented in Fig. 2. Note that without the addition of the \(\varepsilon\) term the total variance would have been explained with the first 20 components, which is the number of elements used to construct the simulated dataset, see Eq. (2). Nonetheless, even in the presence of the additional noise these components explain about 99% of the variance. Therefore, the neural network is trained using two inputs, namely the spatial covariates corresponding to the x and y coordinates, and \({\tilde{K}} = 20\) outputs.

Model outputs for the simulated spatio-temporal field defined by Eq. (2). Top left : A snapshot of the true spatial field at the fixed time indicated by the vertical dashed in the temporal plot below. Top right : The predicted map at the same time. Bottom : The true time series (in black) and the predicted time series (in orange) at the fixed location marked by a cross in the maps above.

The Mean Absolute Error (MAE) computed on the test set after the modeling with the proposed neural network approach is indicated in Table 1. We also compare the output of the proposed framework to the results obtained using a different strategy. Specifically, instead of predicting all the spatial coefficients at once with the proposed multiple outputs model, we investigated the impact in terms of test error performance due to the separate modeling of each coefficient map. To this aim, both fully connected feedforward neural network (NN) and Random Forest (RF)—which is popular in environmental and climatic literature—have been used to predict the individual spatial coefficient maps, which are then used together with the temporal bases to reconstruct the spatio-temporal field. The neural network has the same structure as the one used with the multiple output strategy, with the exception of the recomposition layer, which is indeed absent. The RF models were implemented in Scikit-learn43 and trained using 5-fold cross validation. It is shown that the use of the proposed multiple output model helps to significantly improve the performances with respect to the approaches based on separate single output models, which have significantly worse performances.

Randomly chosen examples of prediction map and time series are shown and compared with the true spatio-temporal field in Fig. 3. The predicted map recovers the true spatial pattern, and the temporal behaviours are fairly well replicated too.

Figure 4 shows the spatio-temporal semivariograms of the simulated data, of the output of the model and of the residuals—i.e. the difference between the simulated data and the modelled one. All semivariograms are computed on the test points. The semivariogram on the modelled data shows how the interpolation recovered the same spatio-temporal structure of the (true) simulated data, although its values are slightly lower. This imply that the model has been able to explain most of the spatio-temporal variability of the phenomenon. However, it must be pointed out that even better reconstruction of the spatio-temporal structure of the data could be recognizable in the semivariograms computed on the training set, similarly to how the training error would be lower than the testing one. Finally, almost no structure is shown in the semivariogram of the residuals, suggesting that almost all the spatially and temporally structured information—or at least the one described by a two-point statistic such as the semivariogram—has been extracted from the data. It also shows a nugget corresponding to the noise used in the generation of the dataset.

Experiment on temperature monitoring network

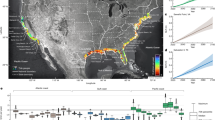

Temperature monitoring network, first 3 components of the EOFs decomposition. Top row : The temporally referenced basis functions. Center row : The normalized spatial coefficients of the corresponding EOFs. Bottom row: The corresponding predicted spatial coefficients provided by the auxiliary outputs of the fully connected neural network (all components).

The effectiveness of the proposed framework in modelling real-world climate and environmental phenomena is tested on a case study of air temperature prediction in a complex Alpine region of Europe. This area (having projected bounds 486660, 77183, 831660, 294683 meters in the spatial reference system CH1903/LV03) constitutes a challenging—but representative—example. Indeed, the region is traversed from south-west to north-east by the Alps chain. This forms a natural barrier, which leads to marked differences in temperature between the two sides of the mountain range. Starting from measurements sampled with hourly frequency from \(1^{st}\) July 2016 to \(30^{th}\) June 2018 over 369 meteorological stations, whose spatial distribution represents different local climates of the complex topography of the region, we will model the spatio-temporal temperature field on a regular grid with a resolution of 2500 meters. Data were downloaded from MeteoSwiss (https://gate.meteoswiss.ch) and include measurements from several meteorological monitoring networks of Switzerland, Germany and Italy. The data were thoroughly explored and obvious outliers were removed. Missing data, corresponding to approximately 1% of the entire dataset, were replaced by a local spatio-temporal mean obtained from the values of the eight stations closest in space over two contiguous timestamps, yielding an average over 24 spatio-temporal neighbours44,45. Before modelling, the dataset was randomly divided into training, validation and testing subsets, consisting of 220, 75 and 74 stations respectively.

The first three components resulting from the EOFs decomposition of the training and validation sets, together with the corresponding temporal bases functions and normalized spatial coefficients, are displayed in Fig. 5. The first two temporal bases clearly show yearly cycles, and a closer exploration of the time series would reveal other structured features such as daily cycles—here not appreciable because of the visualisation of a large amount of time-indices. The normalized spatial coefficient maps unveil varying patterns at different spatial scales.

Fig. 6 shows that 95% of the data variation is explained by the first 24 components. Hence, we implemented two different models. The first one is developed by using all the available components (\({\tilde{K}} = K = 294\)), while the second one adopts a compressed signal keeping 95 % of data variance (\({\tilde{K}} = 24\)). It is well known that temperature strongly depends on topography, and in particular on elevation46. Hence, in addition to latitude and longitude, altitude is added as a spatial covariate in the two models. The trained models will therefore have three inputs, i.e. the three spatial covariates, and \({\tilde{K}}\) outputs, with the latter changing in the two experiments conducted.

Model outputs for the temperature monitoring network. Top left : The predicted map of temperatures using all EOFs components, at the fixed time indicated by the vertical dashed line in the temporal plot below. Top right : The predicted map using only the first 24 components at the same time. Center : The true time series (in black) at a testing station marked by a cross in the maps above, the predicted time series with all EOFs components (in orange) and the predicted time series with the first 24 EOFs components (in green). For visualization purposes, only the first 42 days of the time series are shown. Bottom left: Accuracy plot at the testing station for the model with all EOFs components. Bottom right: Accuracy plot at the testing station for the model with the first 24 EOFs components.

Predicted maps of temperature at a randomly chosen fixed time are shown in Fig. 7 for both model, together with time series at a random testing station. The predicted temperatures at the testing station are compared with the true measurements on accuracy plots and on a time series plot. The model with \({\tilde{K}} = 294\) replicates extremely well the temperature behaviour. The predicted map captures the different climatic zones, while the predicted time series retrieves very well the temporal dependencies in the data. In particular, highly structured patterns such as daily cycles are recovered as well as the variability at smaller temporal scales and abrupt behaviour changes. The accuracy plot further highlights how well the predictions fit the true values. The model with \({\tilde{K}} = 24\) shows comparable results while the dimensionality of the data has been significantly reduced, indicating that it is possible to obtain similar accuracy with compressed data.

Even if not strictly required to model the spatio-temporal field, the spatial coefficient maps can be obtained from the neural network as auxiliary outputs (shown in Fig. 5). Their usage is extremely relevant from a diagnostic and interpretation perspective. Indeed, climate and Earth system scientists are accustomed to the use of EOFs as exploratory data analysis tool to understand the spatio-temporal patterns of atmospheric and environmental phenomena. Hence, the full reconstruction of the spatial coefficients on a regular grid represents a useful step towards a better explainability of the modelled phenomena. Specifically, these maps could be interpreted from a physical standpoint to analyze the contribution of each temporal variability pattern29,31. In our case study, the globally emerging structures correspond to different known climatic zones. As an example, the first map in the bottom row of Fig. 5 clearly shows the contribution of the first temporal basis in the Alps chain, while the third map indicates a strong negative contribution of the corresponding temporal basis at the south of the chain.

As in the case of the simulated dataset, we performed a comparison between the multiple output strategy, where the spatial coefficients are modelled jointly, and the use of separate regression models to predict each coefficient map (Table 1). Once more, the latter approach results in a higher error, both for the models with all the components and for the model with the first 24 ones. In both cases, the use of a single output strategy results in comparable error rates between the RF and the neural network models. Again, we can conclude that the use of a single network to model jointly the spatial coefficients and the spatio-temporal fields yields better performances, since the algorithm is trained to minimize a loss computed on the final output after the recomposition of the signal.

Variography for the temperature monitoring network models. Spatio-temporal semivariograms have been computed on the test points. Top row: semivariograms for the raw data (left), the model implemented using all the EOFs components (center) and its residuals (right). Bottom row: semivariograms for the model implemented using the first 24 EOFs components (left) and its residuals (right).

A variography study was performed on the test data. Figure 8 shows the spatio-temporal semivariograms for the raw test data and for the modelled data and residuals resulting from the models, with all the components and with only the first 24 ones. While both models are able to coherently reconstruct the variability of the raw data, the semivariogram for the model including all the components has a sill, i.e. the value attained by the semivariogram when the model first flattens out, comparable to the one of the raw data. The sill of the semivariogram computed on the modelled data using 24 components is slightly lower, indicating that a certain amount of the variability of the data has not been captured by the model. This is somehow related to the fact that about 5% of the variability of the training data was not explained by the first 24 components, as indicated in Fig. 6. The two semivariograms for the residuals show that the models were able to retrieve most of the spatio-temporal structure of the data. Nonetheless, it can be seen how residuals still show a small temporal correlation, corresponding to the daily cycle of temperature. This is likely because the spatial modeling of each component induces an error, which in the reconstructed field becomes proportional to its corresponding temporal basis. This suggests that even if the spatial component models are correctly modeled, some temporal dependencies may subsist.

Discussion

In this paper we introduced a framework for spatio-temporal prediction of climate and environmental data using DL. The proposed approach has two key advantages. First, the decomposition of the spatio-temporal signal into fixed temporal bases and stochastic spatial coefficients allows to fully reconstruct spatio-temporal fields starting from spatially irregularly distributed measurements. Second, while the spatial prediction of the stochastic coefficients can be performed using any regression algorithm, DL algorithms are particularly well suited to solve this problem thanks to their automatic feature representation learning. Furthermore, our framework is able to capture non-linear patterns in the data, as it models spatio-temporal fields as a combination of products of temporal bases by spatial coefficients maps, where the latter are obtained using a non-linear model. Moreover, it can be shown that the basis-function random effects representation induces a valid marginal covariance function without requiring a complete prior knowledge of the phenomena by the modeller, which would instead be necessary in the applications based on traditional geostatistics. Finally, even if the traditional ML and geostatistical techniques could be used to model separately each single spatial coefficient map, the use of a single DL model allows the development of a network structure with multiple outputs to model them all coherently. Besides, the recomposition of the full spatio-temporal field can be executed through an additional layer embedded in the network, allowing to train the entire model to minimize a loss computed directly on the output signal. We showed that the proposed framework succeeds at recovering spatial, temporal and spatio-temporal dependencies in both simulated and real-world data. Furthermore, the proposed framework can eventually be generalized to study other climate fields and environmental spatio-temporal phenomena—e.g. air pollution or wind speed—or to solve missing data imputation problems in spatio-temporal datasets collected by satellites for earth observation or resulting from climate models.

Researchers in the field of ML are becoming more aware of the relevance of their work in tackling climate change issues, environmental risks (e.g., pollution, natural hazards), renewable energy resources assessment, thus contributing to the new emerging field of environmental, and in particular climate, informatics. With this paper, we sought to broaden the agenda of spatio-temporal data analysis and modelling through deep learning.

Being adaptable to every machine learning models, the approach discussed in this paper may enable users interested in measuring the uncertainties of their model output to use methods allowing its explicit estimation, like for example Gaussian Processes. Nonetheless, further research has to be developed to define procedures to quantify the propagation of uncertainty through the diverse steps of the proposed framework. In addition, further studies must be conducted to analyse the consistency of the spatio-temporal predictions provided by this framework and more generally by any other data-driven method with the patterns observed in the real physical models determining the studied phenomena. With this concern, a promising research direction comes from the possibility of integrating physical and data-driven models. These integrated or hybrid models should ideally be constrained by physical laws while ensuring flexibility and adaptation capacity. Finally, additional fundamental studies will be conducted to extend our approach for spatio-temporal forecasting and multivariate analysis.

References

Jones, N. How machine learning could help to improve climate forecasts. Nature548, (2017).

Runge, J. et al. Inferring causation from time series in earth system sciences. Nat. Commun. 10, 1–13 (2019).

Callaghan, M. W., Minx, J. C. & Forster, P. M. A topography of climate change research. Nat. Climate Change 10, 118–123 (2020).

Rolnick, D. et al. Tackling climate change with machine learning (2019). arxiv:1906.05433.

Reichstein, M. et al. Deep learning and process understanding for data-driven earth system science. Nature 566, 195–204 (2019).

Kühnlein, M., Appelhans, T., Thies, B. & Nauss, T. Improving the accuracy of rainfall rates from optical satellite sensors with machine learning–a random forests-based approach applied to msg seviri. Remote Sens. Environ. 141, 129–143 (2014).

Xingjian, S. et al. Convolutional LSTM network: a machine learning approach for precipitation nowcasting. Adv. Neural Inf. Process. Syst. 28, 802–810 (2015).

Liu, Y. et al. Application of deep convolutional neural networks for detecting extreme weather in climate datasets. arXiv preprint arXiv:1605.01156 (2016).

Liu, H., Mi, X. & Li, Y. Smart deep learning based wind speed prediction model using wavelet packet decomposition, convolutional neural network and convolutional long short term memory network. Energy Convers. Manage. 166, 120–131 (2018).

Bischke, B., Helber, P., Folz, J., Borth, D. & Dengel, A. Multi-task learning for segmentation of building footprints with deep neural networks. In 2019 IEEE International Conference on Image Processing (ICIP), 1480–1484 (IEEE, 2019).

Hooker, J., Duveiller, G. & Cescatti, A. A global dataset of air temperature derived from satellite remote sensing and weather stations. Sci. Data 5, 1–11 (2018).

Fraccaro, M., Sønderby, S. K., Paquet, U. & Winther, O. Sequential neural models with stochastic layers. Adv. Neural Inf. Process. Syst. 2199–2207, (2016).

Datta, A., Banerjee, S., Finley, A. O. & Gelfand, A. E. Hierarchical nearest-neighbor Gaussian process models for large geostatistical datasets. J. Am. Stat. Assoc. 111, 800–812 (2016).

Pebesma, E. & Heuvelink, G. Spatio-temporal interpolation using gstat. RFID J. 8, 204–218 (2016).

Wackernagel, H. Multivariate Geostatistics: An Introduction with Applications (Springer, Berlin, 2013).

Shi, X. & Yeung, D.-Y. Machine learning for spatiotemporal sequence forecasting: A survey. arXiv preprint arXiv:1808.06865 (2018).

Wang, S., Cao, J. & Yu, P. S. Deep learning for spatio-temporal data mining: A survey. arXiv preprint arXiv:1906.04928 (2019).

Henaff, M., Bruna, J. & LeCun, Y. Deep convolutional networks on graph-structured data. arXiv preprint arXiv:1506.05163 (2015).

Klemmer, K., Koshiyama, A. & Flennerhag, S. Augmenting correlation structures in spatial data using deep generative models. arXiv preprint arXiv:1905.09796 (2019).

Kadow, C., Hall, D. M. & Ulbrich, U. Artificial intelligence reconstructs missing climate information. Nat. Geosci. 13, 1–6 (2020).

Shi, X. et al. Deep learning for precipitation nowcasting: A benchmark and a new model. In Advances in Neural Information Processing Systems, 5617–5627, (2017).

Srivastava, N., Mansimov, E. & Salakhudinov, R. Unsupervised learning of video representations using lstms. In International Conference on Machine Learning, 843–852, (2015).

McDermott, P. L. & Wikle, C. K. Bayesian recurrent neural network models for forecasting and quantifying uncertainty in spatial-temporal data. Entropy 21, 184 (2019).

Zhang, Y.-F., Thorburn, P. J., Xiang, W. & Fitch, P. Ssim–a deep learning approach for recovering missing time series sensor data. IEEE Internet of Things Journal 6, 6618–6628 (2019).

Bayma, L. O. & Pereira, M. A. Identifying finest machine learning algorithm for climate data imputation in the state of minas gerais, brazil. Journal of Information and Data Management 9, 259–259 (2018).

Defferrard, M., Bresson, X. & Vandergheynst, P. Convolutional neural networks on graphs with fast localized spectral filtering. In Advances in Neural Information Processing Systems 3844–3852, (2016).

Martin, H. et al. Graph convolutional neural networks for human activity purpose imputation. In NIPS Spatiotemporal Workshop at the 32nd Annual Conference on Neural Information Processing Systems (NIPS 2018) (2018).

Yu, B., Yin, H. & Zhu, Z. Spatio-temporal graph convolutional neural network: A deep learning framework for traffic forecasting. arXiv preprint arXiv:1709.04875 (2017).

Wikle, C., Zammit-Mangion, A. & Cressie, N. Spatio-Temporal Statistics with R (CRC Press, Boca Raton, 2019).

Preisendorfer, R. Principal Component Analysis in Meteorology and Oceanography Developments in atmospheric science (Elsevier, Amsterdam, 1988).

Cressie, N. & Wikle, C. Statistics for Spatio-Temporal Data (Wiley, Hoboken, 2011).

Rasmussen, C. E. & Williams, C. K. Gaussian Processes for Machine Learning (MIT press, Cambridge, 2006).

Bishop, C. M. Pattern Recognition and Machine Learning (Information Science and Statistics) (Springer, Berlin, 2006).

Hsieh, W. W. Machine Learning Methods in the Environmental Sciences: Neural Networks and Kernels (Cambridge University Press, Cambridge, 2009).

Jolliffe, I. Principal Component Analysis (Springer, New York, 2013).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning (MIT press, Cambridge, 2016).

Sherman, M. Spatial Statistics and Spatio-Temporal Data: Covariance Functions and Directional Properties (Wiley, Hoboken, 2011).

Kanevski, M., Pozdnoukhov, A. & Timonin, V. Machine Learning for Spatial Environmental Data (EPFL Press, Lausanne, 2009).

Pebesma, E. J. Multivariable geostatistics in S: the SGTAT package. Comput. Geosci. 30, 683–691 (2004).

Gräler, B., Pebesma, E. & Heuvelink, G. Spatio-temporal interpolation using SGTAT. The R J. 8, 204–218 (2016).

Abadi, M. et al. TensorFlow: Large-scale machine learning on heterogeneous systems (2015). Software available from https://www.tensorflow.org.

Schlather, M., Malinowski, A., Menck, P. J., Oesting, M. & Strokorb, K. Analysis, simulation and prediction of multivariate random fields with package RandomFields. J. Stat. Softw. 63, 1–25 (2015).

Pedregosa, F. et al. Scikit-learn: machine learning in python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Jun, M. & Stein, M. L. An approach to producing space-time covariance functions on spheres. Technometrics 49, 468–479. https://doi.org/10.1198/004017007000000155 (2007).

Porcu, E., Bevilacqua, M. & Genton, M. G. Spatio-temporal covariance and cross-covariance functions of the great circle distance on a sphere. J. Am. Stat. Assoc. 111, 888–898. https://doi.org/10.1080/01621459.2015.1072541 (2016).

Whiteman, C. Mountain Meteorology: Fundamentals and Applications (Oxford University Press, Oxford, 2000).

Acknowledgements

The research presented in this paper was partly supported by the National Research Program 75 “Big Data” (PNR75, Project No. 167285 “HyEnergy”) of the Swiss National Science Foundation (SNSF). Authors are also grateful to Mohamed Laib for the profitable discussions.

Author information

Authors and Affiliations

Contributions

F.A. and F.G. conceived the main conceptual ideas and conduct the residuals analysis. F.A., F.G. and S.R. preprocessed the data. F.A. performed the calculations, visualized the results and wrote the original draft. M.K. carried out the project administration and funding acquisition. All authors discussed the results, provided critical feedback, commented, reviewed and edited the original manuscript, and gave final approval for publication.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Amato, F., Guignard, F., Robert, S. et al. A novel framework for spatio-temporal prediction of environmental data using deep learning. Sci Rep 10, 22243 (2020). https://doi.org/10.1038/s41598-020-79148-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-020-79148-7

This article is cited by

-

Recently emerging trends in big data analytic methods for modeling and combating climate change effects

Energy Informatics (2024)

-

Exploring the Efficacy of Statistical and Deep Learning Methods for Large Spatial Datasets: A Case Study

Journal of Agricultural, Biological and Environmental Statistics (2024)

-

Evaluating Air Quality Status in Chicago: Application of Street View Imagery and Urban Climate Sensors

Environmental Modeling & Assessment (2023)

-

EEMD-ConvLSTM: a model for short-term prediction of two-dimensional wind speed in the South China Sea

Applied Intelligence (2023)

-

Seasonal analysis of long-term (1970–2020) rainfall variability using clustering and wavelet transform approach in the Mahi River Basin, India

Acta Geophysica (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.