Abstract

Since 2001, hundreds of thousands of hours of underwater acoustic recordings have been made throughout the Southern Ocean south of 60° S. Detailed analysis of the occurrence of marine mammal sounds in these circumpolar recordings could provide novel insights into their ecology, but manual inspection of the entirety of all recordings would be prohibitively time consuming and expensive. Automated signal processing methods have now been developed to the point that they can be applied to these data in a cost-effective manner. However, training and evaluating the efficacy of these automated signal processing methods still requires a representative annotated library of sounds to identify the true presence and absence of different sound types. This work presents such a library of annotated recordings for the purpose of training and evaluating automated detectors of Antarctic blue and fin whale calls. Creation of the library has focused on the annotation of a representative sample of recordings to ensure that automated algorithms can be developed and tested across a broad range of instruments, locations, environmental conditions, and years. To demonstrate the utility of the library, we characterise the performance of two automated detection algorithms that have been commonly used to detect stereotyped calls of blue and fin whales. The availability of this library will facilitate development of improved detectors for the acoustic presence of Southern Ocean blue and fin whales. It can also be expanded upon to facilitate standardisation of subsequent analyses of spatiotemporal trends in call density of these circumpolar species.


Introduction

Underwater passive acoustic monitoring (PAM) for marine mammals is a fast-growing field due to increased availability of, and flexibility in deploying, underwater recording devices1,2,3. PAM has especially high potential to provide information about marine mammals in remote or difficult to access areas, such as Antarctic waters. Since 2001, hundreds of thousands of hours of long-term acoustic recordings that span many years have been collected throughout the Southern Ocean. Many of these recordings were made for the purposes of learning about two endangered species that are especially detectable by PAM: Antarctic blue whales (Balaenoptera musculus intermedia) and fin whales (B. physalus)4.

Monitoring blue and fin whales in the Southern Ocean

Historically, blue whales were heavily exploited throughout the Southern Ocean. Approximately 360,000 blue whales were caught across the Southern Hemisphere in the mid-twentieth century, depleting the population to less than 1% of its pre-whaling size5. The most recent abundance estimate of Antarctic blue whales suggests that the population contained between 1140 and 4440 individuals and may be slowly increasing at a rate between 1.6 and 14.8% per year (95% CI; mean of 8.2%). However, this estimate was for the Antarctic summer of 1997/98 and is thus now more than 20 years out of date6. Over 725,000 fin whales were caught during the twentieth century7, yet circumpolar abundance of Southern Ocean fin whales has never been estimated because extant data sources are not sufficient to do so with fidelity8.

Given the endangered status of both blue and fin whales globally, the critically endangered status of Antarctic blue whales9, and the fact that both are long-lived species that are believed to reproduce every 2–3 years10, long-term monitoring is imperative to examine population trends and the effectiveness of current conservation measures (e.g. the moratorium on commercial whaling; https://iwc.int/commercial). PAM from fixed sensors is an ideal method for obtaining cost-effective broad spatial and long-term temporal coverage of blue and fin whale occurrences throughout a vast Southern Ocean region that is challenging to access. Blue and fin whales each produce distinct, species-specific calls that can be repeated as songs or produced as individual notes. In the case of Antarctic blue whales, some sounds are unique to their population11,12,13. The repeated, loud, low-frequency, and long-travelling calls from these endangered species provide an extremely efficient means of identifying the presence of whales in the remote Antarctic waters of the Southern Ocean.

The Antarctic Blue and Fin Whale Acoustic Trends Project started in 2009 as one of the original projects of the International Whaling Commission’s Southern Ocean Research Partnership (IWC-SORP; https://iwc.int/sorp), and in 2017 expanded further to become a capability working group of the Southern Ocean Observing System (SOOS; www.soos.aq). The overarching goal of this project is to use acoustics to examine trends in Antarctic blue and fin whale population growth, abundance, distribution, seasonal movements and behaviour.

Through IWC-SORP, the Acoustic Trends Project working group has built upon the pioneering work done in the first decade of the twenty-first century, and has fostered an increasing number of passive acoustic studies focusing on the calls of Antarctic blue whales (and, to a lesser extent, fin whales) across broad temporal and spatial scales, as well as on acoustic data processing and analysis methodology. The data from these studies have been collected both from ships during Antarctic voyages and from long-term moored recording devices4,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31. The project working group presently (as of March 2020) has access to more than 300,000 h of passive acoustic data that have been collected throughout the Southern Ocean over the past 20 years.

Automated detection of whale sounds

The volume of existing and incoming acoustic data far exceeds the capacity of human expert analysts to inspect it manually, and as a result automated algorithms have been relied upon to determine the presence of sounds from marine mammals in the recordings. Ecological results from long-term analyses have been reported in the form of presence (e.g. months, days, or hours of recordings with call presence), or as estimates of call numbers per time period (e.g. see the studies listed in Table 1). However, the results are not easily comparable because different studies had different data collection protocols and employed different analytical techniques, neither of which has been standardised. Furthermore, robust measures of bias and variability, which can be dataset-specific, are not always reported alongside results (Table 1).

A variety of automatic detection algorithms have been used to detect the calls of blue whales and fin whales. Algorithms to detect stereotyped calls of these species include matched filters32,33,34, energy detectors35, and subspace projection detectors (blue whales only26,36). However, the most widely used algorithm has been spectrogram correlation37, which has been implemented in a variety of software packages38,39,40 and applied to many different datasets16,17,18,19,20,30,41,42,43,44. Spectrogram correlation is similar to matched filtering except that it acts on the spectrogram, rather than purely in the time or frequency domain; instead of cross-correlating a time series or spectrum, it correlates an image template or kernel pixel-by-pixel with the spectrographic data of interest.

Factors that affect the detector performance

Three main factors can impact the performance of an automated detection algorithm: acoustic properties of the recording site, variability in signals that are being detected, and variability in the characteristics of the recording system. The acoustic recordings from the Southern Ocean span a wide geographic and temporal range and encompass a variety of environments, thus characteristics of the recording site (e.g. propagation loss and noise levels) are expected to be both site- and time-specific43,46.

In addition to site-specific features, the properties of blue and fin whale sounds can change over time and space. Sounds from most blue whale populations have changed slowly and in a predictable manner since they were first described in the 1970s14,47,48,49. On top of the well-documented year-to-year decreases in the tonal frequency of these sounds, predictable intra-annual changes have also been observed14,48,50. There is some evidence that the properties of fin whale sounds vary geographically in the Antarctic15,18, and they have been found to vary temporally in other oceans33,51,52. While these changes may seem small and/or occur over long time periods, they must nevertheless be accounted for when using automated detection algorithms to detect trends in long-term and widely dispersed datasets53.

Lastly, the acoustic recordings around the Antarctic have been made with a variety of instruments. These include: Scripps Acoustic Recording Packages (ARP); Multi-Electronique Autonomous Underwater Recorder for Acoustic Listening (AURAL); Australian Antarctic Division Moored Acoustic Recorders (AAD-MAR); Develogic Sono.Vaults; and Pacific Marine Environmental Laboratory—Autonomous Underwater Hydrophones (PMEL-AUH). Different instruments may have different capabilities, including depth rating, system frequency response, and duty cycle requirements, and these further affect the performance of an automated detector54. For example, the duty cycle of an instrument is known to affect the accuracy of predictions of Antarctic blue whale presence, as well as estimates of call rate55. Additionally, the depth of the recorder is expected to change the detection range and noise levels observed at a recorder4.

Here we create and document an open access set of recordings collected around the Antarctic and manual annotations of blue and fin whale call occurrences in a subset of those recordings. This dataset takes the form of an “annotated library” of Antarctic underwater sound recordings. We demonstrate how the library can be used to evaluate the performance of automated detectors over the variety of recording scenarios contained within the library. We also suggest methods to help standardise the reporting of results with a view towards facilitating long-term comparisons of PAM studies of baleen whales around Antarctica.

Methods

Towards a representative circumpolar dataset

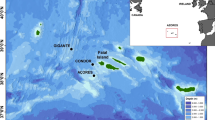

Our annotated library contains data from four geographic regions: the Atlantic, Pacific, and Indian sectors of the Southern Ocean and the Western Antarctic Peninsula (WAP; Fig. 1). In each region, we identified sites that had at least a full year of data from 2014 or 2015, and ideally two consecutive years. When two consecutive years were not available, another year from the same site was included or two different sites were selected. The Indian sector site also included data from 2005 to increase the temporal span of the library, and the Indian sector also included a second location with data from 2014 and 2017. The data in this library were recorded using a variety of instruments: ARP, AURAL, AAD-MAR, Sono.Vault, and PMEL-AUH (Table 2).

Map of Antarctic underwater recording sites illustrating sites used in this study (red circles) and known locations of long-term recordings from 2001 to 2017 (open circles). Map created using M_Map version 1.4k56 and ETOPO 1 bathymetry (https://www.eoas.ubc.ca/~rich/map.html). Light, medium, and dark blue lines show 1000, 2000, and 3000 m depth contours respectively.

Subsampling from each dataset

Moorings in the Antarctic are typically recovered and serviced at most once a year due to their remote locations, potentially long periods of ice cover, and reduced or negligible access during the Antarctic winter. Thus we define a site-year as a recording from a single instrument and site that is approximately a year in duration. A subset of approximately 200 h of data was selected from each site-year for annotation. This number of hours was chosen a priori and was constrained by budgetary limits, but it was considered a reasonable trade-off among analyst time, maintaining adequate sample sizes within each site-year, and annotating a sufficient number of different site-years.

For each site-year, a systematic random subsampling scheme was used to generate a representative set of acoustic recordings from the larger dataset. The systematic random subsampling scheme consisted of:

1. Splitting the dataset into “chunks” of time. The optimal length of a time chunk will be species- and study-specific. For the annotated library, time was split mostly into hour-long chunks, with some exceptions.

2. Calculating the spacing between chunks, \(t_s\), to ensure that the desired sample size of time chunks was obtained and that hours of the day were broadly represented across all chunks. For the annotated library, spacing was calculated such that there were at least 150, and usually nearer to 200, annotated periods between the first and last available chunks.

3. Picking a random number between 1 and \(t_s\) to determine the starting chunk (this was the random element of the subsampling scheme).
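The three steps above can be sketched in Python as follows. This is a minimal illustration, not the code used to build the library: the function name and its arguments are hypothetical, chunks are assumed to be contiguous and hour-long, and the rule that shrinks \(t_s\) until it shares no common factor with 24 is our own assumption for spreading the selected chunks across every hour of the day.

```python
import math
import random
from datetime import datetime, timedelta


def systematic_random_subsample(start, n_chunks, target, chunk=timedelta(hours=1), seed=None):
    """Return start times of chunks picked by systematic random sampling (hypothetical helper).

    start:    start time of the first available chunk
    n_chunks: number of available chunks in the site-year
    target:   desired number of selected chunks (e.g. ~200)
    """
    rng = random.Random(seed)
    # Step 2: spacing t_s between selected chunks (at least 1)
    ts = max(1, n_chunks // target)
    # Assumption: for hour-long chunks, make t_s coprime with 24 so that
    # successive picks cycle through all hours of the day
    while ts > 1 and math.gcd(ts, 24) != 1:
        ts -= 1
    # Step 3: random starting chunk between 1 and t_s (converted to a 0-based index)
    first = rng.randint(1, ts) - 1
    return [start + i * chunk for i in range(first, n_chunks, ts)]
```

For a full year of hourly chunks (8760) and a target of 200, the spacing is 43 h, which yields roughly 200 chunks that together cover every hour of the day.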

The aim of the subsampling scheme was to capture a representative sample of the signals recorded for a given site-year, i.e., to select periods of time with calls that spanned a range of signal-to-noise ratios (SNR) and periods of time without calls, as well as other sounds that might contribute to false positives (though rare events may have been missed). Having a temporally representative subsample of sounds was deemed necessary to understand how a detector would perform when used across the entire dataset.

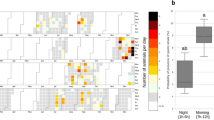

For each site, 10–18 h of data were annotated per month, with the exceptions of the Ross Sea in 2014, which had no data for January, and Maud Rise 2014, which recorded only from Jan–Sep (Fig. 2). Over the whole year the subsampling scheme ensured a relatively even distribution of hours across a 24 h cycle, with each site containing between 5 and 10 h inspected for any given hour in the cycle.

However, four sites had been annotated previously and thus used slightly different subsampling schemes. Additionally, three of these sites had recording duty cycles shorter than an hour: Elephant Island 2013 and 2014 had a duty cycle of 5 min/h, and Maud Rise 2014 had a duty cycle of 25 min/h. For Elephant Island 2014, 9 days per month were selected (randomly, but with roughly even spacing between them), and all 5 min segments were analysed for the selected days (total duration of all audio segments 216 h). For Maud Rise 2014, 200 independent, evenly spaced hours were selected and each 25 min segment was analysed (total duration of all audio segments 83.3 h). For Elephant Island 2013, all 5 min segments for every day were analysed from 12 Jan 2013 to 8 Apr 2013. For the remaining months (May–Dec 2013), all segments from one day each month were analysed. Lastly, Greenwich 64 S 2014 had 10 min long subsamples that were annotated. These were spread over 190 unique hours throughout the year (31.6 h audio duration).

Manual annotations

For manual detection and annotation of calls, recordings were visualised in Raven Pro 1.557. Spectrogram settings included a 120 s timespan, frequency limits of 0–125 Hz, a Fast Fourier Transform (FFT) of approximately 1 s in duration, a frequency resolution of approximately 1.4 Hz, and 85% time overlap between successive FFTs. Lower and upper limits of the spectrogram power (spectrogram floor and ceiling) were adjusted for each 1-h segment. The analyst adjusted the floor until approximately 25% of the spectrogram was at or below the floor value (i.e. a visual estimate of the 25th percentile spectral noise level). The ceiling was then adjusted so that the difference between ceiling and floor was between 30 and 50 dB relative to full scale. The ceiling could then be adjusted further to provide additional contrast in the event of long, loud broadband sounds, such as those from ice, or prolonged occurrence of baleen whale choruses.
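The floor and ceiling rule was applied by eye, but its logic can be expressed numerically. The sketch below is a hypothetical helper, assuming a spectrogram already expressed in dB: the floor is the 25th percentile of the spectrogram power and the ceiling sits a chosen dynamic range (restricted here to the 30–50 dB span described above) above it.

```python
import numpy as np


def display_limits(spec_db, floor_pct=25.0, dynamic_range_db=40.0):
    """Return (floor, ceiling) in dB for displaying a spectrogram (hypothetical helper).

    spec_db: 2-D array of spectrogram power in dB (frequency x time).
    """
    if not 30.0 <= dynamic_range_db <= 50.0:
        raise ValueError("dynamic range outside the 30-50 dB span used for annotation")
    # Floor: level at or below which ~25% of spectrogram cells fall
    floor = float(np.percentile(spec_db, floor_pct))
    # Ceiling: floor plus the chosen dynamic range
    return floor, floor + dynamic_range_db
```

An analyst would still raise the ceiling further by hand during loud broadband events; the function only reproduces the starting point.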

Within each subsample, the analyst marked the time–frequency bounds of all occurrences of blue and fin whale sounds. Each analyst had extensive expertise in the identification of blue and fin whale sounds, particularly those from the Southern Hemisphere including the Antarctic. The analyst assigned one of eight different classifications to annotations: Bm-Ant-A, Bm-Ant-B, Bm-Ant-Z, Bm-D, Bp-20, Bp-20Plus, Bp-Downsweep, and Unidentified. Detailed descriptions of each of these classifications (including citations) are provided in Table 3 and Figs. 3 and 4. The first two letters of the classification correspond to genus and species, so sounds starting with Bm were produced by blue whales and those starting with Bp by fin whales. The remainder of the classification corresponds to particular call types for that species (or sub-species in the case of Antarctic blue whales).

Spectrograms showing examples of Bm-Ant-A (top); Bm-Ant-B (middle); and Bm-Ant-Z (bottom). All spectrograms used a sample rate of 250 Hz, 256 point FFT with 85% overlap. Bm-Ant-A example is from site-year Balleny Islands 2015 and starts at 25-Feb 07:24:52. Bm-Ant-B example is from site-year Elephant Island 2014 starting at 20-Jan 19:00:45. Bm-Ant-Z example is from site-year S Kerguelen Plateau 2014 and starts at 01-Mar 12:25:10. Red boxes are indicative of time–frequency boundaries of manual annotations.

Spectrograms showing Bm-D (top); Bp-Downsweep (middle) and Bp-20 Hz (bottom panel blue box) along with two forms of Bp-20Plus (bottom panel red and green boxes). All spectrograms in this figure used a sample rate of 250 Hz, 256 point FFT with 85% overlap. A chorus of blue and fin sounds is visible in the top and middle panels from 20–30 Hz. Bm-D spectrogram is from S Kerguelen Plateau and starts at 2015-04-16 19:18:00. Bp-Downsweep spectrogram is from S Kerguelen and starts at 2005-04-24 00:05:00. Bp-20 Hz and Bp-20Plus spectrogram is from Balleny Islands and starts at 2015-03-22 00:50:10.

In addition to marking the time–frequency boundaries of all potential detections, the analyst also noted qualitative information about background noise and other sources of sound present in each inspected chunk, including the presence and intensity of a “chorus” of elevated background noise in the 20–30 Hz band, where Antarctic blue whale Z-calls and fin whale 20 Hz pulses contain most of their energy18,19.

For each site and classification, the 5th and 95th percentile frequency limits and durations of annotations were measured and plotted to visually identify gross differences among sites as a rough form of “quality control” across sites and analysts. These percentiles also directly informed respective parameters for automated detectors (Fig. 5).
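One way to obtain the energy-based bounds shown in Fig. 5 (f5%, f95%, and the 90% energy duration t90%) is to accumulate spectrogram energy along each axis and read off the 5th and 95th percentiles. The sketch below assumes a linear-power spectrogram clip cropped to a single annotation; the function name is hypothetical and this is not necessarily how the library measurements were computed.

```python
import numpy as np


def energy_bounds(power, freqs, times):
    """Return (f5, f95, t90) for one annotation (hypothetical helper).

    power: 2-D array of linear spectrogram power, shape (n_freqs, n_times)
    freqs: centre frequency of each row (Hz); times: time of each column (s)
    """
    def percentile_on_axis(axis_values, marginal, q):
        # Cumulative fraction of energy along the axis
        cdf = np.cumsum(marginal) / marginal.sum()
        # First bin at which the cumulative energy reaches q
        return axis_values[np.searchsorted(cdf, q)]

    f_marginal = power.sum(axis=1)  # energy per frequency bin
    t_marginal = power.sum(axis=0)  # energy per time bin
    f5 = percentile_on_axis(freqs, f_marginal, 0.05)
    f95 = percentile_on_axis(freqs, f_marginal, 0.95)
    t5 = percentile_on_axis(times, t_marginal, 0.05)
    t95 = percentile_on_axis(times, t_marginal, 0.95)
    return f5, f95, t95 - t5  # t90 spans the central 90% of the energy
```

Because these bounds depend on where energy lies rather than on the drawn box alone, they are less sensitive to analyst box-drawing than raw box edges, although the Results show they do not remove that sensitivity entirely.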

Duration (t90%) and frequency bounds (f5% and f95%) of a subset of individual annotations plotted as 99.9% transparent lines. Top left: blue whale sounds for all site-years. Top right: fin whale sounds for all site-years, excluding ElephantIsland2014 (see “Discussion” for explanation of this exclusion). Black line and black box show the kernel of the spectrogram correlation detector and time–frequency bounds for the spectrogram energy-sum detector respectively. For clarity, fin whale 20 Hz with higher frequency components (Bp-20Plus) are shown as point clouds at the minimum and maximum frequencies rather than vertical lines. Bottom left: unidentified sounds with colours representing each site.

Signal-to-noise ratio (SNR), as described by Lurton (2010)60, was then measured for each manual annotation. In brief, the root mean square (RMS) signal-plus-noise power, \(Z_{s+n}\), was measured for the full duration of each annotation over the frequency band of interest: 17–29 Hz for Bm-Ant-A, Bm-Ant-B, and Bm-Ant-Z, and 20–30 Hz for Bp-20 Hz and Bp-20Plus. Since some analysts marked time–frequency boundaries more tightly than others, a buffer of 1 s was then placed before and after the annotation to ensure that no residual signal was included in the measurement of noise. The noise measurement period, \(t_{noise}\), had the same total duration as the annotation but was split evenly before and after the buffers (i.e. the noise period was \(d/2\) s before and \(d/2\) s after the buffer, where \(d\) is the duration of the manual annotation). RMS noise power, \(Z_n\), and the standard deviation of noise power, \(\sqrt{\Sigma_n^2}\) (the square root of the noise-power variance \(\Sigma_n^2\)), were measured over \(t_{noise}\) in the same band of interest. Finally, the SNR in dB was calculated as:

\[ \mathrm{SNR} = 10\log_{10}\left(\frac{Z_{s+n} - Z_n}{\sqrt{\Sigma_n^2}}\right) \]
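The windowing described above can be sketched as follows. This is a minimal illustration under stated assumptions: the input is a hypothetical 1-D series of band-limited power samples (e.g. squared band-passed pressure) at a known rate, and the SNR takes the Lurton-style form \(10\log_{10}\left((Z_{s+n} - Z_n)/\sqrt{\Sigma_n^2}\right)\), with the noise statistics computed from the two \(d/2\) windows outside the 1 s buffers.

```python
import numpy as np


def annotation_snr(power, rate, start, end, buffer_s=1.0):
    """SNR (dB) of one annotation from band-limited power samples (hypothetical helper).

    power: 1-D array of band-limited power samples at `rate` Hz
    start, end: annotation bounds in seconds
    """
    d = end - start

    def window(t0, t1):
        i0, i1 = int(round(t0 * rate)), int(round(t1 * rate))
        if i0 < 0 or i1 > len(power):
            raise ValueError("measurement window falls outside the recording")
        return power[i0:i1]

    z_sn = window(start, end).mean()  # signal-plus-noise power
    # Noise: d/2 before the leading buffer and d/2 after the trailing buffer
    noise = np.concatenate([
        window(start - buffer_s - d / 2, start - buffer_s),
        window(end + buffer_s, end + buffer_s + d / 2),
    ])
    z_n = noise.mean()
    sigma_n = noise.std()  # square root of the noise-power variance
    if z_sn <= z_n or sigma_n == 0:
        return float("nan")  # SNR undefined when no excess power is present
    return 10 * np.log10((z_sn - z_n) / sigma_n)
```

Returning NaN when the annotation carries no excess power keeps faint or mis-bounded annotations visible as missing values rather than silently producing a log of a negative number.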

Automated detectors

In order to demonstrate the utility of the annotated library and compare the site-specific performance of automated detection algorithms, we characterised the performance of two automated detectors commonly used for detecting sounds of Antarctic blue and fin whales for each of the sites in the annotated library: an energy sum detector and a spectrogram correlation detector. Energy sum detectors rely only on knowledge of the duration and frequency band of the call, so they can generally be more flexible if calls are variable within the detection band. The spectrogram correlation detector relies on a priori knowledge of the shape of the call in the time–frequency domain, and thus performs better when calls are highly stereotyped, with relatively little variation in shape from one call to the next. These two types of detectors were chosen to demonstrate that the library was suitable for different types of detectors, not because we believed they were optimal for their respective tasks.

For fin whale 20 Hz pulses (both Bp-20 Hz and Bp-20Plus classifications), we applied an energy sum detector38 that targeted the 20 Hz pulse of fin whales by summing the energy in each spectrogram slice over the band from 15 to 30 Hz. That is, the detection score at each time step was the sum of the squared values of all spectrogram frequency bins in that band (after noise normalisation). In addition to the threshold for summed energy, minimum and maximum times over threshold of 0.5 and 2.5 s, respectively, and a minimum time between detections of 0.5 s were used as criteria for detection of individual fin whale 20 Hz pulses.
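The energy sum logic can be sketched in a few lines. This is not PAMGuard's implementation: the function and its arguments are hypothetical, the input is assumed to be an already noise-normalised spectrogram, and the minimum-gap rule (discarding a run that starts less than 0.5 s after the previous accepted detection ended) is our own reading of "minimum time between detections".

```python
import numpy as np


def energy_sum_detect(spec, freqs, frame_dt, threshold, band=(15.0, 30.0),
                      min_dur=0.5, max_dur=2.5, min_gap=0.5):
    """Return (start_s, end_s) detections from a noise-normalised spectrogram (hypothetical helper).

    spec: array of shape (n_freqs, n_frames); freqs: bin centres (Hz); frame_dt: time step (s)
    """
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    score = (spec[in_band] ** 2).sum(axis=0)  # summed in-band energy per time step
    above = score > threshold
    detections, i, n = [], 0, len(above)
    while i < n:
        if not above[i]:
            i += 1
            continue
        j = i
        while j < n and above[j]:  # extend the run of frames over threshold
            j += 1
        start, end = i * frame_dt, j * frame_dt
        long_enough = min_dur <= end - start <= max_dur
        far_enough = not detections or start - detections[-1][1] >= min_gap
        if long_enough and far_enough:
            detections.append((start, end))
        i = j
    return detections
```

The time-over-threshold limits reject both brief transients and long broadband events (such as ice noise) that happen to exceed the energy threshold.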

For Antarctic blue whale song (i.e. classifications of Bm-Ant-A, Bm-Ant-B, and Bm-Ant-Z), we applied a spectrogram correlation detector37 that targeted Bm-Ant-Z calls but was also effective at detecting Bm-Ant-A and Bm-Ant-B, since these call types are essentially each a subset of the full Z-call. The detection score for the spectrogram cross-correlation detector was the magnitude of the 2D cross-correlation between the correlation kernel and the spectrogram at each time step. Thus, threshold values are in somewhat arbitrary units of ‘recognition score’, which is the result of cross-correlation between the normalised spectrogram and the correlation kernel. In addition to a detection score threshold, a minimum time over threshold and a minimum time between calls were also used37.
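The recognition score can be illustrated with a simplified kernel. In the sketch below (a hypothetical stand-in, not the published kernel of ref. 37, which uses a smoothly shaped cross-section around the contour), cells within a chosen bandwidth of the expected call contour are weighted +1 and all others −1, and each kernel column is made zero-mean so that a flat spectrogram scores zero. The score at each time step is the sum of the element-wise product of kernel and spectrogram.

```python
import numpy as np


def contour_kernel(freqs, contour_hz, bandwidth=1.0):
    """Zero-mean kernel following an expected call contour (hypothetical, simplified).

    contour_hz: expected call frequency (Hz) at each kernel time step
    """
    dist = np.abs(freqs[:, None] - np.asarray(contour_hz, dtype=float)[None, :])
    kernel = np.where(dist <= bandwidth, 1.0, -1.0)
    # Remove each column's mean so uniform background contributes nothing
    return kernel - kernel.mean(axis=0, keepdims=True)


def spectrogram_correlation(spec, kernel):
    """Recognition score at each time lag: sum of kernel * spectrogram over the kernel footprint."""
    n_f, n_t = kernel.shape
    if spec.shape[0] != n_f:
        raise ValueError("kernel and spectrogram must share frequency bins")
    n_out = spec.shape[1] - n_t + 1
    return np.array([np.sum(kernel * spec[:, i:i + n_t]) for i in range(n_out)])
```

For a Z-call kernel, `contour_hz` would trace units A, B, and C; the call frequency (which drifts downward over years) is what ties the kernel to the unit-A frequency parameter.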

Detectors were run on the annotated library’s subsets of recordings for each site using Pamguard Version 2.01.0339. Each detector was applied to the subsample of data for each site using a range of thresholds (determined empirically) in order to create receiver operating characteristic (ROC) and precision-recall (PR) curves for each site61,62.

Feature extraction and detector design

The spectrogram correlation and energy sum detectors were parameterised by the time and frequency properties of calls, namely the duration and frequency of each unit of each call. The specific time–frequency properties that we used for each detector were chosen based on published descriptions of calls. The detector parameters were validated by simple comparison with measurements from manual annotations, specifically the 5th and 95th percentiles of the energy distribution for each annotation (Fig. 5).

The mean duration of all manual annotations for a given classification, \(\overline{ d}\), was used to determine the time boundaries for each automated detection. A “refractory period” of length \(\overline{ d}\) was applied after each detection to prevent new detections from overlapping existing detections. The refractory period prevented multipath arrivals (e.g. reverberation from the seabed and surface that can arrive before or after the detection) from being detected by the automated detector. However, the refractory period had the downside of preventing legitimate detection of calls from different animals that arrived within \(\overline{ d}\) seconds of each other. This was believed to be a prudent trade-off because multipath arrivals appeared to be far more common than overlapping calls from two different animals. Furthermore, because automated detections could not overlap, the total number of possible automated detections (and hence true negative and false positive rates) could be calculated from the total duration of the recording, \(\overline{ d}\), the refractory period, and the total duration of all the manual annotations.
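A minimal sketch of the refractory logic and of the count of possible detections follows. Both helpers are hypothetical, and two details are our assumptions rather than statements from the text: the refractory period is taken to start when a detection of length \(\overline{ d}\) ends, and the number of possible detections is taken as the annotation-free time divided by \(\overline{ d}\) plus the refractory period.

```python
import math


def apply_refractory(starts, d_bar, refractory=None):
    """Keep non-overlapping detections from sorted candidate start times (hypothetical helper).

    Each detection spans d_bar seconds and is followed by a refractory period
    (default d_bar) during which new candidates are ignored.
    """
    refractory = d_bar if refractory is None else refractory
    kept, next_allowed = [], -math.inf
    for t in starts:
        if t >= next_allowed:
            kept.append((t, t + d_bar))
            next_allowed = t + d_bar + refractory
    return kept


def max_possible_detections(total_s, manual_s, d_bar, refractory=None):
    """Upper bound on non-overlapping detections in annotation-free time (assumed formula)."""
    refractory = d_bar if refractory is None else refractory
    return math.floor((total_s - manual_s) / (d_bar + refractory))
```

The second count is what makes a false positive rate computable for a detector, since the number of "negative opportunities" is otherwise undefined for continuous audio.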

Noise normalisation was applied to the spectrogram prior to automated detection. The noise normalisation algorithm was Pamguard’s ‘Average Subtraction’ algorithm, and this involved subtracting a decaying average for each spectrogram frequency bin at each time step. Specific parameters for the fin whale 20 Hz pulse detector are described in Table 4 and Antarctic blue whale song in Table 5.
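The idea of subtracting a decaying average per frequency bin can be sketched as follows. This is an approximation of the concept, not PAMGuard's code: the exponential update form and the default update constant `alpha` are assumptions, and the current frame is subtracted before it is folded into the running background.

```python
import numpy as np


def average_subtraction(spec, alpha=0.02):
    """Subtract a decaying running average from each frequency bin (assumed update rule).

    spec: array of shape (n_freqs, n_frames); alpha: update constant (hypothetical default)
    """
    out = np.empty_like(spec, dtype=float)
    background = spec[:, 0].astype(float).copy()  # initialise from the first frame
    for j in range(spec.shape[1]):
        out[:, j] = spec[:, j] - background  # subtract the current background estimate
        background += alpha * (spec[:, j] - background)  # then update it with this frame
    return out
```

A small `alpha` makes the background adapt slowly, so a long blue whale call is not absorbed into the noise estimate before it ends; a larger value tracks changing noise (e.g. a building chorus) more quickly.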

To parameterise the blue whale detector, the equation

was used to determine the frequency (in Hz) of unit A of Antarctic blue whale calls. In this equation, derived from14, \(f_a\) is the frequency of unit A and \(t\) is the number of days since 12 March 2002. For each site-year, \(t\) was evaluated at 1 June of that year for detector parameters that required an estimate of \(f_a\).
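The value of \(t\) for a site-year is simple date arithmetic. The sketch below computes only \(t\) (the frequency equation itself is not reproduced here); the function name is hypothetical.

```python
from datetime import date

REFERENCE_DATE = date(2002, 3, 12)  # t = 0 for the unit-A frequency relation


def days_since_reference(year):
    """Number of days t from 12 March 2002 to 1 June of the given site-year (hypothetical helper)."""
    return (date(year, 6, 1) - REFERENCE_DATE).days
```

For example, a 2014 site-year uses t = 4464 days.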

Evaluation of detector performance

Detections from the automated detectors were matched to the human analyst’s annotations by comparing the start and end times of all pairs of manual and automated detections. Detections were considered a match if there was any time overlap between manual and automated observations. This criterion created the potential for duplicate matches between multiple automated and manual annotations. Duplicates were identified and labelled, but were counted as neither true positives nor false positives when calculating ROC and precision-recall curves.

For each threshold, automated detections were tabulated into a confusion matrix of true and false positives, from which their respective rates, precision, and recall were calculated. ROC and precision-recall curves for each site and detector were then created from each set of true and false positives (Fig. 7).
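The matching and tabulation for a single threshold can be sketched as below. This is a hypothetical helper under stated assumptions: any time overlap counts as a match; an automated detection that overlaps only already-matched annotations is a duplicate and excluded from both true and false positives; recall is the fraction of manual annotations matched; and the false positive rate divides by an externally supplied number of negative opportunities.

```python
def overlaps(a, b):
    """True if intervals (start, end) a and b share any time."""
    return a[0] < b[1] and b[0] < a[1]


def evaluate(manual, auto, n_negative_opportunities):
    """Score automated detections against manual annotations at one threshold (hypothetical helper)."""
    matched_manual = set()
    tp = fp = duplicates = 0
    for a in auto:
        hits = [i for i, m in enumerate(manual) if overlaps(a, m)]
        if not hits:
            fp += 1
        elif all(i in matched_manual for i in hits):
            duplicates += 1  # neither a true nor a false positive
        else:
            tp += 1
            matched_manual.update(hits)
    return {
        "tp": tp,
        "fp": fp,
        "duplicates": duplicates,
        "recall": len(matched_manual) / len(manual) if manual else float("nan"),
        "precision": tp / (tp + fp) if tp + fp else float("nan"),
        "fpr": fp / n_negative_opportunities,
    }
```

Running the detector over a range of thresholds and calling this once per threshold gives the (false positive rate, recall) pairs of an ROC curve and the (recall, precision) pairs of a PR curve.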

To investigate the relationship between automated detection and SNR, a generalised additive model (GAM)63 was fitted using results from the automated detection process. For each manually detected call, SNR and whether or not the call was automatically detected were recorded. Specifically, each manual annotation was assigned a value of 1 when any automated detection matched it, and a value of 0 when no automated detection matched. These outcomes were modelled as the response of a logistic regression with SNR as a predictor, using a GAM with a binomial error distribution and a logit link function. The GAM was fitted separately for each site using the default number of knots in the package ‘mgcv’63 in R version 3.6.164.
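The study fitted a GAM with 'mgcv' in R. As a simpler stand-in (not the authors' model), the sketch below fits an ordinary logistic regression of the 0/1 match outcome on SNR using Newton's method in NumPy; a GAM would replace the straight-line SNR term on the logit scale with a smooth function. Function names are hypothetical.

```python
import numpy as np


def fit_logistic(snr_db, detected, n_iter=25):
    """Fit P(detected) = 1 / (1 + exp(-(b0 + b1 * SNR))) by Newton-Raphson (linear stand-in for a GAM)."""
    snr_db = np.asarray(snr_db, dtype=float)
    X = np.column_stack([np.ones_like(snr_db), snr_db])
    y = np.asarray(detected, dtype=float)
    beta = np.zeros(2)
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        w = p * (1.0 - p)  # binomial variance weights
        grad = X.T @ (y - p)
        hess = X.T @ (X * w[:, None])
        beta = beta + np.linalg.solve(hess, grad)
    return beta


def detection_probability(beta, snr_db):
    """Predicted probability that a call at the given SNR is automatically detected."""
    return 1.0 / (1.0 + np.exp(-(beta[0] + beta[1] * np.asarray(snr_db, dtype=float))))
```

The straight-line form forces a symmetric S-shaped curve; the GAM used in the study can instead capture plateaus or non-monotonic behaviour at low SNR, which is why it was preferred there.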

Results

Distribution of annotations throughout the library

The annotated library consisted of 1880.25 h (audio duration) of annotated data across 11 site-years and 7 sites. In total, there were 105,161 annotations across all sites, though the annotations were not evenly distributed by site or by classification (Table 6). Bm-Ant-A was the most numerous annotation with 24,363 manual detections in total, while Bm-Ant-Z was the least numerous with 2,515 manual detections in total. Ross Sea 2014 had the fewest annotations of all site-years with only 359 annotations (104 of Bm-Ant-A, and the remainder unidentified). Elephant Island 2014 had the most annotations of all site-years with 21,438 in total, including unidentified sounds.

The percentage of hours with each type of annotation was also variable across sites (Table 6). Bm-Ant-A had the highest percentage across all sites, ranging from 0.6 to 91.2% of hours, while Bp-20Plus had the lowest proportions across all sites, with no Bp-20Plus detections at Casey 2014 or Ross Sea 2014. Antarctic blue whale classifications were generally present in a higher percentage of hours than fin whale sounds across most site-years (Table 6).

Description of classification features

Within each classification, the 5th and 95th percentiles of the frequency bounds and durations were similar across sites, with a few notable exceptions. Annotations of Bp-20 Hz, Bp-20Plus, and Bp-Downsweep from Elephant Island 2014 appeared to have longer durations than these classifications from other sites. However, visual comparison of these annotations suggests that this difference arose from the way the analyst marked annotations (i.e. more generous time boundaries than other analysts) rather than from a true difference in the duration of the sound. This suggests that our use of the 90% energy duration did not provide a measure of duration that was fully robust against analyst variability. Thus, different features or measures of duration may be more robust or appropriate for developing automated detectors and/or classifiers.

The stereotyped calls of Antarctic blue whales (Bm-Ant-A, Bm-Ant-B, and Bm-Ant-Z) and those of fin whales (Bp-20 Hz, Bp-20Plus) are well described in the scientific literature and are very distinctive from one another, and this was reflected in the plots of their 90% duration and 5th–95th percentile frequency bounds. In contrast, the properties of Bm-D and Bp-Downsweep have not been as well defined in the literature, and these calls have forms that appear very similar to each other. Thus these classes have a higher potential for confusion and a higher likelihood of being marked as unidentified. As a result, the time–frequency bounds of unidentified calls combined two categories: (1) calls that clearly did not fit into any of the defined classifications, and (2) calls that were intermediate between Bm-D and Bp-Downsweep. However, when annotations are restricted to signals that can be definitively attributed to one species or the other, the two call types do appear to be distinguishable using duration and frequency (Fig. 5). This is an instance where having multiple experienced analysts annotate the same dataset could help establish clear guidelines for distinguishing between the two call types. While decisions to annotate or detect only signals that are clearly attributable to a known species are necessary and justifiable, further research on acoustic behaviour would be required to determine whether this has downstream implications for making accurate population abundance estimates.

In contrast to the duration measurements, the upper frequency limit of the Bp-20Plus call type did show true differences across sites, revealing geographic separation similar to that described in previous studies15,18. Gedamke (2009)15 found that fin whales detected on recorders in the Indian Ocean (including sites south of 60° S) had higher-frequency components near 100 Hz, while fin whales detected in the Tasman Sea (Pacific Ocean, including sites south of 60° S) had higher-frequency components at 82 and 94 Hz. Širović et al. (2009)18 found that fin whale sounds recorded off the WAP and in the Scotia Sea had higher-frequency components around 90 Hz, while recordings off East Antarctica had higher-frequency components near 100 Hz. In our study, the Indian and Atlantic sectors had higher-frequency components around 100 Hz, while the WAP and Pacific sectors were around 90 Hz. Recordings investigated by Gedamke (2009)15 and Širović et al. (2009)18 were made from 2003 to 2007, whereas all but one of our recordings were made in 2013–2017. Thus, there appears to be decadal-scale stability in the broad geographic distribution and form of these sounds.

Temporal distribution of annotations within a site-year

In general, there were more annotations from February through May (late summer through autumn) than in other months, though there were several exceptions to this trend (Fig. 6). Bm-Ant-A had the maximum number of annotations in June–August at Elephant Island 2014 and Kerguelen 2014. Bm-Ant-Z also peaked in July at Kerguelen 2014. At Casey 2014, Bm-D annotations peaked in December, while at Elephant Island 2014 they peaked in October. At Elephant Island 2014, Bp-Downsweep annotations peaked in January.

Rate of annotations per season for each site and sound type. Rate is calculated as the total number of annotations in that season divided by the total effort (in hours) for that season. Antarctic blue whale tonal-sounds are in the left column. Fin whale 20 Hz pulses are in the middle column. Blue D, fin downsweeps, and unidentified calls are in the third column. Vertical scale may differ for each panel.
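The rate defined in this caption can be computed directly from a list of annotations and a table of recording effort. A minimal sketch, assuming annotations are available as (site, season, sound type) tuples and effort as hours per site and season; the data layout, season labels, and function name are hypothetical and do not describe the library's file format.

```python
from collections import Counter


def annotation_rates(annotations, effort_hours, sound_types):
    """Annotations per hour of effort for every (site, season, sound type).

    annotations  : iterable of (site, season, sound_type) tuples, one per annotation
    effort_hours : dict mapping (site, season) -> hours of annotated recording
    sound_types  : sound types to report; combinations with no annotations get rate 0
    """
    counts = Counter(annotations)
    rates = {}
    for (site, season), hours in effort_hours.items():
        if hours <= 0:
            raise ValueError(f"no effort recorded for {site}, {season}")
        for sound_type in sound_types:
            rates[(site, season, sound_type)] = counts[(site, season, sound_type)] / hours
    return rates
```

Reporting zero rates explicitly, rather than omitting empty combinations, keeps seasons with effort but no calls visible in the summary.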

While it is tempting to speculate on the drivers of these temporal trends, such analyses are beyond the scope of this work, whose aim was to create a dataset suitable for characterising automated detectors. Rather, the monthly numbers of annotations by site are plotted simply to describe the contents of the Annotated Library and to identify months or seasons that do and do not have enough annotations to allow characterisation of a detector. In that regard, there is a notable lack of fin whale annotations (Bp-20 Hz, Bp-20Plus, and Bp-Downsweep) from July to December.

An example of using the annotated data to examine the performance of automated detectors

ROC, precision-recall, and SNR

ROC and PR curves indicated that detector performance was fair to poor for these datasets. The curves varied by site for both the blue and fin whale detectors, with some sites much worse than others (Fig. 7). For example, at a false positive rate of 1% (~2.8 false positives per hour), the true positive rate of the blue whale detector ranged from 8 to 55% across sites. At the same false positive rate (~14.4 false positives per hour), the true positive rate of the fin whale detector ranged from 1 to 76%.
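Operating points such as "true positive rate at a 1% false positive rate" can be read off an empirical ROC curve. The sketch below assumes each detector output is a scored trial labelled true or false against the annotations. It sweeps the threshold over the observed scores, picks the threshold whose false positive rate is nearest the target (breaking ties in favour of the higher true positive rate), and converts a false positive rate into false positives per hour given a number of negative trials per hour. As plain arithmetic on the figures above, 2.8 false positives per hour at a 1% false positive rate would correspond to roughly 280 negative trials per hour; the trial definition is not restated in this section, so `negative_trials_per_hour` and all function names are assumptions.

```python
import numpy as np


def roc_points(scores, labels):
    """Empirical ROC: (fpr, tpr, thresholds), thresholds swept from high to low."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    thresholds = np.unique(scores)[::-1]
    tpr = np.array([(pos >= t).mean() for t in thresholds])
    fpr = np.array([(neg >= t).mean() for t in thresholds])
    return fpr, tpr, thresholds


def operating_point(scores, labels, target_fpr=0.01):
    """Threshold whose FPR is nearest target_fpr; ties go to the higher TPR."""
    fpr, tpr, thresholds = roc_points(scores, labels)
    dist = np.abs(fpr - target_fpr)
    candidates = np.flatnonzero(dist == dist.min())
    i = candidates[np.argmax(tpr[candidates])]
    return fpr[i], tpr[i], thresholds[i]


def false_positives_per_hour(fpr, negative_trials_per_hour):
    """Expected false positives per hour, assuming fixed-length negative trials."""
    return fpr * negative_trials_per_hour
```

Precision at the chosen threshold follows from the same counts as TP / (TP + FP), which is why PR curves are more sensitive than ROC curves to how rare calls are at a given site.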

In addition to the variability in detector performance, the distribution of SNR also varied across sites, with the combined Bp-20 Hz and Bp-20Plus distributions showing more variability than the combined Bm-Ant-A, Bm-Ant-B, and Bm-Ant-Z distributions (Fig. 8). The modelled probability of detection at a 1% false positive rate was similar across sites at high SNR but more variable across sites at low SNR (e.g. < 0 dB) (Fig. 9).

Empirical cumulative distribution of signal-to-noise ratio (SNR) of manually annotated blue whale song (left) and manually annotated fin whale pulses (right) for each site. Blue whale distributions include calls classified as Bm-Ant-A, Bm-Ant-B, or Bm-Ant-Z. Fin whale distributions include calls classified as Bp-20 Hz or Bp-20Plus.
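Each curve in this figure is an empirical cumulative distribution function (ECDF) of annotation SNR. A minimal sketch, assuming per-site SNR values in dB are already available, for example as a dict keyed by site-year; the helper names are hypothetical. `fraction_below` gives the share of annotations under a chosen SNR, such as the 0 dB level below which the modelled probability of detection becomes more variable across sites.

```python
import numpy as np


def ecdf(values):
    """Sorted values and their cumulative proportions, suitable for a step plot."""
    x = np.sort(np.asarray(values, dtype=float))
    y = np.arange(1, x.size + 1) / x.size
    return x, y


def ecdf_by_site(snr_by_site):
    """ECDF of annotation SNR (dB) for each site, e.g. {"Casey 2014": [...], ...}."""
    return {site: ecdf(snr) for site, snr in snr_by_site.items()}


def fraction_below(values, threshold_db=0.0):
    """Share of annotations with SNR strictly below threshold_db."""
    return float(np.mean(np.asarray(values, dtype=float) < threshold_db))
```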

Probability of automated detection of an annotated call as a function of SNR. Specifically, these are the marginal effects of SNR from the binomial GAM in Eq. (3). Left: blue whale annotations (any of Bm-Ant-A, Bm-Ant-B, Bm-Ant-Z) for the spectrogram correlation detector using the threshold nearest to a false positive rate of 0.01. Right: Bp-20 annotations (either Bp-20 Hz or Bp-20Plus) for the energy sum detector using the threshold nearest to a false positive rate of 0.01. Shading shows 95% confidence intervals for each site, and rug plots show the distribution of the data.
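The curves in this figure come from a binomial GAM (Eq. (3)) with a smooth, site-specific effect of SNR. As a much simpler stand-in, and not the authors' model, the sketch below fits an ordinary logistic regression with a linear SNR term for a single site by Newton-Raphson (equivalently, iteratively reweighted least squares). Each annotation is treated as a Bernoulli trial that either was or was not detected at the chosen threshold. A GAM replaces the linear term with a penalised smooth, which matters when the detection curve is not logistic-linear in SNR.

```python
import numpy as np


def fit_logistic(snr, detected, n_iter=50, tol=1e-10):
    """Fit P(detected) = 1 / (1 + exp(-(b0 + b1 * snr))) by Newton-Raphson."""
    X = np.column_stack([np.ones(len(snr)), np.asarray(snr, dtype=float)])
    y = np.asarray(detected, dtype=float)
    beta = np.zeros(2)
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(X @ beta)))
        w = p * (1.0 - p)              # Bernoulli variance, i.e. the IRLS weights
        grad = X.T @ (y - p)           # gradient of the log-likelihood
        hess = X.T @ (X * w[:, None])  # Fisher information
        step = np.linalg.solve(hess, grad)
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    return beta


def prob_detection(beta, snr):
    """Fitted probability of automated detection at the given SNR (dB)."""
    return 1.0 / (1.0 + np.exp(-(beta[0] + beta[1] * np.asarray(snr, dtype=float))))
```

Fitting this separately per site gives one curve per site, which is the simplest way to see the cross-site spread at low SNR; the GAM additionally supplies confidence bands and flexible curve shapes.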

Discussion

We created an annotated library of blue and fin whale sounds that spans four circumpolar Antarctic recording regions, five different years (2005, 2013, 2014, 2015, 2017), and five different types of instrument. The acoustic data in our library come from a variety of different data collection campaigns conducted by laboratories from five nations.

The distribution of calls in our library varied considerably across sites, years, and species. Antarctic blue whale sounds, particularly Bm-Ant-A, were the most numerous and were well represented at all sites and over most of the year. Fin whale sounds were much more seasonal in the annotated library, with most annotations in late summer and autumn and few during the rest of the year. Fin whale Bp-20Plus sounds also revealed some degree of biogeographic separation, with calls in the Atlantic and Indian sectors having higher upper-frequency components than those in the Pacific and WAP sectors. The annotations in the library form a representative ground-truth dataset that can be used to extract the features of each call type and to train and characterise the performance of automated detectors.

Detector performance

To test the utility of the library, we characterised the performance of a spectrogram correlation detector for blue whale calls and an energy sum detector for fin whale calls. The performance of the automated detectors varied by site-year. Neither detector performed particularly well, and some sites and years showed much worse performance than others (Fig. 7). Differences in detector performance broadly followed differences in SNR across sites such that sites with lower SNR had worse performance than those with higher SNR (Fig. 8). Across sites, the automated detectors showed greater variability at low SNR than at high SNR (Fig. 9).
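For readers unfamiliar with spectrogram correlation (Mellinger & Clark, 2000), the core idea can be sketched as follows. A kernel is built around the expected time–frequency contour of a call, using a hat-shaped cross-section that is positive on the contour and negative on either side. The kernel is then slid along the spectrogram, and the correlation score peaks where the call is present. The linear contour, the `bandwidth` parameter, the illustrative 28 Hz tone in the usage note, and the function names are assumptions for demonstration only, not the detector settings used in this study. Practical implementations typically also normalise the spectrogram for background noise before correlating.

```python
import numpy as np


def contour_kernel(freqs, n_frames, f_start, f_end, bandwidth):
    """Kernel following a linear contour from f_start to f_end (Hz) over n_frames.

    Each frame uses the hat-shaped cross-section (1 - x^2/s^2) * exp(-x^2 / (2 s^2)),
    where x is the distance (Hz) from the contour and s is `bandwidth`.
    """
    contour = np.linspace(f_start, f_end, n_frames)  # expected frequency per frame
    x = freqs[:, None] - contour[None, :]            # shape (n_freqs, n_frames)
    s2 = bandwidth ** 2
    return (1.0 - x ** 2 / s2) * np.exp(-(x ** 2) / (2.0 * s2))


def spectrogram_correlation(spec, kernel):
    """Slide the kernel along time and return one score per start frame."""
    n_frames = kernel.shape[1]
    n_out = spec.shape[1] - n_frames + 1
    return np.array([np.sum(kernel * spec[:, i:i + n_frames]) for i in range(n_out)])
```

For example, with `freqs = np.arange(0.0, 50.0, 0.5)` and a spectrogram containing a 28 Hz tone in frames 40–59, `spectrogram_correlation(spec, contour_kernel(freqs, 20, 28.0, 28.0, 1.0))` peaks at frame 40. A detection is declared wherever the score exceeds a threshold, which is the quantity swept to draw ROC and PR curves.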

Characterising the performance of an automated detector and estimating the probability of automatic detection as a function of SNR using a representative subset of data, as we have done here, can be important steps towards meaningful comparisons of animal sounds across sites and over time43,46. In addition to performance of the detector, differences in call density (a useful metric for such comparisons) can arise from site-specific factors such as differences in instrumentation (including depth)54, analyst variability53,65, ambient and local noise sources36,66, propagation46,67, and animal behaviour43. These factors are not mutually exclusive, and can interact in a complex manner. Addressing and accounting for how each of these factors affects the call density is beyond the scope of this manuscript, but is a requirement if one wants to make comparisons of acoustic detections that meaningfully address biological questions of distribution and temporal trends. The library and methods we present here for assessing the performance of the detectors are a step away from estimating call-density, which in turn is a step away from estimating animal density68.
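To make these steps concrete, one widely used formulation from the cue-counting framework reviewed by Marques et al.68 is shown below. It is included only to show where detector characterisation enters, through the estimated false positive proportion and the average probability of detection. It is not a method applied in this paper, and estimating each term requires accounting for the site-specific factors listed above.

```latex
\hat{D}_{\mathrm{call}} = \frac{n_c\,(1-\hat{c})}{K\,\pi w^{2}\,\hat{P}\,T},
\qquad
\hat{D}_{\mathrm{animal}} = \frac{\hat{D}_{\mathrm{call}}}{\hat{r}}
```

Here $n_c$ is the number of detected calls, $\hat{c}$ the estimated proportion of those detections that are false positives, $K$ the number of recorders, $w$ the maximum detection radius, $\hat{P}$ the average probability of detecting a call produced within $w$, $T$ the monitoring time, and $\hat{r}$ the call production rate per animal per unit time.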

None of the passive acoustic studies of Antarctic blue or fin whales to date (listed in Table 1) has fully reported the performance of its detector on a representative subsample of its data. The methods we have presented here for characterising the performance of a detector on a representative subsample of data should be regarded as the minimum reporting standard for future studies that use automated detectors to study Antarctic blue and fin whale calls. Specifically, reporting should include: all parameters of the automated detector, including any noise pre-processing steps; the distribution and SNR of ground-truth detections throughout the dataset; and the true and false positive rates and/or precision and recall of the detector for a representative sample of the data.
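The reporting items listed above can be captured as a simple structured record that accompanies a study's results. A minimal sketch; the class, field names, and example values are hypothetical and only mirror the items in this paragraph. `None` marks an item that has not been reported, while an empty list for `noise_preprocessing` means that no pre-processing was applied.

```python
from dataclasses import dataclass, fields
from typing import Optional

# Either performance summary satisfies the "and/or" in the reporting list.
_PERFORMANCE_ITEMS = {"tpr_fpr", "precision_recall"}


@dataclass
class DetectorReport:
    """Hypothetical record of the minimum reporting items for an automated detector."""
    detector_parameters: Optional[dict] = None  # every detector setting
    noise_preprocessing: Optional[list] = None  # ordered pre-processing steps ([] if none)
    annotation_counts: Optional[dict] = None    # ground-truth distribution, e.g. per site-year
    annotation_snr_db: Optional[dict] = None    # ground-truth SNR, e.g. per site-year
    tpr_fpr: Optional[list] = None              # (threshold, tpr, fpr) on a representative sample
    precision_recall: Optional[list] = None     # (threshold, precision, recall) on that sample

    def missing_items(self):
        """Names of reporting items that have not been supplied."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]

    def is_complete(self):
        """All non-performance items supplied, plus TPR/FPR and/or precision-recall."""
        missing = set(self.missing_items())
        return not (missing - _PERFORMANCE_ITEMS) and bool(_PERFORMANCE_ITEMS - missing)
```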

We hope the open-access annotated library we have presented here can provide a base dataset upon which to develop improved detectors, i.e., detectors with higher true positive rates and lower false positive rates. Here we have extracted duration and frequency measurements from annotations, but the library can readily be used to extract more complex features, such as pitch tracks69 or other time–frequency features70, to train machine learning algorithms37,71,72, deep neural networks73, or any other advanced detectors that may perform better than the spectrogram correlation detector. Better detectors would not only reduce a source of uncertainty in estimating call density but would also reduce the analyst effort required to verify true positives and account for false positives.

Future development of this dataset will aim to expand the annotated library to serve as a test-bed for subsequent analyses that address the issues of noise, detection range, and analyst variability to produce standardised outputs that are appropriate for circumpolar comparisons of call-density. This additional development would entail (1) collating pressure calibration details for noise analysis at each site-year, (2) estimating detection range throughout each site-year and (3) having multiple analysts annotate the same subsets of data for the purposes of quantifying analyst bias and variability.
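As a possible starting point for item (3), agreement between two analysts who annotated the same data can be summarised by how many of one analyst's annotations overlap in time with at least one of the other's. A minimal sketch, assuming annotations are reduced to (start, end) times in seconds and that any temporal overlap counts as a match; a fuller comparison would also consider frequency bounds and call-type labels. The function names are hypothetical.

```python
def overlaps(a, b):
    """True if time intervals a = (start, end) and b = (start, end) overlap."""
    return a[0] < b[1] and b[0] < a[1]


def match_fraction(reference, other):
    """Fraction of `reference` annotations overlapped by at least one annotation in `other`."""
    if not reference:
        return float("nan")
    hits = sum(any(overlaps(r, o) for o in other) for r in reference)
    return hits / len(reference)


def pairwise_agreement(analyst_a, analyst_b):
    """Match fraction in both directions; asymmetry suggests one analyst marks more calls."""
    return match_fraction(analyst_a, analyst_b), match_fraction(analyst_b, analyst_a)
```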

Conclusions

We created an annotated library of blue and fin whale sounds that spans four circumpolar Antarctic recording regions, five different years (2005, 2013, 2014, 2015, 2017), and five different types of instrument. The annotations in the library form a representative ground-truth dataset, and we demonstrate how to train, test, and characterise the performance of two common automated detectors using the library. The annotated library we present here can serve as a benchmark upon which detectors can be developed, compared, and improved. It may also serve as a base dataset for developing additional analytical techniques that enable robust comparisons of acoustic detections of blue and fin whales across diverse circumpolar sites and over long spans of time.

We encourage further contributions of data and annotations to help expand the library, and in the future we hope to include annotations of sounds from additional Antarctic species, as well as data from other recording locations throughout the Southern Hemisphere. The IWC-SORP/SOOS Acoustic Trends Annotated Library is freely available from http://data.aad.gov.au/metadata/records/AcousticTrends_BlueFinLibrary74. The larger datasets from which the Annotated Library was derived are available under the data sharing provisions of the Antarctic Treaty (1959), and these can be requested by contacting the authors and/or institutions that hold these data.

Code availability

Code available in the IWC-SORP/SOOS Annotated Library (https://data.aad.gov.au/metadata/records/fulldisplay/AcousticTrends_BlueFinLibrary).

Data availability

Data used in this study are publicly available under a Creative Commons 4.0 Attribution licence. They can be accessed via the Australian Antarctic Data Centre at http://data.aad.gov.au/metadata/records/AcousticTrends_BlueFinLibrary.

References

Mellinger, D. K., Stafford, K. M., Moore, S. E., Dziak, R. P. & Matsumoto, H. An overview of fixed passive acoustic observation methods for cetaceans. Oceanography 20, 36–45 (2007).

Van Parijs, S. et al. Management and research applications of real-time and archival passive acoustic sensors over varying temporal and spatial scales. Mar. Ecol. Prog. Ser. 395, 21–36 (2009).

Sousa-Lima, R. S., Norris, T. F., Oswald, J. N. & Fernandes, D. P. A review and inventory of fixed autonomous recorders for passive acoustic monitoring of marine mammals. Aquat. Mamm. 39, (2013).

Van Opzeeland, I. et al. Towards collective circum-Antarctic passive acoustic monitoring: The Southern Ocean Hydrophone Network (SOHN). Polarforschung 83, 47–61 (2013).

Branch, T. A., Matsuoka, K. & Miyashita, T. Evidence for increases in Antarctic blue whales based on Bayesian modelling. Mar. Mammal Sci. 20, 726–754 (2004).

Branch, T. A. Abundance of Antarctic blue whales south of 60° S from three complete circumpolar sets of surveys. J. Cetacean Res. Manag. 9, 253–262 (2007).

Rocha, R. C. Jr., Clapham, P. J. & Ivashchenko, Y. Emptying the oceans: A summary of industrial whaling catches in the 20th century. Mar. Fish. Rev. 76, 37–48 (2015).

Branch, T. A. & Butterworth, D. S. Estimates of abundance south of 60° S for cetacean species sighted frequently on the 1978/79 to 1997/98 IWC/IDCR-SOWER sighting surveys. J. Cetacean Res. Manag. 3, 251–270 (2001).

Cooke, J. G. Balaenoptera musculus ssp. intermedia. IUCN Red List Threat. Species e.T41713A50226962 (2018). https://doi.org/10.2305/IUCN.UK.2018-2.RLTS.T41713A50226962.en.

Sears, R., Ramp, C., Douglas, A. & Calambokidis, J. Reproductive parameters of eastern North Pacific blue whales Balaenoptera musculus. Endanger. Species Res. 22, 23–31 (2013).

Rankin, S., Ljungblad, D. K., Clark, C. W. & Kato, H. Vocalisations of Antarctic blue whales, Balaenoptera musculus intermedia, recorded during the 2001/2002 and 2002/2003 IWC/SOWER circumpolar cruises, Area V Antarctica. J. Cetacean Res. Manag. 7, 13–20 (2005).

Watkins, W. A., Tyack, P., Moore, K. E. & Bird, J. E. The 20-Hz signals of finback whales (Balaenoptera physalus). J. Acoust. Soc. Am. 82, 1901–1912 (1987).

McDonald, M. A., Mesnick, S. L. & Hildebrand, J. A. Biogeographic characterisation of blue whale song worldwide: using song to identify populations. J. Cetacean Res. Manag. 8, 55–65 (2006).

Gavrilov, A. N., McCauley, R. D. & Gedamke, J. Steady inter and intra-annual decrease in the vocalization frequency of Antarctic blue whales. J. Acoust. Soc. Am. 131, 4476–4480 (2012).

Gedamke, J. Geographic variation in Southern Ocean fin whale song. Submitt. to Sci. Comm. Int. Whal. Comm. SC/61/SH16, 1–8 (2009).

Shabangu, F. W., Yemane, D., Stafford, K. M., Ensor, P. & Findlay, K. P. Modelling the effects of environmental conditions on the acoustic occurrence and behaviour of Antarctic blue whales. PLoS ONE 12, e0172705 (2017).

Širović, A. et al. Seasonality of blue and fin whale calls and the influence of sea ice in the Western Antarctic Peninsula. Deep Sea Res. Part II Top. Stud. Oceanogr. 51, 2327–2344 (2004).

Širović, A., Hildebrand, J. A., Wiggins, S. M. & Thiele, D. Blue and fin whale acoustic presence around Antarctica during 2003 and 2004. Mar. Mammal Sci. 25, 125–136 (2009).

Thomisch, K. et al. Spatio-temporal patterns in acoustic presence and distribution of Antarctic blue whales Balaenoptera musculus intermedia in the Weddell Sea. Endanger. Species Res. 30, 239–253 (2016).

Tripovich, J. S. et al. Temporal segregation of the Australian and Antarctic blue whale call types (Balaenoptera musculus spp.). J. Mammal. 1–8 (2015). https://doi.org/10.1093/jmammal/gyv065.

Dréo, R., Bouffaut, L., Leroy, E., Barruol, G. & Samaran, F. Baleen whale distribution and seasonal occurrence revealed by an ocean bottom seismometer network in the Western Indian Ocean. Deep. Res. Part II Top. Stud. Oceanogr. 161, 132–144 (2019).

Bouffaut, L., Madhusudhana, S., Labat, V., Boudraa, A.-O. & Klinck, H. A performance comparison of tonal detectors for low-frequency vocalizations of Antarctic blue whales. J. Acoust. Soc. Am. 147, 260–266 (2020).

Bouffaut, L., Dréo, R., Labat, V., Boudraa, A.-O. & Barruol, G. Passive stochastic matched filter for Antarctic blue whale call detection. J. Acoust. Soc. Am. 144, 955–965 (2018).

Gedamke, J. & Robinson, S. M. Acoustic survey for marine mammal occurrence and distribution off East Antarctica (30–80°E) in January-February 2006. Deep Sea Res. Part II Top. Stud. Oceanogr. 57, 968–981 (2010).

Gedamke, J., Gales, N., Hildebrand, J. A. & Wiggins, S. Seasonal occurrence of low frequency whale vocalisations across eastern Antarctic and southern Australian waters, February 2004 to February 2007. Rep. SC/59/SH5 Submitt. to Sci. Comm. Int. Whal. Comm. Anchorage, Alaska SC/59, 1–11 (2007).

Leroy, E. C., Samaran, F., Bonnel, J. & Royer, J. Seasonal and diel vocalization patterns of Antarctic blue whale (Balaenoptera musculus intermedia) in the Southern Indian Ocean: a multi-year and multi-site study. PLoS ONE 11, e0163587 (2016).

Miller, B. S. et al. Validating the reliability of passive acoustic localisation: a novel method for encountering rare and remote Antarctic blue whales. Endanger. Species Res. 26, 257–269 (2015).

Miller, B. S. et al. Software for real-time localization of baleen whale calls using directional sonobuoys: A case study on Antarctic blue whales. J. Acoust. Soc. Am. 139, EL83–EL89 (2016).

Miller, B. S. et al. Circumpolar acoustic mapping of endangered Southern Ocean whales: Voyage report and preliminary results for the 2016/17 Antarctic Circumnavigation Expedition. Pap. SC/67a/SH03 Submitt. to Sci. Comm. 67a Int. Whal. Commission, Bled Slov. 18 (2017).

Samaran, F. et al. Seasonal and geographic variation of Southern blue whale subspecies in the Indian Ocean. PLoS ONE 8, e71561 (2013).

Samaran, F., Adam, O. & Guinet, C. Discovery of a mid-latitude sympatric area for two Southern Hemisphere blue whale subspecies. Endanger. Species Res. 12, 157–165 (2010).

Stafford, K. M., Fox, C. G. & Clark, D. S. Long-range acoustic detection and localization of blue whale calls in the northeast Pacific Ocean. J. Acoust. Soc. Am. 104, 3616–3625 (1998).

Weirathmueller, M. J. et al. Spatial and temporal trends in fin whale vocalizations recorded in the NE Pacific Ocean between 2003–2013. PLoS ONE 12, 1–24 (2017).

Harris, D., Matias, L., Thomas, L., Harwood, J. & Geissler, W. H. Applying distance sampling to fin whale calls recorded by single seismic instruments in the northeast Atlantic. J. Acoust. Soc. Am. 134, 3522–3535 (2013).

Morano, J. L. et al. Seasonal and geographical patterns of fin whale song in the western North Atlantic Ocean. J. Acoust. Soc. Am. 132, 1207–1212 (2012).

Socheleau, F.-X. et al. Automated detection of Antarctic blue whale calls. J. Acoust. Soc. Am. 138, 3105–3117 (2015).

Mellinger, D. K. & Clark, C. W. Recognizing transient low-frequency whale sounds by spectrogram correlation. J. Acoust. Soc. Am. 107, 3518–3529 (2000).

Mellinger, D. K. Ishmael 1.0 User’s Guide. (2001).

Gillespie, D. et al. PAMGUARD: Semiautomated, open source software for real-time acoustic detection and localisation of cetaceans. Proc. Inst. Acoust. 30, 54–62 (2008).

Figueroa, H. & Robbins, M. XBAT: an open-source extensible platform for bioacoustic research and monitoring. Comput. bioacoustics Assess. Biodivers. 143–155 (2008).

Balcazar, N. E. et al. Calls reveal population structure of blue whales across the southeast Indian Ocean and southwest Pacific Ocean. J. Mammal. gyv126 (2015). https://doi.org/10.1093/jmammal/gyv126.

Buchan, S. J., Hucke-Gaete, R., Stafford, K. M. & Clark, C. W. Occasional acoustic presence of Antarctic blue whales on a feeding ground in southern Chile. Mar. Mammal Sci. 34, 220–228 (2017).

Harris, D. V., Miksis-Olds, J. L., Vernon, J. A. & Thomas, L. Fin whale density and distribution estimation using acoustic bearings derived from sparse arrays. J. Acoust. Soc. Am. 143, (2018).

Aulich, M. G., McCauley, R. D., Saunders, B. J. & Parsons, M. J. G. Fin whale (Balaenoptera physalus) migration in Australian waters using passive acoustic monitoring. Sci. Rep. 9, 1–12 (2019).

Balcazar, N. E. et al. Using calls as an indicator for Antarctic blue whale occurrence and distribution across the southwest Pacific and southeast Indian Oceans. Mar. Mammal Sci. 33, 172–186 (2017).

Helble, T. A. et al. Site specific probability of passive acoustic detection of humpback whale calls from single fixed hydrophones. J. Acoust. Soc. Am. 134, 2556–2570 (2013).

McDonald, M. A., Hildebrand, J. A. & Mesnick, S. Worldwide decline in tonal frequencies of blue whale songs. Endanger. Species Res. 9, 13–21 (2009).

Leroy, E. C., Royer, J.-Y., Bonnel, J. & Samaran, F. Long-term and seasonal changes of large whale call frequency in the Southern Indian Ocean. J. Geophys. Res. Ocean. 1–13 (2018). https://doi.org/10.1029/2018JC014352.

Gavrilov, A. N., McCauley, R. D., Salgado-Kent, C., Tripovich, J. & Burton, C. L. K. Vocal characteristics of pygmy blue whales and their change over time. J. Acoust. Soc. Am. 130, 3651–3660 (2011).

Miller, B. S., Leaper, R., Calderan, S. & Gedamke, J. Red shift blue shift: Doppler shifts and seasonal variation in the tonality of Antarctic blue whale song. PLoS ONE 9, e107740 (2014).

Širović, A., Oleson, E. M., Buccowich, J., Rice, A. & Bayless, A. R. Fin whale song variability in southern California and the Gulf of California. Sci. Rep. 7, 1–11 (2017).

Nieukirk, S. L. et al. Sounds from airguns and fin whales recorded in the mid-Atlantic Ocean, 1999–2009. J. Acoust. Soc. Am. 131, 1102–1112 (2012).

Širović, A. Variability in the performance of the spectrogram correlation detector for North-east Pacific blue whale calls. Bioacoustics 25, 145–160 (2016).

Roch, M. A., Stinner-Sloan, J., Baumann-Pickering, S. & Wiggins, S. M. Compensating for the effects of site and equipment variation on delphinid species identification from their echolocation clicks. J. Acoust. Soc. Am. 137, 22–29 (2015).

Thomisch, K. et al. Effects of subsampling of passive acoustic recordings on acoustic metrics. J. Acoust. Soc. Am. 138, 267–278 (2015).

Pawlowicz, R. M_Map: A mapping package for Matlab. Version 1.4k (2019).

Center for Conservation Bioacoustics. Raven Pro: Interactive Sound Analysis Software. (2014).

Širović, A., Williams, L. N., Kerosky, S. M., Wiggins, S. M. & Hildebrand, J. A. Temporal separation of two fin whale call types across the eastern North Pacific. Mar. Biol. 160, 47–57 (2013).

Ou, H., Au, W. W. L., Oleson, E. M. & Rankin, S. Discrimination of frequency-modulated Baleen whale downsweep calls with overlapping frequencies. 137, 1 (2016).

Lurton, X. Underwater acoustic wave propagation. in An Introduction to Underwater Acoustics Principles and Applications 13–74 (Springer-Verlag, 2010).

Dawe, R. L. Detection Threshold Modelling Explained. http://oai.dtic.mil/oai/oai?verb=getRecord&metadataPrefix=html&identifier=ADA335337 (1997).

Davis, J. & Goadrich, M. The relationship between precision-recall and ROC curves. Proc. 23rd Int. Conf. Mach. Learn.—ICML’06 233–240 (2006). https://doi.org/10.1145/1143844.1143874.

Wood, S. N. Generalized Additive Models: An Introduction with R, Second Edition (Chapman and Hall/CRC, 2017). https://doi.org/10.1201/9781315370279.

R Core Team. R: A language and environment for statistical computing. (2019).

Leroy, E. C., Thomisch, K., Royer, J., Boebel, O. & Van Opzeeland, I. On the reliability of acoustic annotations and automatic detections of Antarctic blue whale calls under different acoustic conditions. J. Acoust. Soc. Am. 144, 740–754 (2018).

Tsang-Hin-Sun, E., Royer, J.-Y. & Leroy, E. C. Low-frequency sound level in the Southern Indian Ocean. J. Acoust. Soc. Am. 138, 3439–3446 (2015).

Samaran, F., Adam, O. & Guinet, C. Detection range modeling of blue whale calls in Southwestern Indian Ocean. Appl. Acoust. 71, 1099–1106 (2010).

Marques, T. A. et al. Estimating animal population density using passive acoustics. Biol. Rev. 88, 287–309 (2013).

Baumgartner, M. F. & Mussoline, S. E. A generalized baleen whale call detection and classification system. J. Acoust. Soc. Am. 129, 2889–2902 (2011).

Mellinger, D. & Bradbury, J. Acoustic measurement of marine mammal sounds in noisy environments. in Proc. Second International Conference on Underwater Acoustic Measurements Technologies and Results 25–29 (2007).

Urazghildiiev, I. R. & Clark, C. W. Acoustic detection of North Atlantic right whale contact calls using the generalized likelihood ratio test. J. Acoust. Soc. Am. 120, 1956–1963 (2006).

Dugan, P. et al. Using high performance computing to explore large complex bioacoustic soundscapes: case study for right whale acoustics. Procedia Comput. Sci. 20, 156–162 (2013).

Shiu, Y. et al. Use of deep neural networks for automated detection of marine mammal species. 1–29 (2020). https://doi.org/10.1038/s41598-020-57549-y.

Miller, B. S. et al. An annotated library of underwater acoustic recordings for testing and training automated algorithms for detecting Antarctic blue and fin whale sounds. Dataset hosted by the Australian Antarctic Data Centre http://data.aad.gov.au/metadata/records/AcousticTrends_BlueFinLibrary (2020). https://doi.org/10.26179/5e6056035c01b.

Acknowledgements

The annotated library was made possible with funding from: IWC-SORP Grant “An annotated library of underwater acoustic recordings for testing and training automated algorithms for detecting Southern Ocean baleen whales”; IWC-SORP Grant “IWC-SORP Project 5—Acoustic trends in abundance, distribution, and seasonal presence of Antarctic blue whales and fin whales in the Southern Ocean: 5-year strategic meeting”; Australian Antarctic Science Projects 4101, and 4102—Antarctic baleen whale habitat utilisation and linkages to environmental characteristics, and Population abundance, trend, structure and distribution of the endangered Antarctic blue whale; Research grants from the Korean Ministry of Oceans and Fisheries (KIMST20190361; PM20020; PE20230). This report is NOAA/PMEL contribution number 5005. South African National Antarctic Programme Grant/Award Number: SNA 2011112500003.

Author information

Contributions

B.S.M. and the IWC-SORP/SOOS Acoustic Trends Group conceived and planned the annotated library. D.H. conceived the sub-sampling scheme for acoustic data. I.V.O., B.S.M., F.W.S., K.F., R.P.D., W.S.L., and J.K.H. contributed acoustic data for annotation. N.B., S.N., E.C.L., M.A., and B.S.M. manually annotated subsamples of data. B.S.M. prepared figures and tables summarising the contents of the annotated library, and conceived and conducted the performance characterisation of automated detectors. All authors provided edits and comments on the draft manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Miller, B.S., The IWC-SORP/SOOS Acoustic Trends Working Group, Balcazar, N. et al. An open access dataset for developing automated detectors of Antarctic baleen whale sounds and performance evaluation of two commonly used detectors. Sci Rep 11, 806 (2021). https://doi.org/10.1038/s41598-020-78995-8

DOI: https://doi.org/10.1038/s41598-020-78995-8
