Abstract

Prediction of material behavior using machine learning (ML) requires consistent, accurate, and, representative large data for training. However, such consistent and reliable experimental datasets are not always available for materials. To address this challenge, we synergistically integrate ML with high-throughput reactive molecular dynamics (MD) simulations to elucidate the constitutive relationship of calcium–silicate–hydrate (C–S–H) gel—the primary binding phase in concrete formed via the hydration of ordinary portland cement. Specifically, a highly consistent dataset on the nine elastic constants of more than 300 compositions of C–S–H gel is developed using high-throughput reactive simulations. From a comparative analysis of various ML algorithms including neural networks (NN) and Gaussian process (GP), we observe that NN provides excellent predictions. To interpret the predicted results from NN, we employ SHapley Additive exPlanations (SHAP), which reveals that the influence of silicate network on all the elastic constants of C–S–H is significantly higher than that of water and CaO content. Additionally, the water content is found to have a more prominent influence on the shear components than the normal components along the direction of the interlayer spaces within C–S–H. This result suggests that the in-plane elastic response is controlled by water molecules whereas the transverse response is mainly governed by the silicate network. Overall, by seamlessly integrating MD simulations with ML, this paper can be used as a starting point toward accelerated optimization of C–S–H nanostructures to design efficient cementitious binders with targeted properties.

Similar content being viewed by others

Introduction

In recent years, the quest for new and emerging high-performance materials has been increasing rapidly in the fields of infrastructure, aviation, energy, and communications. To address this challenge, machine learning (ML)-based approaches have emerged as promising avenues to accelerate the development of innovative materials design strategies1,2,3. Fundamental evaluation of composition-property relationships in highly heterogeneous systems is a key feature of such materials design strategies. ML, when judiciously used, can learn various complex composition-property relationships that would otherwise remain undetected using traditional approaches4,5. However, the application of such ML-based approaches is still limited, especially in the field of infrastructure materials6,7. It is critical to find bold and forward-thinking solutions in infrastructure that adopt modern methodologies for materials design and discovery so as to accelerate the development of next-generation of durable, high-performance materials.

Ordinary Portland cement (OPC) concrete is the most widely used construction material. Despite vast research on composition-property relationships over the last 3 decades8,9,10,11, the influence of the heterogeneous hierarchical structure of the material on the engineering performance still remains an active area of research8,9. Specifically, previous studies have highlighted that the mechanical performance and, durability of cementitious materials can be improved by optimizing the properties of calcium–silicate–hydrate (C–S–H) gel—the glue of concrete formed via hydration of cement11,12. C–S–H exhibits a poorly crystalline structure as observed from scattering experiments13,14. While fundamental composition–property relationships for C–S–H are crucial towards the design of high-performance and high-durability concrete via “bottom-up” approach15, complex hierarchical characteristics of C–S–H makes it exceedingly difficult to probe such relationships of C–S–H experimentally14,16.

To this extent, ML approaches are a promising solution to predict composition–property relationships toward the design of cementitious materials. However, evaluation and prediction of such relationships for C–S–H gel using ML present various well-known challenges. First, ML algorithms critically rely on the existence of accessible, consistent, accurate, and, representative datasets to provide enough information for training the models. Such large experimental data for C–S–H are limited or clustered to a few feasible regions. Second, ML, being a data-driven method, doesn’t provide insights into the fundamental laws of physics and, therefore, can potentially result in non-physical solutions4,5. Specifically, the black box ML methods such as NN, despite having high predictability, have little or no interpretability. To overcome these challenges, in this paper, we adopt a systematic and pragmatic approach where high-throughput molecular dynamics (MD) simulation is synergistically integrated with various advanced ML techniques especially Gaussian process (GP) and neural network (NN) to evaluate composition-dependent elastic moduli of C–S–H. Besides, various other ML techniques such as polynomial regression (PR), random forest (RF), support vector machine (SVM), k-nearest neighbors (k-NN), and decision trees (DT) are also evaluated for a comparative overview. Further, the interpretability of the black box models are explored using shapley additive explanations (SHAP)17,18 to gain insights into the fundamental factors governing the elastic response of C–S–H.

Precisely, a composition-dependent elastic constant database for C–S–H is developed using high-throughput MD simulation. MD simulations have been exhaustively used to investigate the structure of C–S–H19,20,21,22, exploring information that are not feasible in traditional experiments, despite recent advances in characterization. Such, MD-simulation-based database generation follows fundamental laws of physics and thus, helps to avoid non-physical solutions. However, the accuracy of MD simulations depends on the choice of interatomic potential. Here, reactive forcefield (ReaxFF)23 has been adopted which has been shown to yield a good correlation between the simulated and experimental responses of C–S–H21,24. While a large dataset is generated using physics-based MD simulations, supervised ML techniques are leveraged which explore the information by learning a pattern from the data generated by MD simulations. As discussed earlier, the application of ML techniques on cementitious materials is limited. A few studies6,7,25 have applied various ML techniques on experimental compressive strength data for concrete at the macro-scale. While these studies addressed the macro-scale relationship of a single target (such as compressive strength) from multiple inputs such as change of mixture proportions or starting materials for concrete, this paper evaluates multiple elastic constants (C11, C22, C33, C44, C55, C66, C12, C13, and C23 ) of the primary binding phase (C–S–H) with varying fraction of CaO, SiO2, and nanoconfined water.

To tackle such a multi-target problem, this paper employs both multiple single target (ST) approach (for PR, RF, SVM, k-NN, DT, and GP) and multi-target regression approach (for NN). While multiple single target (ST) regression splits the problem into multiple single-output regression problems where the outputs are assumed to be independent of each other, multi-target regression incorporates the statistical correlation among the outputs besides using the original input features. As such, multi-target regression is likely to offer superior response predictions for C–S–H due to its multivariate nature and the compound dependencies between the multiple feature and/or target variables26,27 which is explored in detail in this paper. Though NN can provide high accuracy of prediction, interpretation of results with NN alone challenging and it may not offer any new physical insights4,28. Along those lines, this study adopts a recent method called SHAP17,18 to address this challenging issue of interpretation of results from NN model. Overall, this paper, aimed at predicting composition-dependent multiple elastic constants for C–S–H, is expected to provide a valuable composition-property link for C–S–H which can help clarify efficient pathways to optimize the nanoscale C–S–H structures to enhance mechanical performance and, durability of cementitious materials.

Results

MD simulations to generate large dataset

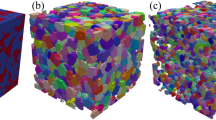

A total of 319 different C–S–H compositions are generated via MD simulations by varying the CaO, SiO2, and water content. C–S–H has been reported extensively in the literature16,19,20 to exhibit a layered structure. It consists of interlayer domains in between calcium silicate networks that contain water molecules. While Fig. 1a shows a representative atomistic structure of C–S–H with a Ca/Si ratio of 1.09, Fig. 1b plots the variations of water content as a function of Ca/Si molar ratio. The model construction process and relevant details are provided in the methods section. Figure 1b clearly shows a significant increase in water content with an increasing Ca/Si ratio. Such trend can be attributed to the increase in the degree of depolymerization and increase in interlayer spacing with increasing CaO content in C–S–H. A similar observation has also been reported in the literature20.

(a) Representative C–S–H structure for Ca/Si = 1.09 showing the Calcium silicate network and the interlayer spaces, and (b) water content (H2O/SiO2 molar ratio) as a function of Ca/Si molar ratio for representative C–S–H structures with saturation water content, (c) The bulk modulus, and (d) density as a function of Ca/Si molar ratio for representative C–S–H structures with saturation water content.

Figure 1c and d shows the computed bulk modulus and density respectively for C–S–H plotted with varying Ca/Si ratio. The values obtained from MD simulations in this present study are compared with experimental results available in the literature11,22,23,29,30,31,32,33,34,35. It is observed that the computed bulk modulus values are in good agreement with the experimental values which provides confidence in the reliability of the constructed C–S–H structures. In Fig. 1d, it is observed that the experimental density values, obtained from literature, are scattered within a large range which can be attributed to the process by which the hydrated samples were dried under various environmental conditions36. The densities obtained from MD simulations in the present work lie within the experimentally observed range. The general trend in Fig. 1c and d suggest that both bulk modulus and density decrease with an increase in the Ca/Si ratio. The influence of the composition of C–S–H on the elastic constants, as obtained from MD simulations, is detailed hereafter in the remainder of this section.

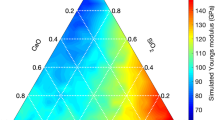

Figure 2 shows the ternary plot of elastic constants with respect to the CaO, SiO2, and H2O present in different C–S–H compositions. The general trend from the figures suggests that for the same concentration of water, the modulus decreases with an increase in CaO concentration. This is due to the fact that as the content of CaO concentration increases, the structure becomes more disordered, and depolymerization of the network structure increases (as shown in Fig. 3) resulting in a decrease in the elastic modulus. On the other hand, an increase in H2O concentration for constant molar fraction of CaO results in a decrease in the modulus value. However, with an increase in H2O concentration and the same molar fraction of SiO2 the elastic modulus increase. It can be observed that the variation of moduli with composition is non-systematic and coupled effects exist. For example, C33 value initially increases with an increase in SiO2 content up to a 0.4 molar fraction beyond which the value decreases with a further increase in water concentration. Similarly, from Fig. 2, it is evident that C11 and C22 are greater than C33. This is due to the presence of interlayer spacing in layered C–S–H structure where the load is applied perpendicular to the interlayer plane. Similarly, for the same reason, in the case of shear deformation C66 values are found to be higher than C44 and C55. Overall, the stiffness moduli exhibit a non-linear relationship with variations in composition, which prevents any assumption of a linear model to predict the stiffness moduli in the C–S–H system.

In general, the elastic modulus (or Young’s modulus) increases with increasing network connectivity37. To evaluate such a trend in this study, the connectivity in the structure is calculated by computing the degree of polymerization which is taken as the ratio of the number of BO (bridging oxygen) with respect to the number of tetrahedral networks (T). The ternary plot of the degree of polymerization with the composition of C–S–H is shown in Fig. 3. A higher degree of polymerization is observed when the concentration of SiO2 increases which is expected since Si serves as a network former in C–S–H. Conversely, a lower degree of polymerization is observed when water concentration is increased and SiO2 molar fraction is decreased. However, the trend reverses when the water content increases and CaO content decreases. This infers the existence of coupled effect in composition-structure properties in C–S–H, which is also observed for elastic moduli. Maximum network connectivity is observed when the SiO2 molar fraction is greater than 0.4 and the H2O molar fraction is below 0.1. But maximum elastic moduli are observed in the range between 0.2 and 0.4 molar fraction for both SiO2 and H2O. This indicates that the network connectivity alone is not sufficient enough to predict the elastic constants which makes it challenging to develop a robust physics-based predictive model.

Prediction of elastic constants using ML

While the MD simulations are leveraged to obtain a database of elastic constants for C–S–H as explained in the previous section, the forthcoming sub-sections use that database and implement various ML approaches to build prediction tools for elastic constants for C–S–H as explained hereafter.

Figure 4 shows the comparison of the elastic constant C33 predicted by PR, DT, RF, SVM (with RBF kernel), kNN, GPR (with both Matern and RBF kernels), and NN. From Fig. 4, it is clearly seen that GPR and NN perform the best among all the other models. Among GPR models, the RBF kernel shows better prediction than the Matern kernel. Henceforth, the forthcoming sections focus on GPR with RBF kernel and NN for a detailed evaluation of the constitutive relationships of C–S–H. The results and adopted methods for all other models are sufficiently detailed in the Supplementary document.

Comparison of the elastic constant C33 predicted by (a) PR, (b) DT, (c) RF, (d) SVM (with RBF kernel), (e) k-NN, (f) GPR (with Matern kernel), (g) GPR (with RBF kernel) and (h) NN with measured values computed by MD simulation. The error bars shown for each value represent the standard deviation around the mean values.

Prediction of elastic constants using Gaussian process (GP)

In this section, predictions based on GP regression (see “Methods” section) are the focus. Two kernels i.e., radial basis function (RBF) and Matern kernels which are commonly adopted in the literature and also have been shown to produce accurate results38 are implemented here.

Figure 5 shows the predicted elastic constants using GPR with rbf kernel against the measured values computed by MD simulation.

Here, the GPR model is trained using a train set by employing the rbf kernel along with white noise, and the model is updated till the hyperparameters converged to a global optimum. It is observed that the GPR model could predict for most of the elastic constants with a higher degree of accuracy except for C44, C13, and C23 for which the R2 values were relatively lower. The predicted results for the Matern kernel are provided in the Supplementary document. A comparison between the predictions from both kernels (rbf and Matern) reveals that the results are independent of the choice of kernel.

In a later section, the accuracy of the GP models is compared against NN and other traditional models such as polynomial regression, decision trees, and support vector machine. The advantage of GP regression is its ability to provide the uncertainty underlying in the model. The error bars shown for each value represent the standard deviation around the mean values. Thus, GP regression provides confidence in the predictions, which are lacking in other models. Furthermore, the standard deviation of the training sets represents the level of noise present in the data subjected to the training set. On the contrary, the standard deviation in the test sets corresponds to the uncertainty in the model prediction given the distribution of the training data.

Prediction of elastic constants using neural network (NN)

In this section, the model prediction using NN is assessed. The hyperparameters such as number of hidden layers, number of hidden nodes, optimizer, batch size, number of epochs have been optimized prior to prediction (please refer to Supplementary document). In this NN design, two hidden layers of 9 hidden nodes were used to prevent overfitting of data. With the implementation of NN, MSE for almost all the elastic constants dropped significantly as compared to those observed in the case of other ML techniques. Figure 6 exhibits the predicted responses (using NN with 2 hidden layers and 9 neurons) against the measured values computed by MD simulation. Overall, the prediction accuracy has improved significantly as compared to all other studied models. This is because the neural network implicitly considers all the outputs as dependent, which are overlooked in other models.

Discussion

Database adequacy

For adequacy, the database should be (1) balanced, (2) representative, (3) complete, and (4) consistent39. In this current study, the dataset is generated by varying the composition of CaO, SiO2, and H2O in a uniform fashion. This is to ensure that the data points from all possible regions in the ternary diagram are equally represented. To obtain a representative dataset, the data are split into training (80%) and test set (20%). The hyperparameters are tuned by implementing fivefold cross-validation. At every fold in cross-validation, the training data is further divided into 80% of the training set and 20% for validation. The errors (training error and validation error) calculated from each fold are averaged to represent the average model error. The performance of the model is then evaluated on the unseen test dataset. In this study, a good correlation between the test values and the predicted values is obtained as can be observed from the results presented in Table 2 and Fig. 5. Thus, the dataset can be considered representative. Besides, completeness of the dataset is ensured here by choosing all the possible ranges of the Ca/Si ratio for C–S–H observed experimentally. Moreover, the consistency of the dataset is carefully maintained by following the same C–S–H model construction, molecular loading conditions, and elastic constant evaluation procedure within the high throughput MD simulations for all the C–S–H compositions. Thus, the overall adequacy of the dataset is ensured by careful implementation of all the four above-mentioned criteria during the dataset generation and model training/testing procedure.

Discussion on comparative performance of different ML techniques

For a direct comparison of different ML techniques used in this study, MSE and R2 values (for both train and test set) obtained for different elastic constants are shown in Tables 1 and 2 respectively. The results for PR, RF, DT, kNN, and SVM are detailed in the Supplementary document for ease of reference. While the level of accuracy for the training data infers the interpolation ability of the known data, the level of accuracy for the test data evaluates the prediction ability of the model for unknown data. From all the results using different ML techniques, no direct correlation between MSE and R2 was observed. As it is observed that MSE of C55 for RF is comparatively low (5.17 GPa2 with test set, 1.527 GPa2 with train set), but has an R2 value of 0.78 was obtained. This signifies that model selection should not solely be based on the high R2 value but also should be associated with low MSE value. It is worth mentioning that all these models explained herein except NN consider the outputs to be independent which is likely to impart a significant difference in prediction accuracy for NN as compared to other adopted techniques. Nevertheless, models like GP could still provide good prediction when compared with NN.

From Tables 1 and 2, it is observed that the RF algorithm yield the least MSE and highest R2 value for the train set. However, RF suffered from low-level prediction accuracy. A similar observation is also reported for silicate glass in the literature39. Results in Tables 1 and 2 also suggest that RF offers better predictability than DT. This is because RF trains a large number of trees individually and its prediction accuracy depends on the decision trees ensemble. On the other hand, the DT algorithm depends on a nodal binary split. Also, in the DT algorithm, based on the selected features and values, the observations are placed to the left node or the daughter node. In the case of the RF algorithm, the output for all the trees is averaged which incorporates non-linearity especially when enough number of trees are used. This is the reason why RF could offer excellent interpolation for the training set but fair prediction of the test set.

It is ideally required for any model to minimize complexity while maintaining high interpretability. However, in general, models that provide higher prediction accuracy often suffer from higher computational complexity and limited or no interpretability. In this study, PR has high interpretability and it is associated with low complexity. Overall, although PR offered good accuracy with lower MSE and fair R2 for a train set, however, it falls short when predicting responses using the test set. Besides, the predictability for individual outputs are comparatively low compared to GP and NN. Nevertheless, PR provides us information that the composition–property is not linearly correlated, which is crucial to develop a predictive model.

Lastly, though the model complexity is high for NN as it is associated with two hidden layers and each layer has 9 hidden nodes. Overall, NN by far performed the best in terms of the accuracy for both train and test set for individual outputs. This shows the superiority of the NN for multiple outputs when enough data is trained. One of the drawbacks of NN is that it requires huge computation resources and takes a larger amount of time to train the model.

Discussion on model interpretation for NN

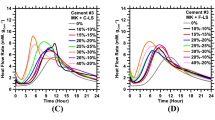

This discussion section demonstrates the interpretability of the NN predictions by using SHAP17. In SHAP, the impact of each feature on the prediction is obtained by assigning each feature an importance value for a respective prediction. The results are shown in Fig. 7.

The general trend in Fig. 6a–d suggests that all the elastic constants are primarily controlled by SiO2 content followed by water and CaO content. While the normal stiffness along the interlayer direction (C11) shows a relatively lower SHAP value for water, the value increases when the normal stiffness perpendicular to the interlayer direction (C33) is considered. Besides, the shear components (C44, C55 (please refer to Supplementary Fig. 14), and C66 ) show increased contribution from water. This could be attributed to the layered nature of CSH where the in-plane movements are primarily controlled by the water molecules, while the normal stiffness along the direction of the interlayers is mainly controlled by the silicate network (as observed in C11 case). Similarly, for other elastic constants such as C22, C55, C12, C13, and C23 (see Supplementary Fig. 14) SiO2 content primarily dominates followed by water content and CaO content.

Outlook

This paper establishes that the nature of the input–output relationship of a complex material such as C–S–H can be effectively predicted and interpreted using ML. Due to the limitation of the experimental data available in the literature, especially for different C–S–H compositions, this study uses physics-based MD simulations to generate the elastic constant dataset for different C–S–H compositions. Note that only the compositional ranges of C–S–H that is observed experimentally is used. The molecular structure for each composition is simulated by implementing ReaxFF. Further, instead of a single effective mechanical property such as Young’s modulus or hardness, this study evaluates different individual components of the stiffness moduli, in particular, nine stiffness components. Using the dataset generated from MD simulations, the elastic constants for C–S–H are predicted by implementing two ML techniques: Gaussian Process (GP) and neural network (NN). By judicious selection of optimal level of complexity, and accuracy reliable predictions of the properties can be obtained while ensuring there is no under- or overfitting. A comparative evaluation between the ML techniques reveals that GP and NN show significantly improved predictions as compared to other adopted techniques and NN is found to offer the highest level of accuracy with considerably lower MSE and good R2 values.

Furthermore, to interpret the influence of CaO, SiO2, and water on various stiffness components of C–S–H, obtained using the NN-based model, SHAP is leveraged which evaluates the importance of each model features on the model’s output after considering all the possible combinations. From evaluations using SHAP, the following conclusions are drawn: (1) all the stiffness components of C–S–H are dominantly influenced by SiO2 content followed by water and CaO content; (2) the influence of water content is more prominent for shear components. These results suggest that the in-plane movements are primarily controlled by the water molecules, while the normal stiffness along the direction of the interlayers is mainly controlled by the silicate network. Overall, by synergistically integrating high-throughput MD simulations with ML approaches, this paper shows the efficacy of using ML-based approaches to predict the mechanical behavior of C–S–H and this study can be adopted as a starting point towards developing integrated experiment-multiscale simulation-ML-based design strategies for exceptional materials performance.

Methods

High-throughput MD simulations

In this study, high-throughput MD simulations are performed to obtain an adequate dataset of elastic constants for different compositions of C–S–H. The C–S–H model construction procedure for varying Ca/Si ratios, molecular loading conditions, and evaluation of elastic constants for all the C–S–H compositions within the high-throughput MD simulations are presented in the forthcoming sub-sections.

C–S–H model construction

Here, the realistic molar percentages of SiO2, CaO, and H2O are adopted as 11–38%, 23–55%, and 7–66% (molar %) respectively. These ranges are chosen based on viable ranges (Ca/Si molar ratio) of constituents reported in the literature16,19,20,29 to form C–S–H. The CSH models are constructed by introducing defects in a layered 11 Å tobermorite40 structure. The 11 Å tobermorite configuration contains pseudo-octahedral calcium oxide sheets surrounded by silicate tetrahedral chains, which consists of bridging oxygen (BO) atoms and \({Q}^{2}\) silicon atoms (i.e., Si atom connected to two bridging and two non-bridging terminal oxygen atoms)41. Such configuration involves negatively charged calcium-silicate sheets which are separated from each other by interlayer spacings. The interlayer spacing is filled with interlayer water molecules and charged-balancing calcium cations. It is to be noted that the initial configuration of 11 Å tobermorite consists of a Ca/Si ratio equal to 1, this ratio is increased to the range of 1.09–2.06 as constructed in the present models by randomly removing charge-neutral SiO2 groups. This removal of SiO2 introduces defects in the silicate chains and provides possible sites for adsorption of extra water molecules. To this end the adsorption of water molecules in the structurally defected tobermorite model is performed by implementing the Grand Canonical Monte Carlo (GCMC)42 method, ensuring equilibrium with bulk water at constant volume, zero chemical potential, and room temperature. A similar model development procedure for C–S–H has been successfully implemented in the literature19,21,22,23,30 and the procedure is adequately detailed in several published articles22,30. ReaxFF is used here in the MD simulations which has been successfully implemented to evaluate the behavior of C–S–H21,24,30 and other similar materials43,44,45. These studies have successfully leveraged the features of ReaxFF to evaluate the dynamics of nano-confined water in C–S–H19, fracture toughness24, structural properties of C–S–H21,30, and radiation damage in C–S–H46. Besides, ReaxFF potential has been shown to model C–S–H47 reliably in terms of the structural and elastic properties as it is based on the bond-formation/breakages, which is useful for reactive mechanisms such as dissociation of nano-confined water in C–S–H. The generated structure is further relaxed at 300 K and zero pressure for 500 ps in the NVT and NPT ensemble with a timestep of 0.25 fs before computing the stiffness components. The molar range of Ca/Si ratio is maintained consistent with those from the literature16,29,48. To obtain different water content, water molecules are randomly removed from the saturated structure and equilibrated for 500 ps in NVT and NPT, respectively. All the simulations are performed in an open source code LAMMPS package49. The methodology for model construction for C–S–H is adequately detailed in the literature19,22,30.

Molecular loading conditions

Once the structures are adequately equilibrated, they are subjected to three axial and three shear deformations along the X, Y, and Z axes. To apply axial tensile load, the C–S–H structures are subjected to uniform tensile strain in the X-direction, and the process is continued for Y and Z-directions. Similarly, to simulate the shear loading in the C–S–H structures, a shear strain is applied along X, Y, and Z-directions, respectively.

Evaluation of elastic constants

During the deformations the elastic constants \(C_{ij}\) matrix is obtained as39:

where \(U\) is the potential energy, \(V\) is the volume of the structure, \(\epsilon\) is the strain, i and j are the indexes representing each Cartesian direction. In this study, 9 components of stiffness moduli are considered for prediction (\(C_{11} , C_{22} , C_{33} , C_{44} , C_{55} , C_{66} , C_{12} , C_{13}\) and \(C_{23}\)). The same has been adopted when calculating the elastic properties such as Young’s modulus, shear modulus, and bulk modulus from the stiffness matrix in glass structure using MD simulation50,51.

All the simulations are conducted using the Large-scale Atomic/Molecular Massively Parallel Simulator (LAMMPS) package49. Each C–S–H structure comprises at least 3000 atoms. ReaxFF is used as an interatomic potential. The process is repeated till all the elastic constants for different Ca/Si ratio with different water has been generated”.

Machine learning (ML) techniques

The database of the stiffness matrix is computed from the MD simulations to predict composition-dependent elastic constants for C–S–H using various ML techniques. This paper primarily focuses on Gaussian process (GP), and neural network (NN) which are discussed in the forthcoming sub-sections. Besides, this paper also evaluates other common ML techniques such as polynomial regression, random forest, support vector machine, k-nearest neighbors, and decision trees for comparative assessment of prediction abilities. These common ML techniques are detailed in the Supplementary document for ease of reference.

Gaussian processes regression

A Gaussian process is defined as a collection of random variables among which a finite subset has a joint Gaussian distribution52. One can implement it to describe a distribution over a given set of input(x) and output datasets (y). For a linear regression model with noise \(\epsilon\),

where the noise is assumed to follow an independent, identically distributed Gaussian distribution with zero mean and variance (\(\sigma_{\epsilon }^{2}\)). Without losing generality, a Gaussian process can be completely described by its mean function and covariance function,

where \({\mathcal{G}\mathcal{P}}\left( \cdot \right)\) is the specified Gaussian process, \(m\left( x \right)\) is the mean function which computed the expected values of output for a given input, and \(k\left( {x,x^{\prime } } \right)\) is the covariance function, a Gaussian prior function that captures the extent of correlation between function outputs for the given sets of inputs. The covariance function is expressed as:

Instead of using a specified functional form (as in the case of deterministic model), Gaussian processes describe the input–output relationship through distributions over functions of the input space, \(x \in {\mathcal{X}}\). The designated random variables follow Gaussian distribution. For Gaussian distribution, the marginalization and conditioning properties can be fully utilized to obtain the marginal likelihood and the conditional probability via the designated mean and covariance. For the mean-subtracted data set, the mean function is set to zero and the prior’s covariance is specified by assigning trial kernel functions with a set of hyperparameters. The widely used kernel functions are exponential kernel and squared exponential kernel. To obtain the posterior distribution over functions, one must restrict the joint prior to containing only those functions which agree with the training data through conditioning of the Gaussian prior. The joint distribution of the training outputs, y, and the test outputs \(f^{\prime}\) according to the prior is expressed as52:

If there are \(n\) training points and \(n^{\prime }\) testing points then \(K\left( {X,X} \right)\) is a \(n \times n\) matrix of the covariance between all observed training points, \(K\left( {X,X^{\prime } } \right)\) is the \(n \times n^{\prime }\) covariance matrix between the training and testing pairs and likewise for \(K\left( {X^{\prime } ,X} \right)\) and \(K\left( {X^{\prime } ,X^{\prime } } \right)\). Applying principles of conditionals, the marginal likelihood of the output can be assumed to follow a gaussian distribution with the predicted mean \(m\left( {f^{\prime } } \right)\) and covariance function \( k\left( {f^{\prime } } \right)\) as52:

The marginal likelihood of the output given the input can be obtained through the marginalization and the model is selected by updating the hyperparameters during training through the maximization of the marginal-likelihood (or log-marginal-likelihood). The set of hyperparameters should ideally converge to a global optimum.

Neural network (NN)

Neural network is a mathematical model which maps a given set of predictors, \(x\), to a set of desired response, \(y\). The early proposition of this idea is linked to the assumption of how the information is stored and processed in the brain53. The map between the predictor and the response is comprised of multiple layers of perceptron and activation functions and it is called the feed-forward neural network. The estimated response can be expressed as follows,

where \({\varvec{f}}_{{\varvec{N}}} \left( \cdot \right):{\mathbb{R}} \to {\mathbb{R}}\) is a continuous bounded function which is usually referred to as the activation function, \({\varvec{A}}_{{\varvec{i}}} :{\mathbb{R}}^{{{\varvec{d}}_{{\varvec{i}}} }} \to {\mathbb{R}}^{{{\varvec{d}}_{{{\varvec{i}} + 1}} }}\) is the transformation matrix that contains weights between two layers of perceptron54. The neural network received very much attention in academia and applications in engineering due to the proven universal approximation property which states that the feed-forward neural network architectures with a sigmoid activation function are capable of approximating any set of functions between two Euclidean spaces for the canonical topology55.

The weights can be solved by formulating the above mapping into a constrained optimization problem as stated below,

where λ is the regularization intensity constant and \(g\left( \cdot \right)\) is a functional form of the weights to be regularized. This optimization problem is usually solved by stochastic gradient descent or backward propagation algorithm. Since the non-convex nature of the neural network, the solution to this optimization problem is not unique. Moreover, the selection of the number of layers and the number of perceptron in each layer affects the result of the regression, and it is subjected to high variance problems when large numbers of neurons and layers are used. As such proper regularization is needed when the neural network is implemented. In this study, while training a neural network model, a rectified linear unit (ReLU) is implemented for performance-enhancement. Here, the data is trained using a feedforward multilayer perceptron where the weights are trained by the back propagation algorithm. Henceforth, the feedforward backpropagation multilayer perceptrons will be referred to as a neural network (NN), which is commonly used in the literature.

Model tuning and cross-validation

To avoid the possibility of overfitting the data, 20% of the data is set aside from the models for its intended use as a “test set” to assess the performance of the ML algorithms on these unseen data. To this end, a k-fold cross-validation (CV) technique is adopted in this study. In the CV technique, the dataset is split into k number of smaller sets, where in each fold the model is trained on a fraction of data (train set) and tested on the remaining data. The final value obtained is the average value which is iteratively run on each of the k-folds. To this end, this study adopts a nested two-level CV approach as detailed in the article by Cawley and Talbot56. First, the dataset is split into the training set (which is 80% of the data) and test set (20% of the data). In outer CV the model is run for the number of iterations and the average value of the scores (i.e. \(R^{2}\) and MSE) obtained from each fold is used to obtain a comparative performance-evaluation of various ML techniques. In order to obtain the appropriate hyperparameters, a fivefold inner CV is implemented for the training dataset. This nested CV technique alleviates some of the issues regarding the limitations of relatively smaller datasets.

It is challenging in ML to obtain a model that is accurate and simple at the same time. Simplistic models show a lower degree of prediction accuracy or are under fitted, whereas overly complex models often performed worst on the test data or unknown sets of data. Such models can capture perfect trends on the training dataset but show poor transferability to unknown sets of data and suffer from overfitting. Hence, models need to be optimized by tuning the hyperparameters so that an ideal trade-off between accuracy and computational demand is reached.

Model evaluation metrics: mean square error (MSE)

The mean square error measures the average Euclidean distance between the predicted and true or measured values and is expressed as:

where \(y_{t} \left( i \right)\) is the ith true output and \(y_{p} \left( i \right)\) is the ith predicted output. MSE serves as an indicator of prediction accuracy and MSE needs to be minimized in order to maximize the accuracy of ML algorithms.

Model evaluation metrics: Linear coefficient of determination, R2

In this regression problem, the MSE is majorly selected for the quantification of the model performance on the given data set. The coefficient of determination can be used to quantify the proportion of the variance in the dependent variable that is predictable from the independent variable. In this study, to further assist the model selection in this multiple-input multiple-output (MIMO) regression problem, the Pearson correlation coefficient57 is used to indicate the accuracy of the predicted results.

In the case of the sampled data, the Pearson correlation coefficient can be determined as follows:

Here \(y_{t} \left( i \right)\) is the ith true output and \(y_{p} \left( i \right) \) is the ith predicted output.

In this study, both MSE and R2 of the train and test data are used to evaluate the performance of ML algorithms.

Training process and model refinement

This section describes the training and model fitting (overfitting, underfitting, or balanced) for all the adopted methods. The total data is initially split into a training set and test set by 80:20 proportion. While the test dataset is kept unseen during the model training process, the training set is further split into 80% for training and 20% for validation. Here, to validate the model, a fivefold cross-validation is implemented. The optimum complexity is achieved when the minimum error for both the training error and validation error is achieved.

For PR, the complexity is increased with respect to polynomial order from 1 to 6, and an optimum polynomial order of 3 is obtained (please refer to Supplementary Fig. 1). For SVM with RBF kernel, two parameters are considered, which are C and gamma (\(\gamma\)). The model complexity is varied by varying C from 0.001 to 1000 and gamma from 0.1 to 10. The parameters are optimized using a grid search approach so as to minimize the error. Thus, optimum values of 100 and 0.46 are obtained for C and \(\gamma\) respectively (please refer to Supplementary Fig. 4). In the k-nearest neighbor method, the k-value is varied from 1 to 9 and an optimum value of 4 is achieved (please refer to Supplementary Fig. 6). In the decision tree algorithm, the model complexity is characterized by the maximum tree depth which is varied from 2–10. By evaluating the MSE and the R2 values, an optimum value of 5 for the maximum tree depth is chosen (please refer to Supplementary Fig. 8 for more details). For the random forest algorithm, the model complexity is varied by varying the number of trees from 2 to 10 from which an optimal number of 9 for the number of trees is selected which shows the least error for the validation dataset (please refer to Supplementary Fig. 10 for more details). For the Gaussian process, two covariance functions (RBF and Matern) with noise are implemented and the parameters are converged when the log marginal likelihood is maximized. Lastly, for NN the hyperparameters include the number of hidden nodes, size of hidden layers, optimizer function, learning rate, epoch, and batch size. In this study, the Adam optimizer is implemented. The learning is optimized for learning rate equal to 10–3, epoch = 400, batch size of 32, and two hidden layers with a number of hidden nodes (or neurons) equal to 9 (please refer to sSpplementary Fig. 13 for more details). Overall, a rigorous hyperparametric optimization methodology employing a grid search was used for model refinement, thereby, ensuring the optimality of the model without underfitting or overfitting. To evaluate the performance of each model, the models are tested using the unseen test dataset. The performance of various methods is evaluated by comparing the MSE and R2 values obtained from each model.

Model interpretability

The ability of the ML techniques such as NN to predict the target accurately by learning from data has been remarkable. However, because of the higher model complexity for algorithms such as NN, the model interpretability becomes challenging. Several studies have tried to address this issue by measuring a few specific features that are responsible for a model’s output58. Recently, Shapley Additive Explanations (SHAP) which is derived from Shapley values in game theory59 is employed to measure the importance of various features within the model17,18. SHAP has been used for various applications across a wide range of disciplines which includes identification of patient risk factors in tree-based medical diagnostic models60 and determination of various important features of satellite images which are crucial in generating poverty maps61. As per SHAP, the importance of feature \(j\) for the output of model \(f\), \(\phi^{j} \left( f \right)\), is a weighted sum of the feature’s contribution of the model’s output \(f\left( {x_{i} } \right)\) over all possible feature combinations62. \(\phi^{j} \left( f \right)\) is expressed as:

where \(x^{j}\) is feature \(j\), \(S\) is a subset of features, and \(p\) is the number of features in the model.

Data availability

The data that support the findings of this study are available from the corresponding authors upon reasonable request.

References

Curtarolo, S. et al. AFLOW: an automatic framework for high-throughput materials discovery. Comput. Mater. Sci. 58, 218–226 (2012).

Jain, A. et al. Commentary: the materials project: a materials genome approach to accelerating materials innovation. APL Mater. 1, 011002 (2013).

Ravinder, R. et al. Deep learning aided rational design of oxide glasses. Mater. Horiz. 7, 1819–1827 (2020).

Lookman, T., Alexander, F. J. & Rajan, K. Information Science for Materials Discovery and Design (Springer, Berlin, 2016). https://doi.org/10.1007/978-3-319-23871-5.

Ramprasad, R., Batra, R., Pilania, G., Mannodi-Kanakkithodi, A. & Kim, C. Machine learning in materials informatics: recent applications and prospects. NPJ Comput. Mater. 3, 1–13 (2017).

Yaseen, Z. M. et al. Predicting compressive strength of lightweight foamed concrete using extreme learning machine model. Adv. Eng. Softw. 115, 112–125 (2018).

Young, B. A., Hall, A., Pilon, L., Gupta, P. & Sant, G. Can the compressive strength of concrete be estimated from knowledge of the mixture proportions?: New insights from statistical analysis and machine learning methods. Cem. Concr. Res. 115, 379–388 (2019).

Biernacki, J. J. et al. Cements in the 21st century: challenges, perspectives, and opportunities. J. Am. Ceram. Soc. 100, 2746–2773 (2017).

Provis, J. L. Grand challenges in structural materials. Front. Mater. 2, 31 (2015).

Popovics, S. History of a mathematical model for strength development of portland cement concrete. MJ 95, 593–600 (1998).

Allen, A. J., Thomas, J. J. & Jennings, H. M. Composition and density of nanoscale calcium–silicate–hydrate in cement. Nat. Mater. 6, 311–316 (2007).

Mehta, P. & Monteiro, P. J. M. Concrete: Microstructure, Properties, and Materials (McGraw-Hill Education, New York, 2006).

Taylor, H. F. W. Cement Chemistry (Thomas Telford, London, 1997).

Soyer-Uzun, S., Chae, S. R., Benmore, C. J., Wenk, H.-R. & Monteiro, P. J. M. Compositional evolution of calcium silicate hydrate (C–S–H) structures by total X-ray scattering. J. Am. Ceram. Soc. 95, 793–798 (2012).

Ioannidou, K. et al. Mesoscale texture of cement hydrates. PNAS 113, 2029–2034 (2016).

Lothenbach, B. & Nonat, A. Calcium silicate hydrates: solid and liquid phase composition. Cem. Concr. Res. 78, 57–70 (2015).

Lundberg, S. M. & Lee, S.-I.A. Unified approach to interpreting model predictions. In Advances in Neural Information Processing Systems 30 (eds Guyon, I. et al.) 4765–4774 (Curran Associates Inc., Red Hook, 2017).

Cohen, S., Ruppin, E. & Dror, G. Feature selection based on the Shapley value. In Proceedings of the 19th international joint conference on Artificial intelligence. 665–670 (Morgan Kaufmann Publishers Inc., 2005).

Qomi, M. J. A., Bauchy, M., Ulm, F.-J. & Pellenq, R.J.-M. Anomalous composition-dependent dynamics of nanoconfined water in the interlayer of disordered calcium–silicates. J. Chem. Phys. 140, 054515 (2014).

Krishnan, N. M. A. et al. confined water in layered silicates: the origin of anomalous thermal expansion behavior in calcium–silicate–hydrates. ACS Appl. Mater. Interfaces 8, 35621–35627 (2016).

Bauchy, M., Qomi, M. J. A., Ulm, F.-J. & Pellenq, R.J.-M. Order and disorder in calcium–silicate–hydrate. J. Chem. Phys. 140, 214503 (2014).

Pellenq, R.J.-M. et al. A realistic molecular model of cement hydrates. Proc. Natl. Acad. Sci. 106, 16102–16107 (2009).

Manzano, H. et al. Confined water dissociation in microporous defective silicates: mechanism, dipole distribution, and impact on substrate properties. J. Am. Chem. Soc. 134, 2208–2215 (2012).

Bauchy, M. et al. Fracture toughness of calcium–silicate–hydrate from molecular dynamics simulations. J. Non-Crystall. Solids 419, 58–64 (2015).

Atici, U. Prediction of the strength of mineral admixture concrete using multivariable regression analysis and an artificial neural network. Expert Syst. Appl. 38, 9609–9618 (2011).

Kocev, D., Džeroski, S., White, M. D., Newell, G. R. & Griffioen, P. Using single- and multi-target regression trees and ensembles to model a compound index of vegetation condition. Ecol. Model. 220, 1159–1168 (2009).

Kužnar, D., Možina, M. & Bratko, I. Curve prediction with kernel regression. pp. 61–68 (2009).

Anoop Krishnan, N. M. et al. Predicting the dissolution kinetics of silicate glasses using machine learning. J. Non-Crystall. Solids 487, 37–45 (2018).

Geng, G., Myers, R. J., Qomi, M. J. A. & Monteiro, P. J. M. Densification of the interlayer spacing governs the nanomechanical properties of calcium–silicate–hydrate. Sci. Rep. 7, 10986 (2017).

Qomi, M. J. A. et al. Combinatorial molecular optimization of cement hydrates. Nat. Commun. 5, 4960 (2014).

Thomas, J. J., Jennings, H. M. & Allen, A. J. Relationships between composition and density of tobermorite, jennite, and nanoscale CaO−SiO2−H2O. J. Phys. Chem. C 114, 7594–7601 (2010).

Beaudoin, J. J., Gu, P. & Myers, R. E. The fracture of C–S–H and C–S–H/CH mixtures 11 communicated by M. Daimon. Cem. Concr. Res. 28, 341–347 (1998).

Muller, A. C. A., Scrivener, K. L., Gajewicz, A. M. & McDonald, P. J. Densification of C–S–H measured by 1H NMR relaxometry. J. Phys. Chem. C 117, 403–412 (2013).

Richardson, I. G. Model structures for C–(A)–S–H(I). Acta Crystall. B Struct. Sci. Cryst. Eng. Mater. 70, 903–923 (2014).

Pellenq, R.J.-M., Lequeux, N. & van Damme, H. Engineering the bonding scheme in C–S–H: the iono-covalent framework. Cem. Concr. Res. 38, 159–174 (2008).

Suda, Y., Saeki, T. & Saito, T. Relation between chemical composition and physical properties of C–S–H generated from cementitious materials. J. Adv. Concr. Technol. 13, 275–290 (2015).

Rouxel, T. Elastic properties and short-to medium-range order in glasses. J. Am. Ceram. Soc. 90, 3019–3039 (2007).

Bishnoi, S. et al. Predicting Young’s modulus of oxide glasses with sparse datasets using machine learning. J. Non-Crystall. Solids 524, 119643 (2019).

Yang, K. et al. Predicting the Young’s modulus of silicate glasses using high-throughput molecular dynamics simulations and machine learning. Sci. Rep. 9, 8739 (2019).

Hamid, S. A. The crystal structure of the 11 Ǻ natural tobermorite Ca2.25[Si3O7.5(OH)1.5]1H2O. Z. Kristall. New Cryst. Struct. 154, 189–198 (1981).

Qomi, M. J. A., Ulm, F.-J. & Pellenq, R.J.-M. Evidence on the dual nature of aluminum in the calcium–silicate–hydrates based on atomistic simulations. J. Am. Ceram. Soc. 95, 1128–1137 (2012).

Puibasset, J. & Pellenq, R.J.-M. Grand canonical Monte Carlo simulation study of water adsorption in silicalite at 300 K. J. Phys. Chem. B 112, 6390–6397 (2008).

Hahn, S. H. et al. Development of a ReaxFF reactive force field for NaSiOx/water systems and its application to sodium and proton self-diffusion. J. Phys. Chem. C 122, 19613–19624 (2018).

Lyngdoh, G. A., Kumar, R., Krishnan, N. M. A. & Das, S. Realistic atomic structure of fly ash-based geopolymer gels: insights from molecular dynamics simulations. J. Chem. Phys. 151, 064307 (2019).

Lyngdoh, G. A., Nayak, S., Kumar, R., Anoop Krishnan, N. M. & Das, S. Fracture toughness of sodium aluminosilicate hydrate (NASH) gels: Insights from molecular dynamics simulations. J. Appl. Phys. 127, 165107 (2020).

Krishnan, N. M. A., Wang, B., Sant, G., Phillips, J. C. & Bauchy, M. Revealing the effect of irradiation on cement hydrates: evidence of a topological self-organization. ACS Appl. Mater. Interfaces 9, 32377–32385 (2017).

Mishra, R. K. et al. Cemff: a force field database for cementitious materials including validations, applications and opportunities. Cem. Concr. Res. 102, 68–89 (2017).

Chen, J. J., Thomas, J. J., Taylor, H. F. W. & Jennings, H. M. Solubility and structure of calcium silicate hydrate. Cem. Concr. Res. 34, 1499–1519 (2004).

Plimpton, S. Fast parallel algorithms for short-range molecular dynamics. J. Comput. Phys. 117, 1–19 (1995).

Bauchy, M. Structural, vibrational, and elastic properties of a calcium aluminosilicate glass from molecular dynamics simulations: the role of the potential. J. Chem. Phys. 141, 024507 (2014).

Xiang, Y., Du, J., Smedskjaer, M. M. & Mauro, J. C. Structure and properties of sodium aluminosilicate glasses from molecular dynamics simulations. J. Chem. Phys. 139, 044507 (2013).

Rasmussen, C. E. & Williams, C. K. I. Gaussian Processes for Machine Learning (MIT Press, Cambridge, 2006).

Rosenblatt, F. The perceptron: a probabilistic model for information storage and organization in the brain. Psychol. Rev. 65, 386–408 (1958).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning (The MIT Press, Cambridge, 2016).

Kratsios, A. Characterizing the Universal Approximation Property. https://arxiv.org/abs/1910.03344 [cs, math, stat] (2020).

Cawley, G. C. & Talbot, N. L. C. On over-fitting in model selection and subsequent selection bias in performance evaluation. J. Mach. Learn. Res. 11, 2079–2107 (2010).

Karl, P. Notes on regression and inheritance in the case of two parents. Proc. R. Soc. Lond. 58, 240–242 (1895).

Shrikumar, A., Greenside, P. & Kundaje, A. Learning Important Features Through Propagating Activation Differences. https://arxiv.org/abs/1704.02685 [cs] (2019).

Shapley, L. S. A Value for n-Person Games. (1952).

Lundberg, S. M. et al. Explainable AI for Trees: From Local Explanations to Global Understanding. https://arxiv.org/abs/1905.04610 [cs, stat] (2019).

Ayush, K., Uzkent, B., Burke, M., Lobell, D. & Ermon, S. Generating Interpretable Poverty Maps using Object Detection in Satellite Images. https://arxiv.org/abs/2002.01612 [cs] (2020).

Molnar, C. Interpretable Machine Learning: A Guide for Making Black Box Models Explainable. ISBN 9780244768522 (2020).

Acknowledgements

This research was conducted in the Multiscale & Multiphysics Mechanics of Materials Research Laboratory (M4RL) at the University of Rhode Island and the supports that have made this laboratory possible are acknowledged. The authors acknowledge Bluewaves High-Performance Research Computing at the University of Rhode Island for providing computer clusters and data storage resources that have contributed to the research results reported within this paper. The authors also thank the IIT Delhi HPC facility for the computational resources.

Author information

Authors and Affiliations

Contributions

S.D. and N.M.A.K. designed this research. G.A.L, H.L., and M.Z. conducted the ML analysis. G.A.L. wrote the first draft of this paper. S.D. and N.M.A.K. supervised the research and contributed to the manuscript revision. All authors approved the final version of the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lyngdoh, G.A., Li, H., Zaki, M. et al. Elucidating the constitutive relationship of calcium–silicate–hydrate gel using high throughput reactive molecular simulations and machine learning. Sci Rep 10, 21336 (2020). https://doi.org/10.1038/s41598-020-78368-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-020-78368-1

This article is cited by

-

A novel interpretable machine learning model approach for the prediction of TiO2 photocatalytic degradation of air contaminants

Scientific Reports (2024)

-

Machine learning in concrete science: applications, challenges, and best practices

npj Computational Materials (2022)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.