Abstract

This paper investigates the influence of a known cue on the oblique effect in orientation identification and explains how subjects integrate cue information to identify target orientations. We design the psychophysical task in which subjects estimate target orientations in the presence of a known oriented reference line. For comparison the control experiments without the reference are conducted. Under Bayesian inference framework, a cue integration model is proposed to explain the perceptual improvement in the presence of the reference. The maximum likelihood estimates of the parameters of our model are obtained. In the presence of the reference, the variability and biases of identification are significantly reduced and the oblique effect of orientation identification is obviously weakened. Moreover, the identification of orientation in the vicinity of the reference line is consistently biased away from the reference line (i.e., reference repulsion). Comparing the predictions of the model with the experimental results, the Bayesian Least Squares estimator under the Variable-Precision encoding (BLS_VP) provides a better description of the experimental outcomes and captures the trade-off relationship of bias and precision of identification. Our results provide a useful step toward a better understanding of human visual perception in context of the known cues.

Similar content being viewed by others

Introduction

The identification of orientation is a basic visual cognitive task for humans and some animals. The cardinal (i.e., vertical or horizontal) orientations are perceived with higher accuracy than the oblique ones. This visual neuropsychological phenomenon is called the oblique effect1,2. That is to say, the cardinal orientations can be estimated by the observers with smaller squared or absolute error than the oblique orientations. The oblique effect is the best-known examples of vision perception and it has been confirmed to exist in a great variety of tasks in visual cognition3,4. The oblique effect has underlying neural mechanisms. There are more neurons responding to cardinal orientations than to the oblique orientations in the primary visual cortex5,6. Significant anisotropy in human visual cortical activity is discovered through visual cortical fMRI7. There are two types of oblique effects: the merely related to low-level visual processing in the primary visual cortex (type I) and the higher-level oblique effect enhancing cognitive processes (e.g. remembering, categorizing or identifying) (type II)8,9. The oblique effect occurs after binocular fusion and depends on perceived, rather than physical orientation10. The perception results belong to the "type II" oblique effect in a fine-identification task11.

Many studies on orientation perception show that identification outcomes have some characteristics. Westheimer found that there is a prominent oblique effect in the two-interval forced choice orientation judgments. And the oblique effect also appears in some visual tasks involving widely-separated elements12. In reproducting angled lines experiment, these errors of perceived orientations show a sinusoidal variation and reproducted orientations always tend toward the nearest 45° oblique13. The magnitude of the deviation from the cardinal orientations has a non-monotonic pattern and exhibit repulsive biases away from the cardinal orientations in an orientation-estimation task14. In an orientation-estimation task, the subjects’ discriminability is best at the cardinals, showing that orientation judgements are more accurate at cardinal (horizontal and vertical) orientations15. And the perceptual bias toward oblique orientations was reduced when oriented stimuli were more uncertain15,16.

Moreover, the hidden reference axes or explicit references often affect perceptual outcomes in visual identification11,12,16,17,18,19,20,21. Specially, based on local contours, the observers may form an implicit axis (i.e., reference axes) in a visual task. It is assumed that the subjects use the cardinal directions as implicit “reference axes”17,18. In general, gravitational, egocentric and visual reference frames may be considered in the visual task3 and the three frames are often aligned19. In a fine direction discrimination task using moving random-dot stimuli, the subjects reported whether the direction of motion was clockwise or counterclockwise of a decision boundary indicated by a bar outside the edge of the dot-field. The observers’ perception of the direction of motion is biased away from the boundary (the unknown explicit reference)20. In forced-choice orientation discrimination, when the reference and the stimulus are presented simultaneously and there is a sufficient distance between them to avoid orientation interaction, the oblique effect of discrimination is reduced considerably12. Rauber and Treue conducted an estimation task of direction, in which an unknown-direction line segments presented simultaneously with the moving random-dot pattern at the edge of motion area. There are the robust systematic biases which deviate away from the nearest horizontal cardinal direction. Their results also show that the subjects may have selected different references and their values to estimate goals according to experimental tasks and stimuli18. Wiese and Wenderoth21 have reproduced Rauber and Treue’s experiments in which the moving dots and the reference line presented simultaneously. Their experiment results also exhibit reference repulsion from the horizontal cardinal directions. But there is no unified statement about the influnce of the references. Zamboni et al. asked subjects estimated the direction of motion in the presence or absence of unknown reference lines. The unknown reference line was randomly chosen for each trial and presented at the edge of a circular aperture. The estimated mean directions were influenced by the position of the reference line11. Patten et al. used experiments to explore the behavioral and neural consequences of manipulating stimulus certainty in the context of orientation processing. The reference line on outside of the stimulus was chosen to prevent a biased representation of cardinal and primary oblique orientations and was not used as an independent variable16.

Meanwhile, the congnitive process of cues integration is a higher-level process and the vision perception can be described as a Bayesian inference process11,15,22,23,24. Bayesian inference theories successfully explicate many visual neuropsychological phenomena25,26,27,28,29. The Bayesian inference framework provides the probabilistic way in which the target orientations are optimally estimated by integrating current sensory input and prior knowledge of these targets30. Once there exist the contextual cues in the visual task, humans can integrate available information of cues to achieve the optimal or suboptimal perception results in a complex environment, such as in sensorimotor learning26. Based on the framework of Bayesian inference, Ma and Jazayeri27 studied how subjects integrate the contextual cues information to obtain measurements of task. Furthermore, Ma et al.31 and Rich et al.32 concluded that Bayesian inference can be implemented through the probabilistic population coding model.

Although there are many studies on orientation identification, the psychophysical experiments and the involving Bayesian orientation identification model under the condition that the known reference line intersects with the target has not been considered in previous studies. Here we investigate the influence of a known oriented reference line on orientation identification, and provide an explanation of the identification outcomes under the Bayesian inference framework. To address this issue, we design the orientation identification task. Eleven participants completed the orientation identification task with and without reference line. The experimental results suggest that the known tilted reference line significantly affects the identification accuracy and the oblique effect of orientation identification is obviously weakened.

We then provide an explanation of the identification outcomes in the presence of the reference line under the Bayesian inference framework. We model the orientation identification as a probabilistic encoding–decoding process. The Equal-Precision encoding (the same precision across stimuli and trials) and Variable-Precision encoding (precision vary across stimuli) patterns are considered in the encoding process. Comparing the predictions of model to the experimental data, we find that the data are best explained by Bayesian Least Squares estimator under the Variable-Precision (BLS_VP) encoding pattern.

Materials and methods

Ethics statement

The procedures were carried out in accordance with the National Commission for the Protection of Subjects of Behavioral Research guidelines for human studies. Before the study was initiated, informed consent was acquired for each subject and approval was obtained from the local Ethics Committee of Nanjing University of Aeronautics and Astronautics (IRB number 201807).

Participants

Eleven subjects participated in the orientation identification task (four females and seven males; age range 18–28 years old). All of them have normal or corrected-to-normal visions and they were naive to the purpose of the experiments.

Apparatus and stimuli

Line orientation stimuli and a known reference line were presented on a 21 in. liquid crystal display (LCD) with 120 Hz refresh rate and 1024 × 768 pixel resolutions. In order to suppress the influence of the edges of the screen on identification, all stimuli were presented in a central gray disk with 400 pixels in radius. Luminance was set to the middle range (16 cd/m2). We kept the room’s lights off for the entire duration of each trial session. Subjects’ head positions were controlled using a chin and forehead rest located 54 cm away from the computer screen. The height was individually adjusted to hold the subjects’ eyes level to the center of the screen in order to ensure the gravitational, egocentric or body-centered are aligned. The PsychoPy software package33 was used to generate the stimuli and the reference line, and record timing and the estimates. The target stimulus was a green line (length 500 pixels; width 2 pixels; RGB [0, 255, 0]) and the reference line was a light gray dashed line (length 200 pixels; width 1 pixel; RGB [200, 200, 200]).

We chose the orientation of reference line at 45°. This is because the identification precisions of five oblique orientations (i.e., 15°, 30°, 45°, 60° and 75°) were lower than the precision of cardinal orientations34. And the oblique orientations near the 45° reference line may be least affected by the cardinal direction. We did the similar preliminary experiments of orientation identification. The results of preliminary experiments showed there is no significant difference in the identification precision in the vicinity of the cardinal orientations under two situations (reference presence and absence) (Supplementary Tables S1, S2 and Fig. S1). So we reduced the range of orietations only by looking at the orientation near the reference line. The stimulus orietations were selected randomly from Uniform (22, 68). And sampling the stimuli from the interval [22°, 68°] can decrease the impact of hidden cardinal contour on identification, since the edges corresponding to these stimulus angles are relatively far away from the horizontal and vertical axes. The subjects can use the cardinal (horizontal/vertical) directions as implicit “reference axes”19,35. For convenience, we chose 25 target stimulus orientations (22°, 24°, …, 44°, 45°, 46°, 48°, …, 68°) from the interval [22°, 68°]. Each of the 25 values was repeated 16 times, for a total of 400 trials.

Experimental procedure

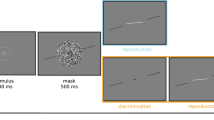

In this orientation identification task, the subjects identified a target orientation and entered estimated value via the keyboard. Each trial started with the appearance of a central fixed point in the gray disk for 700 ms. Then the target stimulus line and the known reference line were displayed simultaneously on the screen (Fig. 1a). The subjects were instructed to estimate and input the values of target orientations via the keyboard (if making a mistake, the subjects can press R to re-enter). The estimated values were recorded.

The orientation identification task in the presence of the 45° reference with the correct feedback. (a) Procedure of orientation identification task with the correct feedback in the learning phase. The trial started with the appearance of a central point presented in the gray disk. After 700 ms interval, a target stimulus (solid line) was displayed along with a 45° tilted reference line (dotted line) in the middle of the screen without time limit. Subjects entered the numbers of estimate for the target orientation via the keyboard. Then the true orientation values were given, followed by 700 ms interval. (b) The cognitive angle. The angle between the target line and the known tilted reference line is called the cognitive angle and is denoted as \(\alpha\). The orientation of the stimulus and the known reference are denoted as \(\theta\) and R respectively. (c) Reference repulsion: the small cognitive angles \(\alpha\) have a tendency to overestimate so that there are systematic biases away from the reference line.

To ensure that the subjects have enough time to adapt their responses to the range of target orientations and further obtain the prior knowledge of target orientations, each subject performed 400 trials before the experiment, which is called the Learning phase. In the Learning phase, the correct feedback of each stimulus was provided after the estimate was input, followed by 700 ms time interval (Fig. 1a). After completing all trials in the learning phase, each subject carried out the orientation identification experiments without feedback, which is called the Estimating phase. Each subject completed 800 trials in the estimating phase. These two phases of psychophysical tests were performed on different days. The subjects first performed control experiment without the known reference line. Other than the presence of the known reference line, the procedure of control experiment is the same as the one with reference. Prior to the experimental trials, each subject was informed about the degree of the reference line and underwent some practice to familiarize oneself with the tasks and procedure. The subjects were asked to rest for at least 10 s when the experiments were carried out in half. There was no time limit to identify a target stimulus for each subject.

Data analysis

To evaluate the perceptual performance in each situation, we first calculated three metrics for each target orientation separately. These three metrics are defined as follows.

-

1)

Identification bias (BIAS): the mean of the estimation errors of orientations. That is, the bias of the j th target stimulus \(s_{j}\) is \(BIAS_{j} = \sum\nolimits_{{{\text{i}} = 1}}^{n} {{{\left( {x_{ji} - s_{j} } \right)} \mathord{\left/ {\vphantom {{\left( {x_{ji} - s_{j} } \right)} n}} \right. \kern-\nulldelimiterspace} n}}\), where \(x_{ji}\) is the i th estimate of \(s_{j}\) and n is the number of the stimulus \(s_{j}\) appears in the experiment. The value BIAS is signed and reflects the degree to which a subject’s estimates deviates on average from the true stimulus value. If the sign is positive, it means that the true stimulus is overestimated, and vice versa.

-

2)

Standard deviation of identification (SD): The SD value of \(s_{j}\) is \(SD_{j} = \sqrt {\sum\nolimits_{{{\text{i}} = 1}}^{n} {{{\left( {x_{ji} - \overline{x}_{j} } \right)^{2} } \mathord{\left/ {\vphantom {{\left( {x_{ji} - \overline{x}_{j} } \right)^{2} } n}} \right. \kern-\nulldelimiterspace} n}} }\), where \(\overline{x}_{j} = \sum\nolimits_{i = 1}^{n} {x_{ji} /n}\) is the mean of estimates for \(s_{j}\). The SD value reflects the variability of the estimated orientations. The smaller the value SD, the higher the identification precision of the estimates, and vice versa.

-

3)

Mean squared error of identification (MSE): The MSE of \(s_{j}\) is \(MSE_{j} = \sum\nolimits_{{{\text{i}} = 1}}^{n} {{{\left( {x_{ji} - s_{j} } \right)^{2} } \mathord{\left/ {\vphantom {{\left( {x_{ji} - s_{j} } \right)^{2} } n}} \right. \kern-\nulldelimiterspace} n}}\). The value MSE is a measure of the overall quality of orientation identification. The smaller the value MSE, the better the quality, and vice versa.

In each situation with or without reference line, we computed these three metrics for each of 25 stimulus orientations (referred to by subscript j): \(BIAS_{j} ,SD_{j} \;and\;MSE_{j} \;(j = 1, \ldots ,25).\) The three metrics satisfied: \(MSE_{j} = BIAS_{j}^{2} + SD_{j}^{2} \;(j = 1, \ldots ,25).\) The overall BIAS and SD of single subject’s data are defined as: \(BIAS = \sqrt {\sum\nolimits_{j = 1}^{25} {{{BIAS_{j}^{2} } \mathord{\left/ {\vphantom {{BIAS_{j}^{2} } {25}}} \right. \kern-\nulldelimiterspace} {25}}} }\) and \(SD = \sqrt {\sum\nolimits_{{{\text{j}} = 1}}^{25} {{{SD_{j}^{2} } \mathord{\left/ {\vphantom {{SD_{j}^{2} } {25}}} \right. \kern-\nulldelimiterspace} {25}}} } .\) The corresponding overall mean squared error of identification is defined as: \(MSE_{S} = BIAS^{2} + SD^{2}\), which highlights the trade-off between bias and identification precision. We computed these three metrics for all subjects’ data. A repeated-measures two-way ANOVA was used to determine any significant influences of the reference on the identification outcomes.

Bayesian cue integration model of orientation identification

To quantitatively explain the psychophysical results, we put forward a cue integration model based on the Bayesian inference framework. The subjects are assumed to be Bayesian observers who can optimally estimate target orientations in a noisy environment by integrating prior knowledge of target orientations and current sensory input26. The identification process of orientation is modeled as Bayesian probabilistic inference, and consists of an encoding stage and a Bayesian decoding stage36,37 (Fig. 2a). We assume that the observers consider the known reference line as a distinct sensory cue, which provides the subjects with lots of additional contextual information. Let \(\theta\) be the target orientation, and \(R = 45^{{\text{o}}}\) be the known reference’s orientation. Define \(\alpha = \theta - R\) as the cognitive angle (Fig. 1b). Thus, the estimation of a target orientation is transformed into the estimation of an cognitive angle \(\alpha\).

Bayesian cue integration model of orientation identification in the presence of a known reference. (a) Diagram of the Bayesian inference process of orientation identification in the presence of a known reference. The process consists of the encoding stage and the Bayesian decoding stage. In the encoding stage, because of sensory measurement noise, the cognitive angle \(\alpha\) is encoded as a internal visual sensory measurement or evidence,\(\alpha_{m}\), which is characterized by the conditional probability distribution, \(p(\alpha_{m} |\alpha ,\sigma )\), where \(\sigma\) is the standard deviation of inter visual sensory measurement. In the Bayesian decoding stage, the angle estimator \(\alpha_{e}\) is specified based on the posterior distribution \(p(\alpha |\alpha_{m} ,\sigma )\) and the cost function \(L(\alpha ,\alpha_{e} )\). The estimator of target orientation \(\theta_{e}\) is equal to the sum of \(\alpha_{e}\) and the known reference orientation R. (b) Two types of encoding precision of internal visual sensory measurement. Equal-Precision encoding (EP): \(p(\alpha_{m} |\alpha_{i} ,\sigma ) = N(\alpha_{m} ;\alpha_{i} ,\lambda^{2} ).\) The conditional distribution has a fixed standard deviation for all cognitive angles. Variable-Precision encoding (VP): \(p(\alpha_{m} |\alpha_{i} ,\sigma ) = N(\alpha_{m} ;\alpha_{i} ,(\lambda \left| {\alpha_{i} } \right|)^{2} ).\) The standard deviation grows linearly with its mean,\(\sigma_{i} = \lambda |\alpha_{i} |\) (\(\lambda\) refer to as the Weber fraction).

In the encoding stage, as a result of sensory noise, the subjects obtain the cognitive angle’s sensory measurement or evidence \(\alpha_{m}\), which may differ from the true angle \(\alpha\). The primary visual cortex implements the encoding process in brain38. Repeated appearance of the same target orientation resulted in slightly different measurements, which could be described by a conditional probability distribution \(p(\alpha_{m} |\alpha ,\sigma )\). We model the conditional probability distribution of sensory measurement as a Gaussian distribution with mean of \(\alpha\) (Fig. 2b). Two types of encoding precision of the conditional probability distribution are considered39,40,41:

-

Equal-Precision (EP) encoding (Fig. 2b), in which sensory measurements have the same precision across trials and stimuli, \(\sigma = \lambda\). In this case, the conditional probability distribution of sensory measurement \(\alpha_{m}\) can be denoted by \(p_{EP} (\alpha_{m} |\alpha ,\lambda ) = N(\alpha_{m} ;\;\alpha ,\lambda^{2} )\).

-

Variable-Precision (VP) encoding (Fig. 2b), in which sensory measurement precision vary across stimuli. We take the standard deviation grows linearly with its mean, \(\sigma = \lambda |\alpha |\) (see Supplementary Fig. S2), where \(\lambda\) is referred as the Weber fraction associated with the measurement. The characteristic of the encoding is called scalar variability42,43,44. Then, \(p_{VP} (\alpha_{m} |\alpha ,\lambda ) = N(\alpha_{m} ;\;\alpha ,\lambda^{2} \alpha^{2} )\).

In the decoding stage, the Bayesian observer can combine with the prior probability distribution of target angle \(p(\alpha )\) and the conditional probability of sensory measurement \(p(\alpha_{m} |\alpha ,\lambda )\) to compute the posterior distribution of target angle \(p(\alpha |\alpha_{m} ,\lambda )\) (Fig. 2a). Then, given a cost function \(L(\alpha ,\alpha_{e} )\) which quantifies the cost of estimating true orientation \(\alpha\) as \(\alpha_{e}\), the observer obtains the Bayesian estimator \(\alpha_{e}\) of true angle \(\alpha\) by minimizing the expected posterior risk. According to the relationship between the target orientation \(\theta\) and the cognitive angle \(\alpha\), the estimator of the stimulus orientation is derived, that is, \(\theta_{e} = R + \alpha_{e}\).

To estimate the cognitive angle, the subjects need to compute the posterior distribution of target orientation \(p(\alpha |\alpha_{m} ,\lambda )\) using Bayes’ rule as follows:

where \(\alpha_{\min }\) and \(\alpha_{\max }\) are the minimum and maximum value of supporting set of prior distribution of cognitive angle, respectively. The prior distribution of cognitive angle \(p(\alpha )\) is a uniform distribution.

Given an objective cost function \(L(\alpha ,\alpha_{e} )\), Bayesian estimator of the angle \(\alpha\) is chosen to minimize the expected cost averaged over the posterior distribution of target orientation. According to the relationship of the cognitive angle and target orientation, the observer could infer the estimator of target orientation,

When the subjects chose the cost function \(L(\alpha ,\alpha_{e} ) = - \delta (\alpha - \alpha_{e} )\), where \(\delta ( \cdot )\) denotes the Dirac delta function, the corresponding Bayesian estimator is obtained by maximizing a posteriori (MAP), then

Under Equal-Precision (EP) encoding, the conditional probability of sensory measurement is \(p_{EP} (\alpha_{m} |\alpha ,\lambda ) = N{\kern 1pt} {\kern 1pt} (\alpha_{m} ;\alpha ,\lambda^{2} )\). Then, the Maximum posteriori estimator is denoted by

Under Variable-Precision (VP) encoding, the conditional probability of sensory measurement is \(p_{VP} (\alpha_{m} |\alpha ,\lambda ) = N(\alpha_{m} ;\alpha ,\lambda^{2} \alpha^{2} )\) and the Maximum posteriori estimator is a function of \(\alpha_{m}\) and \(\lambda\), that is,

When the cost function is the square error between the estimate and the stimulus, \(L(\alpha ,\alpha_{e} ) = (\alpha - \alpha_{e} )^{2}\), the corresponding Bayesian estimator is the mean of the posterior distribution of the angle which is called Bayesian least squares (BLS) (See Appendix A),

Under the EP and VP encoding, we can also obtain the corresponding Bayesian estimators \(\theta_{e,BLS\_EP}\) and \(\theta_{e,BLS\_VP}\), respectively. The two estimators have no analytical expressions because integrals of Eq. (6) are not analytically solvable. We can obtain the approximate values of two estimators using the Simpson rule to compute the two integrals in Eq. (6).

Parameter estimation of model

Based on the experimental data in the presence of reference line, the maximum-likelihood estimates of parameter of model are obtained using Monte Carlo simulations. There is one parameter \(\lambda\) in model: the standard deviation of the sensory measurement distribution, \(\sigma = \lambda\) (equal precision pattern), or Weber fraction, \(\sigma = \lambda |\alpha |\) (variable precision pattern). We wish to find the most probable parameter value which maximizes the likelihood function given the psychophysics experimental data set. Let \(\alpha^{j}\) be the j-th cognitive angle stimuli and \(\alpha_{e}^{ji}\) be the i-th estimate of \(\alpha^{j}\). The experimental data set is expressed as \(\left\{ {(\alpha^{j} ,\alpha_{e}^{ji} )} \right\}_{i = 1, \ldots ,n}^{j = 1, \ldots ,k}\), where \(k\) is the number of target orientations and \(n\) is the number of \(\alpha^{j}\) appears in the experiment. The joint conditional probability of \(\left\{ {\alpha_{e}^{ji} } \right\}_{i = 1, \ldots ,n}^{j = 1, \ldots ,k}\) is served as a likelihood function of parameter \(\lambda .\) Assuming that the experimental data \(\left\{ {\alpha_{e}^{ji} } \right\}_{i = 1, \ldots ,n}^{j = 1, \ldots ,k}\) associated with any angle \(\alpha\) are conditionally independent, the likelihood function can be expressed as the product of their individual conditional probabilities as follows:

where M denotes the one of four types of the estimators. By taking the logarithm of both sides, we can obtain

Based on the conditional independence of Bayesian network, the variables \((\alpha ,\lambda )\) and the estimator of angle \(\alpha_{e}\) are conditionally independent given sensory measurement \(\alpha_{m}\). The conditional probability \(p(\alpha_{e} |\alpha ,\lambda )\) can be expressed as

where \(\delta ( \cdot )\) denotes the Dirac delta function.

Since the probability in Eq. (8) cannot be computed analytically, we estimate numerically the sum of the logarithm likelihood function by Monte Carlo simulations41. For a given parameter value \(\lambda\), type M and the j th cognitive angle \(\alpha^{j} ,\) we perform the following procedure to compute the probability \(p(\alpha_{e}^{ji} |\alpha^{j} ,M,\lambda )\): 800 sensory measurement values are randomly drawn from the conditional probability distribution \(p(\alpha_{m} |\alpha^{j} ,\lambda )\) and the sensory measurement data set \(\{ \alpha_{m}^{j,i = 1, \cdots ,800} \}\) is obtained. Then each \(\alpha_{m}^{ji}\) is substituted separately into Eqs. (4), (5) and (6), and the data sets of the corresponding estimates \(\{ \hat{\alpha }_{e}^{j,i = 1, \ldots ,800} \}\) are obtained. The probability \(p(\alpha_{e}^{ji} |\alpha^{j} ,M,\lambda )\) is approximately equal to the frequency that \(\alpha_{e}^{ji}\) appears in \(\{ \hat{\alpha }_{e}^{j,i = 1, \ldots ,800} \}\). Thus, the sum of the logarithm likelihood function of experiment data (Eq. 8) can be calculated approximately.

We can find the maximum likelihood estimates of the parameters that maximized Eq. (8). Given subject’s data and the type M, we vary the value for \(\sigma\) in steps of 0.01 between 1 and 8, and the value for \(w\) in steps of 0.01 between 0.1 and 0.4. For each parameter value, we obtain the sum of logarithm likelihood function of experimental data. The parameter value which maximizes Eq. (8) is an approximated value for the maximum likelihood estimate of the parameter.

Results

Experimental results

For each subject, except for trials data in the learning phase, we collected 800 trials data in the estimating phase. By analyzing experimental data, the following three features are revealed from the experimental data.

First, the identification precision is obviously improved when the known reference line is presented (Fig. 3a). In the absence of reference, the SD values of 25 target oblique orientations range from 4.43° to 6.15° and are obviously larger than the cardinal orientations’ SD values (0° (0.501), 90° (1.114)) which is from the results of our preliminary experiment (Supplementary Table S1). In the presence of reference, as the target orientation moves closer to the reference line, the identification precision becomes higher. All subjects can accurately discriminate the stimuli of the 45°, 44° and 46° (Fig. 3a). We make 2 (reference situation) × 25 (orientation) repeated-measures two-way ANOVA for the SD. The results suggest that the known reference line significantly affects the precision of identification (\(F_{1,500} = 3{73},\;p < 0.0001\)) and the interaction between “reference situation” and “orientations” has significant effects on the SD (\(F_{24,500} = 3.57,\;p < 0.0010\)).

The standard deviation and bias of orientation identification across all subjects in both situations. (a) The precision of identification across all subjects as a function of target orientations. The triangles represent the SD values with reference and the dots represent the SD values without reference. (b) The contrast histogram for the BIAS of orientation identification across 11 subjects in the two situations.

Second, the biases of identification across all subjects in two situations present different characteristics. The known reference line significantly affects the BIAS (\(F_{1,500} = 4,\;p = 0.0455\)). When the reference line is absent, the stimuli of less than 45° are overestimated and the ones more than 45° are underestimated (Figs. 3b, 4a left panel). The phenomenon is known as the regression effect45,46,47. In the presence of reference, the reference line divides the stimulus interval into two parts and there exist two-regression effects in the two sub-intervals. Moreover, in the vicinity of the reference line, the stimuli of less than 45° are underestimated, while those over 45° are overestimated (Figs. 3b, 4a right panel). The sign of biases is opposite to the ones without the reference line. The biases of orientation perception show the reference repulsion phenomenon18. The biases of orientation identification in the vicinity of the orientation of 45° decrease dramatically. The results of repeated-measures two-way ANOVA illustrates the interaction between reference situation and orientations has significant effects on the BIAS (\(F_{24,500} = 106.93,\;p < 0.0001\)).

Orientation-identification results across all subjects in the presence of reference. (a) The error bar (mean ± 1 standard deviation across eleven subjects) for the 25 stimulus orientations with and without reference. The diagonal (dotted line) indicates that the estimates and target orientations coincide. (b) The SD versus BIAS for the data from each individual subject and total data in the presence of reference. Dashed quarter circles show the overall MSE values associated with the corresponding SD and BIAS. There is a trade-off between the BIAS, SD and MSE.

Third, in the presence of reference, experimental data show that there is a reasonable trade-off between the precision and the bias of identification (Fig. 4b). Suppose each subject has stable identification ability (MSES), according to the relationship among the three metrics, the BIAS decreases when the SD increases, and vice versa. Figure 4b shows that the precision of identification tends to be improved by adjusting the bias of estimation. They reasonably consider the relationship of BIAS and SD in an identification task.

In summary, the experimental results show that the known reference line has a remarkable impact on the orientation identification. The findings indicate that the subjects can integrate the information of the known reference into orientation identification. Moreover, the known reference line might be one of the important factors that affect the identification.

Model fitting and comparison

We put forward a Bayesian cue integration model to account for these identification results. Based on different assumptions of encoding precision of sensory stimulus and cost functions in decoding process, we obtain four Bayesian estimators: MAP_EP (Equal-Precision Maximum posteriori estimator), BLS_EP (Equal-Precision Bayesian least squares estimator), MAP_VP (Variable-Precision Maximum posteriori estimator), BLS_VP (Variable-Precision Bayesian least squares estimator). Each estimator has one parameter: the standard deviation of the sensory measurement distribution, \(\lambda = \sigma\) (equal precision pattern), or Weber fraction, \(\lambda = w\) (variable precision pattern). For each subject’s data and total data across eleven subjects, the maximum likelihood estimate of parameter (\(\sigma\) or \(w\)) is approximately obtained.

We compare each model’s predictions to the experimental data using the trade-off between the BIAS, SD and MSE. At the same time, we also used the cross-entropy (CE) to compare the four models. It is computed as \(CE = - \frac{1}{N}\sum\nolimits_{{\text{i = 1}}}^{N} {\sum\nolimits_{k = 1}^{25} {p_{ik} \log \hat{p}_{ik} } }\), where N is the number of samples, k is the number of classification labels, and \(p_{ik}\) and \(\hat{p}_{ik}\) are the actual probability and estimated probability respectively. The values for three metrics and cross-entropy can be found in Supplementary informations (Table S4).

We can also theoretically compute the mean and variance of four Bayesian estimators through the Eqs. (4), (5) and (6). The mean and variance of MAP_EP estimator are as follows:

The mean and variance of MAP_VP estimator are as follows:

The means and variances of BLS_EP and BLS_VP estimators can be numerically approximated using Monte Carlo simulations.

The Variable-Precision Bayesian models better fit the experimental data than the Equal-Precision models, and the BLS_VP estimator is better than the other three estimators. As we see from the Table 1, the MSES and CE scores of Variable-Precision estimators obviously are less than that of Equal-Precision counterparts. Figure 5 shows that the Variable-Precision models provide a reasonable description of the precision of experimental data, especially in the vicinity of 45°. Figure 6 displays four contrast histograms for the biases of total data and the biases of model predictions. The MAP_EP estimator is theoretically optimal due to the unbiasedness (Eq. 10), but it clearly fails to capture the characteristic of experimental data (Figs. 5b, 6a). The prediction biases of the MAP_VP and BLS_EP models also fail to account for the phenomenon of reference repulsion (Fig. 6b,c). The BLS_VP model best explains the reference repulsion and two-regression effects of the experimental data (Figs. 5e, 6d).

Identification of orientation as a function of stimulus orientation in the presence of reference. (a) Circles and error bar: the total data across eleven subjects. Shaded regions are mean ± 1 s.d. The MSE is the mean squared error between subjects’ identification and true stimuli. (b–e) Circles and error bar for predictions of each Bayesian model. The shaded regions are these predictions (simulated mean ± 1 s.d.). Each MSE is correspondingly the mean squared error between subjects’ identification and the prediction of each model. Each CE is correspondingly the cross entropy.

Figure 7 illustrates that the BLS_VP model is obviously better than the others at capturing the trade-off relationship between the bias and precision of the data. The BIAS of the MAP estimator are obviously less than that of experimental data and their SDs are larger than that of the data (Fig. 7a,c,d). The BLS_EP model also underestimates the BIAS of orientations and overestimates the SDs of the data across all subjects (Fig. 7b left panel, c,d). However, the BIAS and the SD of the BLS_VP estimator are consistent with the experimental results (Fig. 7b right panel, c,d).

The BIAS and SD of identification for experimental data and model predictions. (a) The BIAS (triangle) and SD from the MAP_EP (left) and MAP_VP (right) models as the functions of BIAS and SD computed directly from the data for each subject and total data. Diagonal dashed line represents that the simulated and real data coincide. (b) The BIAS and SD from the BLS_EP (left) and BLS_VP models (right). (c) The SD versus BIAS for total data in the presence of reference. Dashed quarter circles show that the MSE value associated with the BIAS and SD is 10.365. (d) The SD versus BIAS for the data from the third subject in the presence of reference. Dashed quarter circles show that the associated MSE value is 7.682.

Discussion

Visual perception can be influenced by contextual cues. In this paper, we have investigated the influence of a known reference line on the orientation identification. We design the experiments in which the subjects estimate the orientations of target lines in the presence of a known reference line, and the control experiments without reference are also conducted. In the absence of reference, the oblique effect and the regression effect exist, which is consistent with the results in the previous studies34,44,46.

In the presence of a known reference, the orientation identification performance is obviously improved. The oblique effect is weakened with the improvement of the identification precision of the oblique orientations, especially in the vicinity of the reference line. But the presentation of known reference line may not abolish the type II oblique effect completely. Moreover, we observe the phenomena of two-regression in intervals of (22°, 45°) and (45°, 68°) respectively. A reasonable explanation may be that the stimulus interval is divided into two subintervals by the known reference line, and the orientation identification in the two subintervals respectively presents regression effect. If the orientation of reference is not 45°, the centers of regressions may be correspondingly shifted. Furthermore, there exist robust systematic biases which are deviated from the reference line (i.e., reference repulsion11,18,20). The phenomenon may be caused by the overestimate of the angle between the stimulus and the reference.

At the same time, the observer’s visual system can reasonably trade off the relationship of the bias and the precision to improve the overall identification performance. The observer wants to be able to accurately identify target orientations. But in most cases, there exist systematic deviation and uncertainty. We suppose the identification ability is limited (MSE is fixed). The observer may lower the requirements of bias to improve the identification precision. The trade-off relationships also appeared in judgment-time task44. From the framework of efficient coding the oblique effect may be interpreted as a result of the neural representations of different orientations in sensory systems48,49. The experimental phenomena in the presence of reference could be the results of efficient coding in preference-based decisions.

Bayesian inference provides successful description for the phenomena in many cognitive tasks25,26,29,44,50. In the paper a Bayesian cue integration models are proposed to describe the experimental results. The process of identification consists of two stages: encoding stage and Bayesian decoding stage. For a given stimulus, the encoding in brain is characterized by the conditional probability distribution of the sensory measurement. The equal precision and variable precision patterns are considered in encoding stage.

In decoding stage, a Bayesian estimator is obtained based on the posterior distribution of the angle \(\alpha\) and the cost function. The results show that The Variable-Precision Bayesian models better fit the experimental data and the Variable-Precision Bayesian least squares estimator (BLS_VP) is superior to the others. Our results have revealed that human visual orientation identification can be reasonably described as the outcomes of a Bayesian inference process that optimally integrates the information of known reference into orientation identification. The findings provide an important step toward a better understanding of human visual perception given the known cues.

Data availability

The experiment code, data, and analysis code are available on https://osf.io/wrxs3/.

References

Appelle, S. Perception and discrimination as a function of stimulus orientation: The “oblique effect” in man and animals. Psychol. Bull. 78, 266–278 (1972).

Coppola, D. M., Purves, H. R., Mccoy, A. N. & Purves, D. The distribution of oriented contours in the real world. Proc. Natl. Acad. Sci. USA 95, 4002–4006 (1998).

Gentaz, E. & Ballaz, C. La perception visuelle des orientations et« l'effet de l'oblique ». Annee Psychol. 100, 715–744 (2000).

Appelle, S. & Countryman, M. Eliminating the haptic oblique effect: Influence of scanning incongruity and prior knowledge of the standards. Perception 15, 325 (1986).

Coppola, D. M., White, L. E., Fitzpatrick, D. & Purves, D. Unequal representation of cardinal and oblique contours in ferret visual cortex. Proc. Natl. Acad. Sci. USA 95, 2621–2623 (1998).

Furmanski, C. S. & Engel, S. A. An oblique effect in human primary visual cortex. Nat. Neurosci. 3, 535–536 (2000).

Mannion, D. J., Mcdonald, J. S. & Clifford, C. W. G. Orientation anisotropies in human visual cortex. J. Neurophysiol. 103, 3465–3471 (2010).

Essock, E. A. The oblique effect of stimulus identification considered with respect to two classes of oblique effects. Perception 9, 37–46 (1980).

Panagiota, B. et al. Independent sources of anisotropy in visual orientation representation: A visual and a cognitive oblique effect. Exp. Brain Res. 233, 3097–3108 (2015).

Borra, T., Hooge, I. T. & Verstraten, F. A. A dichoptic study of the oblique effect. Perception 39, 909–917 (2010).

Zamboni, E., Ledgeway, T., Mcgraw, P. V. & Schluppeck, D. Do perceptual biases emerge early or late in visual processing? Decision-biases in motion perception. P. Roy. Soc. B-Biol. Sci. 283, 1–9 (2016).

Westheimer, G. Meridional anisotropy in visual processing: Implications for the neural site of the oblique effect. Vision Res. 43, 2281–2289 (2003).

Lennie, P. Distortions of perceived orientation. Nat. New Biol. 233, 155–156 (1971).

De Gardelle, V., Kouider, S. & Sackur, J. An oblique illusion modulated by visibility: Non-monotonic sensory integration in orientation processing. J. Vision 10, 6–6 (2010).

Girshick, A. R., Landy, M. S. & Simoncelli, E. P. Cardinal rules: Visual orientation perception reflects knowledge of environmental statistics. Nat. Neurosci. 14, 926–932 (2011).

Patten, M.L., Mannion, D.J. & Clifford, C.W.G. Correlates of perceptual orientation biases in human primary visual cortex. J. Neurosci. 37, 3511–3516 (2017).

Campbell, F. W. & Maffei, L. Electrophysiological evidence for the existence of orientation and size detectors in the human visual system. J. Physiol. 207, 635–652 (1970).

Rauber, H. J. & Treue, S. Reference repulsion when judging the direction of visual motion. Perception 27, 393–402 (1998).

Luyat, M., Mobarek, S., Leconte, C. & Gentaz, E. The plasticity of gravitational reference frame and the subjective vertical: Peripheral visual information affects the oblique effect. Neurosci. Lett. 385, 215–219 (2005).

Jazayeri, M. & Movshon, J. A. A new perceptual illusion reveals mechanisms of sensory decoding. Nature 446, 912–915 (2007).

Wiese, M. & Wenderoth, P. What is the reference in reference repulsion?. Perception 37, 1380–1385 (2008).

Kersten, D., Mamassian, P. & Yuille, A. Object perception as Bayesian inference. Annu. Rev. Psychol. 55, 271–304 (2004).

Knill, D. C. & Richards, W. A. Perception as Bayesian Inference (Cambridge University Press, Cambridge, 1996).

Maloney, L. T. & Mamassian, P. Bayesian decision theory as a model of human visual perception: Testing Bayesian transfer. Vis. Neurosci. 26, 147–155 (2009).

Battaglia, P. W., Kersten, D. & Schrater, P. R. How haptic size sensations improve distance perception. PLoS Comput. Biol. 7, 193–195 (2011).

Körding, K. P. & Wolpert, D. M. Bayesian integration in sensorimotor learning. Nature 427, 244–247 (2004).

Ma, W. J. & Jazayeri, M. Neural coding of uncertainty and probability. Annu. Rev. Neurosci. 37, 205–220 (2014).

Fischer, B. & Peña, J. Optimal nonlinear cue integration for sound localization. J. Comput. Neurosci. 42, 37–52 (2017).

Xia, Y., Cambria, E., Hussain, A. & Zhao, H. Word polarity disambiguation using Bayesian model and opinion-level features. Cogn. Comp. 7, 369–380 (2015).

Ma, W. J. Organizing probabilistic models of perception. Trends Cogn. Sci. 16, 511–518 (2012).

Ma, W. J., Beck, J. M., Latham, P. E. & Pouget, A. Bayesian inference with probabilistic population codes. Nat. Neurosci. 9, 1432–1438 (2006).

Rich, D., Cazettes, F., Wang, Y., Peña, J. & Fischer, B. Neural representation of probabilities for Bayesian inference. J. Comput. Neurosci. 38, 315–323 (2015).

Peirce, J. W. PsychoPy—Psychophysics software in Python. J. Neurosci. Methods 162, 8–13 (2007).

Tschopp-Junker, C., Gentaz, E. & Viviani, P. Effect of selective and distributed training on visual identification of orientation. Exp. Brain Res. 202, 605–611 (2010).

Borra, T., Hooge, I. T. C. & Verstraten, F. A. J. The use of optimal object information in fronto-parallel orientation discrimination. Vision Res. 47, 3307–3314 (2007).

Stocker, A. A. & Simoncelli, E. P. Noise characteristics and prior expectations in human visual speed perception. Nat. Neurosci. 9, 578–585 (2006).

Knill, D. C. & Pouget, A. The Bayesian brain: The role of uncertainty in neural coding and computation. Trends Neurosci. 27, 712 (2004).

De Valois, R. L., Yund, E. W. & Hepler, N. The orientation and direction selectivity of cells in macaque visual cortex. Vision Res. 22, 531–544 (1982).

Berg, V. D. R., Shin, H., Chou, W. C., George, R. & Ma, W. J. Variability in encoding precision accounts for visual short-term memory limitations. Proc. Natl. Acad. Sci. USA 109, 8780–8785 (2012).

Bae, G. Y., Allred, S. R., Wilson, C. & Flombaum, J. I. Stimulus-specific variability in color working memory with delayed estimation. J. Vision 14, 1–23 (2014).

Keshvari, S., Van, D. B. R. & Ma, W. Probabilistic computation in human perception under variability in encoding precision. PLoS ONE 7, e40216 (2012).

Gibbon, J. Scalar expectancy and Weber’’s law in animal timing. Psychol. Rev. 84, 279–325 (1977).

Dehaene, S. The neural basis of the Weber–Fechner law: A logarithmic mental number line. Trends Cogn. Sci. 7, 145–147 (2003).

Jazayeri, M. & Shadlen, M. N. Temporal context calibrates interval timing. Nat. Neurosci. 13, 1020–1026 (2010).

Petzschner, F. H., Glasauer, S. & Stephan, K. E. A Bayesian perspective on magnitude estimation. Trends Cogn. Sci. 19, 285–293 (2015).

Petzschner, F. H. & Glasauer, S. Iterative Bayesian estimation as an explanation for range and regression effects: A study on human path integration. J. Neurosci. 31, 17220–17229 (2011).

Stevens, S. S. & Greenbaum, H. B. Regression effect in psychophysical judgment. Percept. Psychophys. 1, 439–446 (1966).

Polanía, R., Woodford, M. & Ruff, C. C. Efficient coding of subjective value. Nat. Neurosci. 1, 134–142 (2019).

Wei, X. X. & Stocker, A. A. A Bayesian observer model constrained by efficient coding can explain “anti-Bayesian” percepts. Nat. Neurosci. 18, 1509–1517 (2015).

Bergmann, J. H. M., Fei, J., Green, D. A., Hussain, A. & Howard, N. A Bayesian assessment of real-world behavior during multitasking. Cogn. Comp. 9, 1–9 (2017).

Acknowledgements

This work was supported by National Natural Science Foundation of China (61374183, 51535005, 51472117), National Key Research and Development Program of China (2019YFA0705400), the Research Fund of State Key Laboratory of Mechanics and Control of Mechanical Structures (MCMS-I-0419K01), the Fundamental Research Funds for the Central Universities (NJ2020003, NZ2020001), the Key Project Foundation of Natural Science Research in Universities of Anhui Province (KJ2019A0557) and the Natural Science Foundation of Anhui Province of China (1908085MA01).

Author information

Authors and Affiliations

Contributions

R.Y. and X.L. developed the design of the study, performed analyses, discussed the results, and contributed to the text of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ye, R., Liu, X. How the known reference weakens the visual oblique effect: a Bayesian account of cognitive improvement by cue influence. Sci Rep 10, 20269 (2020). https://doi.org/10.1038/s41598-020-76911-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-020-76911-8

This article is cited by

-

Reference induces biases in late visual processing

Scientific Reports (2023)

-

The effects of distractors on brightness perception based on a spiking network

Scientific Reports (2023)

-

A Novel Neural Metric Based on Deep Boltzmann Machine

Neural Processing Letters (2022)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.