Abstract

Electroencephalography (EEG) is a method for recording electrical activity, indicative of cortical brain activity, from the scalp. EEG has been used to diagnose neurological diseases and to characterize impaired cognitive states. When the electrical activity of neurons is temporally synchronized, the likelihood that they reach their threshold potential, allowing the signal to propagate to the next neuron, increases. This phenomenon is typically analyzed as an increase in spectral intensity arising from the summation of these neurons firing. Non-linear analysis methods (e.g., entropy) have been explored to characterize neuronal firings, but they analyze only temporal information and not the frequency spectrum. By examining temporal and spectral entropic relationships simultaneously, we can better characterize how neurons become isolated (the signal’s inability to propagate to adjacent neurons), an indicator of impairment. A novel time-frequency entropic analysis method, referred to as Activation Complexity (AC), was designed to quantify these dynamics from key EEG frequency bands. The data were collected during a cognitive impairment study at NASA Langley Research Center, involving hypoxia induction in 49 human test subjects. AC demonstrated significant changes in EEG firing patterns, characterized within explanatory (p < 0.05) and predictive models (10% increase in accuracy). The proposed work sets the methodological foundation for quantifying neuronal isolation and introduces a new potential technique to understand human cognitive impairment for a range of neurological diseases and insults.

Introduction

Electroencephalography (EEG) detects the electrical activity of the brain and analysis of EEG permits tracking variations in brain wave patterns. EEG analysis provides information about a person’s cognitive state such as response inhibition, level of concentration, arousal, and even diagnostic information regarding diseases such as Alzheimer’s, post-cardiac arrest syndrome (hypoxic encephalopathies), and epilepsy1,2,3,4,5,6. There are many types of analyses designed to extract features from EEG signals that examine coherence, intensity of frequency bands, signal entropy, coupling, and source localization to acquire information about cognitive states2,7. These extracted EEG features are then used as the foundation for explanatory and predictive modeling. Typically, two or more of these features are utilized to generate a feature space for predictive models that can predict epilepsy, hypoxia, etc.2. Thus, capturing these new EEG features is paramount to uncovering nascent patterns that provide further insight into the complexities of the human brain and distinguishing impairments.

Literature has demonstrated that conditions like hypoxia, Alzheimer’s, epilepsy, and other neurological issues cause neuronal impairments that change firing patterns. Modification of firing can potentially occur at the intracellular level of an individual neuron or at the intercellular level in how neurons propagate information to each other (neuronal interactions). However, the non-linearity of the processes at both the intracellular and intercellular levels is caused by dynamic behavior7, making it difficult to capture explanatory responses. At the intracellular level, neuronal firing, or the generation of an action potential, demonstrates non-linearities in how thresholding and saturation phenomena are governed7. At the intercellular level, neuronal interactions occur spatially, giving a second dimension to these non-linearities7. These combined neuronal interactions are summed, potentially enabling subsequent neurons to meet their threshold criteria and thus fire as well8,9. These dynamic behaviors, which demonstrate changes in threshold criteria and signal propagation, can be modeled mathematically9,10. However, if a neuron is impaired, it has the potential to impede transmission and prevent subsequent neurons from firing, causing neurons to be functionally and electro-physiologically isolated11. To provide insight into the development of the novel methods presented in this paper, we simulated a single EEG oscillation as it would be produced by functional and by impaired networks of neurons.

Prior work

EEG signals are characterized as non-linear time series because of complexities in cellular processes and signal propagation7,11,12. Even in the case of simple cognitive changes (e.g., sleeping), signal propagation is altered, which ultimately affects whether a neuron’s threshold criterion is met13. Collectively, changes in neuronal signal propagation affect global measurements of electrical activity as measured by the changing intensity/power of EEG band-limited waveforms (e.g., alpha, delta, theta). This relationship between cognitive states and EEG patterns was first documented by Berger14, who noted an attenuation in alpha waves (8–12 Hz) when comparing conscious waking states with rest/sleep15.

These observations became visibly apparent because the EEG alpha frequency is band-limited (8–12 Hz) and its intensity is more dominant during specific conditions (such as sleep). Thus, spectral intensity analysis methods have been the hallmark approaches for EEG analysis7,12, and Fourier methods have been the typical method for analyzing the intensity of specific frequency bands (i.e., delta δ, theta θ, alpha α)16,17. However, EEG signals are non-stationary12, making Fourier approaches problematic since they assume that the signal is infinitely long and stationary18. This assumption is required because Fourier analysis uses sine and cosine waves as its basis functions in order to decompose the signal into its frequency components. To overcome the issue of non-stationarity, the Short-Time Fourier Transform (STFT) is applied17. STFT permits the assumption of signal stationarity by applying windowing (typically into segments of 5–30 seconds)19. However, this type of analysis prevents one from examining how and when frequencies and intensities change within a windowed time segment. This dilemma has been previously identified as it relates to physiological signals18 and is known as the signal processing uncertainty principle20, which is related to Heisenberg’s uncertainty principle in physics21. The principle imposes a fundamental trade-off in signal processing18: to obtain increased time resolution, one loses frequency resolution, and to gain better frequency resolution, one loses time resolution18. Furthermore, strictly examining the raw intensity leads to high variability between subjects because variability is inevitably imposed by electrode conductance. Additional methodologies are required to combat this issue.
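To give a sense of scale for this trade-off, the following minimal sketch computes the nominal frequency resolution (the spacing between Fourier bins, 1/T) for an analysis window of duration T. The function name `frequency_resolution_hz` is illustrative and does not come from the original work, and the 1/T figure assumes a plain rectangular window.

```python
def frequency_resolution_hz(window_seconds: float) -> float:
    """Nominal spacing between Fourier bins for a window of duration T (1/T)."""
    if window_seconds <= 0:
        raise ValueError("window length must be positive")
    return 1.0 / window_seconds


# Window lengths taken from the 5-30 second range quoted in the text.
for window in (5.0, 30.0):
    resolution = frequency_resolution_hz(window)
    print(f"{window:.0f} s window: bins {resolution:.3f} Hz apart, "
          f"changes localized only to within {window:.0f} s")
```

A 30 second window separates bins by about 0.033 Hz, but any change in intensity can only be attributed to somewhere within that 30 second segment. A 5 second window localizes changes better in time at the cost of 0.2 Hz bins.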

More current literature aims to determine more suitable non-linear analyses for examining EEG complexity using various types of entropy measurements to obtain informative features that can detect cognitive states and diseases2,7,12,22. The general underlying concept of these entropy measurements is based on examining complex sequences for similarities in patterns to quantify the predictability of the sequence. If the entropy is low, there are many patterns that are similar and the sequence is highly predictable. On the other hand, if the entropy is high, the sequence has fewer similar patterns and is less predictable.
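As a concrete illustration of this idea, the sketch below implements one common temporal entropy measure, sample entropy, in plain Python. It is a minimal sketch and not the implementation used in this study: the function name, the default template length `m = 2`, and the use of an absolute tolerance `r` are assumptions made for illustration.

```python
import math
import random


def sample_entropy(series, m=2, r=0.2):
    """Sample entropy: ln(B / A), where B and A count template pairs of
    length m and m + 1 whose Chebyshev distance is at most r."""
    n = len(series)
    if n <= m + 1:
        raise ValueError("series is too short for the chosen template length")

    def count_matches(length):
        count = 0
        # Use the same n - m starting points for both template lengths.
        for i in range(n - m):
            for j in range(i + 1, n - m):
                distance = max(abs(series[i + k] - series[j + k])
                               for k in range(length))
                if distance <= r:
                    count += 1
        return count

    b = count_matches(m)
    a = count_matches(m + 1)
    if a == 0 or b == 0:
        return float("inf")  # no repeated patterns found at this tolerance
    return math.log(b / a)


regular = [0.0, 1.0] * 20                    # perfectly repeating pattern
rng = random.Random(0)                       # fixed seed for repeatability
irregular = [rng.random() for _ in range(200)]

print(sample_entropy(regular))    # 0.0: every pattern repeats
print(sample_entropy(irregular))  # larger: patterns rarely repeat
```

The repeating sequence yields an entropy of zero because every length-2 match extends to a length-3 match, whereas the random sequence yields a clearly positive value.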

There are numerous ways one can quantify similarity, and hence, various methods for calculating entropy23. Sleigh and Abasolo both discuss two possible families of entropy estimators with regard to EEG entropy signal analysis7,24. The first family consists of “phase-space embedding entropies”, which are designed to estimate the signal in the time domain. Popular methods within this family consist of approximate, Shannon, phase, sample, Kolmogorov, fuzzy, and permutation entropy2. As depicted in Table 1, these frequency-specific EEG waveforms (e.g., alpha, gamma) have been shown to indicate certain cognitive states and to have contextual meaning associated with brain damage and disease based on decades of supporting literature25,26,27. Even high gamma frequencies are now being related to motor and cognitive tasks28. However, this “phase-space” family of entropy methods does not examine similarity with regard to the frequency content of the signal. Thus, these temporal entropy approaches overlook a large part of the classical concepts of EEG analysis.

The second family of entropy estimators is referred to as “spectral entropy” methods, which include spectral and Normalized Bispectrum Entropy methods. These methods aim to examine entropy from a frequency perspective, but at the cost of losing temporal information due to spectral windowing limitations (i.e., STFT). Furthermore, these methods typically utilize Fourier analysis methods which, as stated above, are inappropriate for EEG analysis due to the assumption of stationarity imposed by Fourier analysis and the inability to detect granular changes in signal frequencies and intensities18.

From an analytical standpoint, none of these entropy methods used to characterize the non-linearities of EEG signals capture the intensity of specific EEG waveform spectral properties continuously over time, nor do they attempt to calculate precise dynamic temporal changes. Thus, there is no complexity method that can explain both the temporal and spectral complexity relationships. An analysis method that identifies intensity changes over time would provide a new understanding of the non-linear dynamics present in EEG signals.

Motivation

From a physiological standpoint, the complex neuronal dynamics resulting from a method that would measure both temporal and spectral complexity relationships in firing patterns could potentially provide new information. The notion of complex neuronal networks, which generate these fundamental neuronal oscillations, has been backed by a vast amount of literature8,15,29. In order to capture the complexity of these dynamics, we propose the use of a rudimentary example with a simulated EEG that examines a simplistic network to enable the development of these methods (see Fig. 1). Figure 1 presents two cases: column one depicts a standard band-limited neuronal oscillation; and column two depicts a band-limited neuronal oscillation where a subset of neurons are functionally isolated (i.e., a neuron is impaired and has the potential to impede transmission and prevent subsequent neurons from firing). As previously discussed, we can observe these EEG oscillatory patterns through global field potentials at localized recording sites on the scalp, which are generated by the summation of large populations of neuronal action potentials. These populations of synchronized neuronal action potentials (in black) are shown in Fig. 1 in row two. In column two, the action potentials that did not fire are shown in red. These action potentials are summated at the macroscopic level and are viewed at the level of global field potentials shown in Fig. 1, row 1, in black, with the intensity in blue. It is worth noting that the intensity is attenuated via the analysis of the power spectrum (in row 3) and the characterized intensity over time, both in blue.

A rudimentary depiction of a standard neural oscillation in column 1 and a neural oscillation where functional neuronal isolation occurs, altering the local field potential in column 2. These two local field potentials in black are demonstrated in columns 1 and 2 in the first row. The characterized intensity of the signal over time is in blue. The second row of figures are the simulated spikes of individual neurons, where each dot represents an action potential in space and time. The red dots in the second column are the neurons that were suppressed and did not fire. The third row is the power spectrum using the Fourier transform of the local field potential neuronal oscillation. Intensity of the waveforms in the time domain and frequency domain are both highlighted in blue in rows 1 and 3.

Moreover, the chaotic nature of the intensity over time is increased. Currently, the non-linear dynamics of how and when these intensities are altered over time are not captured with these methods (seen in blue in the first row of Fig. 1). Note how a band-limited frequency and its intensity can change over time and become more unpredictable. The temporal intensity dynamics can potentially be altered at higher rates, where the ranks of the different spectral bands can alter in dominance. Outside this simulation, higher rates of change within temporal dynamics have been reported during hypoxia-ischemia in sheep30 and in epilepsy31. However, current entropy measurement windowing techniques do not pinpoint instantaneous changes with regard to intensity, frequency, and time. This limitation calls for development of techniques capable of assessing whether there is additional information that could provide explanatory responses induced by neurological impairments (e.g., stroke, cancer).

Therefore, this work raises three relevant research questions: (1) Can we specifically measure EEG spectral waveforms (e.g., alpha) continuously over time to better capture changes in events? (2) How does the complexity of the intensity change for specific EEG spectral waveforms over time? (3) How do the proposed EEG entropy signal analysis methods compare to other standard measurements?

Challenges

In order to detect instantaneous changes in intensity and relate them to the specified band-limited EEG waveforms in Table 1, a unique signal processing method must be developed. This method would share similarities with the DDWT method in order to capture intensity continuously over time. However, each filter would have to strictly capture the specified EEG waveforms, and the adjacent filters would have to be considered in the method design to prevent redundant analysis of frequency content. If there is no redundancy, full reconstruction of the original signal can be achieved (plateau value of zero). This would mean that each filter is properly capturing only its specific intended EEG waveform. This requires intensive optimization since adjacent filter designs depend on each other and are constrained by their cut-off frequencies with regard to specific EEG waveform bands. The second major consideration is how to formally apply these entropy measurements to the proposed signal processing methodologies. Although the wavelet entropy method analyzes the signal in a multi-resolution approach, it does not analyze instantaneous changes in the signal (granular time resolution). Instead, it examines entropy within the windowed time segment as a normalized sum of energy across the entire window. Thus, the resolution of any changes in the signal is limited to the size of the window, and the method does not examine the instantaneous changes in signals that can reveal granular, complex changes in firing. Finally, one must consider how to demonstrate that the new proposed approaches provide significant explanatory features when capturing these minuscule granular intensity changes from global field potentials.

Insights

First, we test the feasibility of the proposed EEG algorithms using hypoxia data. Hypoxia is a state in which the body is unable to provide adequate levels of oxygen to its tissue. When oxygen levels are adequate, proper signal propagation between neurons can occur11,32. However, when oxygen deprivation occurs, the energy substrate supplied for neurons, Adenosine Triphosphate (ATP), is depleted, preventing synaptic transmission to other neurons11,33. The reduction of oxygen to tissue leads to neuronal electrophysiological isolation because of the inability to continue signal propagation33,34, thus altering the global measurements of the EEG recordings11,34.

Von Tscharner developed a signal processing intensity analysis method designed for Electromyography (EMG) signals using a wavelet-based analysis35. These filters not only overcome the time-frequency trade-offs described above but were also designed in the frequency domain to minimize the plateau value of the filter bank, so that the signal can be fully reconstructed35. The filters’ center frequencies and bandwidths were not chosen to capture any specific frequency ranges but were designed to minimize the plateau value of the filter bank. Using optimization methods and the core concepts that von Tscharner presents, we can adapt the design to fit appropriate optimal bandwidths specifically for continuous EEG analysis.

Contributions

We propose a filter bank approach that addresses both the aforementioned challenges by examining the intensity of band-limited frequencies relevant to EEG in continuous time. Utilizing this developed intensity approach allows one to analyze entropy as a function of both time and frequency, unlike any current method available. We coin the term “EEG Activation Complexity” to refer to the calculation of entropy as the timing between a frequency band’s peak intensities. The contributions of this paper are:

1. Utilizing synthetic stationary and non-stationary signals, we capture a one-to-one mapping of intensity for the designed filters as a function of time.
2. We demonstrate that the timing of intensity peaks over a band-limited frequency is significantly less complex during normal oxygen conditions as compared to hypoxia conditions.
3. We demonstrate that the proposed method provides more information and adds another dimension to the analysis of EEG signal processing.
4. We demonstrate that activation complexity is a stronger predictor of cognitive impairment.

Method

The methods section is partitioned into four sections. The first and second sections discuss the data, filter design, and optimization methods applied to produce the time-frequency intensity analysis. The third and fourth sections describe how we apply entropy calculations to the proposed time-frequency intensity analysis methods, where we introduce the EEG Activation Complexity.

Hypoxia data set

The dataset was collected by a research team at NASA Langley Research Center (LaRC), who subjected 49 volunteers with current hypoxia training certificates to normobaric hypoxia to study the impact of hypoxia on aircraft pilot performance36,37. All participants consented to take part in the study as approved by the Institutional Review Board of NASA LaRC.

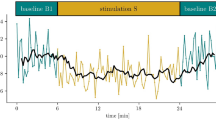

The goal of the study was to understand cognitive impairment resulting from exposure to mild hypoxia in order to develop and test psychophysiologically-based adaptive automation/autonomous systems. Subjects in the study experienced simulated altitudes of sea level (21% O2) and 15,000 feet (11.2% O2) induced by an Environics, Inc. Reduced Oxygen Breathing Device (ROBD-2). During non-hypoxic (i.e., sea level) and hypoxic exposures, each subject experienced three 10-minute bouts performing three different tasks consisting of a battery of written tests, the Multiple Attribute Task Battery (MATB)38, and flight simulation tasks. In each exposure, the research team collected task performance measures, a subjective self-reported workload (NASA Task Load Index, TLX)39, and multiple physiological responses (including EEG). This article discusses only the EEG data collected during hypoxic and non-hypoxic exposures for the MATB, where the electrode configuration is provided in Fig. 2. As the literature has historically shown, hypoxia induces cognitive performance deficits and changes within the EEG11,30. This has been shown in past analyses of this dataset, where we demonstrated that during the hypoxic phase of the experiment subjects experienced statistically higher levels of perceived workload difficulty (NASA TLX)36, decreases in task performance (MATB)40, and changes in EEG power37. The specified electrode placement was used to avoid complications with the aviator’s oxygen mask component of the ROBD-2 breathing device, which was worn using straps around the subject’s head.

Filter bank intensity method

The goal of the filter bank design discussed in this paper is to develop a time-frequency intensity analysis for EEG-specific frequency bands. The general underlying concept of the filter design, which was motivated by von Tscharner35, is to extract the intensity of the signal as a function of time. In the proposed method, the filter bank comprises a collection of filters which, when summed, result in a relatively low plateau value across a range of frequencies (i.e., no one particular frequency dominates over any other).

Basis Function Definition

The spectral topology of a filter bank’s basis function is important because adjacent filters in the frequency domain must be summed to obtain a reasonably stable plateau value. Von Tscharner implemented a derivative of the Paul wavelet, where the filter bank design had arbitrary cutoff frequencies for the filters. Von Tscharner’s design was acceptable for analysis of EMG signals since it was only concerned with covering the entire range of possible frequencies and maintaining a low plateau value. However, this design choice is not suitable for our application, where individual EEG bands need to be extracted. Additionally, we found that the Paul wavelet could not handle the additional constraints required to capture the specific EEG waveform frequencies defined in Table 1. We adopt a pragmatic approach in this paper whereby optimization routines are used to find filter parameters that produce a reasonably optimal plateau value, EEG cutoff frequencies, and avoid the time-consuming process of manually adjusting filter bank parameters for each filter bank component.

For our filter bank, we selected the “flattened” Gaussian41 basis function topology to balance the extraction of frequency bands of the EEG spectrum while maintaining an acceptable filter bank plateau value. In the filter bank, the ith filter (i = 1, …, K, where K is the total number of filters) within the frequency space is defined as

which is parameterized by the center frequency fci and the tuning parameters ai and bi. The Heaviside function, Θ(f), constrains the design to only positive frequencies (f ≥ 0). The filter bank is constructed by applying non-linear scaling to the basis function (\(\widehat{\psi }\)), shifting each center frequency and tuning the parameters to achieve a better filter bank design. Since these tuning parameters are not constant but are altered for each filter i, we refrain from referring to this design as a wavelet implementation and instead refer to it simply as a filter bank design. However, these filters maintain the same generalized basis function (a “flattened” Gaussian) and the core design concepts followed by von Tscharner35 while still fulfilling a wavelet’s admissibility criterion.

Filter bank optimization

The ultimate goal for our filter bank design was to find a set of filters with an acceptable (near optimal) plateau value. We attempted fitting filter parameters fci, ai, and bi by hand, but acceptable results were time-consuming to achieve. We also tried optimizing the entire filter bank, but the results were not acceptable and were found to be computationally complex. We modified our approach to optimize sets of three filters at a time. We were able to achieve better results in less time with this approach, but we encountered difficulties in seeding the optimization routines with reasonable center frequencies. We attempted to solve this problem by optimizing only the placement of center frequencies such that the center frequencies were uniformly spaced with considerations for the boundaries imposed by EEG band cutoffs. We then used these center frequencies to seed the optimization of sets of three filters. After iterating through all filters in the filter bank, slight manual adjustments to the parameters ai and bi were made to better adjust for the plateau value.

We introduced the following generalized optimization approach for developing the proposed filter bank for the constraints of this particular EEG. A general overview of the method is as follows:

- Step 1: Select a number of wavelets to represent each band of the EEG spectrum and estimate the spacing of wavelet center frequencies by minimizing the sum of differences between separations of adjacent wavelet center frequencies.
- Step 2: Use the set of center frequencies from Step 1 to approximate the optimal plateau value for the entire wavelet filter bank by optimizing sets of three wavelets.

The details of each of these steps are outlined in the following sections.

Step 1: Estimated Optimal Spacing

The goal of Step 1 is to find a set of center frequencies (fc ∈ RK, where K is the total number of wavelets selected) to seed the optimization routine in Step 2. This set is found by minimizing the sum of differences between separations of adjacent wavelet center frequencies as defined in the following optimization problem

where \(f{c}_{i}^{lb}\) and \(f{c}_{i}^{ub}\) are the lower and upper constraint boundaries on the center frequencies. These boundaries can be considered either “hard” – set by the halfway point between the minimum lower and upper boundaries of the EEG band and the halfway point between the maximum lower and upper boundaries of the EEG band (as found in Table 1), or “soft” – given by the approximate acceptable regions of center frequencies that are not fully determined by the EEG band ranges in Table 1.

An example of a “hard” boundary exists for a single wavelet occupying the δ band (fc1; starting between 0.5 Hz to 1 Hz and ending between 3 Hz to 4 Hz; see Fig. 3). This constraint is considered “hard” because it is imposed by the adjacent θ band to the right and the undefined region of negative frequency to the left. This wavelet should have a center frequency that is between 1.75 Hz (\(f{c}_{1}^{lb}\); halfway between 0.5 Hz to 3 Hz) and 2.5 Hz (\(f{c}_{1}^{ub}\); halfway between 1 Hz to 4 Hz; see Fig. 3).
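The arithmetic behind this “hard” boundary can be reproduced with a few lines of Python. This is only an illustrative sketch: the helper name `hard_center_bounds` and its argument layout are hypothetical, while the band-edge ranges are the δ-band values quoted above.

```python
def hard_center_bounds(start_range, end_range):
    """Bounds on the center frequency of a single filter covering one band.

    start_range: (min, max) of the band's lower edge in Hz.
    end_range:   (min, max) of the band's upper edge in Hz.
    The lower bound is the midpoint of the two minimum edges and the
    upper bound is the midpoint of the two maximum edges.
    """
    lower_bound = (start_range[0] + end_range[0]) / 2
    upper_bound = (start_range[1] + end_range[1]) / 2
    return lower_bound, upper_bound


# Delta band: starts between 0.5 and 1 Hz, ends between 3 and 4 Hz.
delta_bounds = hard_center_bounds((0.5, 1.0), (3.0, 4.0))
print(delta_bounds)  # (1.75, 2.5)
```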

An example of a “soft” boundary exists for two wavelets occupying the α EEG band, which contains frequencies starting between 7 Hz to 8 Hz and ending between 12 Hz to 13 Hz (see Fig. 4). The constraints on these two center frequencies are considered “soft” because the single EEG band (α in this case) is represented by two wavelets, and at least one of the boundaries for each wavelet is “artificially” imposed. For the case of the first filter in the α band, the θ EEG band is to the left (ending between 7 Hz to 8 Hz) for the first wavelet, but the upper bound is not well-defined since we have some choice as to the next α band filter.

These constraints help ensure that the cutoff frequency (fcoi) of each filter would take the value of 1/e (i.e., \({\widehat{\psi }}_{i}(f=fc{o}_{i})=1/e\)) between the frequency range specified by the boundaries of certain EEG bands during Step 2. Furthermore, center frequencies that are spaced evenly were found to produce more stable plateau values in Step 2. The aforementioned optimization problem was solved using the non-linear constrained optimization routine (“fmincon”) in MATLAB.
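The idea of Step 1 can be sketched without MATLAB. The example below is not the authors' `fmincon` formulation: it assumes a squared penalty on the difference between adjacent separations and swaps in a simple projected gradient descent to respect the box constraints. Apart from the δ bounds (1.75 Hz to 2.5 Hz), the bounds used here are hypothetical placeholders, as are all function names.

```python
def spacing_objective(fc):
    """Sum of squared differences between adjacent center-frequency gaps."""
    return sum((fc[i + 2] - 2 * fc[i + 1] + fc[i]) ** 2
               for i in range(len(fc) - 2))


def project(fc, lower, upper):
    """Clip each center frequency back into its [lower, upper] box."""
    return [min(max(v, lo), hi) for v, lo, hi in zip(fc, lower, upper)]


def even_spacing_seed(lower, upper, steps=5000, step_size=0.02):
    """Projected gradient descent on spacing_objective, started at midpoints."""
    fc = [(lo + hi) / 2 for lo, hi in zip(lower, upper)]
    for _ in range(steps):
        grad = [0.0] * len(fc)
        for i in range(len(fc) - 2):
            d = fc[i + 2] - 2 * fc[i + 1] + fc[i]
            grad[i] += 2 * d
            grad[i + 1] -= 4 * d
            grad[i + 2] += 2 * d
        stepped = [v - step_size * g for v, g in zip(fc, grad)]
        fc = project(stepped, lower, upper)
    return fc


# First pair of bounds is the delta example from the text; the rest are
# hypothetical values chosen only to make the sketch runnable.
lower = [1.75, 4.5, 8.0, 10.5]
upper = [2.5, 6.5, 10.0, 12.5]
midpoints = [(lo + hi) / 2 for lo, hi in zip(lower, upper)]
seed = even_spacing_seed(lower, upper)

print("objective at midpoints:", spacing_objective(midpoints))
print("objective at seed:     ", spacing_objective(seed))
print("seed center frequencies:", [round(v, 3) for v in seed])
```

The objective is zero exactly when the center frequencies are evenly spaced, so a lower value means a more uniform seed for Step 2.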

Step 2: Optimize Basis Function Parameter Values

The goal of Step 2 is to produce a filter bank that equally represents all EEG frequencies within the range of interest while providing reasonable separability of different EEG bands as defined in the scientific literature (see Table 1). One possible way to achieve such a filter bank is to find the set of filter parameters that minimizes the path length integral of the sum of all filters in the filter bank. Colloquially, this amounts to finding the shortest distance between two points (a straight line in Euclidean geometry). Any deviations from a straight line result in having to “walk” a greater distance between the starting and ending frequencies. For an entire set of K filters, this objective can be operationalized as the path length integral between the first and last center frequencies, written as

where fc, a, b ∈ RK and L is the arc length of the sum of all filters between the center frequency of the first and last filter in frequency space

As in Step 1, the possible ranges of center frequencies are constrained (Eq. 2b) as well as the parameters a and b (Eqs. 2c and 2d). Additionally, Eq. 2e and Eq. 2f ensure that the lower and upper cutoff frequencies \(fc{o}_{j}^{L}(f{c}_{j},{a}_{j},{b}_{j})\) and \(fc{o}_{j}^{U}(f{c}_{j},{a}_{j},{b}_{j})\) for the jth filter given by

and

fall within the acceptable ranges for the associated EEG band. Finally, in order to produce a reasonably biorthogonal filter bank, the value of the jth filter at the center frequencies of the (j−1)th and (j+1)st filters are constrained to be less than or equal to ϵ = 0.0005 through constraints Eqs. 2g and 2h.
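The path length objective can be illustrated numerically. The sketch below approximates the arc length of a sampled curve by summing straight-line segment lengths; it is a generic discretization rather than the authors' code, and the two summed responses (`flat` and `rippled`) are synthetic stand-ins for the sum of all filters.

```python
import math


def arc_length(freqs, response):
    """Approximate arc length of response(f) as a sum of segment lengths."""
    length = 0.0
    for k in range(1, len(freqs)):
        length += math.hypot(freqs[k] - freqs[k - 1],
                             response[k] - response[k - 1])
    return length


freqs = [k / 10 for k in range(101)]                 # 0 Hz to 10 Hz
flat = [1.0] * len(freqs)                            # ideal flat summed response
rippled = [1.0 + 0.1 * math.sin(2 * math.pi * f)     # summed response with ripple
           for f in freqs]

print(arc_length(freqs, flat))     # approximately 10, the straight-line distance
print(arc_length(freqs, rippled))  # longer, because of the ripple
```

Any ripple in the summed response lengthens the path, which is why minimizing the arc length pushes the filter bank toward a flat plateau.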

Unfortunately, our attempts at directly optimizing Eq. 2a were met with poor results. However, we were able to approximate the global optimum by sequentially considering only three filters at a time until all K filters’ parameters were determined (see Fig. 5). As such, Eq. 2a was modified to account for three filters (\({\widehat{\psi }}_{i}\), \({\widehat{\psi }}_{i+1}\), and \({\widehat{\psi }}_{i+2}\)) at a time as described in the following optimization problem

where the arc length along the three consecutive filters L is given by

and the constraints given in Eq. 6b to Eq. 6h serve the same functions as with the optimization problem in Eq. 2a.

For the first three filters, fc1, a1, b1, fc2, a2, b2, fc3, a3, and b3 are found such that Eq. 6a is minimized. The optimal values of filter 3 are then used in the next round to find fc4, a4, b4, fc5, a5, b5 such that Eq. 6a is minimized. This process is repeated until all K filter parameters have been optimized (see Fig. 5).

As with Step 1, the method in Step 2 was implemented using the non-linear constrained optimization (fmincon) routine in MATLAB.

Optimized filter parameters

Utilizing the proposed methodology in which the constraints of the filtering paradigm are accounted for and optimized, we obtain Table 2. These parameters are then applied to Eq. 8 and shown in Fig. 6, where we can note the plateau vector, PV(f), is defined as

where \(\forall f\in \overline{1,S}\), S = Fs∕2, and Fs is the sampling frequency. The plateau value, Pv, is obtained by calculating the standard deviation of the vector PV.
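A minimal sketch of the plateau value calculation follows. It assumes that the plateau vector is the bin-by-bin sum of the filter responses (consistent with the description of the filter bank as filters that are summed) and uses the sample standard deviation; both choices, along with the function names and the toy responses, are assumptions for illustration.

```python
import statistics


def plateau_vector(filter_responses):
    """Sum the filter responses bin by bin (assumed reading of PV(f))."""
    return [sum(bin_values) for bin_values in zip(*filter_responses)]


def plateau_value(filter_responses):
    """Standard deviation of the plateau vector (sample form, N - 1)."""
    return statistics.stdev(plateau_vector(filter_responses))


# Two toy responses on the same five-bin frequency grid.
ramp_down = [1.0, 0.75, 0.5, 0.25, 0.0]
ramp_up = [0.0, 0.25, 0.5, 0.75, 1.0]

flat_bank = [ramp_down, ramp_up]        # responses sum to 1 in every bin
gappy_bank = [ramp_down, [0.0] * 5]     # second filter missing

print(plateau_value(flat_bank))   # 0.0: perfectly flat sum
print(plateau_value(gappy_bank))  # positive: uneven coverage
```

A plateau value of zero corresponds to a bank whose filters cover every frequency equally, which is the condition for full reconstruction mentioned earlier.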

An optimized filter bank design defined by the parameters provided in Table 2.

EEG Filter Implementation

The method discussed here deviates from von Tscharner’s classical filter implementation because of the valid concerns presented by Gabriel42. That discussion42 points out that applying the designed filters to the EEG’s source signal in the frequency domain, Xs(f), is inappropriate since it applies the Fourier transform to a non-stationary signal, thus defeating one of the major purposes of the novel signal processing approach. As Borg highlights43, von Tscharner’s implementation shares similarities with a basic equalizer, which decomposes the EEG time-domain source signal, xs(n), into its associated intensity components, ρi(n), with respect to each filtering process, κi, shown in Fig. 7.

The presented filtering process utilizes the EEG signal in the time domain, xs(n), from which we obtain a band-limited intensity, ρi(n), over time by applying convolution with the designed filters, \({\widehat{\psi }}_{i}(n)\), and Gaussian smoothing methods. We define this entire process as \({\widehat{\kappa }}_{i}\), where i ∈ {1, …, K} indexes the filters.

In order to obtain \({\widehat{\psi }}_{i}(n)\), we transfer the designed respective frequency domain filter, \({\widehat{\psi }}_{i}(f{c}_{i},{a}_{i},{b}_{i})\), to the time domain by,

where \({{\mathscr{F}}}^{-1}\) is the inverse Fourier transform and \({{\mathscr{C}}}^{{\mathscr{L}}}\) is the circular shift of the numeric output of the function, where \(L=\frac{N}{2}\) and N is the length of the filter in the time domain. The \({{\mathscr{C}}}^{{\mathscr{L}}}\) operation with \(L=\frac{N}{2}\) is equivalent to performing an FFT shift, an operation normally used to re-center the mirrored image in the frequency domain; here, however, it is applied to a sequence in the time domain. Thus, the sequence {x(0), …, x(N − 1)} is cyclically shifted to {x(N∕2), …, x(N − 1), x(0), …, x(N∕2 − 1)}. By applying Equation 9, we are able to move the filter designed in the frequency domain to the time domain, where it is described by real and imaginary components, as shown in Fig. 8.
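As a minimal sketch of the circular shift with L = N∕2 described above, assuming an even filter length N, the operation swaps the two halves of the sequence. The function name `circular_shift_half` is hypothetical.

```python
def circular_shift_half(sequence):
    """Cyclically shift a sequence by half its length (L = N/2).

    For an even-length input this matches the usual FFT-shift reordering:
    {x(0), ..., x(N-1)} -> {x(N/2), ..., x(N-1), x(0), ..., x(N/2 - 1)}.
    """
    n = len(sequence)
    if n % 2 != 0:
        # Assumption: the filter length N is even, so N/2 is an integer.
        raise ValueError("sequence length must be even")
    half = n // 2
    return list(sequence[half:]) + list(sequence[:half])


if __name__ == "__main__":
    # Works for real or complex samples alike.
    print(circular_shift_half([0, 1, 2, 3, 4, 5]))
```

Applying the shift twice returns the original ordering, which gives a quick sanity check on any implementation.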

Utilizing the filter in time domain, \({\widehat{\psi }}_{i}(n)\), we obtain the intensity of the signal xs(n) by,

which is defined by the convolution of xs(n) with \({\widehat{\psi }}_{i}(n)\). The intensity sequence, ρi(n), is then smoothed using a Gaussian filter,

where \(\sigma =\frac{{F}_{s}}{2}\), Fs is the sampling frequency and \(x\in \{\frac{-3{F}_{s}}{2},\ldots ,\frac{3{F}_{s}}{2}\}\). The intensity signal is convolved with the Gaussian filter to obtain the smoothed filtered EEG intensity:
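The smoothing step can be sketched as follows, using the stated σ = Fs∕2 and a support of ±3Fs∕2 samples. Several details are assumptions rather than taken from the text: the standard Gaussian form exp(−x²∕(2σ²)), normalization of the kernel to unit sum, zero padding at the edges, and an output the same length as the input. The function names are hypothetical.

```python
import math


def gaussian_kernel(fs):
    """Gaussian kernel with sigma = fs/2 samples, supported on x in [-3fs/2, 3fs/2]."""
    sigma = fs / 2.0
    half_width = int(round(3 * fs / 2))
    weights = [math.exp(-(x * x) / (2.0 * sigma * sigma))
               for x in range(-half_width, half_width + 1)]
    total = sum(weights)
    # Assumption: normalize to unit sum so smoothing preserves the mean level.
    return [w / total for w in weights]


def smooth(intensity, kernel):
    """Convolve intensity with kernel, returning an output of the same length.

    Samples outside the signal are treated as zero (an assumption), so values
    near the edges are attenuated.
    """
    half = len(kernel) // 2
    n = len(intensity)
    smoothed = []
    for i in range(n):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = i + half - j  # convolution index, centered on sample i
            if 0 <= idx < n:
                acc += intensity[idx] * w
        smoothed.append(acc)
    return smoothed


if __name__ == "__main__":
    kernel = gaussian_kernel(fs=4)
    print(len(kernel), smooth([0.0] * 10 + [1.0] + [0.0] * 10, kernel)[10])
```

With σ = Fs∕2 samples the kernel has a standard deviation of half a second, and the ±3Fs∕2 support corresponds to ±3σ.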

Activation complexity

We used Activation Complexity to examine the predictability of the intensity, \({\widehat{\rho }}_{i}(n)\), of specific EEG frequency ranges (e.g., α, δ) as a function of time. We calculate Activation Complexity using the proposed filter bank design, a peak detector, and a temporal entropy measurement (e.g., sample entropy). The peak detector implemented in this work utilized the function “findpeaks” from MATLAB version 2017a, where the function will produce a vector of indices for the locations in time where the peaks occur, Ai(k). In the upper part of Fig. 9, we depict instances in time for the peaks of the intensity of the delta waveform frequencies. In the lower part of Fig. 9 is the vector ΔA1, the sequence of the timing differences between all the peaks of the intensity waveform calculated simply by

This figure represents the process for calculating Activation Complexity. The upper part of the figure shows the intensity of the designed delta waveform band, ρ1(n) in red, with the indicated peaks on the intensity band, ρ1(n) circled in blue. The differences in time between those peaks are calculated to form ΔAi(k), shown in the bottom image. A standard entropy measurement method can then be applied to this sequence.

Following this, we computed the sample entropy23 of the new sequence, ΔAi, to produce the Activation Complexity measurement, Aci, for each EEG lead.
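The chain from intensity peaks to an entropy value can be sketched as below. This is an illustration under stated assumptions, not the authors’ implementation: `find_peak_indices` is a simplified strict-local-maximum stand-in for MATLAB’s findpeaks, the inter-peak interval is taken as the difference between consecutive peak indices, and the tolerance is assumed to be r times the sample standard deviation of the interval sequence, with matches counted at distances less than or equal to that tolerance.

```python
import math


def find_peak_indices(signal):
    """Indices of strict local maxima (simplified stand-in for findpeaks)."""
    return [i for i in range(1, len(signal) - 1)
            if signal[i - 1] < signal[i] > signal[i + 1]]


def peak_intervals(peak_indices):
    """Differences between consecutive peak locations (assumed form of the delta-A sequence)."""
    return [b - a for a, b in zip(peak_indices, peak_indices[1:])]


def sample_entropy(series, m=2, r=0.25):
    """Sample entropy with template length m and tolerance r * SD (assumed scaling)."""
    n = len(series)
    if n <= m + 1:
        raise ValueError("series is too short for the chosen template length")
    mean = sum(series) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in series) / (n - 1))
    tol = r * sd

    def count_matches(length):
        # Use the same number of templates (n - m) for both lengths.
        templates = [series[i:i + length] for i in range(n - m)]
        count = 0
        for i in range(len(templates)):
            for j in range(i + 1, len(templates)):  # self-matches excluded
                dist = max(abs(a - b) for a, b in zip(templates[i], templates[j]))
                if dist <= tol:
                    count += 1
        return count

    b = count_matches(m)
    a = count_matches(m + 1)
    if a == 0 or b == 0:
        return float("inf")  # undefined when no template matches are found
    return -math.log(a / b)


if __name__ == "__main__":
    intensity = [0, 1, 0, 2, 0, 0, 3, 0]
    print(peak_intervals(find_peak_indices(intensity)))
```

In practice the interval sequence passed to `sample_entropy` would come from a full recording segment, since short sequences yield few template matches.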

It is important to note that the type of entropy measurement applied to the sequence will be sensitive to the number of data points in the sequence, thus limiting the window size that can be analyzed. Typically, sample entropy and permutation entropy require a minimum of 100 samples, whereas approximate entropy requires a minimum of 1000 samples23.

Evaluation and Discussion

In this section, we address the following research questions regarding the filter design intensity method and entropy approaches to distinguish changes in EEG firing patterns during hypoxic conditions at 15,000 feet of altitude versus non-hypoxic conditions at sea level:

- RQ1

Can we accurately depict the intensity of the stationary and non-stationary signals proportional to the original time-series?

- RQ2

Does the proposed Activation Complexity method demonstrate the ability to extract complex dynamic EEG trends that distinguish brain activity under hypoxia?

- RQ3

How do classical methods perform in distinguishing differences between hypoxia and sea level changes in the brain?

- RQ4

How does classical spectral intensity analysis compare to activation complexity for distinguishing cognitive impairment?

RQ1: simulated continuous EEG intensity measurement

We hypothesize that, by using the proposed filter bank methodology, we will be able to extract pertinent EEG frequency band intensity continuously over time. Utilizing the designed filter bank methodology, we simulate various stationary and non-stationary waveforms to evaluate intensity as a function of time. In Fig. 10a, we first modeled four stationary waveforms at frequencies of 2.3 Hz, 5.6 Hz, 8.75 Hz, and 11.4 Hz with amplitudes of 7.5, 4, 5.5, and 8, respectively. The fifth component is a non-stationary signal modeled as a chirp in which the frequency increases linearly from 0 to 15 Hz with an amplitude of 6. We then provide an example of a linear combination of two stationary signals at 2.3 Hz and 16.6 Hz with amplitudes of 2.3 and 6.5, respectively. All of these waveforms were concatenated into a single time series. Thus, the transitions between waveforms were abrupt and discontinuous, causing mild perturbations that activate irrelevant filters. Through visual inspection of Fig. 10, we can see that the filter bank intensities are proportional to the simulated waveform generated in Fig. 10a. Figure 10b can also be represented in a two-dimensional fashion, similar to how continuous wavelet transforms are presented, using the contour plot shown in Fig. 10c. This allows a clearer depiction of time, frequency (i.e., filter number), and intensity.

The synthetic time series consists of various stationary and non-stationary frequencies (a chirp from 20–30 seconds). We demonstrate two visual representations of the filter bank output. The 1-D representation allows for an enhanced comparison of an accurate depiction of intensity to the synthesized time series. The contour plot allows for a better global visualization of which filters are activated and their timing.
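A synthetic series of this kind can be sketched as follows. The frequencies and amplitudes are those given in the text. The sampling rate (256 Hz), the 5-second stationary segments, and the 10-second chirp are assumptions, chosen so that the chirp spans 20–30 seconds as in the caption. The function names are hypothetical.

```python
import math

FS = 256  # assumed sampling rate in Hz; the text does not state one for the simulation


def sinusoid(freq, amp, duration, fs=FS):
    """Stationary sine wave of the given frequency (Hz), amplitude, and duration (s)."""
    n = int(round(duration * fs))
    return [amp * math.sin(2 * math.pi * freq * k / fs) for k in range(n)]


def linear_chirp(f0, f1, amp, duration, fs=FS):
    """Chirp whose instantaneous frequency rises linearly from f0 to f1."""
    n = int(round(duration * fs))
    rate = (f1 - f0) / duration
    samples = []
    for k in range(n):
        t = k / fs
        samples.append(amp * math.sin(2 * math.pi * (f0 * t + 0.5 * rate * t * t)))
    return samples


def synthetic_series(segment_s=5.0, chirp_s=10.0, fs=FS):
    """Concatenate the stationary, chirp, and two-tone segments into one series."""
    series = []
    for freq, amp in [(2.3, 7.5), (5.6, 4.0), (8.75, 5.5), (11.4, 8.0)]:
        series += sinusoid(freq, amp, segment_s, fs)
    series += linear_chirp(0.0, 15.0, 6.0, chirp_s, fs)
    low = sinusoid(2.3, 2.3, segment_s, fs)
    high = sinusoid(16.6, 6.5, segment_s, fs)
    series += [a + b for a, b in zip(low, high)]
    return series


if __name__ == "__main__":
    x = synthetic_series()
    print(len(x) / FS, "seconds of simulated signal")
```

Because each segment restarts at zero phase while the previous one ends at an arbitrary value, the concatenation produces the abrupt transitions mentioned above.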

RQ2: activation complexity

Activation Complexity (AC) is applied to a hypoxia data set, where we hypothesize that this novel method can extract irregular neuronal firing patterns from global EEG recordings. This method was applied to EEG data collected from 49 subjects exposed to three 10-minute bouts of normobaric hypoxic (12% O2/15k ft) and non-hypoxic conditions (22% O2/sea-level) at NASA Langley Research Center. One-sample t-tests and bootstrapped t-tests for multiple comparisons were used between the two cohorts for the computerized MATB task bout of hypoxic and sea level conditions. The AC analysis using sample entropy used a template length of m = 2 and a threshold value of r = 0.25, producing 26 different statistically significant AC measurements across filters and EEG recording sites when no multiple comparison correction (NMCC) was applied. When a bootstrap multiple comparison correction (MCC) was applied, 13 different statistically significant AC measurements across filters and EEG recording sites were demonstrated. The visual changes of AC between conditions are shown in Figs. 11, 12, 13 and 14. We explored various other threshold values of 0.15, 0.2, and 0.3, which produced 17, 19, and 25 statistically different activation complexities, respectively (for the NMCC case). The AC measurements that demonstrated significant changes with the other threshold values showed similar patterns with regard to EEG leads and frequency bands. The calculated AC was normalized across all EEG leads, incorporating both the hypoxia and sea level cohorts associated with each filter bank’s intensity analysis. This was done to highlight the differences in complexity, as depicted in the colored contour maps in Figs. 11, 12, 13 and 14. The details of the results regarding EEG site location and p-value for the one-sample test (for α ≤ 0.05 with N = 47) are provided in the captions below the figures. Additionally, Table 3 provides more details regarding the means and standard errors for instances of α ≤ 0.1.

AC Analysis, Aci, is shown between the hypoxia and sea level cohorts for the first three intensity filter designs (delta [0.6–4.0 Hz], theta [3.8–7.6 Hz], low alpha [7.2–10.4 Hz]), where i is the applied filter. Ac1 demonstrated no significant changes across any of the EEG sites. Ac2 demonstrated a significant increase in entropy (i.e., complexity) at the POz EEG site with a p-value of 0.033. Ac3 produced an increase in complexity at the P7, F2, and C1 with p-values of 0.046, 0.004, 0.0001, respectively.

Ac4 demonstrated no significant changes across any of the EEG sites. For hypoxia, Ac5 demonstrated a significant increase in entropy (i.e., complexity) at P7, Pz, Oz, O2, F1, F2, and C2 with p-values of 0.049, 0.021, 0.006, 0.007, 0.033, 0.018, and 0.028, respectively. During hypoxia, Ac6 produced a significant increase in complexity at P7, O1, F2, C1, and P3 with p-values of 0.0003, 0.049, 0.017, 0.0464, and 0.033, respectively. It is also worth noting that O1 had p-values of 0.065 and 0.060 for Ac4 and Ac5, respectively.

The hypoxia cohort for Ac7 produced an increase in complexity for EEG sites P7, POz, Pz, O2, C1, and P3 with p-values of 0.036, 0.048, 0.026, 0.040, 0.045, and 0.035, respectively. The hypoxia cohort for Ac8 produced an increase in complexity for EEG sites P7 and P3 with p-values of 0.031 and 0.035, respectively. Ac9 demonstrated no significant changes across any of the EEG sites.

Utilizing this novel AC approach, we demonstrate that there is a significant increase in complexity during hypoxia across numerous EEG sites in the theta, alpha, and beta EEG frequency regions. The only significant decrease in complexity exists in the high frequencies of the gamma region. The sites that demonstrated this significant decrease in complexity never overlapped with the reported higher complexity EEG sites in the lower frequency regions. The rear left side of the brain, P7, P3, and C1 had the most consistent amount of significant activation complexities across frequency bands having 5, 3, and 4, respectively. In the introduction, we discussed how hypoxia has been hypothesized to cause neuronal isolation in past literature11. This concept was pictorially demonstrated in Fig. 1, where the time-frequency intensity peaks were more prominent for the functional isolation case.

We found, however, that this AC method is not ideal for small windowed segments of data. It is better suited to long-term trend analysis applications and has the potential to indicate small, subtle, anomalous patterns within the EEG spectral bands. Sample entropy and other temporal entropy measurements typically require a minimum of 100 data points or more44. The 10-minute segments that were analyzed produced a mean of only 431.2 peaks for each intensity frequency band, \({\widehat{\rho }}_{i}(n)\). When analyzing the number of peaks in each \({\widehat{\rho }}_{i}(n)\) for each EEG lead between the hypoxia and sea level cohorts, the one-sample t-test produced 18 significant p-values. This further supports the informative value of measuring how intensity is maximized and fluctuates. However, only 5 of the 18 significant p-values intersected with the 26 different statistically significant AC measurements. This suggests that it is not simply the number of peaks but the timing of these peaks that matters, and that intensity may hold a very different meaning when it comes to the analysis of complex brain dynamics. Therefore, how a band-limited intensity is sustained may provide valuable information regarding neuronal firing and indicators for disease.

RQ3: classical methods

We hypothesize that classical EEG methods such as intensity (i.e., power) analysis can still produce valuable information, but that they provide an incomplete picture. Moreover, we specifically use spectral intensity analysis (SIA) since this method is the only approach in which we can compare effects on time, spectral bands, and intensity.

We discuss this hypothesis by first analyzing the changes in EEG intensity caused by hypoxia. Utilizing the filter banks’ summed intensity values for each 10-minute segment, we performed one-sample t-tests. Because electrical conductance can change from subject to subject and thereby alter the intensity values, each subject’s individual filter intensity values were normalized. When individual subject normalization was not applied, no significant difference was found. However, with proper normalization to account for electrical conductance changes, we found 26 filter intensity values across the 16 leads that were significantly different with one-sample t-tests when no multiple comparison correction (NMCC) was performed. A bootstrap t-test method was then utilized as a multiple comparison correction (MCC) within the EEG analysis, after which no single filter demonstrated significance between the hypoxic and non-hypoxic conditions. However, various correction methods could have been applied to adjust for multiple comparisons: more conservative methods could greatly impact the significance, and more liberal methods could potentially not impact the initial results at all. Thus, we felt that the uncorrected t-test still provides information on the trajectory of some leads that were close to being significant and still provides value to the reader.

Since our aim is not to directly discuss the effects of intensity and its relationship to hypoxia, but rather to determine the significant indicators that an intensity analysis provides, for brevity, we will only provide the results of significant EEG sites and their filters rather than the 768 means and standard errors associated with the intensity data (16 leads × 12 filters × 2 Conditions × 2 mean/SD).

Spectra of No Change (Using NMCC): Filters 3, 4, 10, and 11, which are associated with the frequency bands (7.2–10.4 Hz), (10.0–12.8 Hz), (27.6–31.2 Hz), and (31.0–34.8 Hz), demonstrated no significant intensity change across any of the EEG electrodes for SIA. However, AC demonstrated significance for all four frequency bands, as shown in Table 3. This demonstrates that AC has extracted additional features from the EEG signal.

Spectra of Significant Increases (Using NMCC): SIA demonstrated significant increases in intensity only for Filters 1, 5, 6, 7, and 12 for the hypoxia cohort across a variety of EEG leads. More specifically, Filters 1 and 5 showed a significant increase in intensity during hypoxia for the O2 EEG site with p-values of 0.020 and 0.038, respectively. AC demonstrated no significance for Filter 1. However, for Filter 5, AC demonstrated increases for all the occipital recording sites (O1, Oz, and O2) as well as other EEG sites. For Filter 6, SIA showed the largest change across EEG leads, exhibiting a significant increase in intensity during hypoxia for POz, Pz, P8, O1, Oz, O2, AF4, F2, C1, and P4, with p-values of 0.0006, 0.007, 0.018, 0.015, 0.002, 0.024, 0.040, 0.039, 0.041, and 0.009, respectively. All of these sites, except for O1 and C1, are located on the right hemisphere. On the other hand, the AC measurement only reports significant increases on the left hemisphere (6 significant sites), demonstrating divergent findings across methods. For Filter 7, SIA also demonstrates significant increases in intensity during hypoxia for POz, F1, F2, and P4, with p-values of 0.045, 0.030, 0.024, and 0.027, respectively. These recording sites are essentially the frontal lobe and left parietal lead. AC provided 8 significant sites, but overlaps only with POz. The majority of the AC sites are located in the posterior right part of the brain, in the parietal and occipital recording sites of the 10–20 montage. For Filter 12, SIA exhibits a significant increase during hypoxia for Oz, with a p-value of 0.035, and AC reports an increase for P7 during hypoxia. Overall, although both methods demonstrate significant increases, there is little to no overlap with regard to EEG recording sites.

Spectra of Significant Decreases (Using NMCC): Filters 2, 8, and 9 showed a decrease in intensity during hypoxia across various EEG locations. Filter 2 showed a decrease in intensity for POz, Pz, Oz, and Pz with p-values of 0.038, 0.013, 0.0009, and 0.018, respectively. AC reports only POz, as an increase in complexity during hypoxia. Filter 8 demonstrated a significant decrease during hypoxia for O2, AF3, and AF4, with respective p-values of 0.035, 0.047, and 0.018. We can also note an increased AC for the parietal recording site during hypoxia. Filter 9 also exhibited a decrease in intensity at AF3 and AF4, with p-values of 0.029 and 0.026, respectively. AC only reported C1 as an increase during hypoxia.

Results Summary: In summary, we note that when comparing AC to SIA for NMCC, the two methods are very divergent in their reported findings, specifically in the direction of the measurement (increasing vs. decreasing), the recording site on the brain, and spectral properties. These divergent results support the hypothesis that AC adds an additional dimension to the analysis. These SIA results resemble Papadelis’s work with hypoxia, which reported an increase in spectral power11. One caveat is that the majority of our spectral intensity findings were on the right hemisphere, whereas Papadelis reported left hemisphere dominance11. However, our subjects used their left hands for the MATB tracking tasks, whereas the subjects in Papadelis’s study used their right hands11. EEG asymmetries and cerebral lateralization are well known in the literature and may explain the discrepancy between the results11,45. Moreover, when MCC is applied, the AC methodology still demonstrates significant changes within the EEG signal during mild induction of hypoxia, unlike the standard spectral analysis.

RQ4: comparing EEG methods for cognitive impairment

In order to formally compare spectral intensity and the newly proposed approach, termed “activation complexity”, we evaluate how well each approach predicts cognitive impairment. The literature has already shown that as the level of hypoxia becomes increasingly critical, a human’s cognitive impairment increases as well46,47. For this comparison, segmented instances of induced hypoxia were annotated into four different levels: H1) completely non-hypoxic state (100–95% O2); H2) indifferent hypoxia (95–85% O2); H3) compensatory hypoxia (75–85% O2); H4) critical (disturbance) (less than 75% O2). Both methods share exactly the same dimensionality, sample size, and predictive model (K-Nearest Neighbour approach), allowing for a fair comparative analysis to gauge which feature set provides better predictive discriminators for cognitive impairment. The predictive performance for detecting the four levels of hypoxia using activation complexity (Table 4) versus spectral intensity (Table 5) is highlighted using confusion matrices, with accuracies (Ac) of 80.2% vs. 71.3%, respectively. These results demonstrate that Activation Complexity features cover more informative variance in the data and provide a superior predictive lift with KNN modeling. Thus, this comparison highlights that activation complexity provides information for characterizing cognitive impairment that classical spectral intensity analysis cannot provide.
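The comparison pipeline can be sketched in miniature as below: annotate each segment with one of the four levels, classify with K-Nearest Neighbours, and read accuracy off the confusion matrix. Several choices here are assumptions, since the text does not specify them: which level owns the boundary values 95, 85, and 75, the value of k, the Euclidean distance, and how ties are broken. The function names and the toy feature vectors are hypothetical and carry no experimental meaning.

```python
import math
from collections import Counter


def hypoxia_level(o2_percent):
    """Map an O2 percentage to H1-H4 (boundary ownership is an assumption)."""
    if o2_percent >= 95:
        return "H1"  # completely non-hypoxic
    if o2_percent >= 85:
        return "H2"  # indifferent hypoxia
    if o2_percent >= 75:
        return "H3"  # compensatory hypoxia
    return "H4"      # critical (disturbance)


def knn_predict(train_x, train_y, query, k=3):
    """Majority vote among the k nearest training points (Euclidean distance)."""
    order = sorted(range(len(train_x)), key=lambda i: math.dist(train_x[i], query))
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]


def confusion_matrix(true_labels, predicted_labels, labels):
    """Rows are true classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(true_labels, predicted_labels):
        matrix[index[t]][index[p]] += 1
    return matrix


def accuracy(matrix):
    """Fraction of samples on the diagonal of the confusion matrix."""
    total = sum(sum(row) for row in matrix)
    return sum(matrix[i][i] for i in range(len(matrix))) / total


if __name__ == "__main__":
    # Toy two-dimensional features only; real inputs would be AC or SIA feature vectors.
    train_x = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.0)]
    train_y = [hypoxia_level(v) for v in (98, 97, 70, 72)]
    print(knn_predict(train_x, train_y, (0.2, 0.1), k=3))
```

Keeping the classifier, dimensionality, and sample size fixed while swapping only the feature set is what makes the two accuracies directly comparable.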

Conclusions

We presented a new complexity method for the non-linear dynamic analysis of the intensity of isolated EEG frequency bands. This work adds to the view that amplitude and temporal dynamics carry embedded information48 and that the amplitude of the instantaneous frequency is related to unconsciousness49. Our work, however, demonstrates an elegant method that combines these ideas to extract embedded information from the temporal dynamics of the instantaneous frequency’s intensity (not amplitude) and its relationship to impaired neuronal firing and neuronal isolation. This work supports the case for a new predictive EEG feature for hypoxia and opens up a novel avenue for the analysis of diseases and physiological sensory changes.

More specifically, this method shows significant differences in hypoxic vs. non-hypoxic states, facilitating future analyses (specifically for diseases related to stroke, ischemia, and cognitive changes). We hypothesized that these methods are extracting features which manifest due to various forms of neuro-isolation, but to fully support this hypothesis, we require additional study designs. While EEG more directly measures the electrical activation of neurons, functional near infrared spectroscopy (fNIRS) can be used to detect local activity by measuring the hemodynamic response in the brain. As with functional magnetic resonance imaging (fMRI), measuring the hemodynamic response is a standard technique for quantifying brain activity based on neurovascular coupling. However unlike fMRI, fNIRS can be used passively while operationally-relevant, cognitively-engaging tasks are performed and without running costly trials50. Additionally, fNIRS can be used without the data acquisition and processing burdens of performing EEG source localization. The hemodynamic response, as measured by fNIRS, is heavily influenced by local activity in the capillary bed51 and vessels of diameter <1 mm52. Further, the neurovascular coupling relationship holds true where there is suppression of, or interference with, neuronal activity53. Thus, the use of fNIRS can supplement the newly proposed Activation Complexity measurements by quantifying local hemodynamic activity changes in the face of interference due to hypoxia. Further, the quantification of Activation Complexity as a more general measure of neuronal isolation in the brain would be supported by demonstrating a link between a decrease of blood oxygenation and an increase in Activation Complexity. 
If these global electrical and hemodynamic measurements are coupled, Activation Complexity has the potential of being a critical, fast, and low cost surrogate measurement for characterizing, and possibly detecting, numerous diseases such as mild localized strokes, cerebral ischemia, or brain trauma in which neuronal isolation plays an important part.

This work ultimately contributes an additional dimension of spectral and complexity analysis, opening the exploration of EEG signal for further explanatory analysis in the area of neurology and cognitive science. The ability to isolate the intensity of neuro-oscillations as a function of time allows for further explorations into not only the timing of peak intensity, but additional multi-variate features such as their trough and width dimensions. These additional complexity analyses can potentially address how these band-limited neuro-oscillations are sustained.

References

Harrivel, A. R. et al. Prediction of Cognitive States during Flight Simulation using Multimodal Psychophysiological Sensing. AIAA Infotech, Applications of Sensor and Information Fusion, (2017).

Acharya, U. R., Fujita, H., Sudarshan, V. K., Bhat, S. & Koh, J. E. Application of entropies for automated diagnosis of epilepsy using eeg signals a review. Knowledge-Based Syst. 88, 85–96 (2015).

Courtiol, J. et al. The multiscale entropy: Guidelines for use and interpretation in brain signal analysis. J. Neurosci. Methods 273, 175–190 (2016).

Bauer, G., Trinka, E. & Kaplan, P. Eeg patterns in hypoxic encephalopathies (post-cardiac arrest syndrome): fluctuations, transitions, and reactions. J. Clin. Neurophysiol. 30, 477–89 (2013).

Kanda, P. A., Oliveira, E. F. & Fraga, F. J. EEG epochs with less alpha rhythm improve discrimination of mild alzheimer’s. Comput. Methods Programs Biomed. 138, 13–22 (2017).

Napoli, N. J. et al. Exploring cognitive states: Temporal methods for detecting and characterizing physiological fingerprints. AIAA SciTech, Identification and Machine Learning. 1–10 (2020).

Abasolo, D., Hornero, R., Espino, P., Alvarez, D. & Poza, J. Entropy analysis of the eeg background activity in alzheimer’s disease patients. Physiol. Meas. 27 (2005).

Ham, F. M. & Kostanic, I. Principles of Neurocomputing for Science and Engineering (McGraw-Hill, 2001).

Kobuchi, Y. Signal propagation in 2-dimensional threshold cellular space. J. Math. Biol. 3, 297–312 (1976).

Faye, G. & Kilpatrick, Z. Threshold of front propagation in neural fields: An interface dynamics approach. arXiv:1801.05878v1 1–27 (2018).

Papadelis, C., Kourtidou-Papadeli, C., Bamidis, P. D., Maglaveras, N. & Pappas, K. The effect of hypobaric hypoxia on multichannel eeg signal complexity. Clin. neurophysiology official journal Int. Fed. Clin. Neurophysiol. 118, 31–52 (2007).

Ignaccolo, M., Latka, W., Mirek, J., Grigolini, P. & West, B. J. The dynamics of eeg entropy. J. Biol. Phys. 36, 185–196 (2010).

Pizzagalli, D. A. Electroencephalography and high- density electrophysiological source localization. In Cacioppo, J., Tassinary, L. and Berntson, G. (eds.) Handbook of psychophysiology (Cambridge University Press, Cambridge, UK, 2007).

Berger, H. Nervenkr. Arch. Psychiatry 87 (1929).

Buzsaki, G. & Draguhn, A. Neuronal oscillations in cortical networks. Science 304 (2004).

O’Toole, J. M., Pavlidis, E., Korotchikova, I., Boylan, G. B. & Stevenson, N. J. Temporal evolution of quantitative eeg within 3 days of birth in early preterm infants. Nat. Sci. Reports 8, 1–11 (2018).

Nordin, A. D., Hairston, W. D. & Ferris, D. P. Human electrocortical dynamics while stepping over obstacles. Nat. Sci. Reports 9, 1–12 (2018).

Napoli, N. J., Mixco, A. R., Bohorquez, J. E. & Signorile, J. F. An emg comparative analysis of quadriceps during isoinertial strength training using nonlinear scaled wavelets. Hum. movement science 40, 134–153 (2015).

Abdullah, H. & Cvetkovic, D. Electrophysiological signals segmentation for eeg frequency bands and heart rate variability analysis. The 15th Int. Conf. on Biomed. Eng. 43 (2014).

Gabor, D. Theory of communication. IEEE 93, 429–459 (1946).

Heisenberg, W. The Physical Principles of the Quantum Theory (Courier Corporation, Chicago, IL, 1949).

Thul, A. et al. EEG entropy measures indicate decrease of cortical information processing in disorders of consciousness. Clin. Neurophysiol. 127, 1419–1427 (2016).

Lake, D., Richman, J., Griffin, M. & Moorman, R. Sample entropy analysis of neonatal heart rate variability. Am. J. Physiol. 283, R789–R797 (2002).

Sleigh, J. W., Steyn-Ross, D. A., Steyn-Ross, M. L., Grant, C. & Ludbrook, G. Cortical entropy changes with general anaesthesia: theory and experiment. Physiol. Meas. 25, 921 (2004).

Cacioppo, J., Tassinary, L. & Berntson, G. Handbook of Pyschophysiology (Cambridge University Press, Cambridge, UK, 2007), 3 edn.

Stern, R., Ray, W. & Quigley, K. Psychophysiological Recording, chap. 7 (Cambridge University Press, Cambridge, UK, 2000), 3 edn.

Walter, W. The Living Brain (Norton, New York, NY, 1953).

Nakai, Y. et al. Three- and four-dimensional mapping of speech and language in patients with epilepsy. Brain 140, 1351–1370 (2017).

Wang, X.-J. Neurophysiological and computational principles of cortical rhythms in cognition. Physiol. Rev. 90, 1195–1268 (2010).

Abbasi, H. et al. Eeg sharp waves are a biomarker of striatal neuronal survival after hypoxia-ischemia in preterm fetal sheep. Nat. Sci. Reports 8, 1–8 (2018).

Frolov, N. S. et al. Statistical properties and predictability of extreme epileptic events. Nat. Sci. Reports 9, 1–8 (2019).

Fusheng, Y., Bo, H. & Qingyu, T. Approximate Entropy and Its Application to Biosignal Analysis, 72–91 (John Wiley and Sons, Inc., 2012).

Lipton, P. Ischemic cell death in brain neurons. Physiol. Rev. 79, 1432–1568 (1999).

Martin, R., Lloyd, H. & Cowan, A. The early events of oxygen and glucose deprivation: setting the scene for neuronal death? Trends Neurosci. 17, 251–7 (1994).

Von Tscharner, V. Intensity analysis in time-frequency space of surface myoelectric signals by wavelets of specified resolution. J. Kinesiol. Electromyogr. 6, 433–45 (2000).

Stephens, C. et al. Mild normobaric hypoxia exposure for human-autonomy system testing. Proc. Hum. Factors Ergonomics Soc. Annu. Meet. 61 (2017).

Stephens, C. et al. Effects on task performance and psychophysiological measures of performance during normobaric hypoxia exposure. Proc. Int. Symp. on Aviat. Psychol. Dayton, OH (2017).

Santiago-Espada, Y., Myer, R. R., Latorella, K. A. & Comstock, J. R. The multi-attribute task battery II (MATB-II) software for human performance and workload research: A user’s guide. An optional note (2011).

Hart, S. G. & Staveland, L. E. Development of nasa-tlx (task load index): Results of empirical and theoretical research. In Hancock, P. A. & Meshkati, N. (eds.) Human Mental Workload (North Holland Press, Amsterdam, 1988).

J. J., Beltran, K., K., K. E., N & C. L., Stephens Occurrence of heart arrhythmia’s during mild hypoxia induction and laboratory task/flight simulation performance. The 91th Aerosp. Med. Assoc. (AsMA) Annu. Meet. Atlanta, GA (2020).

Blazquez, J., Garcia-Berrocal, A., Montalvo, C. & Balbas, M. The coverage factor in a flatten-gaussian distribution. Metrologia 45 (2008).

Gabriel, D. A. & Kamen, G. Point:counterpoint comments. J Appl. Physiol. 105 (2008).

Borg, F. Filter banks and the “intensity analysis” of emg., http://arxiv.org/abs/1005.0696 (2003).

Napoli, N. J. et al. Uncertainty in heart rate complexity metrics caused by r-peak perturbations. Comput. Biol. Medicine 103, 198–207 (2018).

Bullmore, E., M, B., Harvey, I., Murray, R. & Ron, M. Cerebral hemispheric asymmetry revisited: effects of handedness, gender and schizophrenia measured by radius of gyration in magnetic resonance images. Psychol. Med. 25, 349–363 (1995).

Ma, H., Wang, Y., Wu, J., Luo, P. & Han, B. Long-term exposure to high altitude affects response inhibition in the conflict monitoring stage. Nat. Sci. Reports 5, 1–10 (2015).

Ma, H., Wang, Y., Wu, J., Luo, P. & Han, B. Aging of stimulus-driven and goal directed attentional processes in young immigrants with long-term high altitude exposure in tibet: An erp study. Nat. Sci. Reports 9, 1–12 (2019).

Hubbard, J., Kikumoto, A. & Mayr, U. Eeg decoding reveals the strength and temporal dynamics of goal-relevant representations. Nat. Sci. Reports 9, 1–11 (2019).

Tsai, F.-F., Fan, S.-Z., Cheng, H.-L. & Yeh, J.-R. Multi-timescale phase amplitude couplings in transitions of anesthetic-induced unconsciousness. Nat. Sci. Reports 9, 1–11 (2019).

Harrivel, A., Weissman, D., Noll, D., Huppert, T. & Peltier, S. Dynamic filtering improves attentional state prediction with fnirs. Biomed. Opt. Express 7, 979–1002 (2016).

Hillman, E. M. et al. Depth-resolved optical imaging and microscopy of vascular compartment dynamics during somatosensory stimulation. Neuroimage 35, 89–104 (2007).

Liu, H., Boas, D. A., Zhang, Y., Yodh, A. G. & Chance, B. Determination of optical-properties and blood oxygenation in tissue using continuous nir light. Phys. Med. Biol. 40, 1983–1993 (1995).

Zhang, N., Liu, Z., He, B. & Chen, W. Noninvasive study of neurovascular coupling during graded neuronal suppression. J. Cereb. Blood Flow Metab. 28, 280–290 (2008).

Acknowledgements

The use of trademarks or names of manufacturers in this report is for accurate reporting and does not constitute an official endorsement, either expressed or implied, of such products or manufacturers by the National Aeronautics and Space Administration.

Author information

Contributions

N.J.N. developed the simulation, signal processing methods, and optimization, and wrote the paper; M.D. developed the optimization and part of the signal processing methods; C.L.S. developed and carried out the experimental design, and wrote part of the introduction, method, and discussion; K.D.K. developed and carried out the experimental design, and wrote part of the discussion; A.R.H. contributed to the signal processing methods and to writing the document; A.T.P. consulted on the EEG algorithm development and the content of the introduction and discussion; L.E.B. contributed to writing the document, algorithm development, and optimization.

Ethics declarations

Competing interests

The authors of this work have no financial and/or non-financial competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Napoli, N.J., Demas, M., Stephens, C.L. et al. Activation Complexity: A Cognitive Impairment Tool for Characterizing Neuro-isolation. Sci Rep 10, 3909 (2020). https://doi.org/10.1038/s41598-020-60354-2

DOI: https://doi.org/10.1038/s41598-020-60354-2
