Abstract

Multi-atlas-based segmentation (MAS) methods have demonstrated superior performance in automatic image segmentation, and label fusion is an important part of MAS. In this paper, we propose a label fusion method that incorporates pixel greyscale probability information. The proposed method combines the advantages of label fusion based on sparse representation (SRLF) and weighted voting with patch similarity weights (PSWV), and introduces pixel greyscale probability information to improve segmentation accuracy. We apply the method to the segmentation of deep brain tissues (the thalamus, hippocampus, caudate, putamen, pallidum and amygdala) in challenging 3D brain MR images from the publicly available IBSR dataset. The experimental results show that the proposed method achieves higher segmentation accuracy and robustness than the related methods. Compared with state-of-the-art methods, it obtains the best putamen, pallidum and amygdala segmentation results, and hippocampus and caudate segmentation results similar to those of the comparison methods.


Introduction

Magnetic resonance (MR) imaging plays an important role in neuroscience research and clinical applications. Doctors often need to identify regions of interest (ROIs) or diseased tissue in multiple brain images before making judgements or developing treatment plans. Hospitals now generate large numbers of brain images every day, and segmenting brain structures accurately by hand is a heavy burden for doctors. An automatic segmentation technique is therefore needed for the routine analysis of clinical brain MR images. Many automatic image segmentation algorithms have been proposed1,2, but because deep brain structures are complex, developing a fast and accurate automatic segmentation method remains challenging. FreeSurfer3 and FIRST4 are widely used automatic segmentation tools. FreeSurfer uses non-linear registration and an atlas-based segmentation approach. FIRST places a deformable active appearance model in a Bayesian framework. Both methods reduce the burden of manual segmentation, but their accuracy still needs improvement.

In the past decade, atlas-based methods have shown superior performance over other automatic brain image segmentation algorithms5. The main idea of the atlas-based method is to use prior knowledge from the atlas to classify target pixels. Each atlas contains an intensity image and a label image annotated by a doctor. The target image is registered with the atlas intensity image, and the resulting deformation field is used to propagate the label information into the target image space, where each target pixel is classified. A disadvantage of single-atlas methods is their sensitivity to noise and to differences between images. This problem can be alleviated by MAS6 and atlas selection7.

MAS methods mainly consist of two steps: image registration and label fusion. Many registration algorithms have been proposed8. Because registration performance strongly influences the segmentation results of an MAS method, most MAS methods use highly accurate non-linear registration. Alvén et al.9 proposed the Überatlas registration method for MAS, which effectively shortens registration time. Alchatzidis et al.10 presented a method that integrates registration and segmentation fusion in a pairwise MRF framework.

Label fusion is a key step in MAS methods because it can reduce errors caused by registration inaccuracies or by morphological differences between the target image and the atlas images. Weighted voting (WV) is a widely used label fusion method that can effectively improve segmentation results. The weights are mainly defined by the similarity between the atlas images and the target image, for example the absolute value of the intensity difference11, a Gaussian function of the intensity difference12, or local mutual information13. Coupé et al.14 proposed a non-local patch-based method for hippocampus and ventricle segmentation. Wang et al.15 proposed a multi-atlas active contour segmentation method that uses a template optimization strategy to reduce registration error. Lin et al.16 proposed a label fusion method based on registration error and intensity similarity. Tang et al.17 combined low-rank image recovery with a sparse representation model and successfully segmented brain tumours. Roy et al.18 proposed a patch-based MR image tissue classification method that incorporates a statistical atlas prior into sparse dictionary learning; the entire segmentation process requires no deformable registration of the atlas images. Tong et al.19 combined discriminative dictionary learning and sparse coding to design a fixed dictionary for hippocampus segmentation. Lee et al.20 proposed a brain tumour segmentation method using kernel dictionary learning, which implicitly maps intensity features into a high-dimensional space to make classification more accurate. However, this method is time consuming, which limits its wider application. To address this problem, a linearized-kernel sparse representative classifier has been proposed21.

In recent years, learning-based methods such as support vector machines (SVMs)22, random forests (RFs)23 and convolutional neural networks (CNNs)24 have been widely used in MR image segmentation. These methods are well suited to analysing specific anatomical structures because of their efficiency with respect to pairwise registration and their strength in feature description. Huo et al.25 proposed a multi-atlas segmentation method that combines SVM classifiers with supervoxels; it is insensitive to pairwise registration and reduces registration time by using affine transformation. Xu et al.26 proposed an atlas forest method for the automatic labelling of brain MR images, using random forests to encode the atlas images into an atlas forest and thereby reduce registration time. Kaisar et al.27 proposed a CNN model that combines convolutional and prior spatial features for subcortical brain structure segmentation, and trained the network with restricted sample selection to increase segmentation accuracy.

Brain tissue boundary regions are difficult to segment for both MAS and learning-based methods, mainly because pixel values in these regions are very similar, which makes it hard to decide whether a pixel belongs to the target tissue. To overcome this shortcoming and make full use of the prior information in the atlases, we introduce pixel greyscale probability information into the label fusion method.

In this paper, we propose a label fusion method that incorporates pixel greyscale probability (GP-LF) for brain MR image segmentation. The method mainly aims to improve segmentation in tissue boundary regions and thereby improve overall segmentation accuracy. We use the SRLF and PSWV methods to obtain two fusion results for the target tissue and then use pixel greyscale probability information to combine them. The pixel greyscale probability is obtained through segmentation training on the atlases.

Methods

We propose our method based on the patch-based label fusion method of MAS. In this section, we introduce the patch-based label fusion method and describe the proposed method in detail.

Dataset

We tested the proposed method on the IBSR dataset, a publicly available MR brain image dataset provided at https://www.nitrc.org/projects/ibsr. It contains 18 T1-weighted MR images and the corresponding label images, which were defined by the Center for Morphometric Analysis at Massachusetts General Hospital. The 18 subject images were acquired on two different MRI scanners: GE (1.5T) and SIEMENS (1.5T). The subjects are 14 males and 4 females; the images have dimensions 256 × 256 × 128 and three different resolutions: 0.84 × 0.84 × 1.5 mm3, 0.94 × 0.94 × 1.5 mm3 and 1 × 1 × 1.5 mm3. The dataset is also part of the Child and Adolescent NeuroDevelopment Initiative28 (CANDI) and is provided under the Creative Commons Attribution license29.

Patch-based label fusion method

The patch-based label fusion method registers the target image with the atlas images, maps the atlas label information into the target image space, and then identifies target tissue pixels with a label fusion algorithm. The detailed process is as follows:

- (1)

Image registration: The image to be segmented is taken as the target image T and registered with the intensity images of the atlases. The warped atlases \({A}_{1}({I}_{1},{L}_{1}),{A}_{2}({I}_{2},{L}_{2}),\cdots ,{A}_{n}({I}_{n},{L}_{n})\) are saved, where \({I}_{i}\) is the warped intensity image of atlas \({A}_{i}\), \({L}_{i}\) is the warped label image of atlas \({A}_{i}\), and n is the number of atlases.

- (2)

Patch extraction: The patch \(T{p}_{{x}_{j}}\) centred on pixel \({x}_{j}\) of the target image is extracted, and patches \(I{p}_{i}\) and \(L{p}_{i}\) are extracted at the same position \({x}_{j}\) from images \({I}_{i}\) and \({L}_{i}\), respectively.

- (3)

Label fusion: A label fusion method is used to fuse the extracted patches.

- (4)

Label assignment: The fusion result is binarized to assign a label (background or target tissue) to pixel \({x}_{j}\).
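Step (2) can be illustrated with a short NumPy sketch. It assumes square (2D) or cubic (3D) patches of side 2r + 1, flattened to one-dimensional vectors as SRLF requires, and edge padding at image borders; the patch radius, the padding mode and the function names are illustrative assumptions rather than details given in the text.

```python
import numpy as np


def extract_patch(image, center, radius):
    """Return the patch of side 2*radius + 1 centred on `center`, flattened
    to a 1-D vector. Works for 2-D slices and 3-D volumes alike.
    Border handling by edge padding is an assumption for illustration."""
    padded = np.pad(image, radius, mode="edge")
    # After padding, original index c sits at c + radius, so the window
    # [c, c + 2*radius] in padded coordinates is centred on the original pixel.
    window = tuple(slice(c, c + 2 * radius + 1) for c in center)
    return padded[window].reshape(-1)


def gather_patches(target, warped_atlases, center, radius):
    """Collect Tp_xj and the atlas patches Ip_i, Lp_i at the same position x_j.
    warped_atlases: list of (I_i, L_i) pairs already registered to the target."""
    tp = extract_patch(target, center, radius)
    ip = [extract_patch(I, center, radius) for I, _ in warped_atlases]
    lp = [extract_patch(L, center, radius) for _, L in warped_atlases]
    return tp, ip, lp


image = np.arange(25).reshape(5, 5)
print(extract_patch(image, (2, 2), 1).tolist())  # [6, 7, 8, 11, 12, 13, 16, 17, 18]
```

Padding the whole image on every call keeps the sketch short; a practical implementation would pad each image once and reuse it for all pixels.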

The WV method is a widely used label fusion method; its equation is as follows:

where \({w}_{i}\) is the weight coefficient, \(F{v}({x}_{j})\) is the fusion result, and m is the number of target image pixels. If \({L}_{T}({x}_{j})=0\), pixel \({x}_{j}\) is labelled as background; if \({L}_{T}({x}_{j})=1\), it is labelled as a target tissue pixel.
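A minimal per-pixel sketch of weighted voting follows. It assumes the common normalized form \(F{v}({x}_{j})=\sum_{i}{w}_{i}{L}_{i}({x}_{j})/\sum_{i}{w}_{i}\) and a 0.5 binarization threshold (the same threshold used later in Eqs. (7)–(9)); the normalization, the threshold and the function name are assumptions for illustration.

```python
def weighted_vote(labels, weights, threshold=0.5):
    """Fuse binary atlas labels at one target pixel x_j.

    labels  -- label values L_i(x_j) (0 = background, 1 = target tissue),
               one per warped atlas
    weights -- non-negative weights w_i, one per warped atlas
    Returns (Fv, L_T): the normalized fusion value and the binary label."""
    if len(labels) != len(weights):
        raise ValueError("labels and weights must have the same length")
    total = sum(weights)
    if total == 0:
        return 0.0, 0  # no evidence: default to background (assumption)
    fv = sum(w * lab for w, lab in zip(weights, labels)) / total
    return fv, 1 if fv > threshold else 0


# Three atlases vote 1, 0, 1 with weights 0.5, 0.2, 0.3.
fv, label = weighted_vote([1, 0, 1], [0.5, 0.2, 0.3])
print(round(fv, 2), label)  # 0.8 1
```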

Computing \({w}_{i}\) is the core of the WV method. There are two common ways to calculate the label weights: one takes the similarity between \(T{p}_{{x}_{j}}\) and \(I{p}_{i}\) as the weight \({w}_{i}\), and the other computes \({w}_{i}\) by sparse representation.

The SRLF method treats \(T{p}_{{x}_{j}}\), \(I{p}_{i}\) and \(L{p}_{i}\) as one-dimensional column vectors and uses all the patches \(I{p}_{i}\) to build an over-complete dictionary \({D}_{I}\); it assumes that \(T{p}_{{x}_{j}}\) lies approximately in the subspace spanned by the training patches in \({D}_{I}\).

In this paper, we use \(PT={[p{t}_{1},p{t}_{2},\cdots ,p{t}_{len}]}^{T}\) to represent \(T{p}_{{x}_{j}}\), where \(p{t}_{k}\) (k = 1, …, len) are the pixels of the patch and len is the number of pixels in \(T{p}_{{x}_{j}}\). We use the column vector \(P{I}_{i}={[p{i}_{1i},p{i}_{2i},\cdots ,p{i}_{len\,i}]}^{T},i=1,2,\cdots ,m\) to represent \(I{p}_{i}\), and the over-complete dictionary is \({D}_{I}=[P{I}_{1},P{I}_{2},\cdots ,P{I}_{m}]\). The sparse coefficient vector is \(\alpha ={[{\alpha }_{1},{\alpha }_{2},\cdots ,{\alpha }_{m}]}^{T}\in {R}^{m}\). Each element of PT can be represented by the following formula:

The idea of sparse representation is to represent the signal PT with the sparsest coefficient vector α, which can be obtained by solving the following equation:

The solution α is used as the weights \({w}_{i}\) of WV, and label fusion is then performed with Eq. (1).
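As a sketch under stated assumptions, the SRLF weights can be approximated with orthogonal matching pursuit (OMP), a standard greedy approximation of \(\min {\Vert \alpha \Vert }_{0}\) subject to \(PT\approx {D}_{I}\alpha\); the text does not say which solver SRLF uses, so OMP is a stand-in. Negative coefficients are clipped to zero and the remaining weights are normalized before voting on the centre labels of \(L{p}_{i}\); these choices and all function names are assumptions.

```python
import numpy as np


def omp(D, y, n_nonzero):
    """Orthogonal matching pursuit: greedily approximate
    min ||alpha||_0  subject to  D @ alpha ≈ y, using at most n_nonzero atoms."""
    if n_nonzero < 1:
        raise ValueError("n_nonzero must be at least 1")
    y = np.asarray(y, dtype=float)
    # Unit-norm columns make the correlation test fair across atoms.
    norms = np.linalg.norm(D, axis=0)
    norms[norms == 0] = 1.0
    D_unit = D / norms
    alpha = np.zeros(D.shape[1])
    support, residual = [], y.copy()
    # The support never exceeds len(y), the patch length.
    for _ in range(min(n_nonzero, D.shape[0])):
        k = int(np.argmax(np.abs(D_unit.T @ residual)))
        if k in support:
            break  # no new atom improves the fit
        support.append(k)
        # Least-squares refit of y on all selected atoms.
        coef, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ coef
        alpha[:] = 0.0
        alpha[support] = coef
        if np.linalg.norm(residual) < 1e-10:
            break
    return alpha


def srlf_fuse(tp, ip_list, centre_labels, n_nonzero=5):
    """Fv_SRLF(x_j): vote on atlas centre labels with sparse-coding weights."""
    D = np.column_stack(ip_list).astype(float)  # over-complete dictionary D_I
    alpha = omp(D, tp, n_nonzero)
    w = np.clip(alpha, 0.0, None)  # assumption: ignore negative coefficients
    if w.sum() == 0:
        return 0.0
    return float(w @ np.asarray(centre_labels, dtype=float) / w.sum())
```

Because only the atoms in the OMP support receive non-zero weight, most patches drop out of the vote, which is the behaviour of SRLF that the next section discusses.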

Proposed method

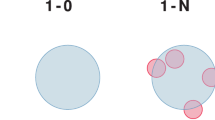

Eq. (5) is underdetermined (the over-complete dictionary has more atoms than a patch has pixels), so its sparse solution has at most as many non-zero coefficients as \(T{p}_{{x}_{j}}\) has elements. In the SRLF method, therefore, only a small number of patches take part in fusion and most patches are discarded, which can cause segmentation errors. The PSWV method uses all the patches but is less accurate than the SRLF method at recognizing edge pixels.

To overcome the shortcomings of these methods, we propose the GP-LF method, whose overall framework is shown in Fig. 1. To make better use of the prior information in the atlases, we introduce pixel greyscale probability information into the label fusion process and obtain the pixel greyscale probability value \(P({x}_{j})\) of the target image through segmentation training.

- (1)

Multi-atlas registration: Current non-linear registration methods already achieve good registration results, so we used an existing non-linear method for multi-atlas registration. We chose the SuperElastix registration tool and used affine and B-spline transformations to register the target image with the atlas intensity images. SuperElastix is available at https://github.com/SuperElastix/.

- (2)

Initial label fusion: To reduce the influence of non-standardized image intensities on the segmentation results, we normalize the pixel values of the target image and the atlas intensity images. After multi-atlas registration, the warped atlases are fused with the SRLF method, giving the fusion result \(F{v}_{SRLF}({x}_{j})\). We also use the normalized correlation coefficient (NCC)30 as the similarity measure to perform PSWV fusion on the warped atlas images, giving the fusion result \(F{v}_{PSWV}({x}_{j})\).

- (3)

Establishment of the GP-LF model: The pixel greyscale probability information is introduced to fuse the results FvSRLF(xj) and FvPSWV(xj) obtained in the above steps. The fusion equation is as follows:

$${L}_{T}({x}_{j})=\left\{\begin{array}{ll}1 & {\rm{if}}\,F{v}_{SRLF}({x}_{j}) > 0.9\,{\rm{or}}\,F{v}_{PSWV}({x}_{j}) > 0.9\\ L{f}_{1}({x}_{j}) & {\rm{if}}\,0.4 < F{v}_{SRLF}({x}_{j})\le 0.9\,{\rm{and}}\,0.4 < F{v}_{PSWV}({x}_{j})\le 0.9\\ L{f}_{2}({x}_{j}) & {\rm{if}}\,F{v}_{SRLF}({x}_{j})\le 0.4\\ L{f}_{3}({x}_{j}) & {\rm{if}}\,F{v}_{PSWV}({x}_{j})\le 0.4\end{array}\right.$$ (6)

where \({L}_{T}({x}_{j})\) is a piecewise function. When either \(F{v}_{SRLF}({x}_{j})\) or \(F{v}_{PSWV}({x}_{j})\) is greater than 0.9, almost all such pixels are target tissue pixels, so we label them directly as target tissue; this reduces computation time without affecting segmentation precision. When both \(F{v}_{SRLF}({x}_{j})\) and \(F{v}_{PSWV}({x}_{j})\) lie between 0.4 and 0.9, fusion is performed with \(L{f}_{1}({x}_{j})\):

$$L{f}_{1}({x}_{j})=\left\{\begin{array}{ll}1 & {\rm{fusion}}_{1} > 0.5\\ 0 & {\rm{otherwise}}\end{array}\right.,\quad {\rm{fusion}}_{1}={\beta }_{1}\cdot F{v}_{SRLF}({x}_{j})\cdot F{v}_{PSWV}({x}_{j})\cdot P({x}_{j})$$ (7)

When \(F{v}_{SRLF}({x}_{j})\) or \(F{v}_{PSWV}({x}_{j})\) is at most 0.4, fusion is performed with \(L{f}_{2}({x}_{j})\) or \(L{f}_{3}({x}_{j})\), respectively:

$$L{f}_{2}({x}_{j})=\left\{\begin{array}{ll}1 & {\rm{fusion}}_{2} > 0.5\\ 0 & {\rm{otherwise}}\end{array}\right.,\quad {\rm{fusion}}_{2}={\beta }_{2}\cdot F{v}_{SRLF}({x}_{j})\cdot P({x}_{j})$$ (8)

$$L{f}_{3}({x}_{j})=\left\{\begin{array}{ll}1 & {\rm{fusion}}_{3} > 0.5\\ 0 & {\rm{otherwise}}\end{array}\right.,\quad {\rm{fusion}}_{3}={\beta }_{3}\cdot F{v}_{PSWV}({x}_{j})\cdot P({x}_{j})$$ (9)

where β1, β2 and β3 are the fusion weight coefficients.

- (4)

P(xj) coefficient training:

Target tissue greyscale range setting consists of the following steps:

- a.

After normalization, pixel values range from 0 to 1. Divide the range 0–1 into NI intervals and compute the greyscale distribution map (histogram). Determine the target tissue distribution range from the peaks of this map. If there are 2 or 3 peaks, set the upper boundary of the target tissue to \(IS{N}_{\max }+Ov\), where \(IS{N}_{\max }\) is the largest interval serial number among the peaks and Ov is an offset value.

- b.

If there is only 1 peak, divide the range 0–1 into 2 × NI intervals and repeat step a.

- c.

If there are more than 3 peaks, divide the range 0–1 into NI/2 intervals and repeat step a.

Establishment of the P(xj) coefficient training model.

The P(xj) coefficient training model is defined as follows:

where dsc denotes the Dice similarity coefficient (Dsc) between the segmentation result of an atlas intensity image and the corresponding atlas label image, and sn denotes the number of atlases used for training. The P(xj) coefficients can be obtained by solving Eq. (11).

P(xj) coefficient training:

Atlas images are selected according to the spatial position of the target image and used for P(xj) coefficient training. The trained P(xj) of the target tissue is then used in Eq. (6) to classify the target tissue pixels.
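The classification rule of Eqs. (6)–(9) translates into a small function. The sketch checks the cases in the order they appear in Eq. (6), so a pixel whose two fusion values are both at most 0.4 falls under \(L{f}_{2}\), and it treats the 0.9 bound as inclusive for both values in the \(L{f}_{1}\) case; that tie-breaking, the function names and passing β1 to β3 as arguments are assumptions. The example uses the hippocampus coefficients reported in the experiments (β1 = 3.13, β2 = 1.67, β3 = 2.5).

```python
def binarize(value, cut=0.5):
    """Shared thresholding step of Eqs. (7)-(9)."""
    return 1 if value > cut else 0


def gplf_label(fv_srlf, fv_pswv, p, beta1, beta2, beta3, high=0.9, low=0.4):
    """Return L_T(x_j) from the SRLF and PSWV fusion values and P(x_j)."""
    # Case 1: either method is already confident -> target tissue.
    if fv_srlf > high or fv_pswv > high:
        return 1
    # Case 2 (Lf1): both values lie in (low, high].
    if fv_srlf > low and fv_pswv > low:
        return binarize(beta1 * fv_srlf * fv_pswv * p)
    # Case 3 (Lf2): the SRLF value is at most `low`.
    if fv_srlf <= low:
        return binarize(beta2 * fv_srlf * p)
    # Case 4 (Lf3): only the PSWV value is at most `low`.
    return binarize(beta3 * fv_pswv * p)


hippocampus = dict(beta1=3.13, beta2=1.67, beta3=2.5)
print(gplf_label(0.6, 0.7, 0.5, **hippocampus))   # 1  (3.13*0.6*0.7*0.5 ≈ 0.66)
print(gplf_label(0.8, 0.35, 0.9, **hippocampus))  # 1  (2.5*0.35*0.9 ≈ 0.79)
```

Here \(P({x}_{j})\) is the trained probability for the greyscale interval that pixel \({x}_{j}\) falls into; looking it up is left outside the function.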

Experiments and Results

In this section, we use leave-one-out cross-validation to verify the segmentation performance and robustness of the proposed method. One image from the IBSR dataset serves as the target image to be segmented, and the remaining images are used as atlases. This is repeated until every image in the IBSR dataset has been segmented. The experiments focus on subcortical structures: we segmented six of them, namely the thalamus, hippocampus, caudate, putamen, pallidum and amygdala. We also compared the segmentation results of our method with those of the SRLF and PSWV methods.

Segmentation evaluation index

We applied four widely used segmentation metrics, Dsc, Recall, Precision and Hausdorff distance (HD), to evaluate the segmentation results of the proposed method. Dsc, Recall and Precision mainly measure the volume overlap between the segmentation results and the manual labels, and HD measures the surface distance between them. These metrics are defined as follows:

where T is the set of target tissue pixels in the target label image, F is the set of segmented target tissue pixels, and \(d({p}_{t},{p}_{f})\) is the distance between pixels \({p}_{t}\) and \({p}_{f}\).
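Assuming the standard definitions, \(Dsc=2|T\cap F|/(|T|+|F|)\), \(Recall=|T\cap F|/|T|\), \(Precision=|T\cap F|/|F|\) and the symmetric Hausdorff distance \(HD=\max \{{\max }_{{p}_{t}\in T}{\min }_{{p}_{f}\in F}d({p}_{t},{p}_{f}),{\max }_{{p}_{f}\in F}{\min }_{{p}_{t}\in T}d({p}_{t},{p}_{f})\}\), the four metrics can be computed on sets of pixel coordinates as in this sketch (function names are illustrative).

```python
import math


def overlap_metrics(T, F):
    """Dsc, Recall and Precision for sets of pixel coordinates.
    T: reference (manual label) pixels, F: segmented pixels."""
    if not T or not F:
        raise ValueError("both pixel sets must be non-empty")
    inter = len(T & F)
    dsc = 2 * inter / (len(T) + len(F))
    recall = inter / len(T)
    precision = inter / len(F)
    return dsc, recall, precision


def hausdorff(T, F):
    """Symmetric Hausdorff distance with Euclidean d(p_t, p_f)."""
    if not T or not F:
        raise ValueError("both pixel sets must be non-empty")

    def directed(A, B):
        # Distance from each point of A to its nearest point of B; keep the worst.
        return max(min(math.dist(a, b) for b in B) for a in A)

    return max(directed(T, F), directed(F, T))


T = {(0, 0), (0, 1), (1, 0), (1, 1)}
F = {(0, 0), (0, 1), (0, 2)}
print(overlap_metrics(T, F))  # (0.5714285714285714, 0.5, 0.6666666666666666)
print(hausdorff(T, F))        # 1.0
```

The brute-force HD is quadratic in the number of pixels and returns pixel units; scaling coordinates by the voxel spacing would give millimetres.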

All experiments and training in this study were performed on a single desktop PC (Intel(R) Core(TM) 3.70 GHz CPU).

Target tissue pixel greyscale range setting

We divide the greyscale range 0–1 into 20 intervals and determine the P(xj) of the pixels in each interval by training. To reduce training time, we extracted the greyscale range of the target tissue from the greyscale distribution map. Because intensities are not standardized across MR images, the same target tissue can occupy different grey ranges in different images, so we determined each target tissue's grey range from the image's own greyscale distribution map.

As shown in Fig. 2, the pixel grey value span of deep brain tissue is between 0.3 and 0.6. We used the target tissue greyscale range-setting method mentioned above to obtain the \(IS{N}_{\max }\) of the target image greyscale distribution map and then set the grey value range of the deep brain tissues according to the greyscale distribution map. The thalamus has a pixel value span of 0.6 and its maximum pixel value is \(IS{N}_{\max }+0.15\); the hippocampus has a pixel value span of 0.5 and its maximum pixel value is \(IS{N}_{\max }\); the caudate has a pixel value span of 0.5 and its maximum pixel value is \(IS{N}_{\max }\); the putamen has a pixel value span of 0.5 and its maximum pixel value is \(IS{N}_{\max }+0.1\); the pallidum has a pixel value span of 0.5 and its maximum pixel value is \(IS{N}_{\max }+0.15\); and the amygdala has a pixel value span of 0.5 and its maximum pixel value is \(IS{N}_{\max }-0.05\).
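The range-setting steps a–c can be sketched as an iterative histogram search, with NI = 20 as used here. The sketch makes several assumptions the text leaves open: a peak is a bin whose count is strictly greater than both neighbours, fewer than two peaks is handled like the single-peak case, \(IS{N}_{\max }\) is converted to a grey value by taking the upper edge of that interval, and a cap on refinements prevents endless alternation between 2 × NI and NI/2 intervals. Function names are illustrative.

```python
def histogram(values, n_bins):
    """Count normalized grey values (0-1) in n_bins equal intervals."""
    counts = [0] * n_bins
    for v in values:
        k = min(int(v * n_bins), n_bins - 1)  # v == 1.0 goes in the last bin
        counts[k] += 1
    return counts


def find_peaks(counts):
    """Indices of bins strictly higher than both neighbours (edges padded with -1)."""
    peaks = []
    for k, c in enumerate(counts):
        left = counts[k - 1] if k > 0 else -1
        right = counts[k + 1] if k + 1 < len(counts) else -1
        if c > left and c > right:
            peaks.append(k)
    return peaks


def tissue_upper_bound(values, n_bins=20, offset=0.0, max_refinements=5):
    """Upper grey-value bound ISN_max + Ov following steps a-c."""
    for _ in range(max_refinements + 1):
        peaks = find_peaks(histogram(values, n_bins))
        if 2 <= len(peaks) <= 3:                    # step a
            isn_max = max(peaks)
            return (isn_max + 1) / n_bins + offset  # upper edge of that interval
        if len(peaks) > 3:                          # step c
            n_bins = max(n_bins // 2, 2)
        else:                                       # step b (one peak or none)
            n_bins *= 2
    raise RuntimeError("no interval count produced 2 or 3 peaks")


# Two grey-level clusters -> 2 peaks in 20 bins (bins 6 and 10).
values = [0.33] * 10 + [0.53] * 20
print(tissue_upper_bound(values))  # 0.55
```

A per-tissue offset such as the +0.15 used for the thalamus would be passed as `offset`.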

Influence of the number of patches and atlas selection

Selecting appropriate atlas images in MAS has been shown to effectively improve segmentation results31; atlas selection discards atlas images with low similarity to the target image. Because slices at different positions in the human brain differ greatly, we selected the atlas images used for target tissue segmentation according to the spatial location of the target image. Let TSP be the spatial position of the target image and SR the search radius; the images located between TSP − SR and TSP + SR are selected for fusion. Deep brain tissues belong to either grey matter (GM) or white matter (WM), so we used GM and WM as target tissues to test the influence of atlas selection on the segmentation results.
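The spatial atlas selection rule keeps only the atlas slices whose position lies within SR of TSP. In this sketch, positions are measured along the slice axis in the same unit as TSP and SR (for example millimetres, given the 1.5 mm slice thickness of the IBSR images); the `AtlasSlice` record and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AtlasSlice:
    atlas_id: int    # hypothetical atlas index
    position: float  # slice position along the scan axis, same unit as TSP/SR


def select_atlas_slices(slices, tsp, sr):
    """Keep the slices located between TSP - SR and TSP + SR (inclusive)."""
    return [s for s in slices if tsp - sr <= s.position <= tsp + sr]


# Two atlases with slices every 1.5 mm from 0 to 12 mm.
slices = [AtlasSlice(a, 1.5 * k) for a in range(2) for k in range(9)]
chosen = select_atlas_slices(slices, tsp=6.0, sr=3.0)
print(sorted({s.position for s in chosen}))  # [3.0, 4.5, 6.0, 7.5, 9.0]
```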

As shown in Fig. 3, the best WM and GM Dscs were obtained with SR = 4, and SR = 3 and SR = 5 gave Dscs similar to those for SR = 4. However, each 1.5 mm increase in SR increased the registration time by approximately 1.5 hours. We therefore chose SR = 3 as a trade-off between registration time and segmentation accuracy.

Fusing patches with low similarity to \(T{p}_{{x}_{j}}\) reduces segmentation accuracy. In this study, we used NCC to measure the similarity between each \(I{p}_{i}\) and \(T{p}_{{x}_{j}}\) and selected the patches with the highest similarity values for label fusion. We also tested how the number of selected patches affects the segmentation results.
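The NCC similarity, together with the PSWV fusion and intensity normalization of the initial label fusion step, can be sketched in plain Python. The sketch assumes min–max normalization to [0, 1] (the text states only that pixel values are normalized to that range), uses the standard zero-mean NCC, clips negative correlations to zero, and votes on the centre label of each \(L{p}_{i}\); these choices and the function names are illustrative assumptions.

```python
import math


def min_max_normalize(values):
    """Rescale grey values to [0, 1] (assumed normalization scheme)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def ncc(a, b):
    """Normalized correlation coefficient of two equal-length patches."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # a flat patch carries no correlation information
    return sum(x * y for x, y in zip(da, db)) / denom


def pswv_fuse(tp, ip_list, centre_labels):
    """Fv_PSWV(x_j): vote on atlas centre labels with NCC weights."""
    weights = [max(ncc(tp, ip), 0.0) for ip in ip_list]  # negative NCC -> 0
    total = sum(weights)
    if total == 0:
        return 0.0
    return sum(w * lab for w, lab in zip(weights, centre_labels)) / total


print(pswv_fuse([1, 2, 3], [[2, 4, 6], [3, 2, 1]], [1, 0]))  # 1.0
```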

Fig. 4 shows that the WM and GM segmentation results are best when 60 patches are selected, and that increasing the number of selected patches adds only a few seconds to the label fusion time. We therefore select the 60 patches with the highest similarity values for label fusion.
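Keeping the 60 most similar patches is a top-k selection on the similarity scores (for example the NCC values between each \(I{p}_{i}\) and \(T{p}_{{x}_{j}}\)). A minimal sketch using the standard-library `heapq.nlargest`; the function name is illustrative.

```python
import heapq


def select_top_patches(scores, k=60):
    """Indices of the k patches with the highest similarity scores, ordered
    from most to least similar. If fewer than k patches exist, all are kept."""
    return heapq.nlargest(k, range(len(scores)), key=scores.__getitem__)


scores = [0.2, 0.9, 0.5, 0.7]
print(select_top_patches(scores, k=2))  # [1, 3]
```

`heapq.nlargest` runs in O(N log k) time, so the cost of the selection grows only slowly with the number of candidate patches.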

P(xj) coefficient training and final segmentation results

In this section, we performed segmentation tests on the thalamus, hippocampus, caudate, putamen, pallidum and amygdala. First, the weighting factors β were set for each target tissue: β1 = 3.13 for all six tissues; β2 = 1.25 and β3 = 0.67 for the thalamus; β2 = 1.67 and β3 = 2.5 for the hippocampus; β2 = 0.625 and β3 = 0.83 for the caudate; β2 = 0.72 and β3 = 1 for the putamen; β2 = 0.83 and β3 = 1.25 for the pallidum; and β2 = 1 and β3 = 0.25 for the amygdala. Second, the atlas images located between TSP − 3 mm and TSP + 3 mm were selected as training data. Third, segmentation training was performed on each target tissue to obtain the P(xj) coefficients. Finally, Eq. (6) was used to identify the target tissues.

As shown in Fig. 5, the SRLF method leaves some empty points (misclassified pixels) inside the target tissues, and the PSWV method performs poorly on target tissue boundary pixels. The proposed method effectively overcomes both shortcomings and produces a segmentation close to the target label image.

We used the four metrics to evaluate the proposed method, the SRLF method and the PSWV method; the results are shown in Fig. 6. Fig. 6(a,b) shows that the proposed method achieves the best Dsc and Precision, indicating the highest segmentation accuracy and the fewest falsely identified pixels. As shown in Fig. 6(c), the proposed method obtains the best Recall for the hippocampus, putamen and pallidum, and Recall similar to that of the PSWV method for the thalamus, caudate and amygdala. As shown in Fig. 6(d), the proposed method achieves the smallest HD for the thalamus, caudate, putamen and pallidum, and an HD similar to those of the SRLF and PSWV methods for the hippocampus and amygdala.

Discussion and Conclusion

In this section, we compare the performance of the proposed method to the related methods and state-of-the-art methods in deep brain tissue segmentation. We also introduce the limitations and future directions of the proposed method.

One disadvantage of the PSWV approach is that it fuses all extracted patches. Although the labels are weighted by patch similarity \({w}_{i}\), the fusion results are still affected by label frequency. Because of registration error, the tissue boundaries in the warped atlas labels can differ substantially from those in the target image, which makes the PSWV method unreliable for identifying boundary pixels. The SRLF method selects as few atoms as possible from the over-complete dictionary \({D}_{I}\) and represents PT as a linear combination of the selected atoms. Consequently, most patches are discarded, which leaves empty points inside the classified target tissue and reduces segmentation accuracy.

To overcome these shortcomings, we use pixel greyscale probability information to combine the fusion results of the two methods. Most segmentation errors in multi-atlas patch-based label fusion are concentrated at tissue boundaries. The pixel gradient at a tissue boundary is weak, but the grey values of different tissues still differ somewhat. Assigning each pixel value a probability of belonging to the target tissue can therefore effectively improve segmentation accuracy. As shown in Fig. 6, compared with the PSWV and SRLF methods, the proposed method obtained the best Dsc and Precision results and better or similar Recall and HD results.

We compared the proposed method with state-of-the-art methods on the IBSR dataset in terms of Dsc and standard deviation. As shown in Table 1, the proposed method outperformed both FIRST and FreeSurfer on all six deep brain tissues, and its overall mean Dsc was markedly higher: the mean Dscs were 0.818, 0.757 and 0.872 for FIRST, FreeSurfer and the proposed method, respectively. For the pallidum and amygdala, the proposed method obtained the highest Dsc values, 0.878, 0.876, 0.801 and 0.795 for the left pallidum, right pallidum, left amygdala and right amygdala, respectively. For the putamen, our method obtained the best left putamen result (Dsc = 0.903) and the same right putamen result as Xue's method (Dsc = 0.905). For the caudate and hippocampus, the proposed method obtained results similar to those of Kaisar's method. For the caudate, our method obtained Dscs of 0.885 and 0.898 for the left and right caudate, respectively, compared with 0.896 and 0.896 for Kaisar's method. For the hippocampus, our method obtained Dscs of 0.857 and 0.849 for the left and right hippocampus, respectively, compared with 0.851 and 0.851 for Kaisar's method. The proposed method obtained the third highest thalamus segmentation accuracy among all the evaluated methods. This is due to the non-standardized image intensities: with the same parameters, the thalamus segmentation results vary greatly between target images, so it is difficult to find parameters that fit all images. Among the deep brain tissues in Table 1, the amygdala had the lowest segmentation accuracy, because it is small in volume compared with the other structures and is registered less accurately.

In the segmentation tests on the deep brain tissues of the IBSR dataset, multi-atlas registration took 2.83 hours per image, training the pixel greyscale probability coefficients took 2.2–2.6 hours, and label fusion took around 15 seconds per image.

The limitations and future directions of the proposed method are as follows.

- (1)

The parameter settings of the GP-LF model directly affect the quality of the segmentation result. In this study, the same β parameters were used for a given target tissue in all images. In practice, because of differences between images and registration errors, the PSWV and SRLF fusion results for the same target tissue vary considerably across images: some images have high fusion values and others low ones, so it is difficult to find β values that suit all subjects. A better approach would be to learn different β values for different target images through extensive segmentation training and then select the optimal parameters according to the features of each target image.

- (2)

The optimal P(xj) values differ between images for the same target tissue. In this paper, we trained on the atlas images located between TSP − 3 mm and TSP + 3 mm to obtain an adaptive P(xj), but this P(xj) is not optimal for every image.

- (3)

The selection of atlas images and patches strongly affects the results of patch-based label fusion. In our tests, not all selected atlas images and patches were suitable; some atlases and patches were still dissimilar to the target image or to \(T{p}_{{x}_{j}}\), which reduced segmentation accuracy. Accurate atlas and patch selection models would therefore not only reduce segmentation time but also improve segmentation accuracy.

- (4)

Pixels with the same grey value at a tissue boundary may belong to different tissues, which makes them difficult to classify accurately. The supervoxel-based multi-atlas segmentation method25 may help address this problem and improve the segmentation results.

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Ivana, D., Bart, G. & Wilfried, P. Mri segmentation of the human brain: challenges, methods, and applications. Computational and Mathematical Methods in Medicine. 1–23(2015).

Sandra, G. et al. A review on brain structures segmentation in magnetic resonance imaging. Artificial Intelligence in Medicine. 73, 45–69 (2016).

Fischl, B. Freesurfer. Neuroimage. 62, 774–781 (2012).

Patenaude, B., Smith, S. M., Kennedy, D. N. & Jenkinson, M. A Bayesian model of shape and appearance for subcortical brain segmentation. NeuroImage. 56, 907–922 (2011).

Babalola, K. et al. An evaluation of four automatic methods of segmenting the subcortical structures in the brain. Neuroimage. 47, 1435–1447 (2009).

Heckemann, R. A., Hajnal, J. V., Aljabar, P., Rueckert, D. & Hammers, A. Automatic anatomical brain mri segmentation combining label propagation and decision fusion. Neuroimage. 33, 115–126 (2006).

Aljabar, P., Heckemann, R., Hammers, A., Hajnal, J. & Rueckert, D. Multi-atlas based segmentation of brain images: Atlas selection and its effect on accuracy. Neuroimage. 46, 726–738 (2009).

Wang, M. & Li, P. A Review of Deformation Models in Medical Image Registration. Journal of Medical and Biological Engineering. 39, 1–17 (2019).

Alven, J., Norlen, A., Enqvist, O. & Kahl, F. Uberatlas: fast and robust registration for multi-atlas segmentation. Pattern Recognition Letters. 80, 249–255 (2016).

Alchatzidis, S., Sotiras, A., Zacharaki, E. I. & Paragios, N. A discrete MRF framework for integrated multi-atlas registration and segmentation. International Journal of Computer Vision. 121, 169–181 (2017).

Isgum, I. et al. Multi-atlas-based segmentation with local decision fusion – application to cardiac and aortic segmentation in CT scans. IEEE Trans Med Imaging. 28, 1000–1010 (2009).

Sabuncu, M. R., Yeo, B. T., Leemput, K. V., Fischl, B. & Golland, P. A generative model for image segmentation based on label fusion. IEEE Trans Med Imaging. 29, 1714–1729 (2010).

Nie, J. & Shen, D. Automated segmentation of mouse brain images using multi-atlas multi-ROI deformation and label fusion. Neuroinformatics 11, 35–45 (2013).

Coupé, P. et al. Patch-based segmentation using expert priors: Application to hippocampus and ventricle segmentation. NeuroImage. 54, 940–954 (2011).

Wang, M., Li, P. & Liu, F. Multi-atlas active contour segmentation method using template optimization algorithm. BMC Medical Imaging. 19 (2019).

Lin, X. B., Li, X. X. & Guo, D. M. Registration Error and Intensity Similarity Based Label Fusion for Segmentation. IRBM. 40, 78–85 (2019).

Tang, Z., Ahmad, S., Yap, P. T. & Shen, D. Multi-atlas segmentation of MR tumor brain images using low-rank based image recovery. IEEE Trans. Med. Imaging. 37, 2224–2235 (2018).

Roy, S. et al. Subject-specific sparse dictionary learning for atlas-based brain MRI segmentation. IEEE J. Biomed. Health Inform. 19, 1598–1609 (2015).

Tong, T., Wolz, R., Coupé, P., Hajnal, J. V. & Rueckert, D. Segmentation of MR images via discriminative dictionary learning and sparse coding: Application to hippocampus labeling. NeuroImage. 76, 11–23 (2013).

Lee, J., Kim, S. J., Chen, R. & Herskovits, E. H. Brain tumor image segmentation using kernel dictionary learning. In Proc. 37th Annu. Int. Conf. IEEE EMBC, Milan, Italy, 658–661 (2015).

Liu, Y., Wei, Y. & Wang, C. Subcortical Brain Segmentation Based on Atlas Registration and Linearized Kernel Sparse Representative Classifier. IEEE Access. 7, 31547–31557 (2019).

Bai, W., Shi, W., Ledig, C. & Rueckert, D. Multi-atlas segmentation with augmented features for cardiac MR images. Medical Image Analysis. 19, 98–109 (2015).

Zikic, D., Glocker, B. & Criminisi, A. Encoding atlases by randomized classification forests for efficient multi-atlas label propagation. Medical Image Analysis. 18, 1262–1273 (2014).

Moeskops, P. et al. Automatic segmentation of MR brain images with a convolutional neural network. IEEE Trans. Med. Imaging. 35, 1252–1261 (2016).

Huo, J. et al. Supervoxel based method for multi-atlas segmentation of brain MR images. NeuroImage. 175, 201–214 (2018).

Xu, L. et al. Automatic labeling of MR brain images through extensible learning and atlas forests. Medical Physics. 44, 6329–6340 (2017).

Kushibar, K. et al. Automated sub-cortical brain structure segmentation combining spatial and deep convolutional features. Medical Image Analysis. 48, 177–186 (2018).

Kennedy, D. N. et al. CANDIShare: a resource for pediatric neuroimaging data. Neuroinformatics. 10, 319–322 (2012).

About The Creative Commons Licenses, http://creativecommons.org/about/licenses (2019).

Lemieux, L., Jagoe, R., Fish, D. R., Kitchen, N. D. & Thomas, D. G. T. A patient-to-computed-tomography image registration method based on digitally reconstructed radiographs. Medical Physics. 21, 1749–1760 (1994).

Zaffino, P., Ciardo, D., Raudaschl, P., Fritscher, K. & Spadea, M. F. Multi atlas based segmentation: should we prefer the best atlas group over the group of best atlases? Physics in Medicine and Biology. 63, 12NT01 (2018).

Acknowledgements

We thank the Center for Morphometric Analysis Internet Brain Segmentation Repository for providing the image data. This research was supported by the National Natural Science Foundation of China (No. 61572159), NCET (NCET-13-0756) and the Distinguished Young Scientists Funds of Heilongjiang Province (JC201302). The funding bodies did not have any influence on the collection, analysis, or interpretation of the data or on the writing of the manuscript.

Author information

Contributions

M.W. conceived the research. P.L. performed the experiments and analyses and wrote the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, M., Li, P. Label fusion method combining pixel greyscale probability for brain MR segmentation. Sci Rep 9, 17987 (2019). https://doi.org/10.1038/s41598-019-54527-x

DOI: https://doi.org/10.1038/s41598-019-54527-x