Abstract

The scalability and efficiency of numerical methods on parallel computer architectures is of prime importance as we march towards exascale computing. Classical methods like finite difference schemes and finite volume methods have inherent roadblocks in their mathematical construction to achieve good scalability. These methods are popularly used to solve the Navier-Stokes equations for fluid flow simulations. The discontinuous Galerkin family of methods for solving continuum partial differential equations has shown promise in realizing parallel efficiency and scalability when approaching petascale computations. In this paper an explicit modal discontinuous Galerkin (DG) method utilizing Implicit Large Eddy Simulation (ILES) is proposed for unsteady turbulent flow simulations involving the three-dimensional Navier-Stokes equations. A study of the method was performed for the Taylor-Green vortex case at a Reynolds number ranging from 100 to 1600. The polynomial order P = 2 (third order accurate) was found to closely match the Direct Navier-Stokes (DNS) results for all Reynolds numbers tested outside of Re = 1600, which had a normalized RMS error of 3.43 × 10−4 in the dissipation rate for a 603 element mesh. The scalability and performance study of the method was then conducted for a Reynolds number of 1600 for polynomials orders from P = 2 to P = 6. The highest order polynomial that was tested (P = 6) was found to have the most efficient scalability using both the MPI and OpenMP implementations.

Similar content being viewed by others

Introduction

Advances in modern computer hardware have enabled numerical computations to reach progressively larger scales. To handle the challenging and costly simulations, parallel computations have become widespread in both research and production Computational Fluid Dynamics (CFD) and other Computer-Aided Engineering (CAE) codes. To keep up with the demand for increasingly larger and more complex numerical models, the scalability and efficiency of a parallel implementation for a numerical discretization is an important factor. One way to improve the parallel efficiency of CFD software is to optimize the underlying code. Examples of these types of optimizations are the improvement of loop-level parallelism, serial efficiency of the code1, reducing the number of cache misses and optimizing the achievable memory bandwidth2.

Another possible path to improve the parallel efficiency is to consider the numerical method implemented in the software. One promising direction is the application of high-order methods for massively parallel CFD. In the CFD community, high-order methods are considered to be those which are third order and higher3. Low-order schemes are widely used in CFD, but there are applications for which they are considered insufficient, including turbulence, aeroacoustics, boundary layer flows, vortical flows, shock-boundary layer interactions and others4. For these types of flows, low-order methods require extremely small discretization scale lengths to accurately resolve the unsteady vortices over relevant length and time scales. This has led to a large amount of research in high-order methods aimed at solving physics problems which are not well suited to low-order methods. Outside of this “physics” argument for use of high-order methods, there is the issue of parallel scalability.

Since higher order polynomial approximations require more calculations to be carried out per element, it is expected that the scheme will exhibit a higher efficiency when higher order polynomials are used. The parallel algorithm requires a finite setup and communication time, which decreases its efficiency below the ideal linear speed-up. This overhead time depends on the scale of the parallel simulation, i.e. the number of parallel tasks or threads used. Since the higher order polynomials spend a longer time calculating the solution on a per degree of freedom basis, it is expected that the overhead time would be more negligible in comparison.

The objective of this paper is to demonstrate a scalable, parallel, high-order description of modal Discontinuous Galerkin (DG) elements for supersonic, turbulent boundary layer flows using Runge-Kutta explicit time marching. The spatial discretization scheme considered in the DG method can be made high-order by increasing the approximation order P of the interpolating polynomial. Polynomial approximations ranging from P = 2 to P = 6 are compared for a canonical problem of isotropic turbulence to study their parallel efficiency. In addition, the computational cost required to reach the same error as a lower-order polynomial is considered. This is an important metric to obtain the full picture of the computational cost of the different polynomial orders. Other authors have proposed using operation count instead of runtime comparisons, and found that for implicit solvers, high-order methods were more efficient than low-order ones5. Parallel scalability is important, but only if the underlying serial computational cost is not prohibitively expensive to the point where the benefit gained from a better scalability is lost. In addition to the isotropic turbulence, the method was also validated on a zero-pressure gradient supersonic Mach 2.25 turbulent boundary layer flow over a flat plate.

This paper is organized as follows. The next section describes the governing equations used in the study. Then an overview of the numerical method, including the DG spatial discretization, the numerical fluxes and the time integration is given. The ensuing section provides the background and results for the isotropic turbulence (Taylor-Green vortex) test case. Following that the results of the parallel scalability studies and performance comparisons of different polynomial orders are presented. Then, the turbulent boundary layer flow solution for a supersonic flow over a flat plate is documented. Finally the conclusions from these studies are summarized.

Governing Equations

To understand the fluid mechanics, one must appreciate the partial differential equations which govern fluid flow. This section describes these governing equations as well as other equations involved in this study.

Compressible Navier-Stokes equations

For a compressible Newtonian fluid, the multi-dimensional N-S equations in normalized conservative form can be written as

Here \(\bar{{\boldsymbol{\tau }}}\) denotes the viscous stress tensor which is given by Eq. (4). The term μ in the viscous stresses is the dynamic viscosity of the fluid and Sutherland’s law is used to define it. The term k denotes the thermal conductivity of the fluid with T being its temperature. This term comes from the Fourier’s Law of heat conduction. The thermal conductivity is obtained using the dynamic viscosity μ, Prandtl number (Pr) and specific heat (cp) of the fluid given by Eq. (5). The velocity vector is denoted by v, which includes the three components, u, v and w in streamwise (x), wall normal (y) and spanwise (z) directions, respectively.

Numerical Method

DG finite element was first presented by Reed and Hill6 to solve the neutron transport equations. Due to its inherent advantage of solving linear equation systems on an element-by-element basis, it has become one of the most promising computational technique to solve large equation systems with high parallel efficiency, even allowing the numerical formulation to approach an “embarrassingly parallel problem”. However, the next challenge was to solve the nonlinear systems of equations such as the hyperbolic conservation laws, which are prominent in most physical systems. For this, an explicit version of this method was devised7 which employed the use of Runge – Kutta time discretization with a Total Variation Diminishing in the Means (TVDM) and Total Variation Bounded (TVB) slope limiter. This method was called the RKDG method. This was extended to high order RKDG methods8 which showed P + 1 order of convergence for P order space discretization.

The development of DG methods for nonlinear hyperbolic systems occurred rapidly over the past two decades. The improvement of the computer architecture (for example, the advent of petascale computing machines) combined with the need to solve both hyperbolic and elliptic problems led to the extension of this method to convection-diffusion type problems. The first study of this form of equations was conducted on hydrodynamic models for semiconductor device simulations9,10. This was further studied for compressible Navier Stokes equations11 to achieve higher order of accuracy. It involved the simple breakdown of the second order equation into two first order equations with U and dU as independent variables and then solving the system using the original RKDG method. This method also known as the first Bassi – Rebay (BR1) method11 was further extended to achieve higher stability. This incorporated the explicit evaluation of the term dU without making it a new variable. This is also known as the second Bassi – Rebay (BR2) method12. There are numerous other methods13 to tackle these type of equation systems and can also be generalized as the Local Discontinuous Galerkin (LDG) methods14. It should also be noted that different methods have been implemented on the DG framework. Some of these methods include Spectral DG method and hp – adaptive methods. The first DG spectral method was conducted for elliptic problems15 and linear hyperbolic problems16. It was further studied for advection diffusion problems, compressible flow and complex geometries17,18,19. Implementation of adaptive methods in DG is straight forward. This is because there is no inter – element continuity requirement, which allows for simple changes of the element order based on the gradient. Lower orders are achieved by making the higher order terms zero. This method has been applied to both hyperbolic conservation laws20 and convection diffusion problems21,22.

The entire DG framework was implemented in an in-house code called the Multiscale Ionized Gas (MIG) flow code. This is a FORTRAN 90 modular code, which can be used to solve various problems like plasma drift diffusion equations23, hypersonic non-equilibrium flow24, magnetohydrodynamic equations25, and subsonic turbulent flow control26. The framework is parallelized via the message passing interface (MPI), which enables it to perform computations on multiple nodes on conventional supercomputing clusters. The sections ahead, will describe the space and time discretization for the Discontinuous Galerkin finite element framework, convergence study, implementation of slope limiters, and parallelization of the code.

Discontinuous Galerkin space discretization

To understand the discretization process for convection – diffusion problems, a generic scalar equation is chosen which can be extended to any equation system. This is given by

Where U denotes the conserved scalar variable, Finv and Fv denote the inviscid and viscous fluxes respectively and x ∈ Ω, which is the multidimensional domain. All the boundaries are considered periodic in this section. For an element, the approximate solution Uh (x, t) is represented by Eq. (8).

Where subscript K denotes the element, \({U}_{K}^{l}\) denotes the modal degrees of freedom of that element, φl denotes the basis function. Legendre polynomials are chosen as the local basis functions because of their property of L2 – orthogonality, which leads to a diagonal mass matrix and is beneficial when performing explicit calculations. The list of basis functions for a transformed coordinate system of \(x,y,z\in [-1,1]\) is provided in Table 1.

To obtain the weak form of the equation, the variable U is replaced by Uh and Eq. (6) is multiplied with the basis function φl. After integration by parts, Eq. (9) is obtained.

In Eq. (9), ne,K denotes the outward unit normal for the edge e (it can be a face or an edge) of element K. Figure 1 shows a representation of these elements. The element boundary space is denoted by Γ. For the terms in summation, where fluxes are to be evaluated at the element interfaces, the solution Uh is discontinuous and cannot be uniquely defined. Thus, the terms must be replaced by a locally Lipschitz, consistent, monotone flux to maintain the stability and convergence properties of the scheme with higher order of accuracy8. In Eq. (9), \({\mathop{F}\limits^{\rightharpoonup }}^{v}\) is a function of both U and ∇U, which implies that either ∇U needs to be evaluated as a new variable or treated explicitly. Detailed descriptions of the numerical integration, fluxes and terms are provided in the next two sections.

Numerical integration

All the integrals can be written in discrete form using Gauss – Legendre quadrature rules.

In Eq. (10) jac is obtained when transforming from global coordinate system to local coordinate system. Also for all the integrals shown, the basis functions vary with space, while the degrees of freedom vary in time as shown in Eq. (8). Since the basis functions are already in transformed space \({x^{\prime} }_{n}\) are the Gauss – Legendre points provided in Table 2. One should note that for multidimensional integration the single summation becomes multiple summations with quadrature points \({x^{\prime} }_{n}\) and weights wn being obtained via tensor product of one-dimensional weights and points.

Inviscid fluxes

As mentioned earlier, the discontinuity at the element interfaces requires the use of numerical fluxes. There are wide variety of numerical fluxes which satisfy the locally Lipschitz, monotone and consistent criteria27. However, the present work uses either Godunov flux or Local Lax-Friedrichs flux28. The later, also known as ENO-LLF, provides better shock capturing with improved accuracy. Although it is more diffusive than the Roe flux and the Godunov flux, its impact on the solution is insignificant for higher order approximations27. After replacing the inviscid flux in Eq. (9) with the numerical flux \({h}_{e,K}^{inv}\), the first summation term can be written as

The + and − states of the solution refer to the outside and inside solution along edge e as depicted in Fig. 1. The Godunov flux is given by

Eq. (12) can be interpreted as, if the neighbouring solution U+ is larger than the inside solution U− then choose the minimum flux \(({\rm{\min }}\,[{F}^{inv}({U}^{+}),\,{F}^{inv}({U}^{-})])\) otherwise choose the maximum of the two.

The Lax – Friedrichs flux is given by

In Eq. (13) αe,K is obtained by evaluating the largest absolute eigenvalue of the jacobian matrices for the outside and inside elements.

For Euler equations or Navier-Stokes equations the eigenvalues are u + a, u − a and u, where a is the speed of sound. In Eq. (14), \(\bar{U}\) is the mean solution of the inside or outside element depending on the λ being evaluated.

Viscous fluxes

The viscous terms in Eq. (9) can be modelled in numerous ways. Some of the common methods are LDG14, Bassi – Rebay (BR1 and BR2)11,12, Interior Penalty (IP)29, Baumann – Oden21 etc. type methods. A detailed comparison and insight on these methods can be found in Arnold et al.13. However, for brevity only the LDG, BR1 and BR2 schemes are described here.

The viscous fluxes include ∇U as an unknown which must be evaluated either a priori or along with the equation system. To evaluate ∇U, Eq. (6) is first changed to Eq. (15) and Eq. (16).

The same procedure as mentioned before is followed and finally equations like Eq. (9) are obtained.

It should be noted that in Eq. (17) and Eq. (18) θh denotes the approximate solution of the auxiliary variable θ as in the definition given in Eq. (8). As discussed earlier, the discontinuous interface requires the fluxes in the summation terms to be evaluated using a locally Lipschitz, consistent and monotone flux. Therefore the last terms in Eq. (17) and Eq. (18) are represented as Eq. (19) and Eq. (20).

The choice of numerical fluxes \({h}_{e,K}^{v}\) and \({h}_{e,K}^{\theta }\) gives rise to different methods.

Local discontinuous Galerkin method

The viscous numerical fluxes for this method can be written as

Using Eq. (21) and since \({\mathop{F}\limits^{\rightharpoonup }}^{v}\) for Eq. (16) is U, obtain the expressions for \({h}_{e,K}^{v}\) and \({h}_{e,K}^{\theta }\) are

A detailed discussion about the choice of constants c11 and c12, as well as the extension to multidimensional problems have been described by Cockburn and Shu14.

Bassi – Rebay method I

The numerical fluxes \({h}_{e,K}^{v}\) and \({h}_{e,K}^{\theta }\) are obtained by averaging the fluxes at the edge of the element and its neighbor. This is provided in Eq. (23) and Eq. (24)

The above method describes the BR1 scheme. However due to the method’s deficiencies, such as non – optimal accuracy for purely elliptic problems, spread stencil and increase in the number of degrees of freedom per element (specially for implicit algorithm)12, lead to the implementation of BR2 scheme.

Bassi – Rebay method II

This scheme uses the property that, the evaluation of a solution gradient inside the element is trivial and can be obtained using the gradients of the basis functions. However, for P = 0 elements and at interface discontinuities it is not trivial. To obtain ∇U without adding an extra equation a correction term R is added. This is known as the lift operator. After few mathematical manipulations12 Eq. (18) can be rewritten as Eq. (25).

Thus, we can write θh = ∇Uh + Rh, where Rh is defined like Eq. (8) and can be obtained using Eq. (26).

Using the global lifting operator leads to a non-compact stencil which can be avoided by using local lift operators rh. This is defined by

When performing volume integrals, global lift operators are used and for element boundary integrals, local lift operators are used. Using this scheme leads to a reduction in the number of degrees of freedom. The information from immediate neighbors is only required producing a compact stencil. This minimization of information needed from the local region means that the method spends most of its time computing local integrals, and the communication workload is far smaller than the computational workload. A scenario then arises where most of the calculations in each individual element are independent and thus almost “embarrassingly parallel” making them amenable to exploit maximum parallel efficiencies.

Temporal discretization

The choice of time integration depends on the problem in hand. For transient accuracy, high order time accurate schemes need to be implemented. Problems involving acoustic wave propagation fall in this category. This section will describe some of the common time integration methods implemented and their advantages and disadvantages.

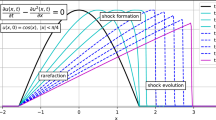

Explicit time integration

To solve the nonlinear hyperbolic conservation laws in a DG framework an explicit implementation of the method was introduced30. This overcame the issue of solving nonlinear problems on an element by element basis. However, an explicit method is restricted by the CFL condition. To improve the stability of the scheme a TVDM slope limiter was implemented31. However, this method was only first order accurate in time and the slope limiter affected the smooth regions of the solution reducing the spatial accuracy. This was finally overcome by using the RKDG method and a modified slope limiter which was second order in time and maintained the accuracy of the scheme in smooth regions7. This made the scheme stable for CFL ≤ 1/3. To show the explicit time integration Eq. (9) is written in a modified form given by Eq. (29).

The mass matrix [M], is diagonal for the present choice of basis functions. For the simple Euler explicit case, Eq. (29) can be written as Eq. (30) which yields first order accuracy in time.

Using the second order RKDG method the solution can be more time accurate. This is described in Eq. (31)

The RKDG method has been proven to give CFL ≤ 1/3 for P = 1 and CFL ≤ 1/5 for P = 2 case7. Although RKDG scheme has high parallelizability, being an explicit scheme it has CFL restrictions.

Implicit time integration

Since the problems studied are nonlinear in nature, the Newton’s method is employed to solve for the equation system. The goal here is to find a value iteratively, which would be closest to the actual solution. Thus, Eq. (29) is written as Eq. (32) for iteration q

To get the next time step solution Eq. (32) is discretized in time using the Euler Implicit algorithm to obtain Eq. (33).

Therefore, for q ≥ 1, Newton’s method can be applied to Eq. (33). It should be noted that when q = 1 in Eq. (33), \({U}_{K}^{l}({t}^{n+1,q-1})={U}_{K}^{l}({t}^{n})\).

Test Cases

Taylor green vortex

Background

This is one of the canonical problems studied for hydrodynamic turbulence. This has been extensively studied in literature to derive empirical and analytical relations in turbulent flow physics. Early in depth numerical investigation of this problem was done by Orszag32 and Brachet et al.33,34. This problem was also studied by Comte-Bellot and Corrsin35 experimentally as a grid turbulence problem. These studies have become the benchmark for turbulent code validation. Since then, different numerical methods36,37,38,39 have been used to improve or validate these studies. Results for different Reynolds number, mesh and spatial order of accuracy are compared and investigated. The domain size \(\,\Omega =(2\pi \times 2\pi \times 2\pi )\) with periodic boundaries on all faces. The initial conditions for this problem are

This problem is solved using RKDG method, which involves RK2 time marching and LDG scheme for viscous flux. Two types of inviscid fluxes are tested, namely Godunov flux and LLF flux. The mesh is uniform in all directions and the DOFs for an N3 mesh corresponds to \({N}^{3}\times {(P+1)}^{3}\). Although the cases can be run at different time step Δt, the solutions are obtained using Δt = 2.5 × 10−4, to have similar time diffusion. The time step is kept low since the Godunov flux requires more restrictive time stepping than the LLF flux. The simulations are run till t = 10. Three main parameters are used to study this case. These include the integrated kinetic energy Ek, kinetic energy dissipation rate ε and integrated enstrophy ζ. These parameters are given in Eq. (36). For incompressible flows ε and ζ can be related using the relation given in Eq. (37). It should be noted that evaluation of ε (ζ) requires additional degrees of freedom to reach the correct ε levels when compared to ε (Ek).

Effect of Reynolds number

To study the effect of Reynolds number (Re), the inviscid flux is kept as Godunov flux and a 603 (1803 degrees of freedom) mesh size is used. The third order accurate (P = 2) spatial accuracy is chosen. The Reynolds numbers tested are 100, 200, 400, 800 and 1600. The normalized Root Mean Square (RMS) error of \(\varepsilon ({E}_{k})\) in comparison with DNS data is given in Table 3. The norm error is evaluated using Eq. (38). The timestep is 10−3 sec and data is printed at every 250 steps within the 10 sec interval (N = 40) for all cases considered for Eq. (38). Except Re = 1600 all the other Reynolds number have results that are comparative to DNS results34. The profile of kinetic energy dissipation rate ε (Ek) is shown in Fig. 2. The dissipation rate is captured accurately by MIG DG ILES. However, in the next section it will be seen that using LLF inviscid flux has slightly more error than the Godunov flux due to its higher dissipation.

Energy dissipation rate at different Reynolds number using third order accurate DG solution on a 603 mesh compared with DNS results34.

Effect of inviscid numerical flux

To study the effect of numerical fluxes, the Godunov flux and LLF flux are tested for a 603 with P = 2 (1803 DOF), 453 with P = 3 (1803 DOF) and 363 with P = 4 (1803 DOF) mesh sizes. It should be noted that the total DOF is calculated by N3 × (P + 1)3. The Reynolds number for the cases here is kept at Re = 1600. The normalized RMS error of ε (Ek) in comparison with DNS data36 is given in Table 4. The dissipation rate has higher errors when the LLF scheme is used. The greater diffusive nature of LLF flux was also observed by Beck et al.40 when comparing with the Roe scheme. However, the differences are very low as the errors are two orders of magnitude lower than the variable value. It should be noted that although Godunov flux is more accurate due to its least dissipative nature, it creates larger oscillations which can result in backscatter and also requires a lower time step. Therefore, although LLF has higher numerical dispersion, it is preferable to be used with slightly higher degrees of freedom. For this problem using around 1.4 times the number of DOF in each direction matches the solutions for both the fluxes at P = 2. For higher orders, the differences in dissipation rate due to fluxes become negligible. This can be observed in Fig. 3 which depicts the similarity in solutions for the two fluxes at different degrees of freedom for a P = 2 and P = 4 case.

Effect of spatial order of accuracy

To study the effect of spatial order of accuracy LLF flux is chosen as the inviscid numerical flux. The problem is studied using orders P = 2, P = 3 and P = 4. The Reynolds number for the cases here is kept at Re = 1600. All the parameters mentioned in Eq. (36) and Eq. (37) are depicted in Fig. 4. Both ε (ζ) and ε (Ek) are compared to highlight the differences between ILES results and DNS results36 as well as to show that, capturing gradients in ILES requires more degrees of freedom. The DNS results are obtained using 13-point DRP scheme with 5123 grid. The solutions obtained using P = 2 have the largest error for the same DOF. This is a known property which is utilized in turbulent flow simulations using higher order methods. However, as shown in the previous paragraph, the differences between the fluxes are negligible.

Comparison of the MIG DG solution with published DNS results36. (A) Turbulent kinetic energy, (B) energy dissipation rate based on integral kinetic energy and (C) energy dissipation rate based on enstrophy.

To see if the solution converges, higher DOFs were compared to the DNS solution. This is depicted in Fig. 5. Although ε (Ek) has converged to the DNS solution, ε (ζ) has not converged yet. This behavior was also observed by DeBonis36 who performed a comparison between 4th, 8th and 12th order central finite difference schemes with a 13-point DRP scheme (DNS). Similar behavior has been found for DNS41 solutions using DG methods.

Energy spectrum

The kinetic energy spectrum for all the cases is plotted at t = 10 in Fig. 6. All the curves follow the standard turbulent spectrum of −5/3 slope. The differences between the spectrums for different order polynomials depicted in Fig. 6(A) are negligible. Note, the effect of flux is not significant on the energy spectrum.

Flow structures

The instantaneous iso-surface of Q – criterion (positive second scalar invariant of ∇u) colored with velocity magnitude is depicted in Fig. 7. The equation defining Q – criterion is provided in Eq. (39). The data corresponds to the simulation with P = 3 (DOF = 3203). The coherent structures keep breaking down into smaller structures as the time progresses and finally around t = 9 the flow becomes fully turbulent.

Parallel Algorithms

To parallelize the MIG code, open MPI was used and the code was tested at the University of Florida high performance computing center. All the tests were run on servers with Intel E5-2698 v3 processors with the capability to achieve HPL Rmax of 7.381 × 105 GFlops. The domain was decomposed lexicographically with equal elements in each processor. The solution time for N-S equations was studied for processor counts of 1, 8, 16, 32, 64, 128, 256 and 512. The parallel performance is studied by solving the time explicit N-S equations for the Taylor Green vortex isotropic turbulence problem. Two cases were tested with total number of elements, 323 (DOF = 5570560) and 643 (DOF = 44545480). A small number of elements was chosen to result in a partitioning scheme with significant communication time with respect to the calculations performed. The problem is run for 100 time steps to average out the total time duration and the all the tests are repeated three times.

Figure 8(A) shows that the speedup on a log-log plot is similar for both 323 and 643 cases up to 512 processors. The power data fit to 323 case shows a speedup slope of 0.94 while for 643 it shows 0.95. Based on the data fit the parallel speedup (speedup/ideal) efficiency ranges from 99% for 8 processors to 63% for 512 processors. In Fig. 8(B) the speedup is plotted on a linear scale and the 323 case starts to plateau due to increase in communication time between processors while the 643 case maintains a linear slope. The processors show different performances for different runs since each case is not run on the same server, which gives a deviation in speedup of up to 5%. The initial higher speedup for the 323 case compared to the 643 case is within this tolerance limit. Further improvements can be made by using non – blocking instead of blocking MPI send and receive commands. Also optimizing the domain decomposition can lower communication time.

The convergence study is shown in Fig. 9 compares the convergence rates for different orders of polynomials. As evident from the plots, the higher order methods show the higher convergence rates in agreement with theory.

A similar scaling study was performed on the Stampede2 machine at the Texas Advanced Computing Center. The study was performed on the new Intel Knights Landing architecture. This new hardware promises much better performance through improved memory bandwidth and larger cache memory per computational core.

The Knights Landing processor from intel consists of 36 active tiles each consisting of 2 processing computational cores, hence having a maximum total of 72 processing cores per compute node (Fig. 10). The cores are connected to each other via a two dimensional on-die ring type interconnect which can deliver an aggregate data bandwidth in excess of 700 gigabytes per second. Each tile containing 2 processing cores shares a 1-megabyte level 2 cache and each compute core has its individual L1 instruction and data caches of 32 kilobytes respectively. Additionally, each core has two vector processing units (VPUs) which allows for very fast floating-point arithmetic operations in parallel.

The local nature of the discontinuous Galerkin method makes it a prime candidate for peak performance on such a vectorized architecture. The fundamental idea behind the Galerkin method is the repeated interpolation and integral computations within each discretized element. As higher orders of the approximation polynomials are employed the amount of computations being performed on the data increases exponentially. This ideally suits the kind of architecture possessed by the Knights Landing processor which relies on bringing in chunks of data from higher level, slow memory like conventional RAM (Random Access Memory) to large caches less frequently and then working on them for longer periods of time.

Figures 11 and 12 show the scaling of different orders of polynomials with numerical accuracy via MPI and OpenMP implementations of the MIG code. It is quite evident from the initial results that the higher order polynomial solutions show the best promise of parallel scaling. The MPI scaling showed the most dramatic difference between the higher order polynomials. For 100 MPI tasks, the parallel efficiency was 56.6% for P = 2, 79.5% for P = 4 and 89.0% for P = 6. At 400 MPI tasks, the efficiency fell to 55.7% for P = 2 and 77.4% for P = 4. The OpenMP scaling showed much less of a difference between the three polynomial orders. The code was run for as many as 64 OpenMP threads on the Knights Landing processor. The highest order tested (P = 6) was marginally more efficient, with the biggest difference occurring as the number of threads was increased. For 64 threads, the efficiencies were 90.6%, 92.0% and 92.5% for P = 2, 4, and 6, respectively. Overall, the OpenMP efficiency was greater than the MPI efficiency for equivalent number of threads and tasks. As described earlier, improvements to the MPI implementation and domain decomposition method can improve the efficiency of the MPI scaling.

For scientific or an engineering problems one is interested in the eventual computational cost for a pre-specified level of acceptable error in the simulations. The combination of a better error convergence rate and superior scaling for higher order methods leads to the evaluation that for reasonably large numerical problem the computational cost for a specific level of numerical error will be the lowest for higher order methods.

Mach 2.25 Turbulent Boundary Layer Flow

The scheme described in the Numerical Method was used to simulate the zero pressure gradient developing turbulent boundary layer at Mach 2.25. This case serves as a good stepping stone for high speed turbulent flow simulations with higher freestream Mach numbers. The primary challenge in simulating high speed turbulent boundary layers is tied to the large temperature gradient that develops across the boundary layer caused by the increased influence of viscous dissipation (Spina et al. 1994).

The freestream conditions and the corresponding range of the momentum thickness-based Reynolds number \({{\rm{Re}}}_{\theta }={u}_{\infty }\theta /{\nu }_{\infty }\) (based on the freestream viscosity as opposed to the wall viscosity) for the present case are listed in Table 5. In order to transition the flow to turbulence, the flow is tripped using the method of Schlatter and Örlü (2012) through bypass transition. The tripping body force is in the wall-normal direction, and it is given by

The forcing function g(z,t) in Eq. (12) fluctuates as a function of time, and it also contains a random coefficient which varies in the spanwise direction. The full form of the forcing function is given in Schlatter and Örlü (2012).

The computational domain consists of \({N}_{x}\times {N}_{y}\times {N}_{z}=900\times 64\times 64\) finite elements. Within each element, a modal basis function representation is employed. Quadratic Legendre basis functions (p = 2) with third-order spatial accuracy are used to interpolate the solution. The grid in the direction normal to the wall is stretched, with the smallest grid spacing (based on the inner wall units) of Δy+ = 0.5 at the wall. In the spanwise and streamwise directions, the grid is uniform. The boundary conditions for the computational domain are as follows. The laminar boundary layer solution is used as an inflow boundary condition for the simulation. At the wall, the adiabatic and no-slip boundary conditions are prescribed. In the spanwise direction, symmetric boundary conditions are enforced. In the streamwise direction, a fringe region is added at the outlet to eliminate reflections from the outlet boundary. This concept has been successfully used in simulations of turbulent boundary layers in the past, e.g. Spalart and Watmuff42.

Instantaneous flow field

The features of the instantaneous flow are studied in Fig. 13, which gives the plot of the Q criterion iso-surfaces at the value of Q = 3. The iso-surfaces are colored by the magnitude of the streamwise velocity for a momentum thickness Reynolds number Reθ in the range of 400-1350. The plane below the iso-surfaces represents the flat plate. The flow is tripped and the initial coherent structures quickly break down into a fully turbulent flow. Asymmetric one-legged hairpin vortices can be observed along with the more typical structures. The flow in Fig. 13 is plotted after both the initial transients disappeared and the mean flow calculations were carried out, corresponding roughly to three flow-through times (the fluid convecting three times over the streamwise length of the plate).

Figure 14 shows the instantaneous normalized density, normalized streamwise velocity and temperature fields for the flow after two flow-through times. The slice location of the xy-plane corresponds to the center of the domain in the spanwise direction (z = 1.5 mm and k = 32). The height of the domain in the wall normal direction was found to be sufficient to avoid the reflections from the top boundary destroying the rest of the solution. A large-scale structure angle of about 45 degrees has been observed for this problem, along with a shallower 10 degree angle for structures closer to the wall. These angles can also roughly be seen in Fig. 14, despite the lesser level of resolution in this study in comparison with that of Poggie43.

The ratio of the freestream temperature to the wall temperature matches the expected value that can be obtained from a similarity solution of a compressible laminar boundary layer with the adiabatic wall boundary condition. The growth of the boundary layer is shown in Fig. 14(b) by plotting the boundary layer thickness.

Figure 15 shows the fluctuations of the normalized density, normalized streamwise velocity and temperature fields. The xy-plane slice location is identical to that in Fig. 14. The fluctuations are plotted to offer additional visualization of the turbulent flow field. The high intensity fluctuations in the boundary layer show packets of fluid which are hotter and lighter than the freestream fluid and which are pushed upward as the boundary layer grows.

In Fig. 16, the flow structures along a wall normal plane are visualized by plotting the velocity contours at the j = 15 plane (y ≈ 6 × 10−2 mm). The vortices are seen to produce low speed streaks which spread out as the flow becomes fully turbulent. The figure also shows that the fluid in the boundary layer is drawn upward away from the wall. The normalized density profile is plotted along various spanwise planes and shows the growth of the flow structures as the thickness of the boundary layer increases.

Mean Flow Field

Figure 17 shows the skin friction coefficient plotted against the momentum thickness Reynolds number in the range of Reθ = 700–1200. This range is chosen because the flow has already become fully turbulent. The skin friction decreases with increasing momentum thickness Reynolds number.

Figure 18 plots the mean velocity profiles for the turbulent boundary layer, scaled in both the inner and outer coordinates. For the inner wall coordinates, the mean velocity is plotted in the van Driest-transformed form26. The transformed velocity is given by

The transformed velocity is plotted at downstream locations of Reθ = 800–1100. The streamwise velocity profiles are seen to collapse reasonably well to the law of the wall in the viscous sublayer (indicated by a solid line in Fig. 18) and the buffer layer (dashed and dotted line in Fig. 18) for this particular case.

Figures 19 and 20 give the Reynolds stresses scaled by the wall shear stress. The transformed Reynolds stress is plotted using the inner coordinates in Fig. 19 and the outer coordinates in Fig. 20. The transformed Reynolds stress is calculated as

The Reynolds stresses are plotted at the downstream location of Reθ = 1000, which is not in close proximity to the outflow boundary but still sufficiently far from the flow tripping region. At this location, the peak value of the normal Reynolds stress occurs at approximately y+ = 11 (y/δ = 0.06). The wall normal and spanwise components of the Reynolds stress are smaller in comparison, and their peak values occur further away from the wall. After reaching their peak values, all the stresses decay with increasing y/δ sufficiently far away from the wall. The Reynolds stresses indicate that the majority of the turbulence is produced in the region of y+ = 10–100, fairly close to the wall.

Conclusions

A scalable, parallel, high-fidelity DG formulation was demonstrated for the test case of isotropic turbulent flow for a Taylor-Green vortex problem for Reynolds numbers ranging between 100–1600. The solutions for the DG ILES method were found to match up well with DNS results up to the Reynolds number of 1600, for which a higher resolution is necessary. Tests using the Godunov and LLF numerical fluxes showed negligible differences in the dissipation rates for polynomial orders beyond P = 2. The kinetic energy dissipation rate was found to converge to the DNS solution when a higher number of DOFs was used.

A Reynolds number of 1600 was then used to study the performance of different polynomial orders from P = 2 to P = 6 with regard to computational cost and scalability in parallel. The DG method demonstrated the advantages of the higher-order polynomials for parallel implementation. Namely, the higher-order polynomials showcased superior scalability and performance to achieve a given level of error over the range that was tested. The studies showed that increasing the order of the interpolating polynomial increased the parallel efficiency using both the MPI and OpenMP parallel implementations. The improvement in parallel efficiency was larger for the MPI implementation than for the OpenMP implementation. The MPI implementation showed improvements of 20–30% in parallel efficiency between P = 2 and P = 6 depending on the number of tasks. The difference between P = 2 and P = 6 for the OpenMP implementation was as small as 2%. It is not conclusive from this study whether this behaviour continues into higher order polynomials or if the efficiency saturates.

The same parallel framework was used to compute simulations of the development of a supersonic turbulent boundary layer at Mach 2.25. These computations employed nearly 33 million spatial degrees of freedom with the solution domain being approximated with quadratic Legendre polynomials. Numerical investigations into the physics such as the variation of the skin friction coefficient with the Reynolds number and characteristics of the Reynolds stress in the boundary layer are presented. These computations provide confidence in the capabilities of the numerical framework to perform more investigations to provide deeper physical insights in such phenomena in future work. The parallel scalability and efficiency of this framework suggests that such large studies can now be attainable in reasonable amount of time.

The focus of future studies is exploring polynomial orders beyond P = 6 to determine the diminishing returns on scaling when continuing to increase the polynomial order. In addition, domain decomposition will be performed by minimizing the surface area over which separate MPI tasks are required to communicate with each other. These changes should improve the scaling of the MPI implementation, which is not as optimized as the OpenMP scaling at the present time.

Data Availability

The data for the presented results is available and will be posted on a public link at APRG website.

Change history

21 January 2020

An amendment to this paper has been published and can be accessed via a link at the top of the paper.

References

Behr, M., Pressel, D. M. & Sturek, W. B. Comments on CFD code performance on scalable architectures. Comput. Methods Appl. Mech. Engrg. 263–277 (2000).

Gropp, W. D., Kaushik, D. K., Keyes, D. E. & Smith, B. F. High-performance parallel implicit CFD. Parallel Computing, 337–362 (2001).

Wang, Z. et al. High-order CFD methods: current status and perspective. International Journal for Numerical Methods in Fluids, 811–845 (2013).

Deng, X., Mao, M., Tu, G., Zhang, H. & Zhang, Y. High-Order and High Accurate CFD Methods and Their Applications for Complex Grid Problems. Commun. Comput. Phys. 1081–1102 (2012).

Huerta, A., Angeloski, A., Roca, X. & Peraire, J. Efficiency of high-order elements for continuous and discontinuous Galerkin methods. International Journal for Numerical Methods in Engineering, 529–560 (2013).

Reed, W. H., & Hill, T. R. Triangular mesh methods for the Neutron Transport equation. Los Alamos, NM: LA-UR-73-479 (1973).

Cockburn, B. & Shu, C. W. The Runge-Kutta local projection P1 - discontinuous Galerkin method for scalar conservation laws. ESAIM: Mathematical Modelling and Numerical Analysis 25(3), 337–361 (1991).

Cockburn, B. & Shu, C. W. TVB Runge-Kutta local projection discontinuous Galerkin finite element method for conservation laws. II. General framework. Mathematics of Computation 52(186), 411–435 (1989).

Chen, Z. & Cockburn, B. Analysis of a finite element method for the drift-diffusion semiconductor device equations. Numerische Mathematik 71(1), 1–28 (1995).

Chen, Z. A Finite element method for the quantum hydrodynamic model for semiconductor devices. Computers & Mathematics with Applications 31(7), 17–26 (1996).

Bassi, F. & Rebay, S. A high-order accurate discontinuous finite element method for numerical solution of the compressible Navier-Stokes equations. Journal of Computational Physics 131(2), 267–279 (1997).

Bassi, F., Crivellini, A., Rebay, S. & Savini, M. Discontinuous Galerkin solution of the Reynolds-averaged Navier-Stokes and k–ω turbulence model equations. Computer and Fluids 34(4), 507–540 (2005).

Arnold, D. N., Brezzi, F., Cockburn, B. & Marini, L. D. Unified analysis of discontinuous Galerkin methods for elliptic problems. SIAM Journal on Numerical Analysis 39(5), 1749–1779 (2002).

Cockburn, B. & Shu, C. W. The local discontinuous Galerkin method for time-dependent convection-diffusion systems. SIAM Journal on Numerical Analysis 35(6), 2440–2463 (1998).

Funaro, D. A multidomain spectral approximation of elliptic equations. Numerical Methods for Partial Differential Equations 2(3), 187–205 (1986).

Funaro, D. & Gottlieb, D. Convergence results for pseudospectral approximations of hyperbolic systems by a penalty-type boundary treatment. Mathematics of Computation 57(196), 585–596 (1991).

Hesthaven, J. S. & Gottlieb, D. A stable penalty method for the compressible Navier–Stokes equations: I. Open boundary conditions. SIAM Journal on Scientific Computing 17(3), 579–612 (1996).

Hesthaven, J. S. A Stable Penalty Method for the Compressible Navier–Stokes Equations: II. One-Dimensional Domain Decomposition Schemes. SIAM Journal on Scientific Computing 18(3), 658–685 (1997).

Hesthaven, J. S. A Stable Penalty Method for the Compressible Navier–Stokes Equations: III. Multidimensional Domain Decomposition Schemes. SIAM Journal on Scientific Computing 20(1), 62–93 (1998).

Bey, K. S., Oden, T. J. & Patra, A. A parallel hp-adaptive discontinuous Galerkin method for hyperbolic conservation laws. Applied Numerical Mathematics 20(4), 321–336 (1996).

Baumann, C. E. & Oden, T. J. A discontinuous hp finite element method for convection—diffusion problems. Computer Methods in Applied Mechanics and Engineering 175(3–4), 311–341 (1999).

Houston, P., Schwab, C. & Suli, E. Discontinuous hp-finite element methods for advection-diffusion-reaction problems. SIAM Journal on Numerical Analysis 39(6), 2133–2163 (2002).

Roy, S. & Gaitonde, D. Radio Frequency induced ionized collisional flow model for application at atmospheric pressures. Journal of Applied Physics 96(5), 2476–2481 (2004).

Bhatia, A., Roy, S. & Gosse, R. Effect of dielectric barrier discharge plasma actuators on non-equilibrium hypersonic flows. Journal of Applied Physics 116(16), 164904 (2014).

Das Gupta, A. & Roy, S. Discontinuous Galerkin Method for Solving Magnetohydrodynamic Equations. 53rd AIAA Aerospace Sciences Meeting. Kissimmee, Florida: AIAA (2015).

Das Gupta, A. & Roy, S. Three-dimensional plasma actuation for faster transition to turbulence. J. Phys. D: Appl. Phys. 50, 425201 (2017).

Cockburn, B. Discontinuous Galerkin Methods for Convection-Diffusion Problems. In T. J. Barth, & H. Deconinck (Eds), High-Order Methods for Computational Physics (pp. 69–224). Springer (2001).

Shu, C. W. & Osher, S. Efficient Implementation of Essentially Non-Oscillatory Shock-Capturing Schemes II. Journal of Computational Physics 83(1), 32–78 (1989).

Douglas, J. Jr. & Dupont, T. Interior Penalty Procedures for Elliptic and Parabolic Galerkin Methods. Computing Methods in Applied Sciences. Lecture Notes in Phys 58, 207–216 (1976).

Chavent, G. & Salzano, G. A finite element method for the 1D water flooding problem with gravity. Journal of Computational Physics 45(3), 307–344 (1982).

Chavent, G. & Cockburn, B. The local projection P0 P1 discontinuous-Galerkin finite element method for scalar conservation laws. ESAIM: Mathematical Modelling and Numerical Analysis 23(4), 565–592 (1989).

Orszag, S. A. Numerical simulation of the Taylor-Green vortex. In R. Glowinski, & J. L. Lions, Computing Methods in Applied Sciences and Engineering Part 2. Lecture Notes in Computer Science (Vol. 11, pp. 50–64). (Berlin, Heidelberg: Springer, 1974).

Brachet, M. E. Direct simulation of three-dimensional turbulence in the Taylor—Green vortex. Fluid dynamics research 8(1–4), 1–8 (1991).

Brachet, M. E. et al. Small-scale structure of the Taylor–Green vortex. Journal of Fluid Mechanics 130, 411–452 (1983).

Comte-Bellot, G. & Corrsin, S. Simple Eulerian time correlation of full-and narrow-band velocity signals in grid-generated, ‘isotropic’ turbulence. Journal of Fluid Mechanics 48(2), 273–337 (1971).

DeBonis, J. Solutions of the Taylor-Green vortex problem using high-resolution explicit finite difference methods. 51st AIAA Aerospace Sciences Meeting including the New Horizons Forum and Aerospace Exposition. Grapewine, Texas (2013).

Fureby, C. & Grinstein, F. F. Large eddy simulation of high-Reynolds-number free and wall-bounded flows. Journal of Computational Physics 181(1), 68–97 (2002).

Gassner, G. J. & Beck, A. D. On the accuracy of high-order discretizations for underresolved turbulence simulations. Theoretical and Computational Fluid Dynamics 27(3–4), 221–237 (2013).

Hickel, S., Adams, N. A. & Domaradzki, J. A. An adaptive local deconvolution method for implicit LES. Journal of Computational Physics 213(1), 413–436 (2006).

Beck, A. D., Gassner, G. J. & Munz, C. D. On the effect of flux functions in discontinuous Galerkin simulations of underresolved turbulence. In M. Azaïez, H. El Fekih, & J. Hesthaven, Spectral and High Order Methods for Partial Differential Equations - ICOSAHOM 2012. Lecture Notes in Computational Science and Engineering (Vol. 95, pp. 145–155). Springer, Cham (2014).

Chapelier, J. B., De La Llave Plata, M., Renac, F. & Lamballais, E. Evaluation of a high-order discontinuous Galerkin method for the DNS of turbulent flows. Computers & Fluids 95, 210–226 (2014).

Spalart, P. R. & Watmuff, J. H. Experimental and numerical study of a turbulent boundary layer with pressure gradients. Journal of Fluid Mechanics 249, 337–371 (1993).

Poggie, J. Compressible Turbulent Boundary Layer Simulations: Resolution Effects and Turbulence Modeling. AIAA Paper 2015–1983 (2015).

Acknowledgements

This work used the Extreme Science and Engineering Discovery Environment (XSEDE), which is supported by National Science Foundation grant number ACI-1548562. TH and SR acknowledge the support of this work by the AFRL Computational Sciences Center and the DoD HPCMP’s User Productivity Enhancement, Technology Transfer, and Training (PETTT) Program (Contract No: GS04T09DBC0017 through Engility Corporation).

Author information

Authors and Affiliations

Contributions

R.G. and S.R. conceived the research; T.H. and A.D. implemented the formulation and performed the numerical experiments; T.H., A.D. and S.G. made the figures; T.H., A.D.G., S.G. and S.R. analyzed the data and wrote the paper; R.G. and S.R. supervised the project.

Corresponding author

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Houba, T., Dasgupta, A., Gopalakrishnan, S. et al. Supersonic turbulent flow simulation using a scalable parallel modal discontinuous Galerkin numerical method. Sci Rep 9, 14442 (2019). https://doi.org/10.1038/s41598-019-50546-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-019-50546-w

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.