Abstract

Coastal planners and decision makers design risk management strategies based on hazard projections. However, projections can differ drastically. What causes this divergence and which projection(s) should a decision maker adopt to create plans and adaptation efforts for improving coastal resiliency? Using Norfolk, Virginia, as a case study, we start to address these questions by characterizing and quantifying the drivers of differences between published sea-level rise and storm surge projections, and by examining how these differences can impact efforts to improve coastal resilience. We find that assumptions about the complex behavior of ice sheets are the primary drivers of flood hazard diversity. Adopting a single hazard projection neglects key uncertainties and can lead to overconfident projections and downward-biased hazard estimates. These results highlight key avenues to improve the usefulness of hazard projections to inform decision-making such as (i) representing complex ice sheet behavior, (ii) covering decision-relevant timescales beyond this century, (iii) resolving storm surges with a low chance of occurring (e.g., a 0.2% chance per year), (iv) considering that storm surge projections may deviate from the historical record, and (v) communicating the considerable deep uncertainty.


Introduction

Coastal flood hazards are increasing in many regions around the world1,2. Decision makers are designing strategies to manage the resulting risks3,4,5,6,7,8,9,10. The design of such flood risk management strategies can hinge critically on flood hazard projections11,12. Decision makers face a potentially confusing array of flood hazard projections. These projections include scenarios without formal probabilistic statements (e.g.4,5,6,8,13), single probability density functions (e.g.14), and probabilistic scenarios (i.e., multiple probability density functions conditional on model assumptions) (e.g.1,15,16,17). Furthermore, the projections differ in crucial assumptions, for example, about the potential non-stationarity of storm surges and/or about future potential abrupt changes in ice-sheet dynamics15,16,18. Here, we synthesize and analyze published flood hazard projections. We hope that this synthesis can help to improve the understanding of what drives the apparent diversity of coastal flood hazard projections and, in turn, can help to improve the design of flood risk management strategies.

The synthesis and analysis of flood hazard projections is an area of active research with a rich body of excellent previous work. Relevant examples include the U.S. Army Corps of Engineers4,5,6, Tebaldi et al.19, Parris et al.13, Zervas20, Kopp et al.1, Hall et al.8, Kopp et al.15, Sweet et al.14, Wong and Keller16, Rasmussen et al.17, and Wong18. These studies have broken important new ground, but they are hard to compare because they differ in underlying assumptions and projection structure. Further, decision makers assess community vulnerability and design and implement flood risk management strategies at local to regional scales. Hence, we focus on a case study for the Sewell’s Point tide gauge in Norfolk, Virginia, USA, to be relevant to local-scale preparedness planning and risk management. We choose the city of Norfolk as a case study because it is prone to impacts from sea-level rise (SLR), nuisance flooding from high tides, heavy rainfall, and tropical and extra-tropical storms. Additionally, it is the location of an active U.S. Navy base (Naval Station Norfolk). Although we restrict this study to the city of Norfolk, the published flood hazard projections we analyze can be localized to other coastal cities by following our methodology. As a result, this study can provide useful insights to the broader community interested in local coastal protection.

We consider multiple future SLR scenarios and characterizations of storm surge generated from different approaches to represent a range of choices in the coastal assessment, planning, and decision-making process. We expand upon the current state of the art by assessing the differences between these projections, evaluating the potential consequences of these differences, and interpreting the results in a local coastal protection context. We compare eight studies of SLR1,4,5,6,8,13,14,15,16,17, four studies of storm surge6,18,19,20, and one storm surge analysis that is new to this work. We choose to compare these studies for three reasons: 1) they represent knowledge gained over a decade of research, 2) they integrate global SLR scenarios with regional factors, and 3) their values are intended to support stakeholder groups and communities in coastal preparedness planning and risk management. The overall goal of this work is to evaluate current scientific knowledge in order to identify and highlight current limitations and community needs relevant to real coastal preparedness planning and risk management processes.

Results

Sea-level rise projections

The SLR scenarios evaluated here follow two different methods (Fig. 1). The first method provides probabilistic projections of individual components of SLR for representative concentration pathways (RCP)21 or target temperature stabilization scenarios, which are then downscaled to the local level1,15,16,17. The second method, followed by the remaining studies, provides scenarios that describe plausible conditions across a broad range, representing the scientific knowledge at the time each report was developed4,5,6,8,13,14.

Probabilistic projections

Kopp et al.1, Kopp et al.15, Wong and Keller16, and Rasmussen et al.17 are examples of probabilistic projections of SLR, which can be localized to the Sewell’s Point tide gauge. These studies are all based on the framework from Kopp et al.1 with the exception of Wong and Keller16. As such, understanding the method and framework behind Kopp et al.1 is of great importance to flood risk management strategies. Although Kopp et al.1 provides a valuable step forward in developing local probabilistic projections based on individual SLR components, it faces limitations with respect to the projection of the complex behaviors of the ice sheets, as well as the consideration of stabilization targets. Relevant studies that expand on these aspects include Kopp et al.15, Wong and Keller16, and Rasmussen et al.17.

Kopp et al.1 defines a set of probabilistic global and local SLR projections constructed with RCP scenarios by modeling individual processes that contribute to local SLR. The individual components include oceanic processes, ice sheet melt, glacier and ice cap surface mass balance, land-water storage, and long-term, local, non-climatic sea-level change. To calculate global sea-level probability distributions, Kopp et al.1 employs 10,000 Latin hypercube samples from cumulative SLR contributions. Kopp et al.1 then localizes these projections (i.e., at tide gauge locations) by applying sea-level fingerprints22.
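The sampling and localization steps described above can be sketched as follows. This is a minimal illustration, not the Kopp et al.1 code. The component distributions, the fingerprint scale factors, and the local non-climatic trend are hypothetical placeholders. The components are treated as independent normal variables for simplicity, and the Latin hypercube is built directly with NumPy rather than with any particular library routine.

```python
import numpy as np
from scipy.stats import norm

# Hypothetical (mean, sd) of each component's contribution to global SLR in 2100, metres.
COMPONENTS = {
    "thermal_expansion": (0.25, 0.06),
    "glaciers": (0.15, 0.04),
    "greenland": (0.10, 0.05),
    "antarctica": (0.05, 0.08),
    "land_water": (0.04, 0.01),
}
# Hypothetical fingerprint factors: local change per unit of global change at the gauge.
FINGERPRINTS = {
    "thermal_expansion": 1.00,
    "glaciers": 1.05,
    "greenland": 1.10,
    "antarctica": 1.15,
    "land_water": 1.00,
}


def latin_hypercube(n, d, rng):
    """n points in [0, 1)^d with exactly one point per 1/n stratum in every dimension."""
    u = (rng.random((n, d)) + np.arange(n)[:, None]) / n  # row i lies in stratum i
    for j in range(d):
        u[:, j] = rng.permutation(u[:, j])  # shuffle strata independently per dimension
    return u


def sample_slr(n=10_000, local_trend=0.0, seed=0):
    """Return (global, local) SLR samples; local adds a non-climatic trend term."""
    rng = np.random.default_rng(seed)
    names = list(COMPONENTS)
    u = latin_hypercube(n, len(names), rng)
    global_total = np.zeros(n)
    local_total = np.full(n, float(local_trend))
    for j, name in enumerate(names):
        mean, sd = COMPONENTS[name]
        draws = norm.ppf(u[:, j], loc=mean, scale=sd)  # inverse-CDF transform
        global_total += draws
        local_total += FINGERPRINTS[name] * draws  # fingerprint-weighted local sum
    return global_total, local_total


global_slr, local_slr = sample_slr(local_trend=0.2)
print(np.percentile(local_slr, [5, 50, 95]).round(2))
```

Stratifying each component keeps the 10,000 draws spread evenly across every marginal distribution. The fingerprint weighting is the step that turns a global total into a site-specific one.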

Kopp et al.15 employs the same framework as Kopp et al.1, except that it replaces the Antarctic ice sheet (AIS) projections with those based on DeConto and Pollard23. The original AIS projections used in Kopp et al.1 rest on the simple assumption of constant acceleration that underlies expert-judgement-based projections. In contrast, the DeConto and Pollard23 approach represents processes that weaken buttressing ice shelves and hence accounts for marine ice cliff instability and hydrofracturing (for more details see23). However, this ensemble of AIS projections was developed using a simplified approach that samples key physical parameters from a set of values and integrates paleo-observations through a pass/fail test, rather than producing a probability distribution. Because Kopp et al.15 directly uses these AIS projections, the resulting SLR projections are potentially more conservative with respect to low probability AIS projections.

Wong and Keller16 employ two sets of simulated sea-level scenarios. One scenario assumes that fast Antarctic ice sheet dynamics (e.g., ice cliff instability and hydrofracturing) make no contribution, while the other assumes that fast dynamics are triggered. Wong and Keller16 emulate fast dynamics with a simplified approach that assumes a constant rate of disintegration once a critical temperature threshold is passed. For this study, we label the scenarios Wong and Keller16 FD (fast dynamics triggered) and Wong and Keller16 no FD (fast dynamics not triggered). Specifically, we use the results based on prior gamma distributions for the parameters that control the uncertain rate of disintegration and the threshold temperature that triggers fast dynamical disintegration.
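The threshold-triggered emulation described above can be sketched as below. This is a hypothetical illustration, not the BRICK implementation. The warming path and the gamma prior parameters are placeholders. The fast-dynamics contribution is zero until the first year the temperature exceeds the sampled threshold, and it then grows at the sampled constant rate.

```python
import numpy as np


def fast_dynamics_slr(years, temperature, t_crit, rate):
    """Cumulative AIS fast-dynamics SLR (m): zero until temperature first exceeds
    t_crit, then a constant disintegration rate (m/yr) from that year onward."""
    exceeded = temperature > t_crit
    if not exceeded.any():
        return np.zeros(len(years))
    t_start = years[np.argmax(exceeded)]  # first year above the threshold
    return np.where(years >= t_start, rate * (years - t_start), 0.0)


def sample_fast_dynamics(n=1000, seed=0, year=2100):
    """Draw (threshold, rate) from hypothetical gamma priors; return SLR in `year`."""
    rng = np.random.default_rng(seed)
    years = np.arange(2000, 2201)
    temperature = 1.0 + 0.03 * (years - 2000)  # hypothetical warming path, deg C
    t_crit = rng.gamma(shape=5.0, scale=0.6, size=n)  # placeholder prior, mean 3.0 C
    rate = rng.gamma(shape=2.0, scale=0.005, size=n)  # placeholder prior, mean 0.01 m/yr
    idx = np.searchsorted(years, year)
    return np.array([fast_dynamics_slr(years, temperature, tc, r)[idx]
                     for tc, r in zip(t_crit, rate)])


fd_2100 = sample_fast_dynamics()
print(f"nonzero fast-dynamics SLR by 2100 in {np.mean(fd_2100 > 0):.0%} of samples")
```

The "no FD" case corresponds to dropping this term entirely. The "FD" case keeps it, so higher warming paths trigger it earlier and produce larger contributions.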

Wong and Keller16 use a simple mechanistically motivated emulator to project coastal flooding hazards (BRICK: Building Blocks for Relevant Ice and Climate Knowledge model v0.2)24. The BRICK model simulates global mean surface temperature, ocean heat uptake, thermal expansion, changes in land-water storage, and ice melt from the AIS, Greenland ice sheet, and glaciers and ice caps. Wong and Keller16 calibrate this model to observational records (paleoclimate and instrumental data) using a Bayesian approach. We use this model to extend and derive localized sea-level projections to the Sewell’s Point tide gauge (see details in Methods).

Rasmussen et al.17 models local relative sea level using the Kopp et al.1 framework (as described above). The authors construct alternative ensembles that meet global mean surface temperature (GMST; relative to 2000) stabilization target scenarios. These scenarios stabilize warming at 1.5, 2.0, and 2.5 °C above pre-industrial levels, coinciding with targets identified in the Paris Agreement17,25,26. To ensure each scenario meets the stabilization target criteria, only the models that have a 21st century increase in GMST (extrapolated from the 2070 to 2090 trend) of 1.5, 2.0, and 2.5 °C (±0.25 °C) are used to create the ensembles. Scenarios beyond 2100 are ensembles that undershoot the target temperature with the exception of the 2.5 °C scenario.
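The ensemble selection described above can be sketched as follows. This is a minimal illustration, not the Rasmussen et al.17 code. It fits a linear trend to 2070–2090 for each member, extrapolates it to 2100, and keeps the members within ±0.25 °C of the target. The sketch assumes that the warming metric and the target share one baseline. The ensemble of linear warming paths is hypothetical.

```python
import numpy as np


def extrapolated_2100_warming(years, gmst):
    """Fit a linear trend to 2070-2090 GMST and extrapolate it to 2100."""
    mask = (years >= 2070) & (years <= 2090)
    slope, intercept = np.polyfit(years[mask], gmst[mask], 1)
    return slope * 2100 + intercept


def select_members(years, ensemble, target, tol=0.25):
    """Indices of ensemble members whose extrapolated warming is within target +/- tol."""
    warming = np.array([extrapolated_2100_warming(years, m) for m in ensemble])
    return np.flatnonzero(np.abs(warming - target) <= tol)


years = np.arange(2000, 2101)
# Hypothetical ensemble: linear warming paths with different rates (deg C per year).
ensemble = np.array([r * (years - 2000) for r in (0.010, 0.015, 0.020, 0.025)])
for target in (1.5, 2.0, 2.5):
    print(target, select_members(years, ensemble, target))
```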

Plausible scenarios

The U.S. Army Corps of Engineers4,5,6, Parris et al.13, Hall et al.8, and Sweet et al.14 all adopt an approach of providing a broad range of future conditions based on published studies. These studies linearly extrapolate the historical tide gauge rate for the lowest scenario and use a global mean SLR model to represent non-linear scenarios. Specifically, they use a quadratic global mean SLR model3 in time (modified to begin in the year 2000 and to project in feet) for eustatic SLR.
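The quadratic-in-time model described above has the form E(t) = a·t + b·t², with t measured in years since the base year. The sketch below solves for the acceleration term b so that each curve reaches a chosen rise in 2100, and reports the curve in feet. The 1.7 mm/yr linear term and the target rises are assumptions for illustration, not values taken from any one of the cited reports.

```python
import numpy as np

M_TO_FT = 1.0 / 0.3048  # exact definition of the international foot


def quadratic_b(target_rise_m, linear_rate=0.0017, base_year=2000, end_year=2100):
    """Acceleration term b such that a*t + b*t**2 equals the target rise at end_year."""
    t = end_year - base_year
    return (target_rise_m - linear_rate * t) / t**2


def quadratic_slr_ft(years, target_rise_m, linear_rate=0.0017, base_year=2000):
    """Eustatic SLR (ft) relative to base_year from the quadratic-in-time model."""
    t = np.asarray(years, dtype=float) - base_year
    b = quadratic_b(target_rise_m, linear_rate, base_year)
    return (linear_rate * t + b * t**2) * M_TO_FT


# Hypothetical end-of-century targets (m) for illustration.
for target in (0.5, 1.0, 1.5, 2.0):
    print(target, quadratic_slr_ft([2050, 2100], target).round(2))
```

For targets below linear_rate × 100 yr (0.17 m here), b would be negative. The lowest scenarios in these studies are instead linear extrapolations of the tide gauge record.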

The U.S. Army Corps of Engineers4,5,6 studies provide three scenarios of relative SLR: 1) a low scenario based on a linear extrapolation of the historical tide gauge rate, 2) an intermediate scenario, and 3) a high scenario (details on downscaling are provided in Methods, Supplementary Table 1).

Parris et al.13 expands on the research conducted in the U.S. Army Corps of Engineers4,5,6 by adding a fourth scenario and modifying scenarios based on scientific research of ocean warming and ice sheet loss (Supplementary Table 1). The highest scenario derives from the ocean warming estimates in the Meehl et al.27 global SLR projections along with the maximum estimates of glacier and ice sheet loss in Pfeffer et al.28. The intermediate-high scenario is derived from the average of high end, semi-empirical, global SLR projections29,30,31,32. The intermediate-low scenario is based on the B1 emissions scenario global SLR projection from Meehl et al.27 and lastly, the lowest scenario is a linear extrapolation of the historical SLR rate from 20th century tide gauge records33 (details on downscaling in Methods, Supplementary Table 1).

Hall et al.8 uses the same low and high scenarios as Parris et al.13, but proposes intermediate scenarios that subdivide the range in 0.5 m (1.6 ft) increments (Supplementary Table 1). Equally spaced subdivisions are chosen because of the imprecise nature of estimating future SLR, the associated uncertainties, and the fact that this information is used for vulnerability, impact, and risk management purposes. Unfortunately, the downscaled projections, calculated by Monte Carlo resampling of fingerprints from Perrette et al.34 and Kopp et al.1, are not publicly available due to the sensitive nature of the data. Instead, we downscale projections following the approach of the U.S. Army Corps of Engineers4,5,6 (details in Methods).

Sweet et al.14 provides an update of scenarios based on the National Research Council3 global mean SLR model (Supplementary Table 1). These scenarios include the same intermediate scenarios as in Hall et al.8, as well as an additional extreme case scenario and an updated low scenario. The upward revision of the low scenario is based on the 3 mm/yr global mean sea-level rate measured from tide gauges and satellite altimeters over the past quarter century33,35,36,37,38,39. Sweet et al.14 adds a worst-case scenario to account for potential acceleration of ice sheet mass loss from physical feedbacks23 and the growing number of studies with global mean sea level that exceeds 6.6 ft by 21001,40,41,42,43,44,45,46.

To project global and regional SLR, Sweet et al.14 follows the Kopp et al.1 framework. Specifically, Sweet et al.14 drives global and regional projections with RCP2.6, RCP4.5, and RCP8.5 and produces 20,000 Monte Carlo samples for each emissions scenario. Regional sea levels (relative to the year 2000) are projected on a 1-degree grid accounting for locations of the tide gauges. At each grid cell, the SLR scenarios are adjusted to account for shifts in oceanographic factors (e.g., circulation patterns), glacial isostatic adjustment from the melting of land-based ice, and non-climatic factors. Sweet et al.14 then combines the results from each emissions scenario and divides them into subsets according to the six scenarios. These subset distributions are not equal in sample size.
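The pooling and subsetting step can be sketched as follows. The six 2100 global mean targets (0.3 to 2.5 m) follow the Sweet et al.14 scenario set. The ±0.1 m window, the synthetic lognormal ensembles standing in for the three RCP runs, and the local amplification factor are all hypothetical. The subset sizes come out unequal because the pooled samples are not spread evenly across the targets.

```python
import numpy as np

# Global mean SLR in 2100 (m) defining each scenario.
SCENARIOS_M = {
    "Low": 0.3, "Intermediate-Low": 0.5, "Intermediate": 1.0,
    "Intermediate-High": 1.5, "High": 2.0, "Extreme": 2.5,
}


def subset_by_scenario(gmsl_2100, local_values, half_width=0.1):
    """Assign pooled samples to a scenario when their 2100 GMSL falls in
    [target - half_width, target + half_width); unassigned samples are dropped."""
    subsets = {}
    for name, target in SCENARIOS_M.items():
        mask = (gmsl_2100 >= target - half_width) & (gmsl_2100 < target + half_width)
        subsets[name] = local_values[mask]
    return subsets


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic stand-ins for three emissions-scenario ensembles, 20,000 samples each.
    pooled = np.concatenate([rng.lognormal(np.log(m), 0.35, 20_000)
                             for m in (0.45, 0.6, 0.85)])
    local = 1.3 * pooled  # hypothetical local amplification of global SLR
    for name, values in subset_by_scenario(pooled, local).items():
        print(f"{name}: n = {values.size}")
```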

Storm surge projections

We compare stationary (i.e., not time varying) storm surge projections from four studies6,18,19,20 to historical observations and projections from an alternative model discussed below (details in Methods). Additionally, we compare stationary storm surge values to non-stationary values in the year 2065. These values are available to decision makers for the Sewell’s Point tide gauge location, are relative to the current NOAA national tidal datum epoch (NTDE; 1983–2001) local mean sea level (MSL), and are compared to historical observations47.

Zervas20 analyzes monthly mean highest water levels over the period 1927–2010. To remove the longer-term signal, Zervas20 linearly detrends the data by removing the mean sea-level trend (based on data up to 2006), referenced to the NTDE midpoint. These detrended monthly extremes are used to obtain the annual block maximum (the maximum observation in each year) if a year has four or more months of data; for a year with fewer than four months of data, no annual block maximum is estimated. Zervas20 fits the annual block maxima to a Generalized Extreme Value (GEV) distribution using the extRemes R package48,49 to estimate the location, scale, and shape parameters. Using the maximum likelihood estimate of the GEV parameters and a range of exceedance probabilities, Zervas20 approximates flood return levels with a 95% confidence interval.
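The block-maxima workflow described above can be sketched in Python with SciPy. The original analysis uses the extRemes R package; this is only a stand-in. The sketch detrends monthly extremes relative to the 1992 NTDE midpoint, keeps annual maxima for years with at least four months of data, fits a GEV by maximum likelihood, and reads off return levels. The trend rate and the synthetic data are hypothetical, and confidence intervals are omitted. Note that SciPy's GEV shape parameter `c` has the opposite sign of the usual ξ.

```python
import numpy as np
from scipy.stats import genextreme

NTDE_MIDPOINT = 1992.0  # midpoint of the 1983-2001 National Tidal Datum Epoch


def detrend(time_years, values, rate):
    """Remove a linear mean-sea-level trend (units per year) referenced to the NTDE midpoint."""
    return values - rate * (time_years - NTDE_MIDPOINT)


def annual_block_maxima(years, monthly_max, min_months=4):
    """Annual maximum of detrended monthly extremes, skipping years with < min_months values."""
    maxima = {}
    for y in np.unique(years):
        vals = monthly_max[(years == y) & ~np.isnan(monthly_max)]
        if vals.size >= min_months:
            maxima[int(y)] = float(vals.max())
    return maxima


def gev_return_levels(block_maxima, return_periods):
    """MLE GEV fit; the T-year return level is the 1 - 1/T quantile of annual maxima."""
    c, loc, scale = genextreme.fit(np.asarray(block_maxima, dtype=float))
    p_exceed = 1.0 / np.asarray(return_periods, dtype=float)
    return genextreme.isf(p_exceed, c, loc=loc, scale=scale)


if __name__ == "__main__":
    rng = np.random.default_rng(3)
    month_years = np.repeat(np.arange(1927, 2011), 12)
    t = month_years + (np.tile(np.arange(12), 84) + 0.5) / 12  # decimal year per month
    raw = rng.gumbel(1.0, 0.3, t.size) + 0.0045 * (t - NTDE_MIDPOINT)  # synthetic data
    monthly = detrend(t, raw, rate=0.0045)
    bm = annual_block_maxima(month_years, monthly)
    print(gev_return_levels(list(bm.values()), [10, 50, 100]).round(2))
```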

The U.S. Army Corps of Engineers6 study uses the same historic monthly extreme water level values as Zervas20, but analyzes a shorter time period from 1927 to 2007. Instead of following the GEV approach laid out in Zervas20, the U.S. Army Corps of Engineers6 study follows a percentile statistical function50 and only presents return periods that are within the time frame of the data record. For instance, they do not present the 100-yr return period for Sewell’s Point tide gauge because the data record is less than 100 years in length.

Tebaldi et al.19 uses a combination of hourly (1979–2008) and monthly (1959–2008) data. Assuming the long-term trends in local sea level are linear, Tebaldi et al.19 detrends the hourly data using a linear model fit to the monthly data. These detrended hourly values are used to compute the daily maxima and to perform a peak-over-threshold (POT) analysis. Tebaldi et al.19 performs a POT analysis by selecting a threshold corresponding to the 99th percentile and identifying daily values exceeding that threshold. To avoid counting a storm twice, Tebaldi et al.19 uses a 1-day declustering timescale identifying the maximum value among consecutive extremes. The exceedance values identified in the POT analysis are fit to a Generalized Pareto distribution (GPD) for parameter estimation. Using the maximum likelihood estimate of the GPD parameters, Tebaldi et al.19 computes flood return levels and return periods with a 95% confidence interval.
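The peak-over-threshold steps described above can be sketched as follows. The sketch computes daily maxima from detrended hourly data, sets the threshold at the 99th percentile, and keeps the peak of each run of exceedances. It then fits a GPD to the excesses by maximum likelihood and computes Poisson-GPD return levels. It is a hypothetical illustration, not the Tebaldi et al.19 code. The run-based declustering rule is one common interpretation of a "declustering timescale", the data are synthetic, and confidence intervals are omitted. Setting `run_days=3` gives the longer window used by Wong18.

```python
import numpy as np
from scipy.stats import genpareto


def daily_maxima(hourly):
    """Daily maxima from a detrended hourly series whose length is a multiple of 24."""
    return np.asarray(hourly, dtype=float).reshape(-1, 24).max(axis=1)


def decluster(days, values, threshold, run_days=1):
    """Peak of each cluster of threshold exceedances; a new cluster starts when the
    gap between consecutive exceedance days is larger than run_days."""
    idx = np.flatnonzero(values > threshold)
    peaks, start = [], 0
    for k in range(1, idx.size + 1):
        if k == idx.size or days[idx[k]] - days[idx[k - 1]] > run_days:
            peaks.append(values[idx[start:k]].max())
            start = k
    return np.array(peaks)


def gpd_return_levels(peaks, threshold, n_years, return_periods):
    """Poisson-GPD return levels: u plus the GPD excess quantile at exceedance 1/(lambda*T)."""
    xi, _, sigma = genpareto.fit(peaks - threshold, floc=0)  # MLE, location fixed at 0
    lam = peaks.size / n_years  # mean number of clusters per year
    p = 1.0 / (lam * np.asarray(return_periods, dtype=float))  # must be < 1
    return threshold + genpareto.isf(p, xi, loc=0, scale=sigma)


if __name__ == "__main__":
    rng = np.random.default_rng(7)
    hourly = rng.gumbel(0.3, 0.12, 30 * 365 * 24)  # synthetic detrended hourly levels
    daily = daily_maxima(hourly)
    u = np.quantile(daily, 0.99)  # 99th-percentile threshold
    peaks = decluster(np.arange(daily.size), daily, u, run_days=1)
    print(gpd_return_levels(peaks, u, n_years=30, return_periods=[10, 100, 500]).round(2))
```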

Wong18 analyzes 86 years (1928–2013) of hourly data from the tide gauge to generate storm surge projections. First, Wong18 detrends the data by subtracting a moving window 1-year average and calculates the daily maximum sea levels with the detrended data. Like the analysis in Tebaldi et al.19, Wong18 uses the 99th percentile as the threshold for extreme events. However, Wong18 differs by using a declustering timescale of 3 days to identify the maximum value among consecutive extremes. The exceedance values are then fit to a GPD model for parameter estimation using a Bayesian calibration approach with an adaptive Metropolis Hastings algorithm, where non-stationarity is incorporated into the parameters. Non-stationarity is incorporated using several covariates: time, sea level, global mean temperature, the North Atlantic Oscillation (NAO) index, and a combination of all the covariates generated by applying Bayesian model averaging.
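One way a covariate can enter a Poisson-GPD model is sketched below. In this sketch the exceedance rate λ is linear in the covariate, the scale σ is log-linear, and the shape ξ is held constant. This parameterization and all coefficient values are hypothetical placeholders, not the calibrated Wong18 posterior, and the Bayesian calibration step is not shown. The sketch only illustrates how a covariate value, such as an assumed temperature anomaly in 2065, shifts the 100-yr return level relative to the stationary case.

```python
import numpy as np


def ns_return_level(T, x, threshold, lam0, lam1, log_sigma0, log_sigma1, xi):
    """T-year return level of a Poisson-GPD model whose rate and scale depend on
    covariate x: lambda = lam0 + lam1*x, sigma = exp(log_sigma0 + log_sigma1*x)."""
    lam = lam0 + lam1 * x
    sigma = np.exp(log_sigma0 + log_sigma1 * x)
    if lam * T <= 1:
        raise ValueError("lambda * T must exceed 1")
    if abs(xi) < 1e-9:  # exponential-excess (xi -> 0) limit
        return threshold + sigma * np.log(lam * T)
    return threshold + sigma / xi * ((lam * T) ** xi - 1.0)


# Hypothetical coefficients (metres; covariate = temperature anomaly in deg C).
params = dict(threshold=1.0, lam0=2.0, lam1=0.3,
              log_sigma0=np.log(0.2), log_sigma1=0.1, xi=0.05)
stationary = ns_return_level(100, 0.0, **params)
future = ns_return_level(100, 1.5, **params)  # covariate value assumed for 2065
print(f"100-yr level: {stationary:.2f} m (x=0) vs {future:.2f} m (x=1.5)")
```

Setting `lam1` and `log_sigma1` to zero recovers a stationary model, which makes the stationary and non-stationary estimates directly comparable.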

Differences in flood hazard projections

We characterize the differences in published SLR projections for the city of Norfolk, VA. The localized SLR projections become less certain and increasingly diverge as time goes on (Fig. 2). The divergence between projections becomes more apparent with those based on high emissions scenarios and those accounting for uncertainty (Fig. 2c,f,i,l). It is especially noticeable in the year 2100 when comparing projection modes (Fig. 2l). These differences can not only be traced back to the assumptions made, but they can also impact coastal preparedness planning.

Fig. 2. Comparison of localized sea-level rise projections for Norfolk, VA. Panels from left to right are projected with higher emissions scenarios (increasing in shade), and panels from top to bottom increase in time from 2030 to 2100. “FD” refers to fast Antarctic ice sheet dynamics; blocks depict the 5, 25, 50, 75, and 95% quantiles for Sweet et al.14.

Accounting for ice sheet feedback processes increases sea-level rise projections

In the 21st century, local SLR projections differ depending on the assumptions about ice sheet processes (Figs 2 and 3a). Studies that incorporate ice sheet feedback processes project greater SLR than those that do not. Compare, for example, the 95th percentile in 2100 of Wong and Keller16 FD to that of Wong and Keller16 no FD. Additionally, compare the 95th percentile in 2100 of Kopp et al.15 to that of Kopp et al.1, and that of Sweet et al.14 (2.5 m) to Sweet et al.14 (2.0 m) (Fig. 3a). In all three cases, the projections incorporating ice sheet feedback processes project higher SLR by the year 2100, by roughly 1.7 to 4.5 ft (comparing the 95th percentiles). Moreover, the divergence between studies with and without feedback processes grows over time and accelerates in the latter half of the century. These projections indicate that ice sheets play a small role in the projected SLR contributions during the first half of the century and a larger role in the second half. This change occurs when anthropogenic greenhouse gas emissions (particularly under high emissions scenarios) trigger ice-cliff and ice-shelf feedback processes in the AIS. The results in DeConto and Pollard23 suggest that the role of ice sheets in SLR contributions will continue to grow in the centuries following 2100. Hence, it is important to account for the individual components comprising SLR, in addition to ice sheet feedback processes, because these components interact on different timescales.

Fig. 3. Comparison of different assumptions between sea-level projections. Panel (a) compares 90% credible/confidence intervals of high scenario projections that differ with respect to ice sheet assumptions. Opaque polygons with a dashed border represent projections accounting for ice sheet feedbacks. The arrows highlight the divergence between projections from the same model that differ by ice sheet assumptions. Panel (b) compares the timescale of projections. The gray block symbolizes the potential design life of infrastructure built in 2020.

Length of projection time can impact long-term adaptation strategies

Of the evaluated studies, half focus on timescales of 110 years or less (not counting our extension of the Wong and Keller16 projections to 2200) (Fig. 3b). This lack of information can pose problems for the design of coastal adaptation strategies. For instance, the U.S. Army Corps of Engineers typically designs projects to last for 20 to 100 years6. Yet infrastructure often remains in service past its original design life due to continued operation and maintenance6. Consider, for example, a project designed in the year 2020 with a design life of 100 years. This project could extend well past the year 2120 and would require SLR information for at least 20 years past 2100 for decision making (Fig. 3b). Hence, providing information about SLR beyond 2100 has the potential to improve the robustness and resilience of infrastructure as well as of long-term coastal adaptation strategies. However, long-term projections should be treated with caution if they are based on models with simplified ice dynamics.

Lack of information about storm surge analysis can lead to surprises

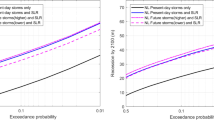

Areas prone to storm surge like Norfolk also require information about the frequency of extreme water-level events when defining coastal vulnerability. In particular, analyses resolving these extreme events, especially long return periods, require long records of data (70+ years) to stabilize estimates51. Our results are consistent with this conclusion. For example, the projections based on a 49 year record in Tebaldi et al.19 produce a low bias for long return periods in comparison to the observations, our model, and other studies, which are all based on records of 80 to 90 years in length6,18,20 (Fig. 4a).

Fig. 4. Comparison of different assumptions between storm surge projections. Panel (a) compares stationary storm surge levels with their associated return periods (inverse flood probability). Panel (b) compares the median 100-yr storm surge values based on stationary models to those based on non-stationary models in the year 2065.

Historical events also show that it is important to resolve and assess extreme water-level events that have a low probability of occurring, such as those with return periods greater than 100 years. This is especially important because the Federal Emergency Management Agency and the U.S. Army Corps of Engineers strongly recommend that critical infrastructure, or structures protecting critical infrastructure (e.g., levees and floodwalls), be built to withstand the 500-yr event plus freeboard10,52. Critical infrastructure comprises facilities that provide essential services to the community, protect the community, and are intended to remain open during and after major disasters. Such facilities include health care facilities, schools and higher education buildings, facilities storing hazardous material, and emergency response facilities (e.g., fire stations, police stations, and emergency operation centers)52. For example, in collaboration with the City of Norfolk’s resilience initiative and the U.S. Army Corps of Engineers, the Sentara Norfolk General Hospital (a level one trauma center located in the current 100-yr and 500-yr floodplains) is implementing measures to protect the hospital against SLR and storm surge as part of its five-year project (construction to be completed in 2020) to expand and modernize the hospital. In addition, a storm surge barrier has been proposed that would help protect the hospital and other nearby critical infrastructure7,10,53.

Despite a very low probability of occurring, these events do occur (Fig. 4a). Over the course of 70 years (the potential useful lifetime of a building), a 500-yr event has a roughly 13% probability of occurring at least once, assuming independent years (about 18% over a 100-yr lifetime)52. When these events occur, they are often considered high-impact disasters. Consider, for example, the storm surge of Hurricane Sandy, a roughly 400-yr event at the Battery in New York City54. More importantly, consider the lesser known (in modern history), but violent, Norfolk-Long Island Hurricane of 1821 and Storm of 1749. The Norfolk-Long Island Hurricane made landfall on September 3rd, 1821, hitting Norfolk, VA, among other major cities along the Mid-Atlantic and East Coast55,56. The hurricane is estimated to have caused a storm surge of roughly 10 ft in some areas of the Virginia coastline55,56 (Fig. 4a). This is approximately a 285-yr storm surge event (approximated using a method to calculate the median probability return period; see Methods). Based on historical records, we can reasonably constrain the uncertainty bounds to between the 279- and 306-yr storm surge events, because a larger storm surge may have occurred during a hurricane in 1825 and less documentation exists for storm surges prior to 180655. A study by the reinsurance company Swiss Re estimates that the Norfolk-Long Island Hurricane of 1821 would cause 50% more damage than Hurricane Sandy, and more than 100 billion U.S. dollars in damages, if it were to occur today55,56. The Storm of 1749 (a hurricane) hit the Mid-Atlantic coast in October 1749, causing roughly 30 thousand pounds in damages in Norfolk at that time55,57. During this storm, a 15 ft storm surge and subsequent flooding of the lower Chesapeake Bay area were reported55,57 (Fig. 4a). Moreover, this storm was largely responsible for forming Willoughby Spit, a 2-mile-long and 1/4-mile-wide peninsula of land at Sewell’s Point57. The storm surge of the Storm of 1749 is approximately a 389-yr storm surge event (approximated using a method to calculate the median probability return period; see Methods). Despite the potential high impact of low probability events and their importance in resilience planning, our model and Wong18 are the only models in this analysis to resolve return periods beyond the 100-yr event (Fig. 4a).
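The chance that a T-year event occurs at least once during a structure's lifetime follows from a short calculation. The sketch assumes independent, stationary years, each with annual exceedance probability 1/T, so it ignores the non-stationarity discussed below. Under that assumption, a 500-yr event has about a 13% chance of occurring over 70 years and about an 18% chance over 100 years. A 100-yr event has roughly even odds over 70 years.

```python
def prob_at_least_one(return_period, years):
    """Chance that a T-year event is equaled or exceeded at least once in `years`
    years, assuming independent years with a constant annual probability 1/T."""
    return 1.0 - (1.0 - 1.0 / return_period) ** years


for T, n in [(100, 70), (500, 70), (500, 100)]:
    print(f"{T}-yr event over {n} years: {prob_at_least_one(T, n):.1%}")
```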

It is also important to note that storm surges may not be stationary (i.e., their statistics may change over time). While decision makers typically use stationary flood hazard information, neglecting non-stationary information can result in a low bias (Fig. 4b). In the year 2065, all non-stationary 100-yr storm surge values are greater than the storm surge values based on stationary models, with a difference of up to a foot (with the exception of the non-stationary case based on the NAO index covariate time series; Fig. 4b). To put this into context, a difference of less than a foot in storm surge values can mean a difference of millions of dollars in potential damages to the Norfolk area58. Despite its importance, the non-stationary behavior of storm surge is still an area of active research. In particular, there is an active debate on how best to account for and constrain potential non-stationary coastal surge behavior18,59. For example, a recent study by Wong18 analyzes how different climate variable time series (e.g., temperature, sea level, NAO index, and time) affect non-stationary storm surge values. Even so, non-stationary flood hazard information is available and can be used by decision makers.

Accounting for uncertainty in projected future flood hazards

Combining probabilistic projections of local SLR with storm surge analysis provides a more complete assessment of flood risk (details in Methods; Fig. 5). The combined SLR and storm surge projections increase estimates of future flood risk (see also the discussion in60; Fig. 4b versus Fig. 5). Although we evaluate multiple studies, only the SLR projections from Kopp et al.1, Sweet et al.14, Wong and Keller16, and Rasmussen et al.17 provide enough information (i.e., full probability distributions) to account for interactions between uncertainties. Likewise, our study and Wong18 are the only studies in this analysis that provide full probability distributions of storm surge estimates. To reduce complexity, we show four cases of the combined SLR and storm surge projections accounting for uncertainty. These cases depict how fast dynamics and non-stationarity affect the combined estimates (Fig. 5). In particular, there is a roughly 0.87 ft difference between stationary and non-stationary combined storm surge and SLR estimates. Additionally, in the year 2065 the combined estimates increase when ice-cliff and ice-shelf feedback processes are triggered, as happens in high emissions scenarios (a roughly 0.14 ft increase when triggered under RCP8.5; Fig. 5). Moreover, these few cases highlight the complexity of estimating a single point value when there are multiple uncertainties to consider.
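A simple Monte Carlo version of the combination step is sketched below. It resamples local SLR samples for a target year and samples of a storm surge return level, adds them, and summarizes the result with quantiles. This is not necessarily the procedure in Methods. Treating the SLR and surge uncertainties as independent is an assumption, and the normal samples stand in for real projections.

```python
import numpy as np


def combine_slr_and_surge(slr_samples, surge_samples, n=100_000, seed=0):
    """Monte Carlo sum of independently resampled SLR and storm-surge samples (same units)."""
    rng = np.random.default_rng(seed)
    slr = rng.choice(np.asarray(slr_samples, dtype=float), size=n, replace=True)
    surge = rng.choice(np.asarray(surge_samples, dtype=float), size=n, replace=True)
    return slr + surge


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    # Synthetic stand-ins (ft): local SLR in 2065 and the 100-yr surge level.
    slr_2065 = rng.normal(1.0, 0.2, 5_000)
    surge_100yr = rng.normal(7.0, 0.5, 5_000)
    total = combine_slr_and_surge(slr_2065, surge_100yr)
    print(np.percentile(total, [5, 50, 95]).round(2))
```

Swapping in SLR samples with and without fast dynamics, or surge samples from stationary and non-stationary fits, reproduces the kind of case comparison described above.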

Discussion and Caveats

The choice of methods and assumptions used in a flood hazard study can impact the design of flood risk management strategies. These choices can limit the amount of information available to a vulnerable community interested in coastal preparedness planning. For instance, a community concerned about ice sheet feedback processes, individual components comprising SLR, long timescales, uncertainty, and the 500-yr storm surge event is limited, within the considered sample of studies, to the results in Kopp et al.15 and our extended projections of Wong and Keller16 FD for SLR projections, and to the method outlined in this study and the results in Wong18 for storm surge projections (Fig. 4). This lack of information reduces the range of choices in the decision-making process and hence could lead to poor outcomes. The potential consequences of having insufficient data are that (1) cities are unprepared for extreme events (e.g., the 1821 Norfolk-Long Island Hurricane and/or abrupt changes in ice-sheet dynamics; Figs 4a and 5) or (2) cities over-invest in protection measures.

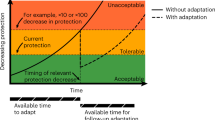

We analyze and synthesize multiple future SLR scenarios and storm-surge characterizations generated from different approaches. Our findings help to understand and quantify the sources and effects of the deep uncertainty surrounding coastal flood hazard projections. Our results highlight some of the current limitations of coastal flood hazard characterizations when used to inform the design of strategies to manage flood risks. Coastal flood hazard projections diverge considerably across decision-relevant timescales depending on the adopted methods and assumptions. Relying on a single flood hazard projection can hence be interpreted as making a deeply uncertain gamble. This deep uncertainty stems, for example, from difficulties in calibrating model parameters and from divergent expert assessments. The models need to resolve the complex responses of glaciers and ice sheets, whose physics is important but often challenging to understand. As a consequence, modeled results and expert elicitation results can be biased and/or overconfident. One way to inform decisions in the face of these deep and dynamic uncertainties is to adopt decision-making approaches that allow decisions to adapt over time to meet changing circumstances, respond to abrupt changes, promote continual learning, and revisit coastal flood hazard projections in the future when the uncertain physics is, hopefully, better understood61,62. One such approach is referred to as Dynamic Adaptive Policy Pathways. This approach searches for an adaptive plan that can deal with changing conditions and “supports the exploration of a variety of relevant uncertainties in a dynamic way, connects short-term targets and long-term goals, and identifies short-term actions while keeping options open for the future”61. Consider, as an example, the flexible adaptation pathways approach taken by the city of New York in its climate action strategy, which allows the city’s plan to adapt over time63,64. Because it had adopted this approach, the city was able to revisit its plans and respond in the aftermath of Hurricane Sandy64.

Despite our focus on coastal flood hazard assessment and coastal preparedness planning, we neglect interactions among hazard drivers beyond the interaction of uncertainties between SLR and storm surge. Specifically, we do not address compound flooding. Compound flooding refers to flooding caused by a combination of multiple drivers and/or hazards, such as SLR, storm tides and waves, precipitation, or river discharge, that lead to societal or environmental impacts65,66. For example, compound flooding occurred in 2017 in Jacksonville, FL, during Hurricane Irma, where storm surge combined with high discharge of the St. Johns River. It also occurred in the greater Houston area during Hurricane Harvey, where extreme precipitation combined with long-lasting storm surge65,66. We chose to neglect compound flooding to provide a transparent analysis of what causes SLR and storm surge projections to diverge and how this divergence impacts coastal planning. As a consequence, larger flood hazards and uncertainties cannot be ruled out once other hazard drivers that interact with SLR are considered. Given the importance of this issue, compound flooding needs to be considered and analyzed in coastal risk assessments, decision-making, and future research.

Conclusions

Coastal communities rely on flood hazard projections to design risk management strategies. Studies evaluating future flood hazards often provide only a limited description of the deep uncertainties surrounding their projections, and the projections themselves diverge. Using Norfolk, VA, as an example, we show how a lack of information (i.e., about extreme events, non-stationarity, and ice-sheet feedbacks) can lead to surprises. We highlight the importance of estimating the individual components of SLR and of accounting for AIS fast dynamics, especially as ice-sheet contributions play a greater role in SLR toward the end of this century and beyond23. The studies we consider that produce plausible scenarios cover a broad range of future conditions, but they do not produce probability distributions. Without probability distributions, we could neither combine SLR and storm surge while accounting for their interacting uncertainties, nor compare 500-yr return period estimates across studies. Although we evaluate flood hazard projections for Norfolk, our conclusions are transferable to many regions. Improving the representation of ice-sheet feedback processes, decision-relevant timescales, extreme events, non-stationarities, and known unknowns can improve risk assessments and decision-making.

Methods

Comparing data

We identify key existing studies relevant to the case-study location and then identify the background assumptions and methods of each study. The studies present projections in different units of measurement and relative to different datums. They also start in different years and do not always incorporate local rates of subsidence. For instance, Tebaldi et al.19 present storm surge projections in meters above the mean high water datum, whereas Zervas20 presents storm surge projections in meters above the mean higher high water datum. After a detailed review of these studies, we adjust all projections to the same baseline conditions. For consistency across all SLR projections, we express the scenarios and projections relative to local mean sea level, update the start year to 2000, present projections in feet, and incorporate local subsidence. Storm surge projections are expressed in feet above local mean sea level for the current National Tidal Datum Epoch (NTDE; 1983–2001). The NTDE is the period of time used to define tidal datums (e.g., mean high water and local mean sea level)67.
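The harmonization described above amounts to three operations: a change of datum, a change of units, and a shift of the reference year. The sketch below illustrates them for a single projection series. It is a minimal illustration under stated assumptions, not the authors' code. The datum offset (the height of the source datum above local mean sea level) is a hypothetical input that would come from the station datum sheet. The example projection values are likewise hypothetical.

```python
"""Sketch: put a sea-level projection on a common baseline.

Hypothetical inputs: datum_offset_m is the height of the source datum (e.g.,
MHW or MHHW) above local mean sea level, read from a station datum sheet;
projections are (year, value) pairs in meters, sorted by year.
"""

M_PER_FT = 0.3048  # exact definition of the international foot


def to_feet_above_msl(value_m, datum_offset_m):
    """Convert a height in meters above a tidal datum to feet above local MSL."""
    return (value_m + datum_offset_m) / M_PER_FT


def interpolate(series, year):
    """Linearly interpolate a (year, value) series at the given year."""
    for (y0, v0), (y1, v1) in zip(series, series[1:]):
        if y0 <= year <= y1:
            return v0 + (v1 - v0) * (year - y0) / (y1 - y0)
    raise ValueError(f"year {year} is outside the series range")


def rebaseline(series, start_year=2000):
    """Shift a projection so that it equals zero in start_year."""
    offset = interpolate(series, start_year)
    return [(year, value - offset) for year, value in series]


if __name__ == "__main__":
    projection = [(1992, 0.00), (2000, 0.04), (2100, 0.90)]  # hypothetical, m above MSL
    for year, value in rebaseline(projection):
        print(year, round(value / M_PER_FT, 2), "ft")
```

A storm surge level referenced to mean high water would pass through `to_feet_above_msl` with the gauge's MHW-minus-MSL offset. The offset is left as an input rather than hard-coded, because its value is not given in the text.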

Extending and localizing sea-level rise projections

Unlike Wong and Keller16, we run the BRICK model to the year 2200 using the RCP2.6, 4.5, and 8.5 radiative forcing scenarios68. Wong and Keller16 project the model only to 2100 because the Greenland ice sheet model and the glaciers and ice caps model do not account for what happens once the ice mass has completely melted. In short, the models do not simulate a net gain of ice: once the ice mass reaches zero, it cannot regrow. Using a simple diagnostic test, we check the reliability of our projections out to 2200. We find that only the glacier ice mass completely melts, and only in some high-emissions scenarios with a lower initial ice mass (Supplementary Fig. 1)69. We then downscale the global sea-level projections using the sea-level fingerprints of Slangen et al.70 and localize them by supplying the model with the coordinates of the Sewell’s Point tide gauge. These projections do not incorporate local subsidence; therefore, we add the long-term, local, non-climatic sea-level change projections from Kopp et al.1.
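The localization step scales each global sea-level contribution by its fingerprint at the site and then adds the non-climatic (vertical land motion) term. The sketch below shows that arithmetic, together with the kind of ice-depletion check described above. It is illustrative only. The component labels (AIS, GIS, GSIC, TE), the fingerprint values, and the subsidence term are hypothetical placeholders. They are not the Slangen et al.70 fingerprints or the Kopp et al.1 subsidence projections used in the study.

```python
"""Sketch: localize global sea-level components with fingerprints.

Component names, fingerprint values, and the land-motion term used in the
example are hypothetical placeholders, not values from the study.
"""


def localize(global_components, fingerprints, vlm_m=0.0):
    """Local sea-level change (m): sum of fingerprint * global contribution, plus VLM.

    global_components: component name -> global contribution (m)
    fingerprints: component name -> dimensionless local scaling factor
    vlm_m: non-climatic local change (m); positive values represent subsidence
    """
    missing = set(global_components) - set(fingerprints)
    if missing:
        raise KeyError(f"no fingerprint for: {sorted(missing)}")
    climatic = sum(fingerprints[name] * value for name, value in global_components.items())
    return climatic + vlm_m


def fully_depleted(ice_mass_series, tol=0.0):
    """True if an ice reservoir reaches zero (within tol) at any time step.

    The models cannot regrow ice, so such members mark where extended
    projections may be unreliable.
    """
    return any(mass <= tol for mass in ice_mass_series)


def depleted_fraction(ensemble, tol=0.0):
    """Fraction of ensemble members whose ice mass is fully depleted."""
    return sum(fully_depleted(member, tol) for member in ensemble) / len(ensemble)


if __name__ == "__main__":
    components = {"AIS": 0.30, "GIS": 0.20, "GSIC": 0.15, "TE": 0.25}  # hypothetical, m
    fingerprints = {"AIS": 1.1, "GIS": 0.6, "GSIC": 0.9, "TE": 1.0}    # hypothetical
    print(localize(components, fingerprints, vlm_m=0.20))  # hypothetical subsidence term
```

Keeping the fingerprints in a dictionary keyed by component makes a missing fingerprint fail loudly, rather than silently dropping a contribution.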

With the exception of Sweet et al.14, we downscale each of the SLR studies that provide plausible scenarios (i.e.4,5,6,8,13). Following the U.S. Army Corps of Engineers4,5,6 approach, we downscale these scenarios to the local level by using the local mean sea-level trend of 4.44 mm/yr71 as the rate of SLR in the National Research Council3 global sea-level model. The local mean sea-level trend at the Sewell’s Point tide gauge accounts for local and regional vertical land movement, coastal environmental processes, and ocean dynamics4,5,6,71.
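The USACE procedure adapts the NRC quadratic sea-level equation by replacing the global historic rate with the local trend. A minimal sketch of that calculation follows. It assumes the form E(t2) − E(t1) = M(t2 − t1) + b(t2² − t1²), with t in years since 1992, M the local mean sea-level trend (4.44 mm/yr here), and the start year set to 2000 as in this study. The curvature coefficients b listed for the low, intermediate, and high curves are the values we understand the USACE guidance to use. Treat them as assumptions to verify against the cited reports.

```python
"""Sketch: localize the modified NRC sea-level curves (USACE-style approach)."""

M_PER_FT = 0.3048
REFERENCE_YEAR = 1992  # t is measured in years since 1992 in the USACE curves

# Curvature coefficients (m/yr^2) as we understand them from USACE guidance;
# verify against the cited reports before relying on them.
NRC_B = {"low": 0.0, "intermediate": 2.71e-5, "high": 1.13e-4}


def local_slr_m(year, b, local_rate_m_per_yr=0.00444, start_year=2000):
    """Local relative SLR (m) between start_year and year.

    E(t2) - E(t1) = M * (t2 - t1) + b * (t2**2 - t1**2), t in years since 1992,
    with M the local mean sea-level trend (4.44 mm/yr at Sewell's Point).
    """
    t1 = start_year - REFERENCE_YEAR
    t2 = year - REFERENCE_YEAR
    return local_rate_m_per_yr * (t2 - t1) + b * (t2 ** 2 - t1 ** 2)


if __name__ == "__main__":
    for name, b in NRC_B.items():
        rise = local_slr_m(2100, b)
        print(f"{name:>12}: {rise:.2f} m ({rise / M_PER_FT:.2f} ft) by 2100")
```

With b = 0, the curve reduces to extrapolating the local linear trend. The quadratic term only adds acceleration on top of the locally observed rate.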

Storm surge observations and projections

To generate historical observations with associated return periods (Fig. 4a and Supplementary Fig. 2), we use hourly records of observed water levels from the Sewell’s Point tide gauge47. The observed water levels are relative to the MSL datum of the NTDE. The record we use is 88 years long, spanning 1928 to 2015. These observations contain a longer-term signal (SLR) that masks the effects of day-to-day weather, tides, and seasons. Following previous work (e.g.60,72), we subtract the annual means from the record to approximately remove the SLR trend. We then approximate the annual block maxima by grouping the detrended values into non-overlapping annual blocks and taking the maximum of each block (Supplementary Fig. 2a). To calculate the return period associated with each annual block maximum (Supplementary Fig. 2b), we use a numerical median probability return period method73,74,75. We use this method to reduce plotting-position bias: it calculates the median, rather than the mean, probability associated with each ranked event73,74,75. For each annual block maximum, the method finds the binomial distribution that places the ranked event at the median of the distribution73,74,75.
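The detrending, block-maxima, and plotting-position steps above can be sketched in a few lines. This is a minimal illustration, not the study's code. It assumes that observations arrive as (year, water level) pairs. It finds the median non-exceedance probability of each ranked maximum by bisection on the binomial tail probability. This works because the rank-th smallest of n annual maxima lies below a level exactly when at least rank of the n values do.

```python
"""Sketch: annual block maxima and median-probability return periods.

Assumptions: observations are (year, water_level) pairs; detrending subtracts
each year's mean, as in the text; the plotting position is the median of the
order-statistic distribution, found by bisection. Not the authors' code.
"""
from collections import defaultdict
from math import comb
from statistics import fmean


def annual_block_maxima(observations):
    """Subtract each year's mean level, then return {year: max detrended level}."""
    by_year = defaultdict(list)
    for year, level in observations:
        by_year[year].append(level)
    return {year: max(v) - fmean(v) for year, v in sorted(by_year.items())}


def prob_at_least(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p); increases monotonically with p."""
    return sum(comb(n, j) * p ** j * (1 - p) ** (n - j) for j in range(k, n + 1))


def median_nonexceedance(rank, n, tol=1e-12):
    """Median non-exceedance probability of the rank-th smallest of n maxima.

    Solves P(Binomial(n, p) >= rank) = 0.5 for p by bisection.
    """
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if prob_at_least(rank, n, mid) < 0.5:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def return_periods(maxima):
    """Pair each maximum (ascending) with its median-probability return period (yr)."""
    ordered = sorted(maxima)
    n = len(ordered)
    return [(x, 1.0 / (1.0 - median_nonexceedance(i, n)))
            for i, x in enumerate(ordered, start=1)]
```

For the largest of n = 88 annual maxima, the median non-exceedance probability is 0.5^(1/88). This puts the plotted return period of the record event at roughly 127 years, rather than the 89 years given by the mean-based Weibull position n + 1.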

Similar to the approach in Oddo et al.72, our model uses hourly tide-gauge data from 1926 to 2016 and a Bayesian calibration approach to fit an ensemble of stationary GEV distributions for the storm surge projections at the Sewell’s Point tide gauge. To set up the GEV analysis, we first subtract the annual means from the tide-gauge record and then calculate the annual block maxima of the detrended record. From these block maxima, we calculate maximum likelihood estimates of the GEV distribution parameters using the extRemes R package48,49. These estimates serve as the starting point for a 500,000-iteration Markov chain Monte Carlo simulation of the GEV parameters. We discard the first 50,000 iterations of each chain as burn-in to remove the effects of the starting values.
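A random-walk Metropolis sampler is one common way to carry out the Markov chain Monte Carlo step described above. The sketch below rests on assumptions that are ours, because the text does not state the priors or the proposal scheme. It uses flat priors on the location mu, the scale sigma (> 0), and the shape xi, and Gaussian proposals with hypothetical step sizes. The user supplies the starting point; in the study, this comes from the extRemes maximum likelihood estimates.

```python
"""Sketch: random-walk Metropolis sampling of stationary GEV parameters.

Assumptions (not stated in the text): flat priors on (mu, sigma, xi) with
sigma > 0, Gaussian proposals with fixed, hypothetical step sizes, and a
user-supplied starting point (the study starts from extRemes MLEs).
"""
import math
import random


def gev_loglik(data, mu, sigma, xi):
    """GEV log-likelihood; returns -inf outside the support or where it underflows."""
    if sigma <= 0:
        return -math.inf
    total = 0.0
    for x in data:
        z = (x - mu) / sigma
        if abs(xi) < 1e-8:  # Gumbel limit
            if -z > 700:  # exp(-z) would overflow; density is effectively zero
                return -math.inf
            total += -math.log(sigma) - z - math.exp(-z)
        else:
            t = 1.0 + xi * z
            if t <= 0:
                return -math.inf
            log_t = math.log(t)
            expo = -log_t / xi
            if expo > 700:  # exp would overflow; density is effectively zero
                return -math.inf
            total += -math.log(sigma) - (1.0 + 1.0 / xi) * log_t - math.exp(expo)
    return total


def metropolis_gev(data, start, n_iter=500_000, burn_in=50_000,
                   steps=(0.02, 0.02, 0.02), seed=None):
    """Return post-burn-in (mu, sigma, xi) samples from one Metropolis chain."""
    rng = random.Random(seed)
    current = tuple(start)
    current_lp = gev_loglik(data, *current)  # flat priors: posterior ∝ likelihood
    samples = []
    for i in range(n_iter):
        proposal = tuple(c + rng.gauss(0.0, s) for c, s in zip(current, steps))
        proposal_lp = gev_loglik(data, *proposal)
        # Accept with probability min(1, exp(proposal_lp - current_lp)).
        if math.log(1.0 - rng.random()) < proposal_lp - current_lp:
            current, current_lp = proposal, proposal_lp
        if i >= burn_in:
            samples.append(current)
    return samples
```

With the defaults, `metropolis_gev(block_maxima, start=mle, seed=1)` would return 450,000 post-burn-in draws, matching the chain length and burn-in in the text. Here `block_maxima` and `mle` are placeholders for the detrended annual maxima and their maximum likelihood estimates.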

Combining sea-level and storm surge projections

Accounting for the interactions between SLR and storm surge is a crucial step in assessing coastal flood vulnerability. Specifically, it is necessary to account for the uncertainties surrounding SLR (see, for example, the discussion in60). Similar to the approach in Ruckert et al.60, we account for uncertainty in both SLR and storm surge by combining their distributions. Following this approach, we combine the Wong and Keller16 no FD SLR distribution for the year 2065 with the ensemble of stationary storm surge values obtained in Wong18. Because the two distributions differ in ensemble size, we draw 10,000 storm surge simulations from the full storm surge distribution to match the size of the SLR ensemble; each simulation extends to the 1000-yr return period. We then approximate the 100-yr storm surge following the steps outlined in Ruckert et al.60 (see that paper for details). Following the same procedure, we also combine (1) the Wong and Keller16 FD SLR distribution with the stationary storm surge in Wong18, (2) the Wong and Keller16 no FD SLR distribution with the non-stationary BMA storm surge in Wong18, and (3) the Wong and Keller16 FD SLR distribution with the non-stationary BMA storm surge in Wong18. It is also possible to use the sea-level distributions from Sweet et al.14, Kopp et al.1, and Rasmussen et al.17, as well as the other non-stationary storm surge ensembles presented in Wong18. However, we show only the four cases stated above, to make clear the differences between SLR with and without fast dynamics and between stationary and non-stationary storm surge.
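The core idea of combining the two ensembles, rather than adding a single SLR value to a single surge value, can be illustrated with a simplified sketch. This is a hypothetical simplification and not the Ruckert et al.60 procedure. It represents each storm surge member by a GEV parameter triple and resamples surge members with replacement to match the SLR ensemble size. Each SLR value is then added to the 100-yr surge level of its paired member, which yields a distribution of combined flood levels instead of a point estimate.

```python
"""Sketch: combine an SLR ensemble with a storm-surge ensemble.

Hypothetical simplification, not the Ruckert et al. procedure: each surge
member is a GEV parameter triple (mu, sigma, xi); surge members are resampled
with replacement to match the SLR ensemble size, and each SLR value is added
to the 100-yr surge level of its paired member.
"""
import math
import random
from statistics import quantiles


def gev_return_level(mu, sigma, xi, period):
    """Level exceeded with annual probability 1/period under a GEV."""
    y = -math.log(1.0 - 1.0 / period)
    if abs(xi) < 1e-8:  # Gumbel limit
        return mu - sigma * math.log(y)
    return mu + sigma * (y ** (-xi) - 1.0) / xi


def combine(slr_members, surge_members, period=100, seed=None):
    """Return one combined flood level per SLR member (same units as the inputs)."""
    rng = random.Random(seed)
    paired = rng.choices(surge_members, k=len(slr_members))
    return [slr + gev_return_level(*params, period)
            for slr, params in zip(slr_members, paired)]


def summarize(levels):
    """5th percentile, median, and 95th percentile of the combined levels."""
    cuts = quantiles(levels, n=20)  # 19 cut points at 5%, 10%, ..., 95%
    return {"p05": cuts[0], "median": cuts[9], "p95": cuts[-1]}
```

A call such as `summarize(combine(slr_2065, surge_params, seed=1))`, where both arguments are placeholder ensembles, would report the spread of the combined 100-yr flood level. The same call repeated with FD and no FD SLR inputs, or with stationary and non-stationary surge inputs, mirrors the four cases described above.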

Data Availability

All code, data, and output are available on Data Commons (https://doi.org/10.26208/z5e5-kh11) and http://www.github.com/scrim-network/local-coastal-flood-risk under the open-source GNU General Public License. Data and analysis codes for our storm surge model are located at http://www.github.com/vsrikrish/SPSLAM. The results, data, software tools, and other resources related to this work are provided as-is without warranty of any kind, expressed or implied. In no event shall the authors or copyright holders be liable for any claim, damages or other liability in connection with the use of these resources.

References

Kopp, R. E. et al. Probabilistic 21st and 22nd century sea-level projections at a global network of tide-gauge sites. Earth’s Future 2, 383–406, https://doi.org/10.1002/2014EF000239 (2014).

Church, J. A. et al. Sea level change. In Stocker, T. F. et al. (eds) Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change (Cambridge University Press, Cambridge, United Kingdom and New York, NY, USA, 2013).

National Research Council. Responding to Changes in Sea Level: Engineering Implications. (The National Academies Press, Washington, DC, 1987).

US Army Corps of Engineers. Sea-level change considerations in civil works programs. Tech. Rep. EC 1165-2-212, US Army Corps of Engineers, Retrieved from https://web.law.columbia.edu/sites/default/files/microsites/climate-change/usace_circular_no_2265-2-212.pdf (2011).

US Army Corps of Engineers. Incorporating sea level change in civil works programs. Tech. Rep. ER 1100-2-8162, US Army Corps of Engineers, Retrieved from https://www.publications.usace.army.mil/Portals/76/Publications/EngineerRegulations/ER_1100-2-8162.pdf (2013).

US Army Corps of Engineers. Procedures to evaluate sea level change: Impacts, responses, and adaptation. Tech. Rep. ETL 1100-2-1, US Army Corps of Engineers, Retrieved from https://www.publications.usace.army.mil/Portals/76/Publications/EngineerTechnicalLetters/ETL_1100-2-1.pdf (2014).

The City of Norfolk. Norfolk’s resilience strategy. Tech. Rep., The City of Norfolk, Retrieved from http://100resilientcities.org/wp-content/uploads/2017/07/Norfolk_Resilient_Strategy_October_2015.pdf (2015).

Hall, J. A. et al. Regional sea level scenarios for coastal risk management: Managing the uncertainty of future sea level change and extreme water levels for department of defense coastal sites worldwide. Tech. Rep., US Department of Defense, Strategic Environmental Research and Development Program, Retrieved from https://www.hsdl.org/?abstract&did=792698 (2016).

Coastal Protection and Restoration Authority of Louisiana. Louisiana’s Comprehensive Master Plan for a Sustainable Coast. Baton Rouge, LA: Coastal Protection and Restoration Authority of Louisiana, Retrieved from http://coastal.la.gov/wp-content/uploads/2017/04/2017-Coastal-Master-Plan_Web-Book_CFinal-with-Effective-Date-06092017.pdf (2017).

US Army Corps of Engineers. Final integrated City of Norfolk coastal storm risk management feasibility study/environmental impact statement. Tech. Rep., US Army Corps of Engineers, Retrieved from https://usace.contentdm.oclc.org/utils/getfile/collection/p16021coll7/id/8557 (2018).

Keller, K. & Nicholas, R. Improving climate projections to better inform climate risk management. In Bernard, L. & Semmler, W. (eds) The Oxford Handbook of the Macroeconomics of Global Warming, https://doi.org/10.1093/oxfordhb/9780199856978.013.0002 (Oxford University Press, 2015).

Sriver, R. L., Lempert, R. J., Wikman-Svahn, P. & Keller, K. Characterizing uncertain sea-level rise projections to support investment decisions. PLoS One 13, e0190641, https://doi.org/10.1371/journal.pone.0190641 (2018).

Parris, A. et al. Global sea level rise scenarios for the US national climate assessment. Tech. Rep. NOAA Tech Memo OAR CPO, National Oceanic and Atmospheric Administration, Retrieved from https://cpo.noaa.gov/sites/cpo/Reports/2012/NOAA_SLR_r3.pdf (2012).

Sweet, W. V. et al. Global and regional sea level rise scenarios for the United States. Tech. Rep. NOAA Tech Memo NOS CO-OPS 083, National Oceanic and Atmospheric Administration, Retrieved from https://tidesandcurrents.noaa.gov/publications/techrpt83_Global_and_Regional_SLR_Scenarios_for_the_US_final.pdf (2017).

Kopp, R. E. et al. Evolving understanding of Antarctic ice-sheet physics and ambiguity in probabilistic sea-level projections. Earth’s Future 5, 1217–1233, https://doi.org/10.1002/2017EF000663 (2017).

Wong, T. E. & Keller, K. Deep uncertainty surrounding coastal flood risk projections: A case study for New Orleans. Earth’s Future 5, 1015–1026, https://doi.org/10.1002/2017EF000607 (2017).

Rasmussen, D. J. et al. Extreme sea level implications of 1.5 °C, 2.0 °C, and 2.5 °C temperature stabilization targets in the 21st and 22nd centuries. Environmental Research Letters 13, 034040, https://doi.org/10.1088/1748-9326/aaac87 (2018).

Wong, T. E. An integration and assessment of multiple covariates of nonstationary storm surge statistical behavior by Bayesian model averaging. Advances in Statistical Climatology, Meteorology and Oceanography 4, 53–63, https://doi.org/10.5194/ascmo-4-53-2018 (2018).

Tebaldi, C., Strauss, B. H. & Zervas, C. E. Modelling sea level rise impacts on storm surges along US coasts. Environmental Research Letters 7, 014032, https://doi.org/10.1088/1748-9326/7/1/014032 (2012).

Zervas, C. Extreme water levels of the United States 1893–2010. Tech. Rep. NOAA Tech Memo NOS CO-OPS 067, National Oceanic and Atmospheric Administration, Retrieved from https://tidesandcurrents.noaa.gov/publications/NOAA_Technical_Report_NOS_COOPS_067a.pdf (2013).

Moss, R. H. et al. The next generation of scenarios for climate change research and assessment. Nature 463, 747–756, https://doi.org/10.1038/nature08823 (2010).

Mitrovica, J. X. et al. On the robustness of predictions of sea level fingerprints. Geophysical Journal International 187, 729–742, https://doi.org/10.1111/j.1365-246X.2011.05090.x (2011).

DeConto, R. M. & Pollard, D. Contribution of Antarctica to past and future sea-level rise. Nature 531, 591 (2016).

Wong, T. E. et al. BRICK v0.2, a simple, accessible, and transparent model framework for climate and regional sea-level projections. Geoscientific Model Development 10, 2741–2760, https://doi.org/10.5194/gmd-10-2741-2017 (2017).

UNFCCC. Report of the structured expert dialogue on the 2013–2015 review. In New York: United Nations, vol. FCCC/SB/2015/INF.1, Retrieved from https://unfccc.int/resource/docs/2015/sb/eng/inf01.pdf (2015).

UNFCCC. Report of the conference of the parties on its Twenty-First session, held in Paris from 30 November–13 December 2015. In New York: United Nations, vol. FCCC/CP/2015/10, Retrieved from https://unfccc.int/resource/docs/2015/cop21/eng/10.pdf (2015).

Meehl, G. A. et al. Global climate projections. In Solomon, S. et al. (eds) Climate Change 2007: The Physical Science Basis. Contribution of working group I to the fourth assessment report of the Intergovernmental Panel on Climate Change (Cambridge University Press, Cambridge, United Kingdom and New York, NY, USA, 2007).

Pfeffer, W. T., Harper, J. T. & O’Neel, S. Kinematic constraints on glacier contributions to 21st-century sea-level rise. Science 321, 1340–1343, https://doi.org/10.1126/science.1159099 (2008).

Horton, R. et al. Sea level rise projections for current generation CGCMs based on the semi-empirical method. Geophysical Research Letters 35, https://doi.org/10.1029/2007GL032486 (2008).

Vermeer, M. & Rahmstorf, S. Global sea level linked to global temperature. Proceedings of the National Academy of Sciences of the United States of America 106, 21527–21532, https://doi.org/10.1073/pnas.0907765106 (2009).

Grinsted, A., Moore, J. C. & Jevrejeva, S. Reconstructing sea level from paleo and projected temperatures 200 to 2100 AD. Climate Dynamics 34, 461–472, https://doi.org/10.1007/s00382-008-0507-2 (2010).

Jevrejeva, S., Moore, J. C. & Grinsted, A. How will sea level respond to changes in natural and anthropogenic forcings by 2100? Geophysical Research Letters 37, https://doi.org/10.1029/2010GL042947 (2010).

Church, J. A. & White, N. J. Sea-Level rise from the late 19th to the early 21st century. Surveys in Geophysics 32, 585–602, https://doi.org/10.1007/s10712-011-9119-1 (2011).

Perrette, M., Landerer, F., Riva, R., Frieler, K. & Meinshausen, M. A scaling approach to project regional sea level rise and its uncertainties. Earth System Dynamics 4, 11–29, https://doi.org/10.5194/esd-4-11-2013 (2013).

Nerem, R. S., Chambers, D. P., Choe, C. & Mitchum, G. T. Estimating mean sea level change from the TOPEX and Jason altimeter missions. Marine Geodesy 33, 435–446, https://doi.org/10.1080/01490419.2010.491031 (2010).

Boening, C., Willis, J. K., Landerer, F. W., Nerem, R. S. & Fasullo, J. The 2011 La Niña: So strong, the oceans fell. Geophysical Research Letters 39, https://doi.org/10.1029/2012GL053055 (2012).

Fasullo, J. T., Boening, C., Landerer, F. W. & Nerem, R. S. Australia’s unique influence on global sea level in 2010–2011. Geophysical Research Letters 40, 4368–4373, https://doi.org/10.1002/grl.50834 (2013).

Cazenave, A. et al. The rate of sea-level rise. Nature Climate Change 4, 358–361, https://doi.org/10.1038/nclimate2159 (2014).

Hay, C. C., Morrow, E., Kopp, R. E. & Mitrovica, J. X. Probabilistic reanalysis of twentieth-century sea-level rise. Nature 517, 481–484, https://doi.org/10.1038/nature14093 (2015).

Sriver, R. L., Urban, N. M., Olson, R. & Keller, K. Toward a physically plausible upper bound of sea-level rise projections. Climatic Change 115, 893–902, https://doi.org/10.1007/s10584-012-0610-6 (2012).

Bamber, J. L. & Aspinall, W. P. An expert judgement assessment of future sea level rise from the ice sheets. Nature Climate Change 3, 424, https://doi.org/10.1038/nclimate1778 (2013).

Miller, K. G., Kopp, R. E., Horton, B. P., Browning, J. V. & Kemp, A. C. A geological perspective on sea-level rise and impacts along the U.S. mid-Atlantic coast. Earth’s Future 1, 3–18, https://doi.org/10.1002/2013EF000135 (2013).

Rohling, E. J., Haigh, I. D., Foster, G. L., Roberts, A. P. & Grant, K. M. A geological perspective on potential future sea-level rise. Scientific Reports 3, 3461, https://doi.org/10.1038/srep03461 (2013).

Jevrejeva, S., Grinsted, A. & Moore, J. C. Upper limit for sea level projections by 2100. Environmental Research Letters 9, 104008, https://doi.org/10.1088/1748-9326/9/10/104008 (2014).

Grinsted, A., Jevrejeva, S., Riva, R. & Dahl-Jensen, D. Sea level rise projections for northern Europe under RCP8.5. Climate Research 64, 15–23, https://doi.org/10.3354/cr01309 (2015).

Jackson, L. P. & Jevrejeva, S. A probabilistic approach to 21st century regional sea-level projections using RCP and high-end scenarios. Global and Planetary Change 146, 179–189, https://doi.org/10.1016/j.gloplacha.2016.10.006 (2016).

National Oceanic and Atmospheric Administration. Station home page - NOAA tides & currents, https://tidesandcurrents.noaa.gov/stationhome.html?id=8638610, Accessed: 2016-11-11 (2013).

Gilleland, E., Ribatet, M. & Stephenson, A. G. A software review for extreme value analysis. Extremes 16, 103–119, https://doi.org/10.1007/s10687-012-0155-0 (2013).

R Core Team. R: A Language and Environment for Statistical Computing. (R Foundation for Statistical Computing, Vienna, Austria, 2016).

Kriebel, D. L. & Geiman, J. D. A coastal flood stage to define existing and future sea-level hazards. Journal of Coastal Research 30, 1017–1024, https://doi.org/10.2112/JCOASTRES-D-13-00068.1 (2013).

Wong, T. E., Klufas, A., Srikrishnan, V. & Keller, K. Neglecting model structural uncertainty underestimates upper tails of flood hazard. Environmental Research Letters 13, 074019, https://doi.org/10.1088/1748-9326/aacb3d (2018).

FEMA. Making critical facilities safe from flooding. In Design guide for improving critical facility safety from flooding and high winds, 1–102, FEMA 543 (Risk Management Series, Washington, DC, USA, 2007).

Gauding, D. Expansion announced for Sentara Norfolk General Hospital, Retrieved from https://www.sentara.com/hampton-roads-virginia/aboutus/news/news-articles/expansion-announced-for-sentara-norfolk-general-hospital.aspx (2016).

Lin, N., Kopp, R. E., Horton, B. P. & Donnelly, J. P. Hurricane Sandy’s flood frequency increasing from year 1800 to 2100. Proceedings of the National Academy of Sciences of the United States of America 113, 12071–12075, https://doi.org/10.1073/pnas.1604386113 (2016).

Ludlum, D. M. Early American hurricanes, 1492–1870, Retrieved from http://hdl.handle.net/2027/mdp.39015002912718 (American Meteorological Society, Boston, MA, 1963).

Linkin, M. The big one: The east coast’s USD 100 billion hurricane event. Tech. Rep., Swiss Re, Retrieved from http://media.swissre.com/documents/the_big_one_us_hurricane.pdf (2014).

Hampton Roads Planning District Commission (HRPDC). Hampton roads hazard mitigation plan. Tech. Rep., Hampton Roads Planning District Commission (HRPDC), Retrieved from https://www.hrpdcva.gov/uploads/docs/2017%20Hampton%20Roads%20Hazard%20Mitigation%20Plan%20Update%20FINAL.pdf (2017).

Fugro Consultants, Inc. Lafayette river tidal protection alternatives evaluation. Tech. Rep. Fugro Project No. 04.8113009, Fugro Consultants, Inc., City of Norfolk City-wide Coastal Flooding Contract, Work Order No. 7, Retrieved from https://www.norfolk.gov/DocumentCenter/View/25170 (2016).

Wahl, T. & Chambers, D. P. Climate controls multidecadal variability in US extreme sea level records. Journal of Geophysical Research: Oceans 121, 1274–1290, https://doi.org/10.1002/2015JC011057 (2016).

Ruckert, K. L., Oddo, P. C. & Keller, K. Impacts of representing sea-level rise uncertainty on future flood risks: An example from San Francisco Bay. PLoS One 12, e0174666, https://doi.org/10.1371/journal.pone.0174666 (2017).

Haasnoot, M., Kwakkel, J. H., Walker, W. E. & ter Maat, J. Dynamic adaptive policy pathways: A method for crafting robust decisions for a deeply uncertain world. Global Environmental Change 23, 485–498, https://doi.org/10.1016/j.gloenvcha.2012.12.006 (2013).

Garner, G. G. & Keller, K. Using direct policy search to identify robust strategies in adapting to uncertain sea-level rise and storm surge. Environmental Modelling & Software 107, 96–104, https://doi.org/10.1016/j.envsoft.2018.05.006 (2018).

Rosenzweig, C. et al. Developing coastal adaptation to climate change in the New York City infrastructure-shed: process, approach, tools, and strategies. Climatic Change 106, 93–127, https://doi.org/10.1007/s10584-010-0002-8 (2011).

Rosenzweig, C. & Solecki, W. Hurricane Sandy and adaptation pathways in New York: Lessons from a first-responder city. Global Environmental Change 28, 395–408, https://doi.org/10.1016/j.gloenvcha.2014.05.003 (2014).

Sadegh, M. et al. Multihazard scenarios for analysis of compound extreme events. Geophysical Research Letters 45, 5470–5480, https://doi.org/10.1029/2018GL077317 (2018).

Zscheischler, J. et al. Future climate risk from compound events. Nature Climate Change 8, 469–477, https://doi.org/10.1038/s41558-018-0156-3 (2018).

Flick, R. E., Knuuti, K. & Gill, S. K. Matching mean sea level rise projections to local elevation datums. Journal of Waterway, Port, Coastal and Ocean Engineering 139, 142–146, https://doi.org/10.1061/(ASCE)WW.1943-5460.0000145 (2013).

Meinshausen, M. et al. The RCP greenhouse gas concentrations and their extensions from 1765 to 2300. Climatic Change 109, 213, https://doi.org/10.1007/s10584-011-0156-z (2011).

Wong, T. E., Bakker, A. M. R. & Keller, K. Impacts of Antarctic fast dynamics on sea-level projections and coastal flood defense. Climatic Change 144, 347–364, https://doi.org/10.1007/s10584-017-2039-4 (2017).

Slangen, A. B. A. et al. Projecting twenty-first century regional sea-level changes. Climatic Change 124, 317–332, https://doi.org/10.1007/s10584-014-1080-9 (2014).

Zervas, C., Gill, S. & Sweet, W. Estimating vertical land motion from long-term tide gauge records. Tech. Rep. NOAA Tech Memo NOS CO-OPS 065, National Oceanic and Atmospheric Administration, Retrieved from https://tidesandcurrents.noaa.gov/publications/Technical_Report_NOS_CO-OPS_065.pdf (2013).

Oddo, P. C. et al. Deep uncertainties in sea-level rise and storm surge projections: Implications for coastal flood risk management. Risk Analysis, https://doi.org/10.1111/risa.12888 (2017).

Jenkinson, A. F. The analysis of meteorological and other geophysical extremes. Tech. Rep. 58, Met Office Synoptic Climatology Branch (1977).

Folland, C. & Anderson, C. Estimating changing extremes using empirical ranking methods. Journal of Climate 15, 2954–2960, https://doi.org/10.1175/1520-0442(2002)015<2954:ECEUER>2.0.CO;2 (2002).

Makkonen, L. Bringing closure to the plotting position controversy. Communications in Statistics - Theory and Methods 37, 460–467, https://doi.org/10.1080/03610920701653094 (2008).

Acknowledgements

We thank Robert Nicholas, Nancy Tuana, Irene Schaperdoth, Ben Lee, Francisco Tutella, and Randy Miller for their valuable inputs. We also thank Claudia Tebaldi and Tony Wong for sharing data and for their valuable inputs. Additionally, we thank K. Joel Roop-Eckart for sharing his function approximating median probability return periods for observations. This work was supported by the National Oceanic and Atmospheric Administration (NOAA) Mid-Atlantic Regional Integrated Sciences and Assessments (MARISA) program under NOAA grant NA16OAR4310179 and the Penn State Center for Climate Risk Management. We are not aware of any real or perceived conflicts of interest for any authors. Any conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the funding agencies. Any errors and opinions are, of course, those of the authors.

Author information

Contributions

K.L.R. drafted the main manuscript text, prepared the figures, and conducted the analysis. V.S. performed the Bayesian calibration for the storm surge analysis. K.K. initiated the study. All authors discussed the results and edited the manuscript.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ruckert, K.L., Srikrishnan, V. & Keller, K. Characterizing the deep uncertainties surrounding coastal flood hazard projections: A case study for Norfolk, VA. Sci Rep 9, 11373 (2019). https://doi.org/10.1038/s41598-019-47587-6
