Abstract

Contagious yawning, emotional contagion and empathy are characterized by the activation of similar neurophysiological states or responses in an observed individual and an observer. For example, it is hard to keep one’s mouth closed when imagining someone yawning, or not feeling distressed while observing other individuals perceiving pain. The evolutionary origin of these widespread phenomena is unclear, since a direct benefit is not always apparent. We explore a game theoretical model for the evolution of mind-reading strategies, used to predict and respond to others’ behavior. In particular we explore the evolutionary scenarios favoring simulative strategies, which recruit overlapping neural circuits when performing as well as when observing a specific behavior. We show that these mechanisms are advantageous in complex environments, by allowing an observer to use information about its own behavior to interpret that of others. However, without inhibition of the recruited neural circuits, the observer would perform the corresponding downstream action, rather than produce the appropriate social response. We identify evolutionary trade-offs that could hinder this inhibition, leading to emotional contagion as a by-product of mind-reading. The interaction of this model with kinship is complex. We show that empathy likely evolved in a scenario where kin- and other indirect benefits co-opt strategies originally evolved for mind-reading, and that this model explains observed patterns of emotional contagion with kin or group members.


Introduction

Learning enables organisms to adapt flexibly to their environment without waiting for natural selection to take its long and arduous route. However, the more complex the environment, the slower the adaptive gain from either learning or evolution. One of the most complex and relevant environments that organisms encounter is the social milieu. Here individuals are presented with a multitude of other individuals, each with complex adaptive responses. Predicting these “black boxes”, a process called mind-reading1, is hard. Accurate social predictions would thus require a long adaptive process and accurate information about each perceived stimulus and the possible responses. Luckily, a shortcut is available: the organism holds an almost identical copy of the “black box”, namely its own decision-making apparatus or neural circuitry, which can be used to draw inferences about others’ behavior. Information acquired over evolutionary time and information acquired through individual learning can thus be used to speed up adaptation to social encounters2.

Since the discovery of mirror neurons3,4, a large number of neurophysiological studies have shown that similar brain regions are activated when observing and when performing a specific action, or perceiving a given emotional or sensory stimulus4,5,6,7,8. These mirroring phenomena supported the view that an observer can decipher an actor’s actions and states through at least a partial simulation of the same neural circuits and internal states elicited as an actor, in what de Waal termed “perception-action mechanisms” (PAMs)9,10. Neuroscientists proposed that these brain regions, activated both in observers and in actors, possibly underlie “shared representations” of the perceived stimuli and actions11. For an observer, a potential advantage of activating the as-actor neural configuration is access to information about one’s own sensory and motor programs. In the social context this information might help to interpret social cues and infer an observed actor’s actions or intentions12, allowing the observer to solve the complex “black box” problem. We adopt here the term “simulation” to indicate any mind-reading strategy that relies on self-information experienced as an actor, rather than information acquired via observation of others10.

Simulative strategies face possible disadvantages and computational obstacles: the secondary activation of as-actor neural circuits during observation has to be discriminated precisely from primary activations of the same circuits when performing the corresponding action oneself. Otherwise, the partial activation of neural configurations that are usually used in the as-actor context may prime the corresponding autonomic and somatic responses and, unless properly inhibited, would evoke in the observer the responses of an actor4,9,13. We define events in which a simulative observer performs the same action as an observed actor as accidental coordination (C; Fig. 1a–d). A pathological lack of inhibition results in compulsive imitation, as in echopraxia and echolalia, the involuntary repetition of others’ actions or language14, respectively. In specific situations, accidental coordination can be advantageous. However, in the context of mind-reading and social interactions, individuals are often characterized by different states or competing motivations, so it is generally not advantageous for an observer to copy blindly the action of an observed actor. The potential cost of accidental coordination is twofold: first, it hinders the observer from performing the most appropriate response to an actor’s behavior; second, it might imply further specific costs: for instance, contagious distress15,16 can interfere with optimal decision making, and potentially lead to costly actions, such as alarm calls or aggressive behavior (Fig. 1c). A well-documented example, in which the first inherent cost is apparent, is motor interference, in which the observation of a movement impairs the performance of an incongruent movement in the observer13. For this reason, the inhibition of accidental coordination is likely shaped by natural selection, and of primary importance in mind-reading and mirroring processes14,17.

Figure 1. Cognitive circuits connecting stimuli (s ∈ S), actions (a ∈ A) and their representations (\(\hat{s}\in \hat{S}\) and â ∈ Â), for a contagious distress example. (a) An actor (in red) perceives a dangerous stimulus (s+i, e.g. a social interaction with an aggressive individual, in gray) and associates (thick arrow) its representation \({\hat{s}}_{+i}\) to a neural circuit underlying a fight-or-flight response \({\hat{a}}_{+i}\), in turn responding aggressively and defending itself (action a+i, B). (b–d) An observer (in blue) perceives (a) as a social stimulus s−i and can perform an appropriate response D (avoiding the conflict and possibly exploiting it to steal/forage resources) through different strategies: F, P and S. (b) F and P-strategies: by observing many similar social stimuli, over a lifetime or over evolutionary time, the observer learns (P) or evolves (F) to map (thick arrow) the social stimulus to the representation of the optimal action a−i. (c,d) S-strategy: the as-actor representations are recruited to infer an actor’s response \({\hat{a}}_{+i}\), via the simulation functions (dashed arrows, γs upward, γa downward). (c) When \({\hat{a}}_{+i}\) is uninhibited, the observer responds with \({a}_{+i}\) (C, contagious distress) with potential costs for itself (fight, energy) and benefits for the actor (help in the conflict). (d) When inhibited, \({\hat{a}}_{+i}\) is mapped to a representation of the optimal response for the observer \({\hat{a}}_{-i}\), and this response is executed (D). In all figures arrows indicate cognitive functions as described in Table 2: stimulus-action mappings (learning functions) as thick arrows, activation functions as barred arrows (dark with inhibition, empty with activation), simulation functions as dashed arrows.

Previous theoretical models of the evolution of emotional contagion restricted their attention to cases in which coordination is advantageous18,19. Akçay et al. showed that other-regarding preferences are evolutionarily stable only when payoffs are synergistic between two individuals18. Nakahashi and Ohtsuki proposed a model in which emotional contagion works as a social learning strategy: for an observer it is advantageous to copy the emotion of an observed actor when it needs to respond analogously to a stimulus, but noise or differences between the two individuals hinder an exact copy of the observed behavior19.

Here we relax this assumption, allowing for the presence of costs in accidental coordination, modeling the antagonistic interactions between actor and observer and the divergence of their motivations as dis-coordination games. Following a game-theoretical approach similar to Nakahashi and Ohtsuki19, we contrast several mind-reading strategies and show that in complex social contexts, where there may be insufficient social information to infer others’ behavior, simulative strategies will evolve to improve the ability to infer others’ actions. A by-product of this simulation is accidental coordination, possibly in the form of emotional contagion, even when this coordination is costly.

In the second part of the paper, we investigate the effects of assortment mechanisms such as kinship and their implications for the evolution of empathy. We emphasize that we do not aim to explain all forms of prosocial behavior: here we focus on a strict definition of empathy, i.e. prosocial behavior that is mediated by the activation of similar neurophysiological responses in the cooperator and in the recipient individual9,20,21, and we make no claims regarding other forms of prosocial behavior, such as sympathy, i.e. prosocial attitudes not mediated by the as-actor network.

For clarity, we present the model in terms of specific cognitive functions. However, note that the trade-offs that we identify are not specific to this implementation. This is shown with an evolutionary invasion analysis presented in Appendix section 2, and by implementing different variations of the model (Appendix section 2.4, section 4). In the discussion we will return to the question of the model’s generality.

Model

Interactions

We adopt an evolutionary game theory approach to investigate the evolution of a population of individuals genetically adopting different mind-reading strategies, i.e. strategies adopted by an observer to react to an observed actor. We first describe under which circumstances simulative strategies, denoted by S, would evolve against competing strategies, either relying on fixed evolved responses (F-strategies) or on learning (P-strategies). Later, we perform an evolutionary invasion analysis, examining how the traits that determine how simulative strategies inhibit coordination would evolve. We also validate these results with agent-based simulations (Appendix, section 6). We explore a simple evolutionary setup with pairwise interactions: each individual in the population will, at times, be an actor (Fig. 1a), who simply responds to an environmental stimulus, and at other times an observer (Fig. 1b–d), who responds to an actor on the basis of its own mind-reading strategy. Hence, at each step, each individual is either an unpaired actor, with probability 1 − 2p, or is paired, with probability 2p, in which case it has a 50% chance of being the actor and a 50% chance of being the observer. An observer is here defined as an individual potentially able to respond to an actor’s behavior, thus high values of p indicate accurate social cues as well as a high proportion of social interactions.
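The pairing scheme just described can be written out as a short sketch. The function name `role_probabilities` and the dictionary keys are ours, introduced only for illustration; the probabilities themselves are the ones stated in the text.

```python
def role_probabilities(p: float) -> dict:
    """Per-step role probabilities for one individual.

    p is the pairing parameter from the text; it must lie in [0, 0.5]
    so that 1 - 2p is a valid probability.
    """
    if not 0.0 <= p <= 0.5:
        raise ValueError("p must lie in [0, 0.5]")
    solitary_actor = 1.0 - 2.0 * p   # unpaired actor
    paired = 2.0 * p                 # paired with another individual
    observed_actor = 0.5 * paired    # paired, in the actor role
    observer = 0.5 * paired          # paired, in the observer role
    return {
        "solitary_actor": solitary_actor,
        "observed_actor": observed_actor,
        "observer": observer,
        # Total time spent acting, alone or observed: equals 1 - p.
        "actor_total": solitary_actor + observed_actor,
    }
```

Under this reading an individual acts with total probability 1 − p and observes with probability p, which is the split used later when learning as an actor is compared with learning as an observer.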

Both actors and observers perceive a stimulus s ∈ S, and respond with an action a ∈ A. We denote as-actor and as-observer elements with positive and negative subscripts, respectively. Thus an actor y+ receives a stimulus s = s+i ∈ S+, whereas an observer y− perceives the corresponding social cue/stimulus s = s−i ∈ S−. A social cue s−i can be interpreted either as the general perception of the context “actor + stimulus s+i for the actor”, or as the perception of a social cue suggesting the internal state of the actor.

Fitness

For each stimulus, we denote the best action for the focal individual, providing the largest payoff, with the same index. Hence, for an actor perceiving stimulus s+i, the best action is a+i, which we also denote as response B and which provides a payoff b; for an observer with stimulus s−i the best action is a−i, denoted as D, which provides a payoff d− (Table 1). Other actions aj with j ≠ i, here denoted ∅ for actors and 0 for observers, provide lower payoffs, here set to zero for simplicity. A special case occurs when an observer performs the same action a+i as the actor, an event that is denoted as C, for coordination. This can occur when the observer activates the same neural circuit as the actor and does not inhibit it (see below Mind-reading strategies).

We consider an evolutionary-dynamics setting with additive payoffs, where the absolute fitness of a focal phenotype is simply given by the average probability of interactions resulting in B, ∅, C, D and 0 events, and their payoffs. We define the expectation of these probabilities respectively as PB, \({P}_{\varnothing }\), PC, PD, and P0. These probabilities depend on the mind-reading strategy adopted by an individual, as well as on other continuous traits that determine features such as the inhibition of C responses, whose evolutionary dynamics we also explore. The fitness of a focal individual is determined by the sum of the average payoffs obtained as a solitary actor, π+, as an observer, π−, or as an observed actor, \({\pi }_{\mp }\):

We denote with the superscript • the elements related to a focal individual or strategy, while we use the superscript ° to indicate non focal, interacting individuals y°. For large populations, the behavior of y° approaches that of the average for all the strategies in the population. In the solitary actor case, the average payoff is simply \({\pi }_{+}={P}_{B}^{\bullet }\,b\). In the absence of any form of assortment, the average payoff as an observer is \({\pi }_{-}={P}_{D}^{\bullet }\,{d}^{-}-{P}_{C}^{\bullet }\,{c}^{-}.\) Similarly, the average payoff of an observed actor is \({\pi }_{\mp }={P}_{B}^{\bullet }\,b-{P}_{D}^{\circ }\,{d}^{+}+{P}_{C}^{\circ }\,{c}^{+}\). These probabilities depend on the cognitive strategies as described next.
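The three payoff components above can be evaluated with a minimal sketch. The function and argument names are ours. The component formulas follow the text, but the weighting in `total_fitness` is an assumption: it weights each component by the probability of the corresponding role (1 − 2p, p and p), which may differ from the exact form of Eq. 1.

```python
def payoff_components(PB, PD, PC, PD_other, PC_other,
                      b, d_minus, c_minus, d_plus, c_plus):
    """Average payoffs in the three roles, without assortment.

    PB, PD, PC are the focal individual's response probabilities;
    PD_other and PC_other are those of the non-focal interacting observer.
    """
    pi_plus = PB * b                                    # solitary actor
    pi_minus = PD * d_minus - PC * c_minus              # observer
    pi_observed = PB * b - PD_other * d_plus + PC_other * c_plus  # observed actor
    return pi_plus, pi_minus, pi_observed


def total_fitness(p, pi_plus, pi_minus, pi_observed):
    """ASSUMPTION: components are weighted by the role probabilities."""
    return (1.0 - 2.0 * p) * pi_plus + p * pi_minus + p * pi_observed
```

For example, with PB = 1, PD = 0.5 and PC = 0.1 for both individuals, b = 1, d− = d+ = 5 and c− = c+ = 3, the components are 1, 2.2 and −1.2, and the assumed weighting gives a total of 0.75 at p = 0.25.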

Mind-reading Strategies

We explore three main mind-reading strategies (Fig. 1), defined as the general organization of the cognitive strategy used by an observer to infer the best response a−i in response to a social cue s−i. First, we implement an “innate” strategy, in which an observer responds to social cues with a fixed s−i → a−i mapping that adapts only through evolution, but not learning (Fixed Strategy - F). Second, a strategy in which social responses are only learned through social interactions (Associative strategy - P). In the third type, (Simulative strategy - S) an observer takes advantage of its own as-actor set of responses to infer actors’ behavior. These different cognitive strategies are built with two basic building blocks: representations and cognitive functions.

Representations correspond to the neural configurations and physiological responses associated with a stimulus or action, drawn as clouds in Fig. 1. A stimulus representation \({\hat{s}}_{-i}\in \hat{S}\) is triggered by the perception of a stimulus, while an action representation \({\hat{a}}_{\mp i}\) describes the neural configuration and physiological state priming a certain action, and thus may trigger \({a}_{\pm i}\in A\). For simplicity, we summarize the complexities of a stimulus, its salience and the accuracy of perception and of the corresponding representations with a single variable x, defined as intensity. This can be seen as the extent of activation of the underlying neural configuration. Thus formally a representation is a pair, comprising the action or stimulus represented and an intensity, e.g. \(\hat{s}=({s}_{\pm i},x)\).

Cognitive functions, represented in Fig. 1 as arrows, correspond instead to cognitive processes, determining the relationships between stimuli, actions and their representations, mapping from one space to another. For example, an actor perceives a stimulus s via a perception function (formally a stochastic map), that maps it to a stimulus representation \(\hat{s}\). The actor learns to map \(\hat{s}\) to an action \(\hat{a}\) via a learning function l, and in turn performs an action via an activation function α:

We assume that an individual’s behavior is only influenced by two variables in addition to its own strategy: the intensity of a stimulus and its own previous learning experience. Regarding the former, we assume that the higher x, the higher the probability that l associates a correct action representation and that an action is performed. Learning, defined as the reduction of classification mistakes as experience is acquired, occurs in time t at a constant rate λl. The specific mathematical expressions used to describe cognitive functions are reported in Table 2.

Notice that actors encounter stimuli with probability 1 − p, while observers encounter them with probability p. Hence, the scheme in Eq. 2 describes an actor, who perceives a stimulus s+i and responds with the best action a+i with higher probability as its experience increases as (1 − p)t. The same scheme also describes an observer, who responds to social cues s−i, choosing the best response a−i with higher probability as its experience of social interactions increases as pt. We define this first type of observer/mind-reading strategy as P.
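The difference in accumulated experience between the two roles can be illustrated with a minimal sketch. It assumes stimuli of intensity x = 1 and an exponential learning curve of the form 1 − e^(−λl · experience), as used for the simplified case in the Results; the function names are ours.

```python
import math


def learned_fraction(lam_l: float, experience: float) -> float:
    """Probability of a correct association after a given amount of experience.

    ASSUMPTION: exponential learning curve, intensity x = 1.
    """
    return 1.0 - math.exp(-lam_l * experience)


def actor_learning(lam_l: float, p: float, t: float) -> float:
    """As-actor experience accumulates as (1 - p) * t."""
    return learned_fraction(lam_l, (1.0 - p) * t)


def p_observer_learning(lam_l: float, p: float, t: float) -> float:
    """A P-observer's social experience accumulates as p * t."""
    return learned_fraction(lam_l, p * t)
```

For any p below 0.5 the as-actor mapping is learned faster than the as-observer one, which is the asymmetry that simulative strategies exploit.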

Detailed description of simulative strategies

Besides social interaction, an observer can also use its own experience as an actor to infer how an individual would respond to a stimulus s+i. Thus we contrast strategy P with a simulative strategy S, in which an observer takes advantage only of the information acquired as an actor, i.e. its own \({\hat{s}}_{+i}\to {\hat{a}}_{+i}\) mapping. Since this circuit leads, when the individual is an actor, to action a+i, a potential drawback of this strategy is that this action might actually be performed by the observer, resulting in a coordination event. This could be avoided if the underlying representation \({\hat{a}}_{+i}\) leading to a+i is inhibited. In our model this inhibition is allowed to evolve (see Results and Appendix). To describe the recruitment of the as-actor network after a social cue \({\hat{s}}_{-i}\) has been perceived, we introduce two further cognitive functions, γs and γa (Fig. 1d): γs represents the probability of a correct association between an observed social cue and an as-actor stimulus \(({\hat{s}}_{-i}\to {\hat{s}}_{+i})\); γa represents the probability of performing an appropriate social response, given a correct inference about the actor’s action \(({\hat{a}}_{+i}\to {\hat{a}}_{-i})\). For simplicity the efficiency of these processes is described by fixed parameters named after the functions, γs and γa. However, it is possible to devise different variations of the model. For example, γa could improve through learning (Table 2, Appendix 5). Finally, we contrast strategies P and S with a Fixed strategy (F), representing observers who employ a fixed mapping \(\hat{s}\to \hat{a}\) optimized by evolution, rather than learning. Hence, their fitness depends entirely on the variability of the Environment (see below).

Environment and learning

In the deterministic model we use a continuous-time model, where both learning and payoffs depend on the amount of time spent in a given interaction type. This corresponds to individuals experiencing a large number of interactions. Stochasticity in the amount of interaction, stimuli and in the learning process is addressed later in our evolutionary simulations, implementing computational agents with simple learning rules. Learning, and thus the efficiency of individuals’ responses, depends on the time t spent experiencing a given set of stimulus-action responses. The environmental state specifies the best response for each stimulus, and is thus represented by a mapping S → A. In an environment that changes at rate λe, with individuals dying at a rate λd = 1, the time experienced by an individual in a given environmental state is distributed exponentially with rate λ = λd + λe. For simplicity, we assume that environmental states are uncorrelated, so that individuals have to learn the appropriate reactions independently for each. Since F-strategies rely on a fixed mapping, their fitness is proportional to the frequency of the most frequently visited environmental state. To be conservative in favor of F-strategies we assume that this is always visited once, so that it is the only experienced environment when \({\lambda }_{e}\ll {\lambda }_{d}\).
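A short worked equation may help connect the exponentially distributed time in a state to the expected efficiency of learning. It assumes an exponential learning curve \(1-{e}^{-rt}\) with a generic effective rate r, a symbol introduced here only for illustration:

```latex
\mathbb{E}\left[1-e^{-rt}\right]
  = \int_{0}^{\infty} \lambda e^{-\lambda t}\left(1-e^{-rt}\right)\,dt
  = 1-\frac{\lambda}{\lambda+r}
  = \frac{r}{\lambda_{d}+\lambda_{e}+r},
\qquad \lambda=\lambda_{d}+\lambda_{e}.
```

Setting \(r=p{\lambda }_{l}\), the effective learning rate of an observer that learns only during social interactions, gives \(p{\lambda }_{l}/({\lambda }_{d}+{\lambda }_{e}+p{\lambda }_{l})\), the factor that appears in the closed-form expression quoted for strategy P in the Results.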

Results

Simulative strategies dominate in more complex environments

In our model a mind-reading strategy is determined by the mind-reading strategy-type and by a number of continuous traits, which affect the level of inhibition of coordination. We focus on two main questions: (1) Under which circumstances are as-actor networks recruited for mind-reading? (2) Can emotional contagion evolve as a consequence of mind-reading? The first question is addressed here by tracking the frequency of the different strategy-types, \(F\), \(P\) and \(S\), in an infinite population. We address the second question in the next section, performing an evolutionary invasion analysis on the continuous traits influencing simulative strategies, \({u}_{D}\) and \({u}_{B}\).

First, we compute the expected probabilities of a B response as an actor, and of D and C responses as an observer using one of the three different strategies, indicated by the superscripts P, F and S. These determine the fitness of the mind-reading strategies, following Eq. 1. We report here expressions for the simplified case where every stimulus has intensity x = 1 and is sufficient for a correct response given that an individual has enough experience, i.e. α(1) = 1 and \(\lim_{t\to \infty }\,l(1,t)=1\), with \(l(1,t)={l}_{t}(t)=1-{e}^{-{\lambda }_{l}t}\). In this case the payoff of an actor is

while the expression for the fitness of an observer depends on its strategy, indicated as superscript:

with \({l}_{x}(x)=1-{e}^{-{\rho }_{l}}\). Notice that the payoffs of all observers depend on the behavior of the observed actors \({P}_{B}^{\circ }\), but not on other observers. Thus, when PB differs between individuals with different mind-reading strategies the evolutionary dynamics of this system are frequency-dependent. We explore this case in the appendix, where we show that different mind-reading strategies can be bistable. Here, we restrict to the simpler frequency-independent case, for which all main results hold. In this case, we can write the replicator dynamics of the system as:

where j indicates one of the strategies P, F or S, and where \(\varphi ={y}^{F}\,{\pi }^{F}+{y}^{P}\,{\pi }^{P}+{y}^{S}\,{\pi }^{S}\) is the average fitness, and \({\varphi }_{-}={y}^{F}\,{\pi }_{-}^{F}+{y}^{P}\,{\pi }_{-}^{P}+{y}^{S}\,{\pi }_{-}^{S}\) is the average of the as-observer components of the payoff. This system is characterized by transcritical bifurcations: each strategy dominates when its social fitness π− is higher than those of other strategies. Thus, we can explore under which conditions the different strategies would evolve by simply comparing the expression for the social fitness in Eqs 4–6 (Fig. 2a). These expressions reflect the different sources of information that the three strategies use to cope with uncertainty, which we explore here in the form of environmental variability, behavioral complexity, and inter-individual differences.
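The frequency-independent dynamics can be explored numerically with a minimal sketch. It uses the standard replicator form dy^j/dt = y^j (π^j − φ) with constant payoffs, which we assume matches the structure described above; the Euler integration scheme, the step size and the function names are our own choices, not the paper's.

```python
def replicator_step(y, pi, dt):
    """One Euler step of dy_j/dt = y_j * (pi_j - phi)."""
    phi = sum(yj * pj for yj, pj in zip(y, pi))  # average fitness
    new = [yj + dt * yj * (pj - phi) for yj, pj in zip(y, pi)]
    total = sum(new)                             # guard against numerical drift
    return [v / total for v in new]


def run_replicator(y0, pi, dt=0.01, steps=20000):
    """Integrate the dynamics from initial frequencies y0 with fixed payoffs pi."""
    y = list(y0)
    for _ in range(steps):
        y = replicator_step(y, pi, dt)
    return y
```

Starting from equal frequencies of F, P and S with hypothetical payoffs 1.0, 1.5 and 2.0, the strategy with the highest payoff approaches fixation, as expected when payoffs do not depend on strategy frequencies.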

Figure 2. Evolutionary dynamics of the three mind-reading strategies, F (black), P (gray) and S (white), as a function of average time spent in a single environmental state (\(\bar{t}=1/({\lambda }_{e}+{\lambda }_{d})\), x-axis) and the fraction of effective as-observer vs as-actor stimuli (p−, y-axis). Gray dots indicate the combinations of parameters for which the evolutionary dynamics are shown in the ternary plots, when S (right vertex), P (top) or F (left) dominate. Here we consider a fixed uB, hence a single optimal S-strategy S* exists, independently of the frequencies of other strategies. In (a) the parameters are uB = 0.3, \({\rho }_{a}=13.8155/{u}_{B}\), normally distributed x with mean 0.5 and deviation 0.1, ρl = 5, λd = 1, \({d}^{-}={d}^{+}=5\), \({c}^{-}={c}^{+}=3\), \({\lambda }_{l}=50\). In (b) the learning rate is λl = 10, corresponding to the presence of 5 times more stimuli. In (c,d) individuals differ from each other in their responses with average probability ψ = 0.5. In addition, in (d) individuals interact twice as often with individuals with the same behavior.

In Fig. 2a we modify environmental variability through the parameter λe, the rate of environmental change. Strategy F does not rely on learning, but on a fixed mapping optimized by evolution. Hence while this strategy is very efficient for predictable environments (low λe), it cannot adapt to new environmental states. This is apparent in the term \({\lambda }_{d}/({\lambda }_{d}+{\lambda }_{e})\) in Eq. 5, which coincides with the fraction of time spent in a single predictable environmental state. Conversely, both strategy P and S use learning to adapt to new environments, and dominate when environmental variation increases. However, P learns only during social interactions, and the probability of successful D responses decreases as p decreases. For this reason, S evolves at lower values of p. Remarkably, S outperforms all other strategies as complexity increases. As information about the social context decreases, the experience gained as an actor becomes more valuable. This result is exemplified by simple analytical expressions obtainable in the simple case of an innate as-actor behavior. In this case the fitness of strategy S is independent of complexity, whereas the fitness of an observer using F or P strategies is \({d}^{-}\,{\lambda }_{d}/({\lambda }_{d}+{\lambda }_{e})\) versus \({d}^{-}\,p\,{\lambda }_{l}/({\lambda }_{d}+{\lambda }_{e}+p{\lambda }_{l})\), respectively. Both of these decrease with environmental variability λe, whilst that of strategy P also decreases with reduced opportunity of direct as-observer learning p. Therefore S-strategies dominate when environmental complexity increases. This pattern is consistent across the assumptions and models tested. In general, learning as an actor is faster than as an observer (supplementary material section 1.5.4), due to the uncertainty involved in social cues. Information about other individuals is inherently noisy or inaccurate, since an observer may not know precisely what stimulus or internal state characterizes an actor, or simply might misperceive a social cue. Hence, in complex environments (high λe) or when social stimuli are noisy and inaccurate (low p), using information acquired solely through social interactions may not suffice, requiring a large number of learning instances. Similar results are obtained when individuals can switch from S to P over their lifespan depending on the available information (Appendix).
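The closed-form expressions for the innate as-actor case can be compared directly in a minimal sketch. The F and P formulas are the ones quoted in the text, written with a general λd. The value `pi_S` is a hypothetical constant standing in for the complexity-independent fitness of strategy S, whose expression is not given in this passage; all function names are ours.

```python
def observer_fitness_F(d_minus, lam_d, lam_e):
    """Fixed strategy: payoff scales with time spent in the predictable state."""
    return d_minus * lam_d / (lam_d + lam_e)


def observer_fitness_P(d_minus, p, lam_l, lam_d, lam_e):
    """Associative strategy: learns only during social interactions (rate p * lam_l)."""
    return d_minus * p * lam_l / (lam_d + lam_e + p * lam_l)


def best_strategy(pi_S, d_minus, p, lam_l, lam_d, lam_e):
    """Return the label of the strategy with the highest as-observer fitness.

    pi_S is a HYPOTHETICAL constant fitness for the simulative strategy.
    """
    fitness = {
        "F": observer_fitness_F(d_minus, lam_d, lam_e),
        "P": observer_fitness_P(d_minus, p, lam_l, lam_d, lam_e),
        "S": pi_S,
    }
    return max(fitness, key=fitness.get)
```

With d− = 5, λl = 50, λd = 1 and a hypothetical `pi_S` of 3, strategy F wins in a static environment (λe = 0, p = 0.1), strategy S wins when the environment changes quickly and social information is scarce (λe = 9, p = 0.1), and strategy P wins at intermediate variability with abundant social information (λe = 1, p = 0.5).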

The effect of behavioral complexity is manipulated by varying the learning rate, that we assume to be inversely proportional to the number of stimuli to be learned. As behavioral complexity increases, S strategies are favored (Fig. 2b).

We then explored the effect of differences between individuals, which could arise because of differences in genetics, social status, or simply experience between the observer and the actor. These differences are detrimental to S strategies, since an S-observer infers the behavior of other individuals from its own, possibly drawing incorrect inferences if the two individuals differ in their responses to a given environmental stimulus, i.e. their own environmental state. We implemented these differences by using a probability ψ that individuals differ in the response to a particular as-actor stimulus (i.e. two different individuals would respond to a stimulus with two different optimal actions). S-observers can make correct inferences only when the focal observer and the observed actor share the same behavioral response, and fail in a fraction ψ of the interactions. Thus, inter-individual differences negatively impact the fitness of S-strategies, reducing the range of parameters for which they would evolve (Fig. 2c). However, S-strategies still dominate in variable environments, and when p is low (Fig. 2c). This occurs because inter-individual differences affect not only the fitness of S strategies, but also that of P strategies. In fact, only a fraction 1 − ψ of the individuals share the same response, and therefore the probability of encountering a specific stimulus-action pair is reduced by a factor 1 − ψ, requiring longer times to learn the behavior of the different individuals and mimicking the effects of environmental or behavioral complexity.
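The two effects of ψ described above can be sketched under simplifying assumptions of our own: failed S-inferences earn a payoff of zero, the P-observer's effective learning rate is scaled by 1 − ψ, and the as-actor behavior is innate so that the closed-form P expression applies. The constant `pi_S` is a hypothetical baseline fitness for strategy S, and the function names are ours.

```python
def fitness_S_with_differences(pi_S, psi):
    """ASSUMPTION: inferences fail with probability psi and then pay nothing."""
    return (1.0 - psi) * pi_S


def fitness_P_with_differences(d_minus, p, lam_l, lam_d, lam_e, psi):
    """ASSUMPTION: each stimulus-action pair is met (1 - psi) times as often."""
    rate = (1.0 - psi) * p * lam_l
    return d_minus * rate / (lam_d + lam_e + rate)
```

With ψ = 0.5, d− = 5, p = 0.1, λl = 50, λd = 1, λe = 9 and a hypothetical `pi_S` of 3, the S fitness halves to 1.5 while the P fitness falls from about 1.67 to 1.0, so S still outperforms P in this variable, information-poor setting.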

Note that all these results can be easily extended to cases in which individuals interact preferentially with some other individuals in a population, because of group structure, relatedness, or social status and dominance relationships. For example, individuals might interact more frequently with individuals with similar behavior. In this case, inter-individual differences will be partially masked and exert a reduced effect on S-strategies, which in turn would be favored (Fig. 2d).

In conclusion, as environmental complexity increases and self information becomes valuable, S strategies are able to invade even if this causes more coordination. In our simplified model, an upper bound for the probability of coordination is:

Accidental coordination is sustained at the evolutionary equilibrium

In our model coordination is costly for the observer. Eq. 8 establishes an upper bound to the amount of coordination that can be tolerated by a simulative strategy. We can now ask under what conditions accidental coordination will be present (PC > 0) by looking at how inhibition evolves.

With efficient inhibition, an S-observer will be able to extract information from its as-actor network, without generating the response usually exhibited as an actor, here C. These cases can be seen as True-Negatives, as inhibition is applied correctly to avoid a costly and inappropriate as-actor response (here C, see Fig. 3a). This inhibition of the as-actor network can be achieved in different ways, which we categorize here by the types of errors they might incur, i.e. false positives and false negatives; each type faces a different evolutionary trade-off. A first mechanism is to modulate how the as-actor network is recruited as an observer, potentially affecting the accuracy of social inferences: the more the as-actor network is allowed to take over, the more accurate the simulation; however, the chance that an as-actor action is triggered increases, potentially leading also to an increased risk of coordination (PC, false positives, see Fig. 3a,c) together with accurate inferences (PD, true negatives). A second mechanism is to modify the structure of the as-actor network itself to make it easier to inhibit; however, this would potentially lead to accidental inhibition of actions when the individual is an actor (false negatives, see Fig. 3a,b), decreasing PB as well as PC.

Figure 3. (a) Trade-offs constraining the recruitment of the as-actor network by simulative strategies. The as-actor network can be activated (left) or inhibited (right). For an actor (top), the inhibition of the as-actor network prevents an appropriate response B (False Negative), while if activated B can be performed (True Positive). When the individual is not an actor but an observer (bottom), the activation of the as-actor network leads the observer to respond as an actor (C, False Positive). (b) Direction of the selection gradient for a resident population with traits uD, the intensity of simulation (x-axis), and uB, the inhibition threshold (y-axis). (c) Frequencies of D (PD, blue) and C (PC, red) responses, for a fixed value of \({u}_{B}={u}_{B}^{\ast }\). In this case uD evolves maximizing the as-observer fitness. (d) PD (blue), PC (red) and PB (black) for a fixed value \({u}_{D}={u}_{D}^{\ast }\). A singular strategy occurs when the sum of the as-actor component (proportional to PB) and as-observer component (a payoff-weighted sum of PD and PC) of the fitness is highest.

In the following, we model these two general inhibition mechanisms as two one-dimensional continuous traits, uD and uB respectively. Of these, uD can be seen as the intensity of the recruitment of the as-actor network in the as-observer context, or the extent of shared representation. The second, uB, can be seen as an activation threshold, inhibiting as-actor responses when the activation of the as-actor network is below the threshold. In the discussion we address the generality of results from this simple model.

When uB is fixed, PB is identical for all individuals, thus the system is frequency independent. In this case, the fitness of a mutant strategy S with uD value \({u}_{D}^{m}\) is independent of the other S strategies present in the population. Therefore, a single S strategy dominates and reaches fixation, leading to an evolutionary equilibrium value \({u}_{D}^{\ast }\), at which the selection gradient, i.e. the derivative of the invasion fitness \(\frac{d{\pi }_{-}^{S}({u}_{D}^{\ast })}{d{u}_{D}}\), equals 0. This is the global maximum of the function \({\pi }_{-}^{S}({u}_{D})\), and is both an evolutionarily stable strategy (ESS) and convergence stable (CS).

We see that in most cases this equilibrium is internal, i.e. uD > 0, leading to a small level of accidental coordination (0.5–2% of the interactions in the examples tested), even though inhibition is allowed to evolve and other strategies can be used (Fig. 3b–d). We perform a general evolutionary invasion analysis in section 2 of the Appendix, where we identify the conditions required for the evolution of an internal equilibrium with accidental coordination. However, to explain intuitively why this occurs, we obtain here analytical expressions for \({u}_{D}^{\ast }\) by assuming specific expressions for the cognitive functions \(\alpha ({u}_{D},\,x)\) and \(l({u}_{D},\,x,\,t)\) in Eq. 4. In particular, we explore the simplified case of stimuli with identical intensities equal to 1, with step-linear dependencies on the representation intensities for the activation/inhibition and the learning function:

In this simplified case, accidental coordination is fully inhibited if \({u}_{D}^{\ast }\le {u}_{B}\), whereas it occurs if \({u}_{D}^{\ast } > {u}_{B}\), and the higher uD, the more coordination. When uD < uB evolution always leads to higher values of uD, whereas when \({u}_{D}\ge (1+{u}_{B}{\rho }_{a})/{\rho }_{a}\) it leads to lower values, i.e. the selection gradient is respectively always positive \((\frac{d{\pi }_{-}^{S}({u}_{D})}{d{u}_{D}}={\gamma }_{a}\,{d}^{-})\) or always negative \((\frac{d{\pi }_{-}^{S}({u}_{D})}{d{u}_{D}}=-\,{c}^{-})\). For intermediate values \(({u}_{B}\le {u}_{D}\le (1+{u}_{B}{\rho }_{a})/{\rho }_{a})\) instead, a singular point satisfies:

Thus, \({u}_{D}^{\ast }\) evolves towards the maximum of the as-observer fitness:

Since accidental coordination evolves when \({u}_{D}^{\ast } > {u}_{B}\), this indicates that accidental coordination can evolve even if costly for the observer, provided that \({\rho }_{a} < \frac{{\gamma }_{a}}{{u}_{B}}\frac{b}{b+c}\). This condition indicates that coordination can be fully inhibited only if 1/ρa is high enough, i.e. the slope of the inhibition function is very steep and discriminative (Appendix, Fig. S1). Thus, this simplified case shows that evolution will lead to higher values of uD, and in turn to more accidental coordination, as long as the benefit of more accurate inferences outweighs the cost of a higher risk of accidental coordination events, which here increases proportionally to 1/ρa at increasing values of uD (Fig. 3c).
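The logic of this trade-off can be illustrated numerically with a deliberately simple toy model. The sketch below is not the model analysed in this paper: the saturating accuracy function, the parameter names (`gain`, `slope`, `benefit`, `cost`) and all numerical values are assumptions made only for illustration, and `slope` is a hypothetical stand-in for how quickly the coordination probability rises above the threshold rather than a reproduction of ρa.

```python
import math

def observer_fitness(u_d, u_b, gain, slope, benefit, cost):
    """Toy as-observer fitness: inference benefit minus coordination cost."""
    # Assumed saturating inference accuracy (not the paper's learning function).
    accuracy = 1.0 - math.exp(-gain * u_d)
    # Step-linear coordination probability: zero below the threshold u_b.
    p_coord = min(1.0, max(0.0, slope * (u_d - u_b)))
    return benefit * accuracy - cost * p_coord

def best_recruitment(u_b, gain, slope, benefit, cost, steps=100_000, u_max=1.0):
    """Grid search for the u_d in [0, u_max] that maximizes the toy fitness."""
    grid = (u_max * i / steps for i in range(steps + 1))
    return max(grid, key=lambda u: observer_fitness(u, u_b, gain, slope, benefit, cost))

# Hypothetical parameter values, chosen only for illustration.
params = dict(u_b=0.2, gain=2.0, benefit=5.0, cost=4.0)
shallow = best_recruitment(slope=1.0, **params)  # weakly discriminative inhibition
steep = best_recruitment(slope=3.0, **params)    # sharply discriminative inhibition
print(f"shallow slope: u_d* = {shallow:.3f} (threshold u_b = {params['u_b']})")
print(f"steep slope:   u_d* = {steep:.3f} (threshold u_b = {params['u_b']})")
```

With these assumed values, the optimum sits above the threshold when the slope is shallow (some accidental coordination is tolerated) and exactly at the threshold when the slope is steep (coordination is fully inhibited), which mirrors the qualitative condition described above.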

Remarkably, accidental coordination can evolve even when the activation threshold can also evolve, alone (Appendix, section 2.2) or simultaneously with uD (Appendix, section 2.3). To see why, note that whenever uB evolves to values higher than \(1-{\rho }_{a}{u}_{D}\), actions performed as an actor can also be inhibited (Fig. 3d). This behavior is a specific example of a more general trade-off between true negatives and false negatives: increasing the strength of inhibitory mechanisms can result in the unspecific inhibition of actions that could be advantageous for the observer. In particular, in the simplified case, the equilibrium value of uB, i.e. how selective the activation threshold is, decreases proportionally to (1 − p)/p, the odds of a potential false negative (Appendix section 2.2).

An important biological note is that this simplified model is likely conservative. First, other as-observer actions could also risk being inhibited. Second, in real-life scenarios, stimuli and the activation of neurophysiological pathways are also subject to noise and variability. This uncertainty increases the chance of false negatives and false positives, and thus likely leads to scenarios that are better represented by smoother, more continuous activation functions than those of the simplified model presented above. In these cases, which can be represented mathematically with a continuous activation function (e.g. a sigmoid) or normally distributed stimulus intensities, a small probability of accidental coordination always evolves (Appendix, section 2).
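The effect of noise can be made concrete with a minimal sketch. It assumes, purely for illustration, that the activation of the as-actor network is normally distributed around 1 when acting (the unit stimulus intensity of the simplified case) and around uD when observing, and that an action is triggered whenever the activation exceeds the threshold uB. The function names and the noise level `noise_sd` are hypothetical and do not come from the Appendix.

```python
import math

def prob_above(mean, threshold, noise_sd):
    """P(X > threshold) for X ~ Normal(mean, noise_sd)."""
    z = (threshold - mean) / (noise_sd * math.sqrt(2.0))
    return 0.5 * math.erfc(z)

def response_rates(u_d, u_b, noise_sd=0.2, actor_intensity=1.0):
    """Return (P_B, P_C): rates of the as-actor action when acting and when observing."""
    p_b = prob_above(actor_intensity, u_b, noise_sd)  # true positive as actor
    p_c = prob_above(u_d, u_b, noise_sd)              # false positive as observer
    return p_b, p_c

# Raising the threshold lowers accidental coordination but also blocks real actions.
for u_b in (0.5, 0.7, 0.9):
    p_b, p_c = response_rates(u_d=0.3, u_b=u_b)
    print(f"u_b={u_b:.1f}  P_B={p_b:.4f}  P_C={p_c:.6f}  missed actions={1 - p_b:.4f}")
```

Under these assumptions P_C never reaches exactly zero for a finite threshold, and every increase in uB that suppresses P_C also raises the fraction of missed as-actor actions.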

Finally, note that these results do not depend on the specific functions used for the simplified model; they reflect general payoffs underlying the trade-off between increasing the number of true positives and negatives (appropriate responses B and D, respectively) and the risk of false positives (C) and false negatives (unspecific inhibition of other actions, \(\varnothing \)). Accordingly, we show a generalization of these results in sections 2.1–2.3 of the Appendix. Furthermore, we show that these results hold even for more complex models of inhibition and social responses (sections 2.4 and 4 of the Appendix).

Kin selection and indirect benefits of coordination

We also investigated the interaction between simulative strategies and kin selection. To this aim, we modeled the indirect fitness benefits of kin selection by adopting a payoff structure similar to that of Taylor and Nowak22, using r, an abstract assortment coefficient that can represent either relatedness or group structure22. We focus on the evolution of uD, the intensity of the recruitment of the as-actor network. The transformed payoff structure is shown in Table 3.

Notice that as r changes, the optimal strategy for the observer, which we called D, could change. For simplicity we first ignore this effect, assuming that D does not change with r.
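As a rough illustration of how an assortment coefficient can reweight payoffs, the sketch below uses a plain inclusive-fitness weighting, in which the focal individual's payoff is its own payoff plus r times the partner's. This is an assumption made for illustration: it is not a reproduction of Table 3, it omits any normalization, and the helper names and the values of c− and c+ are hypothetical.

```python
def inclusive_payoff(own, partner, r):
    """Payoff to the focal individual plus r times the partner's payoff."""
    return own + r * partner

def observer_payoffs(r, c_minus, c_plus, d_minus, d_plus):
    """Assortment-weighted observer payoffs for coordination (C) and inference (D)."""
    # Assumed signs: the observer pays c- for coordinating while the actor gains c+.
    coordination = inclusive_payoff(-c_minus, c_plus, r)
    # Assumed signs: the observer gains d- from a correct inference, the actor gains d+.
    inference = inclusive_payoff(d_minus, d_plus, r)
    return coordination, inference

# Hypothetical values; d- = d+ = 5 mirrors the setting quoted for Fig. 4.
for r in (0.0, 0.25, 0.5):
    coord, infer = observer_payoffs(r, c_minus=2.0, c_plus=8.0, d_minus=5.0, d_plus=5.0)
    print(f"r={r:.2f}  coordination={coord:+.2f}  inference={infer:+.2f}")
```

In this toy weighting, coordination stops being a net cost for the observer once r exceeds c−/c+, which is the familiar form of Hamilton's rule.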

Empirical evidence suggests that empathy, contagious yawning and emotional contagion are stronger with kin or in-group members, a phenomenon known as the empathic gradient. In our model, this would correspond to higher levels of recruitment of the as-actor network (uD) and coordination (PC) at increasing r. Therefore we investigate the effects of r on the recruitment of the as-actor network, uD. Our purpose is to estimate the effect of r on the evolutionary equilibrium \({u}_{D}^{\ast }\), i.e. \(\frac{d{u}_{D}^{\ast }}{dr}\).

This can be obtained by implicit differentiation of the invasion fitness23 (see Appendix). We show that the condition necessary for an empathic gradient analogous to that observed empirically is c+/c− > d+/d−. Note that the effect of relatedness on coordination is non-trivial: r can either increase (Fig. 4, high values of c+/c−) or even decrease uD and PC (Fig. 4, low values of c+/c−). An increase is observed provided that more efficient mind-reading, i.e. a higher proportion of D responses, provides a substantial benefit, either direct and aimed at defection (d−) or indirect and aimed at cooperation (rd+). Hence a benefit of mind-reading might explain observed behavioral patterns of emotional contagion and empathy. The patterns described above are even stronger when γa is small (Appendix).
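For readers unfamiliar with the technique, the generic implicit-differentiation step can be written as follows. The symbol G is introduced here only as shorthand for the selection gradient and does not appear in the Appendix; the expression is the standard implicit function result, not the model-specific formula.

```latex
G(u_D, r) \equiv \frac{\partial \pi_{-}^{S}(u_D, r)}{\partial u_D},
\qquad
G\bigl(u_D^{\ast}(r), r\bigr) = 0
\;\Longrightarrow\;
\frac{d u_D^{\ast}}{d r}
  = - \left. \frac{\partial G / \partial r}{\partial G / \partial u_D} \right|_{u_D = u_D^{\ast}} .
```

At an internal fitness maximum \(\partial G/\partial {u}_{D} < 0\), so the sign of \(\frac{d{u}_{D}^{\ast }}{dr}\) is the sign of \(\partial G/\partial r\): relatedness raises the equilibrium recruitment exactly when it raises the selection gradient at the equilibrium.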

Effects of relatedness (x-axis) on the intensity of simulation uD (level curves, top values) and on the probability of coordination (bottom values, in brackets), at varying payoffs. Payoffs are changed by varying the ratio of the coordination payoffs for actor and observer, \({c}^{+}/{c}^{-}\), while \({d}^{+}/{d}^{-}\) is constant. Level curves indicate regions with different values of uD for the internal equilibrium (inhibition), when this exists. The shaded area indicates regions of the parameter space where full coordination (PC = 1) is evolutionarily stable. This can overlap regions of the parameter space where an internal equilibrium is also stable (inhibition + full coordination), or not (full coordination, top right corner, in dark gray). In this plot we considered a sigmoid activation function with \({d}^{-}={d}^{+}=5\) and \({\gamma }_{a}=1\).

We see that for high relatedness, when cooperation is evolutionarily advantageous, inhibition can go to 0 and the observer fully coordinates (Fig. 4, dark gray area). This effect could be called empathy: the observer does not inhibit simulated responses towards kin. However, one should remember here that we assumed that D is the same for higher values of r, while in this context it is of course possible that another strategy Dr, other than C or D, would be optimal for cooperation. In this case our framework can be applied without changes: an observer would simply use simulative strategies to infer the actor’s response and the best action to cooperate, while inhibiting coordination. Notice that for some intermediate values of r, full coordination and an internal equilibrium where coordination is inhibited can both be stable, i.e. the system is bistable (Fig. 4, light gray).

Discussion

Simulation is evolutionarily advantageous in complex environments

We have shown that private as-actor information, recovered through a partial recruitment of as-actor neural circuits, confers an important selective advantage in complex social contexts, when information on other individuals’ behavior is limited. Under these circumstances, simulative strategies evolve. A side effect of simulative strategies is that an observer could accidentally perform the same response as an actor, which we define here as accidental coordination. We showed that even when this accidental coordination occurs, the fitness benefit of making more accurate inferences about others can buffer its costs. It has been questioned whether mirroring processes have evolved in the context of mind-reading, or simply emerged as by-products of domain-general associative learning24. Here we have shown that alternative strategies, which rely only on information acquired as observer, are often outperformed by strategies relying on as-actor experience. Efficient mind-reading has a significant impact on the fitness of an individual. Thus it is unlikely that such an important process has not been subjected to the forces of evolution25. We have also observed that selective constraints act on inhibitory mechanisms of the as-actor neural network, avoiding action interference and modulating accidental coordination. Therefore even if associative learning plays a key role in the development of mirroring, selection is expected to shape the modulation of “shared representations”. This is supported by the evidence that dysfunctional inhibition is pathological.

Brain regions involved in mind-reading and action or emotion recognition are often grouped into mentalizing regions (e.g. TPJ, dmPFC) and mirroring or empathy-related regions, which are also recruited as actors (e.g. somatosensory cortex, premotor and inferior parietal cortex)21,26. Both these circuits likely operate together in most real-life tasks. Our model shows in which circumstances the latter might be beneficial and favored for mind-reading, by compensating for the lack of social information. Clearly, in many instances learned information is abundant and a recruitment of the as-actor network is not necessary. Our model shows that this happens if enough social information is available, when enough learning opportunities are available and in easily predictable contexts.

Evolutionary trade-offs hinder a complete inhibition of coordination

We have shown that small probabilities of coordination can be maintained even when costly, and when inhibition is allowed to evolve (Fig. 2a). The model suggests that two evolutionary trade-offs might hinder the efficiency of inhibitory mechanisms of contagion. In the first trade-off (D-vs-C, captured by trait uD, Fig. 3a), important as-actor information might be retrieved only by faithfully recruiting the as-actor neural network. Partially renouncing the information stored in as-actor circuits by inhibiting them upstream might decrease the accuracy of the simulative process itself and in turn that of social inferences27 (Fig. 3c). Hence, excessively reducing the recruitment of the as-actor network in order to avoid accidental coordination is disadvantageous. Note that the only underlying requirement for the emergence of this trade-off is that more intense or accurate stimulus representations lead to more intense or accurate responses. This requirement is biologically plausible and a common feature of models of neurophysiological responses28, besides being supported by empirical evidence29,30. A challenge for future research is to assess the validity of this principle in the context of simulative strategies. Note, however, that several studies have already provided evidence that a reduced or impaired recruitment of the as-actor network might result in reduced social inferences. For instance, a pathologically impaired activation of the amygdala results in an impaired ability to infer disgust or fear in other individuals31. In addition, this might be exemplified by phenomena like motor interference (in which the intensity/accuracy of the recruitment of the as-actor network is affected by another stimulus), and pathologies such as alexithymia and autism, which affect the access to self representations of internal states and in turn perspective taking4,26,31,32,33. More studies investigating the role of mirror neurons in action recognition could provide further evidence in support of this assumption.

The second trade-off (B-vs-C, captured by trait uB) is that a downstream mechanism inhibiting coordination must be able to inhibit the actions driven by internal neural configurations (or motivational states) that are usually experienced as actors, when certain alternative responses are instead advantageous (Fig. 3b). Such an inhibitory mechanism has to be extremely specific for the as-observer context, with any non-specificity hindering its evolutionary advantage. One can ask if this is realistic. Why would an organism not be able to conditionally inhibit a particular response in a social context? Here, it is important to remember that its own decision-making machinery is being used as a “black box”, and that is where the gain in using the simulative strategy comes from: the inferred responses are not known prior to the simulation. This makes completely specific inhibition relatively hard to achieve, since without sufficient information the downstream responses are not known a priori. In particular, the autonomous and more peripheral components of behaviors with a strong emotional7 and physiological16 valence might be particularly hard to inhibit efficiently, as in contagious distress. Conversely, a complete inhibition might be achievable when sufficient social information is available, as we show with a temporal model of inhibition (Appendix, section 2.4) and as is documented empirically34,35,36.

Note that for didactic purposes we presented these trade-offs in terms of simple evolvable traits (uD and uB), each corresponding to a specific characteristic of a cognitive process. However, the trade-offs that we identified are not determined by the specific implementation or the specific cognitive functions used, as shown by a general evolutionary invasion analysis of the model (Appendix section 2). For example, although we presented uB as the threshold of an activation function, a trade-off between the inhibition of coordination (true negatives) and undesired unspecific inhibition (false negatives, B-vs-C trade-off) would emerge for any form of inhibition, with increasing importance the higher the chance of false negatives. This is particularly relevant for the simulative ‘black-box’: even though an observer would like to inhibit coordination, the specific action to inhibit is unclear before this is inferred via simulation.

Despite these trade-offs, we have shown that coordination is inhibited efficiently in most cases and occurs only occasionally: in 0.1–2% of the total interactions in our model. Hence simulation is relatively efficient, despite leaving room for contagion under conditions with intense above-threshold stimuli or cheap coordination events, where inhibition through learning might not be reinforced, e.g. contagious yawning. Thus, although rare, we suggest that accidental coordination should be considered in null models of social interactions. If a simulative strategy is used for mind-reading, the null behavior of organisms should not necessarily be ‘no action’, but accidental coordination. This model supports a parsimonious bottom-up explanation for the emergence of contagion and rudimentary forms of empathy, without requiring ad-hoc explanations for each specific context, e.g. contagious yawning37. Such an explanation is consistent with the widespread presence of mirroring processes and related forms of coordination, facilitation or contagion, in social cognition.

Empathy: mind-reading in cooperative contexts

Therefore, our model provides two non-mutually exclusive explanations for the evolution of emotional contagion and empathy, i.e. cooperation mediated by the recruitment of as-actor circuits. To illustrate them, we take the example of empathy towards an actor subjected to a painful or stressful stimulus.

First, the as-actor network might simply be recruited by a simulative observer to infer the state of an actor, in a context in which cooperation is evolutionarily advantageous for the observer as well as the actor, e.g. a simulative observer infers the state of a related actor and cooperates.

Second, when coordination is advantageous for the actor but not evolutionarily advantageous for the observer, we have shown that cooperation might still occasionally occur because of a missed inhibition of coordination by a simulative observer. For example, contagious distress can inhibit a conditioned response if this causes pain in another individual38,39, and in primates, grooming relieves the distress of the groomer, as well as the groomee40,41. Hence contagious distress might trigger in the observer a typical helping behavior like grooming, or hinder an action that is painful for the actor. A similar proximate mechanism is aversive-arousal reduction42,43: cooperation occurs when similar emotional responses are induced in an observer, who in turn helps the actor in order to relieve its own perceived distress. This phenomenon likely explains only a small fraction of seemingly altruistic interactions44, consistent with our predictions of a low probability of accidental coordination. Nevertheless, it is of major theoretical interest since no ultimate mechanism promoting cooperation, such as synergistic payoffs18 or assortment mechanisms (e.g. kin or group selection), is required45,46.

We suggest that the two mechanisms could be phylogenetically related. Simulative strategies, by recruiting the as-actor network, provide a simple proximate mechanism to synchronize the actions of an observer to the internal and motivational states of a recipient individual. Our model shows that the recruitment of the as-actor network and coordination increase when cooperation is promoted by assortment. Thus simulative strategies, evolved initially for mind-reading, could have been later co-opted for empathy and cooperation. A potential criticism is that a coordination of internal states and emotional responses is not sufficient for, and may even hinder, cooperation. For example, it has been observed that contagious distress might undermine helping behavior44,47. However, we showed that coordination can evolve even when it is not the best possible cooperative response: coordination offers an evolutionary compromise, by synchronizing the internal state of an observer with that of an actor, and reducing the degrees of freedom for possible responses. For example, the perception of distress in the offspring elicits alertness in parents. From this rudimentary form of state-matching empathy, the activation of shared representations of pain or distress could have evolved to elicit prosocial responses, concurrently with the role of oxytocin in reducing avoidance responses triggered by distress47. This is supported by the evidence that the activation of stress-related responses in the observer often accompanies empathy and cooperation (e.g.48,49).

It has been proposed that parental care played a key role in the evolution of empathy. Our model is consistent with this hypothesis, since parental care would provide both a selective pressure for cooperation and a need to understand the needs of the offspring39,42,47. However, mind-reading benefits are not restricted to parental care, and in the spectrum of social complexity, parent-offspring interactions are relatively simple: because of their limited interaction with the environment, the behavior of offspring is mostly evolved as opposed to learned. Accordingly, instances of parental care exist throughout the animal world even in the absence of apparent empathy (e.g. egg brooding, ritualized parental care50). Thus, even though we cannot rule out that in mammals parental care provided sufficient complexity to render simulative strategies adaptive, we suggest that simulative strategies might have evolved for mind-reading in a more general social context. Only later might kin selection have co-opted them for cooperation21,27,51, or for emotional contagion when coordination is advantageous. These conclusions are supported by neurophysiological evidence, showing that the recruitment of the as-actor network extends beyond the cooperative context4,12, and by behavioral studies, showing that contagion in non-cooperative contexts (e.g. contagious yawning, facial mimicry) is stronger with kin and with unrelated but socially close individuals, with whom empathy and helping behavior (e.g. consolation21,52) are stronger53,54,55,56,57.

Future perspectives

Our model suggests potential directions for new empirical studies that would allow some of its predictions to be tested. For example, we predict that simulative strategies are more advantageous in unpredictable environments. While empirical evidence seems to support the presence of empathy especially in primates and other mammals, a better characterization of the species in which empathy is present would improve this picture. In addition, more studies are needed at both the intra-specific and inter-specific level to assess whether perspective-taking skills correlate with emotional contagion and empathy-driven cooperation. Do species or individuals who simulate more also show more empathy-driven cooperation?

We also showed that specific features of the cognitive processes involved in mind-reading are necessary for, and favour, the evolution of accidental coordination. Thus, more studies would help to clarify when these conditions apply. For instance, we showed that accidental coordination is favored when inhibitory mechanisms incur the risk of false negatives, i.e. inhibiting off-target actions. Thus, studies investigating the specificity of inhibitory mechanisms would be valuable to clarify the role of the B-vs-C trade-off. Furthermore, more studies would be beneficial in assessing the role of as-actor network circuits and the extent to which they are necessary for inferences, for example in the case of mirror neurons. In conclusion, the characterization of inhibition mechanisms and their specificity at the neurophysiological level will help to understand whether and to what extent accidental coordination contributes to prosocial behavior in different species.

Conclusions

Our model explores a simple assumption, that experience as actor can be useful to predict and interpret others’ actions, and shows that this has relevant implications and side-effects. First, we show that strategies relying on this source of information can evolve under many circumstances. Second, we show that a side effect of the evolution of such strategies is that an observer could fail to inhibit the observed behavior, coordinating with the state and actions of the observed actor. These two phenomena provide us with a simple unified perspective on biological phenomena as different as mirror neurons, motor interference, contagious yawning, contagious distress and empathy, and their stronger effects with kin. Many other behaviors, pathological and non-pathological, could possibly be connected. For example, the comorbidity of alexithymia and autism has already been interpreted as the inability to understand others as a consequence of the impaired awareness of one’s own feelings4,26,31,32,33. The fact that information and neural circuits acquired as an actor are used to interpret others’ actions is also apparent for sensory stimuli in the activation of the somatosensory cortex26; for stimuli of different nature in phenomena like mirror touch synesthesia; and possibly even for collective inferences and abstract concepts20,58 and for inanimate or inter-specific entities (e.g. anthropocentrism59). Furthermore, the role of inhibition of the activation of the as-actor network to interpret others’ actions is apparent in pathologies like echopraxia and echolalia14. As an actor’s cognition is embodied, an observer’s cognition is also required to be embodied, despite the risk of accidental coordination10. In conclusion, information acquired as an actor, either learned or evolved, is pivotal to understanding social cognition and its evolution.

References

Krebs, J. R. & Dawkins, R. Animal signals: mind-reading and manipulation. In Behavioral Ecology: An Evolutionary Approach, 380–402 (Oxford Blackwell Scientific Publications, 1984).

Gallese, V. & Goldman, A. Mirror neurons and the simulation theory of mind-reading. Trends Cogn. Sci. 2, 493–501 (1998).

Gallese, V., Fadiga, L., Fogassi, L. & Rizzolatti, G. Action recognition in the premotor cortex. Brain: A J. Neurol. 119(Pt 2), 593–609 (1996).

Pineda, J. A. Mirror Neuron Systems: The Role of Mirroring Processes in Social Cognition (Springer, 2009).

Kohler, E. et al. Hearing Sounds, Understanding Actions: Action Representation in Mirror Neurons. Science 297, 846–848 (2002).

Fogassi, L. et al. Parietal Lobe: From Action Organization to Intention Understanding. Science 308, 662–667 (2005).

Bastiaansen, J. A. C. J., Thioux, M. & Keysers, C. Evidence for mirror systems in emotions. Philos. Transactions Royal Soc. B: Biol. Sci. 364, 2391–2404 (2009).

Keysers, C., Kaas, J. H. & Gazzola, V. Somatosensation in social perception. Nat. Rev. Neurosci. 11, 417–428 (2010).

Preston, S. D. & de Waal, F. B. M. Empathy: Its ultimate and proximate bases. Behav. Brain Sci. 25, 1–20 (2002).

Goldman, A. & Vignemont, F. D. Is social cognition embodied? Trends Cogn. Sci. 13, 154–159 (2009).

Lawrence, E. J. et al. The role of shared representations in social perception and empathy: An fMRI study. NeuroImage 29, 1173–1184 (2006).

Iacoboni, M. et al. Grasping the Intentions of Others with One’s Own Mirror Neuron System. PLoS Biol 3 (2005).

Kilner, J. M., Paulignan, Y. & Blakemore, S. J. An Interference Effect of Observed Biological Movement on Action. Curr. Biol. 13, 522–525 (2003).

Oztop, E., Kawato, M. & Arbib, M. A. Mirror neurons: Functions, mechanisms and models. Neurosci. Lett. 540, 43–55 (2013).

Buchanan, T. W., Bagley, S. L., Stansfield, R. B. & Preston, S. D. The empathic, physiological resonance of stress. Soc. Neurosci. 7, 191–201 (2011).

Engert, V., Plessow, F., Miller, R., Kirschbaum, C. & Singer, T. Cortisol increase in empathic stress is modulated by emotional closeness and observation modality. Psychoneuroendocrinology 45, 192–201 (2014).

Chersi, F., Ferrari, P. F. & Fogassi, L. Neuronal Chains for Actions in the Parietal Lobe: A Computational Model. PLoS ONE 6, e27652 (2011).

Akçay, E., Cleve, J. V., Feldman, M. W. & Roughgarden, J. A theory for the evolution of other-regard integrating proximate and ultimate perspectives. PNAS 106, 19061–19066 (2009).

Nakahashi, W. & Ohtsuki, H. When is emotional contagion adaptive? J. Theor. Biol. 380, 480–488 (2015).

Decety, J. The Social Neuroscience of Empathy (MIT Press, 2009).

de Waal, F. B. M. & Preston, S. D. Mammalian empathy: behavioural manifestations and neural basis. Nat. Rev. Neurosci. 18, 498–509 (2017).

Taylor, C. & Nowak, M. A. Transforming the dilemma. Evolution 61, 2281–2292 (2007).

Otto, S. P. & Day, T. A Biologist’s Guide to Mathematical Modeling in Ecology and Evolution (Princeton University Press, 2007).

Cook, R., Bird, G., Catmur, C., Press, C. & Heyes, C. Mirror neurons: From origin to function. Behav. Brain Sci. 37, 177–192 (2014).

Lotem, A., Halpern, J. Y., Edelman, S. & Kolodny, O. The evolution of cognitive mechanisms in response to cultural innovations. Proc. Natl. Acad. Sci. 114, 7915–7922 (2017).

Bernhardt, B. C. & Singer, T. The Neural Basis of Empathy. Annu. Rev. Neurosci. 35, 1–23 (2012).

Gazzola, V., Aziz-Zadeh, L. & Keysers, C. Empathy and the somatotopic auditory mirror system in humans. Curr. Biol. 16, 1824–1829 (2006).

Haykin, S. S. Neural Networks and Learning Machines (Prentice Hall, 2009).

Palmer, J., Huk, A. C. & Shadlen, M. N. The effect of stimulus strength on the speed and accuracy of a perceptual decision. J. Vis. 5, 1–1 (2005).

Glazewski, S. & Barth, A. L. Stimulus intensity determines experience-dependent modifications in neocortical neuron firing rates. The Eur. J. Neurosci. 41, 410–419 (2015).

Adolphs, R., Tranel, D., Damasio, H. & Damasio, A. Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature 372, 669–672 (1994).

Calder, A. J., Keane, J., Manes, F., Antoun, N. & Young, A. W. Impaired recognition and experience of disgust following brain injury. Nat. Neurosci. 3, 1077–1078 (2000).

Bird, G. et al. Empathic brain responses in insula are modulated by levels of alexithymia but not autism. Brain 133, 1515–1525 (2010).

Hein, G. & Singer, T. I feel how you feel but not always: the empathic brain and its modulation. Curr. Opin. Neurobiol. 18, 153–158 (2008).

Hein, G., Silani, G., Preuschoff, K., Batson, C. D. & Singer, T. Neural Responses to Ingroup and Outgroup Members’ Suffering Predict Individual Differences in Costly Helping. Neuron 68, 149–160 (2010).

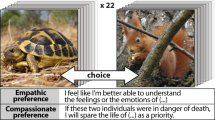

Singer, T. & Klimecki, O. M. Empathy and compassion. Curr. Biol. 24, R875–R878 (2014).

Gallup, A. C. & Gallup, G. G. Jr. Yawning as a brain cooling mechanism: Nasal breathing and forehead cooling diminish the incidence of contagious yawning. Evol. Psychol. 5, 92–101 (2007).

Watanabe, S. & Ono, K. An experimental analysis of “empathic” response: Effects of pain reactions of pigeon upon other pigeon’s operant behavior. Behav. Process. 13, 269–277 (1986).

de Waal, F. B. Putting the Altruism Back into Altruism: The Evolution of Empathy. Annu. Rev. Psychol. 59, 279–300 (2008).

Aureli, F. & Yates, K. Distress prevention by grooming others in crested black macaques. Biol. Lett. 6, 27–29 (2010).

Palagi, E., Leone, A., Demuru, E. & Ferrari, P. F. High-Ranking Geladas Protect and Comfort Others After Conflicts. Sci. Reports 8 (2018).

Batson, C. D. The Naked Emperor: seeking a more plausible genetic basis for psychological altruism. Econ. Philos. 26, 149–164 (2010).

Pérez-Manrique, A. & Gomila, A. The comparative study of empathy: sympathetic concern and empathic perspective-taking in non-human animals. Biol. Rev. 93, 248–269 (2018).

Batson, C. D., Fultz, J. & Schoenrade, P. A. Distress and Empathy: Two Qualitatively Distinct Vicarious Emotions with Different Motivational Consequences. J. Pers. 55, 19–39 (1987).

Page, K. M. & Nowak, M. A. Empathy Leads to Fairness. Bull. Math. Biol. 64, 1101–1116 (2002).

Nowak, M. A. Five rules for the evolution of cooperation. Science 314, 1560–1563 (2006).

Preston, S. D. The origins of altruism in offspring care. Psychol. Bull. 139, 1305–1341 (2013).

Hein, G., Lamm, C., Brodbeck, C. & Singer, T. Skin Conductance Response to the Pain of Others Predicts Later Costly Helping. PLoS ONE 6, e22759 (2011).

Burkett, J. P. et al. Oxytocin-dependent consolation behavior in rodents. Science 351, 375–378 (2016).

Kölliker, M. The Evolution of Parental Care (Oxford University Press, 2012).

de Waal, F. B. M. & Ferrari, P. F. Towards a bottom-up perspective on animal and human cognition. Trends Cogn. Sci. 14, 201–207 (2010).

Palagi, E., Dall’Olio, S., Demuru, E. & Stanyon, R. Exploring the evolutionary foundations of empathy: consolation in monkeys. Evol. Hum. Behav. 35, 341–349 (2014).

Palagi, E., Leone, A., Mancini, G. & Ferrari, P. F. Contagious yawning in gelada baboons as a possible expression of empathy. Proc. Natl. Acad. Sci. 106, 19262–19267 (2009).

Norscia, I. & Palagi, E. Yawn Contagion and Empathy in Homo sapiens. PLoS ONE 6, e28472 (2011).

Demuru, E. & Palagi, E. In bonobos yawn contagion is higher among kin and friends. PLoS ONE 7, e49613 (2012).

Palagi, E., Norscia, I. & Demuru, E. Yawn contagion in humans and bonobos: emotional affinity matters more than species. PeerJ 2, e519 (2014).

Palagi, E., Nicotra, V. & Cordoni, G. Rapid mimicry and emotional contagion in domestic dogs. Royal Soc. Open Sci. 2, 150505 (2015).

Nickerson, R. S. How we know – and sometimes misjudge – what others know: Imputing one’s own knowledge to others. Psychol. Bull. 125, 737–759 (1999).

Birch, S. A. J. & Bloom, P. Understanding children’s and adults’ limitations in mental state reasoning. Trends Cogn. Sci. 8, 255–260 (2004).

Han, J. & Moraga, C. The influence of the sigmoid function parameters on the speed of backpropagation learning. In Mira, J. & Sandoval, F. (eds) From Natural to Artificial Neural Computation, Lecture Notes in Computer Science, 195–201 (Springer Berlin Heidelberg, 1995).

Rojas, R. Neural Networks: A Systematic Introduction (Springer-Verlag, Berlin Heidelberg, 1996).

Nielsen, M. A. Neural Networks and Deep Learning (2015).

Acknowledgements

M.L. is supported by a grant from the John Templeton Foundation. The opinions, interpretations, conclusions and recommendations are those of the authors and are not necessarily endorsed by the John Templeton Foundation.

Author information

Contributions

F.M. and M.L. wrote the manuscript. F.M. performed the analyses.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mafessoni, F., Lachmann, M. The complexity of understanding others as the evolutionary origin of empathy and emotional contagion. Sci Rep 9, 5794 (2019). https://doi.org/10.1038/s41598-019-41835-5

DOI: https://doi.org/10.1038/s41598-019-41835-5
