Abstract

Recent developments in integrated photonics technology are opening the way to the fabrication of complex linear optical interferometers. The application of this platform is ubiquitous in quantum information science, from quantum simulation to quantum metrology, including the quest for quantum supremacy via the boson sampling problem. Within these contexts, the capability to learn efficiently the unitary operation of the implemented interferometers becomes a crucial requirement. In this letter we develop a reconstruction algorithm based on a genetic approach, which can be adopted as a tool to characterize an unknown linear optical network. We report an experimental test of the described method by performing the reconstruction of a 7-mode interferometer implemented via the femtosecond laser writing technique. Further applications of genetic approaches can be found in other contexts, such as quantum metrology or learning unknown general Hamiltonian evolutions.

Introduction

Linear optical networks have recently received increasing attention in the quantum regime thanks to the enhanced capability of building complex interferometers made possible by integrated photonics. This experimental achievement opened new perspectives in the adoption of linear optical networks for different quantum tasks, including quantum walks and quantum simulation1,2,3,4,5,6,7,8,9,10, quantum phase estimation11,12,13, as well as the experimental implementation of the Boson Sampling problem14,15,16,17,18,19,20,21. Within these contexts, it becomes crucial to learn the action of a linear process. On the practical side, the capability of efficiently reconstructing an unknown transformation provides an analysis tool for integrated devices: it allows one to verify the quality of the fabrication by checking how closely the implemented transformation matches the desired one. On the fundamental side, precise knowledge of the unitary process is required to perform accurate tests on the experimental data. For instance, this holds in the case of Boson Sampling validation, where the adoption of statistical tests may require knowledge of the implemented unitary transformation19,20. Furthermore, the task of learning an unknown transformation can in principle be embedded into a larger class of problems, whose objective is to learn physical evolutions from training sets of data22,23,24.

While initial efforts have been dedicated to the characterization of generic quantum processes25,26,27,28,29,30,31,32, different methods have been specifically adopted and tested to reconstruct an unknown linear transformation \({\mathscr{U}}\) 33,34,35,36,37. Most of these approaches rely on single-photon and two-photon measurements. Intuitively, single-photon states can be used to obtain information on the square moduli of the elements of the unitary matrix, while two-photon interference provides knowledge of the complex phases of the elements of \({\mathscr{U}}\). Different data analysis approaches have been proposed and adopted to convert the raw measured data into an estimated unitary \({{\mathscr{U}}}_{r}\), either by exploiting conventional numerical minimization techniques33 or by analytically inverting the relations between experimental data and the elements of \({\mathscr{U}}\) 34. Other methods exploit classical light as input to the interferometer35. In this case, knowledge of the moduli is obtained by sending classical light into a single input, while knowledge of the phases is obtained by sending light into pairs of input modes and measuring the interference fringes as a function of the relative phase.

In this article we discuss and test experimentally an approach for the reconstruction of linear optical interferometers based on the class of genetic algorithms38,39,40. The latter is a general method that exploits the principles of natural selection in the evolution of a biological system, and has been applied to optimization and search problems in several fields, including first applications in quantum information tasks41,42. In these papers, genetic approaches have been adopted to determine the unitary transformation solving a given computational problem41, and to optimize the digital implementation of a given Hamiltonian from a set of imperfect gates within a quantum simulation framework42. Furthermore, genetic approaches have also found successful application in quantum control tasks43,44,45. We first discuss the general principles of operation of the broad class of genetic algorithms. Then, we show how to adapt these principles to the specific case of linear optical network tomography. Finally, we test the genetic algorithm experimentally by performing the reconstruction of an m = 7 mode integrated interferometer built by the femtosecond laser-writing technique46,47.

Results

Genetic reconstruction algorithm for unitary transformations

Genetic algorithms are a broad class of algorithms inspired by the natural evolution of biological systems, which evolve following the principle of natural selection38,39,40. This principle can be briefly described as follows: within an ecosystem, individuals struggling for survival coexist within the same population. The genetically fittest individuals, i.e. those best adapted to the environmental variables, are more likely to survive and reproduce. The fitness of an individual is determined by its genetic signature, the DNA, which is composed of a set of genes representing its fundamental units. The set of genes belonging to all the individuals of a given population is called the genetic pool. By means of reproduction, two individuals generate offspring that inherit a combination of the genes belonging to both parents. A single gene is either inherited or not, and cannot be partially inherited. If the combination of inherited genes determines a better fitness than that of the parents, the offspring will have a higher survival probability. Since weaker individuals are less likely to survive, the fittest genes are more likely to spread over the population and, consequently, a gradual improvement of the average fitness of the population is expected. The described evolution, however, would be destined to reach a local maximum, since the evolved genetic pool would be composed of just a subset of the initial one. Indeed, the mechanism of reproduction does not allow the creation of new genes. This would imply that the maximum possible fitness reached within the population would strongly depend on the initial state. Hence, it is crucial to also include in this model the mechanism of mutation38,39,40, a rare event that occurs when an inherited gene changes in a random fashion. The mutated gene would likely not be present in the DNA of either parent and could possibly provide new advantageous features, increasing the individual’s probability of survival. This allows the mutated gene to spread over the population by reproduction, increasing the maximum fitness achievable within the given genetic pool, so that the evolution is no longer limited by the initial conditions.

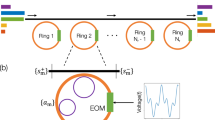

The principles of genetic algorithms can be applied to learning an unknown linear transformation \({\mathscr{U}}\) (Fig. 1a). The goal is to find the unitary matrix \({{\mathscr{U}}}_{r}\) whose action best describes a set of experimental data. The training set is composed of single-photon probabilities \({{\mathscr{P}}}_{i,j}\), describing the transition from input i to output j (Fig. 1b), and Hong-Ou-Mandel48 visibilities, describing two-photon interference from inputs (i, j) to outputs (p, q) (Fig. 1c). Hong-Ou-Mandel visibilities are defined as \({{\mathscr{V}}}_{ij,pq}=({{\mathscr{P}}}_{ij,pq}^{{\rm{d}}}-{{\mathscr{P}}}_{ij,pq}^{{\rm{q}}})/{{\mathscr{P}}}_{ij,pq}^{{\rm{d}}}\). Here \({{\mathscr{P}}}_{ij,pq}^{{\rm{d}}}\) is the probability for two distinguishable particles, obtained for a relative delay Δτ much larger than the coherence time, and \({{\mathscr{P}}}_{ij,pq}^{{\rm{q}}}\) is the probability for two indistinguishable photons (Δτ = 0). These quantities are related to the matrix elements of \({\mathscr{U}}\) as \({{\mathscr{P}}}_{i,j}={|{{\mathscr{U}}}_{j,i}|}^{2}\), \({{\mathscr{P}}}_{ij,pq}^{{\rm{d}}}={|{{\mathscr{U}}}_{p,i}{{\mathscr{U}}}_{q,j}|}^{2}+{|{{\mathscr{U}}}_{q,i}{{\mathscr{U}}}_{p,j}|}^{2}\) and \({{\mathscr{P}}}_{ij,pq}^{{\rm{q}}}={|{{\mathscr{U}}}_{p,i}{{\mathscr{U}}}_{q,j}+{{\mathscr{U}}}_{q,i}{{\mathscr{U}}}_{p,j}|}^{2}\). The training set is thus composed of the measured values \({\tilde{{\mathscr{P}}}}_{i,j}\) and \({\tilde{{\mathscr{V}}}}_{ij,pq}\), with associated Gaussian 1σ experimental errors \({\rm{\Delta }}{\tilde{{\mathscr{P}}}}_{i,j}\) and \({\rm{\Delta }}{\tilde{{\mathscr{V}}}}_{ij,pq}\).
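The relations above between the matrix elements and the measurable quantities can be illustrated with a minimal Python sketch. The function names, the list-of-lists matrix layout `U[output][input]`, and the 50:50 beam-splitter convention used as an example are assumptions made here for illustration, not part of the original work.

```python
import math

def single_photon_prob(U, i, j):
    """P_{i,j} = |U_{j,i}|^2: transition from input mode i to output mode j."""
    return abs(U[j][i]) ** 2

def two_photon_probs(U, i, j, p, q):
    """Return (P_d, P_q) for inputs (i, j) and distinct outputs (p, q)."""
    a = U[p][i] * U[q][j]
    b = U[q][i] * U[p][j]
    p_dist = abs(a) ** 2 + abs(b) ** 2   # distinguishable photons
    p_indist = abs(a + b) ** 2           # indistinguishable photons
    return p_dist, p_indist

def hom_visibility(U, i, j, p, q):
    """V_{ij,pq} = (P_d - P_q) / P_d, as defined in the text."""
    p_dist, p_indist = two_photon_probs(U, i, j, p, q)
    return (p_dist - p_indist) / p_dist

# Example: symmetric 50:50 beam splitter (one common convention, assumed here).
s = 1 / math.sqrt(2)
BS = [[s, 1j * s],
      [1j * s, s]]
```

For this two-mode example the coincidence probability for indistinguishable photons vanishes, so `hom_visibility(BS, 0, 1, 0, 1)` evaluates to 1 up to rounding, which is the familiar Hong-Ou-Mandel dip.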

Figure 1: (a) Learning an unknown linear unitary transformation via a genetic approach. The training set, measured from the unknown transformation, is processed by an algorithm based on the principles of biological systems. The unitary transformation is decomposed in elementary units, i.e. the genes \({G}_{k}^{l}\) composing its DNA: beam-splitters (BSs) with transmittivity \({t}_{k}^{l}\) and phase-shifts (PSs) \({\alpha }_{k}^{l}\), \({\beta }_{k}^{l}\). Crossover and mutation mechanisms rule the evolution for each step of the algorithm. (b) Schematic view of single-photon measurements corresponding to data set \({\tilde{{\mathscr{P}}}}_{i,j}\). (c) Schematic view of two-photon measurements corresponding to data set \({\tilde{{\mathscr{V}}}}_{ij,pq}\). (d) Internal structure of the implemented m = 7 integrated linear interferometer. Blue regions indicate directional couplers, that is, integrated versions of beam-splitters, while cyan regions indicate phase shifts (4 layers L1–L4), introduced by modifying the optical path of the waveguides (W1–W7).

Let us now describe how to adapt the principles of genetic evolution to develop the actual algorithm (described in Supplementary Note 1). In this scenario, the group of individuals is a set of unitary transformations. At the initial step, the population Φ is composed of N randomly chosen individuals \({E}_{l}\): \({\rm{\Phi }}=\{{E}_{1},\ldots ,{E}_{N}\}\). Every individual \({E}_{l}\) has to be completely determined by a set of real parameters that represent its DNA. It is thus necessary to identify a suitable way to decompose a unitary transformation into a set of elementary blocks. At first glance, one could consider the elements of the unitary transformation (moduli and phases) to compose the DNA of the individuals. However, this is not an appropriate choice, since generating new offspring from a random recombination of the parents’ matrix elements can lead to a non-unitary matrix. A better approach is obtained by exploiting the result by Reck et al.49, which showed that it is possible to decompose any linear transformation into a network composed of phase shifters (PS) and beam splitters (BS) (see Fig. 1a). A single gene \({G}_{k}^{l}=({t}_{k}^{l},{\alpha }_{k}^{l},{\beta }_{k}^{l})\) of the unitary’s DNA is composed of a PS-PS-BS set, defined by the transmittivity \({t}_{k}^{l}\in [0,1)\), and by the phases \({\alpha }_{k}^{l},{\beta }_{k}^{l}\in [0,\pi ]\). The DNA of an individual is thus represented by an ordered vector \({E}_{l}=\{{G}_{1}^{l},{G}_{2}^{l},\ldots ,{G}_{M}^{l}\}\), where \(M={\sum }_{g=1}^{m-1}\,g=m(m-1)/2\). The global unitary \({{\mathscr{U}}}_{{E}_{l}}\) of the individual \({E}_{l}\) can be obtained by multiplying the unitary matrices \({{\mathscr{U}}}_{k}^{l}\), each describing the action of the k-th gene \({G}_{k}^{l}\). With such a parametrization, unitarity of the overall transformation is naturally guaranteed. Note that this decomposition does not necessarily represent the actual internal structure of the system, which may in general be unknown. Rather, it is a mathematical tool to parametrize a unitary matrix as a combination of independent genes.
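The parametrization by genes can be sketched as follows. This is a minimal illustration under stated assumptions: the 2×2 form chosen for a PS-PS-BS block, the ordering of the mode pairs in the triangular mesh, and all function names are hypothetical choices made here, and the exact conventions of the original algorithm (Supplementary Note 1) may differ. The point it shows is that any DNA of M = m(m − 1)/2 genes yields a unitary matrix by construction.

```python
import cmath
import math
import random

def gene_block(t, alpha, beta):
    """2x2 unitary of one gene: two phase shifts followed by a beam splitter.
    Assumed convention: BS(t) = [[sqrt(t), i sqrt(1-t)], [i sqrt(1-t), sqrt(t)]]."""
    r, s = math.sqrt(t), math.sqrt(1 - t)
    ea, eb = cmath.exp(1j * alpha), cmath.exp(1j * beta)
    return [[r * ea, 1j * s * eb],
            [1j * s * ea, r * eb]]

def triangular_pairs(m):
    """Adjacent mode pairs of a triangular mesh (assumed ordering), m(m-1)/2 in total."""
    return [(a, a + 1) for k in range(m - 1) for a in range(m - 2, k - 1, -1)]

def unitary_from_dna(dna, m):
    """Multiply the gene blocks, each embedded in the m x m identity."""
    pairs = triangular_pairs(m)
    assert len(dna) == len(pairs)
    U = [[1 + 0j if r == c else 0j for c in range(m)] for r in range(m)]
    for (t, alpha, beta), (a, b) in zip(dna, pairs):
        B = gene_block(t, alpha, beta)
        # Left-multiplication by the embedded block only mixes rows a and b.
        row_a = [B[0][0] * U[a][c] + B[0][1] * U[b][c] for c in range(m)]
        row_b = [B[1][0] * U[a][c] + B[1][1] * U[b][c] for c in range(m)]
        U[a], U[b] = row_a, row_b
    return U

def random_dna(m, rng):
    """Random DNA with t in [0, 1) and phases in [0, pi], the ranges quoted in the text."""
    M = m * (m - 1) // 2
    return [(rng.random(), rng.uniform(0, math.pi), rng.uniform(0, math.pi))
            for _ in range(M)]

def is_unitary(U, tol=1e-9):
    """Check that U^dagger U equals the identity within tol."""
    m = len(U)
    for r in range(m):
        for c in range(m):
            entry = sum(U[k][r].conjugate() * U[k][c] for k in range(m))
            if abs(entry - (1.0 if r == c else 0.0)) > tol:
                return False
    return True
```

For m = 7 a DNA contains 21 genes, i.e. 63 real parameters, and `is_unitary(unitary_from_dna(random_dna(7, random.Random(0)), 7))` holds regardless of the random draw, since each block is unitary.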

Once the decomposition of a unitary transformation into elementary units has been defined, it is necessary to implement the mechanism of genetic evolution. The first ingredient is the fitness function \(f(E)\in [0,\infty )\), which quantifies the survival probability of a given individual. In our case f(E) is chosen to be inversely proportional to the distance between the training set and the theoretical predictions. Denoting by \({{\mathscr{P}}}_{i,j}^{{E}_{l}}\) and \({{\mathscr{V}}}_{ij,pq}^{{E}_{l}}\) the one-photon and two-photon predictions calculated from \({{\mathscr{U}}}_{{E}_{l}}\), we define the fitness function as \(f({E}_{l})=1/{\chi }^{2}\), where \({\chi }^{2}={\chi }_{{\mathscr{P}}}^{2}+{\chi }_{{\mathscr{V}}}^{2}\) is the chi-square function, the sum of a single-photon term \({\chi }_{{\mathscr{P}}}^{2}\) and a two-photon term \({\chi }_{{\mathscr{V}}}^{2}\) [Eq. (1)].
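The explicit expressions of the two terms are not reproduced in this text. Assuming the standard chi-square form weighted by the Gaussian 1σ errors defined above, and assuming that the two-photon sum runs over unordered input and output pairs (both are assumptions made here, not a quotation of Eq. (1)), the terms would read:

```latex
\chi^{2}_{\mathscr{P}} = \sum_{i,j}
  \left( \frac{\tilde{\mathscr{P}}_{i,j} - \mathscr{P}^{E_l}_{i,j}}
              {\Delta \tilde{\mathscr{P}}_{i,j}} \right)^{2},
\qquad
\chi^{2}_{\mathscr{V}} = \sum_{i<j,\; p<q}
  \left( \frac{\tilde{\mathscr{V}}_{ij,pq} - \mathscr{V}^{E_l}_{ij,pq}}
              {\Delta \tilde{\mathscr{V}}_{ij,pq}} \right)^{2}
```

With this form, data points with smaller experimental errors weigh more in the fitness, and a model that describes the data within the errors gives a \({\chi }^{2}\) of the order of the number of degrees of freedom.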

In other words, the fitness \(f({E}_{l})\) represents the quality of the solution \({E}_{l}\). At the beginning of each step of the evolution, the individuals are sorted in decreasing order of fitness. The half of the population with the lowest fitness is removed and replaced with a new set of individuals generated by the crossover function, which governs the reproduction mechanism within the population. Two individuals \({E}_{A}\) and \({E}_{B}\) generate one child \({E}_{C}\) whose DNA is composed of half of the genes from parent \({E}_{A}\) and the other half from parent \({E}_{B}\), chosen randomly. In the crossover mechanism, each gene inherited from \({E}_{A}\) or \({E}_{B}\) occupies the same position in the child’s DNA sequence as in the parent’s. Finally, the third ingredient is the mutation process. At each iteration of the algorithm, any gene \({G}_{k}^{l}\) has a probability γ (called mutation rate) of being replaced by a new random triple \(\{{\tilde{t}}_{k}^{l},{\tilde{\alpha }}_{k}^{l},{\tilde{\beta }}_{k}^{l}\}\). One of the main advantages of genetic algorithms38,39,40 with respect to other methods is their capability of performing an exhaustive search in the parameter space. This is achieved by controlling the mutation rate, and by including a meaningful sampling probability for the DNA elements to avoid exploring sparser regions of the parameter space. Furthermore, genetic algorithms are particularly suitable for parallel computation, which can significantly reduce the computational time. The price to pay with respect to other methods is reduced control over the system, since the evolution is not deterministic.
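One iteration of the selection, crossover and mutation cycle can be sketched as below. Several details are assumptions made for this illustration, since the text does not fix them: parents are drawn uniformly among the survivors, mutation is applied only to the newly generated children so that the best individual is never lost, and the `fitness` argument is any user-supplied function (in the real algorithm it would be \(1/{\chi }^{2}\)). All names are hypothetical.

```python
import math
import random

def random_gene(rng):
    """New random triple (t, alpha, beta) with the ranges quoted in the text."""
    return (rng.random(), rng.uniform(0, math.pi), rng.uniform(0, math.pi))

def crossover(parent_a, parent_b, rng):
    """Child takes about half of its genes from each parent, position by position."""
    M = len(parent_a)
    from_a = set(rng.sample(range(M), M // 2))  # positions inherited from parent A
    return [parent_a[k] if k in from_a else parent_b[k] for k in range(M)]

def mutate(dna, gamma, rng):
    """Each gene is replaced by a random one with probability gamma."""
    return [random_gene(rng) if rng.random() < gamma else g for g in dna]

def evolve_one_step(population, fitness, gamma, rng):
    """Keep the fittest half, refill the rest with mutated children."""
    ranked = sorted(population, key=fitness, reverse=True)
    survivors = ranked[: len(ranked) // 2]
    children = []
    while len(survivors) + len(children) < len(population):
        parent_a, parent_b = rng.sample(survivors, 2)  # assumed: uniform choice
        children.append(mutate(crossover(parent_a, parent_b, rng), gamma, rng))
    return survivors + children

# Usage with a toy fitness (hypothetical): reward transmittivities close to 0.5.
rng = random.Random(1)
toy_fitness = lambda dna: 1.0 / (1e-9 + sum((t - 0.5) ** 2 for t, _, _ in dna))
population = [[random_gene(rng) for _ in range(3)] for _ in range(10)]
for _ in range(50):
    population = evolve_one_step(population, toy_fitness, gamma=0.05, rng=rng)
```

Because the surviving half is carried over unchanged, the best fitness in the population cannot decrease from one step to the next in this sketch; whether the original implementation protects the best individuals in the same way is not stated in the text.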

Before applying the developed algorithm, it is necessary to determine in a training stage the optimal combination of hyperparameters, which cannot be derived a priori and may depend on the dimension m of the network. The convergence of the algorithm can be evaluated by tuning the hyperparameters with numerically simulated data (see Supplementary Note 2 and Supplementary Fig. 1). Indeed, an inappropriate choice of the hyperparameters can prevent the algorithm from reaching the desired global minimum of \({\chi }^{2}\). The two most important hyperparameters are the mutation rate and the population size. The former is crucial, since an incorrect setting may prevent convergence: an exceedingly high mutation frequency would reduce the search process to a random walk in the space of solutions, while an extremely small value would prevent the algorithm from reaching the global maximum of f(E). The population size has to be adjusted to avoid an unnecessarily large population, which contains redundant elements and may significantly increase the number of iterations needed to reach convergence, as well as a population that is too small to explore a sufficient set of gene combinations. Other, less crucial hyperparameters include the number of iterations to wait until packing is performed, and the number \({N}_{1}\) of unitaries to pick from the analytic algorithm (only if they are included in the initial population).

Once the correct set of hyperparameters has been determined, the convergence of the algorithm has been tested with numerically simulated data, showing that the algorithm is able to reach a value of \({\chi }^{2}\) close to its expectation value (equal to the number of degrees of freedom ν, see Supplementary Note 2 and Supplementary Fig. 1). More specifically, we observe that a single set of hyperparameters can be employed for all unitary matrices at fixed m, while it is likely that, when the interferometer dimension m is further increased, the set of hyperparameters has to be tuned again to guarantee convergence of the algorithm.

Experimental results

We tested the genetic algorithm by reconstructing the transformation induced by a 7-mode integrated interferometer, fabricated in a borosilicate glass substrate by means of the femtosecond laser waveguide writing46,47 technique. This approach exploits the permanent and localized increase in the refractive index obtained by nonlinear absorption of focused femtosecond pulses, thus directly writing waveguides in the material. The internal structure of the implemented interferometer is shown in Fig. 1d, and is composed of a network of symmetric 50–50 directional couplers and a phase pattern. We observe that the internal structure of the interferometer is different from the triangular structure adopted in the genetic algorithm, the latter being only a mathematical tool.

Single-photon and two-photon input states, necessary to measure the data set of the algorithm, were prepared by a spontaneous parametric down conversion source. The 750 mW pump beam at \({\lambda }_{P}=392.5\) nm is obtained by second-harmonic generation of a λ = 785 nm pulsed Ti:Sa laser source, with 76 MHz repetition rate and 250 fs pulse duration. The photon source is a type-II, 2 mm long BBO crystal (Beta-Barium Borate), which generates pairs of photons with opposite polarization. After generation, the photons are spectrally selected by 3 nm interference filters, analyzed in polarization, collected in single-mode fibers, and then propagated through two independent delay lines to adjust the time difference Δτ between the input particles. Then, after fiber polarization compensation, the generated photons are injected into the input modes of the interferometer through a single-mode fiber array, and are collected by a multi-mode fiber array before detection with a set of single-photon avalanche photodiodes (APD). Output single-photon counts and two-fold coincidences are collected by an electronic acquisition system. The overall apparatus (source, interferometer and detectors) does not require phase stabilization. Indeed, due to the integrated implementation of the unitary transformation, the interferometer is intrinsically stable with respect to internal phases. Furthermore, injection and detection of Fock states render the system insensitive to fluctuating phases at the input and at the output of the device, differently from other methods relying on classical light35.

The learning method based on the genetic approach has been applied to the 7-mode chip. The complete set of experimental measurements consists of \({d}_{1}={m}^{2}=49\) single-photon transition probabilities \({\tilde{{\mathscr{P}}}}_{i,j}\) and \({d}_{2}={m}^{2}{(m-1)}^{2}/4=441\) two-photon Hong-Ou-Mandel visibilities \({\tilde{{\mathscr{V}}}}_{ij,pq}\), corresponding to an overall amount of \(d={d}_{1}+{d}_{2}=490\) data. The complete set of collected experimental data is reported in Fig. 2. The two-photon visibilities \({\tilde{{\mathscr{V}}}}_{ij,pq}\), insensitive to photon losses, are estimated by recording the input-output two-fold coincidences \({\tilde{{\mathscr{P}}}}_{ij,pq}({\rm{\Delta }}\tau )\) as a function of the relative delay Δτ between the input photons. The resulting pattern is analyzed by performing a best fit according to the function \({\tilde{{\mathscr{P}}}}_{ij,pq}({\rm{\Delta }}\tau )=C(1+{\tilde{{\mathscr{V}}}}_{ij,pq}{e}^{-{\sigma }^{2}{(\tau -{\tau }_{0})}^{2}})\), where \((C,{\tilde{{\mathscr{V}}}}_{ij,pq},\sigma ,{\tau }_{0})\) are free parameters and τ is the independent variable. Experimental errors on the single-photon probabilities \({\rm{\Delta }}{\tilde{{\mathscr{P}}}}_{i,j}\) and on the two-photon coincidences \({\tilde{{\mathscr{P}}}}_{ij,pq}({\rm{\Delta }}\tau )\) are due to the Poissonian statistics of the measured events, while errors on the visibilities \({\tilde{{\mathscr{V}}}}_{ij,pq}\) are obtained from the fitting procedure.

The genetic algorithm maximizes the fitness function \(f({E}_{l})\) [Eq. (1)] between the training set and the predictions \({{\mathscr{P}}}_{i,j}^{{E}_{l}}\) and \({{\mathscr{V}}}_{ij,pq}^{{E}_{l}}\) obtained from the unitary \({{\mathscr{U}}}_{{E}_{l}}\) belonging to the population of the genetic algorithm. The starting point of the protocol is a random population of N = 100 unitaries. In Fig. 3 we report the evolution of the best \({\chi }^{2}\) in the genetic pool during the running time of the algorithm. We observe that an almost stable value of \({\chi }^{2}\) is obtained after \({N}_{{\rm{iter}}}\sim 60000\) iterations. The genetic approach can be improved by modifying the starting point. At the initial step, a subset of \({N}_{1}\) unitaries can be chosen starting from the algorithm of ref.34. With that method, a minimal set of two-photon data is exploited to retrieve analytically the elements of the unitary matrix. This approach can be extended by considering that \({m}^{2}\) independent estimates of \({\mathscr{U}}\) can be obtained by recording the full set of single- and two-photon measurements, and by appropriately permuting the mode indexes17 to select \({m}^{2}\) independent minimal data sets. For the genetic algorithm, we then choose the \({N}_{1}=20\) unitaries (among the \({m}^{2}=49\) possible matrices) presenting the lowest values of \({\chi }^{2}\) (highest fitnesses). This provides a reasonable starting point for the genetic pool. Finally, the remaining \({N}_{2}=80\) individuals are randomly generated from the Haar measure. In this way, the algorithm reaches convergence after a smaller number of iterations \({N}_{{\rm{iter}}}\sim 40000\), corresponding to a computational time of t ~ 1 h on a laptop (see Fig. 3). The convergence of the genetic algorithm is confirmed by the decrease of \({\chi }^{2}\) from the starting value \({{\rm{\min }}}_{{{\mathscr{U}}}_{r}^{(a)}}\,{\chi }^{2}\sim 255000\), obtained from the best unitary \({\overline{{\mathscr{U}}}}_{r}^{(a)}\) of the analytic approach, to a final value of \({\chi }_{r,(g)}^{2}\sim 17096\), an improvement of one order of magnitude. Given the number of degrees of freedom ν for this problem size, \(\nu ={m}^{2}+{\binom{m}{2}}^{2}-3m(m-1)/2=427\), the final result corresponds to a value of the reduced \({\chi }_{\nu }^{2}={\chi }^{2}/\nu \sim 40\). This value of the reduced \({\chi }_{\nu }^{2}\) indicates that the adopted model for the unitary transformation and for the input photons needs to be improved. Indeed, the algorithm employs as internal structure the general decomposition given by Reck’s lemma49, and does not take into account the actual layout of the device. In particular, internal and output losses can lead to non-unitary behavior and are not taken into account by the present model. Furthermore, the input photons for two-photon measurements are not perfectly indistinguishable. The value of the reduced \({\chi }_{\nu }^{2}\) can thus be improved by including these features in the fitness evaluation. For instance, partial photon indistinguishability can be included as an additional parameter p in the \({\chi }^{2}\) that reduces the value of the two-photon visibilities. By adding it only in the final calculation of the \({\chi }^{2}\), the latter diminishes from \({\chi }^{2}\sim 17096\) to \({\chi }^{2}\sim 14067\), corresponding to a reduced value \({\chi }_{\nu }^{2}\sim 33\) (for a value of p = 0.95 pre-characterized with a Hong-Ou-Mandel interference measurement). Improved results are expected if this parameter is included directly in the fitness evaluation.
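The counting behind these numbers can be checked with a few lines of Python. The function names are hypothetical; the formulas are the ones quoted in the text, with 3 real parameters per gene and m(m − 1)/2 genes.

```python
from math import comb

def data_counts(m):
    """Number of single-photon (d1) and two-photon (d2) data for m modes."""
    d1 = m ** 2            # all input-output pairs
    d2 = comb(m, 2) ** 2   # pairs of inputs times pairs of outputs
    return d1, d2

def degrees_of_freedom(m):
    """nu = d1 + d2 minus the number of real parameters in the DNA."""
    d1, d2 = data_counts(m)
    n_params = 3 * m * (m - 1) // 2
    return d1 + d2 - n_params

def reduced_chi2(chi2, m):
    """Reduced chi-square for a given total chi-square and network size."""
    return chi2 / degrees_of_freedom(m)
```

For m = 7 this gives 49 and 441 data points and ν = 427, so the quoted values 17096 and 14067 correspond to reduced values of about 40 and 33, respectively.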

Figure 3: Evolution of the minimum \({\chi }^{2}\) in the genetic pool through the running time of the algorithm, as a function of the number of iterations. Dark yellow dashed lines: the starting point is provided by a random set of individuals. Green solid lines: the initial population includes the best \({N}_{1}=20\) unitaries obtained with the analytic method. Horizontal blue dotted lines: best \({\chi }^{2}\) obtained with the analytic method. Inset: highlight for \({N}_{{\rm{iter}}}\in [0;6600]\) of the green curve. The \({\chi }^{2}\) values achieved during the algorithm iterations correspond to a range \(40\lesssim {\chi }_{\nu }^{2}\lesssim 585\) for the reduced \({\chi }_{\nu }^{2}\).

As an additional figure of merit, we consider the similarities \({S}_{r}^{(a)}\) between the experimental two-photon visibilities and the predictions obtained from the analytic unitaries \({{\mathscr{U}}}_{r}^{(a)}\), according to the definition \({S}_{r}^{(a)}=1-{\sum }_{i,j,p,q}\,|{\tilde{{\mathscr{V}}}}_{ij,pq}-{{\mathscr{V}}}_{ij,pq}^{r,(a)}|/(2{d}_{2})\) (with an analogous definition for the genetic approach). The similarity \({S}_{r}^{(g)}\) obtained for the output unitary \({{\mathscr{U}}}_{r}^{(g)}\) from the genetic algorithm, equal to \({S}_{r}^{(g)}=0.957\pm 0.001\), clearly outperforms the maximum value obtained from the analytic algorithm: \({{\rm{\max }}}_{{{\mathscr{U}}}_{r}^{(a)}}\,{S}_{r}^{(a)}=0.920\pm 0.001\). This suggests that, while a direct analytic inversion34 from \({{\mathscr{V}}}_{ij,pq}\) to \({\mathscr{U}}\) yields \({m}^{2}\) independent estimates, each requiring a smaller amount of data (\(\approx 2{m}^{2}\)), the adoption of a larger training set and the capability of taking into account experimental errors (in the \({\chi }^{2}\)) make the genetic approach more robust with respect to experimental noise.
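The similarity defined above is straightforward to evaluate once the measured and predicted visibilities are stored in the same order. The sketch below assumes two flat lists of equal length \({d}_{2}\); the function name and data layout are illustrative choices.

```python
def similarity(v_measured, v_predicted):
    """S = 1 - sum |V_measured - V_predicted| / (2 * d2)."""
    d2 = len(v_measured)
    if d2 == 0 or d2 != len(v_predicted):
        raise ValueError("need two non-empty lists of equal length")
    total = sum(abs(a - b) for a, b in zip(v_measured, v_predicted))
    return 1 - total / (2 * d2)
```

Since each visibility lies between −1 and 1, every term of the sum is at most 2, and the factor \(2{d}_{2}\) normalizes S to the interval [0, 1]: identical data sets give S = 1, maximally different ones give S = 0.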

Finally, we observe that the decrease of \({\chi }^{2}\) occurs with two different trends (see inset of Fig. 3). Smooth variations are due to the crossover mechanism between members of the population, converging to the best possible unitary within the available genetic pool. Conversely, fast jumps in \({\chi }^{2}\) are due to random mutations in the genetic pool.

The results for the obtained transformation are reported in Fig. 4 and in Supplementary Note 3, where the output unitary of the genetic algorithm \({{\mathscr{U}}}_{r}^{(g)}\) is compared with the theoretical one \({\mathscr{U}}\). The latter is calculated from fabrication parameters according to the layout of Fig. 1d. This comparison indicates how close the implemented interferometer is to the ideal one. A quantitative parameter is the gate fidelity between \({\mathscr{U}}\) and \({{\mathscr{U}}}_{r}^{(g)}\), defined as \({F}_{r}^{(g)}=|{\rm{Tr}}[{{\mathscr{U}}}^{\dagger }{{\mathscr{U}}}_{r}^{(g)}]|/m\). The value obtained for the implemented interferometer is \({F}_{r}^{(g)}=0.975\pm 0.013\), thus showing the quality of the fabrication process. The error on the gate fidelity \({F}_{r}^{(g)}\) has been estimated by a Monte Carlo simulation of 10000 unitary reconstructions with the analytic method.
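The gate fidelity defined above can be computed directly from the matrix elements. The following sketch assumes square matrices stored as lists of rows; the function name is hypothetical.

```python
import cmath

def gate_fidelity(U, V):
    """F = |Tr(U^dagger V)| / m for two m x m matrices given as lists of rows."""
    m = len(U)
    # Tr(U^dagger V) = sum over r, c of conj(U[r][c]) * V[r][c]
    trace = sum(complex(U[r][c]).conjugate() * V[r][c]
                for r in range(m) for c in range(m))
    return abs(trace) / m

# Example: a unitary compared with a copy multiplied by a global phase.
I2 = [[1, 0], [0, 1]]
phase = cmath.exp(0.7j)
I2_phased = [[phase * x for x in row] for row in I2]
```

The absolute value makes the fidelity insensitive to a global phase, so `gate_fidelity(I2, I2_phased)` equals 1 up to rounding, while two unitaries whose overlap trace vanishes give 0.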

Further optimizations of the protocol can be envisaged. The \({\chi }^{2}\) function in the fitness may be replaced with a weighted function \({\chi }_{w}^{2}=w{\chi }_{{\mathscr{P}}}^{2}+(1-w){\chi }_{{\mathscr{V}}}^{2}\). We performed the reconstruction for different values of the weight w, observing that for the present data the best choice is the symmetric case w = 0.5. The optimal weight w can nevertheless vary with the dimension m of the network. Additionally, the number of unitaries \({N}_{1}\) taken at the initial step from the analytic algorithm can be optimized depending on the problem size. Room for optimization can also be found by checking the possibility of adding children with random permutations in the location of the genes. Finally, as previously discussed, the reported method can be modified to exploit knowledge of the internal structure of the device, including internal losses due to propagation and to the directional couplers. In the experimental case shown above, the universal structure can be replaced by the actual structure of the interferometer, thus redefining its DNA.

Conclusions

In this article we have described an approach to learn an unknown linear optical process \({\mathscr{U}}\) by exploiting a specifically tailored genetic algorithm. We have then tested this approach for the reconstruction of an unknown 7 × 7 integrated linear optical interferometer built by the femtosecond laser-writing technique. The experimental results show that this methodology is suitable for the characterization of linear optical networks. The involved resources (number of parameters for the DNA and size of the data set) scale polynomially with the size m of the network. Furthermore, the evaluation of the fitness function requires resources scaling polynomially as \({m}^{4}\). Thus, this approach could be suitable for systems with an increasing number of modes, with applications in different contexts such as quantum simulation and quantum interferometry. Several perspectives can be envisaged by applying these genetic approaches in the context of learning unknown patterns50 or general Hamiltonian evolutions23. The algorithmic approach itself may be adapted to progressively change the parameters of its evolution or the measured data set sequence depending on the results of the previous steps.

References

Perets, H. B. et al. Realization of quantum walks with negligible decoherence in waveguide lattices. Phys. Rev. Lett. 100, 170506 (2008).

Broome, M. A. et al. Discrete single-photon quantum walks with tunable decoherence. Phys. Rev. Lett. 104, 153602 (2010).

Peruzzo, A. et al. Quantum walks of correlated photons. Science 329, 1500–1503 (2010).

Schreiber, A. et al. Decoherence and disorder in quantum walks: From ballistic spread to localization. Phys. Rev. Lett. 106, 180403 (2011).

Owens, J. O. et al. Two-photon quantum walks in an elliptical direct-write waveguide array. New Journal of Physics 13, 075003 (2011).

Kitagawa, T. et al. Observation of topologically protected bound states in photonic quantum walks. Nature Communications 3, 882 (2012).

Schreiber, A. et al. A 2d quantum walk simulation of two-particle dynamics. Science 336, 55–58 (2012).

Sansoni, L. et al. Two-particle bosonic-fermionic quantum walk via integrated photonics. Phys. Rev. Lett. 108, 010502 (2012).

Crespi, A. et al. Anderson localization of entangled photons in an integrated quantum walk. Nature Photonics 7, 322–328 (2013).

Pitsios, I. et al. arXiv:1603.02669 (2016).

Spagnolo, N. et al. Quantum interferometry with three-dimensional geometry. Scientific Reports 2, 862 (2012).

Chaboyer, Z., Meany, T., Helt, L. G., Withford, M. J. & Steel, M. J. Tuneable quantum interference in a 3d integrated circuit. Scientific Reports 5, 9601 (2015).

Ciampini, M. A. et al. Quantum-enhanced multiparameter estimation in multiarm interferometers. Scientific Reports 6, 28881 (2016).

Broome, M. A. et al. Photonic boson sampling in a tunable circuit. Science 339, 794–798 (2013).

Spring, J. B. et al. Boson sampling on a photonic chip. Science 339, 798–801 (2013).

Tillmann, M. et al. Experimental boson sampling. Nature Photonics 7, 540–544 (2013).

Crespi, A. et al. Integrated multimode interferometers with arbitrary designs for photonic boson sampling. Nature Photonics 7, 545–549 (2013).

Spagnolo, N. et al. General rules for bosonic bunching in multimode interferometers. Phys. Rev. Lett. 111, 130503 (2013).

Spagnolo, N. et al. Experimental validation of photonic boson sampling. Nature Photonics 8, 615–620 (2014).

Carolan, J. et al. On the experimental verification of quantum complexity in linear optics. Nature Photonics 8, 621–626 (2014).

Bentivegna, M. et al. Experimental scattershot boson sampling. Science Advances 1, e1400255 (2015).

Bisio, A., Chiribella, G., D’Ariano, G. M., Facchini, S. & Perinotti, P. Optimal quantum learning of a unitary transformation. Phys. Rev. A 81, 032324 (2010).

Granade, C. E., Ferrie, C., Wiebe, N. & Cory, D. G. Robust online Hamiltonian learning. New Journal of Physics 14, 103013 (2012).

Bang, J., Ryu, J., Yoo, S., Pawlowski, M. & Lee, J. A strategy for quantum algorithm design assisted by machine learning. New Journal of Physics 16, 073017 (2014).

Altepeter, J. B. et al. Ancilla-assisted quantum process tomography. Phys. Rev. Lett. 90, 193601 (2003).

O’Brien, J. L. et al. Quantum process tomography of a controlled-NOT gate. Phys. Rev. Lett. 93, 080502 (2004).

Rohde, P. P., Pryde, G. J., O’Brien, J. L. & Ralph, T. C. Quantum-gate characterization in an extended Hilbert space. Phys. Rev. A 72, 032306 (2005).

Mohseni, M. & Lidar, D. A. Direct characterization of quantum dynamics. Phys. Rev. Lett. 97, 170501 (2006).

Mohseni, M., Rezakhani, A. T. & Lidar, D. A. Quantum-process tomography: Resource analysis of different strategies. Phys. Rev. A 77, 032322 (2008).

Lobino, M. et al. Complete characterization of quantum-optical processes. Science 322, 563–566 (2008).

Bongioanni, I., Sansoni, L., Sciarrino, F., Vallone, G. & Mataloni, P. Experimental quantum process tomography of non-trace-preserving maps. Phys. Rev. A 82, 042307 (2010).

Ferreyrol, F., Spagnolo, N., Blandino, R., Barbieri, M. & Tualle-Brouri, R. Heralded processes on continuous-variable spaces as quantum maps. Phys. Rev. A 86, 062327 (2012).

Peruzzo, A., Laing, A., Politi, A., Rudolph, T. & O’Brien, J. L. Multimode quantum interference of photons in multiport integrated devices. Nature Communications 2, 224 (2011).

Laing, A. & O’Brien, J. L. arXiv:1208.2868v1 (2012).

Rahimi-Keshari, S. et al. Direct characterization of linear-optical networks. Optics Express 21, 13450–13458 (2013).

Dhand, I., Khalid, A., Lu, H. & Sanders, B. C. Accurate and precise characterization of linear optical interferometers. J. Opt. 18, 035204 (2016).

Tillmann, M., Schmidt, C. & Walther, P. On unitary reconstruction of linear optical networks. J. Opt. 18, 114002 (2016).

Whitley, D. A genetic algorithm tutorial. Statistics and Computing 4, 65–85 (1994).

Mitchell, M. An introduction to Genetic Algorithms (Cambridge, MA: MIT Press, 1996).

Schmitt, L. M. Theory of genetic algorithms. Theoretical Computer Science 259, 1–61 (2001).

Bang, J. & Yoo, S. A genetic-algorithm-based method to find unitary transformations for any desired quantum computation and application to a one-bit oracle decision problem. J. Korean Phys. Soc. 65, 2001 (2014).

Las Heras, U., Alvarez-Rodriguez, U., Solano, E. & Sanz, M. Genetic algorithms for digital quantum simulations. Phys. Rev. Lett. 116, 230504 (2016).

Assion, A. et al. Control of Chemical Reactions by Feedback-Optimized Phase-Shaped Femtosecond Laser Pulses. Science 282, 919–922 (1998).

Bardeen, C. J. et al. Feedback quantum control of molecular electronic population transfer. Chem. Phys. Lett. 280, 251–258 (1999).

Rabitz, H., de Vivie-Riedle, R., Motzkus, M. & Kompa, K. Whither the Future of Controlling Quantum Phenomena? Science 288, 824–828 (2000).

Gattass, R. & Mazur, E. Femtosecond laser micromachining in transparent materials. Nature Photonics 2, 219–225 (2008).

Della Valle, G., Osellame, R. & Laporta, P. Micromachining of photonic devices by femtosecond laser pulses. Journal of Optics A: Pure and Applied Optics 11, 013001 (2009).

Hong, C. K., Ou, Z. Y. & Mandel, L. Measurement of subpicosecond time intervals between two photons by interference. Phys. Rev. Lett. 59, 2044–2046 (1987).

Reck, M., Zeilinger, A., Bernstein, H. J. & Bertani, P. Experimental realization of any discrete unitary operator. Phys. Rev. Lett. 73, 58–61 (1994).

Schuld, M., Sinayskiy, I. & Petruccione, F. An introduction to quantum machine learning. Contemporary Physics 56, 172–185 (2015).

Acknowledgements

We acknowledge very useful discussions with D. J. Brod and E. F. Galvão. This work was supported by the ERC-Starting Grant 3D-QUEST (3D-Quantum Integrated Optical Simulation; grant agreement no. 307783): http://www.3dquest.eu, and by the H2020-FETPROACT-2014 Grant QUCHIP (Quantum Simulation on a Photonic Chip; grant agreement no. 641039): http://www.quchip.eu.

Author information

Contributions

E.M., N.S. and F.S. conceived the genetic approach for learning an unknown transformation and developed the algorithm. A.C., R.R. and R.O. fabricated the integrated device. N.S., C.V., M.B., E.M. and F.S. performed the quantum measurements. All authors discussed the experimental implementation and results, and contributed to writing the paper.

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Spagnolo, N., Maiorino, E., Vitelli, C. et al. Learning an unknown transformation via a genetic approach. Sci Rep 7, 14316 (2017). https://doi.org/10.1038/s41598-017-14680-7

DOI: https://doi.org/10.1038/s41598-017-14680-7
