Abstract

Brain imaging genetics aims to uncover associations between genetic markers and neuroimaging quantitative traits. Sparse canonical correlation analysis (SCCA) can discover bi-multivariate associations and select relevant features, and is becoming popular in imaging genetic studies. The \({\ell }_{1}\)-norm function is not only convex but also singular at the origin, which is a necessary condition for sparsity. Thus most SCCA methods impose the \({\ell }_{1}\)-norm on individual features or on structured groups of features to pursue the corresponding sparsity. However, the \({\ell }_{1}\)-norm penalty over-penalizes large coefficients and may incur estimation bias. A number of non-convex penalties have been proposed to reduce the estimation bias in regression tasks, but their use in SCCA remains largely unexplored. In this paper, we design a unified non-convex SCCA model, based on seven non-convex functions, for simultaneous unbiased estimation and stable feature selection. We also propose an efficient optimization algorithm. The proposed method obtains both higher correlation coefficients and better canonical loading patterns. Specifically, these SCCA methods with non-convex penalties discover a strong association between the APOE e4 rs429358 SNP and the hippocampus region of the brain. Both are Alzheimer’s disease related biomarkers, indicating the potential and power of the non-convex methods in brain imaging genetics.


Introduction

By identifying the associations between genetic factors and brain imaging measurements, brain imaging genetics aims to model and understand how genetic factors influence the structure or function of the human brain1,2,3,4,5,6,7,8,9,10,11,12,13,14. Both genetic biomarkers, such as single nucleotide polymorphisms (SNPs), and brain imaging measurements, such as imaging quantitative traits (QTs), are multivariate. To model such data jointly, bi-multivariate association models, such as multiple linear regression15, reduced rank regression16,17,18, parallel independent component analysis19, partial least squares regression20,21, canonical correlation analysis (CCA)22 and their sparsity-inducing variants23, have been widely used to uncover the joint effect of multiple SNPs on one or multiple QTs. Among them, sparse CCA (SCCA), which can discover bi-multivariate relationships and extract relevant features, is becoming popular in brain imaging genetics.

The CCA technique was introduced several decades ago24. CCA performs well only when the number of observations exceeds the combined number of features in the two views. Unfortunately, biomedical and biological studies usually pose a large-p-small-n problem, and CCA faces an even harder large-(p + q)-small-n problem. To overcome this limitation, sparse CCA (SCCA)25,26,27,28,29,30,31,32,33,34,35,36 employs a sparsity-inducing regularization term to select a small set of relevant features, and has received increasing attention. The \({\ell }_{1}\)-norm based SCCA method25 has been very successful owing to its sparsity-pursuing capability, and many SCCA variants based on the \({\ell }_{1}\)-norm followed. For example, the fused lasso penalty imposes the \({\ell }_{1}\)-norm on ordered pairwise features25, and the group lasso penalty imposes the \({\ell }_{1}\)-norm on groups of features29,32. Further, the graph lasso or the graph-guided lasso can be viewed as imposing the \({\ell }_{1}\)-norm on pairwise features defined by an undirected graph29.

However, the \({\ell }_{1}\)-norm penalty exhibits a conflict between optimal prediction and consistent feature selection37. In penalized least squares modeling, Fan and Li38 argued that a good penalty function should satisfy three properties. First, it should be singular at the origin to produce sparse results. Second, it should produce continuous models for stable model selection. Third, it should not penalize large coefficients, to avoid estimation bias. The \({\ell }_{1}\)-norm penalty is successful in feature selection because it is singular at the origin. However, it over-penalizes large coefficients, and thus may be suboptimal with respect to the estimation risk39,40. The \({\ell }_{0}\)-norm function, which simply counts the nonzero features, is an ideal sparsity-inducing penalty. However, it is neither convex nor continuous, and solving an \({\ell }_{0}\)-norm constrained problem is NP-hard41.

A number of non-convex penalties have been proposed as surrogates of the \({\ell }_{0}\)-norm to handle this issue. These include the \({\ell }_{\gamma }\)-norm (0 < γ < 1) penalty42, the Geman penalty43, the Smoothly Clipped Absolute Deviation (SCAD) penalty38, the Laplace penalty44, the Minimax Concave Penalty (MCP)45, the Exponential-Type Penalty (ETP)46 and the Logarithm penalty47. These non-convex functions have attractive theoretical properties because they are all singular at the origin and apply little or no additional penalty to large coefficients. Although they have been very successful in generalized linear models (GLMs), applying them to SCCA models to achieve sparsity and unbiased prediction simultaneously remains unexplored.

Therefore, it is essential and of great interest to investigate the performance of SCCA models based on these non-convex penalties. A major challenge of non-convex functions is the computational complexity. The local quadratic approximation (LQA) technique was introduced to solve the SCAD-penalized problem38. LQA approximates the objective by a locally quadratic expression, which can be solved like a ridge-penalized problem. Inspired by this, we propose a generic non-convex SCCA model with these non-convex penalties, together with a unified optimization algorithm based on the LQA technique and the Alternate Convex Search (ACS) method48. On both synthetic data and real imaging genetics data, the experimental results show that, with appropriate parameters, the non-convex SCCA methods achieve better canonical loading patterns and correlation coefficient estimates than the \({\ell }_{1}\)-norm based SCCA methods.

Methods

Throughout this paper, scalars are denoted by italic letters, column vectors by boldface lowercase letters, and matrices by boldface capitals. \(\Vert {\bf{u}}\Vert \) denotes the Euclidean norm of a vector u.

Preliminaries

Sparse Canonical Correlation Analysis (SCCA)

Let \({\bf{X}}\in { {\mathcal R} }^{n\times p}\) be a matrix representing the SNP biomarkers data, where n is the number of participants and p is the number of SNPs. Let \({\bf{Y}}\in { {\mathcal R} }^{n\times q}\) be the QT data with q being the number of imaging measurements. A typical SCCA model is defined as

where \({\bf{X}}{\bf{u}}\) and \({\bf{Y}}{\bf{v}}\) are the canonical variables, u and v are the corresponding canonical vectors to be estimated, and \({c}_{1}\), \({c}_{2}\) are tuning parameters that control the sparsity level of the solution. The penalty function could be the \({\ell }_{1}\)-norm penalty or one of its variants, such as the fused lasso, group lasso and graph lasso25,27,29,32,34.
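For reference, several of the cited \({\ell }_{1}\)-SCCA works write this kind of model as the constrained problem below. It is stated here as an assumed form consistent with the definitions above, with Ω denoting the penalty function; some methods, e.g.25, constrain \({\Vert {\bf{u}}\Vert }^{2}\le 1\) and \({\Vert {\bf{v}}\Vert }^{2}\le 1\) instead of the norms of \({\bf{X}}{\bf{u}}\) and \({\bf{Y}}{\bf{v}}\).

```latex
\min_{\mathbf{u},\mathbf{v}} \; -\mathbf{u}^{\top}\mathbf{X}^{\top}\mathbf{Y}\mathbf{v}
\quad \text{s.t.} \quad
\|\mathbf{X}\mathbf{u}\|^{2} \le 1,\;\;
\|\mathbf{Y}\mathbf{v}\|^{2} \le 1,\;\;
\Omega(\mathbf{u}) \le c_{1},\;\;
\Omega(\mathbf{v}) \le c_{2}
```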

Non-convex Penalty Functions for SCCA

In this paper, we investigate seven non-convex surrogates of the \({\ell }_{0}\)-norm in the SCCA model. They are singular at the origin, which is essential for achieving sparsity in the solution, and they do not over-penalize large coefficients. To facilitate a unified description, we denote the non-convex penalty as

where λ and γ are nonnegative parameters, and \({P}_{\lambda ,\gamma }(|{u}_{i}|)\) is a non-convex function. We absorb λ into the penalty because it cannot be decoupled from several penalties, such as the SCAD function38. The seven penalties are described in Table 1 and visualized in Fig. 1, where for clarity we drop the subscript i in \({u}_{i}\). Each curve has a sharp point at the origin, i.e., each penalty is singular at the origin, which is what produces sparse solutions. Moreover, the curves are concave in \(|{u}_{i}|\), monotonically decreasing on (−∞, 0] and monotonically increasing on [0, ∞). Therefore, although these penalties are not convex, they are piecewise continuously differentiable and their supergradients exist on both (−∞, 0] and [0, ∞)49. Table 1 also lists their supergradients \({P^{\prime} }_{\lambda ,\gamma }(|{u}_{i}|)\) with respect to \(|{u}_{i}|\).
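To make the shapes concrete, the sketch below codes each of the seven penalties and its supergradient as a function of t = |u_i|. These are common parameterizations from the non-convex penalty literature and are assumptions here: the scaling used in Table 1 may differ, and all function names are illustrative.

```python
import numpy as np

# Seven non-convex penalties P(t) and supergradients P'(t), as functions of
# t = |u_i| >= 0 with parameters lam (lambda) and gam (gamma).
# Common textbook parameterizations, assumed here; Table 1 may scale them differently.

def lgamma_pen(t, lam, gam):            # l_gamma "norm", 0 < gam < 1
    return lam * np.power(t, gam)

def lgamma_grad(t, lam, gam):           # unbounded at t = 0, returned as +inf
    with np.errstate(divide="ignore"):
        return np.where(t > 0, lam * gam * np.power(t, gam - 1.0), np.inf)

def geman_pen(t, lam, gam):
    return lam * t / (t + gam)

def geman_grad(t, lam, gam):
    return lam * gam / (t + gam) ** 2

def scad_pen(t, lam, gam):              # requires gam > 2; 3.7 is the usual choice
    mid = (-t**2 + 2 * gam * lam * t - lam**2) / (2 * (gam - 1))
    return np.where(t <= lam, lam * t,
                    np.where(t <= gam * lam, mid, lam**2 * (gam + 1) / 2))

def scad_grad(t, lam, gam):
    return np.where(t <= lam, lam, np.maximum(gam * lam - t, 0.0) / (gam - 1))

def laplace_pen(t, lam, gam):
    return lam * (1 - np.exp(-t / gam))

def laplace_grad(t, lam, gam):
    return lam / gam * np.exp(-t / gam)

def mcp_pen(t, lam, gam):
    return np.where(t <= gam * lam, lam * t - t**2 / (2 * gam), gam * lam**2 / 2)

def mcp_grad(t, lam, gam):
    return np.maximum(lam - t / gam, 0.0)

def etp_pen(t, lam, gam):
    return lam / (1 - np.exp(-gam)) * (1 - np.exp(-gam * t))

def etp_grad(t, lam, gam):
    return lam * gam / (1 - np.exp(-gam)) * np.exp(-gam * t)

def log_pen(t, lam, gam):
    return lam / np.log(gam + 1) * np.log(gam * t + 1)

def log_grad(t, lam, gam):
    return gam * lam / ((gam * t + 1) * np.log(gam + 1))

PENALTIES = {
    "lgamma": (lgamma_pen, lgamma_grad), "geman": (geman_pen, geman_grad),
    "scad": (scad_pen, scad_grad), "laplace": (laplace_pen, laplace_grad),
    "mcp": (mcp_pen, mcp_grad), "etp": (etp_pen, etp_grad),
    "log": (log_pen, log_grad),
}
```

For example, `scad_grad(np.array([0.5, 2.0, 5.0]), 1.0, 3.7)` gives λ = 1 for the small coefficient, (3.7 − 2)/2.7 ≈ 0.63 for the middle one and 0 for the large one, which is how SCAD avoids penalizing large coefficients.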

The Proposed Non-convex SCCA Model and Optimization Algorithm

Replacing the \({\ell }_{1}\)-norm constraints in the SCCA model, we define the unified non-convex SCCA model as follows

where \({\Omega }_{nc}({\bf{u}})\) and \({\Omega }_{nc}({\bf{v}})\) can be any of the non-convex functions listed in Table 1.

To solve the non-convex SCCA problem, we use the Lagrangian method,

which is equivalent to

from the optimization point of view. Here \({\alpha }_{1}\), \({\alpha }_{2}\), \({\lambda }_{1}\), \({\lambda }_{2}\) and γ are nonnegative tuning parameters. Next we show how to solve this non-convex problem.
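The later derivation uses only the following structure of the objective in (5), written out here as an assumed form: the factor 1/2 on the quadratic terms is a convention chosen so that the gradients take the form \({\alpha }_{1}{{\bf{X}}}^{\top}{\bf{X}}{\bf{u}}\), and \({\lambda }_{1}\), \({\lambda }_{2}\) enter through the penalties.

```latex
\mathcal{L}(\mathbf{u},\mathbf{v}) =
-\mathbf{u}^{\top}\mathbf{X}^{\top}\mathbf{Y}\mathbf{v}
+\frac{\alpha_{1}}{2}\|\mathbf{X}\mathbf{u}\|^{2}
+\frac{\alpha_{2}}{2}\|\mathbf{Y}\mathbf{v}\|^{2}
+\sum_{i=1}^{p} P_{\lambda_{1},\gamma}(|u_{i}|)
+\sum_{j=1}^{q} P_{\lambda_{2},\gamma}(|v_{j}|)
```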

The first term \(-{{\bf{u}}}^{\top}{{\bf{X}}}^{\top}{\bf{Y}}{\bf{v}}\) on the right-hand side of equation (5) is biconvex in u and v. \({\Vert {\bf{X}}{\bf{u}}\Vert }^{2}\) is convex in u, and \({\Vert {\bf{Y}}{\bf{v}}\Vert }^{2}\) is convex in v. It remains to approximate \({\Omega }_{nc}({\bf{u}})\) and \({\Omega }_{nc}({\bf{v}})\) by convex functions.

The local quadratic approximation (LQA) technique was introduced to express the SCAD penalty quadratically38. Based on LQA, we show how to represent these non-convex penalties in a unified way. First, consider the first-order Taylor expansion of \({P}_{\lambda ,\gamma }(\sqrt{\mu })\) at \({\mu }_{0}\):

where \({\mu }_{0}\) and μ are neighboring values, e.g., the squared estimates at two successive iterations during optimization. Substituting \(\mu ={u}_{i}^{2}\) and \({\mu }_{0}={({u}_{i}^{t})}^{2}\) into (6), we have

with \({P^{\prime} }_{\lambda ,\gamma }(|{u}_{i}^{t}|)\) being the supergradient of \({P}_{\lambda ,\gamma }(\cdot )\) at \(|{u}_{i}^{t}|\) (as shown in Table 1).
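A worked form of these two steps, derived here from the chain rule \(\frac{d}{d\mu }P(\sqrt{\mu })=P^{\prime} (\sqrt{\mu })/(2\sqrt{\mu })\):

```latex
P_{\lambda,\gamma}(\sqrt{\mu}) \approx P_{\lambda,\gamma}(\sqrt{\mu_0})
  + \frac{P'_{\lambda,\gamma}(\sqrt{\mu_0})}{2\sqrt{\mu_0}}\,(\mu-\mu_0),
\qquad
P_{\lambda,\gamma}(|u_i|) \approx P_{\lambda,\gamma}(|u_i^{t}|)
  + \frac{P'_{\lambda,\gamma}(|u_i^{t}|)}{2\,|u_i^{t}|}\,\bigl(u_i^{2}-(u_i^{t})^{2}\bigr)
```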

Then we obtain a quadratic approximation to \({\Omega }_{nc}({\bf{u}})\):

where

does not depend on u and thus does not affect the optimization.
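As a sanity check of this construction, the sketch below builds the quadratic surrogate with MCP as the example penalty (standard form with λ = 1 and γ = 3, assumed rather than taken from Table 1) and verifies numerically that it touches \({\Omega }_{nc}({\bf{u}})\) at \({{\bf{u}}}^{t}\) and never lies below it. The upper-bound property holds because each penalty is concave and nondecreasing in \(|{u}_{i}|\), so \({P}_{\lambda ,\gamma }(\sqrt{\mu })\) is concave in μ and its tangent line lies above it; this is the majorization view of LQA discussed by Hunter and Li50. The function names are hypothetical.

```python
import numpy as np

def mcp(t, lam=1.0, gam=3.0):
    """MCP penalty at t = |u| (standard form, assumed)."""
    return np.where(t <= gam * lam, lam * t - t**2 / (2 * gam), gam * lam**2 / 2)

def mcp_grad(t, lam=1.0, gam=3.0):
    """Supergradient of the MCP penalty at t = |u|."""
    return np.maximum(lam - t / gam, 0.0)

def lqa_surrogate(u, u_t, pen, grad):
    """Quadratic LQA surrogate of sum_i P(|u_i|) built at u_t (entries of u_t nonzero).

    Returns sum_i 0.5 * w_i * u_i**2 + const with w_i = P'(|u_t_i|) / |u_t_i|;
    const = sum_i [P(|u_t_i|) - 0.5 * P'(|u_t_i|) * |u_t_i|] does not depend on u.
    """
    a = np.abs(u_t)
    w = grad(a) / a
    const = np.sum(pen(a) - 0.5 * grad(a) * a)
    return 0.5 * np.sum(w * u**2) + const
```

Only the first term depends on u, which is why the constant can be dropped when minimizing.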

Similarly, we can construct a quadratic approximation to \({\Omega }_{nc}({\bf{v}})\)

where

does not depend on v and thus does not affect the optimization.

Denote the estimates of u and v at the t-th iteration as \({{\bf{u}}}^{t}\) and \({{\bf{v}}}^{t}\), respectively. To update the estimates at the (t + 1)-th iteration, we substitute the approximations of \({\Omega }_{nc}({\bf{u}})\) and \({\Omega }_{nc}({\bf{v}})\) in equations (8) and (9) into \({\mathcal{L}}({\bf{u}},{\bf{v}})\) in equation (5), and solve the resulting approximation of the original problem:

Equation (10) is quadratic and biconvex in u and v, i.e., it is convex in u for fixed v, and vice versa. According to the alternate convex search (ACS) method, which is designed for biconvex problems48, the (t + 1)-th estimates of u and v can be computed via

Both subproblems above are quadratic, so they have closed-form solutions. Taking the partial derivatives of \({\mathcal{L}}({\bf{u}},{\bf{v}})\) in (5) with respect to u and v, with the penalties replaced by their quadratic approximations, and setting them to zero, we have

where \({{\bf{D}}}_{1}^{t}\) is a diagonal matrix whose i-th diagonal entry is \(\frac{{P^{\prime} }_{{\lambda }_{1},\gamma }(|{u}_{i}^{t}|)}{|{u}_{i}^{t}|}\) (i ∈ [1, p]), obtained by taking the partial derivative of equation (7) with respect to \({u}_{i}\). Similarly, \({{\bf{D}}}_{2}^{t}\) is a diagonal matrix whose j-th diagonal entry is \(\frac{{P^{\prime} }_{{\lambda }_{2},\gamma }(|{v}_{j}^{t}|)}{|{v}_{j}^{t}|}\) (j ∈ [1, q]). However, the i-th diagonal entry of \({{\bf{D}}}_{1}^{t}\) is undefined if \({u}_{i}^{t}=0\). Following the perturbed version of LQA50, we address this by adding a small perturbation term, so that the i-th diagonal entry of \({{\bf{D}}}_{1}^{t}\) becomes

where ζ is a small positive number. Hunter and Li50 showed that this modification still guarantees the optimization of equation (11). We then have the following updating expressions at the (t + 1)-th iteration:

We alternate between the two update equations above to gradually refine the estimates of u and v until convergence. The pseudocode of the non-convex SCCA algorithm is given in Algorithm 1.
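The effect of the perturbation can be seen in a few lines. This sketch assumes the perturbed entry has the form \({P^{\prime} }_{\lambda ,\gamma }(|{u}_{i}^{t}|)/(|{u}_{i}^{t}|+\zeta )\), with ζ in the denominator as in Hunter and Li's perturbed LQA; the Laplace penalty (standard form, λ = 1, γ = 0.5) and the function names are illustrative choices.

```python
import numpy as np

def laplace_grad(t, lam=1.0, gam=0.5):
    """Supergradient of the Laplace penalty lam * (1 - exp(-t / gam)) at t = |u|."""
    return lam / gam * np.exp(-t / gam)

def lqa_weights(u_t, grad, zeta=1e-8):
    """Diagonal of D^t under an assumed perturbed-LQA form P'(|u_i^t|) / (|u_i^t| + zeta).

    Without zeta the entry P'(|u_i^t|) / |u_i^t| is undefined at u_i^t = 0;
    with it, a zero coefficient gets a very large but finite weight.
    """
    a = np.abs(np.asarray(u_t, dtype=float))
    return grad(a) / (a + zeta)
```

For example, `lqa_weights(np.array([0.0, 1.0, 2.0]), laplace_grad)` returns roughly `[2e8, 0.27, 0.018]`: the huge weight on the zero entry keeps it pinned near zero in the next ridge-type solve, while larger coefficients receive progressively smaller weights.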

Computational Analysis

In Algorithm 1, Steps 3 and 6 are linear in the dimensions of u and v, respectively, and are cheap to compute. Steps 4 and 7 are the critical steps of the proposed algorithm. Because the updates have closed forms, they can be computed by solving a system of linear equations, which avoids explicitly computing the matrix inverse. Steps 5 and 8 are rescaling steps and are also cheap. Therefore, the whole algorithm is efficient.
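A compact sketch of the whole loop, under stated assumptions: the objective has the 1/2-scaled form, so the u-step solves \(({\alpha }_{1}{{\bf{X}}}^{\top}{\bf{X}}+{{\bf{D}}}_{1}^{t}){\bf{u}}={{\bf{X}}}^{\top}{\bf{Y}}{{\bf{v}}}^{t}\); MCP is the penalty; the perturbation uses \(|{u}_{i}^{t}|+\zeta \); and the rescaling steps normalize to \(\Vert {\bf{X}}{\bf{u}}\Vert =\Vert {\bf{Y}}{\bf{v}}\Vert =1\). `nonconvex_scca` is a hypothetical name, and this is not the authors' released code.

```python
import numpy as np

def mcp_grad(t, lam, gam):
    """Supergradient of the MCP penalty at t = |u| (standard form, assumed)."""
    return np.maximum(lam - t / gam, 0.0)

def nonconvex_scca(X, Y, grad=mcp_grad, lam=(1.0, 1.0), gam=3.0,
                   alpha=(1.0, 1.0), zeta=1e-8, eps=1e-5, max_iter=500):
    """Hypothetical sketch of LQA + alternate convex search for one pair (u, v).

    Assumes centered X (n x p) and Y (n x q) and the objective
    -u'X'Yv + a1/2 ||Xu||^2 + a2/2 ||Yv||^2 + sum_i P(|u_i|) + sum_j P(|v_j|).
    """
    XtX, YtY, XtY = X.T @ X, Y.T @ Y, X.T @ Y      # computed once, reused
    u = np.ones(X.shape[1])
    v = np.ones(Y.shape[1])
    u /= np.linalg.norm(X @ u)
    v /= np.linalg.norm(Y @ v)
    for _ in range(max_iter):
        u_old, v_old = u, v
        # Perturbed LQA weights (diagonal of D1), then a ridge-type linear solve.
        d1 = grad(np.abs(u), lam[0], gam) / (np.abs(u) + zeta)
        u = np.linalg.solve(alpha[0] * XtX + np.diag(d1), XtY @ v)
        u /= np.linalg.norm(X @ u)                   # rescale so that ||Xu|| = 1
        # Same for v, using the freshly updated u (alternate convex search).
        d2 = grad(np.abs(v), lam[1], gam) / (np.abs(v) + zeta)
        v = np.linalg.solve(alpha[1] * YtY + np.diag(d2), XtY.T @ u)
        v /= np.linalg.norm(Y @ v)                   # rescale so that ||Yv|| = 1
        if (np.max(np.abs(u - u_old)) <= eps and
                np.max(np.abs(v - v_old)) <= eps):
            break
    return u, v
```

Each pass computes the weights, solves a linear system and rescales, first for u and then for v, and stops with a max-absolute-change rule. `np.linalg.solve` is used instead of forming an inverse, and the Gram matrices are computed once outside the loop.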

Data Availability

The synthetic data sets generated in this work are available from the corresponding authors’ web sites, http://www.escience.cn/people/dulei/code.html and http://www.iu.edu/~shenlab/tools/ncscca/. The real data set is publicly available in the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database repository, http://adni.loni.usc.edu.

Experiments and Datasets

Data Description

Synthetic Dataset

There are four data sets with sparse true signals for both u and v, i.e., only a small subset of features is nonzero. For both u and v, the number of features exceeds the number of observations, simulating a large-(p + q)-small-n task. The generating process is as follows. We first generate u and v with most features being zero. Next, the latent variable \({\bf{z}}\) is drawn from the Gaussian distribution \(N({\bf{0}},{{\bf{I}}}_{n\times n})\). Then we create the data X from \({{\bf{x}}}_{i}\sim N({z}_{i}{\bf{u}},{{\boldsymbol{\Sigma }}}_{x})\) and the data Y from \({{\bf{y}}}_{i}\sim N({z}_{i}{\bf{v}},{{\boldsymbol{\Sigma }}}_{y})\), where \({({{\boldsymbol{\Sigma }}}_{x})}_{jk}=\exp (-|{u}_{j}-{u}_{k}|)\) and \({({{\boldsymbol{\Sigma }}}_{y})}_{jk}=\exp (-|{v}_{j}-{v}_{k}|)\). The first three sets have 250 features for u and 600 for v, but different correlation coefficients. The last data set has 500 features in u and 900 features in v. The true signal of every data set is shown in Fig. 2 (top row).
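A minimal sketch of this generative process, read literally from the description above. The function name is hypothetical, and the sample size and signal values in the test are illustrative, since they are not given here. Read literally, this covariance gives features with equal weights (for example, all zero-weight features) perfectly correlated noise, because exp(0) = 1.

```python
import numpy as np

def make_synthetic(n, u, v, seed=0):
    """Simulate X, Y from a shared latent variable z (sketch of the description above).

    x_i ~ N(z_i u, Sigma_x), y_i ~ N(z_i v, Sigma_y),
    with (Sigma_x)_jk = exp(-|u_j - u_k|) and (Sigma_y)_jk = exp(-|v_j - v_k|).
    """
    rng = np.random.default_rng(seed)
    z = rng.standard_normal(n)                          # z ~ N(0, I_n)
    sigma_x = np.exp(-np.abs(u[:, None] - u[None, :]))
    sigma_y = np.exp(-np.abs(v[:, None] - v[None, :]))
    # The covariances are positive semidefinite but may be singular; NumPy's
    # default SVD-based sampler handles that case.
    X = np.outer(z, u) + rng.multivariate_normal(np.zeros(u.size), sigma_x, size=n)
    Y = np.outer(z, v) + rng.multivariate_normal(np.zeros(v.size), sigma_y, size=n)
    return X, Y
```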

Figure 2. Canonical loadings estimated on four synthetic data sets. The first column shows results for Data1, the second column for Data2, and so forth. The first row is the ground truth, and each remaining row corresponds to an SCCA method: (1) Ground Truth. (2) L1-SCCA. (3) L1-NSCCA. (4) L1-S2CCA. (5) \({\ell }_{\gamma }\)-norm, and so forth. For each data set and each method, the estimated weights of u are shown in the left panel and those of v in the right panel. In each heat map, the x-axis indicates the indices of elements in u or v, and the y-axis indicates the indices of the cross-validation folds.

Real Neuroimaging Genetics Dataset

Data used in the preparation of this article were obtained from the ADNI database (adni.loni.usc.edu). The ADNI was launched in 2003 as a public-private partnership by the National Institute on Aging (NIA), the National Institute of Biomedical Imaging and Bioengineering (NIBIB), the Food and Drug Administration (FDA) and others, led by Principal Investigator Michael W. Weiner, MD. The primary goal of ADNI has been to test whether serial magnetic resonance imaging (MRI), positron emission tomography (PET), other biological markers, and clinical and neuropsychological assessment can be combined to measure the progression of mild cognitive impairment (MCI) and early Alzheimer’s disease (AD). For up-to-date information, see www.adni-info.org. The study protocols were approved by the Institutional Review Boards of all participating centers (Northwestern Polytechnical University, Indiana University and ADNI; a complete list of ADNI sites is available at http://www.adni-info.org/), and written informed consent was obtained from all participants or authorized representatives. All analyses were performed on the de-identified ADNI data, and were determined by the Indiana University Human Subjects Office as "IU IRB Review Not Required".

The real neuroimaging genetics dataset was collected from 743 participants, whose details are presented in Table 2. The genotyping data contained 163 candidate SNP biomarkers from AD-risk genes, e.g., APOE. The structural MRI scans were processed with voxel-based morphometry (VBM) in SPM851,52. Briefly, scans were aligned to a T1-weighted template image, segmented into gray matter (GM), white matter (WM) and cerebrospinal fluid (CSF) maps, normalized to MNI space, and smoothed with an 8 mm FWHM kernel. We subsampled the whole brain and generated 465 voxels spanning all brain ROIs. Regression was used to remove the effects of baseline age, gender, education, and handedness from these VBM measures. The aim of this study is to evaluate the correlation between the SNPs and the VBM measures, and further identify which SNPs and ROIs are associated.
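The covariate adjustment can be sketched as residualization. The source does not specify the regression model, so ordinary least squares with an intercept is an assumption here, and `regress_out` is a hypothetical helper.

```python
import numpy as np

def regress_out(Y, C):
    """Residuals of each column of Y after least-squares regression on covariates C.

    C holds one column per covariate (e.g. baseline age, gender, education,
    handedness); an intercept column is added here.
    """
    C1 = np.column_stack([np.ones(C.shape[0]), C])
    beta, *_ = np.linalg.lstsq(C1, Y, rcond=None)
    return Y - C1 @ beta
```

The residuals are orthogonal to every covariate and to the intercept, so the adjusted VBM measures have zero mean and no remaining linear association with the covariates.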

Experimental Setup

Benchmarks

In this paper, we are mainly interested in whether the non-convex SCCA methods can improve on the \({\ell }_{1}\)-SCCA method, which is the motivation of this work. It is therefore reasonable to compare against \({\ell }_{1}\)-norm based methods, and structure-aware SCCA methods such as28,29,32,34 are not included as benchmarks. Based on different mathematical techniques, there are three different \({\ell }_{1}\)-SCCA algorithms: the singular value decomposition based method25, the primal-dual based method29 and the LQA based method32. Although the latter two were proposed for capturing group or network structure, they can easily be reduced to \({\ell }_{1}\)-norm constrained methods, e.g., by setting the parameters associated with the structure penalty to zero29. To make the comparison fair and convincing, we choose all three as benchmarks. With a slight abuse of notation, we use the penalty name to refer to a non-convex SCCA method, e.g., ETP for the ETP based SCCA method. We call the \({\ell }_{1}\)-norm based methods L1-SCCA25, L1-S2CCA32, and L1-NSCCA29.

Parameter Tuning

There are four parameters \({\lambda }_{i}\) (i = 1, 2) and \({\alpha }_{i}\) (i = 1, 2) associated with the non-convex SCCA methods, and one pivotal parameter γ. According to their definitions, these non-convex penalties can approximate the \({\ell }_{0}\)-norm given an appropriate γ. In this situation, \({\lambda }_{i}\) and \({\alpha }_{i}\) play a very weak role, because theoretically the \({\ell }_{0}\)-norm penalized problem does not depend on these parameters. Based on this consideration, we tune only γ, rather than \({\lambda }_{i}\) and \({\alpha }_{i}\), using a grid search strategy. This reduces the running time dramatically without significantly affecting performance. Further, we observe that two values of γ perform similarly if they are close, so γ is tuned over a discrete set of values rather than a continuous range. For the SCAD penalty, we set γ = 3.7, as suggested by Fan and Li38. The tuning range of γ for each penalty is given in Table 3. We simply set \({\lambda }_{i}\) and \({\alpha }_{i}\) to 1 in this study.
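A minimal sketch of this tuning loop: one five-fold split shared by all candidates, and a grid over a discrete set of γ values, with \({\lambda }_{i}\) and \({\alpha }_{i}\) held fixed inside the solver. The selection criterion (mean held-out correlation between Xu and Yv) and all function names are assumptions; `fit_with_gamma` stands for any SCCA solver that accepts γ.

```python
import numpy as np

def kfold_indices(n, k=5, seed=0):
    """Shuffle 0..n-1 once and split into k folds (reused for every gamma)."""
    perm = np.random.default_rng(seed).permutation(n)
    return np.array_split(perm, k)

def cv_correlation(X, Y, fit, k=5, seed=0):
    """Mean test-fold correlation of Xu and Yv for a solver fit(X, Y) -> (u, v)."""
    scores = []
    for test in kfold_indices(X.shape[0], k, seed):
        train = np.setdiff1d(np.arange(X.shape[0]), test)
        u, v = fit(X[train], Y[train])
        scores.append(np.corrcoef(X[test] @ u, Y[test] @ v)[0, 1])
    return float(np.mean(scores))

def select_gamma(X, Y, gammas, fit_with_gamma, k=5, seed=0):
    """Grid search over a discrete set of gamma values; returns (best, all scores)."""
    scores = {g: cv_correlation(X, Y, lambda A, B: fit_with_gamma(A, B, g), k, seed)
              for g in gammas}
    return max(scores, key=scores.get), scores
```

Fixing the seed keeps the same partition for every candidate γ, matching the requirement that all methods share one cross-validation split.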

Termination Criterion

We use \({\max }_{i}|{u}_{i}^{t+1}-{u}_{i}^{t}|\le \varepsilon \) and \({\max }_{j}|{v}_{j}^{t+1}-{v}_{j}^{t}|\le \varepsilon \) as the termination condition for Algorithm 1, where ε is a user-defined error bound. In this study, we set \(\varepsilon ={10}^{-5}\) based on empirical experiments. All methods use the same setup, i.e., the same five-fold cross-validation partition, and run on the same platform.

Results on Synthetic Data

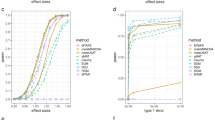

Figure 2 shows the heat maps of canonical loadings estimated by all SCCA methods, where each row corresponds to one method. The non-convex SCCA methods and L1-SCCA clearly identify signal positions identical to the ground truth across the four data sets. Besides the true signals, however, L1-SCCA introduces several undesired signals, which makes it inferior to our methods. In contrast, L1-NSCCA recovers only part of the ground truth, and L1-S2CCA performs unstably, failing on some folds. We also rank these methods using the AUC (area under the ROC curve) criterion in Table 4, where a higher value indicates better performance. The non-convex SCCA methods have the highest score in almost every case. L1-SCCA scores similarly to the proposed methods, but, as shown next, it pays the price of reduced prediction ability. Table 5 presents the estimated correlation coefficients on both training and testing data, with the best values shown in boldface. The best values are obtained by different proposed methods on different data sets, with the Log method winning most often. This demonstrates that the non-convex methods outperform the \({\ell }_{1}\)-norm based SCCA methods in terms of prediction power. In summary, the proposed methods identify accurate and sparse canonical loading patterns and obtain high correlation coefficients simultaneously, while the \({\ell }_{1}\)-norm based SCCA methods cannot.
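One way to compute such an AUC for loading recovery, sketched under assumptions not stated in the text: the absolute estimated weights serve as scores, the true nonzero positions are the positives, and ties count as one half (the rank-sum, or Mann-Whitney, form of the AUC). The function name is hypothetical.

```python
import numpy as np

def support_auc(weights, true_support):
    """AUC for recovering the true nonzero positions from |weights|.

    Equals the probability that a randomly chosen true-signal feature receives a
    larger |weight| than a randomly chosen null feature, counting ties as 1/2.
    """
    s = np.abs(np.asarray(weights, dtype=float))
    mask = np.asarray(true_support, dtype=bool)
    pos, neg = s[mask], s[~mask]
    greater = np.sum(pos[:, None] > neg[None, :])
    ties = np.sum(pos[:, None] == neg[None, :])
    return float((greater + 0.5 * ties) / (pos.size * neg.size))
```

A per-fold AUC computed this way can then be averaged across the five folds for each method.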

Results on Real Neuroimaging Genetics Data

In this real data study, the genotyping data are denoted by X and the imaging data by Y. The vector u contains the weights of all SNPs, and v contains the weights of all imaging markers. The canonical correlation coefficient is defined as the Pearson correlation coefficient between Xu and Yv, i.e., \({({\bf{X}}{\bf{u}})}^{\top}{\bf{Y}}{\bf{v}}/(\Vert {\bf{X}}{\bf{u}}\Vert \Vert {\bf{Y}}{\bf{v}}\Vert )\), which equals the Pearson correlation when the columns of X and Y are centered.
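A short sketch of this computation. The function name is hypothetical; it centers the columns first so that the normalized inner product coincides with the Pearson correlation even when the raw data have nonzero means.

```python
import numpy as np

def canonical_correlation(X, Y, u, v):
    """Correlation between the canonical variables Xu and Yv.

    (Xu)'(Yv) / (||Xu|| ||Yv||) is the Pearson correlation only when Xu and Yv
    have zero mean, so the columns of X and Y are centered first.
    """
    a = (X - X.mean(axis=0)) @ u
    b = (Y - Y.mean(axis=0)) @ v
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))
```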

Figure 3 presents heat maps of the canonical loadings estimated on the training sets. In this figure, each row shows the two weight vectors of an SCCA method, where a larger weight indicates greater importance. The weights associated with the SNPs are shown in the left panel, and those associated with the voxels in the right panel. The proposed non-convex SCCA methods obtain very clean and sparse weights for both u and v. The largest signal on the genetic side is the APOE e4 SNP rs429358, which has previously been reported to be related to AD53. In the right panel, the largest signal for all SCCA methods comes from the hippocampus. This is one of the most notable indicators of AD, since hippocampal atrophy measured with MRI has been shown to be related to brain atrophy and neuron loss in AD cohorts53. In addition, the L1-S2CCA and SCAD methods identify a weak signal from the parahippocampal gyrus, which has previously been reported as an early biomarker of AD54. On some folds, the Log method also identifies the lingual region, the parahippocampal gyrus and the vermis. Interestingly, all three regions have been shown to be correlated with AD, and could be further considered as indicative biomarkers observable prior to a dementia diagnosis. For example, Sjöbeck and Englund reported that molecular layer gliosis and atrophy in the vermis are clearly more severe in AD patients than in healthy controls55. This is meaningful because the non-convex SCCA methods provide useful clues for further investigation. In contrast, L1-SCCA and L1-NSCCA are not good choices, since they identify too many signals, which may misguide subsequent investigation. The figure shows that L1-S2CCA could be an alternative choice for sparse imaging genetics analysis, but it performs unstably across the five folds. Overall, the non-convex methods are more consistent and stable than the \({\ell }_{1}\)-SCCA methods.

To show the results more clearly, we map the canonical weights of the imaging measurements (averaged across the five folds) from each SCCA method onto the brain in Fig. 4. The figure confirms that L1-SCCA and L1-NSCCA yield many non-sparse signals. L1-S2CCA identifies fewer signals than both L1-SCCA and L1-NSCCA, but more than all the non-convex SCCA methods. All the non-convex SCCA methods highlight only a small region of the whole brain. This again shows that the proposed methods yield better canonical weights, which reduce the effort of further investigation.

Figure 3. Canonical loadings estimated on real imaging genetics data. Each row corresponds to an SCCA method: (1) L1-SCCA, (2) L1-NSCCA, (3) L1-S2CCA, (4) \({\ell }_{\gamma }\)-norm, and so forth. For each method, the estimated u is shown in the left panel and v in the right panel. In each heat map, the x-axis indicates the indices of elements in u or v (i.e., SNPs or ROIs), and the y-axis indicates the indices of the cross-validation folds.

Figure 4. Averaged canonical weights v estimated by each SCCA method, mapped onto the brain. The left and right panels each show five methods, with one row per SCCA method. L1-SCCA identifies the most signals, followed by L1-NSCCA and L1-S2CCA. All the proposed methods identify a clean signal that facilitates further investigation.

In addition, Table 6 reports both training and testing correlation coefficients as mean ± standard deviation. The training results of all methods are similar, with the Log method achieving the highest value of 0.33 ± 0.03. For the testing results, which are our primary interest, all the non-convex SCCA methods obtain better values than the \({\ell }_{1}\)-SCCA methods. Moreover, the gap between training and testing performance is much smaller for the proposed methods than for the three \({\ell }_{1}\)-SCCA methods, suggesting that the non-convex methods generalize better and are less prone to overfitting. Overall, the results on the real imaging genetics data show that the proposed SCCA methods extract more accurate and sparser canonical weights for both genetic and imaging biomarkers, and obtain higher correlation coefficients than the \({\ell }_{1}\)-SCCA methods.

Conclusion

We have proposed a unified non-convex SCCA model, based on a family of non-convex penalty functions, together with an efficient optimization algorithm. These penalties are concave in \(|{u}_{i}|\) and piecewise continuously differentiable. We approximate them by quadratic (\({\ell }_{2}\)-type) functions via the local quadratic approximation (LQA)38, so each update has a closed form and the proposed algorithm is effective and runs fast.

We compared the non-convex methods with three state-of-the-art \({\ell }_{1}\)-SCCA methods, i.e., L1-SCCA25, L1-S2CCA32, and L1-NSCCA29, using both simulation data and real imaging genetics data. The simulation data have different ground truth structures. On the simulation data, the non-convex SCCA methods identify cleaner and better canonical loadings than the three \({\ell }_{1}\)-SCCA methods. They also recover higher correlation coefficients, suggesting that the \({\ell }_{1}\)-SCCA methods have suboptimal prediction capability because they may over-penalize large coefficients. On the real data, the proposed methods discover a pair of meaningful genetic and brain imaging biomarkers, while the \({\ell }_{1}\)-SCCA methods return too many irrelevant signals. The non-convex SCCA methods also obtain better testing correlation coefficients. This supports our motivation that non-convex penalties can improve prediction ability and thus generalization. The parameter γ plays a key role in these non-convex penalties. In future work, we will investigate how to choose a reasonable γ, and explore how to incorporate structure information into the model, since extracting structure information is an important task in brain imaging genetics as well as in biology studies.

References

Hibar, D. P., Kohannim, O., Stein, J. L., Chiang, M.-C. & Thompson, P. M. Multilocus genetic analysis of brain images. Frontiers in Genetics 2, 73 (2011).

Hariri, A. R., Drabant, E. M. & Weinberger, D. R. Imaging genetics: perspectives from studies of genetically driven variation in serotonin function and corticolimbic affective processing. Biological Psychiatry 59, 888–897 (2006).

Viding, E., Williamson, D. E. & Hariri, A. R. Developmental imaging genetics: challenges and promises for translational research. Development and Psychopathology 18, 877–892 (2006).

Mattay, V. S., Goldberg, T. E., Sambataro, F. & Weinberger, D. R. Neurobiology of cognitive aging: insights from imaging genetics. Biological Psychology 79, 9–22 (2008).

Bigos, K. L. & Weinberger, D. R. Imaging genetics - days of future past. NeuroImage 53, 804–809 (2010).

Scharinger, C., Rabl, U., Sitte, H. H. & Pezawas, L. Imaging genetics of mood disorders. NeuroImage 53, 810–821 (2010).

Potkin, S. G. et al. Genome-wide strategies for discovering genetic influences on cognition and cognitive disorders: methodological considerations. Cognitive Neuropsychiatry 14, 391–418 (2009).

Kim, S. et al. Influence of genetic variation on plasma protein levels in older adults using a multi-analyte panel. PLoS One 8, e70269 (2013).

Shen, L. et al. Whole genome association study of brain-wide imaging phenotypes for identifying quantitative trait loci in MCI and AD: A study of the ADNI cohort. NeuroImage 53, 1051–1063 (2010).

Winkler, A. M. et al. Cortical thickness or grey matter volume? The importance of selecting the phenotype for imaging genetics studies. NeuroImage 53, 1135–1146 (2010).

Meda, S. A. et al. A large scale multivariate parallel ICA method reveals novel imaging–genetic relationships for Alzheimer’s disease in the ADNI cohort. NeuroImage 60, 1608–1621 (2012).

Nho, K. et al. Whole-exome sequencing and imaging genetics identify functional variants for rate of change in hippocampal volume in mild cognitive impairment. Molecular Psychiatry 18, 781 (2013).

Shen, L. et al. Genetic analysis of quantitative phenotypes in AD and MCI: imaging, cognition and biomarkers. Brain Imaging and Behavior 8, 183–207 (2014).

Saykin, A. J. et al. Genetic studies of quantitative MCI and AD phenotypes in ADNI: Progress, opportunities, and plans. Alzheimer’s & Dementia 11, 792–814 (2015).

Wang, H. et al. Identifying quantitative trait loci via group-sparse multitask regression and feature selection: an imaging genetics study of the ADNI cohort. Bioinformatics 28, 229–237 (2012).

Vounou, M., Nichols, T. E. & Montana, G. Discovering genetic associations with high-dimensional neuroimaging phenotypes: A sparse reduced-rank regression approach. NeuroImage 53, 1147–1159 (2010).

Vounou, M. et al. Sparse reduced-rank regression detects genetic associations with voxel-wise longitudinal phenotypes in Alzheimer’s disease. NeuroImage 60, 700–716 (2012).

Zhu, X., Suk, H.-I., Huang, H. & Shen, D. Structured sparse low-rank regression model for brain-wide and genome-wide associations. In International Conference on Medical Image Computing and Computer-Assisted Intervention, 344–352 (Springer, 2016).

Liu, J. et al. Combining fMRI and SNP data to investigate connections between brain function and genetics using parallel ICA. Human Brain Mapping 30, 241–255 (2009).

Geladi, P. & Kowalski, B. R. Partial least-squares regression: a tutorial. Analytica Chimica Acta 185, 1–17 (1986).

Grellmann, C. et al. Comparison of variants of canonical correlation analysis and partial least squares for combined analysis of MRI and genetic data. NeuroImage 107, 289–310 (2015).

Hardoon, D., Szedmak, S. & Shawe-Taylor, J. Canonical correlation analysis: An overview with application to learning methods. Neural Computation 16, 2639–2664 (2004).

Hardoon, D. R. & Shawe-Taylor, J. Sparse canonical correlation analysis. Machine Learning 83, 331–353 (2011).

Hotelling, H. Relations between two sets of variates. Biometrika 28, 321–377 (1936).

Witten, D. M., Tibshirani, R. & Hastie, T. A penalized matrix decomposition, with applications to sparse principal components and canonical correlation analysis. Biostatistics 10, 515–534 (2009).

Witten, D. M. & Tibshirani, R. J. Extensions of sparse canonical correlation analysis with applications to genomic data. Statistical Applications in Genetics and Molecular Biology 8, 1–27 (2009).

Parkhomenko, E., Tritchler, D. & Beyene, J. Sparse canonical correlation analysis with application to genomic data integration. Statistical Applications in Genetics and Molecular Biology 8, 1–34 (2009).

Chen, X., Liu, H. & Carbonell, J. G. Structured sparse canonical correlation analysis. In International Conference on Artificial Intelligence and Statistics, 199–207 (2012).

Chen, X. & Liu, H. An efficient optimization algorithm for structured sparse CCA, with applications to eQTL mapping. Statistics in Biosciences 4, 3–26 (2012).

Chen, J., Bushman, F. D. et al. Structure-constrained sparse canonical correlation analysis with an application to microbiome data analysis. Biostatistics 14, 244–258 (2013).

Lin, D., Calhoun, V. D. & Wang, Y.-P. Correspondence between fMRI and SNP data by group sparse canonical correlation analysis. Medical Image Analysis 18, 891–902 (2014).

Du, L. et al. A novel structure-aware sparse learning algorithm for brain imaging genetics. In International Conference on Medical Image Computing and Computer Assisted Intervention, 329–336 (2014).

Yan, J. et al. Transcriptome-guided amyloid imaging genetic analysis via a novel structured sparse learning algorithm. Bioinformatics 30, i564–i571 (2014).

Du, L. et al. Structured sparse canonical correlation analysis for brain imaging genetics: An improved graphnet method. Bioinformatics 32, 1544–1551 (2016).

Du, L. et al. Sparse canonical correlation analysis via truncated ℓ1-norm with application to brain imaging genetics. In IEEE International Conference on Bioinformatics and Biomedicine, 707–711 (IEEE, 2016).

Du, L. et al. Identifying associations between brain imaging phenotypes and genetic factors via a novel structured SCCA approach. In International Conference on Information Processing in Medical Imaging, 543–555 (Springer, 2017).

Meinshausen, N. & Bühlmann, P. High-dimensional graphs and variable selection with the lasso. The Annals of Statistics 34, 1436–1462 (2006).

Fan, J. & Li, R. Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association 96, 1348–1360 (2001).

Zou, H. The adaptive lasso and its oracle properties. Journal of the American Statistical Association 101, 1418–1429 (2006).

Shen, X., Pan, W. & Zhu, Y. Likelihood-based selection and sharp parameter estimation. Journal of the American Statistical Association 107, 223–232 (2012).

Fung, G. & Mangasarian, O. Equivalence of minimal ℓ0- and ℓp-norm solutions of linear equalities, inequalities and linear programs for sufficiently small p. Journal of Optimization Theory and Applications 151, 1–10 (2011).

Frank, L. E. & Friedman, J. H. A statistical view of some chemometrics regression tools. Technometrics 35, 109–135 (1993).

Geman, D. & Yang, C. Nonlinear image recovery with half-quadratic regularization. IEEE Transactions on Image Processing 4, 932–946 (1995).

Trzasko, J. & Manduca, A. Highly undersampled magnetic resonance image reconstruction via homotopic ℓ0-minimization. IEEE Transactions on Medical Imaging 28, 106–121 (2009).

Zhang, C.-H. Nearly unbiased variable selection under minimax concave penalty. Annals of Statistics 38, 894–942 (2010).

Gao, C., Wang, N., Yu, Q. & Zhang, Z. A feasible nonconvex relaxation approach to feature selection. In AAAI, 356–361 (2011).

Friedman, J. H. Fast sparse regression and classification. International Journal of Forecasting 28, 722–738 (2012).

Gorski, J., Pfeuffer, F. & Klamroth, K. Biconvex sets and optimization with biconvex functions: a survey and extensions. Mathematical Methods of Operations Research 66, 373–407 (2007).

Lu, C., Tang, J., Yan, S. & Lin, Z. Generalized nonconvex nonsmooth low-rank minimization. In IEEE Conference on Computer Vision and Pattern Recognition, 4130–4137 (2014).

Hunter, D. R. & Li, R. Variable selection using MM algorithms. Annals of Statistics 33, 1617–1642 (2005).

Ashburner, J. & Friston, K. J. Voxel-based morphometry–the methods. NeuroImage 11, 805–821 (2000).

Risacher, S. L., Saykin, A. J. et al. Baseline MRI predictors of conversion from MCI to probable AD in the ADNI cohort. Current Alzheimer Research 6, 347–361 (2009).

Hampel, H. et al. Core candidate neurochemical and imaging biomarkers of Alzheimer’s disease. Alzheimer’s & Dementia 4, 38–48 (2008).

Echavarri, C. et al. Atrophy in the parahippocampal gyrus as an early biomarker of Alzheimer’s disease. Brain Structure and Function 215, 265–271 (2011).

Sjöbeck, M. & Englund, E. Alzheimer’s disease and the cerebellum: a morphologic study on neuronal and glial changes. Dementia and Geriatric Cognitive Disorders 12, 211–218 (2001).

Acknowledgements

Data collection and sharing for this project was funded by the Alzheimer’s Disease Neuroimaging Initiative (ADNI) (National Institutes of Health Grant U01 AG024904) and DOD ADNI (Department of Defense award number W81XWH-12-2-0012). ADNI is funded by the National Institute on Aging, the National Institute of Biomedical Imaging and Bioengineering, and through generous contributions from the following: AbbVie, Alzheimer’s Association; Alzheimer’s Drug Discovery Foundation; Araclon Biotech; BioClinica, Inc.; Biogen; Bristol-Myers Squibb Company; CereSpir, Inc.; Cogstate; Eisai Inc.; Elan Pharmaceuticals, Inc.; Eli Lilly and Company; EuroImmun; F. Hoffmann-La Roche Ltd and its affiliated company Genentech, Inc.; Fujirebio; GE Healthcare; IXICO Ltd.; Janssen Alzheimer Immunotherapy Research & Development, LLC.; Johnson & Johnson Pharmaceutical Research & Development LLC.; Lumosity; Lundbeck; Merck & Co., Inc.; Meso Scale Diagnostics, LLC.; NeuroRx Research; Neurotrack Technologies; Novartis Pharmaceuticals Corporation; Pfizer Inc.; Piramal Imaging; Servier; Takeda Pharmaceutical Company; and Transition Therapeutics. The Canadian Institutes of Health Research is providing funds to support ADNI clinical sites in Canada. Private sector contributions are facilitated by the Foundation for the National Institutes of Health (www.fnih.org). The grantee organization is the Northern California Institute for Research and Education, and the study is coordinated by the Alzheimer’s Therapeutic Research Institute at the University of Southern California. ADNI data are disseminated by the Laboratory for Neuro Imaging at the University of Southern California. L. Du was supported by the National Natural Science Foundation of China (61602384); the Natural Science Basic Research Plan in Shaanxi Province of China (2017JQ6001); the China Postdoctoral Science Foundation (2017M613202); and the Fundamental Research Funds for the Central Universities (3102016OQD0065) at Northwestern Polytechnical University. This work was also supported by the National Institutes of Health R01 EB022574, R01 LM011360, U01 AG024904, P30 AG10133, R01 AG19771, UL1 TR001108, R01 AG042437, R01 AG046171, R01 AG040770; the Department of Defense W81XWH-14-2-0151, W81XWH-13-1-0259, W81XWH-12-2-0012; the National Collegiate Athletic Association 14132004 at Indiana University.

Author information


Contributions

L.D., L.G. and L.S. conceived and designed the research. L.D., K.L. and J.H. carried out the study analysis. X.Y., J.Y., S.L.R. and A.J.S. collected the data from the ADNI database. L.D., K.L., L.S. and A.J.S. analyzed the results and wrote the paper. Data used in preparation of this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu). As such, the investigators within the ADNI contributed to the design and implementation of ADNI and/or provided data but did not participate in analysis or writing of this report.


Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

A comprehensive list of consortium members appears at the end of the paper

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Du, L., Liu, K., Yao, X. et al. Pattern Discovery in Brain Imaging Genetics via SCCA Modeling with a Generic Non-convex Penalty. Sci Rep 7, 14052 (2017). https://doi.org/10.1038/s41598-017-13930-y

