Abstract

Sensitive and fast optical imaging is needed for scientific instruments, machine vision, and biomedical diagnostics. Many of the fundamental challenges are addressed with time stretch imaging, which has been used for ultrafast continuous imaging in a diverse range of applications, such as biomarker-free cell classification, the monitoring of laser ablation, and the inspection of flat panel displays. With frame rates exceeding a million scans per second, the firehose of data generated by the time stretch camera requires optical data compression. Warped stretch imaging technology uses nonuniform spectrotemporal optical operations to compress the image in a single-shot, real-time fashion. Here, we present a matrix analysis method for evaluating these systems and quantify important design parameters and the spatial resolution. The key principles of the system, namely (1) the time/warped stretch transformation and (2) the spatial dispersion of an ultrashort optical pulse, are traced with a simple ray-pulse matrix computation. Furthermore, we construct a mathematical model to simulate the imaging operation while accounting for the optical and electrical responses of the system. The proposed analysis method was applied to an example time stretch imaging system via simulation and validated with experimental data.


Introduction

Time stretch imaging technology enables fast, continuous, blur-free acquisition of quantitative phase and intensity images, offering high-throughput image analysis for various applications such as industrial and biomedical imaging1,2,3,4,5,6,7,8,9,10,11. The imaging technology exploits spatial and temporal dispersion of broadband optical pulses, which yields frame rates equal to the pulse repetition rates of mode-locked lasers, ranging from a few MHz to GHz12. The operation of a time stretch imager consists of optical dispersion in both space and time, acquisition and recording of the optical scan signal, and image reconstruction. First, spatial information of the imaging target is mapped into the spectrum of an ultrashort optical pulse (acting as a broadband flash illumination) by spatial dispersion. The optical spectrum is then mapped into a temporal waveform by temporal dispersion through a process known as the time stretch transform2, 3, 13,14,15. The temporal data stream of encoded spatial information is serially collected and recorded by a single detection system consisting of a photodetector and a digitizer. Finally, the image is reconstructed by inversely mapping the recorded temporal data stream onto the corresponding spatial coordinates.

A new advancement and variant of time stretch imaging technology, warped stretch imaging16, 17 (also known as foveated time stretch imaging), features real-time optical compression of the image while retaining the rapid imaging speed of its predecessor. Warped stretch imaging is realized by intentionally warping the formerly “linear” frequency-to-time mapping with highly nonlinear temporal dispersion. The temporal stream of the optical signal thus becomes a warped representation of the real image: some portions of the temporal image signal are dilated in the time domain and other parts are contracted. Optical image compression is achieved by engineering the warp of the group delay dispersion profile so that higher image sampling densities are assigned to information-rich regions. The approach is inspired by the sharp central (foveal) vision of the human eye, in which photoreceptors (pixels) are concentrated at the center of gaze18. Warped stretch imaging has been demonstrated for real-time optical image compression in a time stretch infrared imaging system16 and as an adaptive codec for digital image compression19.

In both conventional time stretch and warped stretch imaging systems, the optical front-end, consisting of spatial and temporal dispersion elements, is the key reconfigurable factor in designing the imaging operation. The optical dispersion encoding process in the front-end should be rigorously traced in space and time with respect to frequency (wavelength) for robust image construction and precise performance analysis of the imaging system. The time stretch process (frequency-to-time mapping) is typically performed within a single dispersive element. This process is theoretically well established and experimentally reliable, both in the uniform form of the time-stretch dispersive Fourier transform (TS-DFT) and in the nonuniform form of the warped stretch transform2, 13,14,15, 17, 20, 21. In contrast, the one-dimensional spatial dispersion process (frequency-to-space mapping) is a relatively complicated step. It is achieved with a combination of free-space optical components (e.g., a diffraction grating and a focusing lens), for which there are a multitude of possible configurations. In addition, the nonlinear spatial dispersion present in both time stretch and warped stretch imaging systems should be considered, as it has a non-negligible warping effect on the mapping process. Because of these complexities in the frequency-to-space mapping process, establishing a general description of the warped stretch imaging operation is a non-trivial task. We note that a theoretical analysis of time stretch imaging systems has been formalized previously22. However, that study approximates the system with a linear dispersion model, which limits the accuracy of the performance analysis and its use in image reconstruction. To date, the only way to precisely analyze a warped stretch imaging system has been experimental observation of a constructed system10, 11.

To address these limitations, we introduce a numerical method for analyzing arbitrary configurations of warped stretch imaging systems. The method allows accurate evaluation of the frequency-space-time mappings and simulation of the complete imaging process. Our analysis method is divided into two parts: (i) a modified matrix method and (ii) mathematical models of the components. The modified matrix method builds on the ray-pulse matrix formalism developed by Kostenbauder23, which we modified to account for the nonlinear dispersion of the optical system. This provides a compact and comprehensive means of tracking the ray-pulse structure (frequency-space-time map) in an arbitrary free-space optical system by matrix computation. The mathematical model is constructed to numerically simulate the imaging process, including the wave nature of the optical signal. We derive mathematical expressions for each optical and electrical component in the system and incorporate the frequency-space-time map obtained from the modified matrix method. Using our analysis method, we demonstrate numerical evaluation of important parameters such as the field-of-view, number of pixels, reconstruction map, detection sensitivity and spatial resolution. In addition, we highlight the influence of nonlinear spatial dispersion on the acquired image. This shows that the design of warped stretch imaging systems can be extended to engineering the spatial dispersion profile. As in studies of other imaging techniques24, 25, a physically rigorous analysis should assist further development of the imaging system.

Methods

Principles of time/warped stretch imaging

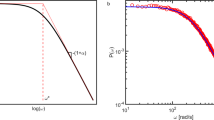

The key principle of time/warped stretch imaging is conceptually illustrated in Fig. 1a. Optical mapping processes distribute the frequency components of a broadband ultrashort optical pulse in space and time according to the optical components involved. (1) Frequency-to-time mapping projects the spectral information onto the time domain, and (2) frequency-to-space mapping encodes the spatial information into the spectrum. These two central optical processes can be cascaded in either order and enable ultrafast image acquisition in a single-pixel, scanner-like procedure. Figure 1a shows the arrangement of beam-pulselets for each mapping process in time and space, and the final space-time relationship of warped stretch imaging. Each beam-pulselet is described by its position (x), slope (θ), time (t) and frequency (f). The optical matrix computation yields the resulting inter-domain (space, time and frequency) relationship after propagation. The mapping relationships can be engineered through the choice of optical dispersive elements. We note that various optical elements have been proposed and implemented since the advent of the time stretch imaging concept. Dispersive fibres, chirped fibre Bragg gratings, chromo-modal dispersion devices26 and free-space angular-chirp-enhanced delay devices27 can be used to define the frequency-to-time relationship. Diffraction gratings, diffractive optical elements, virtually imaged phased arrays, tilted fibre gratings and optical prisms are a few of the available options for establishing the desired frequency-to-space relationship. As shown in Fig. 1b, the space-time relationship curve of a warped stretch imaging system is uniformly point-sampled along the time axis at the fixed sampling rate of the ADC. Yellow circles on the image sample represent the spatially sampled points (pixels) corresponding to the discrete time samples of the acquisition electronics. 
The warped optical mapping and the pixel distribution of the acquired scan signal are highly foveated (Fig. 1c). Features at the center of gaze are fully resolved, while the less important surrounding areas are imaged at lower resolution, which reduces the required ADC sampling rate. Finally, the warped scan signal is reconstructed by remapping the temporal data stream to the original image space according to the space-time relationship. We note that there are many variants of time/warped stretch imaging systems with different means of encoding spatial information and acquiring optical signals; however, they all follow the same mapping processes.

Figure 1. Principles of time/warped stretch imaging. (a) Time/warped stretch imaging employs frequency-to-time and frequency-to-space mapping of an ultrashort optical pulse to perform a line scan. Here, to clarify the method, we use a one-dimensional spatial disperser, which yields one line scan per pulse. A 4 × 4 ray-pulse matrix computation on the vector (space (x), slope (θ), time (t) and frequency (f)) specifies the optical mapping relationship. Colored circles represent uniformly spaced frequency components (beam-pulselets), and rainbow gradient lines represent their continuous distribution in the space and time domains. (b) Uniform temporal sampling under this space-to-time relationship determines the spatial sampling positions in the image space. Yellow dots on the fingerprint sample image indicate the warped pixel distribution of a given imaging system. (c) The corresponding temporal scan signal from the sampled points is acquired by a single-pixel photodetector and an analog-to-digital converter (ADC). The three pronounced peaks in the scan signal correspond to the fingerprint ridges at the center of the line scan. The scan signal is reconstructed by remapping the temporal data stream back to the original image space. Regions with higher temporal dispersion are effectively assigned more samples (central region), while the part of the waveform that is not strongly time-stretched corresponds to fewer imaging pixels (peripheral regions).

Analysis Method

The imaging performance of time/warped stretch imaging systems is determined by the frequency-space-time mapping process, the electrical bandwidth and the overall sampling rate of the acquisition system, including photodetection and digitization22. In order to deliver a complete and accurate analysis of an imaging system, we devise an analysis method that replicates the imaging process by means of numerical simulation. Our analysis method consists of two sub-formalisms: (i) a modified matrix method and (ii) mathematical modeling. We are able to characterize the frequency-space-time relationship in an arbitrary time/warped stretch imaging system using the modified matrix method. Subsequently, the mathematical model incorporates the obtained mapping relationship with mathematical expressions for optical and electrical components. Numerical simulation of the mathematical model provides the basis of our analysis method.

In the following sections, we first introduce the modified matrix method and validate its accuracy by applying it to a well-known laser pulse compressor. Second, we apply the matrix analysis to a time stretch imaging system. From this we obtain the frequency-space-time mapping and important parameters such as the field-of-view, number of pixels and image warp of the imager. Finally, we derive the mathematical model of the time stretch imaging operation.

Modified Matrix Method

The modified matrix method is based on the 4 × 4 optical matrix formalism developed by Kostenbauder, which is widely used for characterizing spatiotemporally dispersive optical systems23, 28. The Kostenbauder matrix method uses a four-element vector [x θ t f]T to define the deviations in space, angle, time and frequency from a reference optical pulse. Here, space and angle refer to a transverse direction, and time is measured along the propagation direction of the pulse. As shown in eq. (1), multiplying an input vector \({\nu }_{in}={[{x}_{in}\,{\theta }_{in}\,{t}_{in}\,{f}_{in}]}^{T}\) by a 4 × 4 optical matrix yields an output vector \({\nu }_{out}={[{x}_{out}\,{\theta }_{out}\,{t}_{out}\,{f}_{out}]}^{T}\), which describes the structure of the pulse after propagation.
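For reference, the general linear Kostenbauder form23 of eq. (1) is written out below. Its element layout matches the convention described next: A to D are the ray-matrix entries, E to I are the dispersion and coupling terms, and the bottom row keeps frequency unchanged for time-invariant elements. This is a reconstruction of the standard textbook form, offered as a reading aid, and is not a copy of the original typeset equation.

```latex
\begin{bmatrix} x_{out} \\ \theta_{out} \\ t_{out} \\ f_{out} \end{bmatrix}
=
\begin{bmatrix}
A & B & 0 & E \\
C & D & 0 & F \\
G & H & 1 & I \\
0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} x_{in} \\ \theta_{in} \\ t_{in} \\ f_{in} \end{bmatrix}
```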

The 4 × 4 optical matrix in eq. (1) can model any time-invariant optical element or system using the standard Kostenbauder convention23. In this convention, the constants A, B, C and D are identical to the elements of the ABCD ray matrix, and E, F, G, H and I represent the spatial dispersion, angular dispersion, pulse-front tilt, time-angle coupling and group delay dispersion, respectively. The optical matrices for conventional optical components such as free space, lenses, mirrors, dispersive slabs, prisms and diffraction gratings are derived with simple geometric calculations23. Because the elements are constants corresponding to first-order derivatives in the neighborhood of a given reference point, the optical matrix is accurate only up to second-order dispersion (linear group delay). However, when imaging with broadband ultrashort pulses, higher-order dispersion must be considered to fully characterize an actual optical system. Therefore, we have constructed a generalized, non-constant matrix as a modified form of the original Kostenbauder matrix. To distinguish our notation from the linear analysis, the modified vector is expressed as [Δx Δθ Δt Δf]T. With the following optical matrix, high-order spatial, angular and temporal dispersion (E, F, I) can be considered. We substitute the elements in the 4 × 4 optical matrix with frequency-dependent functions as

The elements A, B, C, D, G and H are kept unchanged from the original Kostenbauder matrix (first-order derivatives). To include high-order dispersion, E, F and I are replaced by arbitrary functions of \({\rm{\Delta }}{f}_{in}\). The modified elements E, F and I can be defined by inserting the corresponding frequency-dependent functions of an optical component. We provide the matrices for the dispersive optical components in Table 1.
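The modified element described above can be sketched as a small Python class. This is a minimal sketch under one assumption of ours: E, F and I are functions that return the deviation produced at a given input frequency offset Δf, so that the linear Kostenbauder case corresponds to E(Δf) = E·Δf. The class `ModifiedMatrix`, the helper `propagate` and the cubic group delay in the usage example are hypothetical illustrations. They do not reproduce the entries of Table 1.

```python
from dataclasses import dataclass
from typing import Callable

import numpy as np

Deviation = Callable[[np.ndarray], np.ndarray]


def _zero(df):
    """Default for E, F, I: no frequency-dependent deviation."""
    return 0.0 * np.asarray(df)


@dataclass
class ModifiedMatrix:
    """Hypothetical ray-pulse element: constant A, B, C, D, G, H and
    frequency-dependent E, F, I (functions of the input frequency offset df)."""
    A: float = 1.0
    B: float = 0.0
    C: float = 0.0
    D: float = 1.0
    G: float = 0.0
    H: float = 0.0
    E: Deviation = _zero
    F: Deviation = _zero
    I: Deviation = _zero

    def apply(self, v):
        """Propagate v = [dx, dtheta, dt, df]; v may have shape (4,) or (4, n)."""
        dx, dth, dt, df = v
        return np.array([
            self.A * dx + self.B * dth + self.E(df),
            self.C * dx + self.D * dth + self.F(df),
            self.G * dx + self.H * dth + dt + self.I(df),
            df,  # a time-invariant element leaves the frequency unchanged
        ])


def propagate(elements, v):
    """Apply the elements in the order the pulse meets them."""
    for element in elements:
        v = element.apply(v)
    return v


# Spectrum segmented into normalized frequency offsets, one column per component.
df = np.linspace(-1.0, 1.0, 5)
v_in = np.vstack([np.zeros((3, df.size)), df])  # on-axis, on-time reference rays

fiber = ModifiedMatrix(I=lambda d: 2.0 * d + 0.3 * d**3)  # hypothetical cubic group delay
space = ModifiedMatrix(B=0.5)                               # normalized free-space length 0.5
v_out = propagate([fiber, space], v_in)
print(v_out[2])  # group delay of each frequency component
```

With linear functions, `apply` reduces to an ordinary 4 × 4 matrix-vector product. A cubic term in `I` produces a group delay that is no longer linear in Δf, which is the behaviour the constant Kostenbauder matrix cannot represent.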

An arbitrary optical system can be analyzed numerically with the optical matrix calculation. The spectrum of the pulse is segmented into a series of frequency components, each represented by a vector that is propagated through the optical matrices. As a demonstration of our method, we analyzed a laser pulse compressor, a well-known and simple optical system consisting of a diffraction grating pair. The optical matrices for the laser pulse compressor are expressed as

where \({\rm{\Delta }}{x}_{out}\), \({\rm{\Delta }}{\theta }_{out}\), \({\rm{\Delta }}{t}_{out}\) and \({\rm{\Delta }}{f}_{out}\) are the position, slope, time and frequency of the computed output vector. Thus, the output vector completely defines the frequency-space-time relationship of the pulse after propagation through the system. Here, we have omitted the optical matrix for the free space between the two diffraction gratings, as it does not affect the temporal dispersion of a perfectly aligned pulse compressor.

To illustrate the method, the frequency components of the input pulse are segmented into three vectors, as shown in Fig. 2a. The red, green and blue colors represent the lowest frequency, the reference (center) frequency and the highest frequency, respectively. We obtain three output vectors by applying the optical matrices to the three input vectors. For an ideal laser pulse compressor, the spatial and slope deviations in the output vectors are zero. The temporal deviations (group delays) are \({\rm{\Delta }}{t}_{r}\), 0 and \({\rm{\Delta }}{t}_{b}\) for the red, green and blue frequency components, respectively. Using this approach, we acquire group delay profiles with a sufficient number of frequency samples over the 20 nm optical bandwidth. We calculated the temporal dispersion and compared it with the analytical result29 to validate our analysis method. The temporal dispersion of the system, namely the group delay (GD), group delay dispersion (GDD) and third-order dispersion (TOD), can be evaluated by

where \({\tau }_{g}\) is the group delay, equivalent to the value \({\rm{\Delta }}{t}_{out}\) at the given frequency component, and \({\theta }_{d}\) is the frequency-dependent first-order diffraction angle.
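The GD, GDD and TOD evaluation above amounts to sampling \({\tau }_{g}\) over the band and differentiating numerically (GDD = dτ_g/dω, TOD = d²τ_g/dω²). The sketch below does this for the Fig. 2 compressor parameters and contrasts it with a linearized (Kostenbauder-like) group delay. Several choices are our assumptions and not the authors' code: we use the standard Treacy grating-pair expression as the group-delay function, the sign convention sin θ_d = λ/d − sin θ_i, and the reference plane implied by that formula. The groove period follows from the Littrow condition at 63° and 810 nm, which gives roughly 2200 lines/mm.

```python
import numpy as np

C = 299_792_458.0  # speed of light in vacuum [m/s]


def grating_pair_group_delay(omega, groove_period, incidence_deg, separation):
    """Treacy-type group delay of a parallel grating pair (assumed convention:
    sin(theta_d) = lambda/d - sin(theta_i); constant offsets do not affect GDD/TOD)."""
    theta_i = np.deg2rad(incidence_deg)
    wavelength = 2 * np.pi * C / omega
    theta_d = np.arcsin(wavelength / groove_period - np.sin(theta_i))
    return separation * (1 + np.cos(theta_i - theta_d)) / (C * np.cos(theta_d))


def dispersion_orders(omega, tau):
    """GDD = dtau/domega and TOD = d^2 tau/domega^2 by finite differences
    (np.gradient accepts the non-uniform omega grid)."""
    gdd = np.gradient(tau, omega)
    tod = np.gradient(gdd, omega)
    return gdd, tod


# Parameters from the Fig. 2 caption: 810 nm, Littrow incidence at 63 deg, 1 cm spacing.
lam0, incidence, G = 810e-9, 63.0, 0.01
d = lam0 / (2 * np.sin(np.deg2rad(incidence)))  # Littrow: 2 d sin(theta) = lambda

lam = np.linspace(800e-9, 820e-9, 401)          # the 20 nm band
omega = np.sort(2 * np.pi * C / lam)            # ascending angular frequency
i0 = np.argmin(np.abs(omega - 2 * np.pi * C / lam0))

tau = grating_pair_group_delay(omega, d, incidence, G)
gdd, tod = dispersion_orders(omega, tau)

# Linear model: group delay linear in frequency around the center, as in a
# constant-element ray-pulse matrix.
tau_lin = tau[i0] + gdd[i0] * (omega - omega[i0])
gdd_lin, tod_lin = dispersion_orders(omega, tau_lin)

print(f"GDD at 810 nm: {gdd[i0] * 1e30:.3e} fs^2")
print(f"TOD at 810 nm: {tod[i0] * 1e45:.3e} fs^3")
```

The linearized model returns a constant GDD and a TOD that vanishes to rounding error. This mirrors the limitation of the original Kostenbauder matrix shown in Fig. 2c and d.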

Figure 2. Demonstration of the modified matrix analysis for a laser pulse compressor. Red, green and blue lines represent the optical paths of the individual frequency components. (a) Schematic of the laser pulse compressor. The center wavelength of the pulse is 810 nm, the incident angle of the pulse at the first diffraction grating is 63 degrees (Littrow angle), and the separation between the diffraction gratings is 1 cm. (b) Group delay, (c) group delay dispersion and (d) third-order dispersion of the laser pulse compressor evaluated by the analytical formula (blue solid line), the modified matrix (red circles) and the original Kostenbauder matrix (grey dashed line).

Figure 2b–d compare the GD, GDD and TOD obtained from our modified matrix method, the original Kostenbauder matrix and the analytical expression29, 30. Our analysis method agrees well with the analytical solution, including its high-order dispersion. In contrast, the accuracy of the Kostenbauder matrix method is limited to second-order dispersion, for which the computed GD is linear in frequency. Because of this limitation, the Kostenbauder matrix method yields a constant GDD and zero high-order dispersion (TOD). We note that the evaluation of higher-order dispersion with our method is limited only by the floating-point accuracy of the numerical differentiation.

Matrix Analysis of Time/Warped Stretch Imaging System

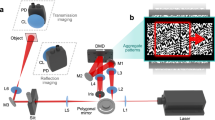

We apply the matrix analysis method to the laser-scanning-type time stretch imaging system11 shown in Fig. 3a. The imaging operation entails optical dispersion in the time and space domains. A broadband optical pulse is temporally dispersed by a dispersive fiber in the TS-DFT process. It is then spatially dispersed by a pair of diffraction gratings into a one-dimensional rainbow. After being mapped in the temporal and spatial domains, the pulse illuminates the imaging target and performs the scan. The schematic of the imager in Fig. 3a is expressed mathematically as

Figure 3. Matrix analysis of a time stretch imaging system. (a) Schematic of the time stretch imaging system (laser-scanner type). Ultrashort optical pulses with a center wavelength of 810 nm and an optical bandwidth of 20 nm are generated by a Ti:Sapphire mode-locked laser. The group delay dispersion of the dispersive fiber is −650 ps/nm, and the groove density of the diffraction gratings is 2200 lines/mm. The light blue and light green bounded graphs represent the spectro-spatio-temporal maps of the optical pulse after the dispersive fiber and after the diffraction grating pair, respectively. The temporal position is t = −z/c (z: propagation direction; c: speed of light). (b) Frequency-to-time mapping function \({t}_{\omega }(\omega )\) after the dispersive fiber. (c) Frequency-to-space relationship \({x}_{\omega }(\omega )\) and (d) time-to-space mapping function \({x}_{t}(t)\) after the diffraction grating pair. (e, f) Different spatial dispersion configurations. Either transmission or reflection type diffraction gratings can be used in any of these configurations.

Figure 3b shows the frequency-to-time mapping established by propagation of the pulse through the dispersive fiber. Figure 3c and d show the frequency-to-space and time-to-space mappings after the diffraction grating pair. The numerical results obtained by our matrix analysis agree well with the measured data from our previous study11. We note that other spatial dispersion configurations are possible, as shown in Fig. 3e and f. The total optical matrix for each case can be derived simply by multiplying the corresponding optical matrices (e.g., those of the focusing lens and free space).
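The matrix multiplication for an alternative configuration can be sketched with the standard linear 4 × 4 matrices. The example is a 2-f arrangement like Fig. 3e (disperser, free space f, lens, free space f, sample). The free-space and thin-lens matrices are the usual paraxial forms with no time or frequency coupling, and constant delays are dropped. The disperser is a hypothetical idealized element whose only non-trivial entry is the angular dispersion F. A real diffraction grating matrix also changes A and D and adds time couplings.

```python
import numpy as np


def free_space(L):
    """Paraxial free-space propagation over distance L (constant delay dropped)."""
    M = np.eye(4)
    M[0, 1] = L
    return M


def thin_lens(f):
    """Ideal thin lens of focal length f."""
    M = np.eye(4)
    M[1, 0] = -1.0 / f
    return M


def angular_disperser(F):
    """Hypothetical idealized disperser: only the angular dispersion F = dtheta/df."""
    M = np.eye(4)
    M[1, 3] = F
    return M


f_lens = 0.1     # focal length [m], hypothetical
F_ang = 2.0e-15  # angular dispersion [rad/Hz], hypothetical

# The first element the pulse meets is the rightmost factor.
M_total = free_space(f_lens) @ thin_lens(f_lens) @ free_space(f_lens) @ angular_disperser(F_ang)
print(M_total)
```

In this idealized case the product converts angular dispersion into pure spatial dispersion at the sample plane, with E = f·F. The angular term vanishes, so every frequency component arrives parallel to the axis.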

Several important parameters can be obtained directly from the overall frequency-space-time mapping resulting from the matrix evaluation. These include the overlap condition between consecutive scanning pulses, the field-of-view (FOV), the total number of pixels, the detection sensitivity and the explicit reconstruction map2, 22. When the scan rate of the time stretch imaging system is R, the temporal window available to the time stretched pulse is limited by the pulse repetition period \({R}^{-1}\). To avoid overlap between consecutive pulses, the following condition must be satisfied.

where \({\rm{\Delta }}\tau \) is the time stretched pulse width, \({t}_{\omega }\) is the frequency-to-time relationship obtained from the matrix analysis (Fig. 3b), and \({\omega }_{low}\) and \({\omega }_{high}\) are the cutoff optical frequencies at the lowest and highest ends of the spectrum, respectively. The FOV of the system is determined by the spectral width of the pulse and the frequency-to-space mapping of the spatial dispersion component. The FOV is given by

where \({x}_{\omega }\) is the frequency-to-space relationship shown in Fig. 3c. The number of pixels N is equal to the number of points temporally sampled by the digitizer, which is expressed as

where \({f}_{dig}\) is the sampling rate of the digitizer. The detection sensitivity of the imager is largely determined by the fundamental noise mechanisms of the photodetector (shot noise, dark current noise and thermal noise)22 and by the frequency-to-time mapping profile. Assuming far-field conditions for the frequency-to-time mapping13, 21, the number of photoelectrons collected at the pixel corresponding to frequency ω is

where η is the quantum efficiency of the photodetector and S(ω) is the spectral density of the incident photon flux. Considering dispersive Fourier transformation both with and without Raman amplification, the total noise of the imaging system becomes22, 31,

where G(ω) is the optical amplification spectrum, Q is the amplification noise figure, \({\sigma }_{s}(\omega )\) is the shot noise, which can be approximated by \({\sigma }_{s}(\omega )={P}_{in}{(\omega )}^{1/2}\), \({\sigma }_{d}\) is the dark current noise and \({\sigma }_{T}\) is the thermal noise. With the signal and noise defined, the signal-to-noise ratio (SNR) can be expressed as:

The spectral description of the SNR, \(SN{R}_{\omega }(\omega )\), can be conveniently converted to its spatial description by \(SN{R}_{x}(x)=SN{R}_{\omega }({\omega }_{x}(x))\). Finally, the image is reconstructed simply by mapping the temporal position of the recorded signal to the spatial coordinate using the time-to-space mapping \({x}_{t}(t)\).
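The parameters above follow directly from sampled versions of \({t}_{\omega }\) and \({x}_{\omega }\): the stretched width Δτ and its overlap check against \({R}^{-1}\), the FOV, the pixel count N = Δτ·\({f}_{dig}\), and the reconstruction map \({x}_{t}\). The sketch below computes them, assuming both maps are sampled on the same ascending frequency grid and that \({t}_{\omega }\) is monotonic so it can be inverted by interpolation. The warped maps, the 90 MHz repetition rate and the function name `imager_parameters` are hypothetical.

```python
import numpy as np


def imager_parameters(t_w, x_w, rep_rate, f_dig):
    """Overlap check, FOV, pixel count and time-to-space map.

    t_w, x_w: frequency-to-time and frequency-to-space maps sampled on the same
    ascending frequency grid from omega_low to omega_high.
    """
    delta_tau = abs(t_w[-1] - t_w[0])          # time-stretched pulse width
    no_overlap = delta_tau <= 1.0 / rep_rate   # must fit in one period 1/R
    fov = abs(x_w[-1] - x_w[0])                # field of view
    n_pixels = int(round(delta_tau * f_dig))   # digitizer samples per scan
    order = np.argsort(t_w)                    # inversion assumes t_w is monotonic

    def x_t(t):
        # time-to-space map x_t(t) = x_w(t_w^-1(t)) by linear interpolation
        return np.interp(t, t_w[order], x_w[order])

    return delta_tau, no_overlap, fov, n_pixels, x_t


# Hypothetical warped maps on a normalized frequency axis u in [0, 1].
u = np.linspace(0.0, 1.0, 2001)
t_w = 10e-9 * (u - 0.2 * np.sin(2 * np.pi * u) / (2 * np.pi))  # more delay per unit u at the center
x_w = 24.5e-3 * u                                               # linear frequency-to-space map [m]

f_dig = 20e9
dtau, ok, fov, n_pix, x_t = imager_parameters(t_w, x_w, rep_rate=90e6, f_dig=f_dig)
X = x_t(t_w[0] + np.arange(n_pix) / f_dig)  # pixel positions X[n] = x_t(nT)
print(dtau, ok, fov, n_pix)
```

Because the hypothetical warp spends more time per unit frequency at the band center, the uniformly spaced time samples land closer together in x there. This is the foveated pixel distribution of Fig. 1b.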

In the next section, we build a mathematical model of the time stretch imaging operation. To quantify the signal broadening (spatial resolution) of the imaging system, the optical and electrical responses of the system must be modeled mathematically. The optical mapping results from the matrix analysis (the temporal dispersion profile \({t}_{\omega }\) and the spatial dispersion profile \({x}_{\omega }\)) are incorporated into the mathematical model to simulate optical wave propagation in the dispersive elements. At the final stage of the model, the time-to-space mapping \({x}_{t}\) is used to reconstruct the image from the electrical scan signal.

Mathematical Model

The imaging performance of the system is determined by the spectro-spatio-temporal map of the optical pulse in conjunction with the responses of diffraction grating pair, dispersive fiber, photodetector, and digitizer. In order to rigorously investigate the imaging process, we have established a mathematical model for the time stretch imaging system (laser scanner type)11.

We assume the initial optical pulse to be a transform-limited Gaussian pulse with its electrical field in the frequency domain expressed as

where \({E}_{0}\) is the pulse amplitude and \({T}_{0}\) is the pulse half-width. The pulse after propagation through the dispersive fiber with frequency response \({H}_{fiber}(\omega )\) is expressed as

where \({\tau }_{g}(\omega )\) is the group delay of the dispersive element. Owing to the TS-DFT, the group delay is identical to the frequency-to-time relationship \({t}_{\omega }(\omega )\) obtained from the matrix analysis. We note that the group delay dispersion of the dispersive fiber must satisfy the far-field condition13, 21 for the spectrum to be mapped successfully into the time domain. The diffraction grating pair spectrally encodes the spatial information of the sample \({S}_{x}\). This process can be viewed as an amplitude spectral mask with a spectrally varying broadening profile \(g(\omega ,\omega ^{\prime} )\) for each frequency component ω′. The frequency response of the diffraction grating pair and imaging target can be calculated as

where \({S}_{\omega }(\omega )={S}_{x}({x}_{\omega }(\omega ))\) is the spectral representation of \({S}_{x}\), obtained by a change of domain using the frequency-to-space mapping function \({x}_{\omega }(\omega )\). We assume that each spectral component is a Gaussian beamlet when incident on the sample. The finite beam width of the Gaussian spectral component ω′ results in a Gaussian spectral broadening profile \(g(\omega ,\omega ^{\prime} )\), which can be expressed as

where the spectral width \({w}_{\omega }(\omega )={w}_{x}\cdot {[d{x}_{\omega }(\omega )/d\omega ]}^{-1}\) is the product of two factors. The first is the full width at half maximum (FWHM) beam width \({w}_{x}\) of the spectral component incident on the sample. The second is the inverse derivative of the frequency-to-space relationship \({x}_{\omega }(\omega )\). In the 2-f configuration shown in Fig. 3e (grating – focusing lens – sample), the beam width on the sample plane becomes \({w}_{x}=\sqrt{2\,{\rm{l}}{\rm{n}}(2)}F{\lambda }_{0}\,\cos \,{\theta }_{i}/(\pi W\,\cos \,{\theta }_{d})\). Here W is the input beam waist, F is the focal length of the lens after the diffraction grating, \({\lambda }_{0}\) is the optical wavelength, and \({\theta }_{i}\) and \({\theta }_{d}\) are the incident and diffraction angles, respectively. When unconventional spatial dispersion devices are used, the beam width \({w}_{x}\) can instead be determined numerically by finite-difference time-domain simulations. Using the derived frequency response, the electric field after the diffraction grating pair and the sample becomes

The light transmitted through or reflected from the sample is then converted into an electrical signal by the photodetector and recorded by the digitizer. The photocurrent \({I}_{P}(t)\) and its frequency-domain representation \({I}_{e}(\omega )\) after photodetection and digitization become

where ω now represents the electrical (RF) signal frequency, K is the responsivity of the photodetector and \({H}_{e}(\omega )\) is the frequency response of the electrical back-end consisting of the photodetector and digitizer. For \({H}_{e}(\omega )\), we may use either an equivalent RC circuit model2, 21 or the measured frequency response of the actual photodetector. Finally, the signal is digitally sampled and recorded by the digitizer as

where T is the sampling period of the digitizer and n is the pixel index of the recorded scan signal. Each pixel of the recorded image signal \({I}_{dig}[n]\) is located at its corresponding discrete spatial coordinate \(X[n]\), which can be expressed as

Finally, the imaging result \({I}_{x}[X]\) is reconstructed by mapping the signal \({I}_{dig}[n]\) to the spatial domain \(X[n]\).
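The model chain of this section can be sketched as a discrete complex-envelope simulation. It runs from a Gaussian pulse through the fiber phase, the spectral mask S_ω, photodetection, the electrical response, sampling and mapping to X[n]. This is a minimal sketch under several assumptions of ours. The parameters are scaled down and hypothetical, not those of ref. 11. A linear group delay τ_g = βΩ and a linear map x_ω = αΩ stand in for the matrix-analysis outputs. The Gaussian broadening \(g(\omega ,\omega ^{\prime} )\) is omitted. \({H}_{e}\) is modeled as a single-pole RC filter, and the slit sample is hypothetical.

```python
import numpy as np

# --- Hypothetical, scaled-down parameters (not those of ref. 11) ---
N, dt = 2**16, 0.25e-12         # simulation grid: 16.4 ns window, 0.25 ps step
T0 = 1e-12                      # half-width of the transform-limited Gaussian [s]
beta = 1e-21                    # group delay slope, tau_g(W) = beta * W [s^2]
alpha = 20e-3 / (6 / T0)        # frequency-to-space slope, x_w(W) = alpha * W [m s]
K, RC, T = 1.0, 10e-12, 50e-12  # responsivity, RC time constant, sampling period
x_c, slit_w = 2e-3, 0.5e-3      # slit center and width (the sample S_x)

t = (np.arange(N) - N // 2) * dt          # time axis matching fftshift ordering
W = 2 * np.pi * np.fft.fftfreq(N, dt)     # baseband angular frequency (FFT order)

# Linear stand-ins for the maps obtained from the matrix analysis.
t_of_W = lambda w: beta * w
x_of_W = lambda w: alpha * w

E_in = np.exp(-0.5 * (W * T0) ** 2)           # transform-limited Gaussian spectrum
H_fiber = np.exp(-1j * 0.5 * beta * W ** 2)   # phase whose W-derivative is tau_g

# Grating pair + sample as an amplitude spectral mask S_w(W) = S_x(x_w(W)).
S_x = lambda x: (np.abs(x - x_c) <= slit_w / 2).astype(float)
H_mask = S_x(x_of_W(W))

# numpy's ifft uses exp(+j W t), so component W is delayed to t = beta * W.
E_t = np.fft.fftshift(np.fft.ifft(E_in * H_fiber * H_mask))

# Photodetection, then a single-pole RC back-end. W doubles as the RF frequency
# axis because the photocurrent lives on the same time grid.
I_p = K * np.abs(E_t) ** 2
H_e = 1.0 / (1.0 + 1j * W * RC)
I_e = np.real(np.fft.ifft(np.fft.fft(I_p) * H_e))

# Digitize over the stretched window [t_w(w_low), t_w(w_high)] and map to space.
W_band = np.linspace(-3 / T0, 3 / T0, 1001)   # assumed optical band edges
t_n = np.arange(t_of_W(W_band[0]), t_of_W(W_band[-1]), T)
I_dig = np.interp(t_n, t, I_e)                          # I_dig[n] = I_e(nT)
X = np.interp(t_n, t_of_W(W_band), x_of_W(W_band))     # X[n] = x_t(nT)

x_peak = X[np.argmax(I_dig)]
print(f"{len(X)} pixels, brightest pixel at x = {x_peak * 1e3:.2f} mm")
```

Replacing the linear stand-ins with tabulated \({t}_{\omega }\) and \({x}_{\omega }\) (for example via `np.interp`) would give the warped case without changing the rest of the chain.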

Results and Discussion

In this section, we consider the time stretch imaging system used in ref. 11 to demonstrate our analysis method. First, we perform numerical simulations and compare the results with experimental data to validate our method. We then analyze the spatial resolution in terms of the line-spread function (LSF) and modulation transfer function (MTF), which are widely used metrics for evaluating imaging systems. Noting the warp of the scan signal in the imaging system11, we also discuss the effects of high-order spatial dispersion on the resulting image.

Simulation of Time/Warped Stretch Imaging

Discrete-time complex envelope analysis21 was used for the numerical simulation of the mathematical model. We compared the imaging results of the simulation with the experiment from ref. 11, and the results are plotted in Fig. 4. As shown in Fig. 4a, a total of 49 scans were performed while a 0.5 mm wide slit was translated in 0.5 mm steps. The light transmitted through the slit is captured and recorded by the photodetector and digitizer. The recorded scan from the previous experiment11 is shown in Fig. 4b and displays a nonlinear relationship between time (pixel number) and space. We reconstruct the image by mapping the signal to the corresponding spatial coordinates (eq. 25); the result is shown in Fig. 4d. The image reconstructed from the simulation (Fig. 4c) agrees well with the image from the experiment. In addition, we observe that the imaged slit broadens across the FOV, as shown in Fig. 4c and d, which implies a nonuniform spatial resolution.

Figure 4. Reconstruction and numerical simulation of the time stretch imaging system from ref. 11. (a) A 0.5 mm wide slit is placed immediately before the target sample and translated transversely along the scanning beam in 0.5 mm steps. The total number of scans is 49. The FOV of the system is limited to 24.5 mm, since the time stretched pulse width is limited to 11 ns to avoid overlap of consecutive pulses. (b) Recorded scan data from the digitizer11. (c) Reconstructed image from simulation. (d) Reconstructed image from the experimental scan data. The inset compares the reconstructed image from the simulation (solid red) with that from the experimental data (solid blue). The 24th scan is indicated by the dashed grey line.

Spatial Resolution

The spatial resolution of the imager is determined by a combination of factors, including the diffraction grating, dispersive fiber, photodetector and digitizer. We summarize the spatial resolution limit of each contributing factor in Table 2. The spatial resolution limit of the diffraction grating can be expressed directly by the beam width \({w}_{x}\) of a spatially dispersed frequency component at the imaging target. In contrast, the spectral ambiguity of the time stretch process (TS-DFT)13 in the dispersive fiber is translated into a spatial resolution by the frequency-to-space conversion factor \(|d{x}_{\omega }(\omega )/d\omega |\). We note that the far-field condition13, 21 must be satisfied for this expression to be valid for time stretch processes. The temporal resolution, which arises from the finite electrical bandwidth of the detection system, also limits the spatial resolution. We define the temporal resolution \({\tau }_{FWHM}\) as the FWHM of the temporal impulse response of the detection system. We convert it into a spatial resolution using the frequency-to-time \(|d{t}_{\omega }(\omega )/d\omega |\) and frequency-to-space \(|d{x}_{\omega }(\omega )/d\omega |\) conversion factors. In a similar manner, a resolution limit arises from the temporal sampling rate of the digitizer, \({f}_{dig}\)2. Here, the spatial resolution limits for each factor are derived as functions of optical frequency.
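The electrical-bandwidth and sampling-rate limits described above convert a time interval into optical frequency via \(|d{t}_{\omega }/d\omega |\) and then into space via \(|d{x}_{\omega }/d\omega |\). The sketch below implements that chain. It is not a reproduction of Table 2, and three choices are assumptions of ours. The sampling limit uses one sampling period 1/\({f}_{dig}\) with no Nyquist factor. τ_FWHM = 0.5 ns is hypothetical. \({x}_{\omega }\) is assumed linear, with the 24.5 mm FOV spanning the full 800–820 nm band. The −650 ps/nm fiber dispersion comes from Fig. 3.

```python
import numpy as np


def electrical_resolution(tau_fwhm, dt_dw, dx_dw):
    """Spatial blur from a detection impulse response of temporal FWHM tau_fwhm:
    time -> optical frequency via |dt_w/dw|, then frequency -> space via |dx_w/dw|.
    Works element-wise if dt_dw and dx_dw are arrays over optical frequency."""
    return tau_fwhm * np.abs(dx_dw) / np.abs(dt_dw)


def sampling_resolution(f_dig, dt_dw, dx_dw):
    """Spatial distance spanned by one digitizer sampling period 1/f_dig (assumed)."""
    return electrical_resolution(1.0 / f_dig, dt_dw, dx_dw)


C = 299_792_458.0
lam0 = 810e-9
D = -650e-12 / 1e-9                               # -650 ps/nm (Fig. 3), in s/m
dt_dw = D * (-lam0**2 / (2 * np.pi * C))          # dt/dw = D * dlambda/dw  [s^2]
band = 2 * np.pi * C * (1 / 800e-9 - 1 / 820e-9)  # angular width of 800-820 nm [rad/s]
dx_dw = 24.5e-3 / band                            # hypothetical linear map [m s]
f_dig, tau_fwhm = 20e9, 0.5e-9                    # tau_fwhm is hypothetical

print(f"EB limit: {electrical_resolution(tau_fwhm, dt_dw, dx_dw) * 1e3:.2f} mm")
print(f"SR limit: {sampling_resolution(f_dig, dt_dw, dx_dw) * 1e3:.3f} mm")
```

With frequency-dependent maps, passing arrays of derivatives (e.g. from `np.gradient`) gives the resolution limits as functions of optical frequency, as in Fig. 5a.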

The overall spatial resolution can be obtained by evaluating the net response of the system. In the spatial domain, the LSF represents the net broadening caused by the imaging system32, 33. To obtain the LSF, we simulate the imaging of a Dirac delta function used as the sample. Because the spatial resolution is not uniform over the scanning beam, as shown previously, the LSF must be treated as a shift-variant, locally defined function. We therefore compute a series of \(LSF(x,x^{\prime} )\), each corresponding to the simulated image of a Dirac delta function \(\delta (x-x^{\prime} )\), where \(x^{\prime} \) is the spatial coordinate of interest within the FOV.
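A simplified version of this delta-function simulation can be sketched as follows. It is a toy forward model under stated assumptions, not the authors' full model: each frequency is assumed to illuminate a Gaussian spot of FWHM \({w}_{x}\) centred at \({x}_{\omega }(\omega )\), the time stretch is reduced to the mapping \({t}_{\omega }(\omega )\), the detection system is represented by a Gaussian impulse response of FWHM \({\tau }_{FWHM}\), and both mappings are assumed to increase monotonically with frequency. The helpers `fwhm` and `simulate_lsf` and all numerical values are hypothetical.

```python
import numpy as np

def fwhm(x, y):
    """Full width at half maximum of a single-peaked curve y(x) (x increasing)."""
    y = np.asarray(y, dtype=float)
    half = y.max() / 2.0
    above = np.flatnonzero(y >= half)
    i0, i1 = above[0], above[-1]     # the peak must not touch the ends of the grid
    x_left = np.interp(half, [y[i0 - 1], y[i0]], [x[i0 - 1], x[i0]])
    x_right = np.interp(half, [y[i1 + 1], y[i1]], [x[i1 + 1], x[i1]])
    return x_right - x_left

def simulate_lsf(x_prime, omega, x_w, t_w, w_x, tau_fwhm, n_t=4096):
    """Toy model: image a delta-function sample at x_prime, return (x, LSF(x, x_prime)).

    omega: frequency grid; x_w, t_w: monotonically increasing maps (mm, ns);
    w_x: FWHM of each frequency's spot (mm); tau_fwhm: detector FWHM (ns).
    """
    # 1) Spatial dispersion: the delta sample passes the frequencies whose Gaussian
    #    spot (FWHM w_x, centred at x_w) overlaps x_prime.
    spectrum = np.exp(-4.0 * np.log(2.0) * (x_w - x_prime) ** 2 / w_x**2)
    # 2) Time stretch: resample onto a uniform time grid; |d omega/dt| converts
    #    spectral density into temporal density for a nonuniform t_w.
    t = np.linspace(t_w[0], t_w[-1], n_t)
    omega_t = np.interp(t, t_w, omega)
    intensity = np.interp(omega_t, omega, spectrum) * np.abs(np.gradient(omega_t, t))
    # 3) Detection: convolve with a Gaussian impulse response of FWHM tau_fwhm.
    dt = t[1] - t[0]
    sigma = tau_fwhm / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    half_len = min(int(5 * sigma / dt), n_t // 2 - 1)
    kernel = np.exp(-0.5 * (np.arange(-half_len, half_len + 1) * dt / sigma) ** 2)
    detected = np.convolve(intensity, kernel / kernel.sum(), mode="same")
    # 4) Reconstruction: map every time sample back to its spatial coordinate.
    return np.interp(omega_t, omega, x_w), detected

# Hypothetical mappings: 24 mm imaged onto a 10 ns window.
omega = np.linspace(-1.0, 1.0, 2001)
t_w = 5 * omega + 5
x_lin = 12 * omega + 12                           # uniform mapping
x_warp = 6 * (omega + 1) + 3 * (omega + 1) ** 2   # nonuniform (warped) mapping
for x_w, x_prime in ((x_lin, 12.0), (x_warp, 3.75), (x_warp, 15.75)):
    x, lsf = simulate_lsf(x_prime, omega, x_w, t_w, w_x=1.5, tau_fwhm=0.75)
    print(f"x' = {x_prime:5.2f} mm: LSF FWHM = {fwhm(x, lsf):.2f} mm")
```

For the linear mapping the FWHM reduces to the Gaussian quadrature sum of \({w}_{x}\) and \({\tau }_{FWHM}\,|d{x}_{\omega }/d\omega |/|d{t}_{\omega }/d\omega |\); for the warped mapping the LSF widens where the local time-to-space scale is larger, illustrating why the LSF has to be evaluated locally.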

In this study, we define the overall spatial resolution as the FWHM of the LSF. As shown by the purple-dotted line in Fig. 5a, the total spatial resolution has a clearly nonuniform profile along the line scan. Assessing the contributions of the individual elements, we find that the spatial resolution is primarily limited by the diffraction grating and the photodetector. While the resolution profile of the diffraction grating pair is constant and equal to the incident beam width (1.5 mm), the nonlinear frequency-to-space mapping introduced by the grating pair causes the resolution profiles of the other elements to vary across the FOV.

LSF and MTF spatial resolution analysis of the time stretch imaging system in ref. 11. The temporal response of the detection system was assumed to have a rise time of 750 ps, and the digitizer a sampling rate of 20 GS/s. (a) The spatial resolution of a single line scan. Grating: diffraction grating pair; Fiber: dispersive fiber; EB: electrical bandwidth; SR: sampling rate; Total: overall spatial resolution, defined as the FWHM of the LSF. (b) Three-dimensional color plot of the MTF. The black-dashed line represents the MTF cut-off frequency at half maximum. (c) MTF evaluated at x = 2, 13 and 24 mm, corresponding to the white-dashed lines in (b).

Another widely used metric for the spatial resolution of an imaging system is the MTF, which represents the resolving power in terms of spatial frequency32, 33. Like the LSF, the MTF is shift-variant and must be determined independently at each spatial position \(x^{\prime} \). The locally defined MTF is evaluated by taking the Fourier transform of the LSF at the corresponding location. It can be expressed as

\[MTF({f}_{x};x^{\prime} )=k\left|{F}\{LSF(x,x^{\prime} )\}\right|\qquad (26)\]

where \({f}_{x}\) is the spatial frequency, k is the normalization constant and \({F}\) is the Fourier transform operator. Figure 5b shows a three-dimensional color plot of the MTF defined at each spatial position \(x^{\prime} \). As we move away from the spatial position x = 0, the FWHM of the LSF (purple-dotted line in Fig. 5a) increases while the MTF bandwidth (black-dashed line in Fig. 5b) decreases. In other words, a narrower LSF (less line broadening) allows features at higher spatial frequencies to be detected, i.e., a larger MTF bandwidth. This inverse relationship also follows from the Fourier transform relationship between the MTF and the LSF in eq. (26). Figure 5c shows the calculated MTFs at the three locations denoted by the white-dashed lines in Fig. 5b. Consequently, when a sinusoidal pattern with a single spatial frequency is imaged, the contrast of the imaged pattern varies across the FOV: the sinusoidal images obtained at x = 2, 13 and 24 mm have contrasts proportional to their respective MTF values at that spatial frequency (Fig. 5c, red, green and blue solid lines, respectively).
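The locally defined MTF, \(k|F\{LSF(x,x^{\prime} )\}|\), can be evaluated numerically from a sampled LSF. The sketch below assumes a uniformly sampled LSF, chooses the normalization constant k so that the MTF equals 1 at zero spatial frequency, and reads the cut-off at half maximum by linear interpolation. It also blurs a sinusoidal pattern with the same LSF to illustrate that the imaged contrast follows the MTF at that spatial frequency, assuming the LSF is approximately shift-invariant over the blurred region. The function names and the Gaussian test LSF are hypothetical.

```python
import numpy as np

def local_mtf(x, lsf):
    """MTF(f_x; x') = k |F{LSF(x, x')}| for an LSF sampled on a uniform grid x.

    k is chosen so that MTF(0) = 1; f_x is returned in cycles per unit of x.
    """
    spectrum = np.abs(np.fft.rfft(lsf))
    f_x = np.fft.rfftfreq(len(lsf), d=x[1] - x[0])
    return f_x, spectrum / spectrum[0]

def mtf_cutoff(f_x, mtf, level=0.5):
    """Spatial frequency where the MTF first drops below `level` (linear interpolation)."""
    below = np.flatnonzero(mtf < level)
    if below.size == 0:
        raise ValueError("MTF does not fall below the requested level on this grid")
    i = below[0]
    return np.interp(level, [mtf[i], mtf[i - 1]], [f_x[i], f_x[i - 1]])

def imaged_contrast(x, lsf, f0):
    """Contrast of the pattern 1 + cos(2*pi*f0*x) after blurring with the LSF."""
    image = np.convolve(1.0 + np.cos(2 * np.pi * f0 * x), lsf / lsf.sum(), mode="same")
    core = image[len(x) // 4 : 3 * len(x) // 4]   # skip edges of the finite grid
    return (core.max() - core.min()) / (core.max() + core.min())

# Hypothetical Gaussian LSF with a 2 mm FWHM centred at x' = 3 mm.
x = np.linspace(-50.0, 50.0, 4001)
lsf = np.exp(-4 * np.log(2) * (x - 3.0) ** 2 / 2.0**2)
f_x, mtf = local_mtf(x, lsf)
print(f"MTF cut-off: {mtf_cutoff(f_x, mtf):.3f} cycles/mm")
print(f"contrast at 0.2 cycles/mm: {imaged_contrast(x, lsf, 0.2):.3f} "
      f"(MTF = {np.interp(0.2, f_x, mtf):.3f})")
```

Taking the modulus makes the result independent of where the LSF is centred, so the same routine can be applied to each \(LSF(x,x^{\prime} )\) in turn to build a shift-variant MTF map. For a Gaussian LSF of FWHM W the half-maximum cut-off is \(2\ln 2/(\pi W)\), which gives a quick sanity check.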

Influence of Spatial Dispersion Element

Time/warped stretch imaging systems generally have fixed temporal dispersion elements, each governed by its dispersion profile and the propagation length within it. In contrast, the spatial dispersion of a diffraction grating pair can be adjusted simply by repositioning the gratings. For example, the FOV is proportional to the distance between the grating pair. In particular, changing the incident angle of the optical input on the diffraction grating affects the FOV and the spatial resolution profile (warp profile) simultaneously. To illustrate this effect quantitatively, we numerically analyze the FOV and spatial resolution of the imaging system for three different incident angles (55.2°, 56.7° and 63°) at the diffraction grating pair used in ref. 11. Angular tuning of the diffraction grating changes the frequency-space relationship \({x}_{\omega }\) and the time-space relationship \({x}_{t}\), as shown in Fig. 6a. A difference of only about 8° in the incident angle yields a more than four-fold difference in the FOV (45.1 mm versus 10.9 mm). Likewise, the degree of nonlinearity in the time-to-space mapping function depends on the incident angle: it is most significant at 55.2° and least significant in the Littrow configuration (63°). This behavior follows from the grating equation, in which the spatial (angular) dispersion becomes increasingly nonlinear as the incident angle moves away from the Littrow angle. Figure 6b compares the reconstructed images at the three angles, using a picket fence pattern with a period of 5 mm as the imaging sample. Visual inspection of the edge sharpness indicates that the nonlinearity in the space-time relationship causes the spatial resolution (FWHM) to increase along the scan line. Figure 6c–e show a detailed spatial resolution analysis for each incident angle.
As noted in the spatial resolution analysis, the spatial resolution imposed by the diffraction grating pair is fixed by the incident beam width, which is identical in all three cases. However, a more nonlinear spatial dispersion increases the variation of \(|d{x}_{\omega }(\omega )/d\omega |\) across the spectrum, which in turn amplifies the nonuniformity of the spatial resolution limits imposed by the other elements (cf. Table 2). In addition, the spatial resolution becomes coarser for a wider FOV because of the larger average value of \(|d{x}_{\omega }(\omega )/d\omega |\). These results show that reconfiguring the spatial dispersion element can significantly change the FOV and the spatial resolution profile of the acquired image. Physical adjustments to the spatially dispersive elements, such as tuning the incident angle of the optical beam on the grating pair, may therefore provide an additional pathway for designing the spectrotemporal mapping profile of warped stretch transform imaging systems.
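The qualitative trend with incident angle can be reproduced with the grating equation alone. The sketch below is a simplified geometric model, not the ray-pulse matrix analysis of this work: it uses the grating equation in the form \(\sin {\theta }_{d}=m\lambda G-\sin {\theta }_{i}\) for a groove density G, places the target on the second grating of a parallel pair at normal separation L so that \(x(\lambda )=L\tan {\theta }_{d}(\lambda )\), and assumes a dispersive element with a linear wavelength-to-time mapping, so the nonlinearity of \(x(\lambda )\) equals that of the time-to-space map. The groove density (chosen to give a Littrow angle of 63° at 1550 nm), the 1540–1560 nm band, L = 50 mm and the helper names are hypothetical, not the parameters of the system in ref. 11.

```python
import numpy as np

def grating_map(theta_i_deg, wavelengths_nm, grooves_per_mm, separation_mm, order=1):
    """Position x(lambda) on the second grating of a parallel pair (hypothetical helper).

    Uses sin(theta_d) = m * lambda * G - sin(theta_i) and x = L * tan(theta_d),
    where L is the normal distance between the two gratings.
    """
    lam_mm = np.asarray(wavelengths_nm, dtype=float) * 1e-6      # nm -> mm
    s = order * lam_mm * grooves_per_mm - np.sin(np.radians(theta_i_deg))
    if np.any(np.abs(s) >= 1.0):
        raise ValueError("diffracted order is evanescent for part of the band")
    theta_d = np.arcsin(s)
    return separation_mm * np.tan(theta_d), np.degrees(theta_d)

def fov_and_nonlinearity(x):
    """FOV and the peak deviation of x from a straight-line fit, as a fraction of the FOV.

    With a linear wavelength-to-time mapping (assumed), the sample index stands in for time.
    """
    fov = abs(x[-1] - x[0])
    idx = np.arange(len(x))
    straight = np.polyval(np.polyfit(idx, x, 1), idx)
    return fov, np.max(np.abs(x - straight)) / fov

# Hypothetical parameters, not those of ref. 11.
G = 2 * np.sin(np.radians(63.0)) / 1550e-6   # grooves/mm giving Littrow at 63 deg for 1550 nm
lam = np.linspace(1540.0, 1560.0, 201)       # nm
for theta_i in (55.2, 56.7, 63.0):
    x, _ = grating_map(theta_i, lam, G, separation_mm=50.0)
    fov, nl = fov_and_nonlinearity(x)
    print(f"theta_i = {theta_i:4.1f} deg: FOV = {fov:5.1f} mm, nonlinearity = {nl:.3f}")
```

In this toy model both the FOV and the relative nonlinearity shrink as the incident angle approaches the Littrow angle, because the angular dispersion scales with \(1/\cos {\theta }_{d}\) and the projection onto the second grating adds a further \(1/{\cos }^{2}{\theta }_{d}\); the absolute values depend entirely on the assumed parameters.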

The effects of the incident angle on the space-to-time mapping and spatial resolution. (a) The space-to-time mapping at three incident angles: 55.2° (“1”, solid red), 56.7° (“2”, solid blue) and 63° (“3”, solid green). (b) Reconstructed images from numerical simulation at the three incident angles. The 5-mm-period picket fence pattern used as the sample is shown in the background for reference. (c–e) Spatial resolution profiles at the three incident angles. The FOV is 45.1 mm, 28.2 mm and 10.9 mm, respectively.

Conclusions

Here, we have presented a general analysis method for characterizing arbitrary spatial, spectral and temporal dispersion profiles, which are crucial to warped stretch imaging systems. We have established a modified matrix method that accommodates arbitrary frequency-space-time mappings of dispersive optical systems and constructed a mathematical model to numerically simulate the imaging operation. Using this numerical analysis, we have quantified the spatial resolution in terms of the LSF and MTF, as well as design parameters such as the FOV, total number of pixels, detection sensitivity and reconstruction map. Finally, we investigated the effect of tuning the nonlinear spatial dispersion on the imaging operation. Our method serves as a comprehensive tool for characterizing arbitrary reconstruction mappings and for empirically optimizing the performance of actual warped stretch imaging systems, and it makes it possible to fully design a warped stretch imaging system before it is built. We anticipate that, by making warped stretch systems more accessible, this method will encourage their adoption for precision imaging and ultrafast optical inspection applications.

Data availability

The datasets generated and analyzed during the current study are available from the corresponding author on reasonable request.

References

Goda, K., Tsia, K. K. & Jalali, B. Serial time-encoded amplified imaging for real-time observation of fast dynamic phenomena. Nature 458, 1145–1149 (2009).

Goda, K. & Jalali, B. Dispersive Fourier transformation for fast continuous single-shot measurements. Nat. Photonics 7, 102–112 (2013).

Mahjoubfar, A. et al. Time stretch and its applications. Nat. Photonics 11, 341–351 (2017).

Goda, K. et al. High-throughput single-microparticle imaging flow analyzer. Proc. Natl. Acad. Sci. 109, 11630–11635 (2012).

Goda, K. et al. Hybrid Dispersion Laser Scanner. Sci. Rep. 2, 445 (2012).

Chen, C. L. et al. Deep Learning in Label-free Cell Classification. Sci. Rep. 6, 21471 (2016).

Mahjoubfar, A., Chen, C., Niazi, K. R., Rabizadeh, S. & Jalali, B. Label-free high-throughput cell screening in flow. Biomed. Opt. Express 4, 1618–1625 (2013).

Mahjoubfar, A. et al. High-speed nanometer-resolved imaging vibrometer and velocimeter. Appl. Phys. Lett. 98, 101107 (2011).

Mahjoubfar, A., Chen, C. L. & Jalali, B. Artificial Intelligence in Label-free Microscopy, doi:10.1007/978-3-319-51448-2 (Springer International Publishing, 2017).

Chen, H. et al. Ultrafast web inspection with hybrid dispersion laser scanner. Appl. Opt. 52, 4072–4076 (2013).

Yazaki, A. et al. Ultrafast dark-field surface inspection with hybrid-dispersion laser scanning. Appl. Phys. Lett. 104, 251106 (2014).

Xing, F. et al. A 2-GHz discrete-spectrum waveband-division microscopic imaging system. Opt. Commun. 338, 22–26 (2015).

Goda, K., Solli, D. R., Tsia, K. K. & Jalali, B. Theory of amplified dispersive Fourier transformation. Phys. Rev. A 80, 043821 (2009).

Bhushan, A. S., Coppinger, F. & Jalali, B. Time-stretched analogue-to-digital conversion. Electron. Lett. 34, 1081 (1998).

Coppinger, F., Bhushan, A. S. & Jalali, B. Photonic time stretch and its application to analog-to-digital conversion. IEEE Trans. Microw. Theory Tech. 47, 1309–1314 (1999).

Chen, C. L., Mahjoubfar, A. & Jalali, B. Optical Data Compression in Time Stretch Imaging. PLoS One 10, e0125106 (2015).

Jalali, B. & Mahjoubfar, A. Tailoring Wideband Signals With a Photonic Hardware Accelerator. Proc. IEEE 103, 1071–1086 (2015).

Curcio, C., Sloan, K., Packer, O., Hendrickson, A. & Kalina, R. Distribution of cones in human and monkey retina: individual variability and radial asymmetry. Science 236, 579–582 (1987).

Chan, J. C. K., Mahjoubfar, A., Chen, C. L. & Jalali, B. Context-Aware Image Compression. PLoS One 11, e0158201 (2016).

Jalali, B., Chan, J. & Asghari, M. H. Time–bandwidth engineering. Optica 1, 23–31 (2014).

Mahjoubfar, A., Chen, C. L. & Jalali, B. Design of Warped Stretch Transform. Sci. Rep. 5, 17148 (2015).

Tsia, K. K., Goda, K., Capewell, D. & Jalali, B. Performance of serial time-encoded amplified microscope. Opt. Express 18, 10016 (2010).

Kostenbauder, A. G. Ray-Pulse Matrices: A Rational Treatment for Dispersive Optical Systems. IEEE J. Quantum Electron. 26, 1148–1157 (1990).

Zhou, Z. et al. Artificial local magnetic field inhomogeneity enhances T2 relaxivity. Nat. Commun. 8, 15468 (2017).

Huang, C., Nie, L., Schoonover, R. W., Wang, L. V. & Anastasio, M. A. Photoacoustic computed tomography correcting for heterogeneity and attenuation. J. Biomed. Opt. 17, 061211 (2012).

Diebold, E. D. et al. Giant tunable optical dispersion using chromo-modal excitation of a multimode waveguide. Opt. Express 19, 23809 (2011).

Wu, J.-L. et al. Ultrafast laser-scanning time-stretch imaging at visible wavelengths. Light Sci. Appl. 6, e16196 (2016).

Aktürk, S., Gu, X., Gabolde, P. & Trebino, R. In Springer Series in Optical Sciences 132, 233–239 (2007).

Treacy, E. B. Optical Pulse Compression With Diffraction Gratings. IEEE J. Quantum Electron. 5, 454–458 (1969).

Diels, J.-C. & Rudolph, W. In Ultrashort Laser Pulse Phenomena 61–142, doi:10.1016/B978-012215493-5/50003-3 (2006).

Agrawal, G. P. In Fiber-Optic Communication Systems 128–181, doi:10.1002/9780470918524.ch4 (John Wiley & Sons, Inc., 2011).

Goodman, J. W. Introduction to Fourier optics (Roberts & Co, 2005).

Rossmann, K. Point Spread-Function, Line Spread-Function, and Modulation Transfer Function. Radiology 93, 257–272 (1969).

Acknowledgements

The work at Advanced Photonics Research Institute (APRI) was partially supported by “Research on Advanced Optical Science and Technology” grant funded by the Gwangju Institute of Science and Technology (GIST) in 2017. The work at UCLA was partially supported by the Office of Naval Research (ONR) Multidisciplinary University Research Initiatives (MURI).

Author information

Contributions

Y.N. and C.K. conceived the analysis method; C.K. wrote the code for the method and performed simulations; A.M. and B.J. conceived the warped stretch imaging technique; A.Y., C.K., A.M. and J.C. performed the experiment and analyzed the results; all authors prepared the manuscript.

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kim, C., Mahjoubfar, A., Chan, J.C.K. et al. Matrix Analysis of Warped Stretch Imaging. Sci Rep 7, 11150 (2017). https://doi.org/10.1038/s41598-017-11238-5

DOI: https://doi.org/10.1038/s41598-017-11238-5
