Abstract

Reconstruction of networks underlying complex systems is one of the most crucial problems in many areas of engineering and science. In this paper, rather than identifying parameters of complex systems governed by pre-defined models or taking some polynomial and rational functions as a prior information for subsequent model selection, we put forward a general framework for nonlinear causal network reconstruction from time-series with limited observations. With obtaining multi-source datasets based on the data-fusion strategy, we propose a novel method to handle nonlinearity and directionality of complex networked systems, namely group lasso nonlinear conditional granger causality. Specially, our method can exploit different sets of radial basis functions to approximate the nonlinear interactions between each pair of nodes and integrate sparsity into grouped variables selection. The performance characteristic of our approach is firstly assessed with two types of simulated datasets from nonlinear vector autoregressive model and nonlinear dynamic models, and then verified based on the benchmark datasets from DREAM3 Challenge4. Effects of data size and noise intensity are also discussed. All of the results demonstrate that the proposed method performs better in terms of higher area under precision-recall curve.

Similar content being viewed by others

Introduction

In recent years, there has been an explosion of various datasets, which are collected in scientific, engineering, medical and social applications1, 2. They often contain information that represents a combination of different properties of the real world. Identifying causality or correlation among datasets3,4,5,6,7 is increasingly vital for effective policy and management recommendations on climate, epidemiology, neuroscience, economics and much else. With these rapid advances in the studies of causality and correlation, complex network reconstruction has become an outstanding and significant problem in interdisciplinary science8,9,10,11,12. As we know, numerous real networked systems could be represented as network of interconnected nodes. But in lots of situations, network topology is fully unknown, which is hidden in the observations acquired from experiments. For complex systems, accompanied by the complexity of system dynamics, the limited observations with noisy measurements make the problem of network reconstruction even more challenging. An increased attention for network reconstruction is being attracted in the past few years13,14,15,16,17,18,19.

Among the developed methods, vector autoregressive model (VAR) is able to estimate the temporal dependencies of variables in multivariate model, which gains growing interest in recent years20,21,22. As one of the most prevalent VAR methods, Granger Causality (GC) can be efficiently applied in causal discovery23. Conditional Granger Causality (CGC) is put forward to differentiate direct interactions from indirect ones24. To extend the application of GC limited by linear dynamics, nonlinear GC is developed25,26,27,28, which is relatively less considered until now. Generally speaking, the application of these GC methods might get in trouble, especially when the size of samples is small and the number of variables is large. To conquer such problem, some composed methods are presented by integrating variable selection into CGC model, such as Lasso-CGC29, 30 and grouped lasso graphical granger31. Group lasso is also used in multivariate regression and multi-task learning32,33,34,35,36. Compressive sensing or sparse regression is popularly applied in the network reconstruction and system identification. However, most of these methods are confined to identify parameters of complex systems governed by pre-defined models18, 37, 38. Moreover, some methods consider taking some polynomial and rational functions as a prior information which is usually difficult to obtain in advance, for subsequent model selection39, 40.

In this paper, we concern over inferring complex nonlinear causal networks from time-series and propose a new method termed as group lasso nonlinear conditional granger causality (GLasso-NCGC). Particularly, we establish a general data-driven framework for nonlinear network reconstruction from time-series with limited observations, which doesn’t require the pre-defined model or some polynomial and rational functions as a prior information. With obtaining multi-source datasets based on the data-fusion strategy introduced in recent high quality paper3, we first introduce the formulation of multivariate nonlinear conditional granger causality model (NCGC), and exploit different groups of radial basis functions (RBF) to approximate the nonlinear interactions between each pair of nodes respectively. Then we decompose the task of inferring the whole network into local neighborhood selections centered at each target node. Together with the natural sparsity of real complex networks, group lasso regression41 is utilized for each local structure recovery, where different RBF variables from different centers in each node should be either eliminated or selected as a group. Next, we obtain the candidate structure of the network by resolving the problem of group lasso regression. As a result, the final network can be judged by significance levels with Fisher statistic test for removing false existent links.

For the purpose of performance evaluations for our proposed method, simulated datasets from nonlinear vector autoregressive model are firstly generated. Compared with CGC, Lasso-CGC and NCGC, we find GLasso-NCGC outperforms other methods in terms of Area Under Precision-Recall curve (AUPR) and Receiver-Operating-Characteristic curve (AUROC). Effects of data size and noise intensity are also investigated. Besides, the simulation based on nonlinear dynamic models with network topology given in advance is carried out. We consider two classical nonlinear dynamic models which are used for modelling biochemical reaction networks40 and gene regulatory networks42 respectively. Especially for gene regulatory networks with Michaelis-Menten dynamics, we simulate gene regulatory model on random, small-world and scare-free networks. Then we explore the performance of these methods influenced by different average degrees, noise intensities and amounts of data simultaneously for these three types of networks. Based on the sub-challenge of Dialogue on Reverse Engineering Assessment and Methods (DREAM), we finally use the benchmark datasets of DREAM3 Challenge4 for investigation. All of the results demonstrate the proposed method GLasso-NCGC executes best on the previous mentioned datasets, which is fully certified to be optimal and robust to noise.

Models and Methods

Multivariate conditional granger causality

Consider N time-course variables {X, Y, Z 1, Z 2, …, Z N−2} in multivariate conditional granger causality model, the current value of Y t can be expressed by the past values of Y and Z 1, Z 2, …, Z N−2 in Equation (1). Meanwhile, Y t can be also written as the joint representation of the past values of Y, X and Z 1, Z 2, …, Z N−2 in Equation (2).

where, \({a}_{i},{c}_{k,i},{a}_{i}^{^{\prime} },{b}_{i}^{^{\prime} },{c}_{k,i}^{^{\prime} }\) are the coefficients in VAR model, p is the model order, ε 1 and ε 2 are the noise terms. Let Z = {Z 1, Z 2, …, Z N−2}, then the CGC index can be shown as CGCI X→Y|Z = ln (var(ε 1)/var(ε 2)), which is used to analyze the conditional causal interaction between two temporal variables. If the variance var(ε 1) is larger than the variance var(ε 2), i.e. CGCI X→Y|Z > 0, X causes Y conditioned on the other sets of N − 2 variables Z. Generally, the statistic judgement of terminal results can be executed by significant levels with F-test.

Group lasso nonlinear conditional granger causality

The unified framework of multivariate nonlinear conditional granger causality (NCGC) model is proposed as shown in Equation (3).

where, target variables \({\bf{Y}}=[{{\bf{y}}}_{1}\quad {{\bf{y}}}_{2}\cdots {{\bf{y}}}_{N}]\), coefficient matrix \({\bf{A}}=[{{\boldsymbol{\alpha }}}_{1}\,{{\boldsymbol{\alpha }}}_{2}\cdots {{\boldsymbol{\alpha }}}_{N}]\), noise terms \({\bf{E}}=[{\varepsilon }_{1}\,{\varepsilon }_{2}\cdots {\varepsilon }_{{\boldsymbol{N}}}]\), Φ(X) is data matrix of nonlinear kernel functions. The detailed descriptions for all the elements above are given in Equation (4).

For each target variable y i ∈ Y, we can obtain N independent equations as follows.

where,

N is the number of variables (time-series), T is the length of each time-series. The value of i-th variable x i (t) can be observed at time t. Here, the form of \({\phi }_{\rho }({{\bf{X}}}_{j}^{k})\) is taken as radial basis function. \({\{{\tilde{{\bf{X}}}}_{j}^{\rho }\}}_{\rho =1}^{n}\) is the set of n centers in the space of X j , which can be acquired by k-means clustering methods. α i is the coefficient between target variable y i and Φ(X). a ij (n) is the coefficient corresponding to the function \({\phi }_{n}({{\bf{X}}}_{j}^{k})\).

Generally, we can use least square method to deal with Equation (4). But the solution procedure of Equation (4) may be problematic when the number of variables is relatively larger than the number of available samples. With the knowledge of sparsity in A, we can turn to regularization-based methods for help. In this case, it’s noteworthy that apparent groupings exist among variables. So variables belonging to the same group should be regarded as a whole. Here, a series of RBF variables from the different centers in the same time-series should be either eliminated or selected as a group. Then group lasso is adopted to solve this problem of sparse regression as follows.

In Equation (5), we take l 2 norm as the intra-group penalty.

The detailed algorithm of Group Lasso Nonlinear Conditional Granger Causality is shown in Algorithm 1.

With small samples at hand, first-order vector autoregressive model is usually considered20, 21. Besides, in our consideration, model order is also set as p = 1, which is in accordance with the characteristics of dynamic systems governed by state-space equations. For the time-delayed dynamic systems, the implementation of higher-order vector autoregressive model can be straightly extended. In addition, we can use cross validation criterion for the selection of optimal parameters, such as the number of RBF centers n and the coefficient of penalty terms λ i . Then we mainly describe the selection of λ i as follows. We use two stages of refined selection which are similarly adopted by Khan et al.43. In the first stage, we set the coarse values of the search space λ ∈ {λ 1, λ 2, λ 3, λ 4, λ 5}, which determines the neighborhood of the optimal λ i . In the second stage, we obtain the neighborhood of the optimal λ i for refined search, i.e. λ ∈ [0.5λ i , 1.5λ i ], the interval Δλ = kλ i (0 < k < 1). Here, λ 1 = 10−4, λ 2 = 10−3, λ 3 = 10−2, λ 4 = 10−1, λ 5 = 1, k = 0.1. For example, if we choose λ 3 = 10−2 in the first stage, we next confine the refined search in the range of λ ∈ [0.005, 0.015], where Δλ = 10−3. For large-scale networks, we can just take relatively larger k to reduce the range of search space and ensure the low computational cost.

Meanwhile, in order to ensure the variety of datasets, multiple measurements are often carried out under different conditions (adding perturbations or knocking out nodes et al.). As a result, the extension of Equation (3) can be written as the following formula.

In Equation (6), m denotes the number of measurements. At m-th measurement, \({\tilde{{\bf{Y}}}}_{m}\) and \({\tilde{{\bf{X}}}}_{m}\) are acquired for integrating them in Equation (6). Then Equation (6) can be also divided into N independent equations that would be solved by group lasso optimization with Equation (5). Finally, we execute nonlinear conditional granger causality with F-test in terms of the given significant level P val . In our paper, we set P val

Algorithm 1 Group Lasso Nonlinear Conditional Granger Causality |

1: Input: Time-series data {x i (t)}, i = 1, 2, …, N; t = 1, 2, …, T. N is the number of variables. T is the length of each time-series. |

2: According to model order p, form the data matrix X = [X 1, X 2, …, X N ] and target matrix Y = [y 1, y 2, …, y N ]. |

3: Formulize the data matrix X into Φ(X) based on radial basis functions as shown in Equation (4). |

4: For each target variable y i ∈ Y |

• Execute group lasso: |

\({\tilde{\alpha }}_{i}=\mathop{{\rm{a}}{\rm{r}}{\rm{g}}{\rm{m}}{\rm{i}}{\rm{n}}}\limits_{{\alpha }_{i}}({\Vert {{\rm{y}}}_{i}-{\rm{\Phi }}({\bf{X}}){\alpha }_{i}\Vert }_{2}^{2}+{\lambda }_{i}\sum _{j=1}^{N}{\Vert {\alpha }_{ij}\Vert }_{2}).\) |

• Obtain candidate variable sets S i for each y i according to \({\hat{\alpha }}_{i}\). Rearrange data matrix Φ(X) with S i expressed as \({{\rm{\Phi }}}_{{S}_{i}}\) and reform the expression of nonlinear conditional granger causality model. |

• Execute nonlinear conditional granger causality with F-test in terms of the given significant level P val . Confirm the causal variables of y i . |

end |

5: Output: Causal interactions among N variables, i.e. adjacency matrix \({{\mathbb{R}}}^{N\times N}\). |

= 0.01 for all the statistic analysis.

Results

Nonlinear vector autoregressive model

The first simulation is based on a nonlinear vector autoregressive model with N = 10 nodes (variables), see Equation (7).

The directed graph from Equation (7) together with true adjacency matrix are shown in Fig. 1. In Fig. 1(a), black lines are linear edges and blue lines are nonlinear edges. In Fig. 1(b), white points are existent edges among nodes, while black areas stand for nonexistent edges. Next, the synthetic datasets (M = 100 samples) are obtained based on the model of Equation (7), where ε is zero-mean uncorrelated gaussian noise terms with identical unit variance.

Networks inferred by CGC, Lasso-CGC, NCGC and GLasso-NCGC are shown in Fig. 2. According to the number of edges correctly recovered (True Positives), we can find that GLasso-NCGC almost captures all the existing edges except for the edges 2 → 2, 3 → 3, 3 → 8. Compared with GLasso-NCGC, NCGC additionally fails to recover two existing edges 6 → 8, 10 → 7. Due to neglect the nonlinear influences among nodes, both CGC and Lasso-CGC fail to find many existing edges, which leads to many edges falsely unrecovered (False Negatives).

For further measuring performance we plot ROC curves and PR curves. Figure 3 is PR and ROC curves generated by CGC, Lasso-CGC, NCGC, GLasso-NCGC respectively. Obviously, it can be seen from Fig. 3 that GLasso-NCGC outperforms its competitors with the highest score (AUROC and AUPR).

In the following section, the performance of robust estimation with different methods is explored. Firstly, multi-realizations are carried out, here the number of realizations is set as 100. Figure 4 demonstrates discovery rate matrixes from multi-realizations. Table 1 shows the comparison of discovery rate of true positives inferred by these methods. Discovery rate means the total number of each edge rightly discovered over multi-realizations. Compared with CGC, Table 1 summarizes Lasso-CGC improves the performance with relatively larger discovery rate in true edges. However, due to neglecting the nonlinearity of modeling, they can’t manifest better performance than NCGC and GLasso-NCGC. In general, for nonlinear edges, we can discover GLasso-NCGC nearly outperforms other methods. Specially, for edges 1 → 6, 2 → 7, 3 → 8 and 4 → 9, GLasso-NCGC and NCGC greatly identify these true causal edges at large percentages of 100 independent realizations. For linear edges, both GLasso-NCGC and NCGC also maintain high discovery rate.

Relatively, GLasso-NCGC utilizes group lasso to promote the performance of NCGC by silencing many false positives and realize the best reconstruction of adjacency matrix at last. Average AUROC and Average AUPR of different methods over 100 realizations are calculated in Table 2.

Then, we explore the performance of CGC, Lasso-CGC, NCGC and GLasso-NCGC influenced by different number of samples. Given that the number of samples varies from 100 to 300, at each point, we get the Average AUROC and Average AUPR over 100 independent realizations respectively. From Fig. 5(a) and (b), we find the performance of these four methods improves as the number of samples increases. GLasso-NCGC scores highest in both Average AUROC and Average AUPR.

Simulation of nonlinear VAR model. Average AUROC and Average AUPR of different methods acrossing samples (100~300), noise intensities σ (0.1~1) respectively. The values of points in these figures are computed by averaging over 100 independent realizations. (a,b) with noise \({\mathscr{N}}\mathrm{(0,1)}\), while (c,d) with samples M = 100.

Next, given samples with M = 100, the performance of CGC, Lasso-CGC, NCGC and GLasso-NCGC influenced by different noise intensities σ (0.1~1) is also compared as shown in Fig. 5(c) and (d). At each intensity of noise, the Average AUROC and Average AUPR over 100 independent realizations are computed respectively. GLasso-NCGC also wins the best score in both Average AUROC and Average AUPR.

Nonlinear dynamic model

Consider the complex dynamic system of N variables (nodes) with the following coupled nonlinear equations.

where state vector x = [x 1, x 2, …, x N ]T, the first term on the right-hand side of Equation (8) describes the self-dynamics of the i-th variable, while the second term describes the interactions between variable i and its interacting partners j. The nonlinear functions F(x i ) and H(x i , x j ) represent the dynamical laws that govern the variables of system. Let B be the adjacent matrix which describes the interactions among variables. b ij ≠ 0 if the j-th variable connects to the i-th one; otherwise, b ij = 0. ε i is zero-mean uncorrelated gaussian noise with variance σ 2. Indeed, these is no unified manner to establish the nonlinear framework for all the complex networked systems. But Equation (8) has the broad applications in many science domains. With an appropriate choice of F(x i ) and H(x i , x j ), Equation (8) is used to model various known systems, ranging from ecological systems, social systems and physical systems11, 18, 40, 42. Specifically, Equation (8) can be transformed into discrete-time expressions.

where, t k+1 − t k = 1. Here, the simulation systems are as follows.

S1: Biochemical reaction network (BRN).

Here, x 1, x 2 and x 3 are concentrations of the mRNA transcripts of genes 1,2,3 respectively; x 4, x 5 and x 6 are concentrations of the proteins of genes 1,2,3 respectively; α 1, α 2, α 3 are maximum promoter strength for the corresponding gene; γ 1, γ 2, γ 3 are mRNA decay rates; γ 4, γ 5, γ 6 are protein decay rates; β 1, β 2, β 3 are protein production rates; n 1, n 2, n 3 are hill coefficients.

The parameters of Equation (9) are given as follows. α 1 = 4, α 2 = 3, α 3 = 5; γ 1 = 0.3, γ 2 = 0.4, γ 3 = 0.5; γ 4 = 0.2, γ 5 = 0.4, γ 6 = 0.6; β 1 = 1.4, β 2 = 1.5, β 3 = 1.6; n 1 = n 2 = n 3 = 4. Meanwhile, we set the number of samples M = 50 and noise \(\varepsilon \sim {\mathscr{N}}\,{\mathrm{(0,0.1}}^{2})\).

Based on the true network [Fig. 6(a)] derived from Equation (9), we can find GLasso-NCGC almost recover all of the real edges over 100 independent realizations as shown in Fig. 6(c). From Fig. 6(c), CGC, Lasso-CGC and NCGC can’t discover the entire true positives but result in lots of false positives. In detail, we next choose some representative edges for further analysis. The discovery rate of these representative edges are calculated in Table 3. Compared with CGC, Lasso-CGC and NCGC, GLasso-NCGC demonstrates a considerable advantage for both these true positives and false positives.

With the quantitative comparison of these methods in Fig. 6(b), we can observe GLasso-NCGC get the highest Average AUROC and Average AUPR with the smallest standard deviations (resp. 0.9905 (0.0234) and 0.9037 (0.0235)).

Here, we also explore the performance of robust estimation with different Granger Causality methods. Figure 7 demonstrates the curves of Average AUROC and Average AUPR of different methods acrossing samples (20~80) and noise intensities σ (0.1~1) respectively. The values are obtained by averaging over 100 independent realizations.

S2: Gene Regulatory Networks (GRN).

Suppose that gene interactions can be modeled by Michaelis-Menten equation as follows.

where, f = 1, h = 2.

We first construct adjacent matrix B with N nodes and simulate gene regulatory model with three classical types of networks, including random, small-world and scare-free networks (resp. RN, SW, SF). Then synthetic datasets of M samples are generated from the gene regulatory model of Equation (10). In order to reconstruct large-scale complex networks, multiple measurements from different conditions should be executed by adding perturbations in some different ways or with random initial values. Meanwhile, the outputs of model are supposed to be contaminated by gaussian noise. The number of multiple measurements is set as m. T time points are obtained at each measurement. As a result, data matrix with M × N is collected (M = mT).

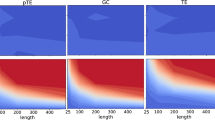

Here, we take size 100 SW network with average degree 〈k〉 = 5 for detailed analysis. We set gaussian noise intensity as 0.1 and generated 400 samples with 100 multi-measurements (each measurement with 4 time points). True SW network are given in Fig. 8(a) together with reconstructed networks which are inferred by different methods. From Fig. 8(a), we can observe that the network inferred by GLasso-NCGC is most similar to the true network. To some extent, both CGC and Lasso-CGC have similar structures compared with the true network. However, they generated lots of false positives. Meanwhile, it can be seen that NCGC almost failed in this case. Because there are more parameters involved in NCGC model and the ratio of the number of samples to the number of parameters is relatively smaller than that of CGC model. Actually, the best performance of GLasso-NCGC proves that regularization-based methods have superiority in the case of more parameters and relatively smaller samples. In Fig. 8(b), we plot PR curves and ROC curves of CGC, Lasso-CGC, NCGC and GLasso-NCGC. The almost perfect reconstruction is ensured by GLasso-NCGC. Furthermore, reconstructed values of elements in different inferred matrixes for size 100 SW network can be shown in Fig. 9(a). Red points are existent edges and green points are nonexistent edges. CGC, Lasso-CGC and NCGC cannot make a distinction between existent and nonexistent edges, because there are so many overlaps between red and green points without a certain threshold (P val ) for separation. However, GLasso-NCGC shows a vast and clear gap between existent and nonexistent edges. Based on the consideration of robustness, we next apply our method with respect to different intensities of noise \({\mathscr{N}}\,{\mathrm{(0,0.3}}^{2})\) and \({\mathscr{N}}\,{\mathrm{(0,0.5}}^{2})\) respectively. From Fig. 9(b), GLasso-NCGC also maintains a relatively good performance with strong measurement noise.

Simulation of GRN model on SW network (N = 100, \(\langle k\rangle \) = 5, M = 400). (a) Reconstructed values of elements in different inferred matrixes with noise \({\mathscr{N}}{\mathrm{(0,0.1}}^{2})\). (b) Reconstructed values of elements in recovered matrixes by GLasso-NCGC with noise \({\mathscr{N}}{\mathrm{(0,0.3}}^{2})\) and \({\mathscr{N}}{\mathrm{(0,0.5}}^{2})\) respectively.

Then we explore the performance of these methods influenced by different average degrees, noise intensities and amounts of data simultaneously for different types of networks. The detailed results are shown in Table 4. In general, all of the results demonstrate the optimality and robustness of GLasso-NCGC.

In order to compare the computational requirement by these methods, we simulate the SW and SF networks for comparison (N = 100, 〈k〉 = 5, M = 400). And we calculate the average computational time over 10 independent realizations as shown in Table 5. The specifications of the computer used to run the simulations are as follows. Matlab version: R2013a (64 bit); Operating system: Windows 10 (64 bit); Processor: Intel(R) Core(TM) i7-4770 CPU @ 3.40GHZ 3.40GHZ; RAM: 16GB.

DREAM3 Challenge4

Dialogue on Reverse Engineering Assessment and Methods (DREAM) projects establish the general framework for the verification of various algorithms, which have the broad applications in many areas of research (http://dreamchallenges.org/). There are so many challenges in DREAM projects. Here, DREAM3 Challenge4 is used for the further assessment. The aim of DREAM3 Challenge4 is to infer gene regulatory networks with multiple types of datasets.

DREAM3 Challenge4 has three sub-challenges corresponding to gene regulatory networks with size 10, size 50 and size 100 nodes respectively. In our work, time-series of size 50 and size 100 are used, together with their gold standard networks. There are five sub-networks of Yeast or E. coli, all of which are with size 50 and size 100 respectively. Meanwhile, time-series datasets of multiple measurements are acquired under some different conditions. For the networks of size 50 and size 100, the number of measurements are set as 23 and 46 respectively (each measurement with 21 time points).

To assess these results of different methods, we also calculate the AUROC and AUPR. We can discover that some methods get the highest AUROCs, while their AUPRs are pretty small. In many cases, good AUROC might accompany by a low precision because of a large ratio of FP/TP. Thus, AUPR is taken as the final evaluation metric. In Table 6, GLasso-NCGC gets the best AUPR in all the datasets only except for size 50 network of Yeast1. Furthermore, the average AUPRs of these methods are subsequently computed and plotted as shown in Fig. 10. Finally, GLasso-NCGC is also found to execute optimally with the highest average AUPR.

Conclusions

Reconstructing complex network is greatly useful for us to analyze and master the collective dynamics of interacting nodes. In our work, with getting multi-source datasets based on the data-fusion strategy, the new method namely group lasso nonlinear coditional granger causality (GLasso-NCGC) is proposed for network recovery with time-series. The evaluations of performance address that GLasso-NCGC is superior to other mentioned methods. Effects of data size and noise intensity are also discussed. Although the models or applications we mainly focus on here are biochemical reaction network and gene regulatory network, our method can be also used to other complex networked systems, such as kuramoto oscillator network and mutualistic network40, 42. Here, it is also important to remember that we just adopt the model of first-order nonlinear conditional granger causality (NCGC), which is in accordance with the characteristics of dynamic systems governed by state-space equations. For the time-delayed dynamic systems, such as coupled Mackey-Glass system28, the framework of our method can be flexibly extended to higher-order NCGC model with group lasso regression, which can be waited for the prospective researches. In the information era with explosive growth of data, our proposed method provides a general and effective data-driven framework for nonlinear network reconstruction, especially for the complex networked systems that can be turned into the form of Y = Φ(X)A.

References

Reichman, O. J., Jones, M. B. & Schildhauer, M. P. Challenges and opportunities of open data in ecology. Science 331, 703–705, doi:10.1126/science.1197962 (2011).

Marx, V. Biology: The big challenges of big data. Nature 498, 255–260, doi:10.1038/498255a (2013).

Bareinboim, E. & Pearl, J. Causal inference and the data-fusion problem. Proceedings of the National Academy of Sciences 113, 7345–7352, doi:10.1073/pnas.1510507113 (2016).

Reshef, D. N. et al. Detecting novel associations in large data sets. Science 334, 1518–24, doi:10.1126/science.1205438 (2011).

Sugihara, G. & Munch, S. Detecting causality in complex ecosystems. Science 338, 496–500, doi:10.1126/science.1227079 (2012).

Zhao, J., Zhou, Y., Zhang, X. & Chen, L. Part mutual information for quantifying direct associations in networks. Proceedings of the National Academy of Sciences 113, 5130–5135, doi:10.1073/pnas.1522586113 (2016).

Yin, Y. & Yao, D. Causal inference based on the analysis of events of relations for non-stationary variables. Scientific Reports 6, 29192, doi:10.1038/srep29192 (2016).

Margolin, A. A. et al. Reverse engineering cellular networks. Nature protocols 1, 662–671, doi:10.1038/nprot.2006.106 (2006).

de Juan, D., Pazos, F. & Valencia, A. Emerging methods in protein co-evolution. Nature Reviews Genetics 14, 249–261, doi:10.1038/nrg3414 (2013).

Marbach, D. et al. Wisdom of crowds for robust gene network inference. Nature methods 9, 796–804, doi:10.1038/nmeth.2016 (2012).

Wang, W.-X., Lai, Y.-C. & Grebogi, C. Data based identification and prediction of nonlinear and complex dynamical systems. Physics Reports 644, 1–76, doi:10.1016/j.physrep.2016.06.004 (2016).

Chai, L. E. et al. A review on the computational approaches for gene regulatory network construction. Computers in biology and medicine 48, 55–65, doi:10.1016/j.compbiomed.2014.02.011 (2014).

Villaverde, A. F., Ross, J. & Banga, J. R. Reverse engineering cellular networks with information theoretic methods. Cells 2, 306–329, doi:10.3390/cells2020306 (2013).

Zou, C. & Feng, J. Granger causality vs. dynamic bayesian network inference: a comparative study. BMC bioinformatics 10, 122, doi:10.1186/1471-2105-10-122 (2009).

Wang, W.-X., Yang, R., Lai, Y.-C., Kovanis, V. & Harrison, M. A. F. Time–series–based prediction of complex oscillator networks via compressive sensing. EPL 94, 48006, doi:10.1209/0295-5075/94/48006 (2011).

Shandilya, S. G. T. M. Inferring network topology from complex dynamics. New Journal of Physics 13, 013004, doi:10.1088/1367-2630/13/1/013004 (2011).

Han, X., Shen, Z., Wang, W. X., Lai, Y. C. & Grebogi, C. Reconstructing direct and indirect interactions in networked public goods game. Scientific Reports 6, 30241, doi:10.1038/srep30241 (2016).

Mei, G., Wu, X., Chen, G. & Lu, J. A. Identifying structures of continuously-varying weighted networks. Scientific Reports 6, 26649, doi:10.1038/srep26649 (2016).

Iglesiasmartinez, L. F., Kolch, W. & Santra, T. Bgrmi: A method for inferring gene regulatory networks from time-course gene expression data and its application in breast cancer research. Scientific Reports 6, 37140, doi:10.1038/srep37140 (2016).

Fujita, A. et al. Modeling gene expression regulatory networks with the sparse vector autoregressive model. BMC Systems Biology 1, 39, doi:10.1186/1752-0509-1-39 (2007).

Opgen-Rhein, R. & Strimmer, K. Learning causal networks from systems biology time course data: an effective model selection procedure for the vector autoregressive process. BMC bioinformatics 8, S3, doi:10.1186/1471-2105-8-S2-S3 (2007).

Michailidis, G. & D’Alché-Buc, F. Autoregressive models for gene regulatory network inference: Sparsity, stability and causality issues. Mathematical biosciences 246, 326–334, doi:10.1016/j.mbs.2013.10.003 (2013).

Granger, C. W. Investigating causal relations by econometric models and cross-spectral methods. Econometrica: Journal of the Econometric Society 424–438 (1969).

Siggiridou, E. & Kugiumtzis, D. Granger causality in multivariate time series using a time-ordered restricted vector autoregressive model. IEEE Transactions on Signal Processing 64, 1759–1773, doi:10.1109/TSP.2015.2500893 (2016).

Marinazzo, D., Pellicoro, M. & Stramaglia, S. Kernel method for nonlinear granger causality. Physical Review Letters 100, 144103, doi:10.1103/PhysRevLett.100.144103 (2008).

Ancona, N., Marinazzo, D. & Stramaglia, S. Radial basis function approach to nonlinear granger causality of time series. Physical Review E 70, 056221, doi:10.1103/PhysRevE.70.056221 (2004).

Fujita, A. et al. Modeling nonlinear gene regulatory networks from time series gene expression data. Journal of bioinformatics and computational biology 6, 961–979, doi:10.1142/S0219720008003746 (2008).

Kugiumtzis, D. Direct-coupling information measure from nonuniform embedding. Physical Review E 87, 062918, doi:10.1103/PhysRevE.87.062918 (2013).

Shojaie, A. & Michailidis, G. Discovering graphical granger causality using the truncating lasso penalty. Bioinformatics 26, i517–i523, doi:10.1093/bioinformatics/btq377 (2010).

Arnold, A., Liu, Y. & Abe, N. Temporal causal modeling with graphical granger methods. Proceedings of the 13th ACM SIGKDD international conference on Knowledge discovery and data mining, 66–75 (2007).

Lozano, A. C., Abe, N., Liu, Y. & Rosset, S. Grouped graphical granger modeling for gene expression regulatory networks discovery. Bioinformatics 25, i110–i118, doi:10.1093/bioinformatics/btp199 (2009).

Yuan, M. & Lin, Y. Model selection and estimation in regression with grouped variables. Journal of the Royal Statistical Society 68, 49–67, doi:10.1111/rssb.2006.68.issue-1 (2006).

Bolstad, A., Van Veen, B. D. & Nowak, R. Causal network inference via group sparse regularization. IEEE Transactions on Signal Processing A Publication of the IEEE Signal Processing Society 59, 2628–2641, doi:10.1109/TSP.2011.2129515 (2011).

He, D., Kuhn, D. & Parida, L. Novel applications of multitask learning and multiple output regression to multiple genetic trait prediction. Bioinformatics 32, i37–i43, doi:10.1093/bioinformatics/btw249 (2016).

Puniyani, K., Kim, S. & Xing, E. P. Multi-population gwa mapping via multi-task regularized regression. Bioinformatics 26, i208–16, doi:10.1093/bioinformatics/btq191 (2010).

Argyriou, A., Evgeniou, T. & Pontil, M. Convex multi-task feature learning. Machine Learning 73, 243–272, doi:10.1007/s10994-007-5040-8 (2008).

Chang, Y. H., Gray, J. W. & Tomlin, C. J. Exact reconstruction of gene regulatory networks using compressive sensing. BMC Bioinformatics 15, 400, doi:10.1186/s12859-014-0400-4 (2014).

Han, X., Shen, Z., Wang, W.-X. & Di, Z. Robust reconstruction of complex networks from sparse data. Physical review letters 114, 028701, doi:10.1103/PhysRevLett.114.028701 (2015).

Brunton, S. L., Proctor, J. L. & Kutz, J. N. Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proceedings of the National Academy of Sciences 113, 3932–3937, doi:10.1073/pnas.1517384113 (2016).

Pan, W. & Yuan, Y. A sparse bayesian approach to the identification of nonlinear state-space systems. IEEE Transactions on Automatic Control 61, 182–187, doi:10.1109/TAC.2015.2426291 (2016).

Yang, G., Wang, L. & Wang, X. Network reconstruction based on grouped sparse nonlinear graphical granger causality. 35th Chinese Control Conference, 2229–2234 (2016).

Gao, J., Barzel, B. & Barabási, A. Universal resilience patterns in complex networks. Nature 530, 307–312, doi:10.1038/nature16948 (2016).

Khan, J., Bouaynaya, N. & Fathallah-Shaykh, H. M. Tracking of time-varying genomic regulatory networks with a lasso-kalman smoother. Eurasip Journal on Bioinformatics and Systems Biology 2014, 3, doi:10.1186/1687-4153-2014-3 (2014).

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant Nos. 61473189 and 61374176, the Science Fund for Creative Research Groups of the National Natural Science Foundation of China (No. 61521063).

Author information

Authors and Affiliations

Contributions

G.Y. designed and conducted the research. G.Y., L.W. and X.W. discussed the results and wrote the manuscript. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yang, G., Wang, L. & Wang, X. Reconstruction of Complex Directional Networks with Group Lasso Nonlinear Conditional Granger Causality. Sci Rep 7, 2991 (2017). https://doi.org/10.1038/s41598-017-02762-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-017-02762-5

This article is cited by

-

Inferring species interactions using Granger causality and convergent cross mapping

Theoretical Ecology (2021)

-

Reconstruction of ensembles of nonlinear neurooscillators with sigmoid coupling function

Nonlinear Dynamics (2019)

-

Inferring a nonlinear biochemical network model from a heterogeneous single-cell time course data

Scientific Reports (2018)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.