Abstract

Public health and safety measures (PHSM) made in response to the COVID-19 pandemic have been singular, rapid, and profuse compared to the content, speed, and volume of normal policy-making. Not only can they have a profound effect on the spread of the disease, but they may also have multitudinous secondary effects, in both the social and natural worlds. Unfortunately, despite the best efforts by numerous research groups, existing data on COVID-19 PHSM only partially captures their full geographical scale and policy scope for any significant duration of time. This paper introduces our effort to harmonize data from the eight largest such efforts for policies made before September 21, 2021 into the taxonomy developed by the CoronaNet Research Project in order to respond to the need for comprehensive, high quality COVID-19 data. In doing so, we present a comprehensive comparative analysis of existing data from different COVID-19 PHSM datasets, introduce our novel methodology for harmonizing COVID-19 PHSM data, and provide a clear-eyed assessment of the pros and cons of our efforts.

Similar content being viewed by others

Introduction

From lockdowns to travel bans, government responses to the SARS CoV-2 virus radically affect virtually every dimension of society, from how governments govern to how businesses operate and how citizens live their lives. Comprehensive, high quality and timely access to COVID-19 public health and social measures (PHSM) data is thus crucial for understanding not only what these responses are, but the scale, scope and duration of their effect on various policy areas. These include e.g. the economy, the environment and society, to say nothing of their ostensible function of reducing the spread of the virus itself. Though dozens of research groups have documented COVID-19 PHSM, these individual data tracking efforts provide only an incomplete portrait of COVID-19 PHSM, with many having stopped their efforts entirely, often due to funding constraints1.

This article shows how data harmonization, the process of making comparable and compatible conceptually similar data, can create a more comprehensive dataset of COVID-19 PHSM relative to any single dataset alone. Specifically, this article introduces a novel, rigorous methodology for harmonizing COVID-19 PHSM data from December 31, 2019 until September 21, 2021 for the 8 largest existing PHSM tracking efforts:

-

ACAPS COVID-19 Government Measures (ACAPS)2

-

Canadian Dataset of COVID-19 Interventions (CIHI)5

-

CoronaNet Research Project COVID-19 Government Response Event Dataset (CoronaNet)6,7

-

Johns Hopkins Health Intervention Tracking for COVID-19 (HIT-COVID)8,9

-

Oxford COVID-19 Government Response Tracker (OxCGRT)10

-

World Health Organization EURO (WHO EURO) dataset on COVID-19 policies (retrieved from the WHO Public Health and Safety Measures (WHO PHSM)11)

-

US Center for Disease Control (WHO CDC) dataset on COVID-19 policies (retrieved from the WHO Public Health and Safety Measures (WHO PHSM)11)

With the help of 500+ research assistants around the world, these datasets are harmonized into the CoronaNet taxonomy to provide a fuller picture of government responses to the pandemic. This amounts to harmonizing around 150,000 observations external to the CoronaNet dataset, which at the time of writing itself has more than 180,000 observations. Not only can harmonizing these different datasets provide a more accurate and complete basis for understanding the drivers and effects of this pandemic, it can also ensure that the data collected by trackers that have stopped their work are not lost and that the original sources underlying this data are preserved to aid future research.

The value of this work is multifaceted. In terms of its value for aiding understanding of COVID-19 PHSM, our discussion of the various challenges faced in harmonizing these 8 datasets not only illuminates difficulties in the data harmonization process but also provides rich detail as to the relative strengths and weaknesses among different datasets. Such a discussion has thus far been missing in the literature and can help researchers adjudicate which dataset best meets their research needs. Initiating this discussion also makes transparent the difficulties in collecting PHSM data more generally, which, to our knowledge, has not been documented in such extensive and comparative detail before. Meanwhile, with respect to its value to COVID-19 research, our ongoing data harmonization efforts represents an enormous improvement over what was previously available from any one dataset alone. While any improvement to PHSM data coverage across time or government jurisdictions can provide a more robust and accurate basis for forwarding research on the drivers and effects of the pandemic, harmonizing data from the 8 largest such existing datasets ensures that our resulting dataset will be able to provide the most comprehensive and detailed information about COVID-19 PHSM possible. Finally, from a methodological perspective, our PHSM harmonization effort can serve as a guide for the harmonization of other datasets, especially in the social sciences. That is, in contrast to virtually all other harmonization efforts we could identify12, ours is largely implemented manually, providing us with an unusually intimate knowledge of data harmonization at the level of the individual observation.

In the results section, we provide readers with an overview of our data harmonization efforts from a variety of different angles. We begin from a forward-looking perspective by providing readers insight into the challenges we faced in harmonizing incomplete and dirty data which often suffered from missing sources in order to provide context for the methodological steps we employed to pursue data harmonization. Interested readers can find a fuller accounting of our methodology in the methodology section. The results section ends with a backward-looking perspective by providing an assessment of different aspects of our harmonization efforts, including e.g. the likely gains and limitations of our efforts. Overall, our data harmonization efforts significantly outperform the only other effort we are aware of to harmonize COVID-19 PHSM data, the WHO PHSM effort11 (see Supplementary Information, section 3). We conclude with a discussion of how data harmonization illuminates complexities in the data generation process.

Results

Choosing which datasets to harmonize is one of the most significant decisions a researcher must make when harmonizing data because the particularities of any given dataset can have substantial downstream effects on how they can be made to fit together. Given this, we start this section by outlining our rationale for choosing to harmonize the particular datasets that we do. Having established this basis, we then dive into the the challenges of harmonizing complex, dirty, and incomplete COVID-19 PHSM data. We then briefly show how our methodology can address these challenges (see our methodology section for more information). We end this section by providing an assessment of our efforts to harmonize COVID-19 PHSM data, the criteria for which is based on separate guidance we developed on the topic of data harmonization more generally12.

Which datasets to harmonize?

In adjudicating which COVID-19 PHSM datasets to harmonize, we weighed the potential benefits of data harmonization among a number of different dimensions, including the:

-

Geographical coverage

-

Temporal coverage

-

Volume of data collected

-

Relative similarity of policy taxonomies to the CoronaNet taxonomy

-

Relative capacity of external dataset partners for collaboration

As can be seen in Supplementary Table 1, we identified more than 20 datasets which could potentially be harmonized into the CoronaNet taxonomy. We ultimately chose datasets to harmonize that (i) aspired to world-wide geographic coverage with (ii) at least ten thousand observations in each dataset and were (iii) based on original coding of sources (as opposed to recoding of existing sources). Datasets that fit this criterion were ACAPS, COVID AMP, HIT-COVID, OxCGRT, and the Complexity Science Hub COVID-19 Control Strategies List (CCCSL)13,14 (note, we started but did not finish harmonizing CCCSL data, see below for more information).

One clear exception to this criteria was the inclusion of the CIHI dataset, which focuses on Canadian policies and had fewer than ten thousand policies. We decided to include the CIHI dataset for consideration because i) it already formed a substantial part of subnational data collection for other data collection efforts, including the OxCGRT dataset and ii) of substantial cooperation and access to researchers with expertise in both the CoronaNet and CIHI taxonomies. Similarly, though the WHO EURO dataset aims for a regional, rather than a world-wide focus, given that (i) it is part of the WHO PHSM dataset, which we compare our efforts to in Section 3 of our Supplementary Information (ii) CoronaNet is partially supported by the EU Commission for its EU data collection, we decided to include it for harmonization. Because the WHO CDC dataset follows the same taxonomy as the WHO EURO dataset and also contains a substantial number of policies, it was also included for harmonization.

September 2021 was chosen as the cutoff date given our available resources and because most data tracking efforts had stopped or significantly slowed their data collection by this date except for OxCGRT, CIHI and WHO EURO (OxCGRT stopped in 2023 while the latter two stopped in 2022). Should more resources become available we will expand our efforts to harmonize records for these datasets beyond this date.

Challenges of Data harmonization

Harmonizing data is rarely straightforward, but harmonizing COVID-19 PHSM data was particularly challenging because standards which researchers usually abide by before releasing data were not observed due to the emergency nature of the pandemic. For one, to the extent that researchers generate event-based datasets, they normally concern past events, not ongoing ones. Indeed a given event must run its course in order for researchers to both i) conceptualize the event being captured into a structured and logically organized taxonomy ii) estimate the amount of work needed in order to build a dataset based on that taxonomy. For another, because dirty data can significantly bias subsequent research findings, researchers often err on the side of caution by spending substantial additional time rigorously cleaning and validating the data before release. Researchers also have personal incentives to delay releasing data given that i) they generally wish to be the first to conduct analyses on data that they themselves have collected and ii) unclean datasets can significantly negatively affect professional reputations. Meanwhile, to promote replicability and transparency about the data generating process, documentation of original sources and coding decisions are often extensive. Due to the pandemic, however, PHSM data exceptionally were:

-

Collected based on taxonomies that were developed inferentially from research group to research group while the COVID-19 pandemic was still ongoing.

-

Released without extensive cleaning.

-

Inconsistently preserved with regards to original raw sources.

-

Absent regular documentation on changes to taxonomies or data collection methods.

There were a number of research-based reasons to prioritize speed over rigor. Not only did launching data collection during rather than after the pandemic help jump start early COVID-19 research, in many cases it was critical to document these policies in as close to real time as possible because primary sources on the pandemic can and have disappeared from the Internet over time. Meanwhile, though many COVID-19 trackers surely would have continued to improve their data quality, unfortunately many stopped their efforts because of lack of funding support. Our efforts to harmonize this external data into the CoronaNet dataset thus not only ensures that their substantial contributions can live on, but are also improved insofar as any errors in the data or discrepancies between datasets have a higher chance of being identified and resolved before being harmonized. This job is made more difficult however, because many trackers did not have rigorous guidelines for preserving raw sources. In what follows, we expand upon how each of these pandemic-related challenges have affected our data harmonization efforts and subsequent methodological decisions.

The challenge of harmonizing different taxonomies

Different conceptualizations of what ultimately ‘counts’ as PHSM data lie at the root of different taxonomic approaches to collecting such data. While one benefit of independently developing taxonomies is that it encourages greater flexibility and adaptability in conceptualizing COVID-19 PHSM while simultaneously validating common themes that independently appear across taxonomies, it also makes reconciling the differences among them more challenging. A particular challenge with our data harmonization efforts is that the CoronaNet taxonomy on the whole captures more policy dimensions than other datasets do. While this means that our data harmonization efforts will yield much more fine-grained information on a given policy than would be available in its original form, mapping a simpler taxonomy into a more complex taxonomy is also a much more challenging task compared to vice-versa. In what follows, we discuss what challenges we faced when mapping taxonomies for COVID-19 policy types in particular as well as for other important dimensions of COVID-19 policies.

There are at least four broad issues to consider when mapping the substance of different COVID-19 policies: (i) when taxonomies use the same or similar language to describe a policy but ultimately conceptualize them differently (ii) when taxonomies have the same or similar conceptual understandings of a given event but differ in how they record the data structurally (iii) when taxonomies have similar but ultimately different conceptual understandings of a given event and (iv) when taxonomies capture and conceptualize different events. We elaborate with examples for each of these issues in what follows:

Different datasets can often use similar language to describe conceptually different phenomena. An example of why it is important to be sensitive to these semantic details can be seen with regards to the term ‘restrictions on internal movement.’ While all datasets that use this terminology understand this to entail policies that restrict movement, some have different understandings of the term ‘internal.’ For instance, because OxCGRT generally codes policies from the perspective of the country (note OxCGRT does document subnational data for a select number of countries: the United States, Canada and China. Further note that although OxCGRT also collects subnational data for Brazil, in this case, it appears that their subnational Brazilian data is also coded at the level of the country), their ‘C7_Restrictions on internal movement’ indicator captures any restriction of movement within a country. Meanwhile, because CoronaNet codes policies from the perspective of the initiating government, its ‘Internal Border Restrictions’ policy type captures policies that restrict movement within the jurisdiction of a given initiating government while policies that restrict movement outside a given jurisdiction are coded as ‘External Border Restrictions.’ As such, if the state of California restricts its citizens from leaving the state, this would be captured in OxCGRT’s ‘C7_Restrictions on internal movement’ indicator but would be coded as an ‘External Border Restriction’, not an ‘Internal Border Restriction’ using the CoronaNet taxonomy. Parsing out these differences can only be automated to a limited extent, especially if the taxonomies being mapped simply do not make the same distinctions.

Meanwhile an example of how different datasets implemented different taxonomic structures to capture a similar conceptual understanding of a policy is how they captured policies related to older adults. OxCGRT organized its taxonomy by creating an ordinal variable with its “H8_Protection of elderly people” index. Specifically, this index records “policies for protecting elderly people (as defined locally) in Long Term Care Facilities (LTCFs) and/or the community and home setting” on an ordinal scale (it takes on a value of 0 if no measures are in place, 1 if restrictions are recommended, 2 if some restrictions are implemented and 3 if extensive restrictions are implemented; see the OxCGRT codebook10 for further details). In contrast, the CoronaNet and COVID AMP taxonomies document policies toward older adults not in its policy type variable but in a separate variable which records the demographic targets of a given policy (in CoronaNet, this is the ‘target_who_gen’ variable while in COVID AMP this is the ‘policy_subtarget’ variable). Both datasets record whether a policy is targeted toward ‘People in nursing homes/long term care facilities.’ CoronaNet additionally makes it possible to document whether a policy is targeted toward ‘People of a certain age’ (where the ages are captured separately in a text entry) or ‘People with certain health conditions’ (where the health conditions are captured separately in a text entry) while COVID AMP additionally makes it possible to document whether a policy is targeted toward ‘Older adults/individuals with underlying medical conditions.’ When mapping different taxonomies, these differences in taxonomic structure must additionally be taken into account.

Furthermore, taxonomies may capture similar, yet conceptually still quite distinct events which makes one-to-one matching between datasets difficult, if not impossible. For instance, the CIHI taxonomy’s policy type of ‘Travel-restrictions’ does not make any distinctions between restrictions made within or outside of a given government’s borders. Meanwhile, to revisit the example of policies related to older adults, by developing a ‘nursing homes’ category, HIT-COVID taxonomy targets not older adults per se, but the institutional settings in which they are likely to be the most vulnerable. The WHO PHSM dataset meanwhile generalizes this idea in its policy category of ‘Measures taken to reduce spread of COVID-19 in settings where populations reside in groups or are restrained or limited in movement or autonomy (e.g., some longer-term health care settings, seniors’ residences, shelters, prisons).’ This taxonomy implicitly suggests that it may be prudent to investigate not only the effects of policies on older adults but for all those with limited mobility at the expense of easily extractable information on older adults in particular. Cases such as these are perhaps the most difficult to resolve as it is impossible to directly map distinctions that one taxonomy makes into other taxonomies where no such distinctions are made.

Finally, while all datasets generically sought to capture policies governments made in response to COVID-19, different datasets focused on different policy areas. For instance, virtually all external datasets have separate policy categories to capture economic or financial policies (e.g. government support of small businesses) while such policies are not systematically captured in the CoronaNet taxonomy. In these cases, such policies are thus simply not mappable.

The fact that different projects undertook such a variety of approaches in capturing PHSM policies also underscores the idea that there is no one correct taxonomy for capturing such policies; each has its own pros and cons. For instance, aggregating all policies towards older adults in one indicator as OxCGRT does facilitates research on how the pandemic affects older adults but makes it difficult to easily compare the effect of the pandemic on other vulnerable populations. Meanwhile though the CoronaNet and COVID AMP approach allows more flexibility in what kind of policies toward older adults can be captured, it also lacks the cohesiveness the OxCGRT indicator for older adults enjoys. With regards to data harmonization meanwhile, the sheer variety of approaches substantially increases the challenge of transforming PHSM data to adhere to one taxonomy.

Indeed, despite a strong partnership with CCCSL, we opted not to harmonize data from the CCCSL dataset because of these taxonomic challenges. We found CCCSL’s structure and semantics were too different from CoronaNet’s, such that we estimated we would ultimately only be able to use less than half of CCCSL’s observations. To illustrate by example, an observation with the CCCSL id of 4547 notes in its description that ‘Ski holiday returns should take special care.’ Such an observation would not be considered a policy in the CoronaNet taxonomy because it is does not provide specific enough information about what is meant by ‘special care’ and the link for the original source of this observation is dead. While many observations do contain high quality information and descriptions, a substantial number do not contain any or only very minimal descriptive information. Combined with the difficulty in accessing original sources, we decided the relative effort required to consistently map the remaining observations into the CoronaNet taxonomy would be too high, especially considering that we are also harmonizing similar data from 7 other datasets.

So far we have only discussed the challenge of mapping taxonomies specific to policy types. However all datasets also capture additional important contextual information for understanding, analyzing and comparing government COVID-19 policies. In Table 1 below, we show the variety of approaches different datasets undertook to capture some of the most important of these dimensions including: the data structure (Structure), whether a given dataset captures end dates (End Dates?), has a protocol for capturing and linking updates of a policy to its original policy (Updates?), has a standardized method for documenting policies occurring at the provincial ISO-2 level (Location standardized at ISO-2 level?), captures information about the geographic target of a policy (Geog. Target?) or captures information about the demographic target of a given policy (Demog. Target?).

As Table 1 shows, while most external datasets are formatted in event dataset format which facilitates comparability across these datasets, OxCGRT data is available only in panel format, which presents unique challenges. With regards to the data structure, in order to facilitate data harmonization, the OxCGRT data must be reformatted to an event format (see the Supplementary Information, Section 2, to access the taxonomy map). However, the panel structure also has knock-on effects on how other policy dimensions are captured, which we discuss in greater detail later in this section.

Datasets also differ with regards to how they capture the timing of a policy. Although knowing the duration of a policy is crucial for understanding its subsequent impact, if any, neither ACAPS and nor HIT-COVID systematically captured information about policy end dates. Though CIHI did make this data available through its textual description, it was not available as an individual field and had to be separately extracted. When harmonizing data from these datasets then, additional work must be done to provide information on end dates.

Relatedly, datasets have also taken inconsistent approaches to capturing policy updates, if at all. Taxonomies that capture such updates are arguably better equipped to capture the messiness and uncertainty of the COVID-19 policy making process (e.g. policy makers often lengthen or shorten the timing of a given policy in response to changing COVID-19 conditions). ACAPS and CIHI however do not separately capture and link policy updates to the original policy. Meanwhile, OxCGRT’s inability to capture information on how policies may be linked is largely due to its panel dataset structure. In contrast, though both the CoronaNet and COVID AMP taxonomies have rules for linking policies together, these differ across datasets. Specifically, CoronaNet links policies together if there are any changes to the original policy’s time duration (e.g. extended or reduced over time), quantitative amount (e.g. number of quarantine days), directionality of the policy (e.g. whether a policy targets outbound or inbound travel), travel mechanisms (e.g. whether a policy targets air or land travel), compliance (e.g. whether a policy is recommended or mandatory), or enforcer (e.g. which ministry is responsible for implementation). COVID AMP meanwhile, has separate fields to document i) whether an original policy was extended over time (see the ‘prior row id’ in the COVID AMP dataset) or ii) whether a given policy implemented at the local level has a relationship with a higher level of government (see the ‘Parent policy number’ field in the COVID AMP dataset).

While all datasets use a standardized taxonomy for documenting country level information about where a policy originated from, some datasets did not use a standardized taxonomy for capturing this information at the subnational ISO-2 level, in particular ACAPS and the WHO. Even when the taxonomy was standardized within a given dataset, different datasets used slightly different taxonomies at both the country and subnational levels which also necessitates further reconciliation and standardization across datasets .

Of all the datasets processed for data harmonization, only the CoronaNet and COVID AMP datasets capture information on both the particular geographic (e.g. country, province, city) and demographic targets (e.g. general population, asylum seekers) of a given policy. To the extent that other datasets also capture this information, it is either very broad or not standardized enough. For instance, though the various indicators in the OxCGRT data capture whether a policy overall applies to the general population or a targeted population, no further information about the specific targets is provided. Meanwhile, the WHO PHSM dataset does have a separate field which documents demographic targets but these entries are not standardized resulting in more than 5900 unique categories, many of which have typos (see Supplementary Information, Section 3, for more). It is thus impossible to use them for analysis without substantial additional cleaning and harmonization.

All in all, harmonizing different datasets can be challenging when considering only two taxonomies, much less eight. This is true not only with regards to taxonomies specific to the substance of COVID-19 policies themselves but also with respect to additional policy dimensions like policy timing and targets.

The challenge of harmonizing dirty data

Dirty data refers to data that is miscoded with reference to a given taxonomy. In our investigation of the cleanliness of different datasets, we distinguish between policies that are (i) inaccurately coded relative to a given taxonomy or (ii) incomplete or missing. Harmonizing dirty data would be challenging even if taxonomies across datasets were the same; these problems are only compounded when taxonomies are different. Unfortunately, because of the pandemic emergency, all datasets considered here suffer from problems with dirty data.

For instance, although within the ACAPS taxonomy, all policies related to curfews should theoretically be coded as ‘Movement Restrictions’ and ‘Curfew’ in their ‘category’ and ‘measure’ fields respectively, text analysis of the descriptions accompanying these observations suggests that curfew policies were mistakenly coded into at least 8 other policy categories. For example policies relevant to curfews which likely should have been coded as ‘Movement Restrictions – Curfew’ were also found as being coded under other categories like: ‘Lockdown – Partial Lockdown’, ‘Movement restrictions – Surveillance and monitoring’, and ‘Movement Restrictions – Domestic travel restrictions.’ Although admittedly, the aforementioned categories are conceptually quite close to the concept of curfews, curfew measures were also found to be coded under categories that are arguably quite father afield like: ‘State of Emergency’, ‘Movement Restrictions – Border closures’, ‘Public health measures – Isolation and quarantine policies’, ‘Governance and socio-economic measures – Emergency administrative structures activated or established’ and ‘Governance and socio-economic measures - Military deployment.’

Data can also be dirty for other important policy dimensions, e.g. the start dates of a given policies. Many governments maintain websites where they note the most current policies without detailed information as to when the policy started or will end. To draw an example of a government source for Latvian polices (see https://web.archive.org/web/20210621102402/https://covid19.gov.lv/en/support-society/how-behave-safely/covid-19-control-measures), the date the information on policies was updated was June 21, 2021 but the policies themselves were not necessarily implemented on that day. Further research would be needed to triangulate the start date of a given policy listed on this website. Similarly, in some cases, coders will simply record the date that they accessed the policy as the start date as opposed to the true start date. That these types of issues were found across all datasets is no surprise given the unusual circumstances that such data are collected and released. Nevertheless, they can pose immense challenges; blindly harmonizing such data risks compounding the original errors in the data.

While it is difficult to quantify the relative cleanliness of different datasets (and thus, how much of an issue it poses to data harmonization), we provide some sense of their relative data quality with regards to the quality of their textual descriptions in Table 2 below. Good textual descriptions of a given policy are crucial for helping users understand what policies a given dataset is actually documenting and organizing. Table 2 shows how informative these descriptions are by counting, per dataset, the average number of characters each description has (Description Length (Average)), how many descriptions have less than 50 characters (Descriptions with less than 50 characters (Total)) and how many observations have no descriptions at all (Missing Descriptions (Total)).

Generally, descriptions with less than 50 characters contain only limited information about a given policy. The following examples from each dataset shows that often missing from these shorter descriptions are dates, places and enforcers of policies and even sometimes the nature of the policy itself: “Albania banned all flights to and from the UK.” (CoronaNet); “Blida extended until at least 19. april 2020” (ACAPS); “Lockdown extended. Lockdown extended” (WHO CDC); “The state of emergency in WA has been extended.” (COVID AMP); “Delay of international flights have been extended” (EURO); “Extends school closures until March 16” (HIT-COVID); “orders extended until April 30” (OxCGRT). The table shows that textual descriptions from the ACAPS dataset have on average the least number of characters compared to others, with more than two thousand having descriptions of less than 50 characters and more than 100 having no description at all. While OxCGRT has the third highest average description length, it also has the most number of descriptions with less than 50 characters. Meanwhile HIT-COVID has the most number of policies without any description, at more than 1600.

With regards to the content of the descriptions, only the CoronaNet and CIHI databases appear to standardize what should be included in this textual description (see ‘Description Standardized?’ column in Table 2). Coders for CoronaNet are instructed to include the following information in their textual descriptions: (i) the name of the country from which a policy originates (ii) the date the policy is supposed to take effect (iii) information about the ‘type’ of policy (iii) if applicable, the country or region that a policy is targeted toward (iv) if applicable, the type of people or resources a policy is targeted toward and (vi) if applicable, when a policy is slated to end. The CIHI descriptions take a regularized format in which the government initiating the policy is clearly specified, the policy type is described and the end date of a given policy is recorded if applicable. With regards to the other datasets, we were unable to find any documentation that suggested that text descriptions should follow a standardized format nor were we able to find evidence of any by reading through a sample of the text descriptions themselves.

For each dataset, we randomly selected one description that accorded to the average description length for that dataset to illustrate what kind of information could be gleaned from them in the ‘Example of Average Description’ column in Table 2. These descriptions suggest that while the CoronaNet and CIHI descriptions include information about the date the policy is enacted and the policy initiator, this information is not always reliably made available for descriptions from other datasets. While this information is generally also subsequently captured in separate variable fields, having detailed textual descriptions are important for helping to adjudicate whether the subsequent coding of these separate policy dimensions is accurate or not.

While it would be useful to have a similar quality assessment for other variables of each dataset, as far as we know, only the CoronaNet dataset provides an empirical assessment of the quality of its data. CoronaNet implements a multiple validation scheme in which it samples 10% of its raw sources for three independent coders to separately code. If 2 out of 3 of the coders document a policy in the same way, then it is still considered valid. Though data validation is still ongoing, preliminary data from Table 3 suggests that inter-coder reliability is around 80% for how its policy type variable is coded, a level which is generally accepted to be indicative of high inter coder reliability15,16,17 An exception to the generally high validity of the policy type variable is the relatively poor coder interreliability for the ‘Health Testing’ and ‘Health Monitoring’ categories. This is likely related to changes in the CoronaNet taxonomy, which while important to make to better adapt to the changing policy-making environment, also increases the dirtiness of the data. A full accounting of taxonomy changes can be found by accessing the CoronaNet Data Availability Sheet https://docs.google.com/spreadsheets/d/1FJqssZZqjQcA-jZhRnC_Av9rlii3abG8r7utBeuzTEQ/editgid=1284601862.

Other external datasets have likely faced similar issues which subsequently affect their data quality although we were unable to locate public documentation of these changes. Note however, if there were any taxonomy changes for OxCGRT or HIT-COVID, they are likely recoverable from their Git repository histories. The closest similar information that other datasets provide on data quality are with regards to their cleaning procedures. More information on the steps other datasets took to ensure data quality can be found in their respective documentation (see: CoronaNet6; ACAPS2, OxCGRT10, HIT-COVID8 and the WHO PHSM18; no documentation on data quality procedures was found for CIHI). Given that a number of external trackers stopped their data collection efforts as well as the relatively high level of data quality of the CoronaNet data for the dimensions that we have information on, we can cautiously infer that harmonizing external data to the CoronaNet dataset will help improve the quality of the subsequently harmonized data.

Data completeness is also an important factor in a dataset’s overall quality. The more complete a datatset is, the more accurate subsequent analyses based on this data can be. All datasets harmonized here are by definition incomplete given that they made their datasets publicly available while their data collection efforts are ongoing. This issue is compounded by the fact that many datasets have had to stop or substantially slow their data collection efforts, particularly ACAPS, HIT-COVID, WHO CDC and COVID AMP. Because policies often continue past the lifetime of the group collecting the data itself, issues of data incompleteness only grow over time for datasets that stop collecting data. While a full assessment of the completeness of each dataset is not possible (one would need a perfectly complete dataset in order to judge the completeness of other datasets) in Table 4 below, we provide some sense of each dataset’s relative completeness by assessing how many policies lack end dates in terms of the raw data and the harmonized data as well as the average start and end dates of policies and the last submission date of a given policy.

The first column, ‘Missing End Dates (Total Raw)’ shows that on an absolute basis, CoronaNet has by far the greatest number of missing end dates. However this large number is largely a function of the large size of its dataset (see Table 6 for information on size of different datasets). When we turn to the second column ‘Missing End Dates (% Raw)’, which assesses the percentage of end dates missing from the data before it is manually assessed for data harmonization, the table shows that following ACAPS and HIT-COVID which, as previously mentioned, do not collect information on any end dates at all, CIHI has the highest percentage of missing end dates while the OxCGRT data has the lowest percentage. However, this column should be contrasted with the subsequent one, ‘Missing End Dates (% Harmonized)’ (note that the ‘raw’ version of the data presented here corresponds to ‘Step 3’ of the data and the ‘harmonized’ version of the data presented here correspond to ‘Step 5’ of the data. See Table 6 for a precise breakdown of this data and the methodology section for more information as to how the data was processed during this different steps), which shows the prevalence of missing end dates for policies that have been assessed for harmonization. Here we can see that both ACAPS and HIT-COVID have improved in terms of the prevalence of missing data — this suggests that while they themselves did not systematically gather information on end dates, it was possible for research assistants to recover this information from the raw sources that they were based on during the harmonization process.

The difference between what was reported in the raw data and what was assessed during the harmonization process is particularly drastic in the case of OxCGRT data and given that it suggests that the OxCGRT data is of substantially poorer quality than it appears, it deserves some additional attention. In particular, though OxCGRT was originally assessed as having missing end dates for around 3% of the data when based on the raw data, this percentage explodes to nearly 40% during the harmonization process. A likely explanation for this discrepancy is that the OxCGRT data is collected as an ordinal index in a panel form. To take border policies as an example, the OxCGRT index for border closures takes a value of 3 if borders are closed to all countries and a 4 if it is closed to all countries. If country X (i) only bans travel from country A in March 2020 (ii) then only bans travel from country B (lifting the ban for country A) in April 2020, and finally (iii) bans travel all countries in May 2020, it will take on a value of 3 according to the OxCGRT in March and April of 2020 and a value of 4 in May of 2020. However, it is not necessarily the case that the OxCGRT data will accurately record the end date of the travel ban against country A since for the purposes of its index, the same value of 3 is maintained throughout March and April. Note that generally, all of OxCGRT ordinal indexes follow a similar logic insofar as they document whether a restriction applied to all, none or some but do not provide further specifics when the restriction only applies to some. Such lapses in documentation likely explain the comparatively high number of missing end dates for the OxCGRT data.

Overall, when considering the numerous different dimensions of the missing end dates problem, our analysis suggests that COVID AMP and CoronaNet have the highest data quality in terms of having the lowest percentage of data without missing end dates, though COVID AMP’s relatively better performance is likely a function of the smaller size of its dataset. All datasets however, are evaluated as having a more severe problem with missing end dates when they are being assessed for harmonization, rather than in their raw form, with the exception of ACAPS and HIT-COVID which did not systematically collect data on end dates. This discrepancy underscores the challenge in harmonizing dirty data. Note that there is no value given for CoronaNet in terms of the percentage of missing end dates for the harmonized data because the harmonized and raw versions of the dataset are identical for CoronaNet.

Meanwhile, based on the raw version of the data, though the average start date and end dates for all datasets center around the last half of 2020, the earliest average start dates are found in ACAPS, and HIT-COVID, with OxCGRT, CIHI and WHO EURO being relatively farther along and CoronaNet, CDC and COVID AMP in the middle of the pack. Meanwhile WHO CDC and WHO EURO have the earliest average end dates while OxCGRT, CIHI and CoronaNet have the latest average end dates. The last submission date (relative to September 2021 when the data was last retrieved for all datasets except CoronaNet) shows when datasets have stopped or slowed their data collection efforts. Overall then, this table suggests that data harmonization of these 7 other datasets into the CoronaNet dataset may substantially raise the data completeness of the CoronaNet dataset.

As outlined above, all datasets considered in this paper suffer in various degrees from problems of miscoded or missing or incomplete data. However, though dirty data substantially raises the complexity and challenge of accurate data harmonization, the data harmonization process can also improve the quality of such data, which we will discuss in more detail later on.

The challenge harmonizing data with missing information on original sources

Given both the challenges in harmonizing (i) data coded from multiple different taxonomies as well as (ii) dirty data, it is essential to have access to the original raw source of data of a given policy to harmonize the data accurately. Original sources are necessary to substantiate the content or nuances of a given policy or to resolve any confusion or disagreement about a given coding decision.

In Table 5, we illustrate differences among each dataset in terms of how they make source data available (Source Data) and how many observations do not have any source data attached to it (Missing Links (Total)). The table also shows, relative to external data that has already been assessed for harmonization, the percentage of observations that have been found to be based on sources with dead links for which corroborating information was unable to be found after a good faith effort (Unrecoverable links (Percent of total harmonized)) as well as the percentage of observations which have been found to be based on dead links but for which corroborating information was subsequently recovered (Recovered Links (Percent of total harmonized)).

We find that while all datasets provide reference to the URL links used to code a given policy, only CoronaNet, COVID AMP and HIT-COVID also provide links to static PDFs of raw sources which ensure that this information can continue to be available in the future. Note however, COVID AMP has around 150+ observations which only have a URL link and no PDF link attached to it while early observations entered into the HIT-COVID dataset also only have URL links with no accompanying PDF links. With regards to the extent to which a given observation is missing a URL or PDF link to its raw source, the WHO EURO and OxCGRT datasets have the most number of missing links while this is not an issue for the CoronaNet and CIHI datasets. Meanwhile, based on the amount of external data that has been harmonized thus far, around 10.2% of the external data is based on links that were dead which were not possible to recover corroborating information for. This was a particular problem for the WHO EURO and WHO CDC datasets though not an issue for the CIHI or COVID AMP datasets. Meanwhile around 4.7% of the external dataset assessed for harmonization to date, were based on dead links but for which it was possible to recover corroborating information. Because these data points are recoded using the CoronaNet methodology, PDFs of these recovered links were also uploaded, ensuring that they will continue to be preserved for future records. Observations coded by the WHO EURO database were found to be particularly recoverable. Note that we do not make an assessment for unrecoverable or recovered links for CoronaNet because the CoronaNet methodology ensures that PDFs are always saved (the data is collected via a survey and uploading a PDF is mandatory for a policy response to be considered valid). All told, at least 17% of the external data (3% of the external data have no links, 10.2% of the data are based on links with unrecoverable information and 4.7% of the data are based on links with recoverable information) are based on data with some issues with regards to their original sources, which only increases the challenge of smoothly harmonizing information from different datasets.

COVID-19 PHSM Data Harmonization methodology overview

The challenges posed by harmonizing multiple complex taxonomies of dirty data based on inconsistently preserved original sources led us to the conclusion that ultimately, only manual harmonization would allow us to harmonize data from different PHSM trackers in a way that would ensure high data quality and validity. Given the sheer number of policies in the external dataset however, to the extent possible, we sought to support these manual efforts with automated tools to harmonize data across 8 different datasets into the taxonomy for capturing COVID-19 PHSM developed by the CoronaNet Research Project. To that end, we followed the methodology laid out in Fig. 1. That is, after we evaluated the set of COVID-19 PHSM data to harmonize, we made taxonomy maps between the different external data and the CoronaNet taxonomy (Step 1), performed basic data cleaning as well as data trimming of policies from the external dataset irrelevant to the CoronaNet taxonomy (Step 2), and automatically deduplicated a portion of the external data (Step 3). After having piloted manual harmonization for a sample of the data (Step 4), we are currently manually harmonizing the remaining external data into the CoronaNet dataset (Step 5).

PHSM Data Harmonization Process. This figure visualizes the volume of data processed across the different steps of our harmonization process for a given PHSM dataset. During Step 1, the taxonomy for each dataset is mapped into the CoronaNet taxonomy and represents the absolute amount of data that is possible to harmonize. During Step 2, basic cleaning and subsetting of each dataset is performed in order to remove observations that are clearly not mappable into the CoronaNet taxonomy. During Step 3, an algorithm is employed to remove as many duplicate observations as possible. Step 4, not pictured, refers to our pilot harmonization efforts for select countries and datasets. Step 5 refers to our ongoing efforts to manually harmonize the remaining data. Please see the methodology section for more details.

Table 6 provides a numerical breakdown of how different data have been processed along these steps and an overview of our harmonization efforts to date. It shows that after preprocessing and standardizing the raw data using automated methods (Step 1 through Step 3), there remain 150,052 policies from the 7 datasets external to CoronaNet to harmonize manually (Step 5). While Step 5 is still ongoing, more than 50% of the external policies have been assessed for whether they overlap with the CoronaNet data or not and around 36% has been assessed for harmonization into the CoronaNet dataset. Policies recoded into the CoronaNet dataset originally found from these external datasets can be identified in our publicly available dataset (see https://www.coronanet-project.org) using the ‘collab’ and ‘collab_id’ fields which refer to the external dataset source and original unique id respectively. For a fuller accounting of our data harmonization methodology, please see the methodology section of this article.

Assessing the Value of Harmonizing COVID-19 PHSM Data

Given the apparent intricacy of harmonizing data, to say nothing of harmonizing complex, unclean and incomplete data, it is easy to miss the forest for the trees. As such, in this section, we take a step back in order to provide an assessment of the overall value of harmonizing COVID-19 PHSM data. To do so, in the following we draw from guidance we developed in a separate commentary12 to explore the benefits, costs, limitations, necessary resources and alternatives to data harmonization of PHSM data.

What can be gained from data harmonization?

To our knowledge, no individual effort to document PHSM has been able to do so for all countries. Indeed, though at the time of writing, there are 145k+ observations unique to the CoronaNet dataset (of the 180k+ observations available in total, which includes harmonized data), we identified 150,052 observations for the 7 datasets external to CoronaNet combined for data available through September 2021. According to our efforts so far, around 83% of external data do not overlap with the CoronaNet dataset, and of these around 45% can be recoded, suggesting there are potentially 55k additional observations to recode.

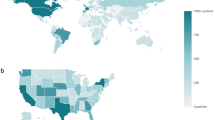

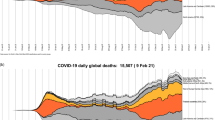

Data harmonization would thus lead to a dataset that is more complete and consistently coded across time and space then is currently available. Indeed, Fig. 2 shows that while most datasets have fair coverage of PHSM until the summer of 2020, with data from CoronaNet being especially rich, data after this time is limited especially for trackers that stopped data collection (e.g. HIT-COVID, ACAPS). OxCGRT in comparison to other datasets, has been able to document more policies for later months.

Meanwhile Fig. 3 illustrates differences in the number of policies captured across continents. Clearly, all trackers have asymmetrically focused on countries in Europe and North America.

While data harmonization cannot compensate for this relative unevenness in data coverage, it can significantly improve coverage of non-European and non-North American countries on an absolute level.

Moreover, as Fig. 4 shows, most external datasets either focus on gathering national-level data for countries around the world or subnational data for a more limited number of countries, but rarely both.

As such, data harmonization efforts will substantially improve the availability of PHSM data initiated at the national level and to some degree, the provincial level as well.

Overall, data harmonization greatly advances the completeness of PHSM data on a number of dimensions, including time, space, and administrative levels. Moreover, our data harmonization methodology also allows each policy in the external dataset to be evaluated independently, which can improve the quality of the PHSM data overall. This is all the more valuable given that while PHSM data has generally been made publicly accessible in close to real time because of the emergency nature of the pandemic, research groups have not been able to guarantee data cleanliness (see the ‘Challenges of Data Harmonization’ section). Progress on these dimensions greatly improve the research community’s ability to conduct analyses on the COVID-19 pandemic which can yield results with both greater external validity and generalizability (in e.g. cross national analyses) as well as analyses that can yield results with greater internal validity and with fewer potential confounders (in e.g. subnational analyses).

What can be lost from data harmonization?

The main loss when harmonizing PHSM data into the CoronaNet taxonomy is with regards to measures that CoronaNet does not capture and for which, the benefit of its relative fine-grained taxonomy are moot. The most prominent of these measures are the economic ones, such as business subsidies or rental support. For measures for which there is conceptual overlap between the CoronaNet taxonomy and other taxonomies, the fact that the data were harmonized to the CoronaNet taxonomy, which by far has the most detailed taxonomy of the 8 datasets, minimizes the extent to which information was lost from the harmonization process.

Meanwhile, the benefits of data harmonization aside, there can be real scientific value when different researchers approach similar research topics with different research designs19. In support of this, we further make taxonomy maps between the CoronaNet taxonomy and the taxonomy of each respective dataset publicly available through our Supplementary Information, Section 2. These maps can not only help users better understand how to use different datasets, but can also provide robustness checks of COVID-19 related research and bolster the transparency and replicability of our data harmonization efforts.

What are the limits of data harmonization?

While we believe that our efforts to harmonize data across 8 different datasets will provide the most complete picture possible of COVID-19 PHSM, they will still fall short of a dataset that will reflect all COVID-19 PHSM ever implemented. Though it is inherently impossible to assess how much data will still be missing after data harmonization is finalized — a complete dataset needs exist to make this assessment and it does not — we offer some insights as to where and why data may be incomplete. Specifically, our complete, harmonized dataset will still (i) lack information on subnational policy making for a number of countries as well as low state capacity governments and (ii) be unable to ensure complete data cleanliness.

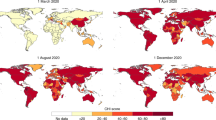

Our review of projects gathering COVID-19 policies suggests that most projects focus on national level policies, limiting what data harmonization can achieve. Table S1 in the Supplementary Information shows the coverage of data on subnational policy making for all datasets that we know to be in existence, using data available at the time of writing. Most datasets aside from CoronaNet do not collect subnational data and to the extent that they do, they overwhelmingly focus on the United States. Meanwhile, though the CoronaNet data does capture subnational data for some countries, given the volume of policies generated and limited resources, we are only able to capture this data for reduced time periods. However, available evidence suggests that subnational policy-making has taken place in many other countries beyond the ones listed in Table S1 in the Supplementary Information. Data from both the Varieties of Democracy Pandemic Backsliding Project (PanDem)20 as well as CoronaNet’s internal surveys suggest that there was subnational policy making in anywhere from 30 to 90 countries at any given point in time, as visualized in Figs. 5 and 6. Note that the CoronaNet internal surveys followed the same coding scheme as PanDem’s [subvar] variable; at the time of writing, CoronaNet’s internal assessment covers 115 countries for 6 quarters while PanDem’s data covers 144 countries for 5 quarters, with 91 countries covered in common across both.

Meanwhile, we also identify how issues of low state capacity can make it difficult to document COVID-19 policies at all. Some problems that CoronaNet researchers have reported include:

-

No announcement of policies in any official government sources: In the absence of any official government sources about a policy, research assistants must rely on media reports which can often have conflicting information about the nature or timing of a given policy. It is also not uncommon for governments to announce policies on social media without providing further information in the form of official government sources.

-

Policies being communicated in mediums other than the Internet: In places with low internet connectivity, governments have been known to make policy announcements in non-digital forms used most prevalently by the local population e.g. radio, local news bulletins, town criers.

-

NGOs and/or IOs implementing policies that are normally under the purview of governments: When governments lack the capacity to respond the COVID-19 pandemic, NGOs or IOs have been known to step in. While it is possible to capture these policies, policy trackers to date have largely focused on documenting government initiated policies.

In short, large scale data collection efforts of PHSM data have been predicated on: (i) the capacity to capture PHSM polices made at all different administrative levels (ii) the availability, access and durability of web-based documentation on PHSM policies and (iii) the assumption that governments are the primary policy responders to the COVID-19 pandemic. However, these conditions are not always present in low state capacity states. While the enormous undertaking described here will greatly advance our collective knowledge of COVID-10 PHSM policies, much more funding and support is needed to document all PHSM.

Finally, as we elaborate more fully in the ‘Challenges of Data Harmonization’ section, PHSM data is unusually challenging to harmonize because the emergency nature of the pandemic gave rise to multiple complex taxonomies and corresponding datasets that have had varying levels of quality, completeness, and underlying source material.

While we employ some automated processes to harmonize taxonomies and deduplicate data, our methodology is overwhelming reliant on the analog process of recoding external data based on the original sources found in the external data rather than relying directly on the observations available in the external data itself. In doing so, we can ensure that whatever errors might have been made in the automated taxonomy harmonization processes, which itself was adjusted to account for systemic errors in the external data (see the ‘Step 1. Making Automated Taxonomy Maps’ section below), can be rectified manually later. Meanwhile we have also additionally vigorously tested our automated deduplication strategies to ensure that we are biased towards keeping duplicates to be removed later manually rather than mistakenly removing observations that are not duplicates (see the ‘Step 3. Automated Deduplication’ section below). However, despite out best efforts, we can nevertheless not guarantee that the likely 55k+ observations that we will eventually recode from the external datasets into the CoronaNet taxonomy will be completely free of error.

What cooperative resources are available for harmonizing data?

External data partners were either co-hosts or participants in the two conferences hosted by CoronaNet: the PHSM Data Coverage Conference (February and March 2021) and the PHSM Research Outcomes Conference (September 21, 2021). During both conferences though especially the first, trackers discussed common challenges and solutions to their data collection efforts, especially with regards to taxonomy and organization. Both the planning of the conferences and conferences themselves helped increase mutual understanding and collegiality among trackers1. For more information, please see https://covid19-conference.org or the shared statement written by conference participants outlining a framework for cooperation and collaboration (PHSM 2021).

Meanwhile, bilateral exchanges also played an important role in identifying and overcoming specific challenges with regards to mapping and harmonizing data for a given dataset. For instance, our ability to harmonize the CIHI dataset, was contingent on close cooperation with the CIHI team. Aside from explicit coordination on COVID-19 vaccines taxonomy, three volunteer researchers for CoronaNet were contracted to work on the CIHI database. This shared expertise greatly facilitated our ability to build a taxonomy map between CIHI and CoronaNet and to pilot our harmonization efforts.

Similarly, researchers from both CoronaNet and HIT-COVID were involved in building the HIT-COVID taxonomy map, which greatly facilitated the mapping process. They were also involved in piloting the data harmonization process, which also increased the speed at which it could be done. The fact that HIT-COVID and CoronaNet built their taxonomies for COVID-19 vaccine policies with mutual feedback from the other also facilitated the mapping of this particular policy type.

Meanwhile, ACAPS, COVID AMP, and OxCGRT generously made themselves available for clarifying confusion or misunderstandings about their respective taxonomies which helped make the mappings more accurate. However, despite repeat inquires to the WHO PHSM dataset to initiate such cooperation, we found them to be unresponsive which made the taxonomy mapping exercise with the WHO PHSM dataset comparatively difficult. Overall, we found that greater communication and cooperation between leaders of different datasets was an important intangible in facilitating the data harmonization process.

What are alternatives to data harmonization?

While in this paper we concentrate on presenting our rationale and methodology for qualitatively harmonizing PHSM data, in Kubinec et al. (2021) we introduce a Bayesian item response model to create policy intensity scores of 6 different policy areas (general social distancing, business restrictions, school restrictions, mask usage, health monitoring and health resources) which combines data from both CoronaNet and OxCGRT21. As this latter paper shows, researchers should be cognizant that while statistical harmonization can be an effective form of data harmonization, the resulting indices or measures may sometimes need to be interpreted or used differently than the underlying raw data. For example, our policy intensity scores for mask wearing can be interpreted as the amount of time, resources and effort that a given policy-maker has devoted to the issue of mask restrictions in a given country compared to that of other countries. This is different from what the underlying raw data measures: whether a given mask restriction is in place or not. Researchers choosing to engage in statistical harmonization should thus provide a thorough accounting of the underlying concept that they seek to measure and a corresponding justification of why their statistical method provides a good operationalization of it.

Discussion

This article presents our efforts to harmonize COVID-19 PHSM data for the 8 largest existing datasets into a coherent, unified one, based on the taxonomy developed by the CoronaNet Research Project. To do so, we provide a thorough accounting of the various challenges we faced in harmonizing such data as well as the methodology we used to address these challenges. Along the way, we open a window into understanding the strengths and weaknesses of different COVID-19 PHSM datasets and create a new path for future researchers interested in harmonizing data more generally to follow.

We also show that there are substantial gains to harmonizing PHSM data across 8 different datasets, particularly in terms of the time, spatial and administrative coverage of PHSM data. While some conceptual diversity is always lost when harmonizing data, we argue that by harmonizing PHSM data to the CoronaNet taxonomy, this issue is minimized due to the CoronaNet taxonomy’s comparative richness. Data harmonization of these 8 datasets will still fall short of a complete PHSM dataset, especially for countries for which there is a great deal of subnational policy making or low state capacity but this effort nevertheless will provide the fullest picture yet of COVID-19 government policy making. Moreover, it substantially improves upon the existing WHO PHSM effort to harmonize data both in terms of scale and quality (see Supplementary Information, Section 3). More resources would allow us to complete data harmonization more quickly, which given the ongoing nature of the COVID-19 pandemic, would be welcome. However, even if data harmonization is completed only after the pandemic is overcome, it will still present a tremendous historical resource for generations of researchers.

Our experience in data harmonization has underscored for us that the production of data may be understood not only as a mere reflection of reality, but a framing or even creation of reality. That is, by producing certain measures and not others, data can frame certain aspects of the world as more or less deserving of attention. Meanwhile creating a measure in the first place can bring forth concepts that previously did not exist in the public consciousness22. Harmonizing data cannot escape these dynamics and in fact invites greater scrutiny of them as it adds another layer of negotiation and complexity in terms of determining what is worthy of being measured and how to measure it. Undergirding all of this are social processes that produce data in the first place and which can have important influence on what data ultimately is or is not harmonized23. Though in a number of fields, researchers have developed novel platforms that aim to help facilitate data harmonization24,25, ultimately effective data harmonization requires researchers to identify clear goals for their harmonization process, a high level of attention to detail in designing a rigorous plan to carry out, and a robust working culture to ultimately successfully implement it. We hope our experience with PHSM data harmonization can provide a roadmap for researchers embarking on similar journeys for their own research.

Methodology

COVID-19 PHSM Harmonization Methodology

In this section, we provide greater detail for the 5 step methodology we employed to semi-manually harmonize data from 7 PHSM datasets into the CoronaNet taxonomy for policies implemented by governments before September 21, 2021. We start with outlining each of these different steps before expanding on each step in separate subsections later on. A note to the reader: unless explicitly noted, any subsequent analysis or description of the external data refers to data recorded by September 21, 2021.

-

1.

Step 1: Create taxonomy maps for each external dataset and CoronaNet, which we make publicly available (see Supplementary Information, Section 2). Based on these maps, we then mapped data available for each external dataset into the CoronaNet taxonomy

-

2.

Step 2: Perform basic cleaning and subsetting of external data to only observations clearly relevant to existing CoronaNet data collection efforts.

-

3.

Step 3: Remove a portion of duplicated policies using customized automated algorithms with respect to:

-

Duplication within each respective external dataset

-

Duplication across the different external datasets

-

-

4.

Step 4: Pilot our data harmonization efforts for a select few countries (over the summer of 2021)

-

5.

Step 5: Release the resulting curated external data to our community of volunteer research assistants to:

-

Manually assess the overlap between PHSM data found in CoronaNet with that found in the ACAPS, COVID AMP, CIHI, HIT-COVID, OxCGRT, the WHO EURO and WHO CDC datasets respectively and;

-

Manually recode data found in the external datasets that were not already in the CoronaNet dataset into the CoronaNet taxonomy.

-

Step 1. Making Automated Taxonomy Maps

Given the variety and complexity of approaches that different groups have taken to document PHSM policies, asking research assistants to not only become experts in one taxonomy but multiple taxonomies would have been unfeasible. Instead, we created maps between the CoronaNet taxonomy and other datasets so that all datasets could be understood in the CoronaNet taxonomy for the following principal fields:

-

Policy timing

-

The start date of the policy

-

When available, the end date of policy

-

-

Policy initiator

-

The country from which a policy is initiated from

-

When available, the ISO-2 level region from which a policy is initiated from

-

-

Policy Type

-

Broad policy type

-

When possible, the policy sub type

-

-

Sources/URLs

-

URL links

-

When available, links of original pdfs

-

-

Textual description

When possible, other fields, such as the geographic and demographic targets, are also matched. As outlined in the ‘Challenges of Data Harmonization’ section, because of conceptual and organizational differences across different taxonomies, one to one mappings were not always possible especially with regards to the substance of COVID-19 policies. In such cases, one to two or one to three mappings were suggested. For the COVID AMP and WHO PHSM mappings (relevant for the WHO EURO and WHO CDC datasets), we also employed machine learning models to predict the most likely policy type an observation was likely to be in the CoronaNet taxonomy based on the textual description of the policy. Both because one to one mappings based on the taxonomies themselves were often not possible and because of issues with dirty data, in some cases, the mappings were often adjusted to so that they were based not only on the formal taxonomy but also on when certain key words were used in the dataset. For example, though policies originally coded in the WHO taxonomy of ‘Social and physical distancing measures (Category) - Domestic Travel (Sub-Category) - Closing internal land borders (Measure)’ might reasonably map onto CoronaNet’s ‘Internal Border Restriction’ policy type, when the word ‘quarantine’ appears in the text description of such policies, we reclassify them in the taxonomy map as a ‘Quarantine’ policy instead. As such, these taxonomy mappings are not always based strictly on how different policy types theoretically should map onto each other, but attempt to account for mistakes and miscodings in the external data to create the best mapping possible between the existing data and the CoronaNet dataset. In this first automated step, our aim was to ensure that most mappings were correctly mapped but did not take pains to make sure that every mapping was correctly mapped, because, as we explain later on, each observation was ultimately assessed and evaluated for harmonization by human coders who are better equipped to make these more fine-grained and nuanced judgements.

As part of this mapping exercise, in order to keep track of the original dataset that each observation came from, we also ensured that each record was associated with its own unique identifier (unique_id). In some cases, reformatting the data also impacted how the unique_id assigned by the original dataset was formatted, though we ensured that our transformation method nevertheless still allows others to trace a given observation back to the original dataset. For example, in HIT-COVID, border restrictions for people leaving or entering a country are coded in separate observations. However, in CoronaNet, if a policy for restricting both entry and exit to or from the same countr(ies) on the same date, they are coded as one observation. In this case, the HIT-COVID data is collapsed to fit into one observation and the unique identifier is also collapsed such that two or more of the original unique identifiers are collapsed into one when they are mapped to the CoronaNet taxonomy. In the case of OxCGRT, no unique identifiers are provided in the original dataset and in this case we create them using a combination of the policy indicator, date, country and where applicable, province.

Please see the Supplementary Information, Section 2, for more information about how to access the specific taxonomy mappings we created between CoronaNet and other datasets.

Step 2. Basic cleaning and subsetting of external data

With the help of the taxonomy maps, we were able to roughly transform the external datasets into the CoronaNet taxonomy. Before moving forward with manual data harmonization, we first implemented some basic cleaning and subsetting of the data. Because, as discussed in the ‘Challenges of harmonizing different taxonomies’ subsection above, most datasets do not use a consistent reference for identifying policies originating from the ISO-2 provincial level, we created code to clean these text strings up as much as possible. Given the sheer number of observations that needed such cleaning, we could not ensure full standardization for these text strings. However, we took pains to ensure that the 430+ provinces for which CoronaNet systematically seeks to collect subnational data for were consistently documented in the external data. Specifically, these are subnational provinces for the following countries: Brazil, China, Canada, France, Germany, India, Italy, Japan, Nigeria, Russia, Spain, Switzerland, and the United States.

Next we subset the external data to exclude regions that CoronaNet is currently not collecting data for. In particular, we excluded observations from the COVID AMP dataset documented at the county or tribal level in the United States as well as observations for Greenland, the United States Virgin Islands and Guam from our harmonization efforts. In addition, we also subset the external dataset to exclude policy types that CoronaNet is currently not collecting data for, in particular economic or financial measures taken in response to the pandemic.

Step 3. Automated Deduplication

After making taxonomy maps for each external dataset to the CoronaNet taxonomy and conducting some basic cleaning of the data, we also took steps to deduplicate the data using automatic methods to the extent possible. Deduplication was assessed along three criteria: i) duplicates within each external dataset ii) duplicates across the external datasets and iii) duplicates between the CoronaNet and external datasets. We outline the steps we took to assess the level of duplication along each of these criteria and when possible, to remove duplicates accordingly. All in all, we took a conservative approach in our automated deduplication efforts insofar as we rather left many potential duplicates in the dataset rather than removed too many policies which may have not been duplicates.

Step 3a. Deduplication within External Datasets

Given the sheer amount of data collected and coordination needed to collect such data, it is not surprising that there is some duplication within datasets. Duplicates can occur for a number of reasons including (i) structural differences between taxonomies (ii) the lack of one to one matching between taxonomies (e.g. a policy that may be coded as several policies in one taxonomy may only be coded as one policy in the CoronaNet taxonomy) (iii) coder error.

We first needed to deal specifically with duplication that occurs as a result OxCGRT’s method of collecting data to fit a panel data. In particular, OxCGRT coders are generally instructed to provide an assessment of whether a policy was in place or not for each given day that they are either recording the policy or for which they have evidence for a policy being in place or not. For instance, if a coder finds that the same policy has been in place over several weeks, the same textual description may be copied and pasted into the notes section of the dataset for each day that the coder happened to review the status of policy-making for that indicator, even if the ordinal indicator itself does not change. When initially extracting and reshaping the OxCGRT data into an event dataset format, each textual description is initially retained, even though it may not contain new information. To deal with this, we built a custom function to identify policies that repeated the exact same description, keeping the ‘latest’ instance of the policy description and removing earlier ones (see the OxCGRT-CoronaNet taxonomy map available through the Supplementary Information, Section 2, for more detail).