Abstract

We present a new large-scale, three-fold annotated microscopy image dataset that aims to advance plant cell biology research by enabling the study of cell microstructures, including cell size and shape, cell wall thickness, and intercellular space, in a deep learning (DL) framework. The dataset includes 9,811 unstained and 6,127 stained (safranin-o, toluidine blue-o, and lugol’s iodine) images with three-fold annotation: physical, morphological, and tissue grading, based on tuber weight, section area, and tissue zone respectively. In addition, we prepared ground truth segmentation labels for three different tuber weights. We validated the pertinence of the annotations by performing multi-label cell classification with a convolutional neural network (CNN), VGG16, on unstained and stained images. The accuracy reaches up to 0.94 and the F2-score up to 0.92. Furthermore, the ground truth labels have been verified by semantic segmentation using the UNet architecture, which yields a mean intersection over union of up to 0.70. Overall, the results indicate that the data are well suited to DL-based analysis and could enrich the domain of plant cell microscopy.

Measurement(s) | phenotypic annotation • plant cell classification • plant cell segmentation • plant morphology trait • plant cell |

Technology Type(s) | machine learning • image segmentation • bright-field microscopy |

Factor Type(s) | physical grading of plant tissue • morphological grading of plant tissue • tissue grading of potato tuber images |

Sample Characteristic - Organism | Solanum tuberosum |

Machine-accessible metadata file describing the reported data: https://doi.org/10.6084/m9.figshare.12957506


Background & Summary

Microscopy image analysis using machine learning (ML) techniques advances the understanding of several characteristics of biological cells, ranging from the visualization of biological structures to the quantification of phenotypes. In recent years, deep learning (DL) has revolutionized ML, especially in computer vision, with breakthroughs in several image recognition tasks including object detection and medical and bio-image analysis. In general, traditional ML applies complex statistical techniques to a set of images, and its recognition efficiency relies heavily on handcrafted data features; DL, in contrast, processes the raw image data directly and learns the data representation automatically. Its performance, however, depends strongly on a large number of diverse images with accurate and applicable labelling. Following this trend, DL is emerging as a powerful tool for microscopy image analysis tasks such as cell segmentation, classification, and detection, by exploring the dynamic variety of cells. Moreover, a DL pipeline can reveal hidden cell structures, such as single-cell size, number of cells in a given area, cell wall thickness, intercellular space distribution, and subcellular components and their density, from microscopy images by extracting complex data representations in a hierarchical way. It also greatly reduces the burden of feature engineering in traditional ML.

Several works in the cell biology and digital pathology domains have provided quantitative support for automatic diagnosis and prognosis by detecting mitosis, nuclei, cells, and cell counts in breast cancer1,2, brain tumour3, and retinal pigment epithelial cell4 images within a DL framework. DL networks have likewise been applied successfully in plant biology for stomata classification, detection5,6,7, and counting8, plant protein subcellular localization9, xylem vessel segmentation10, and plant cell segmentation for automated cell tracking11,12. The successful application of DL-based microscopy image analysis therefore requires a large number of annotated microscopy images and their ground truth13. There are numerous publicly available microscopy image datasets, mostly medical images for DL-based diagnosis and prognosis, such as the Human Protein Atlas14, H&E-stained tissue slides from the Cancer Genome Atlas15, the DeepCell Dataset16,17, mitosis detection in breast cancer18, and lung cancer cells19. In contrast, very few publicly accessible microscopy image datasets of plant tissue cells are suitable for the DL framework. Furthermore, the existing datasets contain a limited number of diverse images with proper annotation. In this context, we have generated an optical microscopy image dataset of potato tuber with a larger number of diverse images and appropriate annotation. This publicly available dataset will be beneficial for analysing plant tissue cells in great detail with DL-based techniques.

Microscopy image analysis has become more reliable for understanding the structure, texture, and geometrical properties of plant cells and tissues, which have a profound impact on botanical research. Such studies help to interpret the variety of plant cells, tissues, and organs by discriminating cell size, shape, orientation, cell wall thickness, the distribution and size of intercellular spaces20, tissue types, and mechanical21,22 properties such as shear and compressive stiffness. For instance, the shape and size of cells help determine the size and texture23 of a plant organ; tissue digestibility and plant productivity24,25 are controlled by cell wall thickness; the mechanical properties of the cell wall play a crucial role in plant stability and resistance against pathogens26; and the intercellular spaces influence the physical properties of tissues, such as firmness, crispness, and mealiness27. Such analysis has been practised in various domains of plant cell research, such as fruits and vegetables23,28. Microscopy images can be generated in various ways, such as brightfield microscopy, fluorescence microscopy, and electron microscopy, each with its own advantages and disadvantages. Sample preparation is also one of the crucial steps in microscopy image generation; it includes fixation, paraffin embedding, and different staining techniques for better visualization of cell segments. The most widely used stains are safranin-o29,30 and toluidine blue-o31 for visualizing cell walls, and lugol’s iodine32 for starch detection.

In this view, we present a large brightfield optical microscopy image dataset of potato tuber tissues, as potato is one of the principal, highly productive tuber crops and a valuable component of the regular diet. Potato tubers are usually oval or round, with white flesh, pale brown skin, and a bud end and a stem end. The three major parts of the tuber are the cortex, the perimedullary zone (outer core), and the pith (inner core) with medullary rays, which are made up of parenchyma cells. The cell structures differ between tuber varieties33 and even within the same tuber, especially between the inner core and the outer core34. Similar structural differences can also be observed between the stem and bud ends. In addition, cell division and enlargement in various regions play an important role35 in potato tuber growth. Following such variations in cell structure, we have generated a large dataset consisting of 15,938 fully annotated unstained and stained images with three-fold labelling. The labelling is based on tuber size (large, medium, and small), collection area (bud, middle, and stem part), and tissue zone (inner and outer core), and the corresponding gradings are termed physical, morphological, and tissue grading respectively. In addition, 60 ground truth segmentation labels of images from the inner core have been prepared for the different tuber weights. To check the quality of the images, technical validation has been conducted through DL-based classification and segmentation tasks, which showed good recognition performance. This dataset is therefore well suited to studying plant cell microstructures, including cell size and shape, cell wall thickness, intercellular space, starch, and cell density distribution, in potato tubers using a DL-based pipeline. Such properties can be explored explicitly because the dataset includes images from the entire region of the tuber, covering two tissue zones from stem to bud end for different tuber weights.
In addition, the large number of images in this dataset will provide new opportunities for evaluating and developing DL-based plant biology classification and segmentation algorithms. Furthermore, the unstained images, together with the stained ones, will be suitable for developing virtual-staining algorithms in the DL framework. Therefore, the dataset could substantially enrich DL-based microscopy cell assessment in plant biology.

Methods

Potato tuber selection and microscopic specimen preparation

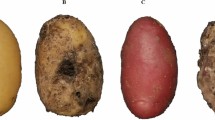

Raw potato tubers (Solanum tuberosum L.) of an Indian variety, Kufri Pukhraj, were chosen for this work. Kufri Pukhraj, an excellent source of vitamin C, potassium, and fibre, is one of the most widely grown commercial cultivars in India. The tubers were collected immediately after harvesting in mid-December 2019 from Kamrup, a district of Assam state, India. Only samples without outer damage were collected, and they were stored at a temperature of 19.2 °C–29.2 °C with 70% relative air humidity. Based on weight, the samples were graded as large, medium, and small, weighing 80–100 g, 40–50 g, and 15–25 g respectively. From each of these groups, 5 samples (15 samples in total) were selected for image generation in the laboratory, where stable room temperature and humidity were maintained. The whole experiment, including collection of the tuber samples and image generation, was completed in 20 days. Tuber samples of the different grades were chosen on alternate days during the experiment.

The major parts of the potato tuber, namely the periderm (skin) with the bud and stem ends, the cortex, the perimedullary zone (outer core), and the pith (inner core) with medullary rays, are displayed in Fig. 1b,c. The periderm, the outermost layer, protects the tuber from dehydration, infection, and wounding during harvest and storage. The cortex, outer core, and inner core tissues appear successively beneath the skin, and starch granules are stored in their parenchyma cells. The thickness of the cortex is about 146–189 µm36, and the largest cells are found here. The outer core occupies about 75% of the total tuber volume and contains the maximum amount of starch37. The innermost region, the inner core, extends from stem to bud end38 along the longitudinal direction, whereas the medullary rays spread toward the cortex. The samples have been collected from the inner and outer core, which cover most of the tuber. Moreover, the cell structures34 and the amount of starch are distinct in these two tissue zones. Similar samples have been collected from three areas, named Z1, Z2, and Z3, as indicated in Fig. 1b. The samples were extracted with a cork borer of 4 mm diameter and rinsed in distilled water. After that, 5 thin sections were collected from the inner core as well as from the outer core of each of the three areas. Therefore, 30 thin sections (5 sections × 3 section areas × 2 tissue zones) have been analysed per tuber sample. To capture images, fresh thin potato sections (i.e., unstained samples) were placed under the microscope. In addition, the samples were stained for better visualization of cell boundaries and subcellular components, especially starch. Safranin-o (1% solution) and toluidine blue-o (0.05% solution) were used to visualize the cell walls, whereas lugol’s iodine solution helped to distinguish starch granules.
An optical microscope (Labomed Lx300, Labomed America) fitted with a smartphone camera (Redmi Note 7 Pro) was used to generate and capture the microscopy images, as shown in Fig. 1f. The brightfield microscopy images were generated using a 10x lens (field number = 18, numerical aperture = 1.25), which provides a field of view (FOV) of diameter 0.18 mm. The smartphone camera was fixed on the microscope eyepiece with an adaptor. Consistent exposure and white balance were ensured by an adequate brightness level of the microscope’s built-in light-emitting diode (LED) and a clear FOV. The exposure time of the smartphone camera was kept in the range of 1/200 s–1/100 s, which provides a satisfactory brightness level, and the focus setting of the camera was locked to maintain a fixed magnification across all the images. The images were captured in the highest-quality JPG format, with a maximum of 10% compression, to keep the image quality reasonably high. The mobile camera was fixed at 3x zoom, which offers a FOV of 890 × 740 µm² with an approximate resolution of 0.26 µm/pixel. With this setting, three images were taken for each field of view by changing the focus distance by 3 µm. Similarly, around 15 images were acquired from each section by continuous precision shifting of the microscope stage in the x-y plane before the samples dried. In total, 9,811 unstained and 6,127 stained images have been captured and saved in JPG format in 24-bit RGB color at a resolution of 3650 × 3000.
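As a rough cross-check of the image scale, the sketch below derives the pixel pitch from the stated FOV (890 × 740 µm) and image size (3650 × 3000 pixels) and converts a pixel measurement to micrometres. It gives roughly 0.24–0.25 µm/pixel, in the same range as the approximate 0.26 µm/pixel quoted above. The function and constant names are hypothetical and not part of the dataset or its tooling.

```python
# Illustrative sketch: numbers are the ones quoted in the text,
# function and constant names are hypothetical.
FOV_UM = (890.0, 740.0)    # field of view (width, height) in micrometres
IMAGE_PX = (3650, 3000)    # saved image size (width, height) in pixels


def microns_per_pixel(fov_um, image_px):
    """Return the (horizontal, vertical) pixel pitch in micrometres per pixel."""
    return tuple(f / p for f, p in zip(fov_um, image_px))


def pixels_to_microns(length_px, scale_um_per_px):
    """Convert a length measured in pixels to micrometres."""
    return length_px * scale_um_per_px


scale_x, scale_y = microns_per_pixel(FOV_UM, IMAGE_PX)

# Example: a cell that spans 400 pixels horizontally.
cell_width_um = pixels_to_microns(400, scale_x)
```

Because the quoted resolution is approximate, any absolute size derived this way should be treated as an estimate; the scale bar on the images is the safer reference.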

Fig. 1. Demonstration of potato tuber anatomy, sample preparation, and image acquisition set-up for microstructure visualization: (a) A potato tuber sample. (b) Longitudinal cross-section of a tuber. For microscopic observation, the samples have been divided into three parts, named Z1, Z2, and Z3, nearer to the bud, middle, and stem respectively, as indicated by dotted lines. (c) Transverse cross-sections of the tuber, where the sample collection areas (inner and outer core) are highlighted by red circles. (d) Tissue samples have been collected from the specified zones using a cork borer of 4 mm diameter. (e) Thin free-hand unstained sections have been obtained. The stained samples have been prepared using safranin-o (1%), toluidine blue-o (0.05%), and lugol’s iodine. (f) Image capturing set-up, in which the smartphone camera has been fixed on the microscope eyepiece with an adaptor. Two types of microscopy images, unstained and stained, have been captured independently without drying the sections.

Image grading

Previous studies identified that potato tuber weight is directly associated with the number of cells and the cell volume in different tissue zones. However, cell number is considered a more significant factor than mean cell volume in tuber weight variation39. Hence, tuber weight has been recognized as one of the important physical parameters for achieving versatility in the image database, and in this work the tubers are categorized by weight into three groups: large, medium, and small. The captured microscopic images are composed of discrete cells with thin non-lignified cell walls surrounded by starch granules40. Within a tuber, the cell size differs considerably between the two tissue zones, the inner and outer core34. In general, the outer core occupies the maximum volume of the tuber and stores the largest number of starch granules as reserve material. In contrast, the inner core cells are smaller34 and have a lower starch content, which makes this tissue zone wet and translucent, as displayed in Fig. 2. Such variation in cell size and starch distribution can be observed in the stem, bud, and middle sections of tubers as well. Therefore, the images have been graded into three categories, namely (1) physical grading, (2) morphological grading, and (3) tissue grading, based on tuber weight, section area, and tissue zone respectively.

Physical grading

Tubers of three different weight ranges have been selected for the image dataset, as tuber weight correlates with the cell features. The three weight groups, large (L), medium (M), and small (S), with weights of 80–100 g, 40–50 g, and 15–25 g respectively, have been considered for this microscopy image dataset. The generated images have been labelled with L, M, or S followed by the sample number 1–5 to distinguish tuber weight along with sample number; for instance, L1 refers to the first sample of a large tuber. The labels associated with weights and related parameters, along with sample numbers, for physical grading are listed in Table 1.

Morphological grading

The bud and stem ends of potato tubers are connected with the apical and basal ends of the shoot respectively. These areas display compositional variations41,42 with distinct cell features. Images of the middle part of the tuber (which separates the bud and stem ends) have been incorporated in this dataset to visualize structural variations along the longitudinal direction. Therefore, for morphological grading, the tubers have been divided into three parts, namely Z1, Z2, and Z3, which specify the bud, middle, and stem areas respectively, as shown in Fig. 1b. The images have been captured from these areas for each physically graded sample and labelled accordingly.

Tissue grading

A significant variation in cell size within the same potato tuber can be observed between the inner core and outer core tissue zones. The cells of the outer core are larger than those of the inner core, and the outer core contains most of the starch material. Therefore, in tissue grading, these two zones have been identified. The images have been captured from these zones for each morphologically graded sample and labelled as IC and OC, which indicate the inner and outer core of the potato tuber respectively. Examples of unstained and stained images of large, medium, and small potato tubers from different section areas and tissue zones are displayed in Figs. 3–5 respectively.

Fig. 3. Examples of unstained and stained images of large (80–100 g) potato tubers. Rows and columns indicate the tissue zones (inner and outer core) and the staining agents respectively. The first column shows the unstained images, whereas the subsequent columns show images stained with safranin-o, toluidine blue-o, and lugol’s iodine. The images are from (a) Bud Region (Z1), (b) Middle Region (Z2), and (c) Stem Region (Z3). Note: the scale for all images is shown at the top-left corner of the unstained image.

Fig. 4. Examples of unstained and stained images of medium (40–50 g) potato tubers. Rows and columns indicate the tissue zones (inner and outer core) and the staining agents respectively. The first column shows the unstained images, whereas the subsequent columns show images stained with safranin-o, toluidine blue-o, and lugol’s iodine. The images are from (a) Bud Region (Z1), (b) Middle Region (Z2), and (c) Stem Region (Z3). Note: the scale for all images is shown at the top-left corner of the unstained image.

Fig. 5. Examples of unstained and stained images of small (15–25 g) potato tubers. Rows and columns indicate the tissue zones (inner and outer core) and the staining agents respectively. The first column shows the unstained images, whereas the subsequent columns show images stained with safranin-o, toluidine blue-o, and lugol’s iodine. The images are from (a) Bud Region (Z1), (b) Middle Region (Z2), and (c) Stem Region (Z3). Note: the scale for all images is shown at the top-left corner of the unstained image.

Database Summary

The dataset contains a total of 15,938 images (9,811 unstained and 6,127 stained). The images are categorized by grading and labelling basis, as listed in Table 2. The first two columns give the grading and the labelling basis, followed by the number of unstained and stained images. The counts for each of the three stains (safranin-o, toluidine blue-o, and lugol’s iodine) are also listed separately.

Ground truth label generation for cell boundary segmentation

Segmentation splits an image into several parts in order to identify meaningful features or objects. In microscopy image analysis, a common problem is to identify distinct parts that correspond to a single cell or to cell components, in order to quantify their spatial and temporal coordination. Image segmentation is also an essential precursor to geometric analysis, such as the measurement of cell size and its distribution. Such a task can be performed manually, but this is very time-consuming, irreproducible, and tedious for larger image sets. It can instead be automated by ML techniques, which require proper ground truth labels. Therefore, we have generated ground truth labels of cell boundaries for the automated segmentation task. The images have been captured from different parts of the tubers, as mentioned earlier, and labelled accordingly. To generate the ground truth labels for cell boundary segmentation, the unstained images of the inner core from the Z2 area have been selected, as cell boundaries are comparatively prominent in this zone owing to the presence of fewer starch granules.

Segmentation of potato cell images can be very challenging because of the complex cell boundaries and the non-uniform image background, which lead to poor contrast between cell boundary and background. Therefore, the ground truth cell boundaries have been generated in three steps: (1) pre-processing, (2) thresholding, and (3) morphological operations. The pre-processing step mainly involves background correction and image filtering. Generally, the uneven thickness of the tuber section results in a non-uniform microscopy image background. To minimize this non-uniformity, the well-known rolling ball algorithm43,44 has been employed. It eliminates unnecessary background information by converting a 2D image \(I(x,y)\) into a 3D surface in which the pixel values are taken as the height. A ball of a certain radius \(R\) is then rolled over the back side of the surface, which creates a new surface \(S(x,y)\). A new image with a uniform background is then created44 as \(I_{new}(x,y) = (I(x,y)+1) - (S(x,y)-R)\). To achieve an optimal image with the most uniform background, the value of \(R\) must be selected carefully. In our work, \(R\) has been kept empirically in the range 30 < \(R\) < 60. Next, for image filtering, a bandpass filter has been used to enhance the cell edges by eliminating shading effects. For this purpose, Gaussian filtering in Fourier space has been used. The bandpass filter has two cut-off frequencies, lower (\(f_{cl}\)) and higher (\(f_{ch}\)), which are kept within a range suited to the intensity variation in the captured image. Empirically, they have been kept as 10 < \(f_{cl}\) < 30 and 60 < \(f_{ch}\) < 120. The adaptive thresholding method45 has then been applied to binarize the images and discriminate the cell boundaries. Finally, morphological operators, such as opening, closing, and hole filling, have been used to refine the cell boundaries. Several values of \(f_{cl}\), \(f_{ch}\), and \(R\) have been tried to obtain the best binary images.
However, a few starch granules and some disconnected cell boundaries can still be observed in the resulting binary images, which could lead to weak cell boundary segmentation. Such discrepancies have been further refined by a well-known manual process46, which involves removing the starch granules and contouring the cell boundaries. The whole process of ground truth label generation for cell boundary segmentation is shown in Fig. 6.
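The Gaussian band-pass step described above can be sketched as follows. This is a minimal illustration using NumPy, not the authors' implementation: the text does not state which tool was used or in which units the two cut-offs are expressed, so the sketch assumes they are frequencies in cycles per longest image side, and the function name `gaussian_bandpass` is hypothetical. The transfer function is the product of a Gaussian low-pass at the higher cut-off and the complement of a Gaussian low-pass at the lower cut-off.

```python
import numpy as np


def gaussian_bandpass(image, f_low, f_high):
    """Band-pass filter a 2D grayscale image in Fourier space.

    Assumption (not from the source): f_low and f_high are cut-off
    frequencies in cycles per longest image side.
    """
    img = np.asarray(image, dtype=float)
    rows, cols = img.shape
    size = max(rows, cols)

    # Frequency of every FFT bin, expressed in cycles per `size` pixels
    # so that both axes share the same physical unit.
    fy = np.fft.fftfreq(rows) * size
    fx = np.fft.fftfreq(cols) * size
    radius2 = fy[:, None] ** 2 + fx[None, :] ** 2

    # Gaussian low-pass removes fine noise above f_high ...
    low_pass = np.exp(-radius2 / (2.0 * f_high ** 2))
    # ... and the complementary Gaussian removes slow shading below f_low.
    high_pass = 1.0 - np.exp(-radius2 / (2.0 * f_low ** 2))

    spectrum = np.fft.fft2(img) * low_pass * high_pass
    return np.real(np.fft.ifft2(spectrum))


# A constant background carries only the zero-frequency term, which the
# filter suppresses entirely.
flat = np.full((32, 48), 7.0)
flat_filtered = gaussian_bandpass(flat, f_low=10, f_high=60)

# A stripe pattern with 20 cycles across a 64-pixel image lies inside
# the pass band and is largely preserved.
x = np.arange(64)
stripes = np.tile(np.sin(2 * np.pi * 20 * x / 64), (64, 1))
stripes_filtered = gaussian_bandpass(stripes, f_low=5, f_high=60)
```

Because the zero-frequency term is removed, the output is centred on zero; a mean offset would need to be added back before thresholding if absolute intensities matter.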

Fig. 6. Steps involved in generating the ground truth segmentation labels for the inner core tissues. The original images are pre-processed using the rolling ball algorithm and bandpass filtering. Next, adaptive thresholding is applied to obtain binary images. Morphological operations are then performed to refine the cell boundaries and remove the starch granules. By changing \(f_{cl}\), \(f_{ch}\), and \(R\) at the pre-processing step, several candidate binary images are generated, and the best image is selected for manual correction.

Data Records

This dataset is publicly available on figshare47 (https://doi.org/10.6084/m9.figshare.c.4955669) and can be downloaded as a zip file. The zip file contains three folders named “stain”, “unstain”, and “segmentation”. All the images are in JPG format. The raw microscopy images of potato tubers can be found in the “stain” and “unstain” folders, whereas the “segmentation” folder provides raw images with ground truth segmentation labels. The “stain” folder contains the images for each stain in subfolders named “safranin”, “toluidine”, and “lugols”. The image labels can be extracted from the image filenames themselves. The file naming format for unstained images is <physical grading with sample no.>_<morphological grading>_<tissue grading>_<section no.>_<image no.>; for example, M1_Z1_IC_Sec. 1_02.jpg refers to an image (first section out of five) taken from the inner core of Z1 of a medium-weight potato tuber (sample no. 1). Similarly, the file naming format for stained images is <physical grading with sample no.>_<morphological grading>_<tissue grading>_<section no.>_<stain type>_<image no.>; for example, S1_Z2_OC_Sec. 3_lugol_02.jpg refers to a lugol’s iodine stained image (third section out of five) taken from the outer core of Z2 of a small-weight potato tuber (sample no. 1). The complete file naming format is summarized in Table 3. The “segmentation” folder contains two subfolders, named “images” and “groundtruth”. The image file naming format there is <physical grading>_<image no.>; for example, “L_2.jpg” represents the second image of a large potato tuber sample. The ground truth label images are stored as binary images with the same dimensions as the raw images.
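Since the labels are encoded in the filenames, they can be recovered with a small parser. The sketch below is one possible approach based only on the two example filenames given above; the function name `parse_filename`, the returned keys, and the tolerance for an optional dot and space after “Sec” are assumptions, and the pattern may need adjusting against the actual files and Table 3.

```python
import re

# Pattern inferred from the two example filenames in the text; the real
# files may deviate (for instance in how the section number is written).
_FILENAME = re.compile(
    r"^(?P<size>[LMS])(?P<sample>\d+)"      # physical grading + sample no.
    r"_(?P<area>Z[123])"                    # morphological grading
    r"_(?P<zone>IC|OC)"                     # tissue grading
    r"_Sec\.?\s*(?P<section>\d+)"           # section no.
    r"_(?:(?P<stain>[A-Za-z]+)_)?"          # stain type (stained images only)
    r"(?P<image>\d+)\.jpe?g$"               # image no.
)


def parse_filename(name):
    """Split a dataset filename into its grading labels.

    Returns a dict; `stain` is None for unstained images.
    Raises ValueError if the name does not follow the expected format.
    """
    match = _FILENAME.match(name)
    if match is None:
        raise ValueError(f"unexpected filename format: {name!r}")
    parts = match.groupdict()
    return {
        "size": parts["size"],
        "sample": int(parts["sample"]),
        "area": parts["area"],
        "zone": parts["zone"],
        "section": int(parts["section"]),
        "stain": parts["stain"],
        "image": int(parts["image"]),
    }


unstained = parse_filename("M1_Z1_IC_Sec. 1_02.jpg")
stained = parse_filename("S1_Z2_OC_Sec. 3_lugol_02.jpg")
```

Raising on unexpected names, rather than silently skipping them, makes it easier to notice files that do not match the assumed pattern.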

Technical Validation

The technical validation has been conducted by running DL-based classification and segmentation tasks on the acquired image dataset, as illustrated in Fig. 7. Multi-label cell classification has been conducted to verify the quality of the assigned labels, considering two specific image labels: physical grading (L, M, and S) and tissue grading (IC and OC). In addition, semantic segmentation has been performed with the DL pipeline to verify the ground truth segmentation labels. The first test yields information about how well the labels can be separated, and the latter can assess individual cells in tubers of different weights.

Fig. 7. Overall technical verification of the image labels and the ground truth segmentation labels. Two types of microscopy images have been chosen independently. The image labels have been verified with the VGG1648 deep neural network employing transfer learning. The Unet50 architecture has been used to perform semantic segmentation with the generated ground truth segmentation labels and hence to verify them.

Multi-label cell classification

The CNN classification network VGG1648 has been employed for multi-label cell classification using input images of 256 × 256 pixels with two labels, physical grading (L, M, and S) and tissue grading (IC and OC). The first 13 layers of the neural network have been pre-trained on the ImageNet (ILSVRC2012) dataset. On top of these, task-specific fully-connected layers have been attached and activated by the sigmoid function. The complete network has been fine-tuned on our datasets. The network performance has been evaluated with a train-test scheme: each image dataset (unstained and stained) has been partitioned randomly into two subsets, with 80% for training and 20% for testing. The network has been trained using the SGD49 optimizer with a learning rate of \(10^{-2}\), momentum 0.9, and binary cross-entropy as the loss function for both image datasets. Performance metrics, namely accuracy and F2-score (assessing the correctness of the image labels), have been obtained for the test images and are listed in Table 4. The table shows that, for the same number of epochs (30), the unstained image dataset gives a better result than the stained image dataset.
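To make the multi-label set-up and the F2-score concrete, the sketch below encodes the two labels of an image as a five-element multi-hot target and computes an F-beta score with beta = 2, which weights recall more heavily than precision. The label order, the 0.5 decision threshold on the sigmoid outputs, and the micro-averaging over all label slots are assumptions; the text does not specify how the reported scores were averaged.

```python
# Assumed output order of the sigmoid units (not stated in the source).
LABELS = ("L", "M", "S", "IC", "OC")


def multi_hot(size, zone):
    """Encode physical grading and tissue grading as a multi-hot vector."""
    return [1 if label in (size, zone) else 0 for label in LABELS]


def binarize(probabilities, threshold=0.5):
    """Turn sigmoid outputs into 0/1 predictions (threshold is an assumption)."""
    return [1 if p >= threshold else 0 for p in probabilities]


def fbeta_score(y_true, y_pred, beta=2.0):
    """Micro-averaged F-beta over all samples and label slots."""
    tp = fp = fn = 0
    for true_row, pred_row in zip(y_true, y_pred):
        for t, p in zip(true_row, pred_row):
            if t and p:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
    b2 = beta * beta
    denominator = (1 + b2) * tp + b2 * fn + fp
    return (1 + b2) * tp / denominator if denominator else 0.0


# Two test images: a large-tuber inner-core image and a small-tuber
# outer-core image. The second one is mistaken for a medium tuber.
y_true = [multi_hot("L", "IC"), multi_hot("S", "OC")]
y_pred = [
    binarize([0.9, 0.1, 0.2, 0.8, 0.3]),
    binarize([0.1, 0.7, 0.4, 0.2, 0.9]),
]
f2 = fbeta_score(y_true, y_pred, beta=2.0)
```

In this toy case there are three true positives, one false positive, and one false negative, so the score is 5·3 / (5·3 + 4·1 + 1) = 0.75.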

Cell segmentation

In this task, Unet50, a well-recognized image segmentation neural network, has been employed. It has shown remarkable performance in biomedical image segmentation. The input images have been generated by subdividing each ground truth label and raw image into 20 sub-images, which are then resized to 512 × 512 pixels before training. The network has been trained using the Adam51 optimizer with a learning rate of \(10^{-1}\). Two types of inputs, namely raw and normalized images, have been fed separately into the network. The entire image dataset has been partitioned randomly into two subsets, with 80% for training and 20% for testing. Performance evaluation has then been conducted using standard adaptive learning rate-based training. During training, early stopping has been used to choose the model with the highest validation performance. The mean intersection over union (IoU), which measures how much the predicted boundary overlaps with the ground truth (real cell boundary), has been chosen as the performance metric, and the results are displayed in Table 5. For the same deep neural network, normalized input images give a better result than raw images. A representative result of cell segmentation for raw and normalized input images is displayed in Fig. 8, in which (a), (b), (c), and (d) refer to the raw RGB image, the ground truth, and the results for raw and normalized input images respectively.
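For reference, the IoU of a predicted binary mask against its ground truth is the number of pixels marked in both divided by the number marked in either. The sketch below uses NumPy and averages the foreground IoU over images; whether the reported mean was taken over images, over classes, or both is not stated in the text, so this averaging and the function names are assumptions.

```python
import numpy as np


def iou(pred, truth):
    """Intersection over union of two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    union = np.logical_or(pred, truth).sum()
    if union == 0:
        # Both masks empty: treated here as perfect agreement (a convention).
        return 1.0
    return float(np.logical_and(pred, truth).sum() / union)


def mean_iou(preds, truths):
    """Average the per-image IoU over a set of mask pairs."""
    return float(np.mean([iou(p, t) for p, t in zip(preds, truths)]))


truth_mask = [[1, 1, 0],
              [0, 1, 0]]
pred_mask = [[1, 0, 0],
             [0, 1, 1]]

single = iou(pred_mask, truth_mask)   # 2 shared pixels / 4 marked pixels
average = mean_iou([pred_mask, truth_mask], [truth_mask, truth_mask])
```

For thin structures such as cell boundaries, a shift of a pixel or two lowers the IoU considerably, which is worth keeping in mind when reading boundary-based scores.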

Fig. 8. Examples of the image sets used during cell segmentation by Unet50 for validation of the ground truth labels. The first and second rows show the train and test images respectively. (a) Unstained raw RGB images, (b) ground truth images, (c) segmentation result for raw RGB input images, and (d) segmentation result for normalized input images.

References

Cireşan, D. C., Giusti, A., Gambardella, L. M. & Schmidhuber, J. Mitosis detection in breast cancer histology images with deep neural networks. In Proc. 16th Int. Conf. Med. Image Comput. Comput. -Assist. Intervent. 8150, 411–418 (2013).

Veta, M., Van Diest, P. J. & Pluim, J. P. W. Cutting out the middleman: Measuring nuclear area in histopathology slides without segmentation. In Proc. 19th Int. Conf. Med. Image Comput. Comput. -Assist. Intervent. 632–639 (2016).

Xing, F., Xie, Y. & Yang, L. An automatic learning-based framework for robust nucleus segmentation. IEEE Trans Med. Imaging. 35, 550–566 (2015).

Xie, W., Noble, J. A. & Zisserman, A. Microscopy cell counting and detection with fully convolutional regression networks. In Proc. 1st Workshop Deep Learn. Med. Image Anal. (MICCAI). 1–8 (2015).

Bhugra, S. et al. Deep Convolutional Neural Networks based Framework for Estimation of Stomata Density and Structure from Microscopic Images. In Proc. Eur. Conf. Comput. Vis. (ECCV). (2018).

Aono, A. H. et al. A stomata classification and detection system in microscope images of maize cultivars. Preprint at https://www.biorxiv.org/content/10.1101/538165v1 (2019).

Saponaro, P. et al. Deepxscope: Segmenting microscopy images with a deep neural network. In Proc. IEEE Conf. Comput. Vis. Pattern Recognit. Workshops. 91–98 (2017).

Fetter, K. C., Eberhardt, S., Barclay, R. S., Wing, S. & Keller, S. R. Stomata Counter: a neural network for automatic stomata identification and counting. New Phytol. 223, 1671–1681 (2019).

Shao, Y.-T., Liu, X.-X., Lu, Z. & Chou, K.-C. pLoc_Deep-mPlant: Predict Subcellular Localization of Plant Proteins by Deep Learning. Nat. Sci. 12, 237–247 (2020).

Garcia-Pedrero, A. et al. Xylem vessels segmentation through a deep learning approach: a first look. IEEE Int. Work Conf. Bioinspir. Intell. (IWOBI). 1–9 (2018).

Jiang, W., Wu, L., Liu, S. & Liu, M. CNN-based two-stage cell segmentation improves plant cell tracking. Pattern Recognit. Lett. 128, 311–317 (2019).

Liu, M., Wu, L., Qian, W. & Liu, Y. Cell tracking across noisy image sequences via faster R-CNN and dynamic local graph matching. IEEE Int. Conf. Bioinform. Biomed. (BIBM). 455–460 (2018).

Moen, E. et al. Deep learning for cellular image analysis. Nat. Methods. 16, 1–14 (2019).

Thul, P. J. et al. A subcellular map of the human proteome. Sci. 356, eaal3321 (2017).

Kumar, N. et al. A dataset and a technique for generalized nuclear segmentation for computational pathology. IEEE Trans. Med. Imaging. 36, 1550–1560 (2017).

Bannon, D. et al. DeepCell 2.0: Automated cloud deployment of deep learning models for large-scale cellular image analysis. Preprint available at https://www.biorxiv.org/content/early/2018/12/22/505032 (2018).

Falk, T. et al. U-Net: deep learning for cell counting, detection and morphometry. Nat. Methods. 16, 67–70 (2019).

Roux, L. et al. Mitosis detection in breast cancer histological images. An ICPR 2012 Contest. J. Pathol. Informatic. 4 (2013).

Cano, A., Masegosa, A. & Moral, S. ELVIRA biomedical data set repository. (2005).

Pierzynowska-Korniak, G., Zadernowski, R., Fornal, J. & Nesterowicz, J. The microstructure of selected apple varieties. Electron. J. Pol. Agric. Univ. 5 (2002).

Sadowska, J., Fornal, J. & Zgórska, K. The distribution of mechanical resistance in potato tuber tissues. Postharvest Biol. Tech. 48, 70–76 (2008).

Haman, J. & Konstankiewicz, K. Destruction processes in the cellular medium of a plant-theoretical approach. Int. Agrophys. 14, 37–42 (2000).

McAtee, P. A., Hallett, I. C., Johnston, J. W. & Schaffer, R. J. A rapid method of fruit cell isolation for cell size and shape measurements. Plant Methods. 5 (2009).

Moghaddam, P. R. & Wilman, D. Cell wall thickness and cell dimensions in plant parts of eight forage species. J. Agric. Sci. 131, 59–67 (1998).

Ahmad, N., Amjed, M., Rehman, A. & Rehman, A. Cell walls digestion of ryegrass and Lucerne by cattle. Sarhad J. Agric. 23, 475 (2007).

Vogler, H., Felekis, D., Nelson, B. J. & Grossniklaus, U. Measuring the mechanical properties of plant cell walls. Plants. 4, 167–182 (2015).

Volz, R., Harker, F., Hallet, I. & Lang, A. Development of Texture in Apple Fruit– a Biophysical Perspective. XXVI Int. Hortic. Congress: Deciduous Fruit and Nut Trees. 636, 473–479 (2004).

Konstankiewicz, K., Pawlak, K. & Zdunek, A. Influence of structural parameters of potato tuber cells on their mechanical properties. Int. Agrophys. 15, 243–246 (2001).

van de Velde, F., Van Riel, J. & Tromp, R. H. Visualisation of starch granule morphologies using confocal scanning laser microscopy (CSLM). J. Sci. Food Agric. 82, 1528–1536 (2002).

Dürrenberger, M. B., Handschin, S., Conde-Petit, B. & Escher, F. Visualization of food structure by confocal laser scanning microscopy (CLSM). LWT-Food Sci. Tech. 34, 11–17 (2001).

Soukup, A. Selected simple methods of plant cell wall histochemistry and staining for light microscopy. Methods Mol. Biol. 1080, 25–40 (2014).

Smith, A. M. & Zeeman, S. C. X. Quantification of starch in plant tissues. Nat. Protoc. 1, 1342–1345 (2006).

Bordoloi, A., Kaur, L. & Singh, J. Parenchyma cell microstructure and textural characteristics of raw and cooked potatoes. Food Chem. 133, 1092–1100 (2012).

Konstankiewicz, K. et al. Cell structural parameters of potato tuber tissue. Int. Agrophys. 16, 119–128 (2002).

Xu, X. & Vreugdenhil, D. & Lammeren, A. A. v. Cell division and cell enlargement during potato tuber formation. J. Exp. Bot. 49, 573–582 (1998).

Troncoso, E., Zúñiga, R., Ramírez, C., Parada, J. & Germain, J. C. Microstructure of potato products: Effect on physico-chemical properties and nutrient bioavailability. Glob. Sci. Books. 3, 41–54 (2009).

Salunkhe, D. K. & Kadam, S. Handbook of vegetable science and technology: production, compostion, storage, and processing. (CRC press, 1998).

Böl, M., Seydewitz, R., Leichsenring, K. & Sewerin, F. A phenomenological model for the inelastic stress-strain response of a potato tuber. J. Mech. Phys. Solids. 103870 (2020).

Liu, J. & Xie, C. Correlation of cell division and cell expansion to potato microtuber growth in vitro. Plant Cell Tiss. Organ Cult. 67, 159–164 (2001).

Ramaswamy, U. R., Kabel, M. A., Schols, H. A. & Gruppen, H. Structural features and water holding capacities of pressed potato fibre polysaccharides. Carbohydr. Polym. 93, 589–596 (2013).

Liu, B. et al. Differences between the bud end and stem end of potatoes in dry matter content, starch granule size, and carbohydrate metabolic gene expression at the growing and sprouting stages. J. Agric. Food Chem. 64, 1176–1184 (2016).

Sharma, V., Kaushik, S., Singh, B. & Raigond, P. Variation in biochemical parameters in different parts of potato tubers for processing purposes. J. Food Sci. Tech. 53, 2040–2046 (2016).

Sternberg, S. R. Biomedical image processing. Comput. 22–34 (1983).

Shvedchenko, D. & Suvorova, E. New method of automated statistical analysis of polymer-stabilized metal nanoparticles in electron microscopy images. Crystallogr. Rep. 62, 802–808 (2017).

Chan, F. H., Lam, F. K. & Zhu, H. Adaptive thresholding by variational method. IEEE Trans. Image Process. 7, 468–473 (1998).

White, A. E., Dikow, R. B., Baugh, M., Jenkins, A. & Frandsen, P. B. Generating segmentation masks of herbarium specimens and a data set for training segmentation models using deep learning. Apl. Plant Sci. 8, e11352 (2020).

Biswas, S. & Barma, S. A large-scale optical microscopy image dataset of potato tuber for deep learning based plant cell assessment. figshare https://doi.org/10.6084/m9.figshare.c.4955669 (2020).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. Preprint at https://arxiv.org/abs/1409.1556 (2014).

Ruder, S. An overview of gradient descent optimization algorithms. Preprint at https://arxiv.org/abs/1609.04747 (2016).

Ronneberger, O., Fischer, P. & Brox, T. U-Net: convolutional networks for biomedical image segmentation. Preprint at https://arxiv.org/abs/1505.04597 (2015).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. Preprint at https://arxiv.org/abs/1412.6980 (2014).

Acknowledgements

This work was supported by the Department of Science and Technology, Govt. of India, under IMPRINT-II (file number IMP/2018/000538).

Author information

Contributions

Both authors contributed equally to writing the manuscript and to carrying out the main research and analysis tasks of this work.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

The Creative Commons Public Domain Dedication waiver http://creativecommons.org/publicdomain/zero/1.0/ applies to the metadata files associated with this article.

About this article

Cite this article

Biswas, S., Barma, S. A large-scale optical microscopy image dataset of potato tuber for deep learning based plant cell assessment. Sci Data 7, 371 (2020). https://doi.org/10.1038/s41597-020-00706-9

DOI: https://doi.org/10.1038/s41597-020-00706-9
