Abstract

Neural computations are currently investigated using two separate approaches: sorting neurons into functional subpopulations or examining the low-dimensional dynamics of collective activity. Whether and how these two aspects interact to shape computations is unclear. Using a novel approach to extract computational mechanisms from networks trained on neuroscience tasks, here we show that the dimensionality of the dynamics and subpopulation structure play fundamentally complementary roles. Although various tasks can be implemented by increasing the dimensionality in networks with fully random population structure, flexible input–output mappings instead require a non-random population structure that can be described in terms of multiple subpopulations. Our analyses revealed that such a subpopulation structure enables flexible computations through a mechanism based on gain-controlled modulations that flexibly shape the collective dynamics. Our results lead to task-specific predictions for the structure of neural selectivity, for inactivation experiments and for the involvement of different neurons in multitasking.


Data availability

Trained models are available at https://github.com/adrian-valente/populations_paper_code.

Code availability

All code used to train models and generate figures is available at https://github.com/adrian-valente/populations_paper_code.

References

1. Barack, D. L. & Krakauer, J. W. Two views on the cognitive brain. Nat. Rev. Neurosci. 22, 359–371 (2021).

2. Hubel, D. H. & Wiesel, T. N. Receptive fields of single neurones in the cat’s striate cortex. J. Physiol. 148, 574–591 (1959).

3. Moser, E. I., Moser, M.-B. & McNaughton, B. L. Spatial representation in the hippocampal formation: a history. Nat. Neurosci. 20, 1448–1464 (2017).

4. Hardcastle, K., Maheswaranathan, N., Ganguli, S. & Giocomo, L. M. A multiplexed, heterogeneous, and adaptive code for navigation in medial entorhinal cortex. Neuron 94, 375–387 (2017).

5. Adesnik, H., Bruns, W., Taniguchi, H., Huang, Z. J. & Scanziani, M. A neural circuit for spatial summation in visual cortex. Nature 490, 226–231 (2012).

6. Ye, L. et al. Wiring and molecular features of prefrontal ensembles representing distinct experiences. Cell 165, 1776–1788 (2016).

7. Kvitsiani, D. et al. Distinct behavioural and network correlates of two interneuron types in prefrontal cortex. Nature 498, 363–366 (2013).

8. Hangya, B., Pi, H.-J., Kvitsiani, D., Ranade, S. P. & Kepecs, A. From circuit motifs to computations: mapping the behavioral repertoire of cortical interneurons. Curr. Opin. Neurobiol. 26, 117–124 (2014).

9. Pinto, L. & Dan, Y. Cell-type-specific activity in prefrontal cortex during goal-directed behavior. Neuron 87, 437–450 (2015).

10. Hirokawa, J., Vaughan, A., Masset, P., Ott, T. & Kepecs, A. Frontal cortex neuron types categorically encode single decision variables. Nature 576, 446–451 (2019).

11. Hocker, D. L., Brody, C. D., Savin, C. & Constantinople, C. M. Subpopulations of neurons in lOFC encode previous and current rewards at time of choice. eLife 10, e70129 (2021).

12. Churchland, M. M. & Shenoy, K. V. Temporal complexity and heterogeneity of single-neuron activity in premotor and motor cortex. J. Neurophysiol. 97, 4235–4257 (2007).

13. Machens, C. K., Romo, R. & Brody, C. D. Functional, but not anatomical, separation of ‘what’ and ‘when’ in prefrontal cortex. J. Neurosci. 30, 350–360 (2010).

14. Rigotti, M. et al. The importance of mixed selectivity in complex cognitive tasks. Nature 497, 585–590 (2013).

15. Mante, V., Sussillo, D., Shenoy, K. V. & Newsome, W. T. Context-dependent computation by recurrent dynamics in prefrontal cortex. Nature 503, 78–84 (2013).

16. Park, I. M., Meister, M. L. R., Huk, A. C. & Pillow, J. W. Encoding and decoding in parietal cortex during sensorimotor decision-making. Nat. Neurosci. 17, 1395–1403 (2014).

17. Raposo, D., Kaufman, M. T. & Churchland, A. K. A category-free neural population supports evolving demands during decision-making. Nat. Neurosci. 17, 1784–1792 (2014).

18. Buonomano, D. V. & Maass, W. State-dependent computations: spatiotemporal processing in cortical networks. Nat. Rev. Neurosci. 10, 113–125 (2009).

19. Gallego, J. A., Perich, M. G., Miller, L. E. & Solla, S. A. Neural manifolds for the control of movement. Neuron 94, 978–984 (2017).

20. Remington, E. D., Narain, D., Hosseini, E. A. & Jazayeri, M. Flexible sensorimotor computations through rapid reconfiguration of cortical dynamics. Neuron 98, 1005–1019 (2018).

21. Saxena, S. & Cunningham, J. P. Towards the neural population doctrine. Curr. Opin. Neurobiol. 55, 103–111 (2019).

22. Vyas, S., Golub, M. D., Sussillo, D. & Shenoy, K. V. Computation through neural population dynamics. Annu. Rev. Neurosci. 43, 249–275 (2020).

23. Rajan, K., Harvey, C. D. & Tank, D. W. Recurrent network models of sequence generation and memory. Neuron 90, 128–142 (2016).

24. Chaisangmongkon, W., Swaminathan, S. K., Freedman, D. J. & Wang, X.-J. Computing by robust transience: how the fronto-parietal network performs sequential, category-based decisions. Neuron 93, 1504–1517 (2017).

25. Wang, J., Narain, D., Hosseini, E. A. & Jazayeri, M. Flexible timing by temporal scaling of cortical responses. Nat. Neurosci. 21, 102–110 (2018).

26. Sohn, H., Narain, D., Meirhaeghe, N. & Jazayeri, M. Bayesian computation through cortical latent dynamics. Neuron 103, 934–947 (2019).

27. Sussillo, D. Neural circuits as computational dynamical systems. Curr. Opin. Neurobiol. 25, 156–163 (2014).

28. Barak, O. Recurrent neural networks as versatile tools of neuroscience research. Curr. Opin. Neurobiol. 46, 1–6 (2017).

29. Yang, G. R., Joglekar, M. R., Song, H. F., Newsome, W. T. & Wang, X.-J. Task representations in neural networks trained to perform many cognitive tasks. Nat. Neurosci. 22, 297–306 (2019).

30. Mastrogiuseppe, F. & Ostojic, S. Linking connectivity, dynamics, and computations in low-rank recurrent neural networks. Neuron 99, 609–623 (2018).

31. Sakai, K. Task set and prefrontal cortex. Annu. Rev. Neurosci. 31, 219–245 (2008).

32. Duncker, L., Driscoll, L., Shenoy, K. V., Sahani, M. & Sussillo, D. Organizing recurrent network dynamics by task-computation to enable continual learning. Adv. Neural Inf. Process. Syst. 33 (2020).

33. Masse, N. Y., Grant, G. D. & Freedman, D. J. Alleviating catastrophic forgetting using context-dependent gating and synaptic stabilization. Proc. Natl Acad. Sci. USA 115, E10467–E10475 (2018).

34. Schuessler, F., Dubreuil, A., Mastrogiuseppe, F., Ostojic, S. & Barak, O. Dynamics of random recurrent networks with correlated low-rank structure. Phys. Rev. Res. 2, 013111 (2020).

35. Schuessler, F., Mastrogiuseppe, F., Dubreuil, A., Ostojic, S. & Barak, O. The interplay between randomness and structure during learning in RNNs. Adv. Neural Inf. Process. Syst. 33, 13352–13362 (2020).

36. Beiran, M., Dubreuil, A., Valente, A., Mastrogiuseppe, F. & Ostojic, S. Shaping dynamics with multiple populations in low-rank recurrent networks. Neural Comput. 33, 1572–1615 (2021).

37. Sherman, S. M. & Guillery, R. W. On the actions that one nerve cell can have on another: distinguishing ‘drivers’ from ‘modulators’. Proc. Natl Acad. Sci. USA 95, 7121–7126 (1998).

38. Ferguson, K. A. & Cardin, J. A. Mechanisms underlying gain modulation in the cortex. Nat. Rev. Neurosci. 21, 80–92 (2020).

39. Yang, G. R. & Wang, X.-J. Artificial neural networks for neuroscientists: a primer. Neuron 107, 1048–1070 (2020).

40. Gold, J. I. & Shadlen, M. N. The neural basis of decision making. Annu. Rev. Neurosci. 30, 535–574 (2007).

41. Romo, R., Brody, C. D., Hernández, A. & Lemus, L. Neuronal correlates of parametric working memory in the prefrontal cortex. Nature 399, 470–473 (1999).

42. Miyashita, Y. Neuronal correlate of visual associative long-term memory in the primate temporal cortex. Nature 335, 817–820 (1988).

43. Cunningham, J. P. & Yu, B. M. Dimensionality reduction for large-scale neural recordings. Nat. Neurosci. 17, 1500–1509 (2014).

44. Fusi, S., Miller, E. K. & Rigotti, M. Why neurons mix: high dimensionality for higher cognition. Curr. Opin. Neurobiol. 37, 66–74 (2016).

45. Cromer, J. A., Roy, J. E. & Miller, E. K. Representation of multiple, independent categories in the primate prefrontal cortex. Neuron 66, 796–807 (2010).

46. Fritz, J. B., David, S. V., Radtke-Schuller, S., Yin, P. & Shamma, S. A. Adaptive, behaviorally gated, persistent encoding of task-relevant auditory information in ferret frontal cortex. Nat. Neurosci. 13, 1011–1019 (2010).

47. Elgueda, D. et al. State-dependent encoding of sound and behavioral meaning in a tertiary region of the ferret auditory cortex. Nat. Neurosci. 22, 447–459 (2019).

48. Zenke, F., Poole, B. & Ganguli, S. Continual learning through synaptic intelligence. Proc. Mach. Learn. Res. 70, 3987–3995 (2017).

49. Roy, J. E., Riesenhuber, M., Poggio, T. & Miller, E. K. Prefrontal cortex activity during flexible categorization. J. Neurosci. 30, 8519–8528 (2010).

50. Sussillo, D. & Barak, O. Opening the black box: low-dimensional dynamics in high-dimensional recurrent neural networks. Neural Comput. 25, 626–649 (2013).

51. Rabinowitz, N. C., Goris, R. L., Cohen, M. & Simoncelli, E. P. Attention stabilizes the shared gain of V4 populations. eLife 4, e08998 (2015).

52. Salinas, E. & Thier, P. Gain modulation: a major computational principle of the central nervous system. Neuron 27, 15–21 (2000).

53. Stroud, J. P., Porter, M. A., Hennequin, G. & Vogels, T. P. Motor primitives in space and time via targeted gain modulation in cortical networks. Nat. Neurosci. 21, 1774–1783 (2018).

54. Maheswaranathan, N., Williams, A. H., Golub, M. D., Ganguli, S. & Sussillo, D. Universality and individuality in neural dynamics across large populations of recurrent networks. Adv. Neural Inf. Process. Syst. 32, 15629–15641 (2019).

55. Flesch, T., Juechems, K., Dumbalska, T., Saxe, A. & Summerfield, C. Rich and lazy learning of task representations in brains and neural networks. Neuron 110, 1258–1270 (2022).

56. Aoi, M. C., Mante, V. & Pillow, J. W. Prefrontal cortex exhibits multidimensional dynamic encoding during decision-making. Nat. Neurosci. 23, 1410–1420 (2020).

57. Werbos, P. J. Backpropagation through time: what it does and how to do it. Proc. IEEE 78, 1550–1560 (1990).

58. Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. Preprint at https://arxiv.org/abs/1412.6980 (2014).

59. Paszke, A. et al. Automatic differentiation in PyTorch. NIPS 2017 Workshop Autodiff. https://openreview.net/forum?id=BJJsrmfCZ (2017).

60. Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

61. Kingma, D. P. & Welling, M. Auto-encoding variational Bayes. Preprint at https://arxiv.org/abs/1312.6114 (2013).

62. Plataniotis, K. N. & Hatzinakos, D. Gaussian mixtures and their applications to signal processing. https://www.dsp.utoronto.ca/~kostas/Publications2008/pub/bch3.pdf (2000).

Acknowledgements

This project was supported by the National Institutes of Health BRAIN Initiative project U01-NS122123 (S.O. and A.V.); ANR project MORSE (ANR-16-CE37-0016) (S.O. and F.M.); CRCNS project PIND (ANR-19-NEUC-0001) (S.O., A.D. and M.B.); and the program ‘Ecoles Universitaires de Recherche’ launched by the French Government and implemented by the ANR, with reference ANR-17-EURE-0017 (S.O.). S.O. thanks J. Johansen and B. Pesaran for fruitful discussions.

Author information

Contributions

A.D., A.V. and S.O. designed the study. A.D., A.V. and S.O. developed the training and analysis pipelines. A.D., A.V., M.B., F.M. and S.O. performed research and contributed to writing the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Neuroscience thanks Máté Lengyel and the other, anonymous reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Additional ePAIRS results.

(a) p-values given by the ePAIRS test on selectivity spaces for the full-rank networks displayed in Fig. 1d (two-sided ePAIRS test, 100 networks per task, n = 512 neurons for each network). (b) p-values given by the ePAIRS test on connectivity spaces for the low-rank networks displayed in Fig. 1h (two-sided ePAIRS test, 100 networks per task, n = 512 neurons for each network). (c) ePAIRS effect sizes on the selectivity space for the same low-rank networks (two-sided ePAIRS test, 100 networks per task, n = 512 neurons for each network). (d) Corresponding ePAIRS p-values.
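
ePAIRS asks whether neurons cluster in a given space more than expected from a structureless distribution with the same covariance. Below is a minimal sketch of a PAIRS-style effect size. It assumes, as in the PAIRS family of tests, that the statistic is the mean angle between each neuron's vector and its k nearest neighbors. It also assumes that the null is a zero-mean multivariate Gaussian matched to the covariance of the centered data. The function names, k = 3, the number of null draws and the effect-size formula are illustrative assumptions, not the settings behind the panels above. The sketch does not compute the two-sided p-values.

```python
import numpy as np

def mean_knn_angle(X, k=3):
    """Mean angle (radians) between each row of X and its k angularly nearest rows."""
    U = X / np.linalg.norm(X, axis=1, keepdims=True)   # unit vectors, one per neuron
    ang = np.arccos(np.clip(U @ U.T, -1.0, 1.0))        # pairwise angles
    np.fill_diagonal(ang, np.inf)                       # ignore self-pairs
    return np.sort(ang, axis=1)[:, :k].mean()

def pairs_effect_size(X, k=3, n_null=100, seed=0):
    """(null mean - data) / null std of the mean k-NN angle (hypothetical effect-size definition)."""
    rng = np.random.default_rng(seed)
    Xc = X - X.mean(axis=0)                             # center the data (assumption)
    cov = np.cov(Xc, rowvar=False)
    # Null: covariance-matched Gaussian samples with as many "neurons" as the data.
    null = np.array([
        mean_knn_angle(rng.multivariate_normal(np.zeros(X.shape[1]), cov, size=X.shape[0]), k)
        for _ in range(n_null)
    ])
    return (null.mean() - mean_knn_angle(Xc, k)) / null.std()
```

A positive effect size means neighboring neurons are closer in angle than under the Gaussian null. That is evidence for non-random population structure.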

Extended Data Fig. 2 Determination of the minimal rank for each task.

For each task and each rank R between 1 and 5, ten rank-R networks were trained with different random initial connectivity. For each task, a panel displays the performance of the trained networks as a function of their rank.
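
Selecting the minimal rank amounts to keeping the smallest rank at which trained networks reach a performance criterion. The sketch below assumes a hypothetical accuracy threshold of 0.95 and uses the median over the ten networks as the summary statistic. Neither choice is stated in the caption, and the function name is hypothetical.

```python
from statistics import median

def minimal_rank(accuracies_by_rank, threshold=0.95):
    """Return the smallest rank whose median accuracy reaches `threshold`, or None.

    accuracies_by_rank: dict mapping rank R -> list of accuracies of the
    networks trained at that rank (e.g. ten random initializations).
    """
    for rank in sorted(accuracies_by_rank):
        if median(accuracies_by_rank[rank]) >= threshold:
            return rank
    return None  # no rank reached the criterion
```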

Extended Data Fig. 3 Analysis of trained full-rank networks.

(a)–(b) Analysis of full-rank networks trained with initial connectivity weights of variance 1/N (100 networks for each task). (a) Performance of truncated-rank networks. Following ref. 35, we extract from full-rank networks the learned part of the connectivity ΔJ = J − J0, defined as the difference between the final connectivity J and the initial connectivity J0. We then truncate ΔJ to a given rank via singular value decomposition and add it back to J0. For each task, a panel displays the performance of the resulting networks as a function of the rank used for the truncation. (b) Resampling analysis of truncated networks. Starting from the truncated networks in (a), we fit multivariate Gaussians to the distribution of their ΔJ in the corresponding connectivity spaces. We then generate new networks by resampling from this distribution, as done for the trained low-rank networks in Fig. 1i–l. For each task, a panel displays the performance of the resampled networks as a function of the rank used for the truncation. (c)–(d) Same analyses as (a)–(b) for sets of networks trained with initial connectivity weights of variance 0.1/N (100 networks for each task; for DMS, 49 of the 100 networks had an accuracy below 95% after training and were excluded). Networks with weaker initial connectivity are better approximated by their resampled low-rank connectivity, because larger initial connectivity induces correlations between ΔJ and J0 (ref. 35). Resampling destroys both this correlation and the population structure, leading to performance impairments even when the population structure is potentially irrelevant.
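
The truncation and resampling steps in (a) and (b) can be sketched with NumPy. The sketch rests on three simplifying assumptions:
- the connectivity space holds only the entries of the recurrent vectors m and n (the full analysis may also include input and readout vectors);
- the scaling convention ΔJ = m nᵀ is used, without a 1/N factor;
- the resampled matrix contains only the learned low-rank part, because the caption does not specify how J0 is treated in the resampled networks.

The function names are hypothetical.

```python
import numpy as np

def truncate_learned_connectivity(J, J0, rank):
    """Rank-truncate the learned part dJ = J - J0 by SVD and add it back to J0.

    Returns the truncated network J0 + m @ n.T together with the
    connectivity vectors m (N x rank) and n (N x rank).
    """
    U, s, Vt = np.linalg.svd(J - J0)
    m = U[:, :rank] * s[:rank]        # left singular vectors scaled by singular values
    n = Vt[:rank].T                   # right singular vectors
    return J0 + m @ n.T, m, n

def resample_low_rank(m, n, rng):
    """Fit one multivariate Gaussian to the neurons' (m_i, n_i) entries and redraw every neuron."""
    X = np.hstack([m, n])                          # N x 2R connectivity space
    X_new = rng.multivariate_normal(X.mean(axis=0), np.cov(X, rowvar=False), size=X.shape[0])
    R = m.shape[1]
    return X_new[:, :R] @ X_new[:, R:].T           # resampled rank-R learned connectivity
```

Fitting a single Gaussian over neurons discards any clustering of neurons in this space. This is why resampled networks can reveal whether population structure was needed.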

Extended Data Fig. 4 Increasing the rank maintains the requirement for population structure.

For this figure, we trained low-rank networks of rank higher than 1 on the CDM task, fitted either a single Gaussian or a mixture of two Gaussians to the resulting connectivity space, and retrained the fitted distribution (Methods) to obtain resampled networks with the highest possible performance. Even with this additional retraining of the fitted distributions (which in the main text is applied only to the DMS task), the single-population networks failed to perform the CDM task with good accuracy. For each combination of rank and number of populations, 10 networks resampled from a single trained network are shown (line: median, box: quartiles, whiskers: range, in the limit of median ± 1.5 interquartile range, points: outliers).
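
Resampling from a mixture of Gaussians works in two stages. Each new neuron is first assigned to a population according to the mixture weights. Its connectivity vector is then drawn from that population's Gaussian. The minimal NumPy sketch below assumes the weights, means and covariances have already been estimated, for instance by fitting a Gaussian mixture model. It does not include the retraining of the fitted distribution mentioned above, and the function name is hypothetical.

```python
import numpy as np

def sample_mixture(weights, means, covs, n_neurons, rng):
    """Draw n_neurons connectivity vectors from a Gaussian mixture.

    Returns the sampled vectors (n_neurons x D) and each neuron's population label.
    """
    weights = np.asarray(weights, dtype=float)
    # Assign each new neuron to a population with probability given by the weights.
    labels = rng.choice(len(weights), size=n_neurons, p=weights / weights.sum())
    X = np.empty((n_neurons, len(means[0])))
    for k in range(len(weights)):
        idx = np.flatnonzero(labels == k)
        if idx.size:
            # Draw all neurons of population k from that population's Gaussian.
            X[idx] = rng.multivariate_normal(means[k], covs[k], size=idx.size)
    return X, labels
```

With a single component this reduces to single-population resampling. The comparison in this figure is therefore between one and two components.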

Extended Data Fig. 5 Alternative implementation of the CDM task.

A network trained with different hyperparameters provides an example of an alternative solution to the CDM task. It uses three effective populations and a fourth one accounting for neurons that are not involved in the task (see Supplementary Text 3). (a) Left: for each number of subpopulations, a boxplot shows the performance of 10 networks with connectivity resampled from a Gaussian mixture model (GMM) fitted to the trained network (line: median, box: quartiles, whiskers: range, in the limit of median ± 1.5 interquartile range, points: outliers). Right: for the GMM with four subpopulations, size of each component found by the clustering procedure. (b) Four 2D projections of the seven-dimensional connectivity space. (c) Upper-right triangle of the empirical covariance matrices for each of the four populations. (d) Illustration of the mechanism used by the network at the level of latent dynamics. Populations 2–4 each control one effective coupling, indicated by the matching color. (e) Psychometric matrices, similar to those shown in Fig. 4, after inactivation of each subpopulation. (f) Violin plots showing the gain distributions of neurons in each of the four subpopulations in each context.

Extended Data Fig. 6 Multi-population analysis of networks performing the delayed match-to-sample (DMS) task.

(a) Networks received a sequence of two stimuli during two stimulation periods (light gray) separated by a delay. Each stimulus belonged to one of two categories (A or B), each represented by a different input vector. Rank-two networks were trained to produce an output during a response period (light orange), with a positive value if the two stimuli were identical and a negative value otherwise. Two example trials, with stimuli A-A and B-A, are illustrated. (b) Psychometric response matrices: fraction of positive responses for each combination of first and second stimuli, for a trained network (left) and for networks generated by resampling connectivity from a single population (middle) or two populations (right). (c) Average accuracy of the trained network and of 10 draws of resampled single-population and two-population networks (line: median, box: quartiles, whiskers: range, in the limit of median ± 1.5 interquartile range, points: outliers).
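
A small generator of input and target time series makes the DMS trial structure concrete. The sketch is hypothetical. The period durations, the coding of stimuli A and B on two separate input channels, the absence of a gap between the second stimulus and the response period, and the targets of +1 and −1 are all illustrative assumptions. They are not the settings used to train the networks.

```python
import numpy as np

# Hypothetical period lengths, in time steps.
STIM, DELAY, RESP = 10, 20, 10

def dms_trial(first, second):
    """Inputs (T x 2) and target (T,) for one DMS trial; stimuli are 'A' or 'B'."""
    channel = {"A": 0, "B": 1}
    T = 2 * STIM + DELAY + RESP
    u = np.zeros((T, 2))
    u[:STIM, channel[first]] = 1.0                            # first stimulation period
    u[STIM + DELAY:2 * STIM + DELAY, channel[second]] = 1.0   # second stimulation period
    target = np.zeros(T)
    # Positive output for a match, negative for a non-match, only in the response period.
    target[2 * STIM + DELAY:] = 1.0 if first == second else -1.0
    return u, target
```

Iterating over the four combinations A-A, A-B, B-A and B-B yields one trial of each type. These are the four conditions summarized in the psychometric matrices in (b).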

Extended Data Fig. 7 Statistics of connectivity in trained networks.

Upper-left corner of the empirical covariance matrix between connectivity vectors for networks trained on each task, after clustering neurons into two populations for the CDM and DMS tasks. These covariance matrices are then used to resample single-population and two-population networks that successfully perform each task.
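
Given a clustering of neurons into populations, three quantities per population are used for resampling: the fraction of neurons, the mean of the connectivity vectors and their empirical covariance. The sketch below assumes the connectivity space is stored as an N x D array with one row per neuron. It also assumes the population labels come from a preceding clustering step. The function name is hypothetical.

```python
import numpy as np

def population_statistics(X, labels):
    """Per-population weight, mean and empirical covariance of connectivity vectors.

    X: N x D array, one row per neuron; labels: length-N population labels.
    """
    stats = {}
    for k in np.unique(labels):
        Xk = X[labels == k]
        stats[int(k)] = {
            "weight": len(Xk) / len(X),            # fraction of neurons in population k
            "mean": Xk.mean(axis=0),
            "cov": np.cov(Xk, rowvar=False),       # D x D, normalized by (N_k - 1)
        }
    return stats
```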

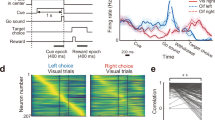

Extended Data Fig. 8 Context-dependent decision-making state-space dynamics.

Here we reproduce figures akin to those presented in ref. 15 for the trained low-rank network used in Figs. 4 and 5. We generate 32 conditions corresponding to different combinations of context, signal A coherence and signal B coherence. We then project condition-averaged trajectories either onto the plane spanned by the recurrent connectivity vector m (which corresponds to the choice axis) and the input vector IA, or onto the m − IB plane. As observed in ref. 15, signal A strength is encoded along the IA axis even when it is irrelevant (lower left corner), and signal B strength is encoded along the IB axis even when it is irrelevant (top right corner).
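
Because m and IA need not be orthogonal, projecting onto the plane they span requires an orthonormal basis of that plane. The sketch below stores population states in an array whose last axis indexes neurons. It orthonormalizes with a QR decomposition, which keeps the first axis aligned with m. The caption does not say how the axes were orthogonalized for the figure, and the function name is hypothetical.

```python
import numpy as np

def project_on_plane(trajectories, v1, v2):
    """Project activity onto the plane spanned by v1 and v2.

    trajectories: array (..., N) of population states, e.g. conditions x time x neurons.
    Returns (..., 2) coordinates along an orthonormal basis whose first axis is
    v1/|v1| and whose second axis is the component of v2 orthogonal to v1.
    """
    Q, R = np.linalg.qr(np.column_stack([v1, v2]))   # N x 2 orthonormal basis
    Q = Q * np.sign(np.diag(R))                      # orient axes along v1 and v2
    return trajectories @ Q
```

Calling `project_on_plane(traj, m, I_A)` gives the coordinates for the m–IA plane. Swapping in IB gives the m − IB plane.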

Extended Data Fig. 9 Low-dimensional latent dynamics in networks performing the delayed match-to-sample (DMS) task.

(a) Circuit diagram representing latent dynamics for a minimal network trained on the DMS task (Eq. (51)). The network was of rank two, so that the latent dynamics were described by two internal variables κ1 and κ2. Input A acts as a modulator of the recurrent interactions between the two internal variables. (b) Dynamical landscape for the autonomous latent dynamics in the κ1 − κ2 plane (i.e., the m(1)-m(2) plane). Colored lines depict trajectories corresponding to the four types of trials in the task (see Supplementary Fig. S6 for details of trajectories). Background color and white lines encode the speed and direction of the dynamics in the absence of inputs. (c) Two 2D projections of the seven-dimensional connectivity space. Colors indicate the two subpopulations, and lines in the right panel show linear regressions for each subpopulation. (d) Effective circuit diagrams in the absence of inputs (left), and when input A (middle) or input B (right) is present (see Supplementary Note 2.4). Filled circles denote positive coupling, open circles negative coupling. Input A in particular induces a negative feedback from κ2 to κ1. (e) Distribution of neural gains for each population (pop. 1: n = 3050, pop. 2: n = 1046) in the three situations described above. The gain of population 1 (green) is specifically modulated by input A. (f) Dynamical landscapes in the three situations described above (see Methods). Filled and empty circles indicate stable and unstable fixed points, respectively. The negative feedback induced by input A causes a limit cycle to appear in the latent dynamics.
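
Below is a minimal simulation sketch of a low-rank network of the kind described here. It assumes the standard low-rank RNN form τ dx/dt = −x + (1/N) Σ_r m(r) n(r)ᵀ tanh(x) + Σ_s I(s) u_s(t). It takes the latent variables to be κ_r = m(r)·x / |m(r)|². It defines each neuron's gain as the derivative of the transfer function, 1 − tanh(x_i)², evaluated at its current state. These conventions, the Euler step and the function names are assumptions for illustration. The connectivity used in the test is random rather than the trained connectivity of this figure. The sketch therefore shows how κ1, κ2 and the gains are computed, not the limit cycle itself.

```python
import numpy as np

def simulate_low_rank(m, n, I, u, dt=0.1, tau=1.0):
    """Euler-integrate tau dx/dt = -x + (1/N) m n^T tanh(x) + I u(t).

    m, n: N x R connectivity vectors; I: N x S input vectors; u: T x S inputs.
    Returns the T x N trajectory.
    """
    N = m.shape[0]
    x = np.zeros(N)
    traj = np.empty((len(u), N))
    for t, u_t in enumerate(u):
        rec = m @ (n.T @ np.tanh(x)) / N          # low-rank recurrent input
        x = x + dt / tau * (-x + rec + I @ u_t)
        traj[t] = x
    return traj

def latent_variables(traj, m):
    """kappa_r(t) = m_r . x(t) / |m_r|^2 for each column m_r of m (returns T x R)."""
    return traj @ m / np.sum(m ** 2, axis=0)

def gains(x):
    """Neural gain phi'(x) = 1 - tanh(x)^2 for each neuron."""
    return 1.0 - np.tanh(x) ** 2
```

Indexing `gains(traj[t])` by population labels gives per-population gain distributions like those in (e). Comparing them with and without input A shows which population the input modulates.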

Extended Data Fig. 10 Control for the strength of context cues in the MDM task.

Here the context input vectors have been multiplied by a factor of five relative to the network analyzed in Fig. 6g. (a) Context cues can thus set the operating point of some neurons closer to the saturating part of the transfer function. This produces nonlinear mixed selectivity to context and context-dependent changes in sensory representations. (b) Unlike in the CDM task, this feature of selectivity is not functional. Specifically inactivating neurons with high selectivity to context A or B decreases behavioral performance about as much as inactivating randomly selected neurons.
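
The control in (b) compares inactivating the most context-selective neurons with inactivating a random set of the same size. The sketch below shows how the two sets could be chosen. It assumes each neuron's context selectivity is summarized by a single signed number, such as the difference in its activity between contexts A and B. It also assumes that inactivation means setting the selected neurons' firing rates to zero. Both are illustrative assumptions, and the function name is hypothetical.

```python
import numpy as np

def inactivation_masks(selectivity, n_inactivate, rng):
    """Boolean masks for the n_inactivate most context-selective neurons and for a random set."""
    n = len(selectivity)
    order = np.argsort(-np.abs(selectivity))           # most selective first
    targeted = np.zeros(n, dtype=bool)
    targeted[order[:n_inactivate]] = True
    random_set = np.zeros(n, dtype=bool)
    random_set[rng.choice(n, size=n_inactivate, replace=False)] = True
    return targeted, random_set
```

Inside a simulation, a mask can silence neurons, for example by using `np.tanh(x) * ~mask` as the firing rates. Performance is then compared between the targeted and random conditions.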

Supplementary information

Supplementary Information

Supplementary Texts 1–4, Supplementary Discussions 1–2, Supplementary Notes 1–2 and Supplementary Figs. S1–S7

About this article

Cite this article

Dubreuil, A., Valente, A., Beiran, M. et al. The role of population structure in computations through neural dynamics. Nat Neurosci 25, 783–794 (2022). https://doi.org/10.1038/s41593-022-01088-4
