Abstract

Computational methods that aim to exploit publicly available mass spectrometry repositories rely primarily on unsupervised clustering of spectra. Here we trained a deep neural network in a supervised fashion on the basis of previous assignments of peptides to spectra. The network, called ‘GLEAMS’, learns to embed spectra in a low-dimensional space in which spectra generated by the same peptide are close to one another. We applied GLEAMS for large-scale spectrum clustering, detecting groups of unidentified, proximal spectra representing the same peptide. We used these clusters to explore the dark proteome of repeatedly observed yet consistently unidentified mass spectra.
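The training objective summarized above pulls same-peptide spectra together and pushes different-peptide spectra apart. This kind of objective is commonly expressed as a contrastive loss in the style of Hadsell, Chopra and LeCun (listed in the references). The NumPy sketch below is only an illustration. The Euclidean distance, the margin of 1.0 and the function name `contrastive_loss` are assumptions, not the actual GLEAMS loss implementation.

```python
import numpy as np


def contrastive_loss(emb_a, emb_b, same_peptide, margin=1.0):
    """Contrastive loss for a batch of embedding pairs (illustrative sketch).

    emb_a, emb_b: arrays of shape (n_pairs, dim), one embedding per spectrum.
    same_peptide: boolean array of shape (n_pairs,), True if both spectra were
        assigned the same peptide.
    margin: hypothetical value; different-peptide pairs are only penalized
        while they are closer than this distance.
    """
    dist = np.linalg.norm(emb_a - emb_b, axis=1)
    y = np.asarray(same_peptide, dtype=float)
    # Same-peptide pairs: penalize any distance, pulling them together.
    pos_term = y * dist**2
    # Different-peptide pairs: penalize only distances below the margin.
    neg_term = (1.0 - y) * np.maximum(margin - dist, 0.0) ** 2
    return float(np.mean(pos_term + neg_term) / 2)
```

A pair of identical embeddings labelled as different peptides contributes `margin**2`. A same-peptide pair contributes its squared distance, so minimizing the loss arranges the embedding space as the abstract describes.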


Data availability

The data used to explore the dark proteome have been deposited to the MassIVE repository with the dataset identifier MSV000088598. This dataset consists of MGF files containing the representative medoid spectra of the GLEAMS clusters and the associated ANN-SoLo identifications in mzTab format [27].
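A medoid is the cluster member with the smallest total distance to the other members of its cluster. The minimal NumPy sketch below picks one medoid per cluster. It assumes distances are computed between GLEAMS embeddings, because the distance space used to select the deposited medoids is not stated here. The function name is hypothetical.

```python
import numpy as np


def medoid_index(embeddings):
    """Index of the cluster member with the smallest summed Euclidean
    distance to all other members (quadratic memory; fine for one cluster)."""
    embeddings = np.asarray(embeddings, dtype=float)
    diff = embeddings[:, None, :] - embeddings[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)  # (n, n) pairwise distances
    return int(np.argmin(dist.sum(axis=1)))
```

Unlike a centroid, a medoid is always an actual spectrum, so it can be written to an MGF file and searched like any other spectrum.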

All other data supporting the presented analyses have been deposited to the MassIVE repository with the dataset identifier MSV000088599. Source data are provided with this paper.

Code availability

GLEAMS was implemented in Python 3.8. Pyteomics (v.4.3.2) [45] was used to read MS/MS spectra in the mzML [22], mzXML and MGF formats. spectrum_utils (v.0.3.4) [46] was used for spectrum preprocessing. Submodular selection was performed using apricot (v.0.4.1) [21]. The neural network code was implemented using the TensorFlow/Keras framework (v.2.2.0) [47]. SciPy (v.1.5.0) [48] and fastcluster (v.1.1.28) [49] were used for hierarchical clustering. Additional scientific computing was done using NumPy (v.1.19.0) [50], scikit-learn (v.0.23.1) [51], Numba (v.0.50.1) [52] and pandas (v.1.0.5) [53]. Data analysis and visualization were performed using Jupyter Notebooks [54], matplotlib (v.3.3.0) [55], Seaborn (v.0.11.0) [56] and UMAP (v.0.4.6) [13].
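SciPy and fastcluster are named for hierarchical clustering. The minimal sketch below shows agglomerative clustering of embeddings with SciPy. Complete linkage, Euclidean distance, the function name and the cut-off value are assumptions for illustration, since the GLEAMS clustering parameters are not given in this section. Building a single linkage tree over millions of embeddings in one call would also not fit in memory.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage


def cluster_embeddings(embeddings, max_distance, method="complete"):
    """Agglomerative clustering of spectrum embeddings.

    With complete linkage, cutting the tree at `max_distance` guarantees that
    no two members of a flat cluster are farther apart than that distance.
    fastcluster.linkage offers a compatible, faster drop-in for `linkage`.
    """
    Z = linkage(np.asarray(embeddings, dtype=float), method=method, metric="euclidean")
    return fcluster(Z, t=max_distance, criterion="distance")
```

Switching `method` to `"single"` or `"average"` gives the alternative linkage criteria compared in Extended Data Fig. 6.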

All code is available as open source under the permissive BSD license at https://github.com/bittremieux/GLEAMS. The code used to analyze the data and to generate the figures presented here is available on GitHub (https://github.com/bittremieux/GLEAMS_notebooks). Permanent archives of the source code and the analysis notebooks are available on Zenodo at https://doi.org/10.5281/zenodo.5794613 and https://doi.org/10.5281/zenodo.5794616, respectively [57,58].

References

1. Tabb, D. L. The SEQUEST family tree. J. Am. Soc. Mass Spectrom. 26, 1814–1819 (2015).

2. Perez-Riverol, Y. et al. The PRIDE database and related tools and resources in 2019: improving support for quantification data. Nucleic Acids Res. 47, D442–D450 (2019).

3. Frank, A. M. et al. Clustering millions of tandem mass spectra. J. Proteome Res. 7, 113–122 (2008).

4. Griss, J., Foster, J. M., Hermjakob, H. & Vizcaíno, J. A. PRIDE Cluster: building a consensus of proteomics data. Nat. Methods 10, 95–96 (2013).

5. Griss, J. et al. Recognizing millions of consistently unidentified spectra across hundreds of shotgun proteomics datasets. Nat. Methods 13, 651–656 (2016).

6. Wang, M. et al. Assembling the community-scale discoverable human proteome. Cell Syst. 7, 412–421.e5 (2018).

7. LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

8. Tran, N. H. et al. De novo peptide sequencing by deep learning. Proc. Natl Acad. Sci. USA 114, 8247–8252 (2017).

9. Tran, N. H. et al. Deep learning enables de novo peptide sequencing from data-independent-acquisition mass spectrometry. Nat. Methods 16, 63–66 (2018).

10. Gessulat, S. et al. Prosit: proteome-wide prediction of peptide tandem mass spectra by deep learning. Nat. Methods 16, 509–518 (2019).

11. Tiwary, S. et al. High-quality MS/MS spectrum prediction for data-dependent and data-independent acquisition data analysis. Nat. Methods 16, 519–525 (2019).

12. Hadsell, R., Chopra, S. & LeCun, Y. Dimensionality reduction by learning an invariant mapping. In Proc. 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (eds Fitzgibbon, A., Taylor, C. J. & LeCun, Y.) 1735–1742 (IEEE, New York, 2006).

13. McInnes, L., Healy, J. & Melville, J. UMAP: uniform manifold approximation and projection for dimension reduction. Preprint at arXiv http://arxiv.org/abs/1802.03426 (2020).

14. Hijazi, M. et al. Reconstructing kinase network topologies from phosphoproteomics data reveals cancer-associated rewiring. Nat. Biotechnol. 38, 493–502 (2020).

15. The, M. & Käll, L. MaRaCluster: a fragment rarity metric for clustering fragment spectra in shotgun proteomics. J. Proteome Res. 15, 713–720 (2016).

16. Bittremieux, W., Laukens, K., Noble, W. S. & Dorrestein, P. C. Large-scale tandem mass spectrum clustering using fast nearest neighbor searching. Rapid Commun. Mass Spectrom. e9153 (2021).

17. Frank, A. M. et al. Spectral archives: extending spectral libraries to analyze both identified and unidentified spectra. Nat. Methods 8, 587–591 (2011).

18. Creasy, D. M. & Cottrell, J. S. Unimod: protein modifications for mass spectrometry. Proteomics 4, 1534–1536 (2004).

19. Wolski, W. E. et al. Analytical model of peptide mass cluster centres with applications. Proteome Sci. 4, 18 (2006).

20. Hofmann, T., Schölkopf, B. & Smola, A. J. Kernel methods in machine learning. Ann. Stat. 36, 1171–1220 (2008).

21. Schreiber, J., Bilmes, J. & Noble, W. S. apricot: submodular selection for data summarization in Python. J. Mach. Learn. Res. 21, 1–6 (2020).

22. Martens, L. et al. mzML—a community standard for mass spectrometry data. Mol. Cell. Proteom. 10, R110.000133 (2011).

23. Kim, S. & Pevzner, P. A. MS-GF+ makes progress towards a universal database search tool for proteomics. Nat. Commun. 5, 5277 (2014).

24. Breuza, L. et al. The UniProtKB guide to the human proteome. Database 2016, bav120 (2016).

25. Zolg, D. P. et al. Building ProteomeTools based on a complete synthetic human proteome. Nat. Methods 14, 259–262 (2017).

26. Huttlin, E. L. et al. The BioPlex network: a systematic exploration of the human interactome. Cell 162, 425–440 (2015).

27. Griss, J. et al. The mzTab data exchange format: communicating mass-spectrometry-based proteomics and metabolomics experimental results to a wider audience. Mol. Cell. Proteom. 13, 2765–2775 (2014).

28. Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. In 3rd International Conference on Learning Representations (ICLR 2015) 1–14 (Computational and Biological Learning Society, 2019).

29. Klambauer, G., Unterthiner, T., Mayr, A. & Hochreiter, S. Self-normalizing neural networks. In Proc. 31st International Conference on Neural Information Processing Systems (eds von Luxburg, U. & Guyon, I.) 972–981 (Curran Associates, 2017).

30. LeCun, Y. A., Bottou, L., Orr, G. B. & Müller, K.-R. Efficient BackProp. In Neural Networks: Tricks of the Trade (eds Montavon, G., Orr, G. B. & Müller, K.-R.) 9–48 (Springer, 2012).

31. Glorot, X. & Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proc. Thirteenth International Conference on Artificial Intelligence and Statistics (eds Teh, Y. W. & Titterington, M.) 249–256 (JMLR Workshop and Conference Proceedings, 2010).

32. Liu, L. et al. On the variance of the adaptive learning rate and beyond. In International Conference on Learning Representations (ICLR, 2020).

33. Jones, A. R. et al. The mzIdentML data standard for mass spectrometry-based proteomics results. Mol. Cell. Proteom. 11, M111.014381 (2012).

34. Fondrie, W. E., Bittremieux, W. & Noble, W. S. ppx: programmatic access to proteomics data repositories. J. Proteome Res. 20, 4621–4624 (2021).

35. Hulstaert, N. et al. ThermoRawFileParser: modular, scalable, and cross-platform RAW file conversion. J. Proteome Res. 19, 537–542 (2020).

36. Perkins, D. N., Pappin, D. J. C., Creasy, D. M. & Cottrell, J. S. Probability-based protein identification by searching sequence databases using mass spectrometry data. Electrophoresis 20, 3551–3567 (1999).

37. Ester, M., Kriegel, H.-P., Sander, J. & Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proc. Second International Conference on Knowledge Discovery and Data Mining (eds Simoudis, E., Han, J. & Fayyad, U.) 226–231 (AAAI Press, 1996).

38. Rosenberg, A. & Hirschberg, J. V-measure: a conditional entropy-based external cluster evaluation measure. In Proc. 2007 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning (ed. Eisner, J.) 410–420 (Association for Computational Linguistics, 2007).

39. Bittremieux, W., Meysman, P., Noble, W. S. & Laukens, K. Fast open modification spectral library searching through approximate nearest neighbor indexing. J. Proteome Res. 17, 3463–3474 (2018).

40. Bittremieux, W., Laukens, K. & Noble, W. S. Extremely fast and accurate open modification spectral library searching of high-resolution mass spectra using feature hashing and graphics processing units. J. Proteome Res. 18, 3792–3799 (2019).

41. Lam, H. et al. Development and validation of a spectral library searching method for peptide identification from MS/MS. Proteomics 7, 655–667 (2007).

42. Deutsch, E. W. et al. A guided tour of the trans-proteomic pipeline. Proteomics 10, 1150–1159 (2010).

43. Lam, H., Deutsch, E. W. & Aebersold, R. Artificial decoy spectral libraries for false discovery rate estimation in spectral library searching in proteomics. J. Proteome Res. 9, 605–610 (2010).

44. Fu, Y. & Qian, X. Transferred subgroup false discovery rate for rare post-translational modifications detected by mass spectrometry. Mol. Cell. Proteom. 13, 1359–1368 (2014).

45. Levitsky, L. I., Klein, J. A., Ivanov, M. V. & Gorshkov, M. V. Pyteomics 4.0: five years of development of a Python proteomics framework. J. Proteome Res. 18, 709–714 (2019).

46. Bittremieux, W. spectrum_utils: a Python package for mass spectrometry data processing and visualization. Anal. Chem. 92, 659–661 (2020).

47. Abadi, M. et al. TensorFlow: a system for large-scale machine learning. In Proc. 12th USENIX Conference on Operating Systems Design and Implementation 265–283 (USENIX Association, 2016).

48. Virtanen, P. et al. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261–272 (2020).

49. Müllner, D. fastcluster: fast hierarchical, agglomerative clustering routines for R and Python. J. Stat. Softw. 53, 1–18 (2013).

50. Harris, C. R. et al. Array programming with NumPy. Nature 585, 357–362 (2020).

51. Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

52. Lam, S. K., Pitrou, A. & Seibert, S. Numba: a LLVM-based Python JIT compiler. In Proc. Second Workshop on the LLVM Compiler Infrastructure in HPC (ed. Finkel, H.) 1–6 (ACM Press, 2015).

53. McKinney, W. Data structures for statistical computing in Python. In Proc. 9th Python in Science Conference (eds van der Walt, S. & Millman, J.) 51–56 (ACM Press, 2010).

54. Kluyver, T. et al. Jupyter Notebooks – a publishing format for reproducible computational workflows. In Positioning and Power in Academic Publishing: Players, Agents and Agendas (eds Schmidt, B. & Loizides, F.) 87–90 (IOS Press, 2016).

55. Hunter, J. D. Matplotlib: a 2D graphics environment. Comput. Sci. Eng. 9, 90–95 (2007).

56. Waskom, M. et al. mwaskom/seaborn: v0.11.2 (August 2021). Zenodo https://doi.org/10.5281/zenodo.592845 (2020).

57. Bittremieux, W. bittremieux/GLEAMS: v0.3. Zenodo https://doi.org/10.5281/zenodo.5794613 (2021).

58. Bittremieux, W. bittremieux/GLEAMS_notebooks: v0.3. Zenodo https://doi.org/10.5281/zenodo.5794616 (2021).

Acknowledgements

This work was supported by National Institutes of Health award R01 GM121818.

Author information

Contributions

W.S.N. conceptualized the work. W.S.N. and J.B. supervised the work. W.B. and D.H.M. developed the software and carried out the analyses. W.B., D.H.M. and W.S.N. wrote the manuscript. All authors reviewed and edited the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Nature Methods thanks Johannes Griss, Sean Hackett and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Primary Handling Editor: Allison Doerr, in collaboration with the Nature Methods team. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

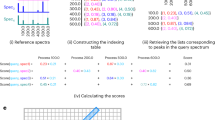

Extended Data Fig. 1 GLEAMS embedder network.

Each instance of the embedder network in the Siamese neural network receives three types of input features, each processed by a separate subnetwork. Precursor features are processed through a fully connected network with two layers of sizes 32 and 5. Binned fragment intensities are processed through five blocks of one-dimensional convolutional and max pooling layers. Reference spectra features are processed through a fully connected network with two layers of sizes 750 and 250. The outputs of the three subnetworks are concatenated and passed to a final fully connected layer of size 32, which yields the 32-dimensional embedding.
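The minimal NumPy forward pass below makes the data flow through this layer layout concrete. Several details are illustrative assumptions: the input sizes, filter count and kernel size, the SELU activation, the linear output layer, the omitted biases and the random weights. GLEAMS itself is implemented in TensorFlow/Keras, and its exact hyperparameters are not given in this caption. The code requires NumPy 1.20 or later for `sliding_window_view`.

```python
import numpy as np

# Hypothetical sizes: the caption gives layer widths but not input dimensions,
# filter counts or kernel sizes.
N_PRECURSOR, N_BINS, N_REF = 3, 256, 500
N_FILTERS, KERNEL, N_BLOCKS = 8, 3, 5

rng = np.random.default_rng(0)


def weights(fan_in, *shape):
    """Random weights scaled by fan-in, standing in for trained parameters."""
    return rng.normal(0.0, np.sqrt(1.0 / fan_in), size=shape)


def selu(x):
    """SELU activation (an assumed choice; biases are omitted throughout)."""
    alpha, scale = 1.6732632423543772, 1.0507009873554805
    return scale * np.where(x > 0, x, alpha * np.expm1(np.minimum(x, 0.0)))


def conv1d_same(x, kernels):
    """1D convolution with 'same' padding. x: (length, in_ch); kernels: (k, in_ch, out_ch)."""
    k = kernels.shape[0]
    xp = np.pad(x, ((k // 2, k - 1 - k // 2), (0, 0)))
    windows = np.lib.stride_tricks.sliding_window_view(xp, k, axis=0)  # (length, in_ch, k)
    return selu(np.einsum("lck,kco->lo", windows, kernels))


def max_pool(x, size=2):
    """Non-overlapping max pooling along the length axis."""
    length = x.shape[0] // size * size
    return x[:length].reshape(-1, size, x.shape[1]).max(axis=1)


CONV_OUT = (N_BINS // 2**N_BLOCKS) * N_FILTERS
CONCAT = 5 + CONV_OUT + 250
PARAMS = {
    "precursor": [weights(N_PRECURSOR, N_PRECURSOR, 32), weights(32, 32, 5)],
    "conv": [weights(KERNEL * c, KERNEL, c, N_FILTERS)
             for c in [1] + [N_FILTERS] * (N_BLOCKS - 1)],
    "reference": [weights(N_REF, N_REF, 750), weights(750, 750, 250)],
    "output": weights(CONCAT, CONCAT, 32),
}


def embed(precursor, fragments, ref_sims):
    """Map one spectrum's three feature types to a 32-dimensional embedding."""
    h_prec = precursor
    for w in PARAMS["precursor"]:          # fully connected: 32 -> 5
        h_prec = selu(h_prec @ w)
    h_frag = fragments[:, None]            # (N_BINS, 1 channel)
    for kernels in PARAMS["conv"]:         # five conv + max-pool blocks
        h_frag = max_pool(conv1d_same(h_frag, kernels))
    h_ref = ref_sims
    for w in PARAMS["reference"]:          # fully connected: 750 -> 250
        h_ref = selu(h_ref @ w)
    concat = np.concatenate([h_prec, h_frag.ravel(), h_ref])
    return concat @ PARAMS["output"]       # final layer of size 32 (linear, assumed)
```

Both instances of a Siamese network share their weights. Embedding the two spectra of a pair therefore amounts to calling `embed` twice with the same `PARAMS`.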

Extended Data Fig. 2 UMAP visualization of embeddings, colored by precursor charge.

UMAP projection of 685,337 embeddings from frequently occurring peptides in 10 million randomly selected identified spectra. Note that the two-dimensional projection may place together peptides that are similar along some dimensions of the 32-dimensional embedding space but that remain distinguishable based on their full embeddings.

Extended Data Fig. 3 False negative rate between positive and negative embedding pairs.

For 10 million randomly selected embedding pairs from the test dataset, the distance threshold of 0.5455 (gray line) corresponds to a 1% false discovery rate; at this threshold, the false negative rate is 1%.
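The sketch below shows how such a threshold can be derived from pair distances. It assumes two definitions that the caption does not spell out. The false discovery rate is taken as the fraction of different-peptide pairs among all pairs at or below the threshold. The false negative rate is taken as the fraction of same-peptide pairs above it. The function name is hypothetical, and tied distances are not treated specially.

```python
import numpy as np


def threshold_at_fdr(dist, same, fdr=0.01):
    """Largest distance threshold whose accepted pairs (distance <= threshold)
    contain at most a fraction `fdr` of different-peptide pairs.

    dist: embedding distances of the pairs; same: True for same-peptide pairs.
    Returns (threshold, false_negative_rate); threshold is None if no cut works.
    """
    dist = np.asarray(dist, dtype=float)
    same = np.asarray(same, dtype=bool)
    order = np.argsort(dist)
    n_neg = np.cumsum(~same[order])                  # negatives accepted so far
    n_accepted = np.arange(1, len(dist) + 1)
    ok = n_neg / n_accepted <= fdr
    if not ok.any():
        return None, 1.0
    threshold = dist[order][np.nonzero(ok)[0].max()]
    fnr = np.mean(dist[same] > threshold)            # same-peptide pairs rejected
    return float(threshold), float(fnr)
```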

Extended Data Fig. 4 ROC curve for GLEAMS performance on unseen phosphorylated spectra.

Receiver operating characteristic (ROC) curve for GLEAMS embeddings corresponding to 7.5 million randomly selected spectrum pairs from an independent phosphoproteomics study. The area under the curve (AUC) corresponds to the probability that a randomly chosen same-peptide spectrum pair has a smaller embedding distance than a randomly chosen different-peptide spectrum pair.
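The probabilistic reading of the AUC can be computed directly from the two sets of distances (the Mann-Whitney formulation), without first tracing the ROC curve. The minimal NumPy sketch below counts ties as one half. The function name is hypothetical.

```python
import numpy as np


def auc_from_distances(pos_dist, neg_dist):
    """Probability that a random same-peptide pair (pos) is closer than a random
    different-peptide pair (neg), with ties counted as 1/2. This equals the ROC
    AUC when a smaller distance is treated as stronger same-peptide evidence."""
    pos = np.asarray(pos_dist, dtype=float)
    neg = np.sort(np.asarray(neg_dist, dtype=float))
    left = np.searchsorted(neg, pos, side="left")    # negatives strictly closer than p
    right = np.searchsorted(neg, pos, side="right")  # negatives at distance <= p
    greater = len(neg) - right                       # negatives farther than p
    ties = right - left
    return float((greater.sum() + 0.5 * ties.sum()) / (len(pos) * len(neg)))
```

Sorting the negatives once keeps the cost at O(n log n), which matters for millions of pairs compared with the O(n_pos × n_neg) all-pairs comparison.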

Extended Data Fig. 5 Clustering result characteristics produced by different tools.

Clustering result characteristics at approximately 1% incorrectly clustered spectra over three random folds of the test dataset. (a) Complementary empirical cumulative distribution of the cluster sizes. (b) Number of datasets from which the spectra in each cluster originate (24 datasets in total).
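Both panel quantities can be computed from per-spectrum cluster labels, as the minimal sketch below shows. It reports, for each size s, the fraction of clusters with at least s spectra. Whether the figure uses "at least" or "more than", and whether singletons count as clusters, are assumptions. The function names are hypothetical.

```python
import numpy as np


def cluster_size_ccdf(labels):
    """Sorted distinct cluster sizes and, for each size s, the fraction of
    clusters containing at least s spectra."""
    _, sizes = np.unique(labels, return_counts=True)
    values = np.unique(sizes)
    frac = np.array([(sizes >= s).mean() for s in values])
    return values, frac


def datasets_per_cluster(labels, dataset_ids):
    """Number of distinct source datasets among the spectra of each cluster."""
    sources = {}
    for label, dataset in zip(labels, dataset_ids):
        sources.setdefault(label, set()).add(dataset)
    return {label: len(datasets) for label, datasets in sources.items()}
```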

Extended Data Fig. 6 GLEAMS performance with different clustering algorithms.

Average clustering performance over three random folds of the test dataset, each containing 28 million MS/MS spectra. The GLEAMS embeddings were clustered using hierarchical clustering with complete, single or average linkage, or using DBSCAN. The performance of alternative spectrum clustering tools (Fig. 1d,e) is shown in gray for reference. (a) Number of clustered spectra versus number of incorrectly clustered spectra for each clustering algorithm. (b) Cluster completeness versus number of incorrectly clustered spectra for each clustering algorithm.
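Cluster completeness is defined in the V-measure paper by Rosenberg and Hirschberg (listed in the references) as 1 − H(K|C)/H(K). Here C are the true classes (peptides) and K the cluster assignments. The NumPy sketch below implements that definition, which should match scikit-learn's `completeness_score`. It scores only spectra with a peptide label. How GLEAMS treats unclustered or unidentified spectra in this metric is not stated here, so those choices are left to the caller.

```python
import numpy as np


def entropy(counts):
    """Shannon entropy (natural log) of a vector of counts."""
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log(p)))


def completeness(peptides, clusters):
    """Completeness = 1 - H(K|C) / H(K), with C the peptide labels and K the
    cluster labels; 1.0 when every peptide's spectra share a single cluster."""
    _, c_idx = np.unique(peptides, return_inverse=True)
    _, k_idx = np.unique(clusters, return_inverse=True)
    table = np.zeros((c_idx.max() + 1, k_idx.max() + 1))
    np.add.at(table, (c_idx, k_idx), 1)             # contingency counts n_ck
    n = table.sum()
    h_k = entropy(table.sum(axis=0))
    if h_k == 0.0:                                  # a single cluster is complete
        return 1.0
    n_c = table.sum(axis=1, keepdims=True)
    nz = table > 0
    # H(K|C) = -sum_{c,k} (n_ck / n) * log(n_ck / n_c)
    h_k_given_c = -np.sum(table[nz] / n * np.log((table / n_c)[nz]))
    return float(1.0 - h_k_given_c / h_k)
```

Completeness rewards keeping each peptide's spectra together. Merging everything into one cluster also scores 1.0, which is why the figure plots it against the number of incorrectly clustered spectra.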

Extended Data Fig. 7 Runtime scalability of spectrum clustering tools.

Scalability of spectrum clustering tools when processing increasingly large data volumes. Three random subsets of the test dataset were combined to form input datasets consisting of 28 million, 56 million and 84 million spectra. Evaluations of falcon and MS-Cluster on the larger datasets were omitted because of excessive runtimes.

Extended Data Fig. 8 UMAP visualization of the selected reference spectra.

The two-dimensional UMAP projection was computed from the pairwise dot-product similarity matrix of all 200,000 spectra randomly selected from the training data.
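Reference spectra are chosen by submodular selection (apricot, per the Code availability section) over a dot-product similarity matrix like the one described in this caption. The sketch below computes normalized dot-product similarities and runs a plain greedy facility-location selection. Using the facility-location objective is an assumption, as is every name here. The code is written from scratch and does not call apricot's API. It also recomputes all gains at each step, which is simple but slower than apricot's optimized selection.

```python
import numpy as np


def dot_product_similarity(binned):
    """Pairwise cosine (normalized dot-product) similarity of binned spectra.
    Intensities are non-negative, so all similarities lie in [0, 1]."""
    binned = np.asarray(binned, dtype=float)
    unit = binned / np.linalg.norm(binned, axis=1, keepdims=True)
    return unit @ unit.T


def facility_location_greedy(sim, k):
    """Greedily pick k items maximizing f(S) = sum_i max_{j in S} sim[i, j],
    i.e. every spectrum should be similar to at least one selected spectrum."""
    best = np.zeros(sim.shape[0])  # current best similarity of each item to S
    selected = []
    for _ in range(k):
        # Marginal gain of adding each candidate j to the selection.
        gains = np.maximum(sim, best[:, None]).sum(axis=0) - best.sum()
        gains[selected] = -np.inf
        j = int(np.argmax(gains))
        selected.append(j)
        best = np.maximum(best, sim[:, j])
    return selected
```

Facility location favours representatives that jointly cover the similarity space. In the toy test, the two picks therefore come from different groups of near-identical spectra.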

Extended Data Fig. 9 Input features ablation test.

Ablation testing during training of the GLEAMS Siamese network shows the benefit of the different input feature types. Performance is measured using the validation loss while training for 20 iterations of 40,000 steps each, with a batch size of 256. The line shows the validation loss smoothed by averaging over five consecutive iterations, and the markers show the individual validation losses at the end of each iteration.
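The training budget and the smoothing described in this caption can be checked with a few lines of NumPy. Twenty iterations of 40,000 steps give 800,000 steps, or 204.8 million batch items at a batch size of 256. The caption does not specify whether the five-iteration average is trailing or centred. The sketch assumes a trailing window that shrinks for the first iterations. The function name is hypothetical.

```python
import numpy as np

ITERATIONS, STEPS_PER_ITERATION, BATCH_SIZE = 20, 40_000, 256
TOTAL_STEPS = ITERATIONS * STEPS_PER_ITERATION     # 800,000 training steps
TOTAL_EXAMPLES = TOTAL_STEPS * BATCH_SIZE          # batch items seen in training


def smooth(losses, window=5):
    """Trailing moving average: each value is averaged with up to window - 1
    preceding values (the first values use the shorter available window)."""
    losses = np.asarray(losses, dtype=float)
    csum = np.concatenate([[0.0], np.cumsum(losses)])
    idx = np.arange(1, len(losses) + 1)
    start = np.maximum(idx - window, 0)
    return (csum[idx] - csum[start]) / (idx - start)
```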

Supplementary information

Supplementary Table 1

GLEAMS learns latent spectrum properties. Correlation of individual embedding dimensions with latent properties of the spectra. Only Spearman correlations with an absolute value above 0.2 are shown.
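A table like this can be produced by correlating the rank of every embedding dimension with the rank of every spectrum property and keeping entries with |ρ| > 0.2. The sketch computes Spearman's ρ as the Pearson correlation of average ranks, using `scipy.stats.rankdata`. Which properties were tested is not listed here, so the inputs are generic columns. The function names are hypothetical.

```python
import numpy as np
from scipy.stats import rankdata


def spearman_matrix(embeddings, properties):
    """Spearman correlation between each embedding dimension (columns of
    `embeddings`) and each spectrum property (columns of `properties`)."""
    ra = rankdata(embeddings, axis=0)
    rb = rankdata(properties, axis=0)
    ra = (ra - ra.mean(axis=0)) / ra.std(axis=0)   # standardized ranks
    rb = (rb - rb.mean(axis=0)) / rb.std(axis=0)
    return ra.T @ rb / len(ra)                     # (n_dims, n_properties)


def strong_correlations(rho, cutoff=0.2):
    """(dimension, property, rho) triples with |rho| above the cutoff."""
    dims, props = np.nonzero(np.abs(rho) > cutoff)
    return [(int(d), int(p), float(rho[d, p])) for d, p in zip(dims, props)]
```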

Supplementary Table 2

Top 500 precursor mass differences from the ANN-SoLo open modification search of GLEAMS cluster centroids.
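In an open modification search, each identification has a precursor mass difference: the observed precursor mass minus the mass of the matched peptide. Frequent differences point to common modifications; for example, about +15.995 Da corresponds to oxidation. The minimal sketch below tallies those differences after rounding to 0.01 Da and returns the most frequent ones. The rounding bin and the function name are assumptions, and ANN-SoLo's own reporting is not reproduced here. Neutral masses are assumed as input. From a precursor m/z and charge z, the neutral mass is (m/z − 1.007276) × z.

```python
from collections import Counter


def top_mass_differences(observed_masses, peptide_masses, n=500, decimals=2):
    """Most frequent precursor mass differences (observed minus identified
    peptide mass, rounded to `decimals`) as (difference, count) pairs."""
    deltas = (round(obs - pep, decimals)
              for obs, pep in zip(observed_masses, peptide_masses))
    return Counter(deltas).most_common(n)
```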

Source data

Source Data Fig. 1

Statistical Source Data.

Source Data Fig. 2

Statistical Source Data.

Source Data Extended Data Fig. 2

Statistical Source Data.

Source Data Extended Data Fig. 3

Statistical Source Data.

Source Data Extended Data Fig. 4

Statistical Source Data.

Source Data Extended Data Fig. 5

Statistical Source Data.

Source Data Extended Data Fig. 6

Statistical Source Data.

Source Data Extended Data Fig. 7

Statistical Source Data.

Source Data Extended Data Fig. 8

Statistical Source Data.

Source Data Extended Data Fig. 9

Statistical Source Data.

About this article

Cite this article

Bittremieux, W., May, D.H., Bilmes, J. et al. A learned embedding for efficient joint analysis of millions of mass spectra. Nat Methods 19, 675–678 (2022). https://doi.org/10.1038/s41592-022-01496-1
