Abstract

Biobanks that collect deep phenotypic and genomic data across many individuals have emerged as a key resource in human genetics. However, phenotypes in biobanks are often missing across many individuals, limiting their utility. We propose AutoComplete, a deep learning-based imputation method to impute or ‘fill-in’ missing phenotypes in population-scale biobank datasets. When applied to collections of phenotypes measured across ~300,000 individuals from the UK Biobank, AutoComplete substantially improved imputation accuracy over existing methods. On three traits with notable amounts of missingness, we show that AutoComplete yields imputed phenotypes that are genetically similar to the originally observed phenotypes while increasing the effective sample size by about twofold on average. Further, genome-wide association analyses on the resulting imputed phenotypes led to a substantial increase in the number of associated loci. Our results demonstrate the utility of deep learning-based phenotype imputation to increase power for genetic discoveries in existing biobank datasets.

Similar content being viewed by others

Main

The past decade has seen the growth of datasets that collect deep phenotypic and genomic data across large numbers of individuals. Although these population-scale biobanks aim to capture a wide range of phenotypes across the population (including demographic information, laboratory tests, imaging, medication usage and diagnostic codes), phenotypes in this setting are frequently missing across many of the individuals for reasons such as cost or difficulty of acquisition (for example, phenotypes derived from imaging scans and other potentially invasive procedures). As a result, our ability to study clinically relevant phenotypes or diseases using biobank data remains limited.

The ubiquity of missing data in the biomedical domain has motivated extensive work into statistical methods for imputing or ‘filling-in’ missing data1,2,3,4,5,6,7 (see Supplementary Note Section S1 for additional related work). Accurate imputation of large numbers of phenotypes and individuals in population-scale biobank data presents several challenges. First, accurate imputation requires faithfully modeling the dependencies across the phenotypes. Such dependencies can arise because of genetic or environmental effects that are shared across phenotypes. Accumulating evidence for the abundance of shared genetic effects (pleiotropy) even among seemingly unrelated phenotypes suggests that the ability to model dependencies across large numbers of collected phenotypes could substantially improve imputation accuracy. Second, patterns of missingness in these datasets tend to be complex (for example, individuals who were not administered a questionnaire will be missing for all answers relevant to the questionnaire). Third, the method needs to be scalable. Thus, methods that can accurately impute phenotypes in the presence of complex patterns of missingness while being scalable are needed.

Here we propose AutoComplete, a deep-learning method based on an autoencoder architecture designed for highly incomplete biobank-scale phenotype data. Our use of deep learning for imputation is motivated by the ability of neural networks to learn potentially complex dependencies among phenotypes, as shown in the application of neural networks to other biological datasets8,9,10,11,12. Earlier works, however, have relied on access to individuals with no missing phenotypes to learn the imputation model13 (such an approach would substantially reduce the data available to learn the model) or have assumed that entries in a dataset are missing completely at random14,15. To be able to impute in the presence of realistic patterns of missingness, we employed copy-masking, a procedure that propagates missingness patterns present in the data6. AutoComplete can impute both binary and continuous phenotypes while scaling with ease to datasets with half a million individuals and millions of entries.

We compared the accuracy of AutoComplete with state-of-the-art missing data imputation methods on two collections of phenotypes derived from the UK Biobank (UKBB)16: a set of 230 cardiometabolic-related phenotypes and a set of 372 phenotypes related to psychiatric disorders, each measured across ~300,000 unrelated white British individuals. AutoComplete improved squared Pearson correlation (\({r}^{2}\)) by 18% on average over the next best method (SoftImpute5) and 45% on average for binary phenotypes. AutoComplete is suitable for large-scale biobanks, demonstrating an empirical run time of one hour to fit and impute either dataset. We explored the utility of our method in increasing the power to detect genetic associations for three phenotypes—direct bilirubin, LifetimeMDD17 and cannabis ever taken—that had a substantial proportion of missing entries (21%, 80% and 67%, respectively) and were imputed with adequate accuracy in simulations, and for which genome-wide association results could be further verified with studies of comparable phenotypes that did not overlap UKBB. We demonstrate that genome-wide association studies (GWAS) on the imputed phenotypes yield associations that have consistent effects both in the originally observed phenotypes in UKBB and in the external studies. Beyond the replication of significantly associated variants, the polygenic architecture of the imputed phenotypes is highly concordant with those of the originally observed phenotypes in UKBB and the phenotypes measured in the external studies (quantified by their genetic correlation). We observed an increase in effective sample size of 1.8-fold on average, with GWAS on the resulting imputed phenotypes leading to the discovery of 57 new loci. Our results illustrate the value of deep learning-based imputation for genomic discovery.

Results

Methods overview

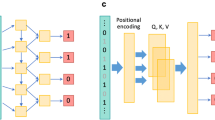

AutoComplete is based on an autoencoder (a type of neural network) that is capable of simultaneously imputing continuous and binary-valued features. Given a vector of features that represent the phenotypes measured on an individual (some of which might be missing), AutoComplete maps the features to a hidden representation using a nonlinear transformation (encoder), which is then mapped back to the original space of features to reconstruct the phenotypes (decoder). In this process, AutoComplete imputes missing phenotypes (Fig. 1).

AutoComplete defines a feed-forward encoder-decoder architecture h trained using copy-masking, a procedure that simulates realistic missingness patterns that the model uses to impute missing values. AutoComplete minimizes the loss function \({\mathscr{L}}\) that is defined over the observed and masked values. AutoComplete supports the imputation of continuous and binary features.

AutoComplete aims to learn the autoencoder by masking features that are originally observed in the data and searching for the parameters of the autoencoder that can reconstruct the masked and observed features with minimal error. To enable AutoComplete to impute in the presence of realistic missingness patterns, we employed copy-masking, a procedure that propagates missingness patterns already present in the data6.

Experiment overview

We evaluated the accuracy of phenotypes imputed by AutoComplete on two collections of UKBB phenotypes: a set of 230 cardiometabolic phenotypes derived from patient records and imaging data, and a larger set of 372 phenotypes related to psychiatric disorders from an on-going study of major depressive disorder (MDD)18. Each collection contains phenotypes measured across ~300,000 unrelated individuals of white British ancestry, where the median missingness rates across phenotypic entries were 47% and 67% (Supplementary Table 1). The phenotypes in each dataset were collected based on general guidance received from experts with an interest in cardiometabolic and psychiatric disorders, respectively. A focus was placed on phenotypes that were highly missing and of clinical relevance such that imputation would provide a clear utility.

We compared the accuracy of AutoComplete with a representative selection of imputation methods that could be applied at scale. We considered K-Nearest Neighbors (KNN), missForest19 and MICE3, among the most widely used imputation methods routinely available in data science packages4. We also considered SoftImpute5 based on its consistently high imputation accuracy in previous works6,20. Finally, we also evaluated two recent deep learning-based imputation methods: a generative-adversarial imputation method, GAIN21, and a deep generative model, HI-VAE20 (see Supplementary Note Section S1 for a more detailed description of related methods).

In determining which methods scale and would therefore be suitable for practical use for the datasets of interest, we assessed the capability of each method to impute the psychiatric disorder dataset in a given amount of time (Supplementary Fig. 1 and Supplementary Note Section S3). Of the considered methods, we determined that missForest and MICE would not be suitable for the scale of our datasets and these were excluded from our large-scale analysis. We also evaluated our method on a small-scale dataset consisting of 86 phenotypes and 50,000 individuals sub-sampled from the cardiometabolic dataset, allowing comparisons with KNN, MissForest19 and MICE3 (Supplementary Note Section S5).

To quantify the accuracy of each method to impute previously unseen individuals, we adopted a 50% train–test split of the two datasets such that all hyperparameter tuning and training were performed on the training set, whereas evaluations of all methods were performed on the test set (Methods).

To evaluate the imputation methods, we simulated missing entries by masking originally observed phenotypes across a range of missingness levels (1–50%). We examined \({r}^{2}\) between imputed and originally observed values as the primary metric, given its compatibility with continuous and binary phenotypes and its interpretation in terms of the effective sample size22. We additionally examined imputation accuracy of binary phenotypes using \({r}^{2}\), area under the precision-recall curve (AUPR) and the area under the receiver operating characteristic curve (AUROC). For each metric, we quantified standard error and confidence intervals using 50 bootstrap replicates. To test for significant differences in the imputation accuracy obtained by each method, we performed a two-tailed significance test using the bootstrap standard errors.

We explored the utility of phenotypes imputed using AutoComplete for improving power in GWAS for three phenotypes: direct bilirubin, LifetimeMDD and cannabis ever taken. To account for imputation uncertainty, we implemented a bootstrapping procedure to produce ten multiple imputations and combined our results across these multiple imputations (Methods). To determine whether using AutoComplete for downstream analysis leads to reliable biological discoveries, we examined the consistency of effects at individual loci found to be significantly associated with the imputed phenotype and the similarity of the polygenic architecture of the imputed phenotype. We performed these analyses both within UKBB (comparing the imputed portion of a phenotype with its originally observed portion) and by comparing the UKBB imputed phenotypes with external GWAS that do not overlap with UKBB.

AutoComplete significantly improves imputation accuracy

AutoComplete obtained the most accurate imputations across all levels of missingness (from 1% to 50%) in the tested datasets (Table 1 and Fig. 2). Imputation accuracy was generally higher in the cardiometabolic dataset relative to the psychiatric disorders dataset, which we hypothesize can be attributed, in part, to the greater proportion of missing entries in the latter (Supplementary Table 1). Further, the imputation accuracy of all methods decreased with increasing levels of missingness. Although SoftImpute (based on a linear model) was most accurate among the existing methods, AutoComplete obtained the highest overall accuracy with an average improvement over SoftImpute of 18% (\(P=1.21\times 1{0}^{-67}\) under two-tailed t-test). Separately for the cardiometabolic and psychiatric disorder datasets, AutoComplete obtained improvements of 11% and 25% (\(P=3.54\times 1{0}^{-26}\) and \(P=2.28\times 1{0}^{-301}\)) respectively, indicating the value of modeling nonlinear relationships among phenotypes (Fig. 2).

a, Average Pearson’s r2 imputation accuracy across phenotypes for a range (1–50%) of simulated missingness (bars denote 95% CIs obtained through 100 bootstraps). b, Comparisons of imputation accuracy per phenotype between AutoComplete (AC) and SoftImpute (SI; next best). Blue dots indicate a significant difference in accuracy (two-sided t-test with\(P < 2.17\times 1{0}^{-4}\) and \(P < 1.34\times 1{0}^{-4}\), adjusted for the number of phenotypes, for cardiometabolic and psychiatric disorder phenotypes). c, Relative improvements in imputation accuracy for binary-valued phenotypes between AutoComplete and each compared method (percentages thresholded at 200% for clarity). Boxes indicate the first, median and third quartiles, and whiskers extend to 1.5× the interquartile range. The psychiatric disorders dataset contained 372 phenotypes and the cardiometabolic dataset contained 230 phenotypes.

AutoComplete significantly improved \({r}^{2}\) for 20 (85) phenotypes over SoftImpute with 1% (20%) missingness in the cardiometabolic dataset (P < 0.05/230 correcting for the number of phenotypes tested). Analogously, AutoComplete significantly improved \({r}^{2}\) for 36 (179) phenotypes with 1% (20%) missingness in the psychiatric disorders dataset (Supplementary Table 2; P < 0.05/372 correcting for the number of phenotypes tested), where the number of phenotypes on which AutoComplete improved accuracy was greater than those where it had lower accuracy in all settings (Supplementary Table 2).

The improvements in imputation accuracy were particularly substantial for binary phenotypes. Here, AutoComplete obtained a relative improvement over the next best method (SoftImpute) of 51% in \({r}^{2}\) on the cardiometabolic data and 39% on the psychiatric disorders data across all simulations (Fig. 2c). We found qualitatively similar trends for other metrics such as AUPR and AUROC (Supplementary Table 2). In comparison with SoftImpute, AutoComplete imputation obtained a relative increase in AUPR of 10% and AUROC of 5% in the cardiometabolic dataset and an increase of 6% and 7% for both metrics in the psychiatric disorders dataset (Table 1).

We performed a separate experiment on a small-scale subset of UKBB in which we compare AutoComplete with missForest and MICE, which could not scale to the full UKBB phenotypes, and found that AutoComplete remains the most accurate method in this setting (Supplementary Fig. 4 and Supplementary Note Section S5).

Finally, we also explored the importance of the copy-masking procedure to the accuracy of AutoComplete. We compared AutoComplete trained with copy-masking and a denoising autoencoder trained with uniformly random masking (Supplementary Note Section S6). For the setting of 1% missingness, the highest average \({r}^{2}\) obtained through uniformly random masking was 0.121 compared with 0.142 with AutoComplete (15% lower with uniformly random masking) with similar trends in tests with increasing missingness (average 16% improvement using copy-masking; Supplementary Fig. 5 and Supplementary Note Section S6). We further assessed the importance of copy-masking in the evaluation step used to measure imputation accuracy. Instead of copying existing missing patterns, we chose values to be missing uniformly at random among all observed values until 1–50% of the observed data was withheld for imputation(Supplementary Fig. 6 and Supplementary Note Section S6). When not propagating the existing missing data patterns for testing, the imputation accuracy (r2) of AutoComplete was inflated to 0.164 on average (0.117 originally), whereas the imputation accuracy of LifetimeMDD grew to 0.757 (0.407 originally) across 1–50% simulations. We therefore conclude that copy-masking is integral to evaluating imputation accuracy and that AutoComplete benefits from mimicking realistic missingness patterns that aid the denoising behavior of the deep-learning model.

Imputed phenotypes lead to replicable genomic discoveries

We explored the utility of phenotypes imputed using AutoComplete for improving power in GWAS. We selected three phenotypes (direct bilirubin, LifetimeMDD and cannabis ever taken) that had a considerable fraction of missing entries (21%, 80% and 67%, respectively) and were imputed with reasonable accuracy in simulations (\({r}^{2}\) = 0.510, 0.507 and 0.310, respectively). To confirm that these phenotypes are accurately imputed in real data, we verified imputation quality measured as the ratio of the variance between the imputed portion of the phenotype and the variance of the observed portion (analogous to the metrics used to measure the quality of genotype imputation23,24) was sufficiently high across the three phenotypes (0.21, 0.52 and 0.28, respectively). The type of each phenotype differed, where direct bilirubin was continuous, cannabis ever taken was ordinal and LifetimeMDD was binary. Both direct bilirubin and cannabis ever taken were estimated as continuous phenotypes by AutoComplete, whereas LifetimeMDD was estimated as a binary phenotype in a continuous probability scale from 0 to 1. For the purpose of concise downstream analysis, all three phenotypes were treated as continuous phenotypes. Importantly, each of these phenotypes had sufficiently large GWAS summary statistics that did not overlap with UKBB. Furthermore, we implemented a bootstrapping procedure to produce ten multiple imputations to account for uncertainties that arise during the imputation process. We then combined genetic analyses across the multiple imputations using Rubin’s rule (Methods).

We estimated the effective gain in sample size resulting from imputation using AutoComplete for each phenotype. We observed an increase in sample size of around 1.8-fold on average: LifetimeMDD had an effective sample size of 193,379 from 67,164 original samples, a 1.87-fold increase, whereas bilirubin had a 0.13-fold increase consistent with the lower missingness rate (Supplementary Table 4 and Methods). We performed GWAS on each of the imputed phenotypes and observed 57 new significantly associated loci in total: 28 each for LifetimeMDD and cannabis ever taken and one new locus for bilirubin, consistent with the missingness rates across these phenotypes (Fig. 3 and Table 2).

Results of GWAS of the observed portions of bilirubin, LifetimeMDD and cannabis ever taken in the UKBB (indicated as Obs), where each phenotype had 21%, 80% and 67% missingness respectively. GWAS was then performed for all individuals in the dataset after using AutoComplete to impute the missing entries for each phenotype (indicated as ImpAll). The significance threshold of \(P < 5\times 1{0}^{-8}\) is indicated by a red line, and SNPs passing the threshold are highlighted in red.

To assess the reliability of phenotypes imputed using AutoComplete for GWAS, we performed GWAS on only the imputed portions of the phenotypes in UKBB (termed Imp). For each of the three phenotypes, we examined the consistency of effects at individual loci found to be significantly associated with the imputed phenotype and the similarity of the polygenic architecture of the imputed phenotype. We performed these analyses by comparing the results obtained from the imputed UKBB phenotypes (Imp) with the observed phenotypes within UKBB (Obs) and to external studies that do not overlap with UKBB (Ext). We analyzed four external cohorts: bilirubin from the Vanderbilt University Medical Center (VUMC)25, major depression from the Psychiatric Genomic Consortium (PGC)26 and from 23andMe (23andMe)17, and lifetime cannabis use from the International Cannabis Consortium (ICC)27.

We performed GWAS on each of the three Imp phenotypes within UKBB to detect 51 significantly associated loci (\(P < 5\times 1{0}^{-8}\)). For each of the significant loci, we first examined the concordance of its effect direction in the phenotypes originally observed in UKBB (Obs). Of the 51 loci, we specifically inspected 38 Obs loci that demonstrated effect sizes significantly distinct from zero (P < 0.05). All 38 loci had a matching direction of effects in the corresponding Obs phenotype (P = 7.3 × 10−12 for a binomial test; Fig. 4a and Table 3). We then performed the same validation procedure given summary statistics of the Ext phenotypes. Of the 51 loci, 43 could be located in the summary statistics of the nonUKBB studies, of which 26 loci had effects significantly distinct from zero (P < 0.05). Of the 26 loci, 25 had matching direction of effects (96%; P = 8.0 × 10−7 for a binomial test; Fig. 4a and Table 3). We observed that bilirubin was the only phenotype in which the direction of effects did not match across all associated loci, with 8 of 9 loci having consistent direction of effects. However, this rate is consistent with the rate of sign consistency that we observe for loci discovered to be associated with originally observed bilirubin (14 of 15; Table 3). We further report the number of matching effects regardless of being significantly different from zero in Supplementary Table 5. We observed qualitatively similar results when testing the P values of the discovered loci in both the Obs and Ext datasets: 38 of 51 loci had P < 0.05 in the Obs dataset, whereas 28 of 43 had P < 0.05 in the Ext dataset (compared with 15 of 33 for loci discovered in the Obs dataset; Table 3).

a, Effect sizes of significantly associated loci based on imputed phenotypes were examined in the association studies of the observed phenotypes in UKBB (Obs or observed) and comparable nonUKBB studies (Ext or external). Genome-wide analysis was performed across 5,776,313 SNPs. For imputed phenotypes, circles indicate the mean effect based on multiple imputation (black bars indicate the 95% CI). Mismatches in effect directions are highlighted in orange. Effects that were not significantly different from zero in Obs or Ext (at P < 0.05, two-sided t-test) are denoted using empty markers. Loci are visualized that were present across compared studies for each phenotype. b, Genetic correlation (\({r}_{G}\)) for bilirubin, LifetimeMDD and cannabis ever taken between UKBB observed and imputed (Obs and Imp, in blue) phenotypes and nonUKBB cohorts (Ext, in orange and purple). Bar heights for genetic correlations that involve imputed phenotypes indicate mean \({r}_{G}\) based on multiple imputation. Black bars indicate the 95% CI.

We measured the similarity in genome-wide SNP effects between the imputed (Imp), observed (Obs) and external (Ext) phenotypes by estimating the genetic correlation (\({r}_{G}\)) of their summary statistics using LD score regression (LDSC)28. The average \({r}_{G}\) between Imp and Obs phenotypes was 1.03 (95% confidence intervals (CIs) overlap 1 in all cases; Fig. 4b). When comparing the Imp and corresponding Ext phenotypes, \({r}_{G}\) was 0.83 on average. The lower \({r}_{G}\) (Imp, Ext) is not unexpected given the differences between UKBB and the external studies. For example, the cannabis ever taken phenotype in UKBB takes distinct values based on the number of times cannabis was used (never used, used 1–2, 3–10, 11–100 and more than 100 times), whereas the cannabis usage phenotype measured in ICC was a binary phenotype on whether or not an individual reported using cannabis in their lifetime. The ICC GWAS is a meta-analysis of 13 studies that report a wide range in the prevalence of lifetime cannabis use, reflecting differences across these studies. To place these \({r}_{G}\) estimates in context, we compared the \({r}_{G}\) of pairs of Imp and Ext phenotypes with the corresponding pairs of Obs and Ext phenotypes to find that the two sets of estimates are not significantly different from each other (\({r}_{G}\) of 0.92 across the pairs of Obs and Ext phenotypes so that a test of the difference in \({r}_{G}\)(Obs, Ext) to \({r}_{G}\)(Imp, Ext) failed to reject the null hypothesis of no difference in \({r}_{G}\); Fig. 4b). Taken together, we conclude that the genetic architecture of the imputed phenotypes is similar to that of the originally observed phenotypes both at individual GWAS loci and across the genome.

Discussion

The ubiquity of missing data in population-scale biobanks necessitates effective methods for imputation. Here, we describe AutoComplete, a deep-learning approach to imputation, which we demonstrate to be accurate and efficient for imputing phenotypes in the UK Biobank.

AutoComplete increased the imputation accuracy of highly missing phenotypes related to cardiometabolic and psychiatric disorders in comparison with state-of-the-art linear methods. This implies that understanding nonlinear dependencies among phenotypes in biobank data is important. Patterns of missingness are often structured for biobank-type data as a consequence of the data-gathering procedures. We also observed that realistic simulations of missing data make a substantial contribution to the accuracy of the model learned for imputation (Supplementary Note Section S6). Our use of copy-masking provides a straightforward and general approach for training deep-learning methods in the presence of complex, structured missingness that can be expanded and adapted to new settings.

For the application of our method to new datasets, it would be important to be able to quantitatively determine the quality of imputations for each phenotype. Given that we were able to validate a set of phenotypes chosen based on the variance ratio of the imputed to the observed phenotype (>0.2), accuracy measured on masked phenotypes (\({r}^{2}\) > 0.2) and sufficient fraction of missing entries (>10%), we recommend these metrics as a starting point for future analyses. To allow users to explore choices that might be most appropriate for their specific analyses, we provide the ability for a user of our software package to view these metrics for each phenotype similarly to how we have examined them for any imputed dataset.

We discuss limitations of our method and directions for future work. First, the basic autoencoder architecture underlying our method can be extended in many ways. Although we determined through cross-validation that the majority of the imputation accuracy is gained architecturally from the first three layers and the support for continuous and binary imputations, a fuller exploration of the architecture of the neural network could lead to further improvements in accuracy. Second, because biobanks collect diverse data modalities, including imaging, time-series and multiomic data, imputing missing data that arises in the context of these diverse data types remains a challenge. The phenotypes that we impute in our current work are a mix of continuous, binary and ordinal types, wherein we treat ordinal phenotypes as continuous. The modularity of the underlying neural network architecture will enable our method to deal with the diversity of phenotypic data types that are being gathered, and we leave this as a promising direction for future work. Finally, the consequence of using a deep-learning method is that the resulting imputation phenotypes are often challenging to interpret. Such interpretations are critical to understanding whether an imputed phenotype is enriched for the genetic component of the original phenotype. Methodology for interpreting deep-learning methods is an area of active research29,30 and could be extended to our setting. Analyzing the signals driving our imputation method when applied to biological datasets could reveal distinct subtypes of a disease and could provide insights into disease etiology. Interpretable components could also give higher credence to the imputed phenotypes.

Methods

Datasets

The UKBB16 makes available genetic data for up to half a million individuals and thousands of traits. We gathered two collections of phenotypes in UKBB.

We collected a group of 230 cardiometabolic phenotypes31,32 consisting of phenotypes and serum biomarkers derived from body imaging and laboratory measurements relevant to cardiometabolic disorders, consumption of prescribed drugs (for example, medication for cholesterol or aspirin), measures of daily physical activity and food consumption, as well as anthropometric and general demographic information. In addition, we collected International Statistical Classification of Diseases and Related Health Problems tenth revision (ICD-10) and ICD-9 codes relating to nonalcoholic fatty liver disease33,34, and ICD-10, ICD-9 and Office of Population Censuses and Surveys Classification of Interventions and Procedures version 4 codes relating to coronary artery disease as described35.

We constructed a second dataset of 372 phenotypes related to psychiatric disorders. This included lifetime and current MDD symptom screens36,37, psychosocial factors, comorbidities, family history of common diseases, a broad range of demographic information, as well as both deep and shallow definitions of MDD derived from symptom questionnaires using clinical diagnostic criteria or self-reports17. Both datasets consist of ~300,000 white British unrelated individuals. Each of these collections included a mix of continuous and binary-valued phenotypes (Supplementary Table 1). Missingness rates for phenotypes across individuals varied from 0% (age, sex) and up to 99% (addiction, self-harm).

For each dataset containing N individuals and P phenotypes, a data matrix of dimension N × P was created including missing values. Approximately 50% of all individuals were reserved for testing (evaluating the accuracy of the methods) and the remainder was used for training and any hyperparameter tuning for all methods (in an 80–20 split). Continuous phenotypes were normalized to have zero mean with unit variance per phenotype. Binary-valued phenotypes were processed specific to the capabilities of each method; for methods that did not handle binary data, labels were converted from 0,1 to −0.5,0.5 and treated as continuous values. To prevent information leakage, statistics of the training split were used to normalize the test split.

AutoComplete

AutoComplete is based on a type of neural network that is capable of simultaneously imputing continuous and binary-valued phenotypes. For each individual, AutoComplete considers a fixed list of phenotypes including missing values and reconstructs all phenotypes from a latent representation using an autoencoder architecture. Of the input phenotypes, missing entries were masked (set to zero), then all observed phenotype values were transformed to a hidden representation in the encoding stage. The decoding stage transforms the hidden representation back to the input space such that all phenotypes were reconstructed. To support heterogeneous data types, imputed entries corresponding to binary phenotypes were obtained as the output of a sigmoid function so that these entries lie in the range [0,1].

Let \(\widetilde{X}\) denote a N × P phenotype matrix such that \({\widetilde{X}}_{{ij}}\) is the value of the jth phenotype measured on the ith individual, \(M\) denotes a \(N\times P\) indicator matrix (termed the Mask matrix) where \({M}_{{ij}}=1\) if the jth phenotype is observed for the ith individual and \({M}_{{ij}}=0\) otherwise. For simplicity, continuous and binary phenotypes were organized in \(\widetilde{X}\) such that the first \(C\) phenotypes were continuous.

\(h\) denotes the nonlinear function corresponding to the autoencoder. The function \(h\) imputes both missing phenotype values and reconstructs observed ones. During imputation, only the imputed missing values are used. Using the LeakyReLU function \(\varPhi\) as a nonlinearity in the hidden layer and the sigmoid function \(s\) that was applied to binary-valued imputations, we define for the case of one hidden layer the following feed-forward function \(h\) (additional hidden layers could be defined analogously):

where

\({\widetilde{{\textbf{X}}}}_{i,:}\) denotes row \(i\) of \({\widetilde{{{X}}}}\) (equivalently the vector of phenotypes associated with individual i). For each layer, the learnable weight parameter \(W\) is a \(D\times P\) matrix where D is the dimension of the hidden representation, whereas the bias vector \({\textbf{b}}\) is of length D.

Given function h, the final imputed matrix \(\hat{X}\) is constructed from \(\widetilde{{{X}}}\,\) as follows:

Here · denotes entrywise product.

In training, we promoted imputation using \(h\) such that both truly observed and masked phenotype values were subject equally to a reconstruction loss. Observed values were withheld based on existing missingness patterns, which were randomly drawn from the dataset and then applied to other individuals—a process we refer to as copy-masking. To do this, a binary mask vector \(\widetilde{{\textbf{m}}}\) is drawn from the rows of the mask matrix \(M\) and was applied to the input of \(h\) such that for individual i, the jth phenotype would be masked when \({\widetilde{{{m}}}}_{j}=0\) or unmodified when \({\widetilde{{{m}}}}_{j}=1\). We controlled the prevalence of masking in training by the parameter \(\rho\), which was the probability one individual would receive a copy-mask. The masking process of AutoComplete is illustrated in Fig. 1.

A joint loss function was defined over observed and masked values such that mean square error and cross entropy loss were applied to continuous and binary phenotypes respectively. For simplicity the two types of phenotypes were partitioned by index C. The joint loss function was applied over all values that were originally observed:

The parameters \(\varTheta \equiv \{{W}^{\,(1)},{{\textbf{b}}}^{(1)},{W}^{\,(2)},{{\textbf{b}}}^{(2)}\}\) of \(h\) were optimized with respect to the objective \(L\). Stochastic Gradient Descent38 was used to fit the neural net, where the initial learning rate, momentum and mini-batch size were also determined on a validation split of each dataset. The weights and biases of the network were initialized using the Kaiming Uniform distribution, and the slope parameter of LeakyReLU was initialized as \({l}_{\phi }=0.01\). Training proceeded given a maximum number of allowed epochs, up to 500, whereas the network weights were checkpointed based on a validation split which was randomly sampled from the training set to avoid overfitting. After training, the last checkpointed weights that attained the best validation loss were loaded back to the model for all imputation and downstream analysis. In Supplementary Fig. 2, we visualize the loss history recorded while fitting on the UKBB datasets. A single RTX8000 GPU was used to accelerate the fitting process of AutoComplete.

Copy-masking

We implemented copy-masking, a simulation procedure to induce realistic patterns of missingness on observed data. This procedure was first used to simulate artificial missing data in the training and test splits of the datasets in the range of 1–50% for the purpose of assessing accuracy with structured missingness. For AutoComplete, we applied the same masking procedure as augmentations during training on top of the missing values already present with probability \(\rho\) for a given individual. This approach strives to maintain the realistic missingness patterns in datasets while introducing simulated missing values. By contrast, uniform randomly withholding observed values could distort the distribution of the features; for example, when two features have correlated missingness. To illustrate the impact of copy-masking for imputation, we describe in Supplementary Note Section S6 the effect of using uniform masking for imputation performance in place of copy-masking and observe that no amount of uniform random masking alone attains the accuracy obtained with copy-masking (Supplementary Fig. 5).

Hyperparameter tuning

For our simulation results, all methods were tested after tuning their hyperparameters on a validation dataset. For AutoComplete, HI-VAE and GAIN, we used the same predetermined portion (20%) of the samples not part of the test set as a validation set on which we evaluated hyperparameters after training on the remaining portion. SoftImpute was tuned using a k-fold (k = 5) cross-validation. For the AutoComplete final imputation results, we carried over the same hyperparameters which were found to be optimal in simulations.

In summary, the final set of notable hyperparameters chosen for AutoComplete were learning_rate = 0.1, copy_mask = 80%, batch_size = 2,048 and max_epochs = 500 for the psychiatric disorders dataset; and copy_mask = 30% for the cardiometabolic dataset. The copy-mask percentage was the main contributor to optimal accuracy, and other hyperparameters such as the momentum for Stochastic Gradient Descent optimization, learning rate decay of the scheduler and Leaky ReLU parameters were left fixed. For HI-VAE, the final set of hyperparameters chosen were y = 5, z = 16, s = 1, batch_size = 4,096 and max_epochs = 100. For GAIN, the final set of hyperparameters chosen were hint = 0.9, alpha = 10, batch_size = 4,096 and max_epochs = 2,000. To tune SoftImpute, we followed a cross-validation procedure as used previously39, where we chose a nuclear norm (Lambda) value of 108. Because of the difficulty in KNN and missForest scaling to the size of the cardiometabolic and psychiatric disorders dataset, we did not perform hyperparameter tuning for these methods (which would require repeated fits and evaluations). Reasonable values for hyperparameters were chosen instead. For KNN, the number of neighbors K was set to 10. For missForest, the number of trees per forest was set to 10 and up to 10 epochs were run. We did not alter hyperparameters that were not modifiable given each method’s software package. Supplementary Note Section S2 describes details on the specific hyperparameters that were tuned for each method.

Details of GWAS analysis

We used imputed genotypes available from the UKBB for the individuals that were included in the phenotype imputation. We performed stringent filtering on the imputed variants, removing all insertions and deletions and multiallelic SNPs: we hard-called genotypes from imputed dosages at 9,720,420 biallelic SNPs with imputation INFO score >0.9, MAF >0.1% and P value for violation of Hardy–Weinberg equilibrium \(> {10}^{-6}\), in individuals with a genotype probability threshold of 0.9 (individuals with genotype probabilities below 0.9 would be assigned a missing genotype). Of these, 5,776,313 SNPs are common (minor allele frequencies (MAF) >5%). We consistently use these SNPs for all analyses in this study.

We used 20 principal components (PCs) computed with FlashPCA40 on 337,126 white British individuals in UKBB and genotyping arrays as covariates for all GWAS. We performed principal component analysis on directly genotyped SNPs from samples in UKBB and used PCs as covariates in all our analyses to control for population structure. From the array genotype data, we first removed all samples that did not pass quality control, leaving 337,126 white British, unrelated samples. We then removed SNPs not included in the phasing and imputation and retained those with MAF ≥0.1%, and P value for violation of Hardy–Weinberg equilibrium \(> {10}^{-6}\), leaving 593,300 SNPs. We then removed 20,567 SNPs that are in known structural variants and the major histocompatibility complex, as recommended by UKBB16, leaving 572,733 SNPs. Of these, 334,702 are common (MAF >5%), and from these common SNPs we further filtered based on missingness <0.002 and pairwise LD \({r}^{2} < 0.1\) with SNPs in a sliding window of 1,000 SNPs to obtain 68,619 LD-pruned SNPs for computing PCs using FlashPCA. We obtained 20 PCs, their eigenvalues, loadings and variance explained, and consistently use these PCs as covariates for all our genetic analyses.

The number of loci were counted from the GWAS results through a chromosome-wide clumping procedure. The top significantly detected SNP from one chromosome was tallied as a hit, and then all significant hits within 1 Mb from the SNP were ignored. The procedure was repeated for any remaining significant detection in the chromosome, and then repeated within all chromosomes.

GWAS on AutoComplete-imputed phenotypes

For the imputation of phenotypes for which we performed GWAS, AutoComplete was allowed to fit all available individuals to impute missing entries. For binary phenotypes, phenotypes were imputed in a continuous range of 0–1 reflective of confidence in the prediction. When fitting all individuals, optimal hyperparameters were carried over from the tuning result of 1% missing data simulation. Similar to the simulation phase, during the final imputation procedure a portion of all samples were reserved as a validation set (20% by default), which was used to monitor for overfitting and perform weight saving. Therefore, all individuals present in the dataset were considered for the final imputation, and the sample size for downstream analyses was the total number of individuals in each dataset.

GWAS on originally observed UKBB phenotypes were performed with imputed genotype data at the 5,776,313 SNPs (MAF >5%, INFO score >0.9) using logistic regression or linear regression based on the data type of the phenotype (PLINK v.2)41. For all GWAS involving imputed phenotypes, linear regression was performed. We tally the number of significantly associated loci using the combination of observed and imputed individuals (all available individuals) and visualize their corresponding quantile-quantile plots in Supplementary Fig. 3.

External GWAS datasets

We compared the GWAS on AutoComplete-imputed phenotypes with four GWAS results on external datasets. Direct bilirubin levels (field 30660) were measured for 226,876 unrelated white British individuals in the UKBB (58,531 missing). Imputed direct bilirubin was compared with measurements of bilirubin levels on 66,732 individuals from the Vanderbilt University Medical Center (VUMC) EHR system25. Diagnosis of LifetimeMDD17 for 67,165 individuals (269,963 missing) in the UKBB was validated against a comprehensive study of MDD across of 124,065 individuals by the PGC (excluding UKBB and 23andMe)26 and a study of 307,354 individuals carried out using data from 23andMe17. Finally, comparisons were made between the cannabis ever taken status in the UKBB (field 20453) for 110,189 individuals (226,939 missing) and a study of lifetime cannabis use across 32,330 individuals of European ancestry by the ICC27.

Accounting for imputation uncertainty in downstream genomic analysis

We implemented a procedure involving multiple imputations through bootstrap resampling to account for uncertainty arising from imputation. This approach was applied to account for imputation uncertainty in downstream analyses such as when testing for genetic associations and measuring genetic correlations.

For a given dataset, we repeated the imputation procedure ten times using AutoComplete, which was fitted from scratch to reflect variations in imputation. Although the fitting procedure and hyperparameters were kept the same, the seed of the random generation was altered such that the weights would be initialized differently, mini batches would be formed in a differently shuffled order and the sequence of individuals randomly selected to receive copy-masking would change. In addition, we introduced bootstrapping to the fitting process such that the model was fit on a bootstrapped dataset in which all individuals were sampled with replacement, while the fitted model was used to impute the original dataset. This bootstrapping procedure accounts for the variation in the imputation model due to variation in the training samples (reflected in differences in the bootstrap samples), missingness patterns encountered (since copy-masking is applied independently in each bootstrapped sample), and to dependence on random parameter initialization.

We applied Rubin’s rule3 to utilize the multiple imputed datasets to account for imputation uncertainty in a downstream statistic. In the context of GWAS, an association study was performed for each imputation such that multiple effect size estimates and their standard errors were estimated per SNP. The significance of each SNP was determined by combining the point estimates and standard errors. Tallies of significantly associated loci in our results involving imputed phenotypes were based on this procedure. For genetic correlation analyses, the \({r}_{G}\) was measured between a nonimputation-based GWAS (UKBB or nonUKBB) and multiple imputation-based GWAS, and their statistics were combined while accounting for imputation uncertainty. Empirical observations on the change in the statistics due to imputation are further described in Supplementary Note Section S4.

Additional analysis of imputed phenotypes

The effective sample size was calculated as a function of imputation accuracy for a given phenotype from simulations (1% missingness) and the number of missing values imputed, such that \({N}_{{{\textrm{Effective}}}}={N}_{{{\textrm{Observed}}}}+{r}_{{{\textrm{AutoComplete}}}}^{2}\times {{N}}_{{{\textrm{Imputed}}}}\) for a given phenotype.

We examined genetic correlations (\({r}_{G}\)) between a subset of phenotypes within the psychiatric disorder dataset collected within the UK Biobank and related phenotypes collected from cohorts outside the UK Biobank. The three phenotypes examined based on the UK Biobank were direct bilirubin, LifetimeMDD17 and status of having ever taken cannabis. In the context of these phenotypes, we gathered GWAS summary statistics from external studies that examined bilirubin measurements25, MDD17,26 and lifetime cannabis use27. We used LDSC28 to estimate \({r}_{G}\) between each pairing of phenotypes using LD Scores estimated from the 1,000 Genomes white European population42,43.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

The genotype and phenotype data are available by application from the UKBB, https://www.ukbiobank.ac.uk. The LD Scores from the 1000 Genomes project are available from https://alkesgroup.broadinstitute.org/LDSCORE/. Further data are available as follows: Bilirubin GWAS25, http://ftp.ebi.ac.uk/pub/databases/gwas/summary_statistics/GCST90012001-GCST90013000/GCST90012749/; MDD GWAS by PGC (excluding UKBB and 23andMe)26, https://figshare.com/articles/dataset/mdd2018/14672085; MDD GWAS of 23andMe cohort17, https://figshare.com/s/b61e44d5142cc0690772; Lifetime cannabis use GWAS27, https://www.ru.nl/bsi/research/group-pages/substance-use-addiction-food-saf/vm-saf/genetics/international-cannabis-consortium-icc/. The following GWAS of phenotypes after imputing all missing entries are available from the GWAS Catalog with the accession codes: bilirubin, GCST90277451; cannabis ever taken, GCST90277452; and LifetimeMDD, GCST90277450.

Code availability

The software can be accessed as follows: AutoComplete, https://github.com/sriramlab/AutoComplete (https://doi.org/10.5281/zenodo.8243106); Plink 2.0, https://www.cog-genomics.org/plink/2.0/; LDSC, https://github.com/bulik/ldsc; HI-VAE, https://github.com/probabilistic-learning/HI-VAE; GAIN, https://github.com/jsyoon0823/GAIN; KNN, https://scikit-learn.org/stable/modules/generated/sklearn.impute.KNNImputer.html; MissForest, https://cran.r-project.org/web/packages/missForest/index.html; MICE, https://github.com/AnotherSamWilson/miceforest.

References

Greenland, S. & Finkle, W. D. A critical look at methods for handling missing covariates in epidemiologic regression analyses. Am. J. Epidemiol. 142, 1255–1264 (1995).

Rubin, D. B. Multiple Imputation for Nonresponse in Surveys (Wiley, 2004).

van Buuren, S. Flexible Imputation of Missing Data 2nd edn (CRC Press, 2018).

Troyanskaya, O. et al. Missing value estimation methods for DNA microarrays. Bioinformatics 17, 520–525 (2001).

Hastie, T., Mazumder, R., Lee, J. D. & Zadeh, R. Matrix completion and low-rank SVD via fast alternating least squares. J. Mach. Learn. Res. 16, 3367–3402 (2015).

Dahl, A. et al. A multiple-phenotype imputation method for genetic studies. Nat. Genet. 48, 466–472 (2016).

Hormozdiari, F. et al. Imputing phenotypes for genome-wide association studies. Am. J. Hum. Genet. 99, 89–103 (2016).

Helmstaedter, M. et al. Connectomic reconstruction of the inner plexiform layer in the mouse retina. Nature 500, 168–174 (2013).

Leung, M. K. K., Xiong, H. Y., Lee, L. J. & Frey, B. J. Deep learning of the tissue-regulated splicing code. Bioinformatics 30, i121–i129 (2014).

Xiong, H. Y. et al. The human splicing code reveals new insights into the genetic determinants of disease. Science 347, 1254806 (2015).

Alipanahi, B., Delong, A., Weirauch, M. T. & Frey, B. J. Predicting the sequence specificities of DNA- and RNA-binding proteins by deep learning. Nat. Biotechnol. 33, 831–838 (2015).

Ma, J., Sheridan, R. P., Liaw, A., Dahl, G. E. & Svetnik, V. Deep neural nets as a method for quantitative structure–activity relationships. J. Chem. Inf. Model. 55, 263–274 (2015).

Arisdakessian, C., Poirion, O., Yunits, B., Zhu, X. & Garmire, L. X. DeepImpute: an accurate, fast, and scalable deep neural network method to impute single-cell RNA-seq data. Genome Biol. 20, 211 (2019).

Phung, S., Kumar, A. & Kim, J. A deep learning technique for imputing missing healthcare data. Ann. Int. Conf. IEEE Eng. Med. Biol. Soc. 2019, 6513–6516 (2019).

Beaulieu-Jones, B. K. & Moore, J. H. Missing data imputation in the electronic health record using deeply learned autoencoders. Pac. Symp. Biocomput. 22, 207–218 (2017).

Bycroft, C. et al. The UK Biobank resource with deep phenotyping and genomic data. Nature 562, 203–209 (2018).

Cai, N. et al. Minimal phenotyping yields genome-wide association signals of low specificity for major depression. Nat. Genet. 52, 437–447 (2020).

Dahl, A. et al. Phenotype integration improves power and preserves specificity in biobank-based genetic studies of major depressive disorder. Nat. Genet. https://doi.org/10.1038/s41588-023-01559-9 (2023).

Stekhoven, D. J. & Bühlmann, P. MissForest—non-parametric missing value imputation for mixed-type data. Bioinformatics 28, 112–118 (2011).

Nazábal, A., Olmos, P. M., Ghahramani, Z. & Valera, I. Handling incomplete heterogeneous data using VAEs. Pattern Recognit. 107, 107501 (2020).

Yoon, J., Jordon, J. & van der Schaar, M. GAIN: missing data imputation using generative adversarial nets. Proc. Mach. Learn. Res. 80, 5689–5698 (2018).

Pritchard, J. K. & Przeworski, M. Linkage disequilibrium in humans: models and data. Am. J. Hum. Genet. 69, 1–14 (2001).

Zeggini, E. et al. Meta-analysis of genome-wide association data and large-scale replication identifies additional susceptibility loci for type 2 diabetes. Nat. Genet. 40, 638–645 (2008).

Marchini, J., Howie, B., Myers, S., McVean, G. & Donnelly, P. A new multipoint method for genome-wide association studies by imputation of genotypes. Nat. Genet. 39, 906–913 (2007).

Dennis, J. K. et al. Clinical laboratory test-wide association scan of polygenic scores identifies biomarkers of complex disease. Genome Med. 13, 6 (2021).

Wray, N. R. et al. Genome-wide association analyses identify 44 risk variants and refine the genetic architecture of major depression. Nat. Genet. 50, 668–681 (2018).

Stringer, S. et al. Genome-wide association study of lifetime cannabis use based on a large meta-analytic sample of 32330 subjects from the International Cannabis Consortium. Transl. Psychiatry 6, e769 (2016).

Bulik-Sullivan, B. K. et al. LD score regression distinguishes confounding from polygenicity in genome-wide association studies. Nat. Genet. 47, 291–295 (2015).

Sundararajan, M., Taly, A. & Yan, Q. Axiomatic attribution for deep networks. ICML’17: Proc. 34th Int. Conf. Mach. Learn. 70, 3319–3328 (2017).

Lundberg, S. M. & Lee, S. I. A unified approach to interpreting model predictions. In NIPS’17: Proc. 31st International Conference on Neural Information Processing Systems, 4768–4777 (Curran Associates Inc., 2017).

Littlejohns, T. J. et al. The UK Biobank imaging enhancement of 100,000 participants: rationale, data collection, management and future directions. Nat. Commun. 11, 2624 (2020).

Wilman, H. R. et al. Characterisation of liver fat in the UK Biobank cohort. PLoS ONE 12, e0172921 (2017).

Williams, V. F., Taubman, S. B. & Stahlman, S. Non-alcoholic fatty liver disease (NAFLD), active component, U.S. Armed Forces, 2000–2017. MSMR 26, 2–11 (2019).

Miao, Z. et al. Identification of 90 NAFLD GWAS loci and establishment of NAFLD PRS and causal role of NAFLD in coronary artery disease. HGG Adv. 3, 100056 (2021).

Khera, A. V. et al. Genome-wide polygenic scores for common diseases identify individuals with risk equivalent to monogenic mutations. Nat. Genet. 50, 1219–1224 (2018).

Gigantesco, A. & Morosini, P. Development, reliability and factor analysis of a self-administered questionnaire which originates from the World Health Organization’s Composite International Diagnostic Interview—Short Form (CIDI-SF) for assessing mental disorders. Clin. Pract. Epidemiol. Ment. Health 4, 8 (2008).

Kroenke, K. & Spitzer, R. L. The PHQ-9: a new depression diagnostic and severity measure. Psychiatr. Ann. 32, 509–515 (2002).

Zhou, P. et al. Towards theoretically understanding why SGD generalizes better than ADAM in deep learning. In NIPS’20: Proc. 34th International Conference on Neural Information Processing Systems, 21285–21296 (Curran Associates Inc., 2020).

Mongia, A., Sengupta, D. & Majumdar, A. McImpute: Matrix completion based imputation for single cell RNA-seq data. Front. Genet. 10, 9 (2019).

Abraham, G., Qiu, Y. & Inouye, M. FlashPCA2: principal component analysis of Biobank-scale genotype datasets. Bioinformatics 33, 2776–2778 (2017).

Chang, C. C. et al. Second-generation PLINK: rising to the challenge of larger and richer datasets. GigaScience 4, 7 (2015).

Auton, A. et al. A global reference for human genetic variation. Nature 526, 68–74 (2015).

Zheng, J. et al. LD Hub: a centralized database and web interface to perform LD score regression that maximizes the potential of summary level GWAS data for SNP heritability and genetic correlation analysis. Bioinformatics 33, 272–279 (2016).

Acknowledgements

This research was conducted using the UK Biobank Resource under applications 33127 and 33297. We thank the participants of UK Biobank for making this work possible. This work was funded by National Institutes of Health grant nos R01HG010505 and R01DK132775 (P.P., M.A.), R35GM125055 (S.S.), and National Science Foundation grant nos III-1705121, CAREER-1943497 (S.S., U.A., A.P.), and an award from the UCLA-AWS ScienceHub (U.A., S.S.). This work was also funded by Lundbeckfonden Fellowship R335-2019-2318 (A.J.S.).

Author information

Authors and Affiliations

Contributions

U.A. contributed to the initial ideation of the method, carried out the experiments and wrote the code. A.P. contributed to the initial ideation of the method, organized an initial version of the cardiometabolic dataset, and helped carry out GWAS. M.A. and P.P. organized the final version of the cardiometabolic dataset with additional features and helped verify its imputation quality. N.C. and A.D. contributed to the initial ideation of the method, organized the psychiatric disorders dataset and helped verify the imputation quality of its phenotypes. L.H. and N.C. helped carry out the first round of GWAS using imputed phenotypes. S.B., A.J.S., K.K., N.Z. and J.F. helped with interpreting the imputation results and designing the replication experiments. S.S. secured funding, contributed to the initial ideation of the method, the design of all experiments performed with the method, and the writing and revision process of the paper. All authors contributed to the initial drafting and follow-up revisions of the paper.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Genetics thanks Dokyoon Kim and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Note, Figs. 1–6 and Tables 1–5.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

An, U., Pazokitoroudi, A., Alvarez, M. et al. Deep learning-based phenotype imputation on population-scale biobank data increases genetic discoveries. Nat Genet 55, 2269–2276 (2023). https://doi.org/10.1038/s41588-023-01558-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1038/s41588-023-01558-w

This article is cited by

-

Personalized mood prediction from patterns of behavior collected with smartphones

npj Digital Medicine (2024)

-

Phenotype integration improves power and preserves specificity in biobank-based genetic studies of major depressive disorder

Nature Genetics (2023)