Abstract

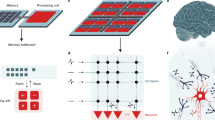

There are two general approaches to developing artificial general intelligence (AGI)1: computer-science-oriented and neuroscience-oriented. Because of the fundamental differences in their formulations and coding schemes, these two approaches rely on distinct and incompatible platforms2,3,4,5,6,7,8, retarding the development of AGI. A general platform that could support the prevailing computer-science-based artificial neural networks as well as neuroscience-inspired models and algorithms is highly desirable. Here we present the Tianjic chip, which integrates the two approaches to provide a hybrid, synergistic platform. The Tianjic chip adopts a many-core architecture, reconfigurable building blocks and a streamlined dataflow with hybrid coding schemes, and can not only accommodate computer-science-based machine-learning algorithms, but also easily implement brain-inspired circuits and several coding schemes. Using just one chip, we demonstrate the simultaneous processing of versatile algorithms and models in an unmanned bicycle system, realizing real-time object detection, tracking, voice control, obstacle avoidance and balance control. Our study is expected to stimulate AGI development by paving the way to more generalized hardware platforms.

Data availability

The datasets that we used for benchmarks are publicly available, as described in the text and the relevant references38,41,42,44,45,46. The training methods are provided in the relevant references36,37,47,54. The experimental setups for simulations and measurements are detailed in the text. Other data that support the findings of this study are available from the corresponding author on reasonable request.

Code availability

The codes used for the software tool chain and the bicycle demonstration are available from the corresponding author on reasonable request.

References

Goertzel, B. Artificial general intelligence: concept, state of the art, and future prospects. J. Artif. Gen. Intell. 5, 1–48 (2014).

Benjamin, B. V. et al. Neurogrid: a mixed-analog-digital multichip system for large-scale neural simulations. Proc. IEEE 102, 699–716 (2014).

Merolla, P. A. et al. A million spiking-neuron integrated circuit with a scalable communication network and interface. Science 345, 668–673 (2014).

Furber, S. B. et al. The SpiNNaker project. Proc. IEEE 102, 652–665 (2014).

Schemmel, J. et al. A wafer-scale neuromorphic hardware system for large-scale neural modeling. In Proc. 2010 IEEE Int. Symposium on Circuits and Systems 1947–1950 (IEEE, 2010).

Davies, M. et al. Loihi: a neuromorphic manycore processor with on-chip learning. IEEE Micro 38, 82–99 (2018).

Chen, Y.-H. et al. Eyeriss: an energy-efficient reconfigurable accelerator for deep convolutional neural networks. IEEE J. Solid-State Circuits 52, 127–138 (2017).

Jouppi, N. P. et al. In-datacenter performance analysis of a tensor processing unit. In 2017 ACM/IEEE 44th Annual Int. Symposium on Computer Architecture 1–12 (IEEE, 2017).

Markram, H. The blue brain project. Nat. Rev. Neurosci. 7, 153–160 (2006).

Izhikevich, E. M. Simple model of spiking neurons. IEEE Trans. Neural Netw. 14, 1569–1572 (2003).

Eliasmith, C. et al. A large-scale model of the functioning brain. Science 338, 1202–1205 (2012).

Song, S., Miller, K. D. & Abbott, L. F. Competitive Hebbian learning through spike-timing-dependent synaptic plasticity. Nat. Neurosci. 3, 919–926 (2000).

Gusfield, D. Algorithms on Strings, Trees and Sequences: Computer Science and Computational Biology (Cambridge Univ. Press, 1997).

Qiu, G. Modelling the visual cortex using artificial neural networks for visual image reconstruction. In Fourth Int. Conference on Artificial Neural Networks 127–132 (Institution of Engineering and Technology, 1995).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Russell, S. J. & Norvig, P. Artificial Intelligence: A Modern Approach (Pearson Education, 2016).

He, K. et al. Deep residual learning for image recognition. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 770–778 (IEEE, 2016).

Hinton, G. et al. Deep neural networks for acoustic modeling in speech recognition. IEEE Signal Process. Mag. 29, 82–97 (2012).

Young, T. et al. Recent trends in deep learning based natural language processing. IEEE Comput. Intell. Mag. 13, 55–75 (2018).

Silver, D. et al. Mastering the game of Go with deep neural networks and tree search. Nature 529, 484–489 (2016).

Lake, B. M. et al. Building machines that learn and think like people. Behav. Brain Sci. 40, e253 (2017).

Hassabis, D. et al. Neuroscience-inspired artificial intelligence. Neuron 95, 245–258 (2017).

Marblestone, A. H., Wayne, G. & Kording, K. P. Toward an integration of deep learning and neuroscience. Front. Comput. Neurosci. 10, 94 (2016).

Lillicrap, T. P. et al. Random synaptic feedback weights support error backpropagation for deep learning. Nat. Commun. 7, 13276 (2016).

Roelfsema, P. R. & Holtmaat, A. Control of synaptic plasticity in deep cortical networks. Nat. Rev. Neurosci. 19, 166–180 (2018).

Ullman, S. Using neuroscience to develop artificial intelligence. Science 363, 692–693 (2019).

Xu, K. et al. Show, attend and tell: neural image caption generation with visual attention. In Int. Conference on Machine Learning (eds Bach, F. & Blei, D.) 2048–2057 (International Machine Learning Society, 2015).

Zhang, B., Shi, L. & Song, S. in Brain-Inspired Robotics: The Intersection of Robotics and Neuroscience (eds Sanders, S. & Oberst, J.) 4–9 (Science/AAAS, 2016).

Sabour, S., Frosst, N. & Hinton, G. E. Dynamic routing between capsules. Adv. Neural Inf. Processing Syst. 30, 3856–3866 (2017).

Mi, Y. et al. Spike frequency adaptation implements anticipative tracking in continuous attractor neural networks. Adv. Neural Inf. Processing Syst. 27, 505–513 (2014).

Herrmann, M., Hertz, J. & Prügel-Bennett, A. Analysis of synfire chains. Network 6, 403–414 (1995).

London, M. & Häusser, M. Dendritic computation. Annu. Rev. Neurosci. 28, 503–532 (2005).

Imam, N. & Manohar, R. Address-event communication using token-ring mutual exclusion. In 2011 17th IEEE Int. Symposium on Asynchronous Circuits and Systems 99–108 (IEEE, 2011).

Deng, L. et al. GXNOR-Net: training deep neural networks with ternary weights and activations without full-precision memory under a unified discretization framework. Neural Netw. 100, 49–58 (2018).

Han, S. et al. EIE: efficient inference engine on compressed deep neural network. In 2016 ACM/IEEE 43rd Annual Int. Symposium on Computer Architecture 243–254 (IEEE, 2016).

Diehl, P. U. et al. Fast-classifying, high-accuracy spiking deep networks through weight and threshold balancing. In 2015 Int. Joint Conference on Neural Networks 1–8 (IEEE, 2015).

Wu, Y. et al. Spatio-temporal backpropagation for training high-performance spiking neural networks. Front. Neurosci. 12, 331 (2018).

Orchard, G. et al. Converting static image datasets to spiking neuromorphic datasets using saccades. Front. Neurosci. 9, 437 (2015).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25, 1097–1105 (2012).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Int. Conference on Learning Representations; preprint at https://arxiv.org/pdf/1409.1556.pdf (2015).

Deng, J. et al. ImageNet: a large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition 248–255 (IEEE, 2009).

LeCun, Y. et al. Gradient-based learning applied to document recognition. Proc. IEEE 86, 2278–2324 (1998).

Courbariaux, M., Bengio, Y. & David, J.-P. BinaryConnect: training deep neural networks with binary weights during propagations. Adv. Neural Inf. Processing Syst. 28, 3123–3131 (2015).

Krizhevsky, A. & Hinton, G. Learning Multiple Layers of Features from Tiny Images. MSc thesis, Univ. Toronto (2009).

Merity, S. et al. Pointer sentinel mixture models. In Int. Conference on Learning Representations; preprint at https://arxiv.org/abs/1609.07843 (2017).

Krakovna, V. & Doshi-Velez, F. Increasing the interpretability of recurrent neural networks using hidden Markov models. Preprint at https://arxiv.org/abs/1606.05320 (2016).

Wu, S. et al. Training and inference with integers in deep neural networks. In Int. Conference on Learning Representations; preprint at https://arxiv.org/abs/1802.04680 (2018).

Paszke, A. et al. Automatic differentiation in PyTorch. In Proc. NIPS Autodiff Workshop https://openreview.net/pdf?id=BJJsrmfCZ (2017).

Narang, S. & Diamos, G. Baidu DeepBench. https://github.com/baidu-research/DeepBench (2017).

Fowers, J. et al. A configurable cloud-scale DNN processor for real-time AI. In 2018 ACM/IEEE 45th Annual Int. Symposium on Computer Architecture 1–14 (IEEE, 2018).

Xu, M. et al. HMM-based audio keyword generation. In Advances in Multimedia Information Processing – PCM 2004, Vol. 3333 (eds Aizawa, K. et al.) 566–574 (Springer, 2004).

Mathis, A., Herz, A. V. & Stemmler, M. B. Resolution of nested neuronal representations can be exponential in the number of neurons. Phys. Rev. Lett. 109, 018103 (2012).

Gerstner, W. et al. Neuronal Dynamics: From Single Neurons to Networks and Models of Cognition (Cambridge Univ. Press, 2014).

Liang, D. & Indiveri, G. Robust state-dependent computation in neuromorphic electronic systems. In IEEE Biomedical Circuits and Systems Conference 1–4 (IEEE, 2017).

Akopyan, F. et al. TrueNorth: design and tool flow of a 65 mW 1 million neuron programmable neurosynaptic chip. IEEE Trans. Comput. Aided Des. Integrated Circ. Syst. 34, 1537–1557 (2015).

Han, S. et al. ESE: efficient speech recognition engine with sparse LSTM on FPGA. In Proc. 2017 ACM/SIGDA Int. Symposium on Field-Programmable Gate Arrays 75–84 (ACM, 2017).

Acknowledgements

We thank B. Zhang, R. S. Williams, J. Zhu, J. Guan, X. Zhang, W. Dou, F. Zeng and X. Hu for thoughtful discussions; L. Tian, Q. Zhao, M. Chen, J. Feng, D. Wang, X. Lin, H. Cui, Y. Hu and Y. Yu for contributing to experiments; H. Xu for coordinating experiments; and MLink for design assistance. This work was supported by projects of the National Natural Science Foundation of China (NSFC; 61836004, 61327902 and 61475080); the Brain-Science Special Program of Beijing (grant Z181100001518006); and the Suzhou-Tsinghua innovation leading program (2016SZ0102).

Author information

Contributions

J.P., L.D., S.S., M.Z., Y.Z., Shuang Wu and G.W. were in charge of, respectively, the principles of chip design, chip design, the principles of neuron computing, the unmanned bicycle system, software, implementation of Tianjic in the unmanned bicycle system, and chip testing. J.P., L.D., G.W., Z.W. and Y.Z. carried out chip development. Shuang Wu, G.W., Z.Z., Z.Y. and Yujie Wu worked on the unmanned bicycle experiment. Y.Z. and W. Han worked on software development. Yujie Wu, Shuang Wu and G.L. developed the algorithm. J.P., L.D., S.S., Si Wu, C.M., F.C., W. He, R.Z. and L.S. contributed to the analysis and interpretation of results. All of the authors contributed to discussion of architecture design principles. L.D., W. He, R.Z., S.S., Z.W. and L.S. wrote the manuscript with input from all authors. L.S. proposed the concept of hybrid architecture and supervised the whole project.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Peer review information Nature thanks Meng-Fan (Marvin) Chang and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Extended data figures and tables

Extended Data Fig. 1 Overview of the FCore architecture.

We adopted a fully digital design. The axon module acts as a data buffer to store the inputs and the outputs. Synapses are designed to store on-chip weights and are pinned close to the dendrite for better memory locality. The dendrite is an integration engine that contains multipliers and accumulators. The soma is a computation unit for neuronal transformations. IntraFCore and interFCore communications are wired by a router, which supports arbitrary topology. Act_Fun, activation function; A/s, activation (ANN mode)/spike (SNN mode); B_L, bias/leakage; In BUF, input buffer; Inhibit Reg, inhibition register; In(Out)_L/E/W/S/N, local/eastern/western/southern/northern input(output); Lat_Acc, lateral accumulation; MEM, memory; MUX, multiplexer; Out_Copy, output copy; Out_Trans, output transmission; P_MEM, parameter memory; Spike_Gen, spike generator; V_MEM, membrane potential memory; X & Index, axon output and weight index. The numbers in or above memories indicate memory size; ‘b’ represents bit(s).
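
The dataflow in this caption (axon buffer, synaptic weights, dendritic integration, soma transformation) can be illustrated with a minimal software sketch. It is not the chip's implementation: the function names, the choice of ReLU as the activation function, and the leaky integrate-and-fire rule with reset to zero are assumptions made only for illustration.

```python
from typing import List


def dendrite_integrate(axon_in: List[float], weights: List[List[float]]) -> List[float]:
    """Dendrite: multiply-accumulate, one weighted sum per output neuron."""
    return [sum(w * x for w, x in zip(row, axon_in)) for row in weights]


def soma_ann(currents: List[float], bias: List[float]) -> List[float]:
    """Soma in ANN mode: add a bias, then apply an activation (ReLU assumed)."""
    return [max(0.0, c + b) for c, b in zip(currents, bias)]


def soma_snn(currents: List[float], v_mem: List[float],
             leak: float, threshold: float) -> List[int]:
    """Soma in SNN mode: leaky integrate-and-fire (assumed neuron model).

    Updates the membrane potentials in v_mem in place and returns one
    spike bit per neuron.
    """
    spikes = []
    for i, current in enumerate(currents):
        v = v_mem[i] + current - leak
        if v >= threshold:
            spikes.append(1)
            v = 0.0  # assumed reset-to-zero after a spike
        else:
            spikes.append(0)
        v_mem[i] = v
    return spikes


# The same integration result feeds either output mode.
axon_in = [1.0, 0.0, 1.0]                         # buffered inputs
weights = [[0.5, 0.2, 0.5], [-1.0, 0.3, 0.25]]    # one row per neuron
currents = dendrite_integrate(axon_in, weights)
activations = soma_ann(currents, bias=[0.0, 1.0])
membrane = [0.0, 0.0]
spikes = soma_snn(currents, membrane, leak=0.25, threshold=0.5)
```

Sharing one integration step between the two soma functions mirrors the A/s (activation/spike) output described in the caption, where one datapath serves both ANN and SNN modes.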

Extended Data Fig. 2 Fabrication of the Tianjic chip and testing boards.

a, Chip layout and images of the Tianjic chip. b, Testing boards equipped with a single Tianjic chip or a chip array (5 × 5 size).

Extended Data Fig. 3 Throughput-aware unfolded mapping and resource-aware folded mapping.

a, Unfolded mapping converts all topologies into a fully connected (FC) structure without reusing data. In CANN: Norm, normalization; r, firing rate; V, membrane potential. In LSTM: f/i/o, forget/input/output gate output; g, input activation; h/c, hidden/cell state; t, time step; x, external input. b, Folded mapping folds the network along the row dimension of feature maps (FMs) for resource reuse. We note that the weights are still unfolded along the column dimension to maintain parallelism, and wide FMs can be split into multiple slices, which are allocated into different FCores for concurrent processing. r0/1/2, row 0/1/2.
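
As a rough software analogy for folded mapping, the sketch below computes a 2D convolution one output row at a time, reusing the same kernel for every row while producing all columns of that row together. The function names and the stride-1, no-padding convolution are assumptions; the caption does not specify how the chip schedules rows.

```python
from typing import List

Matrix = List[List[int]]


def conv_row(rows: Matrix, kernel: Matrix) -> List[int]:
    """Compute one output row from k consecutive input rows.

    All output columns of the row are produced in one call, standing in
    for the column-wise parallelism kept by folded mapping.
    """
    k = len(kernel)
    width = len(rows[0]) - k + 1
    return [
        sum(kernel[i][j] * rows[i][c + j] for i in range(k) for j in range(k))
        for c in range(width)
    ]


def folded_conv(feature_map: Matrix, kernel: Matrix) -> Matrix:
    """Fold along the row dimension: the same kernel is reused row by row."""
    k = len(kernel)
    return [
        conv_row(feature_map[r:r + k], kernel)
        for r in range(len(feature_map) - k + 1)
    ]


fm = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernel = [[1, 0], [0, 1]]
out = folded_conv(fm, kernel)
```

An unfolded mapping would instead give every output position its own copy of the weights, trading more resources for higher throughput.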

Extended Data Fig. 4 Chip measurements in different modes.

a, Power consumption in ANN-only mode at different voltages and frequencies. Here the ‘compute ratio’ is the duty ratio for computation, that is, the ratio of computation time/(computation time + idle time). The phase on the x-axis denotes the execution time phase of FCore. b, Power consumption in SNN-only mode with different rates of input spikes. c, Membrane potential of output neurons in SNN mode. Information was represented in a rate-coding scheme by counting the number of spikes during a given time period.
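
The two quantities defined in this caption can be written out as a short sketch. The function names are hypothetical, and reading out the winning neuron as the one with the highest spike count is an assumption about how the counts might be used, not something the caption states.

```python
from typing import List, Tuple


def compute_ratio(compute_time: float, idle_time: float) -> float:
    """Duty ratio for computation: compute / (compute + idle)."""
    total = compute_time + idle_time
    if total <= 0:
        raise ValueError("total time must be positive")
    return compute_time / total


def rate_decode(spike_trains: List[List[int]]) -> Tuple[int, List[int]]:
    """Rate coding: count the spikes of each output neuron in a time window.

    Returns the index of the neuron with the most spikes (assumed readout)
    together with the per-neuron counts.
    """
    counts = [sum(train) for train in spike_trains]
    return counts.index(max(counts)), counts


ratio = compute_ratio(compute_time=3.0, idle_time=1.0)
winner, counts = rate_decode([[0, 1, 0, 0], [1, 1, 0, 1], [0, 0, 0, 0]])
```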

Extended Data Fig. 5 Performance comparison and routing profiling.

a, FCore placements in six layers (split into seven execution layers); the numbers within the image denote the numbers of FCores used. b, Comparison of the performance of different neural network modes. Acc., accuracy. c, Power consumption for each layer. d, Average number of received routing packets per FCore in each layer. e, Average number of sent packets per FCore across time phases. f, Distribution of total transfer packets for each FCore. The oval with the arrow emphasizes the difference in packet amount between the SNN-only mode and the hybrid mode.

Extended Data Fig. 6 Overheads of the Tianjic chip during the bicycle experiment.

a, Placement of FCores in different network models. Numbers refer to the number of FCores used. b, Measured power consumption under different tasks and at different voltages. The Tianjic chip typically worked at 0.9 V during the bicycle demonstration, and the power consumption was about 400 mW.

Extended Data Fig. 7 Neural state machine.

a, State transition in the bicycle task. b, NSM architecture. The NSM is composed of three subgroups of neurons: state, transfer and output neurons. There are three matrices that determine the connections between different neurons: the trigger, state-transfer and output matrices.
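
One way to picture the three neuron groups and three matrices is the toy state machine below. The caption names the components but not their wiring, so everything here is an assumption: the one-hot encodings, the rule that a transfer neuron fires only when both its source state and its triggering event are active, and the two-state example with hypothetical names (idle, follow, voice_follow, voice_stop).

```python
from typing import List, Tuple

Matrix = List[List[int]]


def matvec(matrix: Matrix, vector: List[int]) -> List[int]:
    """Plain matrix-vector product over integer lists."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def nsm_step(state: List[int], event: List[int], trigger: Matrix,
             state_transfer: Matrix, output: Matrix) -> Tuple[List[int], List[int]]:
    """Advance the toy neural state machine by one step."""
    # Transfer neurons: fire when the source state AND the event are both active.
    transfer = [1 if s >= 2 else 0 for s in matvec(trigger, state + event)]
    if any(transfer):
        # State neurons: driven by whichever transfer neuron fired.
        next_state = [1 if d > 0 else 0 for d in matvec(state_transfer, transfer)]
    else:
        next_state = state  # no transition triggered: hold the current state
    # Output neurons: read out from the (new) state.
    return next_state, matvec(output, next_state)


# Hypothetical example. States: [idle, follow]; events: [voice_follow, voice_stop].
trigger = [[1, 0, 1, 0],    # transfer 0: idle   + voice_follow
           [0, 1, 0, 1]]    # transfer 1: follow + voice_stop
state_transfer = [[0, 1],   # idle   is entered via transfer 1
                  [1, 0]]   # follow is entered via transfer 0
output = [[1, 0],           # output 0 active in idle
          [0, 1]]           # output 1 active in follow

s1, o1 = nsm_step([1, 0], [1, 0], trigger, state_transfer, output)
s2, o2 = nsm_step(s1, [1, 0], trigger, state_transfer, output)
s3, o3 = nsm_step(s2, [0, 1], trigger, state_transfer, output)
```

Expressing transitions as matrix products keeps the state machine in the same multiply-accumulate form as the other network models.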

Supplementary information

Supplementary Video 1

Unmanned bicycle equipped with Tianjic chip for real-time object detection, tracking, voice recognition, obstacle avoidance, and balance control. The video consists of two scenes. In Scene 1, the bicycle rides over a speed bump, then it follows the voice commands to change direction or adjust speed. In Scene 2, the bicycle detects and tracks a moving human, and avoids obstacles when necessary.

About this article

Cite this article

Pei, J., Deng, L., Song, S. et al. Towards artificial general intelligence with hybrid Tianjic chip architecture. Nature 572, 106–111 (2019). https://doi.org/10.1038/s41586-019-1424-8

DOI: https://doi.org/10.1038/s41586-019-1424-8
