Abstract

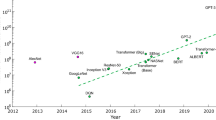

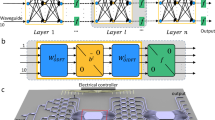

Deep neural networks (DNNs) are reshaping the field of information processing. As the exponential growth of DNNs challenges existing computing hardware, optical neural networks (ONNs) have recently emerged to process DNN tasks with high clock rates, parallelism and low-loss data transmission. However, ONNs still face three challenges: high energy consumption due to low electro-optic conversion efficiency, low compute density due to large device footprints and channel crosstalk, and long latency due to the lack of inline nonlinearity. Here we experimentally demonstrate a spatial-temporal-multiplexed ONN system that overcomes all three challenges simultaneously. We encode neurons with volume-manufactured, micrometre-scale vertical-cavity surface-emitting laser (VCSEL) arrays that exhibit efficient electro-optic conversion (<5 attojoules per symbol, with a π-phase-shift voltage of Vπ = 4 mV) and a compact footprint (<0.01 mm² per device). Homodyne photoelectric multiplication enables matrix operations at the quantum-noise limit, as well as detection-based optical nonlinearity with instantaneous response. With three-dimensional neural connectivity, our system can reach an energy efficiency of 7 femtojoules per operation (OP) with a compute density of 6 teraOP mm⁻² s⁻¹, representing 100-fold and 20-fold improvements, respectively, over state-of-the-art digital processors. Near-term development could improve these metrics by two more orders of magnitude. Our optoelectronic processor opens new avenues to accelerate machine learning tasks from data centres to decentralized devices.
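The principle behind homodyne photoelectric multiplication can be illustrated with a small numerical model. This is a simplified sketch under stated assumptions, not the authors' implementation: it assumes ideal, noiseless, mutually phase-locked fields, a lossless 50:50 beam splitter and perfect balanced detection. Mixing an input field x with a weight field w and subtracting the two detected intensities cancels |x|² and |w|² and leaves 2 Re(x w*). Here each input element is assumed to occupy one time step, so integrating the difference signal over time accumulates a dot product, and one detector per weight row running in parallel yields a matrix-vector product; this mapping of data onto the spatial and temporal channels is an assumption made for illustration. The function names are hypothetical.

```python
import math
from typing import Sequence


def balanced_homodyne_sample(x: complex, w: complex) -> float:
    """Difference signal of an ideal balanced detector for one time step.

    Fields x and w are mixed on a lossless 50:50 beam splitter; the output
    ports carry (x + w)/sqrt(2) and (x - w)/sqrt(2). Subtracting the two
    square-law photocurrents cancels |x|^2 and |w|^2 and leaves
    2 * Re(x * conj(w)).
    """
    plus = abs((x + w) / math.sqrt(2)) ** 2
    minus = abs((x - w) / math.sqrt(2)) ** 2
    return plus - minus


def homodyne_dot(x_seq: Sequence[complex], w_seq: Sequence[complex]) -> float:
    """Integrate the balanced signal over time steps, rescaled to a dot product.

    Hypothetical temporal encoding: one input element and one weight per
    time step, with phase-locked (here real-valued) amplitudes.
    """
    if len(x_seq) != len(w_seq):
        raise ValueError("input and weight sequences must have equal length")
    charge = sum(balanced_homodyne_sample(x, w) for x, w in zip(x_seq, w_seq))
    return charge / 2.0  # remove the factor of 2 from the beam-splitter model


def spatiotemporal_matvec(weights: Sequence[Sequence[complex]],
                          x: Sequence[complex]) -> list[float]:
    """One detector per weight row (spatial channels), all fed the same input."""
    return [homodyne_dot(x, row) for row in weights]


if __name__ == "__main__":
    W = [[1.0, 2.0], [3.0, -1.0]]
    x = [0.5, 2.0]
    print(spatiotemporal_matvec(W, x))  # approximately [4.5, -0.5]
    # A 90-degree relative phase removes the interference term entirely,
    # which is why the fields must be mutually coherent and phase-locked.
    print(balanced_homodyne_sample(1.0, 1j))
```

The last line shows why coherence matters in this model: with a quadrature phase offset between the two fields, the product term, and hence the multiplication, vanishes.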

Data availability

All the data that support the findings of this study are included in the main text and Supplementary Information. The data are available from the corresponding authors upon reasonable request.

Acknowledgements

This work is supported by the Army Research Office under grant no. W911NF17-1-0527, NTT Research under project no. 6942193 and NTT Netcast award 6945207. I.C. acknowledges support from the National Defense Science and Engineering Graduate Fellowship Program and National Science Foundation (NSF) award DMR-1747426. L.B. is supported by the National Science Foundation EAGER programme (CNS-1946976) and the Natural Sciences and Engineering Research Council of Canada (PGSD3-517053-2018). L.A. acknowledges support from the NSF Graduate Research Fellowship (grant no. 1745302). S.R. acknowledges financial support from the Volkswagen Foundation via the project NeuroQNet 2. We thank S. Bandyopadhyay and C. Brabec of MIT and Y. Cui of TUM for comments on the manuscript. We also thank D.A.B. Miller of Stanford University, N. Harris and D. Bunandar of Lightmatter, and S. Lloyd of MIT for informative discussions.

Author information

Contributions

Z.C., R.H. and D.E. conceived the experiments. Z.C. performed the experiment, assisted by A.S., R.D. and I.C. A.S. conducted high-speed measurements on the VCSEL transmitters and developed the integrating electronics. R.D. created the software model for neural network training. I.C. performed electronic packaging on the VCSEL arrays. L.A. assisted with assembling an initial set-up for testing VCSEL samples. A.S. and L.B. assisted with discussions on the experimental data. T.H., N.H., J.A.L. and S.R. designed and fabricated the VCSEL arrays and characterized their performance. R.H. and D.E. provided critical insights regarding the experimental implementation and results analysis. Z.C. wrote the manuscript with contributions from all authors.

Ethics declarations

Competing interests

Z.C., D.E. and R.H. have filed a patent related to VCSEL ONNs, under application no. 63/341,601. D.E. serves as scientific advisor to and holds equity in Lightmatter Inc. A.S. is a senior photonic architect at Lightmatter Inc. and holds equity. Other authors declare no competing interests.

Peer review

Peer review information

Nature Photonics thanks Xingyuan Xu and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Figs. 1–12, Discussion and Tables 1–3.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chen, Z., Sludds, A., Davis, R. et al. Deep learning with coherent VCSEL neural networks. Nat. Photon. 17, 723–730 (2023). https://doi.org/10.1038/s41566-023-01233-w
