Abstract

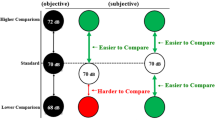

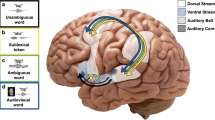

The way top-down and bottom-up processes interact to shape our perception and behaviour is a fundamental question and remains highly controversial. How early in a processing stream do such interactions occur, and what factors govern them? The degree of abstractness of a perceptual attribute (for example, orientation versus shape in vision, or loudness versus sound identity in hearing) may determine the locus of neural processing and of the interaction between bottom-up and internal information. Using an imagery-perception repetition paradigm, we find that imagined speech affects subsequent auditory perception, even for a low-level attribute such as loudness. This effect is observed in early auditory responses, recorded with magnetoencephalography and electroencephalography, that correlate with behavioural loudness ratings. The results suggest that the internal reconstruction of neural representations without external stimulation is flexibly regulated by task demands, and that such top-down processes can interact with bottom-up information at an early perceptual stage to modulate perception.
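The abstract reports that early auditory responses correlate with behavioural loudness ratings. As a rough illustration only, and not the authors' analysis pipeline, the sketch below assumes one summary value per participant per condition (a neural response magnitude and a loudness rating, each measured after imagery and in a baseline condition), takes the after-imagery minus baseline difference for each measure, and correlates the neural and behavioural differences with a Pearson coefficient. The function names `repetition_effect` and `pearson_r`, the condition labels and all numbers are hypothetical placeholders, not data from the study.

```python
import math
from typing import Sequence


def repetition_effect(after_imagery: Sequence[float], baseline: Sequence[float]) -> list[float]:
    # Hypothetical helper: per-participant change, value after imagery minus baseline value.
    if len(after_imagery) != len(baseline):
        raise ValueError("inputs must have the same length")
    return [a - b for a, b in zip(after_imagery, baseline)]


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    # Pearson correlation: covariance divided by the product of standard deviations
    # (the 1/n factors cancel, so raw sums of squares are used).
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length, at least 2 items")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / math.sqrt(sxx * syy)


# Placeholder values for four hypothetical participants (illustrative, not study data).
neural_after = [1.2, 0.9, 1.5, 1.1]   # e.g. early response magnitude after imagery
neural_base = [1.0, 1.0, 1.0, 1.0]    # same measure in a hypothetical baseline condition
rating_after = [5.5, 4.8, 6.1, 5.2]   # loudness rating after imagery
rating_base = [5.0, 5.0, 5.0, 5.0]    # loudness rating at baseline

r = pearson_r(
    repetition_effect(neural_after, neural_base),
    repetition_effect(rating_after, rating_base),
)
print(f"r = {r:.3f}")
```

Pearson's r is used here only because it is the simplest measure of linear association between two per-participant effects; a rank correlation or a mixed-effects model would be reasonable alternatives, and this choice is an assumption rather than a description of the statistics reported in the article.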

Acknowledgements

We thank J. Walker for technical support with MEG data collection, S. Yuan for help with running the EEG experiment, Q. Xu for help with running BE2–BE4 and L. Tao for comments and edits on an early draft. This study was supported by the National Natural Science Foundation of China (31500914 to X.T., and 31771248 and 31500873 to N.D.), the Major Program of the Science and Technology Commission of Shanghai Municipality (15JC1400104 and 17JC1404104), the Program of Introducing Talents of Discipline to Universities (Base B16018), a grant from the New York University Global Seed Grants for Collaborative Research (85-65701-G0757-R4551), the Joint Research Institute Seed Grants for Research Collaboration from the New York University-East China Normal University Institute of Brain and Cognitive Science at New York University, Shanghai (to X.T.), the Zhejiang Provincial Natural Science Foundation of China (LR16C090002), research funding from the State Key Laboratory of Industrial Control Technology, Zhejiang University (to N.D.) and National Institutes of Health 2R01DC05660 (to D.P.). No funders had any role in study design, data collection and analysis, decision to publish or preparation of the manuscript.

Author information

Contributions

X.Tian conceived and designed the study, performed BE1 and the MEG experiments and analysed the data. F.B. performed BE2–BE4 and the EEG experiments. X.Tian, N.D., X.Teng and D.P. wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Tian, X., Ding, N., Teng, X. et al. Imagined speech influences perceived loudness of sound. Nat Hum Behav 2, 225–234 (2018). https://doi.org/10.1038/s41562-018-0305-8

DOI: https://doi.org/10.1038/s41562-018-0305-8
