Abstract

Modeling chemical reactions and complicated molecular systems has been proposed as the “killer application” of a future quantum computer. Accurate calculations of derivatives of molecular eigenenergies are essential toward this end, allowing for geometry optimization, transition state searches, predictions of the response to an applied electric or magnetic field, and molecular dynamics simulations. In this work, we survey methods to calculate energy derivatives, and present two new methods: one based on quantum phase estimation, the other on a low-order response approximation. We calculate asymptotic error bounds and approximate computational scalings for the methods presented. Implementing these methods, we perform geometry optimization on an experimental quantum processor, estimating the equilibrium bond length of the dihydrogen molecule to within \(0.014\) Å of the full configuration interaction value. Within the same experiment, we estimate the polarizability of the H\({}_{2}\) molecule, finding agreement at the equilibrium bond length to within \(0.06\) a.u. (\(2 \%\) relative error).

Similar content being viewed by others

Introduction

Quantum computers are at the verge of providing solutions for certain classes of problems that are intractable on a classical computer.1 As this threshold nears, an important next step is to investigate how these new possibilities can be translated into useful algorithms for specific scientific domains. Quantum chemistry has been identified as a key area where quantum computers can stop being science and start doing science.2,3,4,5 This observation has lead to an intense scientific effort towards developing and improving quantum algorithms for simulating time evolution6,7 and calculating ground state energies8,9,10,11 of molecular systems. Small prototypes of these algorithms have been implemented experimentally with much success.10,12,13,14,15 However, advances over the last century in classical computational chemistry methods, such as density functional theory (DFT),16 coupled cluster (CC) theory,17 and quantum Monte-Carlo methods,18 set a high bar for quantum computers to make impact in the field.

The ground and/or excited state energy is only one of the targets for quantum chemistry calculations. For many applications one also needs to be able to calculate the derivatives of the molecular electronic energy with respect to a change in the Hamiltonian.19,20 For example, the energy gradient (or first-order derivative) for nuclear displacements is used to search for minima, transition states, and reaction paths21 that characterize a molecular potential energy surface (PES). They also form the basis for molecular dynamics (MD) simulations to dynamically explore the phase space of the system in its electronic ground state22 or, after a photochemical transition, in its electronically excited state.23 While classical MD usually relies on force-fields which are parameterized on experimental data, there is a growing need to obtain these parameters on the basis of accurate quantum chemical calculations. One can easily foresee a powerful combination of highly accurate forces generated on a quantum computer with machine-learning algorithms for the generation of reliable and broadly applicable force-fields.24 This route might be particularly important in exploring excited state PES and non-adiabatic coupling terms, which are relevant in describing light-induced chemical reactions.25,26,27 Apart from these perturbations arising from changing the nuclear positions, it is also of interest to consider the effect that small external electric and/or magnetic fields have on the molecular energy. These determine well-known molecular properties, such as the (hyper)polarizability, magnetizability, A- and g-tensors, nuclear magnetic shieldings, among others.

Although quantum algorithms have been suggested to calculate derivatives of a function represented on a quantum register,28,29,30,31,32 or of derivatives of a variational quantum eigensolver (VQE) for optimization purposes,33,34 the extraction of molecular properties from quantum simulation has received relatively little focus. To the best of our knowledge only three investigations; in geometry optimization and molecular energy derivatives,35 molecular vibrations,36 and the linear response function;37 have been performed to date.

In this work, we survey methods for the calculation of molecular energy derivatives on a quantum computer. We calculate estimation errors and asymptotic convergence rates of these methods, and detail the classical pre- and post-processing required to convert quantum computing output to the desired quantities. As part of this, we detail two new methods for such derivative calculations. The first involves simultaneous quantum phase and transition amplitude (or propagator) estimation, which we name “propagator and phase estimation” (PPE). The second is based on truncating the Hilbert space to an approximate (relevant) set of eigenstates, which we name the “eigenstate truncation approximation” (ETA). We use these methods to perform geometry optimization of the H\({}_{2}\) molecule on a superconducting quantum processor, as well as its response to a small electric field (polarizability), and find excellent agreement with the full configuration interaction (FCI) solution.

Results

Let \(\hat{H}\) be a Hamiltonian on a \({2}^{{N}_{{\rm{sys}}}}\)-dimensional Hilbert space (e.g., the Fock space of an \({N}_{{\rm{sys}}}\)-spin orbital system), which has eigenstates

ordered by the energies \({E}_{j}\). In this definition, the Hamiltonian is parametrized by the specific basis set that is used and has additional coefficients \({\lambda }_{1},{\lambda }_{2},\ldots\), which reflect fixed external influences on the electronic energy (e.g., change in the structure of the molecule, or an applied magnetic or electric field). An \(d\)th-order derivative of the ground state energy with respect to the parameters \({\lambda }_{i}\) is then defined as

where \(d={\sum }_{i}{d}_{i}\). As quantum computers promise exponential advantages in calculating the ground state \({E}_{0}\) itself, it is a natural question to ask how to efficiently calculate such derivatives on a quantum computer.

The quantum chemical Hamiltonian

A major subfield of computational chemistry concerns solving the electronic structure problem. Here, the system takes a second-quantized ab initio Hamiltonian, written in a basis of molecular spinors \(\{{\phi }_{p}({\bf{r}})\}\) as follows

where \({\hat{E}}_{pq}={\hat{c}}_{p}^{\dagger }{\hat{c}}_{q}\) and \({\hat{c}}_{p}^{\dagger }\) (\({\hat{c}}_{p}\)) creates (annihilates) an electron in the molecular spinor \({\phi }_{p}\). With Eq. (3) relativistic and nonrelativistic realizations of the method only differ in the definition of the matrix elements \({h}_{pq}\) and \({g}_{pqrs}\).38 A common technique is to assume pure spin-orbitals and integrate over the spin variable. As we want to develop a formalism that is also valid for relativistic calculations, we will remain working with spinors in this work. Adaptation to a spinfree formalism is straightforward, and will not affect computational scaling and error estimates.

The electronic Hamiltonian defined above depends parametrically on the nuclear positions, both explicitly via the nuclear potential and implicitly via the molecular orbitals that change when the nuclei are displaced.

Asymptotic convergence of energy derivative estimation methods

In this section, we present and compare various methods for calculating energy derivatives on a quantum computer. In Table 1, we estimate the computational complexity of all studied methods in terms of the system size \({N}_{{\rm{sys}}}\) and the estimation error \(\epsilon\). We also indicate which methods require quantum phase estimation (QPE), as these require longer coherence times than variational methods. Many methods benefit from the amplitude estimation algorithm,39 which we have included costings for. We approximate the scaling in \({N}_{{\rm{sys}}}\) between a best-case scenario (a lattice model with a low-weight energy derivative and aggressive truncation of any approximations), and a worst-case scenario (the electronic structure problem with a high-weight energy derivative and less aggressive truncation). The lower bounds obtained here are competitive with classical codes, suggesting that these methods will be tractable for use in large-scale quantum computing. However, the upper bounds will need reduction in future work to be practical, e.g., by implementing the strategies suggested in ref. 11,33,40

For wavefunctions in which all parameters are variationally optimized, the Hellmann–Feynman theorem allows for ready calculation of energy gradients as the expectation value of the perturbing operator35,41

This expectation value may be estimated by repeated measurement of a prepared ground state on a quantum computer (Section IV C), and classical calculation of the coefficients of the Hermitian operator \(\partial \hat{H}/\partial \lambda\) (See Supplementary methods). If state preparation is performed using a VQE, estimates of the expectation values in Eq. (4) will often have already been obtained during the variational optimization routine. If one is preparing a state via QPE, one does not get these expectation values for free, and must repeatedly measure the quantum register on top of the phase estimation routine. Such measurement is possible even with single-ancilla QPE methods which do not project the system register into the ground state (see Section IV I). Regardless of the state preparation method, the estimation error may be calculated by summing the sampling noise of all measured terms (assuming the basis set error and ground state approximation errors are minimal).

The Hellmann–Feynman theorem cannot be so simply extended to higher-order energy derivatives. We now study three possible methods for such calculations. The PPE method uses repeated rounds of QPE to measure the frequency-domain Green’s function, building on previous work on Green’s function techniques.37,42,43 We may write an energy derivative via perturbation theory as a sum of products of path amplitudes \(A\) and energy coefficients \({f}_{A}\). For example, a second order energy derivative may be written as

allowing us to identify two amplitudes

and two corresponding energy coefficients

The generic form of a \(d\)th order energy derivative may be written as

where \({X}_{{\mathcal{A}}}\) counts the number of excitations in the path. As this is different from the number of responses of the wavefunction, \({X}_{{\mathcal{A}}}\) does not follow the \(2n+1\) rule; rather, \({X}_{{\mathcal{A}}}\le d\). The amplitudes \({\mathcal{A}}\) take the formFootnote 1

These may be estimated simultaneously with the corresponding energies \({E}_{{j}_{x}}\) by applying rounds of QPE in between excitations by operators \({\hat{P}}_{x}\) (Section IV J). One may then classically calculate the energy coefficients \({f}_{A}\), and evaluate Eq. (9). Performing such calculation over an exponentially large number of eigenstates \(\left|{\Psi }_{{j}_{x}}\right\rangle\) would be prohibitive. However, the quantum computer naturally bins small amplitudes of nearby energy with a resolution \(\Delta\) controllable by the user. We expect the resolution error to be smaller than the error in estimating the amplitudes \({\mathcal{A}}({j}_{1},\ldots ,{j}_{{X}_{{\mathcal{A}}}-1})\) (Section IV L); we use the latter for the results in Table 1.

In lieu of the ability to perform the long circuits required for phase estimation, one may approximate the sum over (exponentially many) eigenstates \(\left|{\Psi }_{j}\right\rangle\) in Eq. (9) by taking a truncated set of (polynomially many) approximate eigenstates \(\left|{\tilde{\Psi }}_{j}\right\rangle\). We call such an approximation the eigenstate truncation approximation, or ETA for short. However, on a quantum computer, we expect both to better approximate the true ground state \(\left|{\Psi }_{0}\right\rangle\), and to have a wider range of approximate excited states.14,40,44,45,46 In this work, we focus on the quantum subspace expansion (QSE) method.40 This method proceeds by generating a set of \({N}_{{\rm{E}}}\) vectors \(\left|{\chi }_{j}\right\rangle\) connected to the ground state \(\left|{\Psi }_{0}\right\rangle\) by excitation operators \({\hat{E}}_{j}\)

This is similar to truncating the Hilbert space using a linear excitation operator in the (classical) equation of motion CC (EOMCC) approach.47 The \(\left|{\chi }_{j}\right\rangle\) states are not guaranteed to be orthonormal; the overlap matrix

is not necessarily the identity. To generate the set \(\left|{\tilde{\Psi }}_{j}\right\rangle\) of orthonormal approximate eigenstates, one can calculate the projected Hamiltonian matrix

and solve the generalized eigenvalue problem

Regardless of the method used to generate the eigenstates \(\left|{\tilde{\Psi }}_{j}\right\rangle\), the dominant computational cost of the ETA is the need to estimate \({N}_{{\rm{E}}}^{2}\) matrix elements. Furthermore, to combine all matrix elements with constant error requires the variance of each estimation to scale as \({N}_{{\rm{E}}}^{-2}\) (assuming the error in each term is independent). This implies that, in the absence of amplitude amplification, the computational complexity scales as \({N}_{{\rm{E}}}^{4}\). Taking all single-particle excitations sets \({N}_{{\rm{E}}}\propto {N}_{{\rm{sys}}}^{2}\). However, in a lattice model one might consider taking only local excitations, setting \({N}_{{\rm{E}}}\propto {N}_{{\rm{sys}}}\). Further reductions to \({N}_{{\rm{E}}}\) will increase the systematic error from Hilbert space truncation (Section IV M), although this may be circumvented somewhat by extrapolation.

For the sake of completeness, we also consider here the cost of numerically estimating an energy derivative by estimating the energy at multiple points

Here, the latter formula is preferable if one has direct access to the derivative in a VQE via the Hellmann–Feynman theorem, while the former is preferable when one may estimate the energy directly via QPE. In either case, the sampling noise (Section IV C and Section IV G) is amplified by the division of \(\delta \lambda\). This error then competes with the \(O(\delta {\lambda }^{2})\) finite difference error, the balancing of which leads to the scaling laws in Table 1. This competition can be negated by coherently copying the energies at different \(\lambda\) to a quantum register of \(L\) ancilla qubits and performing the finite difference calculation there.28,48 Efficient circuits (and lower bounds) for the complexity of such an algorithm have not been determined, and proposed methods involve coherent calculation of the Hamiltonian coefficients on a quantum register. This would present a significant overhead on a near-term device, but with additive and better asymptotic scaling than the QPE step itself (which we use for the results in Table 1).

Geometry optimization on a superconducting quantum device

To demonstrate the use of energy derivatives directly calculated from a quantum computing experiment, we perform geometry optimization of the diatomic H\({}_{2}\) molecule, using two qubits of a superconducting transmon device. (Details of the experiment are given in Section IV N.) Geometry optimization aims to find the ground state molecular geometry by minimizing the ground state energy \({E}_{0}({\bf{R}})\) as a function of the atomic co-ordinates \({R}_{i}\). In this small system, rotational and translational symmetries reduce this to a minimization as a function of the bond distance \({R}_{{\rm{H-H}}}\) In Fig. 1, we illustrate this process by sketching the path taken by Newton’s minimization algorithm from a very distant initial bond distance (\({R}_{{\rm{H-H}}}=1.5\) Å). At each step of the minimization we show the gradient estimated via the Hellmann–Feynman theorem. Newton’s method additionally requires access to the Hessian, which we calculated via the ETA (details given in Section IV N). The optimization routine takes \(5\) steps to converge to a minimum bond length of \(0.749\) Å, within \(0.014\) Å of the target FCI equilibrium bond length (given the chosen STO-3G basis set). To demonstrate the optimization stability, we performed \(100\) simulations of the geometry optimization experiment on the quantumsim density–matrix simulator,49 with realistic sampling noise and coherence time fluctuations (details given in Section IV O). We plot all simulated optimization trajectories on Fig. 1, and highlight the median \(({R}_{{\rm{H-H}}},E({R}_{{\rm{H-H}}}))\) of the first \(7\) steps. Despite the rather dramatic variations between different gradient descent simulations, we observe all converging to within similar error bars, showing that our methods are indeed stable.

Illustration of geometry optimization of the H\({}_{2}\) molecule. A classical optimization algorithm (Newton) minimizes the estimation of the true ground state energy (dark blue curve) on a superconducting transmon quantum computer (red crosses) as a function of the bond distance \({R}_{{\rm{H-H}}}\). To improve convergence, the quantum computer provides estimates of the FCI gradient (red arrows) and the Hessian calculated with the response method. Dashed vertical lines show the position of the FCI and estimated minima (error \(0.014\)Å). Light blue dashed lines show the median value of \(100\) density matrix simulations (Section IV O) of this optimization, with the shaded region the corresponding interquartile range.

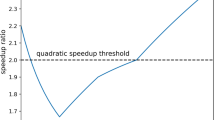

To study the advantage in geometry optimization from direct estimation of derivatives on a quantum computer, we compare in Fig. 2 our performance with gradient-free (Nelder–Mead) and Hessian-free (Broyden–Fletcher–Goldfarb–Shanno) optimization routines. We also compare the performance of the Newton’s method with an approximate Hessian from Hartree–Fock (HF) theory. All methods converge to near-identical minima, but all methods using experimentally provided gradient information converge about four times as fast as Nelder–Mead. The density–matrix simulations predict that the ETA method Hessians provide less stable convergence than the HF Hessians; we attribute this to the fact that the HF Hessian at a fixed bond distance does not fluctuate between iterations. We also find that the separation between Hessian and Hessian-free gradient descent methods is insignificant in this one-dimensional problem. However we expect this to become more stark at larger system sizes, as is observed typically in numerical optimization.50

Comparison of geometry optimization via different classical optimization routines, using a quantum computer to return energies and Jacobians as required, and estimating Hessians as required either via the ETA on the experimental device, or the Hartree–Fock (HF) approximation on a classical computer. Each algorithm was run till termination with a tolerance of \(1{0}^{-3}\), so as to be comparable to the final error in the system. (Inset) bar plot of the number of function evaluations of the four compared methods. Light blue points correspond to median \({N}_{{\rm{fev}}}\) from \(100\) density-matrix simulations (Section IV O) of geometry optimization, and error bars to the interquartile ranges.

To separate the performance of the energy derivative estimation from the optimization routine, we study the error in the energy \(E\), the Jacobian \(J\) and Hessian \(K\) given as \({\epsilon }_{A}=| {A}_{{\rm{FCI}}}-{A}_{{\rm{expt}}}|\), \((A=E,J,K).\) In Fig. 3, we plot these errors for different bond distances. For comparison we additionally plot the error in the HF Hessian approximation. We observe that the ETA Hessian is significantly closer than the HF-approximated Hessian to the true value, despite the similar performance in geometry optimization. The accuracy of the ETA improves at large bond distance, where the HF approximation begins to fail, giving hope that the ETA Hessian will remain appropriate in strongly correlated systems where this occurs as well. We also observe that the error in the ETA Hessian is approximately two times smaller than that of the energy, which we believe comes from error mitgation inherent in the QSE protocol.40 The large fluctuations in the error in the gradient (large green shaded area) suggest that the estimation error in these points is mostly stochastic and not biased (unlike the error in the energy, which is variationally bound).

Absolute error in energies and energy derivatives from an experimental quantum computation on \(11\) points of the bond dissociation curve of H\({}_{2}\). The error is dominated here by experimental sources (in particular qubit decay channels); error bars from sampling noise are smaller than the points themselves. Continuous lines connect the median value of \(100\) density matrix simulations at each points, with the shaded region corresponding to errors to the interquartile range.

Polarizability estimation

A key property to model in quantum chemistry is the polarizability, which describes the tendency of an atom or molecule to acquire an induced dipole moment due to a change in an external electric field \(\overrightarrow{F}\). The polarizability tensor may be calculated as \({\alpha }_{i,j}={\left.\frac{\partial E(\overrightarrow{F})}{\partial {F}_{i}\partial {F}_{j}}\right|}_{\overrightarrow{F}=0}.\)Footnote 2 In Fig. 4, we calculate the \(z\)-component of the polarizability tensor of H\({}_{2}\) in the ETA, and compare it to FCI and HF polarizability calculations on a classical computer. We observe good agreement to the target FCI result at low \({R}_{{\rm{H-H}}}\), finding a \(0.060\) a.u. (\(2.1 \%\)) error at the equilibrium bond distance (including the inaccuracy in estimating this distance). However, our predictions deviate from the exact result significantly at large bond distance (\({R}_{{\rm{H-H}}}\gtrsim 1.2\) Å). We attribute this deviation to the transformation used to reduce the description of H\({}_{2}\) to a two-qubit device (see Section IV N), which is no longer valid when adding the dipole moment operator to the Hamiltonian. To confirm this, we classically compute the FCI polarizability following the same transformation (which corresponds to projecting the larger operator onto a \(2\)-qubit Hilbert space). We find excellent agreement between this and the result from the quantum device across the entire bond dissociation curve. This implies that simulations of H\({}_{2}\) on a \(4\)-qubit device should match the FCI result within experimental error.

Discussion

In this work, we have surveyed possible methods for estimating energy gradients on a quantum computer, including two new techniques of our own design. We have estimated the computational complexity of these methods, both in terms of the accuracy required for the result and the size of the studied system. We have demonstrated the use of these methods on a small-scale quantum computing experiment, obtaining the equilibrium bond length of the H\({}_{2}\) molecule to \(0.014\) Å (\(2 \%\)) of the target FCI value, and estimating the polarizability at this bond length to within \(0.060\) a.u. (\(2.1 \%\)) of the same target.

Our methods do not particularly target the ground state over any other eigenstate of the system, and so can be used out-of-the-box for gradient estimation for excited state chemistry. They hold further potential for calculating frequency-domain Green’s functions in strongly correlated physics systems (as PPE estimates the gradient through a Green’s function calculation). However, throughout this work we made the assumption that the gap \(\delta\) between ground and excited state energies was sufficiently large to not be of concern (namely that \(\delta \propto {N}_{{\rm{sys}}}^{-1}\)). Many systems of interest (such as high-temperature superconductors) are characterized by gap closings in the continuum limit. How this affects PPE is an interesting question for future work. Further investigation is also required to improve some of the results drawn upon for this work, in particular reducing the number of measurements required during a VQE and improving amplitude estimation during single-round QPE.

Methods

Classical computation

The one- and two-electron integrals defining the fermionic Hamiltonian in Eq. (3) are obtained from a preliminary HF calculation that is assumed to be easily feasible on a classical computer. In non-relativistic theory the one-electron integrals are given by

where \(V({\bf{r}})\) is the electron-nuclear attraction potential from fixed nuclei at positions \({{\bf{R}}}_{i}\). The two-electron integrals are given by,

For simplicity we used a finite difference technique to compute the matrix representations of perturbations corresponding to a change in nuclear coordinates and an external electric field

and

where \(\delta \lambda =0.001\) corresponds to a small change in \(\lambda\). The above (perturbed) quantum chemical Hamiltonians have been determined within the Dirac program51 and transformed into qubit Hamiltonians using the OpenFermion52 package. This uses the newly developed, freely available53 OpenFermion-Dirac interface, allowing for the simulation of relativistic quantum chemistry calculations on a quantum computer. While a finite difference technique was sufficient for the present purpose, such schemes are sensitive to numerical noise and have a high computational cost when applied to larger molecular systems. A consideration of the analytical calculation of energy derivatives can be found in the Supplementary methods.

Approximate bound calculation details

In this section we detail our method for calculating the approximate bounds in Table 1. We first estimate the error \(\epsilon\) (Table 2, first row; details of the non-trivial calculations for the PPE and ETA methods given in Section IV H and Section IV M), respectively. Separately, we may calculate the time cost by multiplying the number of circuits, the number of repetitions of said circuits (\({n}_{{\rm{m}}}\), \({N}_{{\rm{m}}}\), and \(K\) depending on the method), and the time cost of each circuit (Table 2, second row). (This assumes access to only a single quantum processor, and can in some situations be improved by simultaneous measurement of commuting terms, as discussed in Section IV C.) We then choose the scaling of the number of circuit repetitions as a function of the other metaparameters to fix \(\epsilon\) constant (Table 2, third row). We finally substitute the lower and upper bounds for these metaparameters in terms of the system size as stated throughout the remaining sections. For reference, we summarize these bounds in Table 3.

Quantum simulation of the electronic structure problem — preliminaries

To represent the electronic structure problem on a quantum computer, we need to rewrite the fermionic creation and annihilation operators \({\hat{c}}_{i}^{\dagger }\), \({\hat{c}}_{i}\) in terms of qubit operators (e.g., elements of the Pauli basis \({{\mathbb{P}}}^{N}={\{I,X,Y,Z\}}^{\otimes N}\)). This is necessarily a non-local mapping, as local fermionic operators anti-commute, while qubit operators commute. A variety of such transformations are known, including the Jordan–Wigner,54,55 and Bravyi–Kitaev56 transformations, and more recent developments.57,58,59,60,61,62,63,64

After a suitable qubit representation has been found, we need to design quantum circuits to implement unitary transformations. Such circuits must be constructed from an appropriate set of primitive units, known as a (universal) gate-set. For example, one might choose the set of all single-qubit operators, and a two-qubit entangling gate such as the controlled-NOT, C-Phase, or iSWAP gates.65 One can then build the unitary operators \({e}^{i\theta \hat{P}}\) for \(\hat{P}\in {{\mathbb{P}}}^{N}\) exactly (in the absence of experimental noise) with a number of units and time linear in the size of \(\hat{P}\).66 (Here, size refers to the number of non-identity tensor factors of \(\hat{P}\).) Optimizing the scheduling and size of these circuits is an open area of research, but many improvements are already known.67

Transformations of a quantum state must be unitary, which is an issue if one wishes to prepare, e.g., \(\partial \hat{H}/\partial \lambda \left|{\Psi }_{0}\right\rangle\) on a quantum register (\(\partial \hat{H}/\partial \lambda\) is almost always not unitary). To circumvent this, one must decompose \(\partial \hat{H}/\partial \lambda\) as a sum of \({N}_{{\rm{U}}}\) unitary operators, perform a separate circuit for each unitary operator, and then combine the resulting measurements as appropriate. Such a decomposition may always be performed using the Pauli group (although better choices may exist). Each such decomposition incurs a multiplicative cost of \({N}_{{\rm{U}}}\) to the computation time, and further increases the error in any final result by at worst a factor of \({N}_{{\rm{U}}}^{1/2}\). This makes the computational complexities reported in Table 2 highly dependent on \({N}_{{\rm{U}}}\). The scaling of \({N}_{{\rm{U}}}\) with the system size is highly dependent on the operator to be decomposed and the choice of decomposition. When approximating this in Table 3 we use a range between \(O({N}_{{\rm{sys}}})\) (which would suffice for a local potential in a lattice model) to \(O({N}_{{\rm{sys}}}^{4})\) (for a two-body interaction).

To interface with the outside world, a quantum register needs to be appropriately measured. Similarly to unitary transformations, one builds these measurements from primitive operations, typically the measurement of a single qubit in the \(Z\) basis. This may be performed by prior unitary rotation, or by decomposing an operator \(\hat{O}\) into \({N}_{{\rm{T}}}\) Hermitian terms \({\hat{O}}_{i}\) (which may be measured separately). \({N}_{{\rm{T}}}\) differs from \({N}_{{\rm{U}}}\) defined above, as the first is for a Hermitian decomposition of a derivative operator and the second is for a unitary decomposition. Without a priori knowledge that the system is near an eigenstate of a operator \(\hat{O}\) to be measured, one must perform \({n}_{{\rm{m}}}\) repeated measurements of each \({\hat{O}}_{i}\) to estimate \(\langle \hat{O}\rangle\) to an accuracy \(\propto {n}_{{\rm{m}}}^{-1/2}{N}_{{\rm{T}}}^{1/2}\). As such measurement is destructive, this process requires \({n}_{{\rm{m}}}{N}_{{\rm{T}}}\) preparations and pre-rotations on top of the measurement time. This makes the computational costs reported in Table 2 highly dependent on \({N}_{{\rm{T}}}\). The scaling of \({N}_{{\rm{T}}}\) with the system size \({N}_{{\rm{sys}}}\) is highly dependent on the operator \(\hat{O}\) to be measured and the choice of measurements to be made.11,33 In Table 3, we assume a range between \(O({N}_{{\rm{sys}}})\) and \(O({N}_{{\rm{sys}}}^{4})\) to calculate the approximate computation cost. This is a slight upper bound, as terms can be measured simultaneously if they commute, and error bounds may be tightened by accounting for the covariance between non-commuting terms.11 The details on how this would improve the asymptotic scaling are still lacking in the literature, however, and so we leave this as an obvious target for future work.

Throughout this text we require the ability to measure a phase \({e}^{i\phi }\) between the \(\left|0\right\rangle\) and \(\left|1\right\rangle\) states of a single qubit. (This information is destroyed by a measurement in the \(Z\) basis, which may only obtain the amplitude on either state.) Let us generalize this to a mixed state on a single qubit, which has the density matrix65

where \({p}_{0}+{p}_{1}=1\), and \(0\le {p}_{+}\le \sqrt{{p}_{0}{p}_{1}}\le 0.5\). If one repeatedly performs the two circuits in Fig. 5 (which differ by a gate \(R=I\) or \(R={R}_{Z}={e}^{i\frac{\pi }{4}Z}\)), and estimates the probability of a final measurement \(m=0,1\), one may calculate

We define the circuit element \({M}_{T}\) throughout this work as the combination of the two circuits to extract a phase using this equation. As above, the error in estimating the real and imaginary parts of \(2{p}_{+}{e}^{i\phi }\) scales as \({n}_{{\rm{m}}}^{-1/2}\).

Definition of the circuit element \({M}_{T}\) used throughout this work to estimate the phase \({e}^{i\phi }\) on a single qubit. This is done by repeatedly preparing and measuring the qubit along two different axis, by a combination of rotation and Hadamard gates and measurement \({M}_{Z}\) in the computational basis. The final measurements may then be combined via Eq. (22).

Hamiltonian Simulation

Optimal decompositions for complicated unitary operators are not in general known. For the electronic structure problem, one often wants to perform time evolution by a Hamiltonian \(\hat{H}\), requiring a circuit for the unitary operator \(U={e}^{i\hat{H}t}\). For a local (fermionic or qubit) Hamiltonian, the length \({T}_{{\rm{U}}}\) of the required circuit is polynomial in the system size \({N}_{{\rm{sys}}}\). However, the coefficient of this polynomial is often quite large; this depends on the chosen Hamiltonian, its basis set representation, the filling factor \(\eta\) (i.e., number of particles), and whether additional ancilla qubits are used.4,5 Moreover, such circuits usually approximate the target unitary \(U\) with some \(\tilde{U}\) with some bounds on the error \({\epsilon }_{{\rm{H}}}=\parallel U-\tilde{U}{\parallel }_{{\rm{S}}}\). This bound \({\epsilon }_{{\rm{H}}}\) is proportional to the evolution time \(t\), providing a ‘speed limit’ for such simulation.68 For the electronic structure problem, current methods achieve scaling between \(O({N}_{{\rm{sys}}}^{2})\)69 and \(O({N}_{{\rm{sys}}}^{6})\)67,70 for the circuit length \({T}_{{\rm{U}}}\), assuming \(\eta \propto {N}_{{\rm{sys}}}\) (and fixed \(t\), \(\epsilon\)). (When \(\eta\) is sublinear in \({N}_{{\rm{sys}}}\), better results exist.71) The proven \(O({N}_{{\rm{sys}}}^{6})\) scaling is an upper bound, and most likely reduced by recent work.72,73 For simpler models, such as the Hubbard model, scalings between \(O(1)\) and \(O({N}_{{\rm{sys}}})\) are available.64,74 As we require \(t\propto {N}_{{\rm{sys}}}^{-1}\) for the purposes of phase estimation (described in Section IV G), this scaling is reduced by an additional factor throughout this work (though this cannot reduce the scaling below \(O(1)\)). For Table 3, we use a range of \({T}_{{\rm{U}}}=O(1)\) and \({T}_{{\rm{U}}}=O({N}_{{\rm{sys}}}^{5})\) when approximating the scaling of our methods with the system size.

Ground state preparation and measurement

A key requirement for our derivative estimation methods is the ability to prepare the ground state \(\left|{\Psi }_{0}\right\rangle\) or an approximation to it on the system register. Various methods exist for such preparation, including QPE (see Section IV G), adiabatic state preparation,75 VQE,10,11 and more recent developments.76,77 Some of these preparation methods (in particular adiabatic and variational methods) are unitary, whilst others (phase estimation) are projective. Given a unitary preparation method, one may determine whether the system remains in the ground state by inverting the unitary and measuring in the computational basis (Section IV C). By contrast, such determination for QPE requires another phase estimation round, either via multiple ancilla qubits or by extending the methods in Section IV G. Unitary preparation methods have a slight advantage in estimating expectation values of unitary operators \(\hat{U}\); the amplitude amplification algorithm39 improves convergence of estimating \(\langle \hat{U}\rangle\) from \(\epsilon \propto {T}^{-1/2}\) to \(\epsilon \propto {T}^{-1}\) (in a total computation time \(T\)). However, this algorithm requires repeated application of the unitary preparation while maintaining coherence, which is probably not achievable in the near-term. We list the computation time in Tables 1 and 2 both when amplitude amplification is (marked with \(* *\)) and is not available.

Regardless of the method used, state preparation has a time cost that scales with the total system size. For QPE, this is the time cost \(K{T}_{{\rm{U}}}\) of applying the required estimation circuits, where \(K\) is the total number of applications of \({e}^{i\hat{H}t}\).78 The scaling of a VQE is dependent on the variational ansatz chosen.11,33 The popular UCCSD ansatz for the electronic structure problem has a \(O({N}_{{\rm{sys}}}^{5})\) computational cost if implemented naively. However, recent work suggests aggressive truncation of the number of variational terms can reduce this as far as \(O({N}_{{\rm{sys}}}^{2})\).33 We take this as the range of scalings for our approximations in Table 1.

Systematic error from ground state approximations (state error)

Traditionally, VQEs are not guaranteed to prepare the true ground state \(\left|{\Psi }_{0}\right\rangle\), but instead some approximate ground state

Recent work has provided means of expanding VQEs iteratively to make the wavefunction error \(1-| {a}_{0}{| }^{2}\) arbitrarily small,79,80,81 although it is unclear how the time cost of these procedures scale with the system size. One may place very loose bounds on the error induces in the energy

where here \(\parallel \hat{H}{\parallel }_{{\rm{S}}}\) is the spectral norm of the Hamiltonian (its largest eigenvalue). (Note that while in general \(\parallel \hat{H}{\parallel }_{{\rm{S}}}\) is difficult to calculate, reasonable approximations are usually obtainable.) As \(\left|{\tilde{\Psi }}_{0}\right\rangle\) is chosen to minimize the approximate energy \({\tilde{E}}_{0}\), one expects to be much closer to the smaller bound than the larger. For an operator \(\hat{D}\) (such as a derivative operator \(\partial \hat{H}/\partial \lambda\)) other than the Hamiltonian, cross-terms will contribute to an additional error in the expectation value \({D}_{0}=\langle | \hat{D}| \rangle\)

One can bound this above in turn using the fact that

which leads to

Combining this with the error in the energy gives the bound

This ties the error in our derivative to the energy in our error, but with a square root factor that unfortunately slows down the convergence when the error is small. (This factor comes about precisely because we do not expect to be in an eigenstate of the derivative operator.) Unlike the above energy error, we cannot expect this bound to be loose without a good reason to believe that the orthogonal component \({\sum }_{j>0}{a}_{j}\left|{\Psi }_{j}\right\rangle\) has a similar energy gradient to the ground state. This will often not be the case; the low-energy excited state manifold is usually strongly coupled to the ground state by a physically-relevant excitation, causing the energies to move in opposite directions. Finding methods to circumvent this issue are obvious targets for future research. For example, one could optimize a VQE on a cost function other than the energy. One could also calculate the gradient in a reduced Hilbert space (see Section IV M) using eigenstates of \({\hat{H}}^{({\rm{QSE}})}+\epsilon {\hat{D}}^{({\rm{QSE}})}\) with small \(\epsilon\) to ensure the coupling is respected.

Quantum phase estimation

Non-trivial measurement of a quantum computer is of similar difficulty to non-trivial unitary evolution. Beyond learning the expectation value of a given Hamiltonian \(\hat{H}\), one often wishes to know specific eigenvalues \({E}_{i}\) (in particular for the electronic structure problem, the ground and low-excited state energies). This may be achieved by QPE.9 QPE entails repeated Hamiltonian simulation (as described above), conditional on an ancilla qubit prepared in the \(\left|+\right\rangle =\frac{1}{\sqrt{2}}(\left|0\right\rangle +\left|1\right\rangle )\) state. (The resource cost in making the evolution conditional is constant in the system size.) Such evolution causes phase kickback on the ancilla qubit; if the system register was prepared in the state \({\sum }_{j}{a}_{j}\left|{\Psi }_{j}\right\rangle\), the combined (system plus ancilla) state evolves to

Repeated tomography at various \(k\) allows for the eigenphases \({E}_{j}\) to be inferred, up to a phase \({E}_{j}t+2\pi \equiv {E}_{j}t\). This inference can be performed directly with the use of multiple ancilla qubits,9 or indirectly through classical post-processing of a single ancilla tomographed via the \({M}_{T}\) gate of Fig. 5.39,78,82,83,84,85

The error in phase estimation comes from two sources; the error in Hamiltonian simulation and the error in the phase estimation itself. The error in Hamiltonian simulation may be bounded by \({\epsilon }_{{\rm{H}}}\) (as calculated in the previous section), which in turn sets the time for a single unitary \({T}_{U}\). Assuming a sufficiently large gap to nearby eigenvalues, the optimal scaling of the error in estimating \({E}_{j}\) is \({A}_{j}^{-1}{t}^{-1}{K}^{-1}\) (where \({A}_{j}=| {a}_{j}{| }^{2}\) is the amplitude of the \(j\)th eigenstate in the prepared state). Note that the phase equivalence \({E}_{j}t+2\pi ={E}_{j}t\) sets an upper bound on \(t\); in general we require \(t\propto \parallel \hat{H}{\parallel }_{S}\), which typically scales with \({N}_{{\rm{sys}}}\). (This scaling was incorporated into the estimates of \({T}_{{\rm{U}}}\) in the previous section.) The scaling of the ground state amplitude \({A}_{0}\) with the system size is relatively unknown, although numeric bounds suggest that it scales approximately as \(1-\alpha {N}_{{\rm{sys}}}\)3 for reasonable \({N}_{{\rm{sys}}}\), with \(\alpha\) a small constant. Approximating this as \({N}_{{\rm{sys}}}^{-1}\) implies that \(K\propto {N}_{{\rm{sys}}}^{2}\) is required to obtain a constant error in estimating the ground state energy.

The error in estimating an amplitude \({A}_{j}\) during single-ancilla QPE has not been thoroughly investigated. A naive least-squares fit to dense estimation leads to a scaling of \({n}_{{\rm{m}}}^{-1/2}{k}_{\max }^{-1/3}\), where \({n}_{{\rm{m}}}\) is the number of experiments performed at each point \(k=1,\ldots ,{k}_{\max }\). One requires to perform controlled time evolution for up to \({k}_{\max }\approx \max ({t}^{-1},{A}_{j}^{-1})\) coherent steps in order to guarantee separation of \({\phi }_{j}\) from other phases. To obtain a constant error, one must then perform \({n}_{{\rm{m}}}\propto {k}_{\max }^{-\frac{2}{3}}\propto \max ({A}_{j}^{\frac{2}{3}},{t}^{\frac{2}{3}})\) measurements at each \(k\), implying \(K={n}_{{\rm{m}}}{k}_{\max }\propto \max ({A}_{j}^{-\frac{1}{3}},{t}^{-\frac{1}{3}})\) applications of \({e}^{i\hat{H}t}\) are required. For the ground state (\({A}_{j}={A}_{0}\)), this gives scaling \(K\propto {N}_{{\rm{sys}}}^{\frac{1}{3}}\). By contrast, multiple-ancilla QPE requires \({N}_{{\rm{m}}}\) repetitions of \({e}^{i\hat{H}t}\) with \({k}_{\max }=\max ({A}_{0}^{-1},{t}^{-1})\) to estimate \({A}_{0}\) with an error of \({({A}_{0}(1-{A}_{0}){N}_{{\rm{m}}}^{-1})}^{1/2}\). This implies that \({N}_{{\rm{m}}}\propto {A}_{0}\) measurements are sufficient, implying \(K\propto {N}_{{\rm{m}}}{A}_{0}^{-1}\) may be fixed constant as a function of the system size for constant error in estimation of \({A}_{0}\). Though this has not yet been demonstrated for single-round QPE, we expect it to be achievable and assume this scaling in this work.

The propagator and phase estimation method

In this section, we outline the circuits required and calculate the estimation error for our newly developed PPE method for derivative estimation. A prototype application of this method to a one-qubit system may be found in the Supplementary Notes of this work.

Estimating expectation values with single-ancilla QPE

Though single-ancilla QPE only weakly measures the system register, and does not project it into an eigenstate \(\left|{\Psi }_{j}\right\rangle\) of the chosen Hamiltonian, it can still be used to learn properties of the eigenstates beyond their eigenvalues \({E}_{j}\). In particular, if one uses the same ancilla qubit to control a further unitary operation \(\hat{U}\) on the system register, the combined (system plus ancilla) state evolves from Eq. (29) to

The phase accumulated on the ancilla qubit may then be calculated to be

Note that the gauge degree of freedom is not present in Eq. (31); if one re-defines \(\left|{\Psi }_{j}\right\rangle \to {e}^{i\theta }\left|{\Psi }_{j}\right\rangle\), one must send \({a}_{j}\to {e}^{-i{\phi }_{j}}{a}_{j}\), and the phase cancels out. One may obtain \(g(k)\) at multiple points \(k\) via tomography of the ancilla qubit (Fig. 5). From here, either Prony’s method or Bayesian techniques may be used to extract phases \({\omega }_{j}\approx {E}_{j}t\) and corresponding amplitudes \({\alpha }_{j}\approx {\sum }_{j^{\prime} }{a}_{j}{a}_{j^{\prime} }^{* }\langle {\Psi }_{j^{\prime} }| \hat{U}| {\Psi }_{j}\rangle\).85 The amplitudes \({\alpha }_{j}\) are often not terribly informative, but this changes if one extends this process over a family of operators \(U\). For instance, if one chooses \(U={e}^{ik^{\prime} \hat{H}t}\hat{V}{e}^{ik\hat{H}t}\) (with \(\hat{V}\) unitary), an application of the Prony’s method on \(k\) returns amplitudes of the form

from which a second application of Prony’s method obtains phases \({\omega }_{j^{\prime} }={E}_{j^{\prime} }t\) with corresponding amplitudes

Each subsequent application of QPE requires taking data with \({U}^{k}\) fixed from \(k=1,\ldots ,K\) to resolve \(K\) individual frequencies (and corresponding eigenvalues). However, if one is simply interested in expectation values \(\langle {\Psi }_{j}| \hat{V}| {\Psi }_{j}\rangle\) (i.e., when \(j=j^{\prime}\)), one may fix \(k=k^{\prime}\) and perform a single application of the Prony’s method, reducing the number of circuits that need to be applied from \(O({K}^{2})\) to \(O(K)\) (see Fig. 6). The error in the estimator \({\alpha }_{j,j}\) (Eq. (33)) may be bounded above by the error in the estimator \({\alpha }_{j}\) (Eq. (32)). However, to estimate \(\langle {\Psi }_{j}| \hat{V}| {\Psi }_{j}\rangle\) from Eq. (33), one needs to divide by \({A}_{j}\). This propagates directly to the error, which then scales as \({A}_{j}^{-1/2}{N}_{{\rm{m}}}^{-1/2}\). Thus constant error in estimating \(\langle {\Psi }_{j}| \hat{V}| {\Psi }_{j}\rangle\) is achieved if \(K\propto {N}_{{\rm{sys}}}\).

A circuit to measure \(\langle {\Psi }_{j}| \hat{V}| {\Psi }_{j}\rangle\) without preparing \(\left|{\Psi }_{j}\right\rangle\) on the system register. The tomography box \({M}_{T}\) is defined in Fig. 5.

PPE circuits

As presented, the operator \(\hat{V}\) in Fig. 6 must be unitary. However if one applies additional phase estimation within \(\hat{V}\) itself, one can extend this calculation to non-unitary operators, such as those given in Eq. (9). This is similar in nature to calculating the time-domain Green’s function for small \(t\) on a quantum computer (which has been studied before in refs. 41,42,43), but performing the transformation to frequency space with the Prony’s method instead of a Fourier transform to obtain better spectral resolution. It can also be considered a generalization of ref. 37 beyond the linear response regime. To calculate a \(X\)th order amplitude (Eq. (10)), one may set

which is unitary if the \({\hat{P}}_{x}\) are chosen to be a unitary decomposition of \(\partial \hat{H}/\partial {\lambda }_{x}\). In Fig. 7, we show two circuits for the estimation of a second order derivative with \(\hat{P}=\partial \hat{H}/\partial {\lambda }_{1}\), \(\hat{Q}=\partial \hat{H}/\partial {\lambda }_{2}\) (or some piece thereof). The circuits differ by whether QPE or a VQE is used for state preparation. If QPE is used for state preparation, the total phase accumulated by the ancilla qubit over the circuit is

Applying the Prony’s method at fixed \({k}_{1}\) will obtain a signal at phase \(2t{E}_{0}\) with amplitude

A second application of the Prony’s method in \({k}_{1}\) allows us to obtain the required amplitudes

and the eigenvalues \({\omega }_{j}\approx {E}_{j}t\), allowing classical calculation of both the amplitudes and energy coefficients required to evaluate Eq. (9). If a VQE is used for state preparation instead, one must post-select on the system register being returned to \(|\overrightarrow{0}\rangle\). Following this, the ancilla qubit will be in the state

with an accumulated phase \(g(k)={\alpha }_{0,0}(k)\) (where \({\alpha }_{0,0}\) is as defined above). Here, \(|{\Psi }_{0}^{({\rm{VQE}})}\rangle\) is the state prepared by the VQE unitary (which may not be the true ground state of the system). Both methods may be extended immediately to estimate higher-order amplitudes by inserting additional excitations and rounds of QPE, resulting in amplitudes of the form \({\alpha }_{0,0}({k}_{1},\ldots ,{k}_{X})\).

Circuits for calculating path amplitudes (Eq. (10)) for a second-order derivative on a quantum computer (individual units described throughout Section IV C), using either a VQE (top) or QPE (bottom) for state preparation. Both circuits require an \(N\)-qubit system register and a single ancilla qubit. Repeat measurements of these circuit at different values of \(k\) (top) or \({k}_{0}\) and \({k}_{1}\) (bottom) allow for the inference of the amplitude, as described in the text. \({M}_{Z}\) refers to a final measurement of all qubits in the computational basis, which is required for post-selection.

We note that the VQE post-selection does not constitute “throwing away data”; if the probability of post-selecting \(\left|{\Psi }_{0}\right\rangle\) is \(p\), we have

and as the variance in any term \({\alpha }_{i,j}({k}_{1},\ldots ,{k}_{X})\) scales as \(| {\alpha }_{i,j}({k}_{1},\ldots ,{k}_{X})(1-{\alpha }_{i,j}({k}_{1},\ldots ,{k}_{X}))|\), the absolute error in estimating a derivative scales as \({p}^{1/2}\) (note the lack of minus sign). (Note here that the relative error scales as \({p}^{-1/2}\)).

Energy discretization (resolution error)

The maximum number of frequencies estimatable from a signal \(g(0),\ldots ,g(k)\) is \((k+1)/2\). (This can be seen by parameter counting; it differs from the bound of \(k\) for QPE85 as the amplitudes are not real.) As the time required to obtain \(g(k)\) scales at best linearly with \(k\) (Section IV D), we cannot expect fine enough resolution of all \({2}^{{N}_{{\rm{sys}}}}\) eigenvalues present in a \({N}_{{\rm{sys}}}\)-qubit system. Instead, a small amplitude \({\mathcal{A}}({j}_{1},\ldots ,{j}_{X})\) (\(| {\mathcal{A}}({j}_{1},\ldots ,{j}_{X})| \le \Delta\)) will be binned with paths \({\mathcal{A}}^{\prime} ({l}_{1},\ldots ,{l}_{X})\) of similar energy (\(\delta ={\max }_{x}| {E}_{{j}_{x}}-{E}_{{l}_{x}}| \ll \Delta\)), and labeled with the same energy \({E}_{{B}_{x}}\approx {E}_{{j}_{x}}\approx {E}_{{k}_{x}}\).85 Here, \(\Delta\) is controlled by the length of the signal \(g(k)\), i.e., \(\Delta \propto \max {(k)}^{-1}\). This grouping does not affect the amplitudes; as evolution by \({e}^{ik\hat{H}t}\) does not mix eigenstates (regardless of energy), terms of the form \(\left|{\Psi }_{{j}_{x}}\right\rangle \left\langle {\Psi }_{{l}_{x}}\right|\) do not appear. (This additional amplitude error would occur if one attempted to calculate single amplitudes of the form \(\langle {\Psi }_{j}| \hat{P}| {\Psi }_{k}\rangle\) on a quantum device, e.g., using the method in Section IV G or that of ref. 37, and multiply them classically to obtain a \(d\ >\ 1\)st order derivative.) The PPE method then approximates Eq. (9) as

Classical post-processing then need only sum over the (polynomially many in \(\Delta\)) bins \({B}_{x}\) instead of the (exponentially many in \({N}_{{\rm{sys}}}\)) eigenstates \(\left|{\Psi }_{{j}_{x}}\right\rangle\), which is then tractable.

To bound the resolution error in the approximation \({f}_{{\mathcal{A}}}({E}_{0};{E}_{{j}_{1}},\ldots ,{E}_{{j}_{{X}_{{\mathcal{A}}}-1}})\to {f}_{{\mathcal{A}}}({E}_{0};{E}_{{B}_{1}},\ldots ,{E}_{{B}_{{X}_{{\mathcal{A}}}-1}})\), we consider the error if \({E}_{j}\) were drawn randomly from bins of width \(\parallel \hat{H}{\parallel }_{{\rm{S}}}\Delta\) (where \(\parallel \hat{H}{\parallel }_{{\rm{S}}}\) is the spectral norm). The energy functions \(f\) take the form of \({X}_{{\mathcal{A}}}-1\) products of \(\frac{1}{{E}_{{j}_{x}}-{E}_{0}}\). If each term is independent, these may be bounded as

where \(\delta\) is the gap between the ground and excited states. Then, as the excitations \(\hat{P}\) are unitary, for each amplitude \({\mathcal{A}}\) one may bound

Propagating variances then obtains

where \({N}_{{\mathcal{A}}}\) is the number of amplitudes in the estimation of \(D\). As we must decompose each operator into unitaries to implement in a circuit, \({N}_{{\mathcal{A}}}\propto {N}_{{\rm{U}}}^{d}\).

This bound is quite loose; in general we expect excitations \(\partial \hat{H}/\partial \lambda\) to couple to low-level excited states, which lie in a constant energy window (rather than one of width \(\parallel \hat{H}{\parallel }_{S}\)), and that contributions from different terms should be correlated (implying that \({N}_{{\mathcal{A}}}\) should be treated as constant here). This implies that one may take \(\Delta\) roughly constant in the system size, which we assume in this work.

Sampling noise error

We now consider the error in calculating Eq. (38) from a finite number of experiments (which is separate to the resolution error above). Following Section IV G we have that, if QPE is used for state preparation

If one were to use a VQE for state preparation, the factors of \({A}_{0}\) would be replaced by the state error of Section IV F. We have not included this calculation in Table 2 for the sake of simplicity. Then, assuming each term in Eq. (38) is independently estimated, we obtain

Substituting the individual scaling laws one obtains

where again \({N}_{{\mathcal{A}}}\propto {N}_{U}^{d}\). This result is reported in Table 2.

Eigenstate truncation approximation details

In this section, we outline the classical post-processing required to evaluate Eq. (9) in the ETA, using QSE to generate approximate eigenstates. We then calculate the complexity cost of such estimation, and discuss the systematic error in an arbitrary response approximation from Hilbert space truncation.

The chosen set of approximate excited states \(\left|{\tilde{\Psi }}_{j}\right\rangle\) defines a subspace \({{\mathcal{H}}}^{({\rm{QSE}})}\) of the larger FCI Hilbert space \({{\mathcal{H}}}^{({\rm{FCI}})}\). To calculate expectation values within this subspace, we project the operators \(\hat{O}\) of interest (such as derivatives like \(\partial \hat{H}/\partial \lambda\)) onto \({{\mathcal{H}}}^{({\rm{QSE}})}\), giving a set of reduced operators \({\hat{O}}^{({\rm{QSE}})}\) (\({O}_{i,j}^{({\rm{QSE}})}=\langle {\tilde{\Psi }}_{i}| \hat{O}| {\tilde{\Psi }}_{j}\rangle\)). These are \({N}_{{\rm{E}}}\times {N}_{{\rm{E}}}\)-dimensional classical matrices, which may be stored and operated on in polynomial time. Methods to obtain the matrix elements \({O}_{i,j}^{({\rm{QSE}})}\) are dependent on the form of the \(\left|{\tilde{\Psi }}_{j}\right\rangle\) chosen. Within the QSE, one can obtain these by directly measuring40

using the techniques outlined in Section IV C, and rotating the basis from \(\{|{\chi }_{j}\rangle \}\) to \(\{|{\tilde{\Psi }}_{j}\rangle \}\) (using Eq. (14)).

The computational complexity for a derivative calculation within the QSE is roughly independent of the choice of \(\left|{\tilde{\Psi }}_{j}\right\rangle\). The error \(\epsilon\) may be bounded above by the error in each term of the \({N}_{{\rm{E}}}\times {N}_{{\rm{E}}}\) projected matrices, which scales as either \({N}_{{\rm{T}}}^{1/2}{n}_{{\rm{m}}}^{-1/2}\) (when directly estimating), \({A}_{j}^{-1/2}{N}_{{\rm{m}}}^{-1/2}\) (when estimating via QPE), or \({N}_{{\rm{U}}}^{1/2}{K}^{-1}{n}_{{\rm{m}}}^{-1/2}\) (using the amplitude estimation algorithm). We assume that the \({N}_{{\rm{E}}}^{2}\) terms are independently estimated, in which case \(\epsilon\) scales with \({N}_{{\rm{E}}}\). In general this will not be the case, and \(\epsilon\) could scale as badly as \({N}_{{\rm{E}}}^{2}\), but we do not expect this to be typical. Indeed, one can potentially use the covariance between different matrix elements to improve the error bound.40 As we do not know the precise improvement this will provide, we leave any potential reduction in the computational complexity stated in Table 2 to future work. The calculation requires \({n}_{{\rm{m}}}\) repetitions of \({N}_{{\rm{T}}}\) circuits for each pair of \({N}_{{\rm{E}}}\) excitations, leading to a total number of \({n}_{{\rm{m}}}{N}_{{\rm{T}}}{N}_{{\rm{E}}}^{2}\) preparations (each of which has a time cost \({T}_{{\rm{P}}}\)), as stated in Table 2. (With the amplitude amplification algorithm, the dominant time cost comes from running \(O({N}_{{\rm{T}}}{N}_{{\rm{E}}}^{2})\) circuits of length \(K{T}_{{\rm{P}}}\).)

Regardless of the method of generating eigenstates, the ETA incurs a systematic truncation error from approximating an exponentially large number of true eigenstates \(\left|{\Psi }_{j}\right\rangle\) by a polynomial number of approximate eigenstates \(\left|{\tilde{\Psi }}_{j}\right\rangle ={\sum }_{l}{\tilde{A}}_{j,l}\left|{\Psi }_{l}\right\rangle\). This truncation error can be split into three pieces. Firstly, an excitation \(\hat{P}\left|{\Psi }_{0}\right\rangle\) may not lie within the response subspace \({{\mathcal{H}}}^{({\rm{QSE}})}\), in which case the pieces lying outside the space will be truncated away. Secondly, the term \(\hat{P}\left|{\tilde{\Psi }}_{j}\right\rangle \left\langle {\tilde{\Psi }}_{j}\right|\hat{Q}\) may contain terms of the form \(\hat{P}\left|{\Psi }_{j}\right\rangle \left\langle {\Psi }_{l}\right|\hat{Q}\), which do not appear in the original resolution of the identity. Thirdly, the approximate energies \({\tilde{E}}_{j}\) may not be close to the true energies \({E}_{j}\) (especially when \(\left|{\tilde{\Psi }}_{j}\right\rangle\) is a sum of true eigenstates \(\left|{\Psi }_{l}\right\rangle\) with large energy separation \({E}_{j}-{E}_{l}\)). If one chooses excitation operators \({\hat{E}}_{j}\) in the QSE so that \(\hat{P}={\sum }_{j}{p}_{j}{\hat{E}}_{j}\), one completely avoids the first error source. By contrast, if one chooses a truncated set of true eigenstates \(\left|{\tilde{\Psi }}_{j}\right\rangle =\left|{\Psi }_{j}\right\rangle\), one avoids the second and third error sources exactly. In the Supplementary Notes of this work, we expand on this point, and place some loose bounds on these error sources.

Experimental methods

The experimental implementation of the geometry optimization algorithm was performed using two of three transmon qubits in a circuit QED quantum processor. This is the same device used in ref. 86 (raw data is the same as in Fig. 1e of this paper, but with heavy subsequent processing). The two qubits have individual microwave lines for single-qubit gating and flux-bias lines for frequency control, and dedicated readout resonators with a common feedline. Individual qubits are addressed in readout via frequency multiplexing. The two qubits are connected via a common bus resonator that is used to achieve an exchange gate

via a flux pulse on the high-frequency qubit, with an uncontrolled additional single-qubit phase that was canceled out in post-processing. The exchange angle \(\theta\) may be fixed to a \(\pi /6000\) resolution by using the pulse duration (with a \(1\,{\rm{ns}}\) duration) as a rough knob and fine-tuning with the pulse amplitude. Repeat preparation and measurement of the state generated by exciting to \(\left|01\right\rangle\) and exchanging through one of \(41\) different choices of \(\theta\) resulted in the estimation of \(41\) two-qubit density matrices \({\rho }_{i}\) via linear inversion tomography of \(1{0}^{4}\) single-shot measurements per prerotation.87 All circuits were executed in eQASM88 code compiled with the QuTech OpenQL compiler, with measurements performed using the qCoDeS89 and PycQED90 packages.

To use the experimental data to perform geometry optimization for H\({}_{2}\), the ground state was estimated via a VQE.10,11 The Hamiltonian at a given H–H bond length \({R}_{{\rm{H-H}}}\) was calculated in the STO-3G basis using the Dirac package,51 and converted to a qubit representation using the Bravyi–Kitaev transformation, and reduced to two qubits via exact block-diagonalization12 using the Openfermion package52 and the Openfermion–Dirac interface.53 With the Hamiltonian \(\hat{H}({R}_{{\rm{H-H}}})\) fixed, the ground state was chosen variationally: \(\rho ({R}_{{\rm{H-H}}})={\min }_{{\rho }_{i}}{\rm{Trace}}[\hat{H}({R}_{{\rm{H-H}}}){\rho }_{i}]\). The gradient and Hessian were then calculated from \(\rho ({R}_{{\rm{H-H}}})\) using the Hellmann–Feynman theorem (Section II B) and ETA (Section IV M), respectively. For the ETA, we generated eigenstates using the QSE, with the Pauli operator \(XY\) as a single excitation. This acts within the number conserving subspace of the two-qubit Hilbert space, and, being imaginary, will not have the real-valued H\({}_{2}\) ground state as an eigenstate. (This in turn guarantees the generated excited state is linearly independent of the ground state.) For future work, one would want to optimize the choice of \(\theta\) at each distance \({R}_{{\rm{H-H}}}\), however this was not performed due to time constraints. We have also not implemented the error mitigation strategies studied in ref. 86 for the sake of simplicity.

Simulation methods

Classical simulations of the quantum device were performed in the full-density–matrix simulator (quantumsim).49 A realistic error model of the device was built using experimentally calibrated parameters to account for qubit decay (\({T}_{1}\)), pure dephasing (\({T}_{2}^{* }\)), residual excitations of both qubits, and additional dephasing of qubits fluxed away from the sweet spot (which reduces \({T}_{2}^{* }\) to \({T}_{2}^{* ,red}\) for the duration of the flux pulse). This error model further accounted for differences in the observed noise model on the individual qubits, as well as fluctuations in coherence times and residual excitation numbers. Further details of the error model may be found in ref. 86 (with device parameters in Table S1 of this reference).

With the error model given, \(100\) simulated experiments were performed at each of the \(41\) experimental angles given. Each experiment used unique coherence time and residual excitation values (drawn from a distribution of the observed experimental fluctuations), and had appropriate levels of sampling noise added. These density matrices were then resampled \(100\) times for each simulation.

Data availability

Experimental data and code for post-processing are available from the corresponding author upon reasonable request.

Code availability

All packages used in this work are open source and available via git repositories online.

Notes

Higher order (\(d\ge 4\)) amplitudes will eventually contain terms corresponding to disconnected excitations, which then are products of multiple terms of the form of Eq. (10). Our procedure may be extended to include these contributions; we have excluded them here for the sake of readibility.

The first-order derivative \(\partial E/\partial {F}_{i}\) gives the dipole moment, which is also of interest, but is zero for the hydrogen molecule.

References

Preskill, J. Quantum computing in the NISQ era and beyond. Quantum 2, 79 (2018).

Lloyd, S. Universal quantum simulators. Science 273, 1073–1078 (1996).

Reiher, M., Wiebe, N., Svore, K. M. & Wecker, D. Elucidating reaction mechanisms on quantum computers. Proc. Natl Acad. Sci. USA 114, 7555–7560 (2017).

Cao, Y. et al. Quantum chemistry in the age of quantum computing. Chem. Rev. 119, 10856–10915 (2019).

McArdle, S. et al. Quantum Computational Chemistry. Preprint at: arXiv:1808.10402. https://arxiv.org/abs/1808.10402 (2018).

Abrams, D. S. & Lloyd, S. Simulation of many-body fermi systems on a universal quantum computer. Phys. Rev. Lett. 79, 2586 (1997).

Zalka, C. Simulating quantum systems on a quantum computer. Proc. Royal Soc. Lond. A 454, 313–322 (1998).

Aspuru-Guzik, A., Dutoi, A. D., Love, P. J. & Head-Gordon, M. Simulated quantum computation of molecular energies. Science 309, 1704–1707 (2005).

Kitaev, A. Y. Quantum Measurements and the Abelian Stabilizer Problem. Preprint at: arXiv:quant-ph/9511026. https://arxiv.org/abs/quant-ph/9511026 (1995).

Peruzzo, A. et al. A variational eigenvalue solver on a photonic quantum processor. Nat. Commun. 5, 4213 (2014).

McClean, J. R., Romero, J., Babbush, R. & Aspuru-Guzik, A. The theory of variational hybrid quantum-classical algorithms. New J. Phys. 18, 023023 (2016).

O’Malley, P. J. J. et al. Scalable quantum simulation of molecular energies. Phys. Rev. X 6, 031007 (2016).

Kandala, A. et al. Hardware-efficient variational quantum eigensolver for small molecules and quantum magnets. Nature 549, 242 (2017).

Santagati, R. et al. Witnessing eigenstates for quantum simulation of hamiltonian spectra. Sci. Adv. 4, eaap9646 (2018).

Colless, J. I. et al. Computation of molecular spectra on a quantum processor with an error-resilient algorithm. Phys. Rev. X 8, 011021 (2018).

Dreizler, R. M. & Gross, E. K. U. Density Functional Theory: An Approach to the Quantum Many-Body Problem (Springer: Berlin. Heidelberg, 1990).

Shavitt, I. & Bartlett, R. J. Many-Body Methods in Chemistry and Physics: MBPT and Coupled-Cluster Theory (Cambridge University Press, 2009).

Booth, G. H., Thom, A. J. & Alavi, A. Fermion monte carlo without fixed nodes: a game of life, death, and annihilation in slater determinant space. J. Chem. Phys. 131, 054106 (2009).

Jensen, F. Introduction to Computational Chemistry, 2nd ed. (John Wiley & Sons, 2007).

Norman, P., Ruud, K. & Saue, T. Principles and Practices of Molecular Properties: Theory, Modeling, and Simulations. https://doi.org/10.1002/9781118794821 (John Wiley & Sons, 2018).

Schlegel, H. B. Geometry optimization. Wiley Interdiscip. Rev. Comput. Mol. Sci. 1, 790–809 (2011).

Marx, D. & Hutter, J. Ab Initio Molecular Dynamics: Basic Theory and Advance Methods. https://www.cambridge.org/nl/academic/subjects/physics/mathematical-methods/ab-initio-molecular-dynamics-basic-theory-and-advanced-methods?format=PB (Cambridge University Press, 2009).

Tully, J. C. Molecular dynamics with electronic transitions. J. Chem. Phys. 93, 1061–1071 (1990).

Behler, J. Perspective: machine learning potentials for atomistic simulations. J. Chem. Phys. 145, 170901 (2016).

Li, Z. & Liu, W. First-order nonadiabatic coupling matrix elements between excited states: a lagrangian formulation at the cis, rpa, td-hf, and td-dft levels. J. Chem. Phys. 141, 014110 (2014).

Curchod, B. F., Rothlisberger, U. & Tavernelli, I. Trajectory-based nonadiabatic dynamics with time-dependent density functional theory. ChemPhysChem 14, 1314–1340 (2013).

Faraji, S., Matsika, S. & Krylov, A. I. Calculations of non-adiabatic couplings within equation-of-motion coupled-cluster framework: theory, implementation, and validation against multi-reference methods. J. Chem. Phys. 148, 044103 (2018).

Jordan, S. P. Fast quantum algorithm for numerical gradient estimation. Phys. Rev. Lett. 95, 050501 (2005).

Gilyén, A., Arunachalam, S. & Wiebe, N. Optimizing quantum optimization algorithms via faster quantum gradient computation. Proc. Symp. Disc. Alg. 30, 1425–1444 (2019).

Dallaire-Demers, P.-L., Romero, J., Veis, L., Sim, S. & Aspuru-Guzik, A. Low-depth circuit ansatz for preparing correlated fermionic states on a quantum computer. Quant. Sci. Tech 4, 045005 (2019).

Schuld, M., Bergholm, V., Gogolin, C., Izaac, J. & Killoran, N. Evaluating analytic gradients on quantum hardware. Phys. Rev. A 99, 032331 (2019).

Harrow, A. & Napp, J. Low-Depth Gradient Measurements Can Improve Convergence in Variational Hybrid Quantum-Classical Algorithms. Preprint at arXiv:1901.05374. https://arxiv.org/abs/1901.05374 (2019).

Romero, J. et al. Strategies for quantum computing molecular energies using the unitary coupled cluster ansatz. Quantum Sci. Technol. 4, 014008 (2018).

Guerreschi, G. G. & Smelyanskiy, M. Practical Optimization for Hybrid Quantum-Classical Algorithms. Preprint at: arXiv:1701.01450. https://arxiv.org/abs/1701.01450 (2017).

Kassal, I. & Aspuru-Guzik, A. Quantum algorithm for molecular properties and geometry optimization. J. Chem. Phys. 131, 224102 (2009).

McArdle, S. Mayorov, A. Shan, X. Benjamin, S. & Yuan, X. Quantum Computation of Molecular Vibrations. Chem. Sci. 10, 5725–5735 (2019).

Roggero, A. Carlson, J. Linear Response on a Quantum Computer. Phys. Rev. C 100, 034610 (2019).

Visscher, L. The Dirac equation in quantum chemistry: strategies to overcome the current computational problems. J. Comp. Chem. 23, 759–766 (2002).

Knill, E., Ortiz, G. & Somma, R. D. Optimal quantum measurements of expectation values of observables. Phys. Rev. A 75, 012328 (2007).

McClean, J. R., Kimchi-Schwartz, M. E., Carter, J. & de Jong, W. A. Hybrid quantum-classical hierarchy for mitigation of decoherence and determination of excited states. Phys. Rev. A 95, 042308 (2017).

Wecker, D. et al. Solving strongly correlated electron models on a quantum computer. Phys. Rev. A 92, 062318 (2015).

Dallaire-Demers, P.-L. & Wilhelm, F. K. Method to efficiently simulate the thermodynamic properties of the fermi-hubbard model on a quantum computer. Phys. Rev. A 93, 032303 (2016).

Bauer, B., Wecker, D., Millis, A. J., Hastings, M. B. & Troyer, M. Hybrid quantum-classical approach to correlated materials. Phys. Rev. X 6, 031045 (2016).

Parrish, R. M., Hohenstein, E. G., McMahon, P. L. & Martinez, T. J. Quantum computation of electronic transitions using a variational quantum Eigensolver. Phys. Rev. Lett. 122, 230401 (2019).

Higgott, O., Wang, D. & Brierley, S. Variational quantum computation of excited states. Quantum 3, 156 (2019).

Endo, S., Jones, T., McArdle, S., Yuan, X. & Benjamin, S. Variational quantum algorithms for discovering Hamiltonian spectra. Phys. Rev. A 99, 062304 (2019)

Bartlett, R. J. Coupled cluster theory and its equation of motion extensions. Wiley Interdiscip. Rev. 2, 126–211 (2011).

Kassal, I., Jordan, S. P., Love, P. J., Mohseni, M. & Aspuru-Guzik, A. Polynomial-time quantum algorithm for the simulation of chemical dynamics. Proc. Natl Acad. Sci. USA 105, 18681 (2008).

O’Brien, T., Tarasinski, B. & DiCarlo, L. Density-matrix simulation of small surface codes under current and projected experimental noise. npj Quant. Inf. 3. https://doi.org/10.1038/s41534-017-0039-x (2017).

Nocedal, J. & Wright, S. Numerical Optimization (Springer Science & Business Media, 2006).

Saue, T. et al. DIRAC, a relativistic ab initio electronic structure program, Release DIRAC18. Available at: https://doi.org/10.5281/zenodo.2253986, see also http://www.diracprogram.org (2018).

McClean, J. R. et al. Openfermion: the electronic structure package for quantum computers. Preprint at: arXiv:1710.07629. https://arxiv.org/abs/1710.07629 (2017).

Senjean, B. https://github.com/bsenjean/Openfermion-Dirac.

Jordan, P. & Wigner, E. Über das Paulische Äquivalenzverbot. Z. Phys. 47, 631 (1928).

Ortiz, G., Gubernatis, J., Knill, E. & Laflamme, R. Quantum algorithms for fermionic simulations. Phys. Rev. A 64, 022319 (2001).

Bravyi, S. B. & Kitaev, A. Y. Fermionic quantum computation. Ann. Phys. 298, 210–226 (2002).

Verstraete, F. & Cirac, J. I. Mapping local Hamiltonians of fermions to local Hamiltonians of spins. J. Stat. Mech. Theory Exp. 2005, P09012 (2005).

Seeley, J. T., Richard, M. J. & Love, P. J. The Bravyi-Kitaev transformation for quantum computation of electronic structure. J. Chem. Phys. 137, 224109 (2012).

Whitfield, J. D., Havlíček, V. & Troyer, M. Local spin operators for fermion simulations. Phys. Rev. A 94, 030301 (2016).

Steudtner, M. & Wehner, S. Fermion-to-qubit mappings with varying resource requirements for quantum simulation. New J. Phys. 20, 063010 (2018).

Setia, K., Bravyi, S., Mezzacapo, A. & Whitfield, J. D. Superfast encodings for Fermionic quantum simulation. Phys. Rev. Res. 1, 033033 (2019).

Setia, K. & Whitfield, J. D. Bravyi-Kitaev superfast simulation of electronic structure on a quantum computer. J. Chem. Phys. 148, 164104 (2018).

Tranter, A., Love, P. J., Mintert, F. & Coveney, P. V. A comparison of the Bravyi-Kitaev and Jordan-Wigner transformations for the quantum simulation of quantum chemistry. J. Chem. Theory Comput. 14, 5617–5630 (2018).

O’Brien, T., Rożek, P. & Akhmerov, A. Majorana-based fermionic quantum computation. Phys. Rev. Lett. 120, 220504 (2018).

Nielsen, M. A. & Chuang, I. L. Quantum Computation and Quantum Information (Cambridge University Press, 2002). https://doi.org/10.1017/CBO9780511976667.

Whitfield, J. D., Biamonte, J. & Aspuru-Guzik, A. Simulation of electronic structure hamiltonians using quantum computers. Mol. Phys. 109, 735–750 (2011).

Hastings, M. B., Wecker, D., Bauer, B. & Troyer, M. Improving quantum algorithms for quantum chemistry. Quant. Inf. Comp. 15, 1 (2015).

Berry, D., Ahokas, G., Cleve, R. & Sanders, B. Efficient quantum algorithms for simulating sparse hamiltonians. Comm. Math. Phys. 270, 359–371 (2007).

Low, G. & Wiebe, N. Hamiltonian Simulation in the Interaction Picture. Preprint at: arXiv:1805.00675. https://arxiv.org/abs/1805.00675 (2018).

Motzoi, F., Kaicher, M. & Wilhelm, F. Linear and logarithmic time compositions of quantum many-body operators. Phys. Rev. Lett. 119, 160503 (2017).

Babbush, R., Berry, D., McClean, J. & Neven, H. Quantum simulation of chemistry with sublinear scaling to the continuum. npj Quant. Inf. 5, 92 (2019).

Motta, M. et al. Low Rank Representations for Quantum Simulation of Electronic Structure. Preprint at: arXiv:1808.02625. https://arxiv.org/abs/1808.02625 (2018).

Campbell, E. A Random Compiler for Fast Hamiltonian Simulation. Phys. Rev. Lett. 123, 070503 (2019).

Kivlichan, I. et al. Improved fault-tolerant quantum simulation of condensed-phase correlated electrons via trotterization. Preprint at: arXiv:1902.10673. https://arxiv.org/abs/1902.10673 (2019).

Wu, L.-A. Byrd, M. & Lidar, D. Polynomial-time simulation of pairing models on a quantum computer. Phys. Rev. Lett. 89. https://journals.aps.org/prl/abstract/10.1103/PhysRevLett.89.057904 (2002).

Motta, M. et al. Quantum Imaginary Time Evolution, Quantum Lanczos, and Quantum Thermal Averaging. Preprint at: arXiv:1901.07653. https://arxiv.org/abs/1901.07653 (2019).

Kyriienko, O. Quantum inverse iteration algorithm for near-term quantum devices. Preprint at: arXiv:1901.09988. https://arxiv.org/abs/1901.09988 (2019).

Higgins, B. L. et al. Demonstrating Heisenberg-limited unambiguous phase estimation without adaptive measurements. New J. Phys. 11, 073023 (2009).

Grimsley, H. R., Economou, S. E., Barnes, E. & Mayhall, N. J. An adaptive variational algorithm for exact molecular simulations on a quantum computer. Nat. Commun. 10, 3007 (2019).

Ryabinkin, I. G. & Genin, S. N. Iterative Qubit Coupled Cluster Method: A Systematic Approach to the Full-ci Limit in Quantum Chemistry Calculations on Nisq Devices. Preprint at: arXiv:1906.11192. https://arxiv.org/abs/1906.11192 (2019).

Herasymenko, Y. & O’Brien, T.E. A Diagrammatic Approach to Variational Quantum Ansatz Construction. Preprint at: arXiv:1907.08157. https://arxiv.org/abs/1907.08157 (2019).

Svore, K., Hastings, M. & Freedman, M. Faster phase estimation. Quant. Inf. Comp. 14, 306–328 (2013).

Kimmel, S., Low, G. H. & Yoder, T. J. Robust calibration of a universal single-qubit gate set via robust phase estimation. Phys. Rev. A 92, 062315 (2015).

Wiebe, N. & Granade, C. Efficient bayesian phase estimation. Phys. Rev. Lett. 117, 010503 (2016).

O’Brien, T., Tarasinski, B. & Terhal, B. Quantum phase estimation of multiple eigenvalues for small-scale (noisy) experiments. New J. Phys. 21 https://iopscience.iop.org/article/10.1088/1367-2630/aafb8e (2019).

Sagastizabal, R. et al. Error Mitigation by symmetry verification on a variational quantum Eigensolver. Phys. Rev. A 100, 010302(R) (2019).

Saira, O.-P. et al. Entanglement genesis by ancilla-based parity measurement in 2D circuit QED. Phys. Rev. Lett. 112, 070502 (2014).

Fu, X. et al. eQASM: an executable quantum instruction set architecture. In Proceedings of 25th IEEE International Symposium on High-Performance Computer Architecture (HPCA), 224–237 (IEEE, 2019).

Johnson, A. & Ungaretti, G. et al. QCoDeS. https://github.com/QCoDeS/Qcodes (2016).

Rol, M. A. et al. PycQED. https://github.com/DiCarloLab-Delft/PycQED_py3 (2016).

Acknowledgements

We would like to thank B.M. Terhal, I. Kassal, V.P. Ostroukh, and C.H. Price for useful discussions, M. Singh, M.A. Rol, C.C. Bultink, X. Fu, N. Muthusubramanian, A. Bruno, M. Beekman, N. Haider, F. Luthi, B. Tarasinski, and C. Dickel for experimental assistance, and C.W.J. Beenakker and D. Hohl for support during this project. This research was funded by the Netherlands Organization for Scientific Research (NWO/OCW), an ERC Synergy Grant, Shell Global Solutions BV, and IARPA (U.S. Army Research Office grant W911NF-16-1-0071).

Author information

Authors and Affiliations

Contributions

New quantum algorithms were designed by T.E.O. Errors were estimated by T.E.O., B.S., and A.D. Experiment was performed by R.S. and L.D.C. Density matrix simulations were performed by X.B.M. Computational chemistry calculations performed by B.S. and L.V. Gradient descent and polarizability calculations from experimental and simulated data were performed by T.E.O. and B.S. Relevance to quantum chemistry and suggested applications provided by F.B. and L.V. Paper written by T.E.O., B.S., F.B., and L.V. (with input from all other authors).

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions