Abstract

The Eliashberg theory of superconductivity accounts for the fundamental physics of conventional superconductors, including the retardation of the interaction and the Coulomb pseudopotential, to predict the critical temperature Tc. McMillan, Allen, and Dynes derived approximate closed-form expressions for the critical temperature within this theory, which depends on the electron–phonon spectral function α2F(ω). Here we show that modern machine-learning techniques can substantially improve these formulae, accounting for more general shapes of the α2F function. Using symbolic regression and the SISSO framework, together with a database of artificially generated α2F functions and numerical solutions of the Eliashberg equations, we derive a formula for Tc that performs as well as Allen–Dynes for low-Tc superconductors and substantially better for higher-Tc ones. This corrects the systematic underestimation of Tc while reproducing the physical constraints originally outlined by Allen and Dynes. This equation should replace the Allen–Dynes formula for the prediction of higher-temperature superconductors.

Similar content being viewed by others

Introduction

Although the theory of electron–phonon superconductivity due to Bardeen–Cooper–Schrieffer, Gor’kov, Eliashberg, Migdal, and others is well-established, it has not historically aided in the discovery of new superconductors. The materials space to search for new superconductors is vast, and it is, therefore, desirable to find a practical way to use theory as a guide. Recent computational developments may allow a new approach to superconducting materials discovery based on ab-initio and materials-genome type methods1,2,3.

One approach to this problem, pioneered by McMillan4 and Allen and Dynes5, is to search for a formula for Tc based on materials-specific parameters derived from the Eliashberg equations of superconductivity. These parameters, mostly moments of the electron–phonon spectral function α2F(ω), can be determined by experiment or, more recently, calculated within ab initio approaches. In principle, this allows one also to deduce how to optimize Tc if one can optimize one or more of these parameters.

The Allen–Dynes equation has played a crucial role in debates on how to achieve high-temperature superconductivity by both theorists, who use it to predict Tc, and by experimentalists, who extract λ from measured Tc and ωD. Nevertheless, it is important to recall that the Allen–Dynes equation has been derived from Eliashberg theory within an approximation where the momentum dependence of the Eliashberg function is neglected. It is based on 217 Eliashberg solutions of three types of α2F(ω) shapes (those obtained from tunneling data on Hg and Pb, and those obtained for a single Einstein mode).

There have been several important advances in providing more detailed solutions to the Eliashberg equations since the work of Allen and Dynes. Combescot solved the Eliashberg equations on the weak coupling side and obtained an expression for Tc that depends on \(\omega_{\mathrm{log}}\) and a shape-dependent integral6. Recently, Marsiglio et al. solved the Eliashberg equations numerically at small λ7,8, exhibiting some deviations of the theory from the BCS result in this limit, in particular the correction \(\frac{1}{\sqrt{e}}\) to the BCS Tc9,10,11. And of course the full equations can be solved numerically for any coupling, including the momentum dependence of α2F if desired12,13. The validity of the theory as λ increases is a subtle question, and has been the subject of a number of recent studies14,15,16,17,18,19.

In this paper, we solve the Eliashberg equations using different types of electron–phonon spectral functions, including multimodal Einstein-like spectra and a set of α2F obtained from first-principles calculations. We find that, while the Allen–Dynes formula accurately predicts the Eliashberg Tc for λ values near 1.6 (the coupling constant for Hg and Pb), it nevertheless deviates from the Eliashberg Tc when λ is significantly larger or smaller than 1.6 and when the shape of α2F(ω) differs from the simple unimodal Einstein model. This deficiency highlights the need to improve on Allen–Dynes to investigate the high-pressure, high-temperature hydrides of great current interest20.

In a previous paper, we used an analytical machine learning approach to try to improve on the Allen–Dynes formula, testing and training on tiny databases from the Allen–Dynes table of 29 superconducting materials21. This proof of principle work showed that the SISSO framework, properly constrained by physical law, could substantially improve the performance of the Allen–Dynes equation with a smaller number of parameters. Clearly, it is necessary to apply this approach to a more extensive and diverse database.

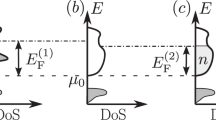

Here, we proceed more systematically and show how we can “teach the machine Eliashberg theory” by generating large databases of α2F functions from both real materials and single- and multimodal artificial ones and learning the results of Tc from solutions to the Eliashberg equations. We additionally include in our study α2F functions for superhydrides, extending training and testing to the higher λ range. We show that the Allen–Dynes equation fails in this region particularly badly, since it was designed to fit materials with the ratio of the Allen–Dynes parameters \({\bar{\omega }}_{2}/{\omega }_{{{\mathrm{log}}}\,}\simeq 1\), which is strongly violated in some of the higher-Tc materials. Here λ is the integral \(2\int\nolimits_{0}^{\infty }{\alpha }^{2}F(\omega )/\omega \ {\rm {d}}\omega\), the frequencies \({\bar{\omega }}_{n}\) are the nth root of the nth moment of the normalized distribution g(ω) = 2/(λω) α2F(ω), and \({\omega }_{{{\mathrm{log}}}\,}\equiv \exp \left [ \int_0^\infty \ln \omega \, g(\omega) \, d\omega \right ]\).

We begin by introducing the McMillan and Allen–Dynes equations, against which we will compare our results. McMillan4, in an attempt to improve on the BCS weak-coupling Tc, incorporated elements of Eliashberg theory22 into a phenomenological expression, relating Tc to physical parameters that could in principle be extracted from tunneling data23,

where μ* is the Coulomb pseudopotential and ωD is the Debye frequency. Note that the McMillan formula predicts a saturation of Tc in the strong-coupling limit, λ → ∞, for fixed ωD.

Allen and Dynes5 showed that the true Eliashberg Tc did not obey such a bound in this limit but rather grew as \(\sqrt{\lambda }\). They proposed an alternate approximate fit to Eliashberg theory based on data on a few low-Tc superconductors known in 1975

where f1 and f2 are factors depending on \(\lambda ,{\mu }^{* },{\omega }_{{{\mathrm{log}}}\,}\), and \({\bar{\omega }}_{2}\).

Results and discussion

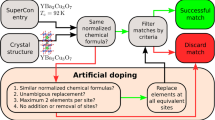

Figure 1 outlines our methods and computational workflow. We begin by collecting α2F(ω) spectral functions from ab initio calculations and augmenting the dataset with artificial spectral functions based on generated Gaussian functions. The Coulomb pseudopotential μ* is sampled as a free parameter and used, alongside the spectral functions, as an input to the Eliashberg equations. Eliashberg theory yields the superconducting gap function Δ, from which we extract \(T_{\mathrm{c}}^{\mathrm{E}}\). At the same time, we extract the quantities λ, \({\omega }_{{{\mathrm{log}}}\,}\), and \(\bar{\omega}_2\) from α2F. Next, we use machine learning techniques to learn the relationship between the four model inputs, or features, and the critical temperature from Eliashberg theory \({T}_{\mathrm{c}}^{\mathrm{E}}\). Finally, we compare the predictive models for Tc and discuss the feature-Tc relationships.

Computational details

We compile a set of 2874 electron–phonon spectral functions α2F(ω), summarized in Table 1. Of these, 13 are conventional phonon mediated superconductors, where we calculate α2F using the electron–phonon Wannier package (EPW)12,24 of the Quantum Espresso (QE) code25,26. An additional 42 (29 classic and 13 hydride superconductors) are obtained from the computational superconductivity literature. We augment the dataset by generating 2819 artificial multimodal α2F(ω) functions and calculating the corresponding Tc’s with the EPW code. The superconducting transition temperatures are estimated by using both the Allen–Dynes equation and by solving the isotropic Eliashberg equations. The raw data are available upon request.

The artificially generated α2F(ω) consist of three Gaussian peaks with randomly selected peak location and height

where G(ω) is a normalized Gaussian with width of 1/8 of the peak frequency ωi.

The total λ is then equal to the sum of the λi, which simplifies sampling of the space of spectral functions. The artificial trimodal α2F spectral functions resemble those of many realistic materials, see Fig. 2 for the example of LaAl2. The Allen–Dynes and Eliashberg Tc for the hydrides are obtained from published work (see refs. in Table 1).

To ensure efficient sampling of the input spaces, we select values of λ and μ* with pseudorandom Sobol sequences. As shown in Fig. 3, our uniform sampling scheme results in a set of artificially generated α2F corresponding to an approximately uniform distribution of Tc. Next, we removed artificial entries with Tc > 400 K to better reflect the distribution of realistic materials. While the histogram of μ* remains approximately uniform after this truncation, the histograms of λ, \({\omega }_{{{\mathrm{log}}}\,}\), and \({\bar{\omega }}_{2}\) become skewed towards lower values.

Data

In the Allen–Dynes formula, the “arbitrarily chosen” shape-dependent factor f2 is based on the numerical solutions using the spectral functions of Hg, Pb, and the Einstein model5. Because the number of α2F(ω) shapes is small, it is expected that the Allen–Dynes Tc (\({T}_{\,{{\mbox{c}}}}^{{{\mbox{AD}}}\,}\)) would have significant errors in some instances. Figure 4 illustrates such deviations for bimodal Gaussian spectral functions. So far, we discussed the α2F(ω) shapes in an abstract sense because there is no single parameter that uniquely determines their shape. Allen and Dynes proposed using the ratio \({\omega }_{\mathrm{log}} /{\bar{\omega }}_{2}\) as an indicator of the shape of α2F(ω). In Fig. 4, the ratio \({{T}_{{{\mathrm{c}}}}^{{{\mathrm{AD}}}}}/{{T}_{{{\mathrm{c}}}}^{{{\mathrm{E}}}}}\) is plotted against \({\omega}_{{{\mathrm{log}}}} / {\bar{\omega}}_{2}\) for λ = 0.6, 1, 2, 3 and 4. The results demonstrate that there can be significant differences between the Allen–Dynes \({T}_{{{\mathrm{c}}}}^{{{\mathrm{AD}}}}\) and Eliashberg \({T}_{{{\mathrm{c}}}}^{{{\mathrm{E}}}}\) even for some simple cases. The root mean square error in the Allen–Dynes paper is 5.6%. When the ratio \({\omega}_{{{\mathrm{log}}}} / {\bar{\omega}}_{2}\) is 1, the shape of α2F is that of the unimodal Einstein model and the Allen–Dynes Tc accurately predict the Eliashberg Tc regardless of the coupling strength. When the ratio \({\omega}_{{{\mathrm{log}}}} / {\bar{\omega}}_{2}\) decreases, i.e. the shape of α2F has more structure; whether the Allen–Dynes formula can then still reasonably predict the Eliashberg Tc depends on the electron–phonon coupling strength.

In this work, we train and test machine-learning models using the datasets listed in Table 1. Two sizes are reported for each non-Gaussian dataset, indicating the number of unique materials compared to the total number of datapoints. We sample μ* between [0.1, 0.16] which covers a wide range of possible μ* values5,27. The calculated, artificial Gaussian, and literature-derived α2F datasets are used for training all machine learning models. We left the hydride materials out of the training in order to validate the extrapolative capacity of each model.

Correction factors for T c from symbolic regression

As in our previous symbolic regression effort21, we use the SISSO framework to generate millions of candidate expressions by recursively combining the input variables with mathematical operators such as addition and exponentiation. We performed symbolic regression twice, sequentially, to obtain two dimensionless prefactors of the McMillan exponential, yielding a machine learned critical temperature

We name the two learned prefactors a posteriori based on their functional forms and the mechanisms by which they reduce the error in predicting Tc. The first factor

is obtained from the fit to the ratio \({T}_{{{\mathrm{c}}}}^{{{\mathrm{E}}}}/{T}_{{{\mathrm{c}}}}^{{{\mathrm{McMillan}}}}\) and eliminates the systematic underprediction of Tc at higher temperatures. Like the Allen–Dynes prefactor f2, fω includes the ratio \({\omega }_{{{\mathrm{log}}}}/{\bar{\omega }}_{2}\), modifying the prediction based on the shape of α2F(ω). Moreover, fω also scales with \(\sqrt{\lambda }\), like the Allen–Dynes prefactor f1. This is in agreement with the correct large-λ behavior of Eliashberg theory, unlike our earlier work21 and the modified Tc equation with linear correction proposed recently by Shipley et al. 28. The manifestation of both behaviors in fω gives credence to our symbolic regression approach because it incorporates the primary effects of the Allen–Dynes equation with fewer parameters. Applying the correction \({T}_{{{\mathrm{c}}}}={f}_{\omega }{T}_{{{\mathrm{c}}}}^{{{\mathrm{McMillan}}}\,}\) achieves a percent RMSE of 15.2% across the materials (non-Gaussian model) data, compared to 48.6% when using the Allen–Dynes equation.

The second correction factor

is obtained from the fit to the ratio \({T}_{\,{{\mathrm{c}}}}^{{{\mathrm{E}}}}/({f}_{\omega }{T}_{{{\mathrm{c}}}}^{{{\mathrm{McMillan}}}})\), effectively correcting the residual error from the fit of fω and thus cannot be used independently. Applying the correction \({T}_{{{\mathrm{c}}}}={f}_{\omega }{f}_{\mu }{T}_{{{\mathrm{c}}}}^{{{\mathrm{McMillan}}}}\) achieves a percent RMSE of 15.1% across the materials datasets, compared to 15.2% when using fω alone. The influence of fμ is more apparent when examining clusters of points corresponding to resampled μ* values for a single material, where the systematic error in \({T}_{{{\mathrm{c}}}}^{{{\mathrm{ML}}}}/{T}_{{{\mathrm{c}}}}^{{{\mathrm{E}}}}\) is reduced.

Note that fμ → 1 in both of the limits λ → 0 and λ → ∞, and in fact does not vary by more than ~10% from 1 over the data set.

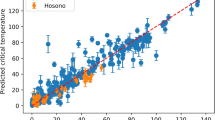

Figure 5 shows that, apart from the low-Tc non-hydride materials for which the difference is smaller than 0.1 K, the corrections fω and fμ dramatically improve predictions compared to using the Allen–Dynes equation. Since we excluded the hydrides from the training, these results successfully validate our data-driven symbolic regression approach by demonstrating the extrapolative capacity of the learned equations.

The model \({T}_{{{\mathrm{c}}}}^{{{\mathrm{Model}}}}\) is plotted against the Eliashberg \({T}_{{{\mathrm{c}}}}^{{{\mathrm{E}}}}\) such that accurate predictions lie on the gray 1:1 line. a, b Non-hydride and c, d artificial Gaussian panels depict the training error while e, f hydride35,36,37 panels show extrapolative capacity. The non-hydride and artificial Gaussian panels are colored by the log-density of points. We report the root-mean-square error (RMSE), mean-absolute error (MAE), maximum residual, and minimum residual values in Kelvin. The maximum residual corresponds to the largest overprediction while the minimum residual corresponds to the largest underprediction. The two multiplicative factors obtained from symbolic regression improve the prediction compared with the two multiplicative factors of the Allen–Dynes formula, particularly for the higher Tc systems.

To further quantify the similarity between the existing Allen–Dynes prefactors and the machine-learned prefactors, we employ two statistical measures, the Spearman and distance correlation. The Spearman correlation is a measure of monotonicity in the relationship between rankings of two variables. Like the Pearson correlation coefficient for linear correlation, the Spearman correlation varies between −1 and +1, where extrema imply high correlation and zero implies no correlation. Unlike the Pearson correlation, the Spearman correlation does not assume normally distributed datasets. By construction, all four prefactors tend to unity for many materials, resulting in asymmetric distributions that are unsuitable for analysis with parametric measures like the Pearson correlation.

In addition to the Spearman correlation, we compute the distance correlation, another nonparametric measure of the dependence between two variables. The distance correlation is defined as the ratio of the distance covariance and the product of the distance standard deviations, where distance covariance is the weighted Euclidean distance between the joint characteristic function of the two variables and the product of their marginal characteristic functions. Unlike the Pearson and Spearman correlation coefficients, the distance correlation varies between 0 and 1, where 0 indicates that the variables are independent, measuring both linear and nonlinear association.

Table 2 shows a strong relationship between f1, f2, and fω according to both Spearman and distance correlation metrics, with values close to one. This numerical analysis reinforces the conclusion that fω reproduces characteristics of both f1 and f2, as illustrated earlier in the comparison of functional forms. On the other hand, both Spearman correlation and distance correlation measures indicate slightly weaker relationships between fμ and the other three prefactors. The relative independence of fμ compared to fω, f1, and f2 stems from the sequential nature of the fitting process.

Comparing predictive models for T c

To compare existing equations for Tc with the corrections identified in this work, we benchmarked the RMSE across non-hydride materials, artificial Gaussians, and hydrides as tabulated in Table 3. Additionally, we compute the %RMSE by normalizing each RMSE by the mean value across the corresponding dataset. To assess the behavior of each model with increasing λ, we plot \({T}_{{{\mathrm{c}}}}/{\omega }_{{{\mathrm{log}}}\,}\) for each model in Fig. 6.

The McMillan and Xie at al. (2019) equations, which do not change with \({\omega }_{{{\mathrm{log}}}\,}/{\bar{\omega }}_{2}\), are depicted as dashed curves. The Allen–Dynes formula and the ANN, RF, and symbolic regression machine learning corrections from this work are plotted as shaded regions bound by \({\omega }_{{{\mathrm{log}}}\,}/{\bar{\omega }}_{2}=1.1\) and \({\omega }_{{{\mathrm{log}}}\,}/{\bar{\omega }}_{2}=1.6\) curves. All models behave similarly for low to moderate values of λ. For larger values of λ, the ANN, RF, and symbolic regression corrections deviate significantly from the Allen–Dynes equation as well as the previous symbolic regression equation. The RF regression exhibits discontinuities due to its piecewise-constant form.

As expected, the Allen–Dynes equation improves on the McMillan equation across all three groups. On the other hand, the equation identified by Xie et al. 21 in an earlier symbolic regression work performs slightly worse on the low-Tc non-hydride dataset but achieves lower RMSE across the artificial Gaussian and hydride materials despite being trained on a small set of 29 low-Tc materials.

Applying the new fω prefactor to the McMillan equation reduces %RMSE in non-hydride materials from 14.4% to 8.4%, in artificial Gaussian models from 45.1% to 9.2%, and in hydrides from 36.6% to 5.8%. Moreover, applying both fω and fμ results in a further, modest improvement to the RMSE. In Fig. 6, our machine-learned correction (blue) is nearly equal to the Allen–Dynes equation (gray) for values of λ up to 1 but rapidly increases at larger λ. Both bounds, for higher and lower values of \({\omega }_{{{\mathrm{log}}}\,}/{\bar{\omega }}_{2}\), exceed the bounded region of the Allen–Dynes equation, indicating that at least part of the new model’s success is due to an improvement in capturing the behavior of Tc with increasing λ.

We additionally fit a random forest (RF) model and an artificial neural network (ANN) model using the same training data to compare against our symbolic regression method. Hyperparameters for RF and ANN models were selected using 10-fold leave-cluster-out cross-validation and the same clusters identified for symbolic regression. On the other hand, the model error was estimated using nested cross-validation, where the inner loop was performed using a conventional 5-fold cross-validation scheme. Production models used in Fig. 6 were fit with the selected hyperparameters using the entire training set.

The RF is an ensemble model comprised of decision trees, each fit to random subsets of the data and queried to yield an independent prediction. Each decision tree uses a flow-chart-like series of decisions (branches) to yield predictions (leaves) and is optimized by varying decision thresholds. While individual decision trees are prone to overfitting, a RF produces robust predictions by averaging the predictions of its members. The optimized RF model, consisting of 100 decision trees with a maximum depth of eight splits per tree, achieved the lowest RMSE across all three models, with 4.7% RMSE in the testing set of hydride materials. This success may be attributed to both the flexibility of the method and the relative complexity compared to other methods. With up to 128 nodes per tree, the RF evaluates tens of thousands of binary decisions per prediction. On the other hand, as illustrated in Fig. 6, the resulting output (green) is discontinuous. Furthermore, the RF does not have the ability to extrapolate outside of regions of the input spaces included in the training data, resulting in constant-value outputs. This deficiency is evident in both upper- and lower-bound curves above λ = 3.8, where the RF correction results in a simple rescaling of the McMillan curve.

The ANN models in this work are feedforward neural networks, also known as multi-layer perceptrons, designed to learn highly non-linear function approximators to map multiple inputs to a target output. The feedforward architecture involves an input layer consisting of one neuron per input, one or more hidden layers, and an output layer consisting of one neuron per target. The value at each non-input neuron is a weighted, linear summation of the values in the preceding layer followed by a non-linear activation function. The optimized ANN includes three hidden layers with forty neurons each, totaling 3521 trainable parameters of multiplicative weights and additive biases. Despite the increased model complexity, the ANN performs similarly to the symbolic regression model, with slightly lower training RMSE and slightly higher testing RMSE. With increasing λ, the ANN model yields similar values of Tc as indicated by the overlap between the shaded regions of the symbolic regression model (blue) and the ANN (yellow).

For low to moderate values of λ, such as those originally studied by Allen and Dynes, all models behave similarly and the dimensionless corrections (f1, f2, fω, fμ, ANN, RF) are close to unity. However, as λ increases, the ANN, RF, and symbolic regression corrections deviate significantly from the Allen–Dynes equation as well as the previous symbolic regression equation21. The corrections introduced in this work successfully correct the systematic underprediction of Tc, with the symbolic regression solution offering simplicity and accuracy. Moreover, the monotonicity constraint in the symbolic regression search guarantees invertibility, allowing experimentalists to extract λ from measured Tc and the electron–phonon spectral function. This characteristic is not guaranteed for the RF and ANN models.

Summary

The present work demonstrates the application of symbolic regression to a curated dataset of α2F(ω) spectral functions, yielding an improved analytical correction to the McMillan equation for the critical temperature of a superconductor. We showed that the well-known Allen–Dynes equation, an early improvement based on fitting to a very limited set of spectral functions, exhibits systematic error when predicting the Eliashberg critical temperature of high-Tc hydrides, a flaw due to the original training set being based on low-Tc superconductors. The equation we obtain here by symbolic regression has the same form as the original Allen–Dynes equation, with exactly the same McMillan exponential factor, but has two prefactors that behave very differently than those employed by Allen–Dynes. They ensure that superconductors with spectral functions, α2F(ω), of unusual shapes, such that \({\bar{\omega }}_{2}/{\omega }_{{{\mathrm{log}}}\,}\) is significantly different from 1, are adequately described; this subset of conventional superconductors includes the new hydride high-pressure superconductors. In addition, the machine-learned equation can be simplified by dropping one of the prefactors with negligible loss of accuracy. Since the machine-learned expression of Eqs. (6)–(8) extends the accuracy of the Allen and Dynes expression to high-temperature superconductors while maintaining the utility and simplicity of the original formula, we suggest that this equation should replace the Allen–Dynes formula for predictions of critical temperatures and estimations of λ from experimental data, particularly for higher-temperature superconductors.

Using a dataset of ab initio calculations alongside artificially generated spectral functions, we mitigated the small-data problem associated with previous symbolic-regression efforts. The dimensionless correction factor identified by symbolic regression reproduces the expected physical behavior with increasing λ and achieves lower prediction errors than the Allen–Dynes corrections, despite having similar model complexity. Finally, we compared our equation to models generated with two other machine-learning techniques, which achieve modest improvements in error at the cost of far greater complexity and lack of invertibility. While the present work successfully learns the isotropic Eliashberg Tc, future extensions may incorporate additional data from fully-anisotropic Eliashberg calculations and experimental measurements. On the other hand, separate extensions may involve approximating α2F-related quantities from less-expensive calculation of density functional theory-based descriptors like the electronic density of states.

Methods

Calculating electron–phonon spectral functions with density functional theory

We calculate the electron–phonon spectral functions α2F(ω) for 13 compounds with density-functional theory using the QE code25,26 and the EPW12,24. We use the optimized norm-conserving pseudopotential29,30 and the PBE version of the generalized gradient exchange-correlation functional31. We sample the Brillouin zone for the electron orbitals using a 24 × 24 × 24k-point mesh and for the phonons using a 6 × 6 × 6 q-point mesh. To obtain the critical temperatures Tc of the compounds, we solve the isotropic Eliashberg equations with the EPW code.

Symbolic regression

We performed symbolic regression using the sure independence screening (SIS) and sparsifying operator (SISSO) framework32,33, generating millions of candidate expressions. Based on memory constraints, the subspace of expressions was limited to those generated within four iterations. This limitation precludes the appearance of expressions of the complexity of the Allen–Dynes equation, motivating our search for a dimensionless correction to the McMillan equation rather than directly learning models for Tc.

The initial quantities for generating expressions were the three dimensionless quantities λ, μ*, and the ratio \({\omega }_{{{\mathrm{log}}}\,}/{\bar{\omega }}_{2}\). Candidates were generated using the set of operators \(\{+,-,\times ,\exp ,{{\mathrm{log}}}\,,\sqrt{},\root 3 \of {}{,}^{-1}{,}^{2}{,}^{3}\}\). During the SIS step, these expressions were ranked based on their correlation to the ratio \({T}_{{{\mathrm{c}}}}^{{{\mathrm{E}}}}/{T}_{{{\mathrm{c}}}}^{{{\mathrm{McMillan}}}}\) rather than \({T}_{{{\mathrm{c}}}}^{{{\mathrm{E}}}}\) to identify dimensionless, multiplicative corrections to \({T}_{{{\mathrm{c}}}}^{{{\mathrm{McMillan}}}}\).

To facilitate generalizability, we employ leave-cluster-out cross-validation during the generation of expressions using k-means-clustering with k = 10 on the combined set of 179 non-hydride and 2819 artificial-Gaussian entries. For each round of cross-validation, we generate candidate equations using a different subset of nine clusters and used the remaining cluster to evaluate performance using the root-mean-square error metric. As such, each training sample was left out of training and used for testing during one round. The top 10,000 models, ranked by root-mean-square error (RMSE) across the training set, were returned from each round. Models that did not appear in all ten rounds, corresponding to those with poor performance in one or more clusters, were eliminated. Following the same principle, we ranked the remaining equations by the average RMSE across all ten rounds.

We note that the sparsifying operator (SO) step of the SISSO framework offers increased model complexity, as we explored in our previous work, but is limited in functional form to linear combinations of expressions generated from the preceding step. The linear combination of expressions from the initial subspace, by extension, also excludes equations as complex as the Allen–Dynes correction. Therefore, we did not consider linear combinations of expressions, meaning the SO simply selected the first-ranked expression from the SIS step in each run.

Data availability

The database of critical temperatures, descriptors derived from the computed spectral functions α2F(ω), and symbolic regression logs are freely available at https://MaterialsWeb.org and https://www.materialscloud.org34.

Code availability

The symbolic regression workflow software we developed is freely available on Github (https://github.com/henniggroup/).

References

Norman, M. R.Materials design for new superconductors. Rep. Prog. Phys. 79, 074502 (2016).

Boeri, L. et al. The 2021 Room-Temperature Superconductivity Roadmap. J. Condens. Matter Phys. (2021). https://doi.org/10.1088/1361-648X/ac2864.

Duan, D., Yu, H., Xie, H. & Cui, T. Ab Initio Approach and Its Impact on Superconductivity. J. Supercond. Nov. Mag. 32, 53 (2019).

McMillan, W. L. Transition temperature of strong-coupled superconductors. Phys. Rev. 167, 331–344 (1968).

Allen, P. B. & Dynes, R. C. Transition temperature of strong-coupled superconductors reanalyzed. Phys. Rev. B 12, 905–922 (1975).

Combescot, R. Critical temperature of superconductors: Exact solution from Eliashberg equations on the weak-coupling side. Phys. Rev. B 42, 7810–7824 (1990).

Marsiglio, F. Eliashberg theory in the weak-coupling limit. Phys. Rev. B 98, 024523 (2018).

Mirabi, S., Boyack, R. & Marsiglio, F. Eliashberg theory in the weak-coupling limit: Results on the real frequency axis. Phys. Rev. B 101, 064506 (2020).

Karakozov, A., Maksimov, E. & Mashkov, S. Effect of the frequency dependence of the electron-phonon interaction spectral function on the thermodynamic properties of superconductors. Zh. Eksp. Teor. Fiz. 68, 1937–1950 (1975).

Dolgov, O. V., Mazin, I. I., Golubov, A. A., Savrasov, S. Y. & Maksimov, E. G. Critical temperature and enhanced isotope effect in the presence of paramagnons in phonon-mediated superconductors. Phys. Rev. Lett. 95, 257003 (2005).

Wang, Y. & Chubukov, A. Quantum-critical pairing in electron-doped cuprates. Phys. Rev. B 88, 024516 (2013).

Giustino, F., Cohen, M. L. & Louie, S. G. Electron-phonon interaction using Wannier functions. Phys. Rev. B 76, 165108 (2007).

Margine, E. R. & Giustino, F. Anisotropic Migdal-Eliashberg theory using Wannier functions. Phys. Rev. B 87, 024505 (2013).

Capone, M. & Ciuchi, S. Polaron crossover and bipolaronic metal-insulator transition in the half-filled Holstein model. Phys. Rev. Lett. 91, 186405 (2003).

Hague, J. P. & d’Ambrumenil, N. Breakdown of Migdal-Eliashberg theory via catastrophic vertex divergence at low phonon frequency. J. Low. Temp. Phys. 151, 1149–1163 (2008).

Bauer, J., Han, J. E. & Gunnarsson, O. Quantitative reliability study of the Migdal-Eliashberg theory for strong electron-phonon coupling in superconductors. Phys. Rev. B 84, 184531 (2011).

Esterlis, I. et al. Breakdown of the Migdal-Eliashberg theory: A determinant quantum monte carlo study. Phys. Rev. B 97, 140501 (2018).

Chubukov, A. V., Abanov, A., Esterlis, I. & Kivelson, S. A. Eliashberg theory of phonon-mediated superconductivity – When it is valid and how it breaks down. Ann. Phys. 417, 168190 (2020).

Dee, P. M., Coulter, J., Kleiner, K. G. & Johnston, S. Relative importance of nonlinear electron-phonon coupling and vertex corrections in the Holstein model. Commun. Phys. 3, 145 (2020).

Flores-Livas, J. A. et al. A perspective on conventional high-temperature superconductors at high pressure: Methods and materials. Phys. Rep. 856, 1 – 78 (2020).

Xie, S. R., Stewart, G. R., Hamlin, J. J., Hirschfeld, P. J. & Hennig, R. G. Functional form of the superconducting critical temperature from machine learning. Phys. Rev. B 100, 174513 (2019).

Eliashberg, G. M. Interaction between electrons and lattice vibrations in a superconductor. Sov. Phys. JETP 11, 696 (1960).

McMillan, W. L. & Rowell, J. M. Lead phonon spectrum calculated from superconducting density of states. Phys. Rev. Lett. 14, 108–112 (1965).

Poncé, S., Margine, E., Verdi, C. & Giustino, F. Epw: Electron-phonon coupling, transport and superconducting properties using maximally localized Wannier functions. Comput. Phys. Commun. 209, 116–133 (2016).

Giannozzi, P. et al. Quantum Espresso toward the exascale. J. Chem. Phys. 152, 154105 (2020).

Giannozzi, P. et al. Quantum Espresso: A modular and open-source software project for quantum simulations of materials. J. Phys. Condens. Matter 21, 395502 (2009).

Allen, P. B. & Mitrović, B. Theory of superconducting Tc. Solid State Phys. 37, 1–92 (1983).

Shipley, A. M., Hutcheon, M. J., Needs, R. J. & Pickard, C. J. High-throughput discovery of high-temperature conventional superconductors. Physical Review B 104 (2021). https://doi.org/10.1103/physrevb.104.054501.

Hamann, D. R. Optimized norm-conserving Vanderbilt pseudopotentials. Phys. Rev. B 88, 085117 (2013).

Schlipf, M. & Gygi, F. Optimization algorithm for the generation of ONCV pseudopotentials. Comput. Phys. Commun. 196, 36–44 (2015).

Perdew, J. P., Burke, K. & Ernzerhof, M. Generalized gradient approximation made simple. Phys. Rev. Lett. 77, 3865–3868 (1996).

Ouyang, R., Curtarolo, S., Ahmetcik, E., Scheffler, M. & Ghiringhelli, L. M. Sisso: A compressed-sensing method for identifying the best low-dimensional descriptor in an immensity of offered candidates. Phys. Rev. Mater. 2, 083802 (2018).

Ouyang, R., Ahmetcik, E., Carbogno, C., Scheffler, M. & Ghiringhelli, L. M. Simultaneous learning of several materials properties from incomplete databases with multi-task SISSO. J. Phys. Mater. 2, 024002 (2019).

Xie, S. et al. Machine learning of superconducting critical temperature from Eliashberg theory. Materials Cloud Archive (2021). https://doi.org/10.24435/materialscloud:68-6p.

Kvashnin, A. G., Semenok, D. V., Kruglov, I. A., Wrona, I. A. & Oganov, A. R. High-temperature superconductivity in a Th-H system under pressure conditions. ACS Appl. Mater. Interfaces 10, 43809–43816 (2018).

Kruglov, I. A. et al. Uranium polyhydrides at moderate pressures: Prediction, synthesis, and expected superconductivity. Sci. Adv. 4, eaat9776 (2018).

Semenok, D. V., Kvashnin, A. G., Kruglov, I. A. & Oganov, A. R. Actinium hydrides AcH10, AcH12, and AcH16 as high-temperature conventional superconductors. J. Phys. Chem. Lett. 9, 1920–1926 (2018).

Uzunok, H. Y., Tütüncü, H. M., Srivastava, G. P. & Başoğlu, A. Theoretical investigation of superconductivity in the non-centrosymmetric SrPtGe3 and CaPtSi3 compounds. Philos. Mag. 99, 198–223 (2018).

Singh, S. & Kumar, R. Correction to: Superconducting properties of LaSn3 under positive hydrostatic pressure. J. Supercond. Nov. Magn. 32, 3437–3438 (2019).

Arslan, E., Karaca, E., Tütüncü, H., Başoglu, A. & Srivastava, G. Theoretical investigation of superconductivity in SrAuSi3 and SrAu2si2. J. Phys. Chem. Solids 95, 65–73 (2016).

Tütüncü, H. & Srivastava, G. Electron–phonon interaction and superconductivity in SnAs with the sodium chloride crystal structure. Solid State Commun. 221, 24–27 (2015).

Tütüncü, H., Karaca, E. & Srivastava, G. Ab initio investigation of superconductivity in orthorhombic MgPtSi. J. Alloy. Compd. 673, 302–308 (2016).

Uzunok, H., İpsara, E., Tütüncü, H., Srivastava, G. & Başoglu, A. The effect of spin orbit interaction for superconductivity in the noncentrosymmetric superconductor CaIrSi3. J. Alloy. Compd. 681, 205–211 (2016).

Uzunok, H., Tütüncü, H., Srivastava, G. & Başoğlu, A. The influence of spin orbit interaction on phonons and superconductivity in the noncentrosymmetric superconductors LaPt3Si and LaPtSi3. Intermetallics 86, 1–10 (2017).

Dilmi, S., Saib, S. & Bouarissa, N. Band structure, electron-phonon interaction and superconductivity of yttrium hypocarbide. Curr. Appl. Phys. 18, 1338–1344 (2018).

Sichkar, S. M. & Antonov, V. N. Electronic structure, phonon spectra and electron–phonon interaction in ScB2. Low. Temp. Phys. 39, 595–601 (2013).

Li, C. et al. Strong increase in superconducting Tc for Nb2InC under compressive strain. J. Appl. Phys. 117, 093908 (2015).

Tütüncü, H. M. & Srivastava, G. P. A comparative ab initio study of superconductivity in the body centered tetragonal YC2 and LaC2. J. Appl. Phys. 117, 153902 (2015).

Uzunok, H. Y., Tütüncü, H. M., Srivastava, G. P., İpsara, E. & Basoglu, A. The effect of spin orbit interaction on the physical properties of LaTSi3 (T = Ir, Pd, and Rh): First-principles calculations. J. Appl. Phys. 121, 193904 (2017).

Uzunok, H., Tütüncü, H., Karaca, E. & Srivastava, G. Theoretical investigation of antisymmetric spin-orbit coupling effect on the physical properties of noncentrosymmetric BaPtSb superconductor. Intermetallics 108, 109–116 (2019).

Karaca, E., Tütüncü, H. M., Uzunok, H. Y., Srivastava, G. P. & Ugur, Ş. Theoretical investigation of superconductivity in SrPd2Ge2, SrPd2As2, and CaPd2As2. Phys. Rev. B 93, 054506 (2016).

Uzunok, H. Y., Tütüncü, H. M., Karaca, E., Başoğlu, A. & Srivastava, G. P. Ab initio investigation of electron-phonon interaction in LaSn3 and CaSn3. Philos. Mag. Lett. 98, 375–391 (2018).

Tütüncü, H. M., Karaca, E. & Srivastava, G. P. Electron-phonon interaction and superconductivity in the borocarbide superconductor. Philos. Mag. 97, 2669–2688 (2017).

Tütüncü, H. M., Karaca, E. & Srivastava, G. P. Electron–phonon interaction and superconductivity in the La3Ni2B2N3. Philos. Mag. 97, 128–143 (2016).

Karaca, E., Karadağ, S., Tütüncü, H. M., Srivastava, G. P. & Uğur, Ş. First-principles investigation of superconductivity in the body-centred tetragonal LaRu2P2. Philos. Mag. 96, 2059–2073 (2016).

Wang, X., Chen, J. & Xie, D. Prospect of node-line semimetal Cu3PdN to be a topological superconductor. J. Supercond. Nov. Magn. 30, 2727–2734 (2017).

Shrivastava, D. & Sanyal, S. P. Electronic, phonon and superconducting properties of LaPtBi half-Heusler compound. Solid State Commun. 273, 1–4 (2018).

Saib, S., Karaca, E., Tütüncü, H. & Srivastava, G. Electron-phonon interaction and superconductivity in the multiband superconductor β-Bi2Pd. Intermetallics 84, 136–141 (2017).

Dabhi, S. D., Shrivastava, D., Jha, P. K. & Sanyal, S. P. On possibility of superconductivity in SnSb: A first principle study. Phys. C. 528, 56–59 (2016).

Shrivastava, D. & Sanyal, S. P. Electronic structure and superconducting behaviour of LuPtBi half-Heusler compound: A first principle study. Phys. C. 544, 22–26 (2018).

Wu, S., Yan, G. & Cheng, X. First-principles investigation of the superconducting properties of thallium sulfide. Phys. C. 562, 1–6 (2019).

Karaca, E. Theoretical investigation of the superconductivity mechanism of BaIr2As2. Phys. C. 563, 42–47 (2019).

Tütüncü, H. M. & Srivastava, G. P. Phonon anomalies and superconductivity in the Heusler compound YPd2Sn. J. Appl. Phys. 116, 013907 (2014).

Pawar, H., Aynyas, M., Shugani, M. & Sanyal, S. P. Electronic structure, lattice dynamics, and superconducting properties of mercury-alkaline earth metal compounds: A first-principles study. J. Supercond. Nov. Magn. 32, 3425–3430 (2019).

Bekaert, J. et al. Anisotropic type-I superconductivity and anomalous superfluid density in OsB2. Phys. Rev. B 94, 144506 (2016).

Acharya, N. & Sanyal, S. P. Structural phase transition, electronic and superconducting properties of ScBi and YBi. Solid State Commun. 266, 39–45 (2017).

Uzunok, H. Ab initio investigation of physical properties of LaT2B2C (T= Ir, Rh) compounds: A density functional theory approach. Phys. C. 568, 1353585 (2020).

Tütüncü, H. M. & Srivastava, G. P. Phonons and superconductivity in the cubic perovskite Cr3RhN. J. Appl. Phys. 112, 093914 (2012).

Yue, S.-Y., Cheng, L., Liao, B. & Hu, M. Electron–phonon interaction and superconductivity in the high-pressure cI16 phase of lithium from first principles. Phys. Chem. Chem. Phys. 20, 27125–27130 (2018).

Ortigoza, M. A. et al. Ab initio lattice dynamics and electron-phonon coupling of Bi(111). Phys. Rev. B 90, 195438 (2014).

Cuamba, A. S., Lu, H.-Y. & Ting, C. S. Electronic structure and phonon-mediated superconductivity in ScIrP compound: First-principles calculations. Phys. Rev. B 94, 094513 (2016).

Chen, J. Phonons in bulk and monolayer HfS2 and possibility of phonon-mediated superconductivity: A first-principles study. Solid State Commun. 237-238, 14–18 (2016).

Tütüncü, H. M., Karaca, E. & Srivastava, G. P. Electron-phonon superconductivity in the filled skutterudites LaRu4P12, LaRu4As12, and LaPt4Ge12. Phys. Rev. B 95, 214514 (2017).

Karaca, E., Tütüncü, H. M., Srivastava, G. P. & Uğur, S. Electron-phonon superconductivity in the ternary phosphides BaM2P2 (M = Ni, Rh, and Ir). Phys. Rev. B 94, 054507 (2016).

Tütüncü, H. M., Karaca, E., Uzunok, H. Y. & Srivastava, G. P. Physical properties of hexagonal BaPtAs with noncentrosymmetric SrPtSb-type and centrosymmetric YPtAs-type crystal structures: Effects of spin-orbit coupling. Phys. Rev. B 100, 174507 (2019).

Singh, S. & Kumar, R. Variation of superconducting transition temperature of YSn3 under negative pressure. J. Supercond. Nov. Magn. 32, 1157–1162 (2018).

Tütüncü, H. M., Karaca, E., Uzunok, H. Y. & Srivastava, G. P. Role of spin-orbit coupling in the physical properties of LaX3 (X = In, P, Bi) superconductors. Phys. Rev. B 97, 174512 (2018).

Ono, S. Low-temperature electron-phonon relaxation in Cu and Ag thin films. Phys. Rev. B 101, 201404 (2020).

Acknowledgements

We are grateful to L. Boeri, P. Allen, and W. Pickett for valuable discussions. We thank D. Semenok for providing data related to Actinium hydrides. The work presented here was performed under the auspice of Basic Energy Sciences, United States Department of Energy, contract number DE-SC0020385. Partial funding was also provided by the University of Florida Informatics Institute.

Author information

Authors and Affiliations

Contributions

All authors contributed extensively to the work presented in this paper. S.R.X., Y.Q., A.C.H., L.F., J.L., J.K., G.R.S., J.J.H., P.J.H., and R.G.H. conceived the overall methodology of data assembly, augmentation, Eliashberg calculations, and symbolic regression. Y.Q., B.D., J.M.D., I.S., and U.S.H. performed the literature search and collected the spectral function data for superconductors. S.R.X. and Y.Q. implemented the algorithm and performed the calculations and analysis. S.R.X., Y.Q., P.J.H., and R.G.H. contributed to the writing of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xie, S.R., Quan, Y., Hire, A.C. et al. Machine learning of superconducting critical temperature from Eliashberg theory. npj Comput Mater 8, 14 (2022). https://doi.org/10.1038/s41524-021-00666-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41524-021-00666-7

This article is cited by

-

Full-bandwidth anisotropic Migdal-Eliashberg theory and its application to superhydrides

Communications Physics (2024)

-

Electron–phonon physics from first principles using the EPW code

npj Computational Materials (2023)

-

Linear Jacobi-Legendre expansion of the charge density for machine learning-accelerated electronic structure calculations

npj Computational Materials (2023)

-

Artificial Intelligence in Physical Sciences: Symbolic Regression Trends and Perspectives

Archives of Computational Methods in Engineering (2023)

-

Machine Learning-Based Classification, Interpretation, and Prediction of High-Entropy-Alloy Intermetallic Phases

High Entropy Alloys & Materials (2023)