Abstract

One of the most exciting tools that have entered the material science toolbox in recent years is machine learning. This collection of statistical methods has already proved to be capable of considerably speeding up both fundamental and applied research. At present, we are witnessing an explosion of works that develop and apply machine learning to solid-state systems. We provide a comprehensive overview and analysis of the most recent research in this topic. As a starting point, we introduce machine learning principles, algorithms, descriptors, and databases in materials science. We continue with the description of different machine learning approaches for the discovery of stable materials and the prediction of their crystal structure. Then we discuss research in numerous quantitative structure–property relationships and various approaches for the replacement of first-principle methods by machine learning. We review how active learning and surrogate-based optimization can be applied to improve the rational design process and related examples of applications. Two major questions are always the interpretability of and the physical understanding gained from machine learning models. We consider therefore the different facets of interpretability and their importance in materials science. Finally, we propose solutions and future research paths for various challenges in computational materials science.

Similar content being viewed by others

Introduction

In recent years, the availability of large datasets combined with the improvement in algorithms and the exponential growth in computing power led to an unparalleled surge of interest in the topic of machine learning. Nowadays, machine learning algorithms are successfully employed for classification, regression, clustering, or dimensionality reduction tasks of large sets of especially high-dimensional input data.1 In fact, machine learning has proved to have superhuman abilities in numerous fields (such as playing go,2 self driving cars,3 image classification,4 etc). As a result, huge parts of our daily life, for example, image and speech recognition,5,6 web-searches,7 fraud detection,8 email/spam filtering,9 credit scores,10 and many more are powered by machine learning algorithms.

While data-driven research, and more specifically machine learning, have already a long history in biology11 or chemistry,12 they only rose to prominence recently in the field of solid-state materials science.

Traditionally, experiments used to play the key role in finding and characterizing new materials. Experimental research must be conducted over a long time period for an extremely limited number of materials, as it imposes high requirements in terms of resources and equipment. Owing to these limitations, important discoveries happened mostly through human intuition or even serendipity.13 A first computational revolution in materials science was fueled by the advent of computational methods,14 especially density functional theory (DFT),15,16 Monte Carlo simulations, and molecular dynamics, that allowed researchers to explore the phase and composition space far more efficiently. In fact, the combination of both experiments and computer simulations has allowed to cut substantially the time and cost of materials design.17,18,19,20 The constant increase in computing power and the development of more efficient codes also allowed for computational high-throughput studies21 of large material groups in order to screen for the ideal experimental candidates. These large-scale simulations and calculations together with experimental high-throughput studies22,23,24,25 are producing an enormous amount of data making possible the use of machine learning methods to materials science.

As these algorithms start to find their place, they are heralding a second computational revolution. Because the number of possible materials is estimated to be as high as a googol (10100),26 this revolution is doubtlessly required. This paradigm change is further promoted by projects like the materials genome initiative (Materials genome initiative) that aim to bridge the gap between experiment and theory and promote a more data-intensive and systematic research approach. A multitude of already successful machine learning applications in materials science can be found, e.g., the prediction of new stable materials,27,28,29,30,31,32,33,34,35 the calculation of numerous material properties,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51 and the speeding up of first-principle calculations.52

Machine learning algorithms have already revolutionized other fields, such as image recognition. However, the development from the first perceptron53,54 up to modern deep convolutional neural networks was a long and tortuous process. In order to produce significant results in materials science, one necessarily has not only to play to the strength of machine learning techniques but also apply the lessons already learned in other fields.

As the introduction of machine learning methods to materials science is still recent, a lot of published applications are quite basic in nature and complexity. Often they involve fitting models to extremely small training sets or even applying machine learning methods to composition spaces that could possibly be mapped out in hundreds of CPU hours. It is of course possible to use machine learning methods as a simple fitting procedure for small low-dimensional datasets. However, this does not play to their strength and will not allow us to replicate the success machine learning methods had in other fields.

Furthermore, and as always when entering a different field of science, nomenclature has to be applied correctly. One example is the expression “deep learning”, which is responsible for a majority of the recent success of machine learning methods (e.g., in image recognition and natural language processing55). It is of course tempting to describe one’s work as deep learning. However, denoting neural networks with one or two fully connected hidden layer as deep learning56 is confusing for researchers new to the topic, and it misrepresents the purpose of deep-learning algorithms. The success of deep learning is rooted in the ability of deep neural networks to learn descriptors of data with different levels of abstraction without human intervention.55,57 This is, of course, not the case in two-layer neural networks.

One of the major criticisms of machine learning algorithms in science is the lack of novel laws, understanding, and knowledge arising from their use. This comes from the fact that machine learning algorithms are often treated as black boxes, as machine-built models are too complex and alien for humans to understand. We will discuss the validity of the criticism and different approaches to this challenge.

Finally, there have already been a number of excellent reviews of materials informatics and machine learning in materials science in general,13,58,59,60,61,62 as well as some other covering specifically machine learning in the chemical sciences,63 in materials design of thermoelectrics and photovoltaics,64 in the development of lithium-ion batteries,65 and in atomistic simulations.66 However, owing to the explosion in the number of works using machine learning, an enormous amount of research has already been published since the past reviews and the research landscape has quickly transformed.

Here we concentrate on the various applications of machine learning in solid-state materials science (especially the most recent ones) and discuss and analyze them in detail. As a starting point, we provide an introduction to machine learning, and in particular to machine learning principles, algorithms, descriptors, and databases in materials science. We then review numerous applications of machine learning in solid-state materials science: the discovery of new stable materials and the prediction of their structure, the machine learning calculation of material properties, the development of machine learning force fields for simulations in material science, the construction of DFT functionals by machine learning methods, the optimization of the adaptive design process by active learning, and the interpretability of, and the physical understanding gained from, machine learning models. Finally, we discuss the challenges and limitations machine learning faces in materials science and suggest a few research strategies to overcome or circumvent them.

Basic principles of machine learning

Machine learning algorithms aim to optimize the performance of a certain task by using examples and/or past experience.67 Generally speaking, machine learning can be divided into three main categories, namely, supervised learning, unsupervised learning, and reinforcement learning.

Supervised machine learning is based on the same principles as a standard fitting procedure: it tries to find the unknown function that connects known inputs to unknown outputs. This desired result for unknown domains is estimated based on the extrapolation of patterns found in the labeled training data. Unsupervised learning is concerned with finding patterns in unlabeled data, as, e.g., in the clustering of samples. Finally, reinforcement learning treats the problem of finding optimal or sufficiently good actions for a situation in order to maximize a reward.68 In other words, it learns from interactions.

Finally, halfway between supervised and unsupervised learning lies semi-supervised learning. In this case, the algorithm is provided with both unlabeled as well as labeled data. Techniques of this category are particularly useful when available data are incomplete and to learn representations.69

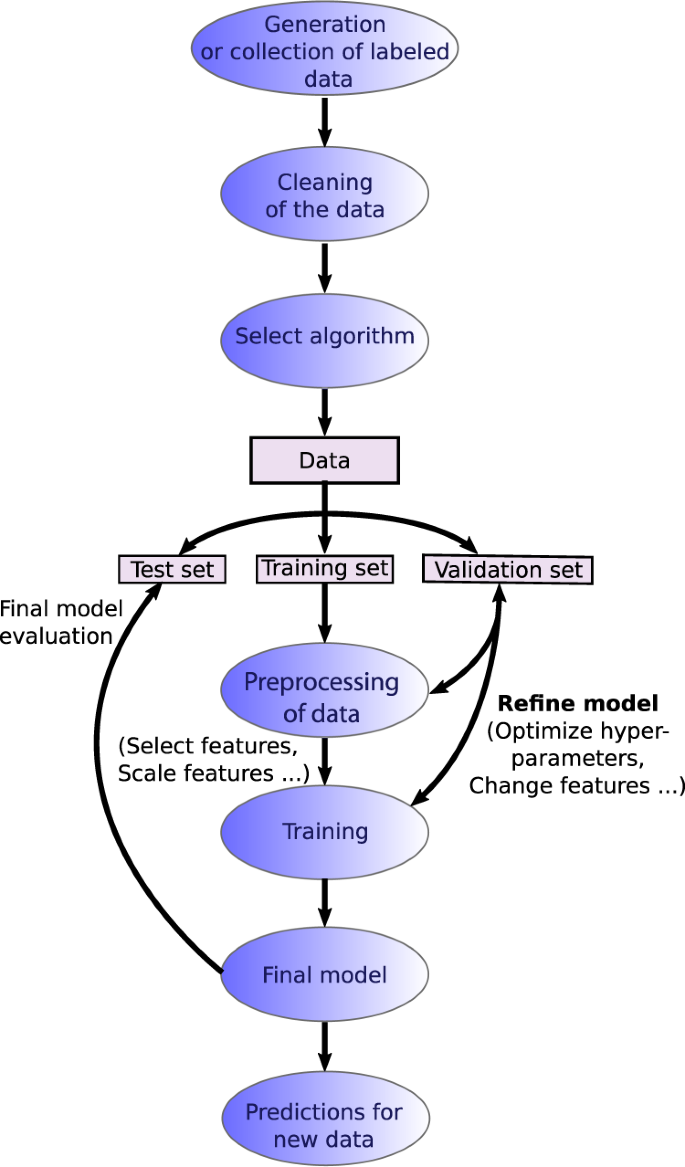

As supervised learning is by far the most widespread form of machine learning in materials science, we will concentrate on it in the following discussion. Figure 1 depicts the workflow applied in supervised learning. One generally chooses a subset of the relevant population for which values of the target property are known or creates the data if necessary. This process is accompanied by the selection of a machine learning algorithm that will be used to fit the desired target quantity. Most of the work consists in generating, finding, and cleaning the data to ensure that it is consistent, accurate, etc. Second, it is necessary to decide how to map the properties of the system, i.e., the input for the model, in a way that is suitable for the chosen algorithm. This implies to translate the raw information into certain features that will be used as inputs for the algorithm. Once this process is finished, the model is trained by optimizing its performance, usually measured through some kind of cost function. Usually this entails the adjustment of hyperparameters that control the training process, structure, and properties of the model. The data are split into various sets. Ideally, a validation dataset separate from the test and training sets is used for the optimization of the hyperparameters.

Every machine learning application has to consider the aspects of overfitting and underfitting. The reason for underfitting usually lies either in the model, which lacks the ability to express the complexity of the data, or in the features, which do not adequately describe the data. This inevitably leads to a high training error. On the other hand, an overfitted model interprets part of the noise in the training data as relevant information, therefore failing to reliably predict new data. Usually, an overfitted model contains more free parameters than the number required to capture the complexity of the training data. In order to avoid overfitting, it is essential to monitor during training not only the training error but also the error of the validation set. Once the validation error stops decreasing, a machine learning model can start to overfit. This problem is also discussed as the bias-variance trade off in machine learning.70,71 In this context, the bias is an error based on wrong assumptions in the trained model, while high variance is the error resulting from too much sensitivity to noise in the training data. As such, underfitted models possess high bias while overfitted models have high variance.

Before the model is ready for applications, it has to be evaluated on previously unseen data, denoted as test set, to estimate its generalization and extrapolation ability.

Different methods ranging from a simple holdout, over k-fold cross-validation, leave-one-out cross-validation, Monte Carlo cross-validation,72 up to leave-one-cluster-out cross-validation73 can be used for the evaluation. All these methods rely on keeping some data hidden from the model during the training process. For a simple holdout, this is just performed once, while for k-fold cross-validation the dataset is separated into k equally sized sets. The algorithm is trained with all but one of these k subsets, which is used for testing. Finally, the process is repeated for every subset. For leave-one-out cross-validation, each sample is left out of the training set once and the model is evaluated for that sample. It has to be noted that research in chemistry has shown that this form of cross-validation is insufficient to evaluate adequately the predictive performance of quantitative structure–property relationship and should therefore be avoided.74,75 Monte Carlo cross-validation is similar to k-fold cross-validation in the sense that the training and test set are randomly chosen. However, here the size of the training/test set is chosen independently from the number of folds. While this can be advantageous, it also means that a sample is not guaranteed to be in the test/training set. Leave-one-cluster-out cross-validation73 was specifically developed for materials science and estimates the ability of the machine learning model to extrapolate to novel groups of materials that were not present in the training data. Depending on the target quantity, this allows for a more realistic evaluation and a better understanding of the limitations of the machine learning model. Leave-one-cluster-out cross-validation removes a cluster of materials and then considers the error for predictions of the materials belonging to the removed cluster. This is, for example, consistent with the finding in ref. 76 that models trained on superconductors with a specific superconducting mechanism do not have any predictive ability for superconductors with other mechanisms.

Before discussing various applications of machine learning in materials science, we will give an overview of the different descriptors, algorithms, and databases used in materials informatics.

Databases

Machine learning in materials science is mostly concerned with supervised learning. The success of such methods depends mainly on the amount and quality of data that is available, and this turns out to be one of the major challenges in material informatics.77 This is especially problematic for target properties that can only be determined experimentally in a costly fashion (such as the critical temperature of superconductors—see section “Prediction of material properties—superconductivity”). For this reason, databases such as the materials project,78 the inorganic crystal structure database,79 and others (Materials genome initiative, The NOMAD archive, Supercon, National Institute of Materials Science 2011)80,81,82,83,84,85,86,87,88,89,90,91,92 that contain information on numerous properties of known materials are essential for the success of materials informatics.

In order for these databases and for materials informatics to thrive, a FAIR treatment of data93 is absolutely required. A FAIR treatment encompasses the four principles: findability, accessibility, interoperability, and repurposability.94 In other words, researchers from different disciplines should be able to find and access data, as well as the corresponding metadata, in a commonly accepted format. This allows the application of the data for new purposes.

Traditionally, negative results are often discarded and left unpublished. However, as negative data are often just as important for machine learning algorithms as positive results,28,95 a cultural adjustment toward the publication of unsuccessful research is necessary. In some disciplines with a longer tradition of data-based research (like chemistry), such databases already exist.95 In a similar vein, data that emerges as a side product but are not essential for a publication are often left unpublished. This eventually results in a waste of resources as other researchers are then required to repeat the work. In the end, every single discarded calculation will be sorely missed in future machine learning applications.

Features

A pivotal ingredient of a machine learning algorithm is the representation of the data in a suitable form. Features in material science have to be able to capture all the relevant information, necessary to distinguish between different atomic or crystal environments.96 The process itself, denoted as feature extraction or engineering, might be as simple as determining atomic numbers, might involve complex transformations such as an expansion of radial distribution functions (RDFs) in a certain basis, or might require aggregations based on statistics (e.g., average over features or the calculation of their maximum value). How much processing is required depends strongly on the algorithm. For some methods, such as deep learning, the feature extraction can be considered as part of the model.97 Naturally, the best choice for the representation depends on the target quantity and the variety of the space of occurrences. For completeness, we have to mention that the cost of feature extraction and of target quantity evaluation must never be comparable.

Ideally, descriptors should be uncorrelated, as an abundant number of correlated features can hinder the efficiency and accuracy of the model. When this happens, further feature selection is necessary to circumvent the curse of dimensionality,98 simplify models, and improve their interpretability as well as training efficiency. For example, several elemental properties such as the period and group in the periodic table, ionization potential, and covalent radius, can be used as features to model formation energies or distances to the convex hull of stability. However, it was shown that, to obtain acceptable accuracies, often only the period and the group are required.99

Having described the general properties of descriptors, we will proceed with a listing of the most used features in materials science. Without a doubt, the most studied type of features in this field are the ones related to the fitting of potential energy surfaces. In principle, the nuclear charges and the atomic positions are sufficient features, as the Hamiltonian of a system is usually fully determined by these quantities. In practice, however, while Cartesian coordinates might provide an unambiguous description of the atomic positions, they do not make a suitable descriptor, as the list of coordinates of a structure are ordered arbitrarily and the number of such coordinates varies with the number of atoms. The latter is a problem, as most machine learning models require a fixed number of features as an input. Therefore, to describe solids and large clusters, the number of interacting neighbors has to be allowed to vary without changing the dimensionality of the descriptor. In addition, a lot of applications require that the features are continuous and differentiable with respect to atomic positions.

A comprehensive study on features for atomic potential energy surfaces can be found in the review of Bartó et al.100. Important points mentioned in their work are: (i) the performance of the model and its ability to differentiate between different structures do not depend directly on the descriptors but on the similarity measurement between them; (ii) the quality of the descriptors is related to the differentiability with respect to the movement of the atoms, completeness of the representation, and invariance to the basis symmetries of physics (rotation, reflection, translation, and permutation of atoms of the same species). For clarification, a set of invariant descriptors qi, which uniquely determines an atomic environment up to symmetries, is defined as complete. An overcomplete set is then a set that includes more features than necessary.

Simple representations that show shortcomings as features are transformations of pairwise distances,101,102,103 Weyl matrices,104 and Z-matrices.105 Pairwise distances (and also reciprocal or exponential transformations of these) only work for a fixed number of atoms and are not unique under permutation of atoms. The constrain on the number of atoms is also present for polynomials of pairwise distances. Histograms of pairwise atomic distances are non-unique: if no information on the angles between the atoms is given, of if the ordering of the atoms is unknown, it might be possible to construct at least two different structures with the same features. Weyl matrices are defined by the inner product between neighboring atoms positions, forming an overcomplete set, while permutations of the atoms change the order of the rows and columns. Finally, Z-matrices or internal coordinate representations are not invariant under permutations of atoms.

In 2012, Rupp et al.106 introduced a representation for molecules based on the Coulomb repulsion between atoms I and J and a polynomial fit of atomic energies to the nuclear charge

The ordered eigenvalues (ε) of these “Coulomb matrices” are then used to measure the similarity between two molecules.

Here, if the number of atoms is not the same in both systems, ε is extended by zeros. In this representation, symmetrically equivalent atoms contribute equally to the feature function, the diagonalized matrices are invariant with respect to permutations and rotations, and the distance d is continuous under small variations of charge or interatomic distances. Unfortunately, this representation is not complete and does not uniquely describe every system. The incompleteness derives from the fact that not all degrees of freedom are taken into account when comparing two systems. The non-uniqueness can be demonstrated using as an example acetylene (C2H2).107 In brief, distortions of this molecule can lead to several geometries that are described by the same Coulomb matrix.

Faber et al.108 presented three distinct ways to extend the Coulomb matrix representation to periodic systems. The first of these features consists of a matrix where each element represents the full Coulomb interaction between two atoms and all their infinite repetitions in the lattice. For example:

where the sum over k (l) is taken over the atom i (j) in the unit cell and its N closest equivalent atoms. However, as this double sum has convergence issues, one has to resort to the Ewald trick: Xij is divided into a constant and two rapidly converging sums, one for the long-range interaction and another for the short-range interaction. Another extension by Faber et al. considers electrostatic interactions between the atoms in the unit cell and the atoms in the N closest unit cells. In addition, the long-range interaction is replaced by rapidly decaying interaction. In their final extension, the Coulomb interaction in the usual matrix is replaced by a potential that is symmetric with respect to the lattice vectors.

In the same line of work, Schütt et al.109 extended the Coulomb matrix representation by combining it with the Bravais matrix. Unfortunately, this representation is plagued by a degeneracy problem that comes from the arbitrary choice of the coordinate system in which the Bravais matrix is written. Another representation proposed by Schütt et al. is the so called partial radial distribution function, which considers the density of atoms β in a shell of width dr and radius r centered around atom α (see Fig. 2):

Two crystal structure representations. (Left) A unit cell with the Bravais vectors (blue) and base (pink) represented. (Right) Depiction of a shell of the discrete partial radial distribution function gαβ(r) with width dr. (Reprinted with permission from ref. 109. Copyright 2014 American Physical Society

Here Nα and Nβ are the number of atom of types α and β, Vr is the volume of the shell, and dαβ are the pairwise distances between two atom types.

Another form for representing the local structural environment was proposed by Behler and Parrinelo.110 Their descriptors111 involve an invariant set of atom-centered radial

and angular symmetry functions

where θijk is the angle between Rj − Ri and Rk − Ri. While the radial functions \(G_i^{\mathrm{r}}\) contain information on the interaction between pairs of atoms within a certain radius, the angular functions \(G_i^{\mathrm{a}}\) contains additional information on the distribution of the bond angles θijk. Examples for atom-centered symmetry functions are

and

Here fc is a cutoff function, leading to the neglect of interactions between atoms beyond a certain radius Rc. Furthermore, η controls the width of the Gaussians, Rs is just a parameter that shifts the Gaussians, λ determines the positions of the extrema of the cosine, and ζ controls the angular resolution. The sum over neighbors enforces the permutation invariance of these symmetry functions. Usually, 20–100 symmetry functions are used per atom, constructed by varying the parameters above. Beside atom centered, these functions can also be pair centered.112

A generalization of the atom-centered pairwise descriptor of Behler was proposed by Seko et al.113 It consists of simple basis functions constructed from the multinomial expansion of the product between a cutoff function (fc) and an analytical pairwise function (fn) (for example, Gaussian, cosine, Bessel, Neumann, polynomial, or Gaussian-type orbital functions)

where p is a positive integer, and \(R_{jk}^i\) indicates the distance between atoms j and k of structure i. The descriptor then uses the sum of these basis functions over all the atoms in the structure \(\left( {\mathop {\sum}\limits_j {b_{n,p}^{i,j}} } \right)\).

A similar type of descriptor is the angular Fourier series (AFS),100 which consists of a collection of orthogonal polynomials, like the Chebyshev polynomials Tl(cos θ) = cos (lθ), and radial functions

These radial functions are expansions of cubic or higher-order polynomials

where

A different approach for atomic environment features was proposed by Bartok et al.100,114 and leads to the power spectrum and the bispectrum. The approach starts with the generation of an atomic neighbor density function

which is projected onto the surface of a four-dimensional sphere with radius r0. As an example, Fig. 3 depicts the projection for 1 and 2 dimensions. Then the hyperspherical harmonic functions \(U_{m\prime m}^j\) can be used to represent any function ρ defined on the surface of a four-dimensional sphere115,116

Mapping of a flat space in one and two dimensions onto the surface of a sphere in one higher dimension. (Reprinted with permission from ref. 100. Copyright 2013 American Physical Society.)

Combining these with the rotation operator and the transformation of the expansion coefficients under rotation leads to the formula

for the SO(4) power spectrum. On the other hand, the bispectrum is given by

where \(C_{m\,m_1\,m_2}^{j\,j_1\,j_2}\) are the Clebsch–Gordon coefficients of SO(4). We note that the representations above are truncated, based on the band limit jmax in the expansion.

Finally, one of the most successful atomic environment features is the following similarity measurement

also known as the smooth overlap of atomic positions (SOAP) kernel.100 Here ζ is a positive integer that enhances the sensitivity of the kernel to changes on the atomic positions and ρ is the atomic neighbor density function, which is constructed from a sum of Gaussians, centered on each neighbor:

In practice, the function ρ is then expanded in terms of the spherical harmonics. In addition, k(ρ, ρ′) is a rotationally invariant kernel, defined as the overlap between an atomic environment and all rotated environments:

The normalization factor \(\sqrt {k(\rho ,\rho )k(\rho \prime ,\rho \prime )}\) ensures that the overlap of an environment with itself is one.

The SOAP kernel can be perceived as a three-dimensional generalization of the radial atom-centered symmetry functions and is capable of characterizing the entire atomic environment at once. It was shown to be equivalent to using the power or bispectrum descriptor with a dot-product covariance kernel and Gaussian neighbor densities.100

A problem with the above descriptors is that their number increases quadratically with the number of chemical species. Inspired by the Behler symmetry functions and the SOAP method, Artrith et al.117 devised a conceptually simple descriptor whose dimension is constant with respect to the number species. This is achieved by defining the descriptor as the union between two sets of invariant coordinates, one that maps the atomic positions (or structure) and another for the composition. Both of these mappings consist of the expansion coefficients of the RDFs

and angular distribution functions (ADF)

in a complete basis set ϕα (like the Chebyshev 94% average cross-validation error). The advantage of stability

and

Here fc is a cut-off function that limits the range of the interactions. The weights wtj and wtk take the value of one for the structure maps, while the weights for the compositional maps depend on the chemical species, according to the pseudo-spin convention of the Ising model. By limiting the descriptor to two and three body interactions, i.e., radial and angular contributions, this method maintains the simple analytic nature of the Behler–Parrinelo approach. Furthermore, it allows for an efficient implementation and differentiation, while systematic refinement is assured by the expansion in a complete basis set.

Sanville et al.118 used a set of vectors, each of which describes a five-atom chain found in the system. This information includes distances between the five atoms, angles, torsion angles, and functions of the bond screening factors.119

The simplex representation of a molecular structure of Kuz’min et al.120,121 consists in representing a molecule as a system of different simplex descriptors, i.e., a system of different tetratromic fragments. These descriptors become consecutively more detailed with the increase of the dimension of the molecule representation. The simplex descriptor at the one-dimensional (1D) level consists on the number of combinations of four atoms for a given composition. At the two-dimensional (2D) level, the topology is also taken into account, while at the 3D level, the descriptor is the number of simplexes of fixed composition, topology, chirality, and symmetry. The extension of this methodology to bulk materials was proposed by Isayev et al.122 and counts bounded and unbounded simplexes (see Fig. 4). While a bonded simplex characterizes only a single component of the mixture, unbounded simplexes can describe up to four components of the unit cell.

Depiction of the generation of the simplex representation of molecular structure descriptors for materials. (Reprinted with permission from ref. 122. Further permissions should be directed to the ACS.)

Isayef et al.41 also adapted property-labeled material fragments123 to solids. The structure of the material is encoded in a graph that defines the connectivity within the material based on its Voronoi tessellation124,125 (see Fig. 5). Only strong bonding interactions are considered. Two atoms are seen as connected only when they share a Voronoi face and the interatomic distance does not exceed the sum of the Cordero covalent bond lengths.126 In the graph, the nodes correspond to the chemical elements, which are identified through a plethora of elemental properties, like Mendeleev group, period, thermal conductivity, covalent radius, etc. The full graph is divided into subgraphs that correspond to the different fragments. In addition, information about the crystal structure (e.g., lattice constants) is added to the descriptor of the material, resulting in a feature vector of 2500 values in total. A characteristic of these graphs is their adjacency matrix, which consists of a square matrix of order n (number of atoms) filled with zeros except for the entries aij = 1 that occur when atom i and j are connected. Finally, for every property scheme q, the descriptors are calculated as

where the set of indices go over all pairs of atoms or over all pairs of bonded atoms, and Mij are the elements of the product between the adjacency matrix of the graph and the reciprocal square distance matrix.

Representation of the construction of property-labeled material fragments. The atomic neighbors of a crystal structure (a) are found via Voronoi tessellation (b). The full graph is constructed from the list of connections, labeled with a property (c) and decomposed into smaller subgraphs (d). (Reprinted with permission from ref. 41 licensed under the CC BY 4.0 [https://creativecommons.org/licenses/by/4.0/])

A different descriptor, named orbital-field matrix, was introduced by Pham et al.127 Orbital-field matrices consist in the weighted product between one-hot vectors \(\left( {o_i^p} \right)\), resembling those from the field of natural language processing. These vectors are filled with zeros with the exception of the elements that represent the electronic configuration of the valence of the atom. As an example, for the sodium atom with electronic configuration [Ne]3s1, the one-hot vector is filled with zeros except for the first element, which is 1. The elements of the matrices are calculated from:

where the weight \(w_k(\theta _k^p,r_{pk})\) represents the contribution of atom k to the coordination number of the center atom p and depends on the distance between the atoms and the solid angle \(\theta _k^p\) determined by the face of the Voronoi polyhedron between the atoms. To represent crystal structures, the orbital-field matrices are averaged over the number of atoms Np in the unit cell:

Another way to construct features based on graphs is the crystal graph convolutional neural network (CGCNN) framework, proposed by Xie et al.40 and shown schematically in Fig. 6. The atomic properties are represented by the nodes and encoded in the feature vectors vi. Instead of using continuous values, each continuous property is divided into ten categories resulting in one-hot features. This is obviously not necessary for the discrete properties, which can be encoded as standard one-hot vectors without further transformations. The edges represent the bonding interactions and are constructed analogously to the property-labeled material fragments descriptor. Unlike most graphs, these crystal graphs allow for several edges between two nodes, due to periodicity. Therefore, the edges are encoded as one-hot feature vectors \(u_{(i,j)_k}\), which translates into the kth bond between atom i and j. Crystal graphs do not form an optimal representation for predicting target properties by themselves; however, they can be improved by using convolution layers. After each convolution layer, the feature vectors gradually contain more information on the surrounding environment due to the concatenation between atom and bond feature vectors. The best convolution function of Xie et al. consisted of

where \({\boldsymbol{W}}_f^{(t)}\), \({\boldsymbol{W}}_s^{(t)}\), and \({\boldsymbol{b}}_i^{(t)}\) represent the convolution weight matrix, self-weight matrix, and the bias of the tth layer, respectively. In addition, e indicates element-wise multiplication, σ denotes the sigmoid function, and \(z_{(i,j)_k}^{(t)}\) is the concatenation of neighbor vectors:

Here ⊕ denotes concatenation of vectors.

Illustration of the crystal graph convolutional neural network. a Construction of the graph. b Structure of the convolutional neural network. (Reprinted with permission from ref. 40. Copyright 2018 American Physical Society.)

After R convolutions, a pooling layer reduces the spatial dimensions of the convolution neural network. Using skip layer connections,128 the pooling function operates not only on the last feature vector but also on all feature vectors (obtained after each convolution).

The idea of applying graph neural networks129,130,131 to describe crystal structures stems from graph-based models for molecules, such as those proposed in refs. 131,132,133,134,135,136,137,138,139,140. Moreover, all these models can be reorganized into a single common framework, known as message passing neural network141 (MPNNs). The latter can be defined as a model operating on undirected graphs G, with edge features xv and vertex features evw. In this context, the forward pass is divided into two phases: the message passing phase and the readout phase.

During the message passing phase, which lasts for T interaction steps, the hidden states hv at each node in the graph are updated based on the messages \(m_v^{t + 1}\):

where St(⋅) is the vertex update function. The messages are modified at each interaction by an update function Mt(⋅), which depends on all pairs of nodes (and their edges) in the neighborhood of v in the graph G:

where N(v) denotes the neighbors of v.

The readout phase occurs after T interaction steps. In this phase, a readout function R(⋅) computes a feature vector for the entire graph:

Jørgensen et al.142 extended MPNNs with an edge update network, which enforces the dependence of the information exchanged between atoms on the previous edge state and on the hidden states of the sending and receiving atom:

where Et(.) is the edge update function. One example of MPNNs are causal generative neural networks. The message corresponds to \(z_{(i,j)_k}^{(t)}\), defined in Eq. (27). Likewise, the hidden node update function corresponds to the convolution function of Eq. (27). In this case, we can clearly see that the hidden node update function depends on the message and on the hidden node state. The readout phase comes after R convolutions (or T iterations steps) and the readout function corresponds to the pooling layer function of the CGCNNs.

Up to now, we discussed very general features to describe both the crystal structure and chemical composition. However, should constrains be applied to the material space, the features necessary to study such systems can be vastly simplified. As mentioned above, elemental properties alone can be used as features, e.g., when a training set is restricted to only one kind of crystal structure and stoichiometry.33,35,56,99,143 Consequently, the target property only depends on the chemical elements present in the composition. Another example can be found in ref. 144, where a polymer is represented by the number of building blocks (e.g., number of C6H4, CH2, etc) or of pairs of blocks.

The crude estimations of properties can be an interesting supplement to standard features as discussed in ref. 77. As its name implies, crude estimations of properties consist of the calculation of a target property (for example, the experimental band gap) utilizing crude estimators [for example, the DFT band gap calculated with the Perdew–Burke–Ernzerhof (PBE) approximation145 to the exchange-correlation functional]. In principle, this approach can achieve successful results, as the machine learning algorithm no longer needs to predict a target property but rather an error or a difference between properties calculated with two well-defined methodologies.

Fischer et al.146 took another route and used as features a vector that completely denotes the possible ground states of an alloy:

where \(x_{c_i}\) denotes the all possible crystal structures present in the alloy at a given composition and \(x_{E_1}\) the elemental constituents of the system. In this way, the vector X = (fcc, fcc, Au, Ag) would represent the gold–silver system. Furthermore, the probability density p(X) denotes the probability that X is the set of ground states in a binary alloy. With these tools, one can find the most likely crystal structure for a given composition by sorting the probabilities and predict crystal structures by evaluating the conditional probability p(X|e), where e denotes unknown variables.

Having presented so many types of descriptors, the question that now remains concerns the selection of the best features for the problem at hand. In section “Basic principles of machine learning—Algorithms”, we discuss some automatic feature selection algorithms, e.g., least absolute shrinkage and selection operator (LASSO), sure independence screening and sparsifying operator (SISSO), principal component analysis (PCA), or even decision trees. Yet these methods mainly work for linear models, and selecting a feature for, e.g., a neural network force field from the various features we described is not possible with any of these methods. A possible solution to this problem is to perform through benchmarks. Unfortunately, while there are many studies presenting their own distinct way to build features and applying them to some problem in materials science, fewer studies96,100,147 actually present quantitative comparisons between descriptors. Moreover, some of the above features require a considerable amount of time and effort to be implemented efficiently and are not readily and easily available.

In view of the present situation, we believe that the materials science community would benefit greatly from a library containing efficient implementations of the above-mentioned descriptors and an assembly of benchmark datasets to compare the features in a standardized manner. Recent work by Himanen et al.148 addresses part of the first problem by providing efficient implementations of common features. The library is, however, lacking the implementation of the derivatives. SchNetPack by Schütt et al.149 also provides an environment for training deep neural network for energy surfaces and various material properties. Further useful tools and libraries can be found in refs. 150,151,152

Algorithms

In this section, we briefly introduce and discuss the most prevalent algorithms used in materials science. We start with linear- and kernel-based regression and classification methods. We then introduce variable selection and extraction algorithms that are also largely based on linear methods. Concerning completely non-linear models, we discuss decision tree-based methods like random forests (RFs) and extremely randomized trees and neural networks. We start with simple fully connected feed-forward networks and convolutional networks and continue with more complex applications in the form of variational autoencoders (VAEs) and generative adversarial networks (GANs).

In ridge regression, a multi-dimensional least-squares linear-fit problem, including a L2-regularization term, is solved:

The extra regularization term is included to favor specific solutions with smaller coefficients.

As complex regression problems can usually not be solved by a simple linear model, the so-called kernel trick is often applied to ridge regression.153 Instead of using the original descriptor x, the data are first transformed into a higher-dimensional feature space ϕ(x). In this space, the kernel k(x, y) is equal to the inner product 〈ϕ(x), ϕ(y)〉. In practice, only the kernel needs to be evaluated, avoiding an inefficient or even impossible explicit calculation of the features in the new space. Common kernels are, e.g.,154, the radial basis function kernel

or the polynomial kernel of degree d

Solving the minimization problem given by Eq. (34) in the new feature space results in a non-linear regression in the original feature space. This is usually referred to as kernel ridge regression (KRR). KRR is generally simple to use, as for a successful application of KRR only very few hyperparameters have to be adjusted. Consequently, KRR is often used in materials science.

Support vector machines155 (SVMs) search for the hyperplanes that divide a dataset into classes such that the margin around the hyperplane is maximized (see Fig. 7). The hyperplane is completely defined by the data points that lie the closest to the plane, i.e., the support vectors from which the algorithm derives its name.

Analogously to ridge regression, the kernel trick can be used to arrive at non-linear SVMs.153 SVM regressors also create a linear model (non-linear in the kernel case) but use the so-called ε-insensitive loss function:

where f(x) is the linear model and ε a hyperparameter. In this way, errors smaller than the threshold defined by ε are neglected.

When comparing SVMs and KRR, no big performance differences are to be expected. Usually SVMs arrive at a sparser representation, which can be of advantage; however, their performance relies on a good setting of the hyperparameters. In most cases, SVMs will provide faster predictions and consume less memory, while KRR will take less time to fit for medium datasets. Nevertheless, owing to the generally low computational cost of both algorithms, these differences are seldom important for relatively small datasets. Unfortunately, neither method is feasible for large datasets as the size of the kernel matrix scales quadratically with the number of data points.

Gaussian process regression (GPR) relies on the assumption that the training data were generated by a Gaussian process and therefore consists of samples from a multivariate Gaussian distribution. The only other assumption that enter the regression are the forms of the covariance function k(x, x′) and the mean (which is often assumed to be zero). Based on the covariance matrix, whose elements represent the covariance between two features, the mean and the variance for every possible feature value can be predicted. The ability to estimate the variance is the main advantage of GPR, as the uncertainty of the prediction can be an essential ingredient of a materials design process (see section “Adaptive design process and active learning”). GPR also uses a kernel to define the covariance function. In contrast to KRR or SVMs where the hyperparameters of the kernel have be optimized with an external validation set, the hyperparameters in GPR can be optimized with gradient descent if the calculation of the covariance matrix and its inverse are computationally feasible. Although modern and fast implementations of Gaussian processes in materials science exist (e.g., COMBO156), their inherent scaling is quite limiting with respect to the data size and the descriptor dimension as a naive training requires an inversion of the covariance matrix of order \({\cal{O}}(N^3)\) and even the prediction scales with \({\cal{O}}(N^2)\) with respect to the size of the dataset.157 Based on the principles of GPR, one can also produce a classifier. First, GPR is used to qualitatively evaluate the classification probability. Then a sigmoid function is applied to the latent function resulting in values in the interval [0, 1].

In the previous description of SVMs, KRR, and GPR, we assumed that a good feature choice is already known. However, as this choice can be quite challenging, methods for feature selection can be essential.

The LASSO158,159 attempts to improve regression performance through the creation of sparse models through variable selection. It is mostly used in combination with least-squares linear regression, in which case it results in the following minimization problem159:

where yi are the outcomes, xi the features, and β the coefficients of the linear model that have to be determined. In contrast to ridge regression, where the L2-norm of the regularization term is used, LASSO aims at translating most coefficients to zero. In order to actually find the model with the minimal number of non-zero components, one would have to use the so called L0-norm of the coefficient vector, instead of the L1-norm used in LASSO. (The L0-norm of a vector is equal to its number of non-zero elements). However, this problem is non-convex and NP-hard and therefore infeasible from a computational perspective. Furthermore, it is proven160 that the L1-norm is a good approximation in many cases. The ability of LASSO to produce very sparse solutions makes it attractive for cases where a simple, maybe even simulatable model (see section “Discussion and conclusions—Interpretability”), is needed. The minimization problem from Eq. (38), under the constraint of the L0-norm and the theory around it, is also known as compressed sensing.161

Ghiringhelli et al. described an extended methodology for feature selection in materials science based on LASSO and compressed sensing.162 Starting with a number of primary features, the number of descriptors is exponentially increased by applying various algebraic/functional operators (such as the absolute value of differences, exponentiation, etc.) and constructing different combinations of the primary features. Necessarily, physical notions like the units of the primary features constrain the number of combinations. LASSO is then used to reduce the number of features to a point where a brute force combination approach to find the lowest error is possible. This approach is chosen in order to circumvent the problems pure LASSO faces when treating strongly correlated variables and to allow for non-linear models.

As LASSO is unfortunately still computationally infeasible for very high-dimensional feature spaces (>109), Ouyang et al. developed the SISSO163 that combines sure independence screening,164 other sparsifying operators, and the feature space generation from ref. 162. Sure independence screening selects a subspace of features based on their correlation with the target variable and allows for extremely high-dimensional starting spaces. The selected subspace is than further reduced by applying the sparsifying operator (e.g., LASSO). Predicting the relative stability of octet binary materials as either rock-salt or zincblende was used as a benchmark. In this case, SISSO compared favorably with LASSO, orthogonal matching pursuit,165,166 genetic programming,167 and the previous algorithm from ref. 162 Bootstrapped-projected gradient descent168 is another variable selection method developed for materials science. The first step of bootstrapped-projected gradient descent consists in clustering the features in order to combat the problems other algorithms like LASSO face when encountering strongly correlated features. The features in every cluster are combined in a representative feature for every cluster. In the following, the sparse linear fit problem is approximated with projected gradient descent169 for different levels of sparsity. This process is also repeated for various bootstrap samples in order to further reduce the noise. Finally, the intersection of the selected feature sets across the bootstrap samples is chosen as the final solution.

PCA170,171 extracts the orthogonal directions with the greatest variance from a dataset, which can be used for feature selection and extraction. This is achieved by diagonalizing the covariance matrix. Sorting the eigenvectors by their eigenvalues (i.e., by their variance) results in the first principal component, second principal component, and so on. The broad idea behind this scheme is that, in contrast to the original features, the principal components will be uncorrelated. Furthermore, one expects that a small number of principal components will explain most of the variance and therefore provide an accurate representation of the dataset. Naturally, the direct application of PCA should be considered feature extraction, instead of feature selection, as new descriptors in the form of the principal components are constructed. On the other hand, feature selection based on PCA can follow various strategies. For example, one can select the variables with the highest projection coefficient from, respectively, the first n principal components when selecting n features. A more in-depth discussion of such strategies can be found in ref. 171.

The previous algorithms can be considered as linear models or linear models in a kernel space. An important family of non-linear machine learning algorithms is composed by decision trees. In general terms, decision trees are graphs in tree form,172 where each node represents a logic condition aiming at dividing the input data into classes (see Fig. 8) or at assigning a value in the case of regressors. The optimal splitting conditions are determined by some metric, e.g., by minimizing the entropy after the split or by maximizing an information gain.173

In order to avoid the tendency of simple decision trees to overfit, ensembles such as RFs174 or extremely randomized trees175 are used in practice. Instead of training a single decision tree, multiple decision trees with a slightly randomized training process are built independently from each other. This randomization can include, for example, using only a random subset of the whole training set to construct the tree, using a random subset of the features, or a random splitting point when considering an optimal split. The final regression or classification result is usually obtained as an average over the ensemble. In this way, additional noise is introduced into the fitting process and overfitting is avoided.

In general, decision tree ensemble methods are fast and simple to train as they are less reliant on good hyperparameter settings than most other methods. Furthermore, they are also feasible for large datasets. A further advantage is their ability to evaluate the relevance of features through a variable importance measure, allowing a selection of the most relevant features and some basic understanding of the model. Broadly speaking, these are based on the difference in performance of the decision tree ensemble by including and excluding the feature. This can be measured, e.g., through the impurity reduction of splits using the specific feature.176

Extremely randomized trees are usually superior to RFs in higher variance cases as the randomization decreases the variance of the total model175 and demonstrate at least equal performances in other cases. This proved true for several applications in materials science where both methods were compared.99,177,178 However, as RFs are more widely known, they are still prevalent in materials science.

Boosting methods179 generally combine a number of weak predictors to create a strong model. In contrast to, e.g., RFs where multiple strong learners are trained independently and combined through simple averaging to reduce the variance of the ensemble model, the weak learners in boosting are not trained independently and are combined to decrease the bias in comparison to a single weak learner. Commonly used methods, especially in combination with decision tree methods, are gradient boosting180,181 and adaptive boosting.182,183 In materials science, they were applied to the prediction of bulk moduli184,185 and the prediction of distances to the convex hull, respectively.99,186

Ranging from feed-forward neural networks over self-organizing maps187 up to Boltzmann machines188 and recurrent neural networks,189 there is a wide variety of neural network structures. However, until now only feed-forward networks have found applications in materials science (even if some Boltzmann machines are used in other areas of theoretical physics190). As such, in the following we will leave out “feed-forward” when referring to feed-forward neural networks. In brief, a neural network starts with an input layer, continues with a certain number of hidden layers, and ends with an output layer. The neurons of the nth layer, denoted as the vector xn, are connected to the previous layer through the activation function ϕ(x) and the weight matrix \(A_{ij}^{n - 1}\):

The weight matrices are the parameters that have to be fitted during the learning process. Usually, they are trained with gradient descent style methods with respect to some loss function (usually L2 loss with L1 regularization), through a method known as back-propagation.

Inspired by biological neurons, sigmoidal functions were classically used as activation functions. However, as the gradient of the weight-matrix elements is calculated with the chain rule, deeper neural networks with sigmoidal activation functions quickly lead to a vanishing gradient,191 hampering the training process. Modern activation functions such as rectified linear units192,193

or exponential linear units194

alleviate this problem and allow for the development of deeper neural networks.

The real success story of neural networks only started once convolutional neural networks were introduced to image recognition.55,195 Instead of solely relying on fully connected layers, two additional layer variants known as convolutional and pooling layers were introduced (see Fig. 9).

Convolutional layers consist of a set of trainable filters, which usually have a receptive field that considers a small segment of the total input. The filters are applied as discrete convolutions across the whole input, allowing the extraction of local features, where each filter will learn to activate when recognizing a different feature. Multiple filters in one layer add an additional dimension to the data. As the same weights/filters are used across the whole input data, the number of hidden neurons is drastically reduced in comparison to fully connected layers, thus allowing for far deeper networks. Pooling layers further reduce the dimensionality of the representation by combining subregions into a single output. Most common is the max pooling layer that selects the maximum from each region. Furthermore, pooling also allows the network to ignore small translations or distortions. The concept of convolutional networks can also be extended to graph representations in material science,139 in what can be considered MPNNs141. In general, neural networks with five or more layers are considered deep neural networks,55 although no precise definition of this term in relation to the network topology exists. The advantage of deep neural networks is not only their ability to learn representations with different abstraction levels but also to reuse them.97 Ideally, the invariance and differentiation ability of the representation should increase with increasing depth of the model.

Obviously, this saves resources that would otherwise be spent on feature engineering. However, some of these resources have now to be allocated to the development of the topology of the neural network. If we consider hard-coded layers (like pooling layers), one can once again understand them as feature extraction through human intervention. While some methods for the automatic development of neural network structures exist (e.g., the neuroevolution of augmenting topologies196), in practice the topologies of neural networks are still developed through trial and error. The extreme speedup in training time through graphics processing unit implementations and new methods that improve the training of deep neural networks, like dropout197 and batch normalization,198 also played a big role in the success story of neural networks. As these methods are included in open source libraries, like tensorflow199 or pytorch,200 they can easily be applied in materials science.

Neural networks can also be used in a purely generative manner, for example, in the form of autoencoders201,202 or GANs.203 Generative models learn to reproduce realistic samples from the distribution of data they are trained on. Naturally, one of the end goals of machine learning in materials science is the development of generative models, which can take into account material properties and therefore encompass most of the material design process.

Autoencoders are built with the purpose of learning a new efficient representation of the input data without supervision. Typically, the autoencoder is divided into two parts (see Fig. 10). The first half of the neural network is the encoder, which ends with a layer that is typically far smaller than the input layer in order to force the autoencoder to reduce the dimensionality of the data. The second half of the network, the decoder, attempts to regain the original input from the new encoded representation.

VAEs are based on a specific training algorithm, namely, stochastic gradient variational Bayes,204 that assumes that the VAE learns an approximation of the distribution of the input. Naturally, VAEs can also be used as generative models by generating data in the form of the output of the encoder and subsequently decoding it.

GANs consist of two competing neural networks that are trained together (see Fig. 11): a generative model that attempts to produce samples from a distribution and a discriminative model that predicts the probability that an input belongs to the original distribution or was produced by the generative model. GANs have found great success in image processing205,206 and have recently been introduced to other fields, such as astronomy,207 particle physics,208 genetics,209 and also very recently to materials science.29,210,211

More information about these algorithms can be found in the references provided or in refs. 1,212,213,214,215,216. We would like to note that the choice of the machine learning algorithm is completely problem dependent. It can be useful to establish a baseline for the quality of the model by using simple approaches (such as extremely randomized trees) before spending time optimizing hyperparameters of more complex models.

Material discovery

Nearly 30 years ago, the at the time editor of Nature, John Maddox wrote “One of the continuing scandals of physical science is that it remains in general impossible to predict the structure of even the simplest crystalline solids from a knowledge of their chemical composition.”217 While this is far from true nowadays, predicting the crystal structure based solely on the composition remains one of the most important (if not even the key) challenge in materials science, as any rational materials design has to be grounded in the knowledge of the crystal structure.

Unfortunately, the first-principle prediction of crystal or molecular structures is exceptionally difficult, because the combinatorial space is composed of all possible arrangements of the atoms in three-dimensional space and with an extremely complicated energy surface.18 In recent years, advanced structure selection and generation algorithms such as random sampling,218,219,220,221 simulated annealing,222,223,224 metadynamics,225 minima hopping,226 and evolutionary algorithms,19,227,228,229,230,231,232,233 as well as the progress in energy evaluation methods, expanded the scope of application of “classical” crystal structure prediction methods to a wider range of molecules and solid forms.234 Nevertheless, these methods are still highly computationally expensive, as they require a substantial amount of energy and force evaluations. However, the search for new or better high-performance materials is not possible without searching through an enormous composition and structure space. As there are tremendous amounts of data involved, machine learning algorithms are some of the most promising candidates to take on this challenge.

Machine learning methods can tackle this problem from different directions. A first approach is to speed up the energy evaluation by replacing a first-principle method with machine learning models that are orders of magnitude faster (see section “Machine learning force fields”). However, the most prominent approach in inorganic solid-state physics is the so-called component prediction.61 Instead of scanning the structure space for one composition, one chooses a prototype structure and scans the composition space for the stable materials. In this context, thermodynamic stability is the essential concept. By this we mean compounds that do not decompose (even in infinite time) into different phases or compounds. Clearly, metastable compounds like diamond are also synthesizable and advances in chemistry have made them more accessible.235,236 Nevertheless, thermodynamically stable compounds are in general easier to produce and work with. The usual criterion for thermodynamic stability is based on the energetic distance to the convex hull, but in some cases the machine learning model will directly calculate the probability of a compound existing in a specific phase.

Component prediction

Clearly the formation energy of a new compound is not sufficient to predict its stability. Ideally, one would always want to use the distance to the convex hull of thermodynamic stability. In contrast to the formation energy, the distance to the convex hull considers the difference in free energy of all possible decomposition channels. De facto, this is not the case because our knowledge of the convex hull is of course incomplete. Fortunately, as our knowledge of the convex hull continuously improves with the discovery of new stable materials, this problem becomes less important over time. Lastly, most first-principle energy calculations are done at zero temperature and zero pressure, neglecting kinetic effects on the stability.

Faber et al.35 applied KRR to calculate formation energies of two million elpasolites (with stoichiometry ABC2D6) crystals consisting of main group elements up to bismuth. Errors of around 0.1 eV/atom were reported for a training set of 104 compositions. Using energies and data from the materials project,78 phase diagrams were constructed and 90 new stoichiometries were predicted to lie on the convex hull.

Schmidt et al.99 first constructed a dataset of DFT calculations for approximately 250,000 cubic perovskites (with stoichiometry ABC3) using all elements up to bismuth and neglecting rare gases and lanthanides. After testing different machine learning methods, extremely randomized trees175 in combination with adaptive boosting183 proved the most successful with an mean average error of 0.12 eV/atom. Curiously, the error in the prediction depends strongly on the chemical composition (see Fig. 12). Furthermore, an active learning approach based on pure exploitation was suggested (see section “Adaptive design process and active learning”).

Mean average error (in meV/atom) for adaptive boosting used with extremely random trees averaged over all perovskites containing the element. The numbers in parentheses are the actual averaged error for each element. (Reprinted with permission from ref. 99. Copyright 2017 American Chemical Society.)

In ref. 186, the composition space for two ternary prototypes with stoichiometry AB2C2 (tI10-CeAl2Ga2 and the tP10-FeMo2B2 prototype structures) were explored for stable compounds using the approach developed in ref. 99. In total, 1893 new compounds were found on the convex hull while saving around 75% of computation time and reporting false negative rates of only 0% for the tP10 and 9% for the tI10 compound.

Ward et al.34 used standard RFs to predict formation energies based on features derived from Voronoi tessellations and atomic properties. Starting with a training set of around 30,000 materials, the descriptors showed better performance than Coulomb matrices108 and partial RDFs109 (see section “Basic principles of machine learning—Features” for the different descriptors). Surprisingly, the structural information from the Voronoi tessellation did not improve the results for the training set of 30,000 materials. This is based on the fact that very few materials with the same composition, but different structure, are present in the dataset. Changing the training set to an impressive 400,000 materials from the open quantum materials database80 proved this point, as the error for the composition-only model was then 37% higher than for the model including the structural information.

A recent study by Kim et al.237 used the same method for the discovery of quaternary Heusler compounds and identified 53 new stable structures. The model was trained for different datasets (complete open quantum materials database,80 only the quaternary Heusler compounds, etc.). For the prediction of Heusler compounds, it was found that the accuracy of the model also benefited from the inclusion of other prototypes in the training set. It has to be noted that studies with such large datasets are not feasible with kernel-based methods (e.g. KRR, SVMs) due to their unfavorable computational scaling.

Li et al.33 applied different regression and classification methods to a dataset of approximately 2150 A1−xA′xB1−yB′yO3 perovskites, materials that can be used as cathodes in high-temperature solid oxide fuel cell.238 Elemental properties were used as features for all methods. Extremely randomized trees proved to be the best classifiers (accuracy 0.93, F1-score 0.88) while KRR and extremely randomized trees had the best performance for regression, with mean average errors of <17 meV/atom. The errors in this work are difficult to compare to others as the elemental composition space was very limited.

Another work treating the problem of oxide–perovskite stability is ref. 56. Using neural networks based only on the elemental electronegativity and ionic radii, Ye et al. achieved a mean average error of 30 meV/atom for the prediction of the formation energy of unmixed perovskites. Unfortunately, their dataset contained only 240 compounds for training, cross-validation, and testing. Ye et al.56 also achieved comparable errors for mixed perovskites, i.e. perovskites with two different elements on either the A- or B-site. Mean average errors of 9 and 26 meV/atom were then obtained, respectively, for unmixed and mixed garnets with the composition C3A2D3O12. By reducing the mixing to the C-site and including additional structural descriptors, Ye et al. were able to once again decrease the latter error to merely 12 meV/atom. If one compares this study to, e.g., refs. 1,35, the errors seem extremely small. This is easily explained once we notice that ref. 56 only considers a total compound space of around 600 compounds in comparison to around 250,000 compounds in ref. 1. In other words, the complexity of the problem differs by more than two orders of magnitude.

The CGCNNs (see section “Basic principles of machine learning—Features”) developed by Xie et al.,40 the MatErials Graph Networks132 by Chen et al., and the MPNNs by Jørgensen et al.142 also allow for the prediction of formation energies and therefore can be used to speed up component prediction.

Up to this point, all component prediction methods presented here relied on first-principle calculations for training data. Owing to the prohibitive computational cost of finite temperature calculations, nearly all of this data correspond to zero temperature and pressure and therefore neglects kinetic effects on the stability. Furthermore, metastable compounds, such as diamond, which are stable for all practical purposes and essential for applications, risk to be overlooked. The following methods bypass this problem through the use of experimental training data.

The first structure prediction model that relies on experimental information can be traced back to the 1920s. One example that was still relevant until the past decade is the tolerance factor of Goldschmidt,239 a criterion for the stability of perovskites. Only recently, modern methods like SISSO,163 gradient tree boosting,180 and RFs174 improved upon these old models and allowed a rise in precision from 74% to >90%143,240,241 for the stability prediction of perovskites. Balachandran et al.241 also predicted whether the material would exist as a cubic or non-cubic perovskite, reaching a 94% average cross-validation error. The advantage of stability prediction based on experimental data is a higher precision and reliability, as the theoretical distance to the convex hull is a good but far from perfect indicator for stability. Taking the example of perovskites, one has to increase the distance to the convex hull up to 150 meV/atom just to find even 95% of the perovskites present in the inorganic crystal structure database79 (see Fig. 13).

Histogram of the distance to the convex hull for perovskites included in the inorganic crystal structure database.79 Calculations were performed within density functional theory with the Perdew–Burke–Ernzerhof approximation. The bin size is 25 meV/atom. (Reprinted with permission from ref. 99. Copyright 2017 American Chemical Society.)

Another system with a relatively high number of experimentally known structures are the AB2C Heusler compounds. Oliynyk et al.242 used RFs and experimental data for all compounds with AB2C stoichiometry from Pearson’s crystal data243 and the alloy phase diagram database88 to build a model to predict the probability to form a full-Heusler compound with a certain composition. Using basic elemental properties as features, Olynyk et al. were able to successfully predict and experimentally confirm the stability of several novel full-Heusler phases.

Legrain et al. extended the principle of this work to half-Heusler ABC compounds. While comparing the results of three ab initio high-throughput studies37,244,245 to the machine learning model, they found that the predictions of the high-throughput studies were neither consistent with each other nor with the machine learning model. The inconsistency between the first-principle studies is due to different publication dates that led to different knowledge about the convex hulls and to slightly differing methodologies. The machine learning model performs well with 9% false negatives and 1% false positives (in this case, positive means stable as half-Heusler structure). In addition, the machine learning model was able to correctly label several structures for which the ab initio prediction failed. This demonstrates the possible advantages of experimental training data, when it is available.

Zheng et al.36 applied convolutional neural networks and transfer learning246 to the prediction of stable full-Heusler compounds AB2C. Transfer learning considers training a model for one problem and then using parts of the model, or the knowledge gained during the first training process, for a second training thereby reducing the data required. An image of the periodic table representation was used in order to take advantage of the great success of convolutional neural networks for image recognition. The network was first trained to predict the formation energy of around 65,000 full-Heusler compounds from the open quantum materials database,80 resulting in a mean absolute error of 7 meV/atom (for a training set of 60,000 data points) and 14 meV/atom (for a training set of 5000 compositions). The weights of the neural network were then used as starting point for the training of a second convolutional neural network that classified the compositions as stable or unstable according to training data from the inorganic crystal structure database.79 Unfortunately, no data concerning the accuracy of the second network was published.

Hautier et al.247 combined the use of experimental and theoretical data by building a probabilistic model for the prediction of novel compositions and their most likely crystal structures. These predictions were then validated with ab initio computations. The machine learning part had the task to provide the probability density of different structures coexisting in a system based on the theory developed in ref. 146. Using this approach, Hautier et al. searched through 2211 ABO compositions where no ternary oxide existed in the inorganic crystal structure database79 and where the probability of forming a compound was larger than a threshold. This resulted in 1261 compositions and 5546 crystal structures, whose energy was calculated using DFT. To assess the stability, the energies of all decomposition channels that existed in the database were also calculated, resulting in 355 new compounds on the convex hull.

It is clear that component prediction via machine learning can greatly reduce the cost of high-throughput studies through a preselection of materials by at least a factor of ten.1 Naturally, the limitations of stability prediction according to the distance to the convex hull have to be taken into consideration when working on the basis of DFT data. While studies based on experimental data can have some advantage in accuracy, this advantage is limited to crystal structures that are already thoroughly studied, e.g., perovskites, and consequently a high number of experimentally stable structures is already known. For a majority of crystal structures, the number of known experimentally stable systems is extremely small and consequently ab initio data-based studies will definitely prevail over experimental data-based studies. Once again, a major problem is the lack of any benchmark datasets, preventing a quantitative comparison between most approaches. This is even true for work on the same structural prototype. Considering, for example, perovskites, we notice that three groups predicted distances to the convex hull.33,56,99 However, as the underlying composition spaces and datasets are completely different it is hardly possible to compare them.

Structure prediction

In contrast to the previous section, where the desired output of the models was a value quantifying the probability of compositions to condense in one specific structure, models in this chapter are concerned with differentiating multiple crystal structures. Usually this is a far more complex problem, as the theoretical complexity of the structural space dwarfs the complexity of the composition space. Nevertheless, it is possible to tackle this problem with machine learning methods.