Abstract

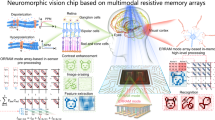

Conventional imaging and recognition systems require extensive data storage, pre-processing, and chip-to-chip communication, as well as aberration-proof light focusing with multiple lenses, to recognize an object from massive optical inputs. This is because separate chips (i.e., a flat image sensor array, a memory device, and a CPU), in conjunction with complicated optics, must capture, store, and process massive image information independently. In contrast, human vision employs a highly efficient imaging and recognition process. Here, inspired by the human visual recognition system, we present a novel imaging device for efficient image acquisition and data pre-processing that confers neuromorphic data processing functions on a curved image sensor array. The curved neuromorphic image sensor array is based on a heterostructure of MoS2 and poly(1,3,5-trimethyl-1,3,5-trivinyl cyclotrisiloxane). It features photon-triggered synaptic plasticity owing to its quasi-linear time-dependent photocurrent generation and prolonged photocurrent decay, which originate from charge trapping in the MoS2-organic vertical stack. Integrated with a plano-convex lens, the curved neuromorphic image sensor array derives a pre-processed image from a set of noisy optical inputs without redundant data storage, processing, and communication, and without complex optics. The proposed imaging device can substantially improve the efficiency of image acquisition and recognition, a step toward next-generation machine vision.


Introduction

Advances in imaging, data storage, and processing technologies have enabled diverse image-data-based processing tasks1. The efficient acquisition and recognition of a target image is a key procedure in machine vision applications1,2, such as facial recognition3 and object detection2. Image recognition based on conventional image sensors and data processing devices, however, is not ideal in terms of efficiency and power consumption4,5, which are particularly important for advanced mobile systems6. This is because conventional systems recognize objects through a series of iterative computing steps that require massive data storage, processing, and chip-to-chip communication7,8, in conjunction with aberration-proof imaging with complicated optical components9 (Supplementary Fig. 1a).

An example of the image recognition process based on a conventional system is shown in Supplementary Fig. 2. Raw image data over the entire time domain are obtained by a flat image sensor array with multi-lens optics9,10 and stored in a memory device for frame-based image acquisition1 (Supplementary Fig. 2a and b). The massive raw data are iteratively processed and stored by a central processing unit (CPU) and a memory device for event detection and data pre-processing3 (Supplementary Fig. 2c and d). The pre-processed data are then transferred to and processed by a graphics processing unit (GPU) running a neural network algorithm7 (e.g., vector-matrix multiplication11) for feature extraction and classification12 (Supplementary Fig. 2e). Such iterative computing steps1, together with the multi-lens optics13 of conventional imaging devices, increase system-level complexity.
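To make the storage cost of frame-based acquisition concrete, the following Python sketch buffers every frame before a separate pre-processing step, as a sensor-memory-CPU chain must. The frame size, the binary frames, and the per-pixel mean-and-threshold pre-processing are illustrative assumptions, not the pipeline of Supplementary Fig. 2.

```python
import numpy as np

def frame_based_preprocess(frames, threshold=0.5):
    """Conventional pre-processing sketch: store all frames, then reduce them.

    The stacked array plays the role of the memory device; the reduction
    (per-pixel temporal mean followed by a threshold) is an assumption.
    """
    buffer = np.stack(frames)             # shape (N, H, W): every frame is kept
    mean_map = buffer.mean(axis=0)        # fraction of frames in which each pixel was lit
    preprocessed = mean_map >= threshold  # binary pre-processed image
    return preprocessed, buffer.size      # image and number of stored values

# Usage: 20 noisy binary frames from a hypothetical 64 x 64 sensor.
rng = np.random.default_rng(0)
frames = [rng.random((64, 64)) < 0.1 for _ in range(20)]
image, stored_values = frame_based_preprocess(frames)
print(stored_values)
```

The buffer grows linearly with the number of frames (here 20 × 64 × 64 values for one output image), which is the storage and transfer overhead at issue.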

In contrast, human vision outperforms conventional imaging and recognition systems, particularly in unstructured image classification and recognition14. In the human visual recognition system, visual scenes are focused by a single lens, detected by the hemispherical retina, transmitted to the brain through the optic nerves, and recognized by a neural network in the visual cortex1 (Supplementary Fig. 1b). The optics of the human eye are much simpler than the multi-lens optics of conventional cameras9,13. Distinctive features are extracted by the neural network from the visual information acquired by the eye15,16 and are used for image identification based on memories17. Therefore, the human visual recognition system can achieve higher efficiency than conventional image sensors and data processing devices1.

Inspired by the neural network of the human brain, memristor crossbar arrays have been proposed for neuromorphic data processing3,18 and could potentially replace the GPU7,12. Memristor arrays have demonstrated efficient vector-matrix multiplication by physically implementing the neural network in hardware5,11. However, these electrical neuromorphic devices cannot respond directly to optical stimuli and thus still require separate image sensors, memory, and processors to capture, store, and pre-process the massive visual information, respectively1 (Supplementary Fig. 1c). Meanwhile, curved image sensor arrays inspired by the human eye have been proposed19,20. Although they can simplify the structure of the imaging module10, they have no data processing capability, so additional processing units and memory modules are still needed.

Ideally, an imaging device inspired by the human visual recognition system would enable both neuromorphic image data pre-processing and simplified, aberration-free image acquisition, dramatically improving the efficiency of the imaging and recognition process for machine vision applications1,21. We herein present a curved neuromorphic image sensor array (cNISA) using a heterostructure of MoS2 and poly(1,3,5-trimethyl-1,3,5-trivinyl cyclotrisiloxane) (pV3D3), aiming at aberration-free image acquisition and efficient data pre-processing with a single integrated neuromorphic imaging device (Supplementary Fig. 1d). Integrated with a single plano-convex lens, cNISA realizes unique features of the human visual recognition system, such as imaging with simple optics and data processing with photon-triggered synaptic plasticity. The cNISA derives a pre-processed image from a set of noisy optical inputs without the repetitive data storage, processing, and communication, or the complicated optics, required in conventional imaging and recognition systems.

Results

Curved neuromorphic imaging device inspired by human vision

Figure 1a, b shows schematic illustrations of the human visual recognition system and the curved neuromorphic imaging device, respectively. Despite its simple optics, the human eye enables high-quality imaging without optical aberrations22 because its hemispherical retina matches the hemispherical focal plane of the single human-eye lens9 (Fig. 1a). In addition, the neural network classifies unstructured data efficiently by deriving distinctive features of the input data based on synaptic plasticity14 (i.e., short-term plasticity (STP) and long-term potentiation (LTP); Fig. 1a inset): the intensity of the post-synaptic output signal is weighted by the frequency of pre-synaptic inputs15.

a Schematic illustration of the human visual recognition system composed of a single human-eye lens, a hemispherical retina, optic nerves, and a neural network in the visual cortex. The inset schematic shows the synaptic plasticity (i.e., STP and LTP) of the neural network. b Schematic illustration of the curved neuromorphic imaging device. The inset in the dashed box shows the concept of photon-triggered synaptic plasticity that derives a weighted electrical output from massive optical inputs. c Block diagram showing the sequence of image recognition using the conventional imaging and data processing system (e.g., a conventional imaging system with a conventional processor (top) or with a neuromorphic chip (bottom)). d Block diagram showing the sequence of image recognition using cNISA and a post-processor (e.g., GPU or neuromorphic chip).

All these efficient features of the human visual recognition system are incorporated into the curved neuromorphic imaging device. A single plano-convex lens focuses the incident light (e.g., massive noisy images) onto cNISA, which detects the massive optical inputs and derives a pre-processed image through neuromorphic data processing (Fig. 1b). The concave curvature of cNISA matches the Petzval surface of the lens, minimizing optical aberrations without the need for complicated multi-lens optics13 (Supplementary Fig. 3). The photon-triggered electrical responses, which are similar to synaptic signals in the neural network, are enabled by the MoS2-pV3D3 heterostructure and produce a weighted electrical output from the optical inputs (Fig. 1b inset). Such an integrated imaging device performs image acquisition and data pre-processing through a single readout operation.
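For reference, the textbook third-order relation behind matching a curved sensor to a lens is given below for thin lenses in air with focal lengths f_i and refractive indices n_i (sign conventions for R_P vary between texts, so only magnitudes are written). For a single positive lens the Petzval surface curves toward the lens, which is why the sensor surface must be concave. This is a thin-lens sketch only; the actual lens and array parameters are analyzed in Supplementary Note 1.

```latex
% Petzval sum P and Petzval-surface radius R_P for thin lenses in air
P = \sum_{i} \frac{1}{n_i f_i}, \qquad |R_{\mathrm{P}}| = \frac{1}{|P|}
\qquad\Longrightarrow\qquad
|R_{\mathrm{P}}| = n f \quad \text{(single thin lens)}
```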

The efficiency of cNISA relative to conventional systems is explained in Fig. 1c, d. In the case of a conventional imaging system with a conventional processor (i.e., von Neumann architecture; Fig. 1c top), a flat image sensor array responds to incoming light (i.e., optical inputs) focused by multi-lens optics10 and generates a photocurrent proportional to the intensity of the optical inputs23,24. All measurements by the image sensor must be converted into digital signals and stored in a memory device for frame-based image acquisition25. The massive electrical outputs (i.e., raw image data) are then sent to a pre-processing module and processed iteratively3. The pre-processed data are sent to post-processing units (e.g., GPU) for additional processing and image recognition12. Meanwhile, neuromorphic chips have been proposed to overcome the computational inefficiency of the conventional von Neumann architecture8 (Fig. 1c bottom). However, the storage, transfer, and pre-processing of massive electrical outputs (i.e., raw image data) remain inefficient because the image sensor array is separated from the pre-processing units1.

In contrast, cNISA can simplify the imaging and data pre-processing steps and thereby maximize efficiency (Fig. 1d). A detailed description of the overall architecture for image acquisition, data pre-processing, and data post-processing is presented in Supplementary Fig. 4. The cNISA receives optical inputs through a single lens, which simplifies the construction of the optical system (Supplementary Fig. 3b). Detailed optical analyses of the plano-convex lens and cNISA in comparison with the conventional imaging system are described in Supplementary Note 1 and Supplementary Tables 1 and 2. The output photocurrent gradually increases in a pixel with frequently repeated optical inputs (LTP; bottom of Fig. 1b inset), whereas it decays in a pixel with infrequent optical inputs (STP; top of Fig. 1b inset). The electrical output at each pixel therefore represents a weighted value proportional to the frequency of optical inputs, which encodes the history of all optical inputs. With a single readout of the electrical output, cNISA can derive a pre-processed image from a set of noisy optical inputs. The massive data storage, numerous data communications, and iterative data processing that conventional systems require to obtain pre-processed image data are therefore unnecessary7,12.
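A minimal behavioral sketch of this in-pixel weighting follows, assuming each optical pulse adds a fixed photocurrent increment and the stored photocurrent decays exponentially between time steps. The increment, the decay constant, and the binary input frames are illustrative assumptions rather than fitted device parameters; the point is that only one state value per pixel is kept and the image comes from a single final readout.

```python
import numpy as np

def in_sensor_accumulate(frames, dt=1.0, tau_decay=8.0, increment=1.0):
    """Leaky per-pixel accumulator: a crude stand-in for photon-triggered plasticity.

    frames: iterable of binary arrays (one per time step of length dt, in s).
    Only a single state array is stored; no frame buffer is kept.
    """
    state = None
    retained = np.exp(-dt / tau_decay)       # fraction of photocurrent kept per step
    for frame in frames:
        frame = np.asarray(frame, dtype=float)
        if state is None:
            state = np.zeros(frame.shape)
        state = state * retained + increment * frame  # decay, then add new input
    return state                                      # single readout at the end

# Usage: pixel 0 is lit in all 20 steps, pixel 1 only in the first step.
frames = [np.array([1, 1 if k == 0 else 0]) for k in range(20)]
readout = in_sensor_accumulate(frames)
print(readout / readout.max())
```

Frequently lit pixels build up a large value while rarely lit ones decay toward zero, so thresholding the single readout already separates signal from noise.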

Photon-triggered synaptic plasticity

The key principle of neuromorphic imaging in cNISA is the photon-triggered synaptic plasticity of the MoS2-pV3D3 phototransistor (pV3D3-PTr). The pV3D3-PTr consists of a Si3N4 substrate, graphene source/drain electrodes, a MoS2 light-sensitive channel, a pV3D3 dielectric layer, and a Ti/Au gate electrode (Fig. 2a). An optical microscope image (top view) and cross-sectional transmission electron microscope (TEM) images are presented in Fig. 2b, c. The detailed synthesis, fabrication, and characterization procedures are described in Methods. The pV3D3-PTr exhibits light-sensitive field-effect transistor behavior26 and maintains its performance for over three months (Supplementary Fig. 5).

a Schematic illustration of the device structure of pV3D3-PTr. b Optical microscope image of pV3D3-PTr. c Cross-sectional TEM images of pV3D3-PTr (left) and its magnified view (right). d, e Photon-triggered STP (d) and LTP (e) of pV3D3-PTr. f Photocurrent generation and decay characteristics of pV3D3-PTr and Al2O3-PTr. g Statistical analyses (N = 36) of time constants (τ1, τ2) and the ratio of photocurrent coefficients (I2/I1) for pV3D3-PTr and Al2O3-PTr. h Photocurrent decay characteristics of pV3D3-PTr. For STP, a single optical pulse with 0.5 s duration was applied. For LTP, 20 optical pulses of 0.5 s duration each with 0.5 s intervals were applied. i An/A1 of pV3D3-PTr and Al2O3-PTr as a function of the number of applied optical pulses. j Computationally obtained plane-averaged interfacial charge density difference in the MoS2-pV3D3 heterostructure (i.e., Δρ = ρMoS2,B − ρMoS2 − ρB, where the subscript B denotes the dielectric) versus the distance in the aperiodic lattice direction. k Contour plots of the charge density difference in planes normal to the interface in the MoS2-pV3D3 heterostructure. The green and red contours indicate potential hole trapping and electron trapping sites, respectively. The inset shows a side view of Fig. 2k.

The photo-response of pV3D3-PTr exhibits key characteristics of synaptic plasticity in the human neural network. The photocurrent decays rapidly under infrequent optical inputs (e.g., two optical pulses with 10 s intervals; Fig. 2d), which corresponds to STP. In contrast, the photocurrent accumulates under frequent optical inputs (e.g., 20 optical pulses with 0.5 s intervals; Fig. 2e), which corresponds to LTP. In addition, the accumulated photocurrent becomes larger as more frequent optical inputs are applied (Supplementary Fig. 6). This synapse-like, photon-triggered electrical response is attributed to two characteristics of pV3D3-PTr: quasi-linear time-dependent photocurrent generation and prolonged photocurrent decay.

First, pV3D3-PTr exhibits quasi-linear time-dependent photocurrent generation (Fig. 2f top). For comparison, we prepared a control device, a MoS2-Al2O3 phototransistor (Al2O3-PTr), which is a representative example of MoS2-based phototransistors9,27. The Al2O3-PTr shows non-linear time-dependent photocurrent generation (Fig. 2f bottom). For a quantitative comparison, we analyzed a linearity factor (α) that describes how linearly the photocurrent increases with illumination time, defined by Iph^α ∝ t (i.e., Iph ∝ t^(1/α)). As α approaches 1, the photocurrent increase becomes linear. If α is much larger than 1, however, the photocurrent increases nonlinearly and saturates quickly, which hinders efficient pre-processing of data1. The linearity factors of pV3D3-PTr (αpV3D3) and Al2O3-PTr (αAl2O3) were obtained by fitting log(Iph) against log(t), whose slope equals 1/α; αpV3D3 (1.52) is closer to unity than αAl2O3 (2.50). Therefore, pV3D3-PTr is better suited for neuromorphic image data pre-processing than the control device (i.e., Al2O3-PTr)28.
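The fitting step can be sketched with NumPy. Because Iph ∝ t^(1/α), a straight-line fit of log(Iph) against log(t) has slope 1/α. The synthetic power-law traces below (generated with α = 1.52 and α = 2.50) are assumptions used only to check that the procedure recovers the exponent; they are not measured data.

```python
import numpy as np

def linearity_factor(t, i_ph):
    """Return alpha from a log-log fit, assuming i_ph ∝ t**(1/alpha)."""
    slope, _intercept = np.polyfit(np.log(t), np.log(i_ph), 1)
    return 1.0 / slope  # slope of log(Iph) vs log(t) is 1/alpha

t = np.linspace(0.5, 10.0, 50)  # illumination time in s (t > 0 for the logarithm)
for alpha_true in (1.52, 2.50):
    i_ph = 3.0 * t ** (1.0 / alpha_true)  # synthetic, noise-free power law
    print(alpha_true, round(linearity_factor(t, i_ph), 2))
```

On measured traces the fit window matters: including the very first samples or the saturated tail will shift the apparent α.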

The time-dependent photocurrent generation of pV3D3-PTr was further analyzed in comparison with Al2O3-PTr using an analytical model29 (Supplementary Fig. 7), described in detail in Supplementary Note 2. The model, Iph(t) = I1(1-exp(-t/τ1)) + I2(1-exp(-t/τ2)), consists of two exponential photocurrent generation terms with time constants τ1 and τ2 and photocurrent coefficients I1 and I2. These parameters are compared in Fig. 2g. The pV3D3-PTr exhibits a large photocurrent coefficient ratio (I2,pV3D3/I1,pV3D3 = 11.03) and a large τ2 (τ2,pV3D3 = 12.85 s), so the slow term dominates and, for t ≪ τ2, a first-order series expansion of its exponential gives a quasi-linear photocurrent generation function, Iph(t) ≅ I2,pV3D3(t/τ2,pV3D3) (Supplementary Fig. 7a). In contrast, the control device (i.e., Al2O3-PTr) exhibits a much smaller photocurrent coefficient ratio (I2,Al2O3/I1,Al2O3 = 0.95) and a smaller τ2 (τ2,Al2O3 = 4.39 s), and thus shows non-linear photocurrent generation (Supplementary Fig. 7b).
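The model and the range of its linear approximation can be evaluated directly. The sketch below uses the reported pV3D3-PTr values I2/I1 = 11.03 and τ2 = 12.85 s; τ1 and the absolute scale of I1 are not given in the text, so τ1 = 0.5 s and I1 = 1 (arbitrary units) are hypothetical placeholders. It compares the slow term with its first-order expansion I2·t/τ2; the fast term saturates at I1, about 9 % of I2.

```python
import numpy as np

def photocurrent(t, i1, tau1, i2, tau2):
    """Two-term model: Iph(t) = I1(1 - exp(-t/tau1)) + I2(1 - exp(-t/tau2))."""
    return i1 * (1 - np.exp(-t / tau1)) + i2 * (1 - np.exp(-t / tau2))

I1, TAU1 = 1.0, 0.5           # hypothetical: tau1 and the scale of I1 are not reported
I2, TAU2 = 11.03 * I1, 12.85  # reported I2/I1 ratio and tau2 (s) for pV3D3-PTr

t = np.array([1.0, 2.0, 5.0, 10.0])          # illumination times in s
slow = photocurrent(t, 0.0, TAU1, I2, TAU2)  # slow term only (fast term switched off)
linear = I2 * t / TAU2                       # first-order expansion of the slow term
rel_over = (linear - slow) / slow            # relative overestimate of the expansion
for ti, s, lin, r in zip(t, slow, linear, rel_over):
    print(f"t = {ti:4.1f} s  slow = {s:.3f}  I2*t/tau2 = {lin:.3f}  over = {100 * r:.1f} %")
```

The overestimate grows with t/τ2, which is why a large τ2 (as for pV3D3-PTr) extends the quasi-linear regime while a small τ2 (as for Al2O3-PTr) shortens it.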

Second, pV3D3-PTr shows prolonged photocurrent decay (Fig. 2h), and the total decay time becomes longer with more frequent optical inputs. The decay time constant (τdecay) of pV3D3-PTr, the time required for the photocurrent to decay to 1/e of its initial value, depends on the number of applied optical pulses (Supplementary Fig. 8). The decay time constants for LTP and STP (τdecay,LTP and τdecay,STP) are 8.61 and 1.43 s, respectively (red and black lines in Fig. 2h), and the retention times for LTP and STP are 3600 and 1200 s, respectively (Supplementary Fig. 9). Such large differences in decay time and retention time between LTP and STP are important for enhancing the contrast of the pre-processed image.
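The definition of τdecay translates into a short routine. The sketch below assumes a sampled decay trace that starts at the end of illumination and returns the time at which the photocurrent first falls to 1/e of its starting value, interpolating linearly between samples. The single-exponential test traces use the reported τdecay values only as synthetic inputs; a threshold crossing is used instead of an exponential fit because real decays need not be single-exponential.

```python
import numpy as np

def decay_time_constant(t, i_ph):
    """Time for a decaying photocurrent trace to fall to 1/e of its initial value.

    t: sample times in s, with t[0] at the end of illumination.
    i_ph: positive, decaying photocurrent samples on the same grid.
    """
    t = np.asarray(t, dtype=float)
    i_ph = np.asarray(i_ph, dtype=float)
    target = i_ph[0] / np.e
    below = np.nonzero(i_ph <= target)[0]
    if below.size == 0:
        raise ValueError("trace never reaches 1/e of its initial value")
    k = below[0]
    if k == 0:
        return float(t[0])
    # np.interp needs increasing x values: i_ph[k] <= target < i_ph[k - 1]
    return float(np.interp(target, [i_ph[k], i_ph[k - 1]], [t[k], t[k - 1]]))

# Usage with synthetic single-exponential traces built from the reported constants.
t = np.linspace(0.0, 40.0, 4001)
for tau in (8.61, 1.43):  # tau_decay,LTP and tau_decay,STP in s
    print(tau, round(decay_time_constant(t, np.exp(-t / tau)), 3))
```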

With these attributes, pV3D3-PTr exhibits a high contrast between LTP and STP. To analyze the contrast quantitatively, we define the synaptic weight (An/A1) as the ratio of the photocurrent generated by n optical pulses (An) to that generated by a single optical pulse (A1). The synaptic weight of pV3D3-PTr increases continuously as the number of applied optical pulses increases (red line in Fig. 2i), whereas that of the control device (i.e., Al2O3-PTr) almost saturates after five optical pulses (black line in Fig. 2i). Upon irradiation with 25 optical pulses, pV3D3-PTr therefore exhibits a larger synaptic weight (A25/A1 = 5.93) than Al2O3-PTr (A25/A1 = 2.89) (Supplementary Fig. 10), leading to better contrast in neuromorphic imaging and pre-processing.
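Once the photocurrent after each pulse has been extracted, the synaptic weight is a simple ratio. The sketch assumes a list of photocurrent values read after pulses 1, 2, ..., N (a hypothetical input format) and adds a helper that reports where An/A1 stops growing by more than a chosen relative tolerance, so that saturation, as seen for Al2O3-PTr, shows up as an early pulse number. The tolerance and the example values are assumptions, not measured data.

```python
import numpy as np

def synaptic_weights(peak_currents):
    """Return the synaptic weights A_n / A_1 for n = 1..N.

    peak_currents[n - 1] is the photocurrent measured after the n-th pulse.
    """
    a = np.asarray(peak_currents, dtype=float)
    if a[0] <= 0:
        raise ValueError("A_1 must be positive")
    return a / a[0]

def saturation_pulse(weights, tol=0.02):
    """First pulse number after which A_n / A_1 grows by less than `tol` per pulse.

    Returns None if the weight keeps growing faster than `tol` throughout.
    """
    growth = np.diff(weights) / weights[:-1]  # relative gain from pulse n to n + 1
    flat = np.nonzero(growth < tol)[0]
    return int(flat[0]) + 1 if flat.size else None

# Usage with hypothetical peak photocurrents (arbitrary units).
w = synaptic_weights([2.0, 4.0, 5.0, 5.05, 5.06])
print(w, saturation_pulse(w))
```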

Such time-dependent photo-responses of MoS2-based phototransistors are related to charge trapping at the interface between MoS2 and the dielectric30. We therefore theoretically investigated the interfacial properties of the MoS2-pV3D3 heterostructure in comparison with those of the MoS2-Al2O3 heterostructure. The MoS2-pV3D3 heterostructure exhibits a large exciton binding energy (0.43 eV) that promotes spatial separation of electron-hole pairs at the interface31,32. In addition, its type-II band alignment and large conduction band energy difference facilitate spatial charge separation33,34 (Supplementary Fig. 11a). As a result, high densities of electrons and holes are spatially separated and localized near the respective interfaces in the MoS2-pV3D3 heterostructure (Fig. 2j). Such spatially separated electron-hole pairs can induce photocurrent through the photogating effect35. The localized charges in the MoS2-pV3D3 heterostructure were experimentally verified by Kelvin probe force microscopy (Supplementary Fig. 12). Conversely, charge separation at the MoS2-Al2O3 heterostructure is less probable (Supplementary Fig. 13a), because its type-I band alignment hinders hole transfer at the interface33 (Supplementary Fig. 11b). More details of the theoretical analysis are described in Supplementary Note 3.
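The distinction between type-I and type-II alignment is a comparison of band edges. The helper below classifies a heterojunction from the valence-band maximum (VBM) and conduction-band minimum (CBM) of each material on a common energy scale. The band-edge numbers in the usage lines are placeholders, not the computed values of Supplementary Fig. 11.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class BandEdges:
    vbm: float  # valence-band maximum (eV, common reference)
    cbm: float  # conduction-band minimum (eV, same reference)

def alignment_type(a: BandEdges, b: BandEdges) -> str:
    """Classify a heterojunction as type I (straddling), II (staggered) or III (broken gap)."""
    if a.cbm < b.vbm or b.cbm < a.vbm:
        return "III"  # one CBM lies below the other VBM: the gaps do not overlap
    a_inside_b = b.vbm <= a.vbm and a.cbm <= b.cbm
    b_inside_a = a.vbm <= b.vbm and b.cbm <= a.cbm
    if a_inside_b or b_inside_a:
        return "I"    # both edges of one gap lie inside the other: carriers collect on one side
    return "II"       # staggered edges: electrons and holes prefer opposite sides

# Usage with placeholder band edges (hypothetical numbers).
channel = BandEdges(vbm=-5.9, cbm=-4.2)
print(alignment_type(channel, BandEdges(vbm=-7.0, cbm=-1.0)))  # prints "I"
print(alignment_type(channel, BandEdges(vbm=-5.5, cbm=-3.5)))  # prints "II"
```

In the type-II case the lower CBM attracts electrons and the higher VBM attracts holes, which is the spatial charge separation invoked above.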

The spatial distribution of the charge density difference (Δρ), in which negative Δρ indicates potential hole trapping sites, was analyzed computationally. Owing to the irregular geometry of the polymeric pV3D3 structure, the MoS2-pV3D3 heterostructure exhibits a spatially inhomogeneous distribution of Δρ in both the in-plane and out-of-plane directions (Fig. 2k and its inset), compared with the relatively homogeneous distribution in the MoS2-Al2O3 heterostructure (Supplementary Fig. 13b and its inset). Such an inhomogeneous distribution of Δρ results in a complex spatial and energy distribution of potential hole trapping sites, which are required for active interfacial charge transfer36.
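The plane-averaged profile plotted in Fig. 2j follows from Δρ = ρMoS2,B − ρMoS2 − ρB evaluated on a common real-space grid. A NumPy sketch, assuming the three densities are already sampled on the same (nx, ny, nz) grid with z as the aperiodic (stacking) direction and that ρ denotes electron density, so that negative Δρ marks depletion; reading densities from DFT output files is outside its scope.

```python
import numpy as np

def plane_averaged_difference(rho_hetero, rho_mos2, rho_dielectric):
    """Return Δρ(z): the charge density difference averaged over each x-y plane.

    All inputs are (nx, ny, nz) arrays on the same grid; axis 2 is normal
    to the interface.
    """
    delta = rho_hetero - rho_mos2 - rho_dielectric  # 3D Δρ(x, y, z)
    return delta.mean(axis=(0, 1))                  # average over the in-plane axes

# Usage with synthetic densities containing a charge dipole at z-indices 10 and 12.
nx, ny, nz = 8, 8, 24
rho_a = np.full((nx, ny, nz), 0.1)   # isolated layer A (stand-in for MoS2)
rho_b = np.full((nx, ny, nz), 0.2)   # isolated layer B (stand-in for the dielectric)
rho_ab = rho_a + rho_b
rho_ab[:, :, 10] += 0.05             # electron accumulation (positive Δρ)
rho_ab[:, :, 12] -= 0.05             # electron depletion (negative Δρ)
profile = plane_averaged_difference(rho_ab, rho_a, rho_b)
```

The in-plane inhomogeneity discussed above is lost in this average; it is visible only in the full 3D array `delta`, e.g. as its spread within each x-y plane.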

Image acquisition and neuromorphic data pre-processing

The cNISA is applied to the acquisition of a pre-processed image from massive noisy optical inputs. A simplified 3 × 3 array was first used to explain its operating principle. A set of noisy optical inputs (Im), successively incident on the array, induces a weighted photocurrent (Iph,n) in each pixel (Pn) (Fig. 3a). For example, Iph,n changes gradually under irradiation with nine optical inputs (I1–I9; Supplementary Fig. 14). Pixels #1, #2, #3, #5, and #8 receive eight optical pulses out of the nine incident inputs and thus generate a large accumulated photocurrent, whereas pixels #4, #6, #7, and #9 receive only one optical pulse and thereby generate a negligible photocurrent (Fig. 3b). Because the final photocurrent of each pixel represents a weighted value, a pre-processed image can be obtained simply by mapping the final photocurrents.
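The counting argument can be reproduced with a toy version of the 3 × 3 experiment. Pixels are numbered #1 to #9 row by row (an assumption consistent with #1, #2, #3, #5, and #8 forming a 'T'); each pattern pixel is lit in eight of nine frames and each other pixel in exactly one. Which frame a pixel misses or receives is chosen arbitrarily, so the frames do not reproduce Supplementary Fig. 14, and the final photocurrent is idealized as proportional to the number of received pulses, ignoring decay.

```python
import numpy as np

T_PIXELS = {1, 2, 3, 5, 8}  # pixel numbers of the 'T' (1-based, row-major)
NOISE_PIXELS = {4, 6, 7, 9}

def make_frames():
    """Nine binary 3x3 frames: 'T' pixels miss one frame each, noise pixels appear once."""
    frames = np.zeros((9, 9), dtype=int)  # (frame index, pixel index 0..8)
    for p in T_PIXELS:
        frames[:, p - 1] = 1              # pixel #p lit in every frame...
        frames[p - 1, p - 1] = 0          # ...except frame I_p
    for q in NOISE_PIXELS:
        frames[q - 1, q - 1] = 1          # noise pixel #q lit only in frame I_q
    return frames.reshape(9, 3, 3)

frames = make_frames()
pulses = frames.sum(axis=0)               # pulses received by each pixel
normalized = pulses / pulses.max()        # stand-in for normalized photocurrent
preprocessed = normalized > 0.5           # map the final values to a binary image
print(pulses)
print(preprocessed.astype(int))
```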

a Schematic diagram showing the image acquisition and neuromorphic data pre-processing by using a 3 × 3 pV3D3-PTr array. b Normalized photocurrent measured at each pixel of the 3 × 3 pV3D3-PTr array. c Acquired image at each time point. d Pre-processed image obtained through image acquisition and neuromorphic data pre-processing. e Pre-processed image stored in the array while photocurrents of individual pixels slowly decay. f Erasure of the memorized image by applying a positive gate bias (i.e., Vg = 1 V).

The mapped images at different time points are shown in Fig. 3c. The image contrast is enhanced as more optical inputs are applied, and a pre-processed image ‘T’ is eventually obtained from the noisy optical inputs (Fig. 3d). The pre-processed image is then memorized and slowly dissipates over a long period of time (~30 s; Fig. 3e). These image pre-processing and signal dissipation processes resemble synaptic behaviors in the neural network (i.e., memorizing and forgetting)14. If needed, the remaining image can be erased immediately by applying a positive gate bias (e.g., Vg = 1 V) (Fig. 3f). The positive gate bias facilitates de-trapping of holes in the MoS2-pV3D3 heterostructure, which removes the photogating effect and returns the pV3D3-PTrs to their initial state30. Therefore, subsequent image acquisition and pre-processing can proceed without interference from the afterimage of the previous imaging and pre-processing step37.

To image more complex patterns, the array size was expanded from 9 pixels to 31 pixels, and the other components were assembled into an integrated imaging system (Fig. 4a). The integrated system consists of a plano-convex lens that focuses incident optical inputs, cNISA that derives a pre-processed image from noisy optical inputs, and a housing that supports the lens and array (inset of Fig. 4a, b). The pixels in cNISA are arranged in a nearly circular layout (Supplementary Fig. 15). By employing an ultrathin device structure38,39 (~2 μm thickness including encapsulations) and intrinsically flexible materials (i.e., graphene40, MoS241,42,43, and pV3D344), we fabricated a mechanically deformable array. We also adopted a strain-releasing mesh design45,46, patterned the fragile material (i.e., Si3N4) into islands, and located the array near the neutral mechanical plane. As a result, the strain induced in the deformed array was <0.053% (Supplementary Fig. 16), and the array can be integrated on a concavely curved surface without mechanical failure (Fig. 4c). Additional details of the mechanical analyses are described in Supplementary Note 4 and Supplementary Fig. 16d. A customized data acquisition system including current amplifiers and an analog-to-digital converter (ADC) enables photocurrent measurement from cNISA (Fig. 4d and Supplementary Figs. 17 and 18). Each pixel of cNISA is serially connected to the current amplifier via an anisotropic conductive film (ACF). The detailed experimental setup and imaging procedures are described in Methods.
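One reason the neutral-mechanical-plane placement helps is the elementary bending relation below: the strain in a layer grows linearly with its distance y from the neutral plane at radius of curvature R. As a back-of-the-envelope bound under pure-bending assumptions only, take the stated total thickness (~2 μm, so at most ~1 μm from a centered neutral plane) and the substrate radius of 11.3 mm given in Methods. The reported <0.053% comes from the full mechanical analysis in Supplementary Note 4, which this estimate does not replace.

```latex
% Bending strain at distance y from the neutral mechanical plane, radius of curvature R
\varepsilon_{\mathrm{bend}} = \frac{y}{R}
\quad\Rightarrow\quad
\varepsilon_{\mathrm{bend}} \lesssim \frac{1\,\mu\mathrm{m}}{11.3\,\mathrm{mm}} \approx 8.8\times10^{-5}\ (\approx 0.009\,\%)
```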

a Photograph of an integrated imaging system that consists of a plano-convex lens, cNISA, and a housing. The inset shows the components before assembly. b Exploded illustration of the curved neuromorphic imaging device. c Photograph of cNISA on a concave substrate. d Schematic diagram of the customized data acquisition system for measuring the photocurrents of individual pixels in cNISA. e–h Demonstrations of deriving a pre-processed image from massive noisy optical inputs (e.g., acquisition of a pre-processed C-shaped image (i), decay of the memorized C-shaped image (ii), erasure of the afterimage (iii), and acquisition of a pre-processed N-shaped image (iv)). Figure 4e shows the applied optical inputs and the applied electrical input. Figure 4f shows the obtained images at each time point. Figure 4g and h show the photocurrent obtained from the indicated pixels at each time point.

The image acquisition and pre-processing demonstrations using the integrated system are shown in Fig. 4e–h. First, noisy optical inputs of C-shaped images (e.g., 20 optical inputs with 0.5 s durations and 0.5 s intervals; Supplementary Fig. 19a) are irradiated (Fig. 4e (i), red region). A large accumulated photocurrent is then generated in pixels with frequent optical inputs (Fig. 4g (i), red region), while a negligible photocurrent is generated in pixels with infrequent optical inputs (Fig. 4h (i), red region). As a result, a pre-processed image ‘C’ is acquired (Fig. 4f (i), red region). The pre-processed image is maintained for approximately 30 s (Fig. 4f–h (ii), blue region). The remaining image can be erased by applying a positive gate bias (e.g., Vg = 1 V; at 60 s in Fig. 4e–h (iii), violet region), after which each pixel returns to its initial state (i.e., zero photocurrent). After the afterimage is erased, another image can be acquired. For example, a pre-processed image ‘N’ is derived (Fig. 4e–h (iv), green region) from a set of noisy optical inputs (e.g., 20 images with 0.5 s durations and 0.5 s intervals; Supplementary Fig. 19b). These demonstrations of neuromorphic imaging, i.e., acquisition of a pre-processed image from massive noisy optical inputs through a single readout operation, highlight the key features of cNISA.

Discussion

In this study, we developed a curved neuromorphic imaging device using a MoS2-organic heterostructure. Distinctive features of the human visual recognition system, such as the curved retina with simple optics and efficient data processing in the neural network through synaptic plasticity, inspired the development of a novel imaging device for efficient image acquisition and pre-processing with a simple system construction. The distinctive characteristics of pV3D3-PTr, i.e., quasi-linear time-dependent photocurrent generation and prolonged photocurrent decay, enabled the photon-triggered synaptic plasticity of cNISA. These photon-triggered synaptic behaviors were analyzed through experimental studies and computational simulations. Imaging demonstrations using the integrated system showed that a pre-processed image can be derived from a set of noisy optical inputs through a single readout operation.

Such a curved neuromorphic imaging device could enhance the efficiency of image acquisition and data pre-processing and simplify the optics to miniaturize the overall device, and thus has the potential to be a key component for efficient machine vision applications. Detailed specifications of the curved neuromorphic imaging device are compared with those of relevant neuromorphic image sensors in Supplementary Table 3. Nevertheless, additional processors for data post-processing, which extract features from the pre-processed image data and identify the target object, are still necessary for machine vision applications47,48. Therefore, although cNISA can obtain the pre-processed image efficiently, further device units for efficient post-processing of the pre-processed image data still need to be integrated37. Neuromorphic processors (e.g., memristor crossbar arrays) enable efficient post-processing of the pre-processed image data in terms of fast computation and low power consumption11. Combining cNISA with such neuromorphic processors would help demonstrate machine vision applications, although massive data storage and communication between them would still be required owing to their separate architectures. In this regard, the development of a fully integrated system that performs all steps from image acquisition to data pre- and post-processing in a single device is an important goal for future research. Such technologies would be a step toward high-performance machine vision.

Methods

Synthesis of graphene and MoS2

Graphene was synthesized by chemical vapor deposition (CVD)9. A copper foil (Alfa Aesar, USA) was annealed at 1000 °C for 30 min under a constant flow of H2 (8 sccm, 0.08 Torr), after which a flow of CH4 (20 sccm, 1.6 Torr) was added for 20 min at 1000 °C. The chamber was then cooled to room temperature under a flow of H2 (8 sccm, 0.08 Torr).

MoS2 was also synthesized by CVD9. Alumina crucibles containing sulfur (0.1 g; Alfa Aesar, USA) and MoO3 (0.3 g; Sigma Aldrich, USA) were placed upstream and at the center of the chamber, respectively. A SiO2 wafer treated with piranha solution and oxygen plasma was placed downstream of the MoO3 crucible. After annealing at 150 °C for 30 min under a constant flow of Ar (50 sccm, 10 Torr), the chamber was heated to 650 °C over 20 min and held at 650 °C for 5 min under a constant flow of Ar (50 sccm, 10 Torr). The sulfur was maintained at 160 °C. After the synthesis, the chamber was naturally cooled to room temperature under a constant flow of Ar (50 sccm, 10 Torr).

Deposition of pV3D3

pV3D3 was deposited by initiated CVD (iCVD)44. The sample was placed in the iCVD chamber, whose temperature was maintained at 50 °C, and the filament temperature was maintained at 140 °C. During the synthesis, 1,3,5-trimethyl-1,3,5-trivinyl cyclotrisiloxane (V3D3, 95%; Gelest, USA) and di-tert-butyl peroxide (TBPO, 97%; Sigma Aldrich, USA) were vaporized and introduced into the chamber. The V3D3:TBPO ratio was controlled at 2:1, and the chamber pressure was maintained at 0.3 Torr.

Fabrication of cNISA

The fabrication of cNISA began with spin-coating a polyimide (PI) film (~1 μm thickness, bottom encapsulation; Sigma Aldrich, USA) on a SiO2 wafer. A thin layer of Si3N4 (~15 nm thickness, substrate) was deposited by plasma-enhanced CVD and etched into an island-shaped array using photolithography and dry etching. Graphene (~2 nm thickness, electrode) was transferred onto the Si3N4. Thin Ti/Au layers (~5 nm/25 nm thickness) were deposited and used as an etch mask and probing pads, and the graphene was patterned into interdigitated source/drain electrodes. An ultrathin MoS2 layer (~4 nm thickness, light-sensitive channel) was transferred onto the graphene electrodes and patterned by photolithography and dry etching. A pV3D3 layer (~25 nm thickness, dielectric) was deposited by iCVD and patterned into an island-shaped array by photolithography and dry etching. Ti/Au layers (~5 nm/25 nm thickness, gate electrode) were then deposited by thermal evaporation and patterned by a lift-off process. Additional Ti/Au layers (~5 nm/25 nm thickness, ACF pad) were deposited. Deposition of a parylene film (~1 μm thickness, top encapsulation) followed by dry etching completed the fabrication of the neuromorphic image sensor array. The fabricated image sensor array was detached from the SiO2 wafer using a water-soluble tape (3M Corp., USA) and then transfer-printed onto a concave hemispherical substrate made of polydimethylsiloxane (PDMS; Dow Corning, USA). The radius of curvature and the subtended angle of the hemispherical substrate are 11.3 mm and 123.8°, respectively (Supplementary Fig. 16c). A housing for mechanically supporting the plano-convex lens and cNISA was fabricated using a 3D printer (DP200, prototech Inc., Republic of Korea).
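The stated radius of curvature and subtended angle fix the size of the concave substrate. The sketch below computes the rim diameter, depth (sag), and curved surface area of an ideal spherical cap from those two numbers; treating the PDMS substrate as a perfect spherical cap is an assumption.

```python
import math

def spherical_cap(radius_mm, subtended_angle_deg):
    """Rim diameter, depth and curved area of a spherical cap with the given full angle."""
    half = math.radians(subtended_angle_deg) / 2    # half-angle measured from the axis
    rim_diameter = 2 * radius_mm * math.sin(half)   # chord across the cap rim
    sag = radius_mm * (1 - math.cos(half))          # depth of the cap
    area = 2 * math.pi * radius_mm * sag            # curved surface area of the cap
    return rim_diameter, sag, area

d, h, a = spherical_cap(11.3, 123.8)
print(f"rim diameter {d:.2f} mm, depth {h:.2f} mm, surface area {a:.0f} mm^2")
```

These values bound the footprint available to the 31-pixel array and the clearance the 3D-printed housing must provide behind the lens.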

Electrical characterization of pV3D3-PTr

The electrical properties of pV3D3-PTr were characterized using a parameter analyzer (B1500A, Agilent, USA). A control device (i.e., Al2O3-PTr) was prepared for comparison using a fabrication process similar to that of pV3D3-PTr, with an Al2O3 layer (~25 nm) deposited at 200 °C by thermal atomic layer deposition as the dielectric layer9. A white light-emitting diode with an intensity of 0.202 mW cm−2 was used as the light source for device characterization; its emission spectrum is shown in Supplementary Fig. 20. Pulsed optical inputs with 0.5 s durations were programmed and generated using an Arduino UNO.
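The pulse timing used throughout can be written down as an explicit schedule. The Python sketch below only generates on/off times for rectangular pulse trains such as the LTP train (0.5 s on, 0.5 s off) and the STP example (two pulses 10 s apart). It is not the Arduino UNO program used in this work, which is not given, and the function name is hypothetical.

```python
def pulse_schedule(n_pulses, duration_s=0.5, interval_s=0.5, start_s=0.0):
    """Return (on, off) times in seconds for a train of rectangular optical pulses."""
    period = duration_s + interval_s
    return [(start_s + k * period, start_s + k * period + duration_s)
            for k in range(n_pulses)]

ltp_train = pulse_schedule(20)                  # 20 pulses, 0.5 s on / 0.5 s off
stp_train = pulse_schedule(2, interval_s=10.0)  # two pulses with a 10 s gap
print(ltp_train[-1], stp_train)
```

Keeping the schedule as data makes it easy to vary the interval, which is how the frequency dependence of the accumulated photocurrent (Supplementary Fig. 6) would be probed.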

Characterization of a MoS2-pV3D3 heterostructure

High-resolution TEM images were obtained using a Cs-corrected TEM (JEM-ARM200F, JEOL, Japan) to analyze the vertical configuration of pV3D3-PTr. The surface potential of the MoS2-pV3D3 heterostructure was measured using a Kelvin probe force microscope (Dimension Icon, Bruker, USA). A control MoS2-Al2O3 heterostructure was also prepared and characterized for comparison. Raman and photoluminescence (PL) spectra of as-grown MoS2, the MoS2-pV3D3 heterostructure, and the MoS2-Al2O3 heterostructure were acquired by Raman/PL micro-spectroscopy (Renishaw, Japan) with a 532 nm laser. PL spectra were fitted with Gaussian functions. Further details of the PL and Raman analyses are given in Supplementary Note 5 and Supplementary Figs. 21 and 22.
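Gaussian peak fitting of a PL spectrum can be sketched with SciPy's `curve_fit`. The number of components used in the paper is not stated here. This example assumes two overlapping Gaussian components, a common choice for MoS2 PL. It fits them to a synthetic spectrum: the peak energies, widths, amplitudes, and noise level are invented for illustration and are not measured data.

```python
import numpy as np
from scipy.optimize import curve_fit


def two_gaussians(e, a1, c1, w1, a2, c2, w2):
    """Sum of two Gaussian peaks; e is photon energy (eV), w is the standard deviation (eV)."""
    return (a1 * np.exp(-((e - c1) ** 2) / (2 * w1 ** 2))
            + a2 * np.exp(-((e - c2) ** 2) / (2 * w2 ** 2)))


# Hypothetical synthetic spectrum (NOT measured data): two overlapping peaks plus noise.
energy = np.linspace(1.6, 2.1, 501)
true_params = (1.0, 1.87, 0.025, 0.4, 1.80, 0.035)
rng = np.random.default_rng(0)
spectrum = two_gaussians(energy, *true_params) + rng.normal(0.0, 0.005, energy.size)

# Initial guesses near the expected peaks keep the two components from swapping.
initial_guess = (0.8, 1.86, 0.03, 0.3, 1.81, 0.04)
popt, pcov = curve_fit(two_gaussians, energy, spectrum, p0=initial_guess)
perr = np.sqrt(np.diag(pcov))  # one-sigma parameter uncertainties

for name, idx in (("peak 1", 1), ("peak 2", 4)):
    print(f"{name}: {popt[idx]:.4f} +/- {perr[idx]:.4f} eV")
```

Comparing the fitted centers and amplitudes between the MoS2-pV3D3 and MoS2-Al2O3 samples is one way such fits could be used. Reasonable initial guesses matter because multi-peak fits can converge to swapped or degenerate components.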

Imaging demonstration

Programmed white optical pulses (duration 0.5 s, interval 0.5 s, intensity 0.202 mW cm−2) were irradiated onto cNISA to present a series of 20 noisy optical inputs (Supplementary Fig. 19). The front aperture blocked stray light, and the plano-convex lens focused the incoming light onto cNISA. The surface profile of the plano-convex lens was measured with a large-area aspheric 3D profiler (UA3P, Panasonic) (Supplementary Fig. 23), and details of the optical system are given in Supplementary Table 1. cNISA received the incident optical inputs and generated a weighted photocurrent. The current amplifiers (e.g., transimpedance amplifiers and inverters) and power-supply chips were assembled on a printed circuit board (PCB; Supplementary Fig. 18 and Supplementary Table 4). Each pixel in cNISA is individually connected to a current amplifier via ACF. The photocurrents of individual pixels were amplified by the current amplifiers and independently digitized by an analog-to-digital converter (ADC; USB-6289, National Instruments, USA).
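A per-pixel accumulation model illustrates how a weighted photocurrent can yield a pre-processed image from a series of noisy inputs. The toy model below is a sketch, not the authors' device model. Each illuminated pulse adds a fixed increment to a pixel's current (quasi-linear rise), and only a fraction of the current is retained between pulses (prolonged decay). Pixels that are lit consistently therefore accumulate more current than pixels lit by sporadic noise. The increment, retention factor, noise probabilities, 9 × 9 array size, and bar-shaped pattern are all hypothetical.

```python
import numpy as np

# Hypothetical parameters of the toy plasticity model (arbitrary units).
RISE_PER_PULSE = 1.0   # current added by one illuminated pulse (quasi-linear rise)
RETENTION = 0.9        # fraction of current retained from one pulse to the next (slow decay)


def accumulate(frames, rise=RISE_PER_PULSE, retention=RETENTION):
    """frames: array (n_frames, H, W) of 0/1 optical inputs; returns the final current map."""
    current = np.zeros(frames.shape[1:])
    for frame in frames:
        current = retention * current + rise * frame
    return current


rng = np.random.default_rng(42)
target = np.zeros((9, 9), dtype=bool)
target[1:8, 4] = True  # hypothetical target pattern: a vertical bar

# 20 noisy inputs: target pixels lit with p = 0.9, background pixels lit with p = 0.1 (assumed).
n_frames = 20
lit_target = rng.random((n_frames, 9, 9)) < 0.9
lit_noise = rng.random((n_frames, 9, 9)) < 0.1
frames = np.where(target, lit_target, lit_noise).astype(float)

current = accumulate(frames)
target_mean = current[target].mean()
background_mean = current[~target].mean()
preprocessed = current > 0.5 * current.max()  # simple threshold to read out the image

print(f"mean current: target {target_mean:.2f}, background {background_mean:.2f}")
```

Because the retained current from earlier pulses decays geometrically, the model weights recent and repeated inputs most heavily. Uncorrelated noise therefore stays near the background level while the consistent pattern stands out.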

Data availability

The data files that support the findings of this study are available from the corresponding authors upon reasonable request.

Code availability

We used Arduino 1.8.3 and LabVIEW 2017 to operate the custom-made circuits. The source code for Arduino and LabVIEW is available from the corresponding authors upon reasonable request.

Change history

01 September 2022

A Correction to this paper has been published: https://doi.org/10.1038/s41467-022-32731-0

References

Zhou, F. et al. Optoelectronic resistive random access memory for neuromorphic vision sensors. Nat. Nanotechnol. 14, 776–782 (2019).

Roy, K., Jaiswal, A. & Panda, P. Towards spike-based machine intelligence with neuromorphic computing. Nature 575, 607–617 (2019).

Yao, P. et al. Face classification using electronic synapses. Nat. Commun. 8, 15199 (2017).

Zidan, M. A., Strachan, J. P. & Lu, W. D. The future of electronics based on memristive systems. Nat. Electron. 1, 22–29 (2018).

van de Burgt, Y., Melianas, A., Keene, S. T., Malliaras, G. & Salleo, A. Organic electronics for neuromorphic computing. Nat. Electron. 1, 386–397 (2018).

Floreano, D. & Wood, R. J. Science, technology and the future of small autonomous drones. Nature 521, 460–466 (2015).

Jeong, D. S. & Hwang, C. S. Nonvolatile memory materials for neuromorphic intelligent machines. Adv. Mater. 30, 1704729 (2018).

Gkoupidenis, P., Koutsouras, D. A. & Malliaras, G. G. Neuromorphic device architectures with global connectivity through electrolyte gating. Nat. Commun. 8, 15448 (2017).

Choi, C. et al. Human eye-inspired soft optoelectronic device using high-density MoS2-graphene curved image sensor array. Nat. Commun. 8, 1664 (2017).

Ko, H. C. et al. A hemispherical electronic eye camera based on compressible silicon optoelectronics. Nature 454, 748–753 (2008).

Sheridan, P. M. et al. Sparse coding with memristor networks. Nat. Nanotechnol. 12, 784–789 (2017).

Xia, Q. & Yang, J. J. Memristive crossbar arrays for brain-inspired computing. Nat. Mater. 18, 309–323 (2019).

Lee, G. J., Choi, C., Kim, D.-H. & Song, Y. M. Bioinspired artificial eyes: optic components, digital cameras, and visual prostheses. Adv. Funct. Mater. 28, 1705202 (2018).

Lee, M. et al. Brain-inspired photonic neuromorphic devices using photodynamic amorphous oxide semiconductors and their persistent photoconductivity. Adv. Mater. 29, 1700951 (2017).

Ohno, T. et al. Short-term plasticity and long-term potentiation mimicked in single inorganic synapses. Nat. Mater. 10, 591–595 (2011).

Wang, H. et al. A ferroelectric/electrochemical modulated organic synapse for ultraflexible, artificial visual-perception system. Adv. Mater. 30, 1803961 (2018).

Brady, T. F., Konkle, T., Alvarez, G. A. & Oliva, A. Visual long-term memory has a massive storage capacity for object details. Proc. Natl Acad. Sci. USA 105, 14325–14329 (2008).

Prezioso, M. et al. Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature 521, 61–64 (2015).

Zhang, K. et al. Origami silicon optoelectronics for hemispherical electronic eye systems. Nat. Commun. 8, 1782 (2017).

Lee, W. et al. Two-dimensional materials in functional three-dimensional architectures with applications in photodetection and imaging. Nat. Commun. 9, 1417 (2018).

Seo, S. et al. Artificial optic-neural synapse for colored and color-mixed pattern recognition. Nat. Commun. 9, 5106 (2018).

Jung, I. et al. Dynamically tunable hemispherical electronic eye camera system with adjustable zoom capability. Proc. Natl Acad. Sci. USA 108, 1788–1793 (2011).

Kim, J. et al. Photon-triggered nanowire transistors. Nat. Nanotechnol. 12, 963–968 (2017).

Kim, M. S. et al. An aquatic-vision-inspired camera based on a monocentric lens and a silicon nanorod photodiode array. Nat. Electron. 3, 546–553 (2020).

Mennel, L. et al. Ultrafast machine vision with 2D material neural network image sensors. Nature 579, 62–66 (2020).

Kang, K. et al. High-mobility three-atom-thick semiconducting films with wafer-scale homogeneity. Nature 520, 656–660 (2015).

Cheng, R. et al. Few-layer molybdenum disulfide transistors and circuits for high-speed flexible electronics. Nat. Commun. 5, 5143 (2014).

Moon, K. et al. RRAM-based synapse devices for neuromorphic systems. Faraday Discuss. 213, 421–451 (2019).

Amit, I. et al. Role of charge traps in the performance of atomically thin transistors. Adv. Mater. 29, 1605598 (2017).

Lee, J. et al. Monolayer optical memory cells based on artificial trap-mediated charge storage and release. Nat. Commun. 8, 14734 (2017).

Massicotte, M. et al. Dissociation of two-dimensional excitons in monolayer WSe2. Nat. Commun. 9, 1633 (2018).

Jauregui, L. A. et al. Electrical control of interlayer exciton dynamics in atomically thin heterostructures. Science 366, 870–875 (2019).

Zhu, T. et al. Highly mobile charge-transfer excitons in two-dimensional WS2/tetracene heterostructures. Sci. Adv. 4, eaao3104 (2018).

Zhu, X. et al. Charge transfer excitons at van der Waals interfaces. J. Am. Chem. Soc. 137, 8313–8320 (2015).

Pak, J. et al. Intrinsic optoelectronic characteristics of MoS2 phototransistors via a fully transparent van der Waals heterostructure. ACS Nano 13, 9638–9646 (2019).

Kaako, L. G., Barbara, P. F. & Zhu, X.-Y. Intrinsic charge trapping in organic and polymeric semiconductors: a physical chemistry perspective. J. Phys. Chem. Lett. 1, 628–635 (2010).

Jang, H. et al. An atomically thin optoelectronic machine vision processor. Adv. Mater. 32, 2002431 (2020).

Kaltenbrunner, M. et al. An ultra-lightweight design for imperceptible plastic electronics. Nature 499, 458–463 (2013).

Choi, M. K. et al. Wearable red-green-blue quantum dot light-emitting diode array using high-resolution intaglio transfer printing. Nat. Commun. 6, 7149 (2015).

Kang, P., Wang, M. C., Knapp, P. M. & Nam, S. Crumpled graphene photodetector with enhanced, strain-tunable, and wavelength-selective photoresponsivity. Adv. Mater. 28, 4639–4645 (2016).

Yin, Z. et al. Single-layer MoS2 phototransistors. ACS Nano 6, 74–80 (2012).

Akinwande, D., Petrone, N. & Hone, J. Two-dimensional flexible nanoelectronics. Nat. Commun. 5, 5678 (2014).

Xu, R. et al. Vertical MoS2 double-layer memristor with electrochemical metallization as an atomic-scale synapse with switching thresholds approaching 100 mV. Nano Lett. 19, 2411–2417 (2019).

Moon, H. et al. Synthesis of ultrathin polymer insulating layers by initiated chemical vapour deposition for low-power soft electronics. Nat. Mater. 14, 628–635 (2015).

Son, D. et al. Multifunctional wearable devices for diagnosis and therapy of movement disorders. Nat. Nanotechnol. 9, 397–404 (2014).

Kim, J. et al. Stretchable silicon nanoribbon electronics for skin prosthesis. Nat. Commun. 5, 5747 (2014).

Bhowmik, P., Pantho, M. J. H. & Bobda, C. Bio-inspired smart vision sensor: toward a reconfigurable hardware modeling of the hierarchical processing in the brain. J. Real-Time Image Proc. https://doi.org/10.1007/s11554-020-00960-5 (2020).

Bhowmik, P., Pantho, M. J. H. & Bobda, C. Event-based re-configurable hierarchical processors for smart image sensors. 2019 IEEE 30th International Conference on Application-specific Systems, Architectures and Processors pp. 115–122 (IEEE, 2019).

Acknowledgements

This research was supported by IBS-R006-A1. S.N. acknowledges support from NSF (MRSEC DMR-1720633, ECCS-1935775, CMMI-1904216, and DMR-1708852), AFOSR (FA2386-17-1-4071), NASA ECF (NNX16AR56G), and ONR YIP (N00014-17-1-2830). N.R.A. acknowledges support from NSF (OISE-1545907, DMR-1708852, MRSEC DMR-1720633, and CMMI-1921578). A.T. and N.R.A. acknowledge the use of the parallel computing resources: (1) Blue Waters (supported by NSF awards OCI-0725070, ACI-1238993 and the state of Illinois, and as of December 2019, supported by the National Geospatial-Intelligence Agency), and (2) Comet at San Diego Supercomputer Center which is provided by the Extreme Science and Engineering Discovery Environment (XSEDE) (supported by National Science Foundation (NSF) Grant No. OCI1053575) under TG-CDA100010 allocation. C.C. acknowledges support from NASA Space Technology Research Fellow Grant No. 80NSSC17K0149.

Author information

Contributions

C.C., J.L., M.K., A.T., K.W.C., T.H., N.R.A., S.N., and D.-H.K. designed the experiments, analyzed the data and wrote the manuscript. C.C., M.K., and H.S. fabricated cNISA and performed the characterization of individual devices. C.C., J.L., A.T., C.C., H.J.B., and N.R.A. analyzed the MoS2-pV3D3 heterostructure experimentally and theoretically. C.C. performed theoretical analysis on mechanics. G.J.L. and Y.M.S. performed theoretical analysis on optics. All authors discussed the results and commented on the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Communications thanks Geoffrey P McKnight and the other, anonymous reviewer for their contribution to the peer review of this work. Peer reviewer reports are available.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Choi, C., Leem, J., Kim, M. et al. Curved neuromorphic image sensor array using a MoS2-organic heterostructure inspired by the human visual recognition system. Nat Commun 11, 5934 (2020). https://doi.org/10.1038/s41467-020-19806-6
