Abstract

Purpose

To assess a Royston−Parmar flexible parametric survival model to generate a personalised risk profile for keratoconus progression.

Methods

We re-analysed a historic database of 2723 individuals with keratoconus. A Royston−Parmar survival model was fitted to predict the likelihood of the worse eye progressing to corneal transplantation. We used a backwards selection multivariable fractional polynomial procedure to assist with selection of covariates and identify appropriate transformation(s) to retain in the final model. Time-dependent receiver operating characteristic (ROC) curves from censored survival data using the Kaplan−Meier (KM) method were computed to visually assess how well the model identified eyes likely to progress.

Results

In all, 5020 eyes from 2581 patients were available for model development. This included 2378 worst affected eyes, and 313 eyes that progressed to transplantation. The best fitting model [df = 1: Bayes information criterion (BIC) = 1573] included three variables: keratometry [hazard ratio (HR) 0.36: 95% confidence interval (CI) 0.32–0.42], age at baseline [HR 0.97: CI 0.95–0.99] and ethnicity [HR 3.92: CI 2.58–5.95]. Specificity at 1 year was 92.8% (CI 90.4−95.2%) with a corresponding sensitivity of 64.6% (CI 58.9−60.0%). These three prognostic factors account for 41.3% (CI 33.6–48.2%) of the variation among the survival curves.

Conclusion

Researchers should consider the Royston−Parmar model as an alternative to the Cox model. We illustrate the concepts and our results may lead to better tools that identify individuals at high risk of keratoconus progression.

Introduction

Keratoconus is a distortion of the cornea with ectasia and irregular astigmatism that affect vision [1]. The onset of disease is usually in the second or third decade of life with a prevalence in Europe estimated to be between 86 and 265 per 100,000 of the population [2, 3], with a higher prevalence in black, South Asian or other non-European groups than in white Europeans [4, 5]. The rate of progression varies between individuals and the disease is typically asymmetric, with the worse affected eye at the time of diagnosis deteriorating at the fastest rate [6]. As the corneal shape becomes progressively more abnormal, spectacles or rigid contact lenses cannot fully correct vision, which can affect quality of life, education and career development [7]. Approximately 20% of patients with keratoconus eventually require corneal transplantation [8]. However, the cornea normally becomes more rigid with age, such that it is unusual for the corneal shape to deteriorate significantly beyond the age of 40 years.

Corneal collagen cross-linking (CXL) is the only proven intervention that can halt disease progression in keratoconus. It is effective in 98% of treated eyes and may also result in an improvement in corneal shape and visual acuity [9, 10], which may reduce the long-term requirement for corneal transplantation [11]. Complications of CXL can occur and there is an approximately 3% risk that vision will be worse as the result of infection or inflammation [12]. For this reason, CXL is not indicated for stable keratoconus and it is normally only offered to individuals who have had recent disease progression (i.e. steepening of the cornea, corneal thinning, worsening vision) demonstrated after serial monitoring of their eyes over months or years.

If individuals at particular risk of disease progression could be identified at the time of diagnosis, this would influence the decision to offer CXL. Sensitive methods to detect early keratoconus have been developed [13], and risk factors for disease progression have also been identified, including ethnicity, eye rubbing, age at diagnosis, and high corneal astigmatism or more advanced disease at diagnosis [5, 14,15,16]. These risk factors are obtained from time-to-event survival data related to an endpoint, e.g. a defined significant change in corneal shape, or corneal transplantation. The Cox proportional hazards method is frequently used to analyse the effect of individual risk factors (covariates) on the baseline hazard (e.g. survival), but it is semi-parametric and the baseline hazard function is not estimated. Researchers involved in predicting patient survival have been encouraged to consider using the Royston−Parmar flexible parametric model as an alternative to the Cox method as it estimates both baseline hazard and the effect of covariates on the baseline hazard [17]. In this paper we show how this can be applied and how the results may be incorporated in the development of models that predict keratoconus disease progression to corneal transplantation.
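The distinction between the two approaches can be sketched in standard notation (the symbols below are ours and do not appear in the original text). The Cox model leaves the baseline hazard $h_0(t)$ unspecified, whereas the Royston−Parmar model, on the proportional-hazards scale, models the log cumulative hazard as a restricted cubic spline $s(\cdot)$ of log time:

$$
\begin{aligned}
\text{Cox:}\quad & h(t \mid x) = h_0(t)\,\exp(x^\top \beta) \\
\text{Royston−Parmar:}\quad & \ln H(t \mid x) = s(\ln t;\, \gamma) + x^\top \beta, \qquad S(t \mid x) = \exp\{-H(t \mid x)\}
\end{aligned}
$$

When the spline has a single degree of freedom, $s(\ln t;\gamma) = \gamma_0 + \gamma_1 \ln t$ and the model reduces to a Weibull model. Because $H(t \mid x)$ is estimated explicitly, absolute survival probabilities for an individual covariate pattern $x$ follow directly.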

Materials and methods

This study was approved by the Research and Development department of Moorfields Eye Hospital NHS Foundation Trust (ROAD 14/037). We re-analysed a large anonymized database of keratoconus patients who attended the contact lens department of Moorfields Eye Hospital between 1985 and 1992 [16]. A diagnosis of keratoconus was based on the established criteria of the time: corneal steepening on keratometry, irregular astigmatism, corneal thinning, Vogt’s striae, Fleischer rings, and scissoring of the retinoscopic reflex [1]. Digital corneal tomography was unavailable. At their first visit, patient demographics were recorded with the contact lens-corrected distance Snellen visual acuity. The steepest keratometry (Kmax) and flattest keratometry (Kmin) were measured in millimetres (Javal-Schiotz). Keratometry was repeated at each subsequent visit, but patients were not routinely asked to remove their contact lenses for a specific period of time prior to keratometry. The worst affected eye was defined as the eye with the smallest radius of curvature on keratometry (i.e. the steepest cornea); where both eyes of the same patient were equal, the worst eye was identified based on corrected visual acuity. A corneal transplant was typically offered if the visual acuity was 6/18 or less, if the best contact lens fit was unstable or if the patient was intolerant to lenses, although not all patients offered a corneal transplant chose to proceed. Progression was analysed from baseline (first visit) to the date of the corneal transplant. Follow-up was defined as the interval between diagnosis and the last visit. Eyes with a history of trauma or prior corneal surgery were excluded. Corneal astigmatism (Kmax−Kmin) was excluded from the model as we considered that this measurement would have been inaccurate if contact lenses had been worn immediately prior to keratometry. Similarly, we excluded visual acuity as this had been measured with contact lenses, which did not reflect the effect of the underlying corneal shape.

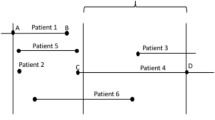

Data were randomly split into a model building data set (75%) and an internal validation data set (25%). This was done by setting a seed and randomly drawing numbers from a uniform distribution, whilst ensuring that the proportion of events was similar in the two data sets. A Royston−Parmar flexible parametric survival model was fitted to the model building data set to predict the probability of the worse eye progressing to corneal transplantation in the validation data set. A backwards selection multivariable fractional polynomial procedure was used to assist with selection of covariates and identify appropriate transformation(s) to retain in the final fully adjusted model. The selection of the final model, and selection of scales and number of degrees of freedom (df) for the baseline function, were guided by the Bayes information criterion (BIC) statistic. Royston and Sauerbrei’s D statistic was used as a measure of discrimination, and $R^2_D$ as a measure of explained variation on the natural scale of the model. To assess model performance, we used the validation set to compute time-dependent receiver operating characteristic (ROC) curves from censored survival data using the Kaplan−Meier (KM) method and area under the receiver operating characteristic (AUROC) curve, sensitivity, specificity, positive predictive value (PPV) and negative predictive value (NPV).
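The seeded, event-balanced split described above could be sketched as follows. This is an illustration only, not the authors' code (the analysis was run in Stata and R): the function name `stratified_split`, the seed value and the toy data are all assumptions made for the example.

```python
import random

def stratified_split(events, train_fraction=0.75, seed=2019):
    """Split record indices into model-building and validation sets,
    keeping the proportion of events similar in both (hypothetical helper)."""
    rng = random.Random(seed)              # fixed seed makes the split reproducible
    train_idx, valid_idx = [], []
    for status in (0, 1):                  # handle censored and event records separately
        idx = [i for i, e in enumerate(events) if e == status]
        draws = {i: rng.random() for i in idx}   # one uniform draw per record
        idx.sort(key=draws.get)                  # order records by their random draw
        n_train = round(train_fraction * len(idx))
        train_idx.extend(idx[:n_train])
        valid_idx.extend(idx[n_train:])
    return sorted(train_idx), sorted(valid_idx)

# Toy data (illustrative only): 1 = corneal transplant observed, 0 = censored
events = [1] * 40 + [0] * 160
train_idx, valid_idx = stratified_split(events)
train_events = sum(events[i] for i in train_idx)
valid_events = sum(events[i] for i in valid_idx)
```

Splitting within each event stratum, rather than over the whole data set, is what keeps the event proportion the same in the two sets.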

Two risk groups were identified by computing and maximising time-dependent sensitivity and specificity values when predicting the likelihood of an event in the model building data set, enabling determination of optimal prognostic index time-dependent cut-off points. KM curves for the two identified risk profiles were constructed by computing model predictions in the internal validation data set, and these were compared with the observed KM curves by visual inspection.
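A minimal sketch of how a cut-off on the prognostic index might be chosen from KM-based time-dependent sensitivity and specificity is given below. It is not the survivalROC implementation used in the study. The exact criterion the authors maximised is not stated, so maximising the sum of sensitivity and specificity is an assumption, and all function names and the toy data are hypothetical.

```python
def km_survival(times, events, t):
    """Kaplan-Meier estimate of S(t); events: 1 = event observed, 0 = censored."""
    surv = 1.0
    event_times = sorted({u for u, e in zip(times, events) if e == 1 and u <= t})
    for u in event_times:
        at_risk = sum(1 for ti in times if ti >= u)
        failed = sum(1 for ti, e in zip(times, events) if ti == u and e == 1)
        surv *= 1.0 - failed / at_risk
    return surv

def sens_spec(index, times, events, cutoff, t):
    """Sensitivity and specificity at horizon t for the rule 'index > cutoff'."""
    high = [i for i, x in enumerate(index) if x > cutoff]
    low = [i for i, x in enumerate(index) if x <= cutoff]
    s_all = km_survival(times, events, t)
    if not high or not low or s_all in (0.0, 1.0):
        return None                        # undefined for this cut-off or horizon
    p_high = len(high) / len(index)
    s_high = km_survival([times[i] for i in high], [events[i] for i in high], t)
    s_low = km_survival([times[i] for i in low], [events[i] for i in low], t)
    sens = (1.0 - s_high) * p_high / (1.0 - s_all)   # P(index > c | event by t)
    spec = s_low * (1.0 - p_high) / s_all            # P(index <= c | no event by t)
    return sens, spec

def best_cutoff(index, times, events, t):
    """Cut-off maximising sensitivity + specificity (assumed criterion)."""
    best = None
    for c in sorted(set(index))[:-1]:      # largest value would leave 'high' empty
        result = sens_spec(index, times, events, c, t)
        if result is not None and (best is None or sum(result) > best[0]):
            best = (sum(result), c)
    return best[1] if best else None

# Toy data (illustrative only): prognostic index, follow-up in years, event flag
index = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
times = [9, 8, 10, 7, 3, 2, 6, 1]
events = [0, 0, 0, 0, 1, 1, 0, 1]
cutoff = best_cutoff(index, times, events, t=5)
sens, spec = sens_spec(index, times, events, cutoff, t=5)
```

Repeating the search at each horizon of interest gives a time-dependent set of cut-off points, which is why the optimal cut-off can differ between follow-up times.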

Analysis was performed in Stata 13 (StataCorp LP, Texas, USA) and flexible parametric models were fitted using the stpm2 command [18]. Time-dependent ROC curves, as well as sensitivity, specificity, PPV and NPV with their respective 95% confidence intervals (CIs), were computed (survivalROC 1.0.3) [19] within R [20]. This study adheres to the TRIPOD guidelines (http://www.tripod-statement.org/) for analysing and reporting prognostic studies [21].

Results

Data from 5163 eyes of 2660 patients were available for analysis. A total of 123 eyes from 70 patients with a history of prior surgery or trauma were excluded. A further 20 eyes from 18 patients who had a corneal transplant prior to their first assessment were also excluded. After further exclusions, 2378 worse eyes were available for analysis, with 1824 eyes that had full time-to-event data, of which 313 eyes had a corneal transplant. These were split into model building and validation sets (Supplementary Fig. 1). Baseline data for the model building and validation datasets are shown in Supplementary Table 1. The median follow-up from first assessment was 3.8 years [interquartile range (IQR): 1.6–7.9] in the model building data set. The follow-up data for model building and validation datasets are summarised in Supplementary Table 2. The significant factors identified in univariable analysis (model building data set) are presented in Table 1.

The best fitting model (df = 1; BIC = 1219) includes three variables: Kmax [hazard ratio (HR) = 0.34: 95% confidence interval (CI) 0.29–0.4], age at baseline [HR 0.97: CI 0.95–0.99] and black African or West Indian ethnicity [HR 3.46: CI 2.07–5.8] (Table 2). Prognostic factors accounted for 41.3% (CI 33.6–48.2%) of the variation among the survival curves. A sensitivity analysis performed using flattest (Kmin) instead of steepest (Kmax) keratometry measurement in the final model yielded a poorer fitting model (df = 1; BIC = 1218), with prognostic factors accounting for less of the variation among the survival curves (34.1%: CI = 26.3−41.4%).
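To illustrate how a model of this form turns covariates into a personalised risk, the sketch below evaluates a one-df (Weibull-form) log cumulative hazard using the hazard ratios reported above. Several things are assumptions made purely for illustration: the baseline coefficients `GAMMA0` and `GAMMA1` and the reference values in `REF` are hypothetical (the source does not report them), and the hazard ratios are assumed to apply per millimetre of Kmax and per year of age without any fractional polynomial transformation. The output is therefore not a clinical estimate.

```python
import math

# Hazard ratios as reported in the text (model building data set)
HR = {"kmax_mm": 0.34, "age_years": 0.97, "black_african_or_west_indian": 3.46}

# Hypothetical baseline spline coefficients and reference patient (NOT from the source)
GAMMA0, GAMMA1 = -3.0, 1.0
REF = {"kmax_mm": 7.0, "age_years": 25.0, "black_african_or_west_indian": 0}

def transplant_risk(t_years, patient):
    """Probability of the event by t_years: 1 - exp(-H(t | x))."""
    # Linear predictor: log hazard ratio times distance from the reference value
    lp = sum(math.log(HR[k]) * (patient[k] - REF[k]) for k in HR)
    # One-df log cumulative hazard: gamma0 + gamma1 * ln(t) + linear predictor
    cum_hazard = math.exp(GAMMA0 + GAMMA1 * math.log(t_years) + lp)
    return 1.0 - math.exp(-cum_hazard)

reference = dict(REF)
steeper = dict(REF, kmax_mm=6.0)      # smaller radius of curvature = steeper cornea
older = dict(REF, age_years=35.0)

risk_ref = transplant_risk(5, reference)
risk_steeper = transplant_risk(5, steeper)
risk_older = transplant_risk(5, older)
```

With these assumptions a steeper cornea raises the predicted 5-year risk and an older age at presentation lowers it, which matches the direction of the reported hazard ratios.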

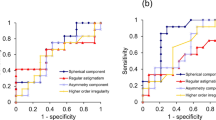

Figure 1 illustrates the estimated survival probabilities and shows what might happen to eyes followed over 10 years across the risk spectrum (validation data set only). Predicted and observed survival plots for the highest and lowest risk groups over 10 years using optimal prognostic index cut-off points at 1, 3 and 10 years (optimal prognostic index cut-off point is identical for 3 and 5 years) are presented in Fig. 2. For patients in the highest-risk group, using the 5-year optimal prognostic index cut-off, the model predicts there is a 40% chance their worse eye will require a corneal graft within 5 years. For patients in the lowest-risk group, the model predicts there is an 8% chance their worse eye will require a corneal graft within 5 years. Table 3 shows that the model has a high specificity for predictions up to 4 years, with a value at 4 years of 96% (CI 94.2−97.8%). Corresponding sensitivities are, however, lower, with a value of 51.2% (CI 46.5−55.8%) at 4 years. Table 3 also shows high negative predictive values, suggesting that a patient who is predicted to be at low risk of progression is unlikely to progress.
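The link between these quantities can be sketched with Bayes' rule. The example below uses the 4-year sensitivity and specificity quoted above. The event probability of 0.15 is a hypothetical value chosen for illustration, since the cumulative incidence at 4 years is not given in the text, so the resulting PPV and NPV are not the values in Table 3.

```python
def predictive_values(sensitivity, specificity, event_probability):
    """Return (PPV, NPV) from sensitivity, specificity and event probability."""
    true_pos = sensitivity * event_probability
    false_pos = (1.0 - specificity) * (1.0 - event_probability)
    true_neg = specificity * (1.0 - event_probability)
    false_neg = (1.0 - sensitivity) * event_probability
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv

# Sensitivity and specificity at 4 years are from the text; 0.15 is hypothetical
ppv, npv = predictive_values(sensitivity=0.512, specificity=0.96,
                             event_probability=0.15)
```

When the event is uncommon and specificity is high, NPV stays high even with modest sensitivity, which is consistent with the pattern described for Table 3.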

The ROC curves (Supplementary Fig. 2) considered with sensitivity and specificity data (Table 3) indicate that AUROC is <1.0 and thus there is a degree of uncertainty of prediction within the model.

Discussion

We have re-examined a longitudinal database of individuals with keratoconus to identify risk factors associated with progression of disease to corneal transplantation [16]. Results show that a steeper cornea at presentation (Kmax), younger age at presentation, and black African or West Indian ethnicity were all associated with an increased risk of progression. The model has an advantage over the Cox model in that it also enabled us to estimate risk when covariates were combined, for a follow-up of 10 years. Overall, these three risk factors accounted for only an estimated 41% of the variation in survival. Further variation may be the result of uncertainty in measurement accuracy for some variables in keratoconus (e.g. corneal shape) [22], and the absence of some biomarkers from the model that we now strongly suspect will predict outcome, such as corrected visual acuity, corneal astigmatism, asymmetry between eyes, the severity of allergic eye disease and eye rubbing, and the genetic background of an individual.

Defining progression of keratoconus remains a challenge, with a lack of consensus among clinicians and researchers [23,24,25]. Since this data set was collected there have been major advances in corneal imaging, with an emphasis on the detection of early keratoconus in candidates for refractive surgery [26], although this has yet to be combined with demographic data that are thought to affect the development of keratoconus. In addition, the advent of CXL now offers a reliable method to prevent progression of early keratoconus [25]. The introduction of CXL therefore means that it is unlikely that a large data set of untreated keratoconus will again become available. In the absence of a modern data set that combines an endpoint for progression (corneal transplantation) and demographic risk factors associated with progression, we have revisited this historic data set to evaluate this alternative mathematical model.

In the original analysis of these data, prognostic factors were identified using a Cox proportional hazards model [16]. Researchers today are encouraged to use parametric models such as the Royston−Parmar flexible parametric survival model [18, 27]. This allows greater flexibility in model fitting, which can provide insight into the behaviour of the hazard function and the hazard ratio over time [17]. Also, in the original analysis, age was dichotomised using 18 years as the cut-off, which suggested that younger patients progressed more rapidly to surgery [16]. However, it is now recommended that age is analysed as continuous rather than dichotomous [28], and our best fit model confirmed that age measured on a continuous scale is a risk factor (HR 0.97: CI 0.95–0.99, p < 0.001). Although the HR appears relatively close to 1, the HR for continuous age indicates the change in the risk of a corneal graft per unit increase (1 year) in age at presentation. This means that for every additional year of a patient’s age at presentation, the risk of a corneal graft falls by 3%, falling by approximately 26% for 10 additional years. The ROC curves (Supplementary Fig. 2) considered with sensitivity and specificity data (Table 3) confirm that there is a degree of uncertainty within the model.
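The 10-year figure follows from compounding the per-year hazard ratio, assuming (as a proportional-hazards model with a linear age term does) that the effect is multiplicative across years:

$$
\mathrm{HR}_{10\ \text{years}} = 0.97^{10} \approx 0.737, \qquad 1 - 0.737 \approx 0.26
$$

The reduction is therefore about 26%, slightly less than the 30% that simply adding ten 3% reductions would suggest.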

Unfortunately, given the variability of decision-making inherent in choosing when to perform corneal transplant surgery, it is unlikely that we can improve the accuracy of the model using this data set. Applying this methodology to a prospective database of young keratoconus patients using a clinically relevant change in serial digital tomography to define progression, and including objective parameters such as visual acuity, would offer greater opportunity to improve the sensitivity and specificity of a model.

The use of corneal transplant surgery as an endpoint for disease progression has limitations. Corneal transplantation is typically the final option for visual rehabilitation of keratoconus when glasses, corneal ring implants or contact lenses fail. However, the decision to proceed with surgery is not always evidence of disease progression: in the original analysis, contact lens intolerance, as distinct from lens instability, was the indication for surgery in 6.8% of eyes [16]. Corneal transplantation may also be deferred for personal reasons or by the clinician if the likelihood of success is poor, for example if the cornea is vascularised. A better definition of progression would be an objective variable such as a deterioration in the shape of the cornea. However, tomography is more reproducible in early keratoconus than in advanced disease [25], and the reliability of corneal topography is further reduced if a contact lens has been worn in the weeks prior to scanning because the lens can mechanically flatten (warp) the cornea [29, 30]. Contact lenses were not removed for any period prior to keratometry in this study. Data on ethnicity were incomplete as it is voluntary for individuals to provide this information. A final drawback to our database was the inclusion of patients >40 years of age in whom keratoconus will almost certainly have stabilised.

In conclusion, the application of flexible parametric models to survival data, as an alternative to a Cox model, will not identify novel covariates but it will improve modelling of time-dependent effects and prediction of outcome. If this methodology is applied to tomography data, which can detect many more parameters than keratometry, with the addition of other biomarkers, it would be possible to improve this model and develop a clinical tool that helps clinicians counsel individuals with keratoconus as to their likely need for corneal cross-linking (CXL). Such a tool would allow closer monitoring and possible earlier intervention to prevent visual loss in those at high risk, while avoiding unnecessary treatment for individuals at low risk of progression.

Summary

What was known before

- For progressive keratoconus, corneal cross-linking can stabilise the corneal shape and prevent further visual loss.

- Early identification of individuals at risk of progression could help identify those who would benefit most from early intervention.

What this study adds

- A Royston−Parmar flexible parametric survival model was fitted to a large patient database as an alternative to a Cox model.

- This methodology could lead to individual risk profiles for patients at the time of their diagnosis of keratoconus.

References

Rabinowitz YS. Keratoconus. Surv Ophthalmol. 1998;42:297–319.

Godefrooij DA, de Wit GA, Uiterwaal CS, Imhof SM, Wisse RP. Age-specific incidence and prevalence of keratoconus: a nationwide registration study. Am J Ophthalmol. 2017;175:169–72.

Nielsen K, Hjortdal J, Aagaard Nohr E, Ehlers N. Incidence and prevalence of keratoconus in Denmark. Acta Ophthalmol Scand. 2007;85:890–2.

Pearson AR, Soneji B, Sarvananthan N, Sandford-Smith JH. Does ethnic origin influence the incidence or severity of keratoconus? Eye. 2000;14:625–8.

Bak-Nielsen S, Ramlau-Hansen CH, Ivarsen A, Plana-Ripoll O, Hjortdal J. A nationwide population-based study of social demographic factors, associated diseases and mortality of keratoconus patients in Denmark from 1977 to 2015. Acta Ophthalmol. 2018. https://doi.org/10.1111/aos.13961. [Epub ahead of print].

Zadnik K, Steger-May K, Fink BA, Joslin CE, Nichols JJ, Rosenstiel CE, et al. Between-eye asymmetry in keratoconus. Cornea. 2002;21:671–9.

Kymes SM, Walline JJ, Zadnik K, Sterling J, Gordon MO; Collaborative Longitudinal Evaluation of Keratoconus Study Group. Changes in the quality-of-life of people with keratoconus. Am J Ophthalmol. 2008;145:611–7.

Lass JH, Lembach RG, Park SB, Hom DL, Fritz ME, Svilar GM, et al. Clinical management of keratoconus. A multicenter analysis. Ophthalmology. 1990;97:433–45.

O’Brart DP, Patel P, Lascaratos G, Wagh VK, Tam C, Lee J, et al. Corneal cross-linking to halt the progression of keratoconus and corneal ectasia: seven-year follow-up. Am J Ophthalmol. 2015;160:1154–63.

Wittig-Silva C, Chan E, Islam FM, Wu T, Whiting M, Snibson GR. A randomized, controlled trial of corneal collagen cross-linking in progressive keratoconus: three-year results. Ophthalmology. 2014;121:812–21.

Godefrooij DA, Gans R, Imhof SM, Wisse RP. Nationwide reduction in the number of corneal transplantations for keratoconus following the implementation of cross-linking. Acta Ophthalmol. 2016;94:675–8.

Koller T, Mrochen M, Seiler T. Complication and failure rates after corneal crosslinking. J Cataract Refract Surg. 2009;35:1358–62.

Mas Tur V, MacGregor C, Jayaswal R, O’Brart D, Maycock N. A review of keratoconus: diagnosis, pathophysiology, and genetics. Surv Ophthalmol. 2017;62:770–83.

Gordon-Shaag A, Millodot M, Kaiserman I, Sela T, Barnett Itzhaki G, Zerbib Y, et al. Risk factors for keratoconus in Israel: a case-control study. Ophthalmic Physiol Opt. 2015;35:673–81.

McMahon TT, Edrington TB, Szczotka-Flynn L, Olafsson HE, Davis LJ, Schechtman KB, et al. Longitudinal changes in corneal curvature in keratoconus. Cornea. 2006;25:296–305.

Tuft SJ, Moodaley LC, Gregory WM, Davison CR, Buckley RJ. Prognostic factors for the progression of keratoconus. Ophthalmology. 1994;101:439–47.

Royston P, Parmar MK. Flexible parametric proportional-hazards and proportional-odds models for censored survival data, with application to prognostic modelling and estimation of treatment effects. Stat Med. 2002;21:2175–97.

Royston P, Lambert PC. Flexible parametric survival analysis using Stata: beyond the Cox model. College Station, TX: Stata Press; 2011.

Heagerty PJ, Zheng Y. Survival model predictive accuracy and ROC curves. Biometrics. 2005;61:92–105.

R Core Team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2014. http://www.R-project.org/. Accessed May 2019.

Collins GS, Reitsma JB, Altman DG, Moons KGM. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): the TRIPOD statement. BJOG. 2015;122:434–43.

Cohen JF, Korevaar DA, Altman DG, Bruns DE, Gatsonis CA, Hooft L, et al. STARD 2015 guidelines for reporting diagnostic accuracy studies: explanation and elaboration. BMJ Open. 2016;6:e012799.

Brown SE, Simmasalam R, Antonova N, Gadaria N, Asbell PA. Progression in keratoconus and the effect of corneal cross-linking on progression. Eye Contact Lens. 2014;40:331–8.

Gore DM, Shortt AJ, Allan BD. New clinical pathways for keratoconus. Eye. 2013;27:329–39.

Flynn TH, Sharma DP, Bunce C, Wilkins MR. Differential precision of corneal Pentacam HR measurements in early and advanced keratoconus. Br J Ophthalmol. 2016;100:1183–7.

Lopes BT, Ramos IC, Salomão MQ, Guerra FP, Schallhorn SC, Schallhorn JM, et al. Enhanced tomographic assessment to detect corneal ectasia based on artificial intelligence. Am J Ophthalmol. 2018;195:223–32.

Miladinovic B, Kumar A, Mhaskar R, Kim S, Schonwetter R, Djulbegovic B. A flexible alternative to the Cox proportional hazards model for assessing the prognostic accuracy of hospice patient survival. PLoS ONE. 2012;7:e47804.

Cumberland PM, Czanner G, Bunce C, Dore CJ, Freemantle N, Garcia-Finana M, et al. Ophthalmic statistics note: the perils of dichotomising continuous variables. Br J Ophthalmol. 2014;98:841–3.

Smolek MK, Klyce SD, Maeda N. Keratoconus and contact lens-induced corneal warpage analysis using the keratomorphic diagram. Invest Ophthalmol Vis Sci. 1994;35:4192–204.

Tsai PS, Dowidar A, Naseri A, McLeod SD. Predicting time to refractive stability after discontinuation of rigid contact lens wear before refractive surgery. J Cataract Refract Surg. 2004;30:2290–4.

Acknowledgements

This study is supported in part by the Department of Health’s National Institute for Health Research (NIHR) Biomedical Research Centre for Ophthalmology at Moorfields Eye Hospital and University College London (UCL) Institute of Ophthalmology; Special Trustees of Moorfields Eye Hospital (ST1507M); Friends of Moorfields (G159). The views expressed in this publication are those of the authors and not necessarily those of the Department of Health.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Quartilho, A., Gore, D.M., Bunce, C. et al. Royston−Parmar flexible parametric survival model to predict the probability of keratoconus progression to corneal transplantation. Eye 34, 657–662 (2020). https://doi.org/10.1038/s41433-019-0554-4
