Abstract

Background

Anthropometric data quality in large multicentre nutrition surveys is seldom adequately assessed. In preparation for the South African National Dietary Intake Survey (NDIS-2022), this study assessed site leads’ and fieldworkers’ intra- and inter-rater reliability for measuring weight, length/height, mid-upper arm circumference (MUAC), waist circumference (WC) and calf circumference (CC).

Methods

Standardised training materials and measurement protocols were developed, and new anthropometric equipment was procured. Following two training rounds (12 site lead teams, 46 fieldworker teams), measurement reliability was assessed for both groups, using repeated measurements of volunteers similar to the survey target population. Reliability was statistically assessed using the technical error of measurement (TEM), relative TEM (%TEM), intra-class correlation coefficient (ICC) and coefficient of reliability (R). Agreement was visualised with Bland-Altman analysis.

Results

By %TEM, the best reliability was achieved for weight (%TEM = 0.260–0.923) and length/height (%TEM = 0.434–0.855), and the poorest for MUAC by fieldworkers (%TEM = 2.592–3.199) and WC (%TEM = 2.353–2.945). Whole-sample ICC and R were excellent (> 0.90) for all parameters except site leads’ CC inter-rater reliability (ICC = 0.896, R = 0.889) and fieldworkers’ inter-rater reliability for MUAC in children under two (ICC = 0.851, R = 0.881). Bland-Altman analysis revealed no significant bias except in fieldworkers’ intra-rater reliability of length/height measurement in adolescents/adults (+0.220 (0.042, 0.400) cm). Reliability was higher for site leads vs. fieldworkers, for intra-rater vs. inter-rater assessment, and for weight and length/height vs. circumference measurements.

Conclusion

NDIS-2022 site leads and fieldworkers displayed acceptable reliability in performing anthropometric measurements, highlighting the importance of intensive training and standardised measurement protocols. Ongoing reliability assessment during data collection is recommended.

Introduction

Anthropometric measurements are generally considered easy to perform, but in reality, accurate and reliable measurement is challenging. Anthropometric data quality in multi-site national Demographic and Health Surveys varies greatly within and between countries [1]. Substandard anthropometric data quality compromises the accuracy of the results (including estimates of population nutrition status), while potentially attenuating or exaggerating associations between anthropometric data and other variables of interest. Furthermore, questionable data quality limits the comparability of data over time, and within and between studies. A recent synthesis of scoping reviews conducted in low- and middle-income countries found wide between-study variation in malnutrition prevalence estimates in school-age children: in South African studies, reported prevalences ranged from 10–37% for stunting, 18–34% for wasting, and 4–81% for thinness [2]. While temporal and sociodemographic differences account for some variation, the possible contribution of inconsistent or inappropriate measurement techniques cannot be ignored.

Reliability (also called repeatability or reproducibility) describes the degree to which repeated measurements of the same (unchanged) parameter yield the same results. Reliability may be assessed for repeated measurements by one measurer (intra-rater reliability) or for measurements of the same parameter by two or more measurers (inter-rater reliability). The degree of variability in repeated measurements is inversely related to reliability, providing the basis for quantitative assessment [3].

Many factors affect the reliability of anthropometric measurements. Equipment-related error can be minimised by using identical high-quality equipment across all sites, preferably newly purchased, and performing daily verification of accuracy and periodic calibration. Consistent, appropriate measurement technique is essential. Measurement reliability can be affected by several aspects of technique, including measurement site (e.g., for waist circumference (WC) [4,5,6]), equipment choice (e.g. types of tape measures) and the exact techniques used (e.g. infant length). In a multi-site study, these factors should be standardised a priori when developing measurement protocols, and fieldworkers trained accordingly [7,8,9,10]. Standardised, uniform fieldworker training should be followed by practical assessment of measurement techniques and statistical assessment of reliability. Apart from fieldworker competence, the ease of taking precise measurements depends on participant factors (e.g. participant cooperation), age and body size [11] and the anthropometric parameter of interest (e.g. weight vs. length/height vs. MUAC) [12]; fieldworker training should therefore cover all measurements in as many anticipated participant types as possible.

This work was conducted in preparation for the multi-site South African National Dietary Intake Survey 2022 (NDIS-2022), which aimed to recruit a nationally representative sample of South African preschoolers, schoolchildren, adults and elderly, with anthropometric data included as indicators of under- and overnutrition in all age groups. Given the aforementioned considerations, evidence that fieldworkers could perform anthropometric measurements with acceptable reliability was considered critical. Following training, such reliability was assumed in previous South African national surveys, but evidence thereof was not documented; post-hoc analysis suggests that anthropometric data quality in these studies was moderate at best [1]. Furthermore, nearly all reports of pre-survey reliability assessment are from high-income country settings, primarily European [3, 13, 14, 15] and North American [16, 17], leaving a conspicuous data gap for low- and middle-income countries, particularly sub-Saharan Africa. Additionally, the reliability of calf circumference (CC) measurements in a multi-site survey has not yet been assessed outside of the National Health and Nutrition Examination Surveys that were used to develop the reference standards [18].

Aim

This study aimed to assess the intra- and inter-rater reliability of site lead anthropometrists and anthropometry fieldworkers for all the anthropometric measurements included in the NDIS-2022 (namely weight, length/ height, MUAC, WC and CC) in volunteers of all the age groups included in the NDIS-2022.

Methods

Data collection in the NDIS-2022 was decentralised to twelve teams working in nine provinces, each managed by a two-person team (one site lead, who was also responsible for fieldworker training, and one coordinator).

Anthropometry training and reliability assessment were based on a newly developed twelve-module training programme (Table S1). To facilitate international comparability of the NDIS-2022 results, protocols were based on World Health Organisation guidelines for anthropometry in children under five [10], the DHS Programme best-practice guidelines for anthropometric data collection in Demographic and Health Surveys [19], and the FANTA Guide to Anthropometry [20]. The training manual was supplemented by PowerPoint presentations, all of which are available in the public domain [21].

Training and standardisation of site lead anthropometrists

Orientation and training of the twelve site leads (plus site coordinators) were conducted by the authors (FW, SN and LvdB) during a two-day, centralised meeting (January 2022). This served as a prototype for the decentralised fieldworker training, which the twelve site leads and coordinators would conduct. Most of the site leads and coordinators were dietitians/ nutritionists with prior training in anthropometry. After working through the training manual and accompanying PowerPoint Presentations, the measurement techniques were demonstrated and practised on a variety of adult, child and infant volunteers. The anthropometric equipment purchased for the main study (Table S2) was used.

For logistical, safety and COVID-19-related reasons, the reliability assessment on adult volunteers was conducted at the training venue and on children under five at an Early Child Development Centre. Trainees worked in pairs to take measurements on three infants (aged 7–14 months), three to four children (aged 3–4 years) and three adults. Measurements were documented on a study-specific form, which was submitted after the first round of measurements to reduce recall bias. After completing one round of measurements on all volunteers, the process was repeated on the same volunteers to yield two measurement sets for each trainee pair.

Based on post-training feedback from the site leads, minor adjustments were made to the study protocol (e.g. minimum requirements for volunteer numbers and attributes, and measurement of WC by a fieldworker of the same sex as the participant).

Training and standardisation of fieldworkers

Site leads and coordinators were responsible for fieldworker training at their respective sites (February 2022), using similar training procedures with minor site-specific adjustments as needed. Eight provincial training sessions were conducted; site trainings were combined where one province contained two study sites (three provinces), or neighbouring provinces had small fieldwork teams (two provinces). Two anthropometry fieldworkers (a lead measurer and an assistant) were appointed to each fieldwork team. In total, 46 pairs of anthropometry fieldworkers were trained (3–11 teams per province, depending on data collection burden; Table S3). Post-training reliability assessment followed procedures similar to the site lead training, with two rounds of measurements completed on the same volunteers. Standardisation volunteers included one or more persons aged 0–1 years, 1–5 years and >12 years (including overweight/ obese adults) to ensure representation of the most challenging measurement conditions. Following preliminary reliability analyses, retraining was required at one site, with markedly lower reliability and an insufficient variety of standardisation volunteers. Only the reliability data collected after the retraining are included in these analyses.

Data management and analysis

All raw data were captured and cleaned in Excel and analysed using R (R Foundation for Statistical Computing, Vienna, Austria) [22]. Both measurements were used to assess intra-rater reliability, while only the first measurement was used to assess inter-rater reliability. Data from volunteers that were measured by only one fieldworker were excluded, as this does not allow for assessment of inter-rater reliability.

Intra- and inter-rater reliability were assessed using the technical error of measurement (TEM), relative TEM (%TEM), coefficient of reliability (R) and intra-class correlation coefficient (ICC) [12, 23,24,25] using the equations shown in Table S4. Reliability statistics were calculated separately for each measured parameter (i.e. weight, length/ height, MUAC, WC and CC), for site leads and fieldworkers, and by volunteer age group (0 ≤ 2 years, 2–12 years and >12 years), where relevant.

The TEM indicates the overall absolute error, expressed in the same units as the measurement being analysed (e.g. kg for weight, cm for height) [24]. The difference between the site leads’ and fieldworkers’ TEM was quantified using an F-statistic [$F = \mathrm{TEM}_{\text{site leads}}^{2} / \mathrm{TEM}_{\text{fieldworkers}}^{2}$], and p-values were calculated with N-1 degrees of freedom. Relative TEM describes the TEM as a percentage of the mean of the measurements to compensate for the correlation between TEM and measurement size [24]. Lower TEM and %TEM values indicate greater reliability. The coefficient of reliability (R) quantifies the proportion of observed between-subject variance that is not attributable to measurement error [24]. The ICC incorporates elements of both correlation and agreement between two sets of measurements [25]. The ICC was calculated using R software packages ‘irr’ [26] and ‘irrNA’ [27], using a one-way random-effects model with the measurement from a single rater as the basis of the assessment [25]. Higher ICC and R values (up to one) indicate greater reliability.
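The statistics described above can be illustrated with a short sketch. This is not the study's analysis script: the exact equations used are those in Table S4 (not reproduced here), and the analysis was run in R with the ‘irr’ and ‘irrNA’ packages. The Python example below assumes the commonly used two-measurement form of TEM, $\sqrt{\sum d^2 / 2N}$, the usual definition $R = 1 - \mathrm{TEM}^2/\mathrm{SD}^2$, and a balanced one-way random-effects, single-rater ICC with no missing values. All function names are hypothetical.

```python
import math
from statistics import mean, variance


def tem_two_measurements(first, second):
    """TEM for paired repeats of the same subjects: sqrt(sum(d^2) / (2N))."""
    if len(first) != len(second) or not first:
        raise ValueError("need two non-empty lists of equal length")
    squared_diffs = [(a - b) ** 2 for a, b in zip(first, second)]
    return math.sqrt(sum(squared_diffs) / (2 * len(first)))


def relative_tem(tem, all_measurements):
    """%TEM: TEM expressed as a percentage of the mean measurement."""
    return 100 * tem / mean(all_measurements)


def coefficient_of_reliability(tem, all_measurements):
    """R = 1 - TEM^2 / SD^2, with SD^2 the sample variance of all measurements."""
    return 1 - tem ** 2 / variance(all_measurements)


def tem_f_ratio(tem_site_leads, tem_fieldworkers):
    """Ratio of squared TEMs; the p-value lookup is deliberately left out."""
    return tem_site_leads ** 2 / tem_fieldworkers ** 2


def icc_oneway_single(ratings):
    """One-way random-effects, single-rater ICC.

    ratings: one list per subject, each holding the same number (k >= 2)
    of measurements. Assumes complete, balanced data (an assumption of
    this sketch; the study used 'irrNA' to handle incomplete designs).
    """
    n, k = len(ratings), len(ratings[0])
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need >= 2 subjects with the same k >= 2 ratings each")
    grand_mean = mean(x for row in ratings for x in row)
    subject_means = [mean(row) for row in ratings]
    # Between-subject and within-subject mean squares from one-way ANOVA.
    ms_between = k * sum((m - grand_mean) ** 2 for m in subject_means) / (n - 1)
    ms_within = sum(
        (x - m) ** 2 for row, m in zip(ratings, subject_means) for x in row
    ) / (n * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


if __name__ == "__main__":
    # Illustrative (made-up) repeated weights in kg for three volunteers.
    round_1 = [10.0, 12.0, 14.0]
    round_2 = [10.2, 11.8, 14.0]
    tem = tem_two_measurements(round_1, round_2)
    pooled = round_1 + round_2
    print("TEM (kg):", round(tem, 3))
    print("%TEM:", round(relative_tem(tem, pooled), 3))
    print("R:", round(coefficient_of_reliability(tem, pooled), 4))
    print("ICC:", round(icc_oneway_single([list(p) for p in zip(round_1, round_2)]), 4))
```

Keeping each statistic in its own function mirrors how they are reported separately per parameter, rater group and age group; a real analysis would additionally need the multi-rater form of TEM and handling of volunteers measured by different numbers of raters.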

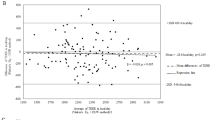

Bland-Altman analysis was used to visualise agreement using the R software package ‘blandr’ [28]. For intra-rater agreement, the difference between repeated measurements (y-axis) was plotted against the mean of the same two measurements (x-axis). For inter-rater agreement, the difference between the first measurement done by the measurer and the mean of all the measurements of the same parameter (y-axis) was plotted against the mean of all the measurements of that parameter (x-axis). The overall bias (with a 95% confidence interval) was calculated as the mathematical mean of the differences (i.e. y-axis values), and the limits of agreement were set at two standard deviations above and below this mean.
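The intra-rater Bland-Altman quantities described above can be sketched as follows. This is a minimal illustration in Python rather than the ‘blandr’ workflow used in the study; the function names and the sample values are hypothetical, and the 95% confidence interval of the bias is omitted because it requires a t-distribution quantile. The limits of agreement are set at two standard deviations, as stated in the text.

```python
from statistics import mean, stdev


def bland_altman(first, second, sd_multiplier=2.0):
    """Return the plot coordinates, bias and limits of agreement.

    first, second: repeated measurements of the same subjects, same order.
    x-axis values are the pairwise means, y-axis values the differences.
    """
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two lists of equal length with >= 2 pairs")
    differences = [a - b for a, b in zip(first, second)]
    means = [(a + b) / 2 for a, b in zip(first, second)]
    bias = mean(differences)
    spread = sd_multiplier * stdev(differences)
    return {
        "means": means,
        "differences": differences,
        "bias": bias,
        "lower_loa": bias - spread,
        "upper_loa": bias + spread,
    }


def share_within_limits(result):
    """Fraction of differences lying inside the limits of agreement."""
    inside = [
        d for d in result["differences"]
        if result["lower_loa"] <= d <= result["upper_loa"]
    ]
    return len(inside) / len(result["differences"])


if __name__ == "__main__":
    # Illustrative (made-up) repeated heights in cm for four volunteers.
    round_1 = [100.0, 120.0, 140.0, 160.0]
    round_2 = [100.2, 119.8, 140.2, 159.8]
    result = bland_altman(round_1, round_2)
    print("bias (cm):", round(result["bias"], 3))
    print("limits of agreement:", round(result["lower_loa"], 3),
          round(result["upper_loa"], 3))
    print("share within limits:", share_within_limits(result))
```

The inter-rater variant described in the text would use the same calculation, with each measurer's first measurement compared against the mean of all measurements of that parameter instead of against a repeat measurement.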

Ethical considerations

Informed consent/ assent was obtained from all participants (and parents/ guardians, where appropriate). The NDIS-2022 received umbrella ethical approval (University of the Western Cape: BM21/4/12). Site-specific ethical approval and institutional permissions were obtained where required.

Results

The reliability assessment was conducted with 15 volunteers for the site leads and 75 volunteers for the fieldworkers (Table S3). Each volunteer was measured by 2–11 (median 4) different measurers. Intra- and inter-rater TEM, %TEM, R and ICC for all anthropometric parameters are shown in Table 1. According to %TEM, both site leads and fieldworkers had the highest reliability for weight (inter-rater %TEM 0.320–0.923; intra-rater %TEM 0.260–0.645) and length/ height (inter-rater %TEM 0.581–0.855, intra-rater %TEM 0.434–0.757). For fieldworkers, MUAC had the poorest reliability (inter-rater %TEM 3.199; intra-rater %TEM 2.592), while site leads performed poorest at WC (inter-rater %TEM 2.945; intra-rater %TEM 2.353). Analysed by age group, fieldworkers’ %TEM was consistently highest (i.e. least reliable) in the 0 ≤ 2 years group.

Site leads’ TEM was significantly lower than that of fieldworkers for weight (inter-rater TEM 0.097 vs. 0.291 kg, p = 0.012; intra-rater TEM 0.075 vs. 0.215 kg, p < 0.001), length/height intra-rater assessment (0.478 vs. 0.851 cm; p = 0.009) and CC intra-rater assessment (0.363 vs. 0.793 cm; p < 0.001). For inter-rater assessment of WC, fieldworkers (TEM 1.745 cm) performed significantly better than site leads (TEM 2.364 cm; p = 0.014).

Whole-sample ICC and R values exceeded 0.90 for all parameters except site leads’ CC inter-rater reliability (ICC = 0.896, R = 0.889). Upon analysis by age group, fieldworkers’ inter-rater ICC and R for MUAC in children 0 ≤ 2 years was also < 0.9 (ICC = 0.851, R = 0.881).

Bland-Altman plots are shown in Figs. 1 and 2 (statistics summarised in Table S5). Statistically significant bias was only seen in fieldworkers’ intra-rater reliability of length/height measurement (0.194 (0.058, 0.330) cm), attributable to significant bias of 0.220 (0.042, 0.400) cm in the > 12 years group. On all plots, > 90% of the measurements fell within the limits of agreement.

Discussion

This paper describes the measurement reliability of anthropometry fieldworkers in the South African NDIS-2022, as assessed after standardised training. The NDIS-2022 was the first South African national multi-site nutrition survey to implement this standardised training and reliability assessment approach. Results suggest a high level of reliability among the site lead anthropometrists and lower (though still acceptable) reliability among fieldworkers. Almost all ICC and R values exceeded 0.9, indicating excellent reliability [25]. However, %TEM indicated lower reliability for MUAC, CC and WC than for weight and length/height.

Few guidelines for acceptable TEM values exist. Absolute TEM is proportionate to the size of the measured value; thus, acceptability thresholds might vary by age group (e.g. infants vs. adults) and measurement (e.g. MUAC vs. length/height). Using %TEM mitigates this by expressing TEM relative to the size of the measurement, which may allow for consistent cutoffs across various measurements. Carsley et al. [16] used %TEM < 2.0 for weight and length measurements in children 0–18 years, which our study achieved in all cases except fieldworkers’ weight measurements in children 0 ≤ 2 years. Though not included in the Carsley et al. study, %TEM for MUAC was > 2 in all groups in our study, suggesting higher measurement variability. In adults, Perini et al. [23] proposed stricter %TEM cutoffs for weight and height, with different standards for beginner (inter-rater %TEM < 2, intra-rater %TEM < 1.5) and experienced (inter-rater %TEM < 1.5, intra-rater %TEM < 1.0) anthropometrists. By this standard, both site leads and fieldworkers had excellent reliability for adult weight and height measurements. Perini et al. did not include MUAC, CC or WC in their guidelines, but the higher %TEM for these measurements suggests greater variability than for weight and height.
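As an illustration of how the cutoffs quoted above could be applied, the following Python sketch checks a %TEM value against the Perini et al. thresholds for adult weight and height. The dictionary layout, function name and input values are hypothetical; only the four threshold values come from the text.

```python
# %TEM cutoffs for adult weight and height as quoted from Perini et al. [23].
PERINI_CUTOFFS = {
    "beginner": {"inter": 2.0, "intra": 1.5},
    "experienced": {"inter": 1.5, "intra": 1.0},
}


def meets_cutoff(pct_tem, assessment, level):
    """True if pct_tem is strictly below the cutoff.

    assessment: "inter" or "intra"; level: "beginner" or "experienced".
    """
    return pct_tem < PERINI_CUTOFFS[level][assessment]


if __name__ == "__main__":
    # Illustrative value only: an intra-rater %TEM of 1.2 would satisfy the
    # beginner standard but not the stricter experienced standard.
    for level in ("beginner", "experienced"):
        print(level, meets_cutoff(1.2, "intra", level))
```

These cutoffs were proposed for weight and height only, so applying them to MUAC, CC or WC would be an extrapolation.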

Previous studies from primarily high-income countries (Table 2) have described inter- and intra-rater reliability of anthropometric measurements in children [13, 15,16,17], adolescents [14, 16], adults [15, 29] and the elderly [3]. Whilst exact results vary, some patterns are evident. Firstly, intra-rater reliability consistently exceeded inter-rater reliability, a pattern that held true in this study. Secondly, weight measurements consistently had the highest reliability, and WC (when measured) the lowest [3, 13, 15, 17]. In this study, the reliability of weight and length/height measurements exceeded that of MUAC, CC and WC. This is consistent with the technical difficulty of circumference measurements as well as the novelty of the techniques – particularly CC, which was novel to all the site leads and fieldworkers.

Site leads displayed better reliability than fieldworkers for most measurements, although TEM was only significantly different for weight (intra- and inter-rater reliability), length/height (intra-rater reliability) and CC (intra-rater reliability). This may be because most of the site leads (unlike fieldworkers) were dietitians/ nutritionists with prior tertiary-level training in anthropometric assessment. Site leads’ psychological investment and sense of ownership as project leaders may also have inspired more meticulous measurement practices. Unexpectedly, the fieldworkers outperformed the site leads for WC measurements, although only inter-rater TEM differed significantly. This underscores the importance of training and standardisation even if anthropometry is done by qualified persons as, for example, institutions differ in terms of WC site identification protocols, resulting in different values [4].

Bland-Altman analyses revealed no statistically significant bias, except in fieldworkers’ intra-rater reliability of height measurement in subjects > 12 years old, though the magnitude of the bias was small (0.22 (0.042–0.400) cm). Visual inspection of Bland-Altman plots shows greater variability of length/ height measurements in subjects < 100 cm tall (i.e. infants and young children), which is consistent with the technical difficulty of measuring length/ height in this age group. No other age-related trends were evident. In general, acceptable group-level measurement agreement was achieved within and between raters for all the pertinent anthropometric parameters, as is relevant for a national study.

The data described here provide statistical evidence of the ability of NDIS-2022 site leads and fieldworkers to perform anthropometric measurements reliably. The use of consistent training materials, standardised measurement protocols, and identical brand-new equipment across all study sites further contributes to anthropometric data quality. Additionally, this study reports the first South African reliability data for adult CC measurement. This study, alongside the publicly available training materials, paves the way for consistent quality standards for anthropometry in future large-scale South African studies, thus contributing to harmonisation and comparability of data over time and across different settings.

Some limitations must be acknowledged. First, we did not assess measurement accuracy (i.e. how closely the measured values approximate the true value). Obtaining true “gold standard” anthropometric measurements requires highly trained and accredited anthropometrists, who were unavailable in this setting. Careful equipment selection with daily verification checks should minimise equipment-related errors, but systematic errors due to suboptimal measurement technique cannot be ruled out. Second, limited numbers of trainees and volunteers in some provinces and age groups increase statistical volatility. The pooling of data from all sites allowed for meaningful statistical analyses but may have obscured some inter-site differences.

In line with international recommendations [1, 10, 19], standardised anthropometric training and reliability assessment should precede any large nutrition survey. This increases confidence in the study results (or, conversely, highlights limitations to consider when interpreting the data). Our experience suggests that two days of training are likely insufficient for fieldworkers with no previous anthropometry experience, and more time should be allotted, particularly for hands-on practice. Adding a pre-training assessment of measurement skills would provide evidence of training effectiveness and allow for refinement of the training approach based on trainees’ strengths and challenge areas. During data collection, ongoing reliability assessment must be incorporated to ensure that data quality is maintained. Finally, statistical evidence of anthropometric data quality should be reported in detail alongside the main study results. For child anthropometry, the guidelines set out by the WHO [10] should be followed, and calculation of the composite index of anthropometric data quality described by Perumal et al. [1] is recommended. Communication of data quality (or the absence thereof), as well as steps taken to identify and manage errors, are prerequisites for ethical and transparent science communication and essential for continued trust in science [30]. This empowers both researchers (by allowing re-analyses of existing data, knowledge synthesis, and study reproduction) and policymakers (by allowing meaningful longitudinal monitoring of population trends) [30].

Conclusion

Using a variety of statistical techniques, this study describes the intra- and inter-rater reliability of anthropometric measurements in the NDIS-2022. It is the first published evidence of its kind for a large-scale multicentre South African survey. Reliable measurement is the basis of anthropometric data quality in nutrition surveys and a prerequisite for valid results. While most of the raw anthropometric data showed acceptable reliability, consistent measurement precision cannot be assumed even for well-established measurements (e.g. infant length, MUAC and WC). This highlights the importance of harmonisation of measurement protocols, intensive pre-survey training and objective and continuous assessment of reliability.

Data availability

Data may be obtained from the authors upon reasonable request.

References

Perumal N, Namaste S, Qamar H, Aimone A, Bassani DG, Roth DE. Anthropometric data quality assessment in multisurvey studies of child growth. Am J Clin Nutr. 2020;112:806S–15S. https://doi.org/10.1093/ajcn/nqaa162.

Wrottesley SV, Mates E, Brennan E, Bijalwan V, Menezes R, Ray S, et al. Nutritional status of school-age children and adolescents in low- and middle-income countries across seven global regions: a synthesis of scoping reviews. Public Health Nutr. 2023;26:63–95. https://doi.org/10.1017/S1368980022000350.

Gómez-Cabello A, Vicente-Rodríguez G, Albers U, Mata E, Rodriguez-Marroyo JA, Olivares PR, et al. Harmonization process and reliability assessment of anthropometric measurements in the Elderly EXERNET multi-centre study. PLoS ONE. 2012;7:e41752. https://doi.org/10.1371/journal.pone.0041752.

Wang J, Thornton JC, Bari S, Williamson B, Gallagher D, Heymsfield SB, et al. Comparisons of waist circumferences measured at 4 sites. Am J Clin Nutr. 2003;77:379–84. https://doi.org/10.1093/ajcn/77.2.379.

Seimon RV, Wild-Taylor AL, Gibson AA, Harper C, McClintock S, Fernando HA, et al. Less waste on waist measurements: determination of optimal waist circumference measurement site to predict visceral adipose tissue in postmenopausal women with obesity. Nutrients. 2018;10:239. https://doi.org/10.3390/nu10020239.

Verweij LM, Terwee CB, Proper KI, Hulshof CTJ, van Mechelen W. Measurement error of waist circumference: gaps in knowledge. Public Health Nutr. 2013;16:281–8. https://doi.org/10.1017/S1368980012002741.

University of Michigan Survey Research Centre. Guidelines for best practice in cross-cultural surveys. 3rd ed. Ann Arbor, MI: Survey Research Center, Institute for Social Research, University of Michigan; 2011. https://ccsg.isr.umich.edu/.

Fortier I, Raina P, Van den Heuvel ER, Griffith LE, Craig C, Saliba M, et al. Maelstrom Research guidelines for rigorous retrospective data harmonization. Int J Epidemiol. 2017;46:103–5. https://doi.org/10.1093/ije/dyw075.

Fortier I, Doiron D, Little J, Ferretti V, L’Heureux F, Stolk RP, et al. Is rigorous retrospective harmonization possible? Application of the DataSHaPER approach across 53 large studies. Int J Epidemiol. 2011;40:1314–28. https://doi.org/10.1093/ije/dyr106.

World Health Organization, United Nations Children’s Fund. Recommendations for data collection, analysis and reporting on anthropometric indicators in children under 5 years old. Geneva: World Health Organization; 2019. https://apps.who.int/iris/handle/10665/324791.

Sonnenschein EG, Kim MY, Pasternack BS, Toniolo PG. Sources of variability in waist and hip measurements in middle-aged women. Am J Epidemiol. 1993;138:301–9. https://doi.org/10.1093/oxfordjournals.aje.a116859.

De Onis M, Onyango AW, Van den Broeck J, Chumlea WC, Martorell R. Measurement and standardization protocols for anthropometry used in the construction of a new international growth reference. Food Nutr Bull. 2004;25:S27–S36. https://doi.org/10.1177/15648265040251S105.

De Miguel-Etayo P, Mesana MI, Cardon G, De Bourdeaudhuij I, Góźdź M, Socha P, et al. Reliability of anthropometric measurements in European preschool children: the ToyBox-study. Obes Rev. 2014;15:67–73. https://doi.org/10.1111/obr.12181.

Nagy E, Vicente-Rodriguez G, Manios Y, Béghin L, Iliescu C, Censi L, et al. Harmonization process and reliability assessment of anthropometric measurements in a multicenter study in adolescents. Int J Obes. 2008;32:S58–S65. https://doi.org/10.1038/ijo.2008.184.

Androutsos O, Anastasiou C, Lambrinou C-P, Mavrogianni C, Cardon G, Van Stappen V, et al. Intra- and inter- observer reliability of anthropometric measurements and blood pressure in primary schoolchildren and adults: the Feel4Diabetes-study. BMC Endocrin Disord. 2020;20:27. https://doi.org/10.1186/s12902-020-0501-1.

Carsley S, Parkin PC, Tu K, Pullenayegum E, Persaud N, Maguire JL, et al. Reliability of routinely collected anthropometric measurements in primary care. BMC Med Res Methodol. 2019;19:84. https://doi.org/10.1186/s12874-019-0726-8.

Li F, Wilkens LR, Novotny R, Fialkowski MK, Paulino YC, Nelson R, et al. Anthropometric measurement standardization in the US-affiliated Pacific: Report from the Children’s Healthy Living Program. Am J Hum Biol. 2016;28:364–71. https://doi.org/10.1002/ajhb.22796.

Gonzalez MC, Mehrnezhad A, Razaviarab N, Barbosa-Silva TG, Heymsfield SB. Calf circumference: cutoff values from the NHANES 1999–2006. Am J Clin Nutr. 2021;113:1679–87. https://doi.org/10.1093/ajcn/nqab029.

The DHS Program. Best Practices for Quality Anthropometric Data Collection at The DHS Program. Rockville, MD: The DHS Program; 2019. https://www.dhsprogram.com/publications/publication-OD77-Other-Documents.cfm.

Cashin K, Oot L. Guide to Anthropometry: A Practical Tool for Program Planners, Managers, and Implementers. Washington, DC: Food and Nutrition Technical Assistance III Project (FANTA)/FHI 360; 2018. https://www.fantaproject.org/tools/anthropometry-guide.

Wenhold FAM, Nel S, Van den Berg V. Hands-On Anthropometry: A South African handbook for large-scale nutrition studies. Training and standardisation manual. Pretoria; 2022. https://www.up.ac.za/centre-for-maternal-fetal-newborn-and-child-healthcare/article/3043272/anthropometry-body-composition-and-growth-assessment.

R Core Team. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing; 2023. https://www.R-project.org/.

Perini T, de Oliviera G, dos Santos Ornellas J, de Oliviera F. Technical error of measurement in anthropometry. Rev Bras Med Esport. 2005;11:86–90. http://www.scielo.br/pdf/rbme/v11n1/en_24109.pdf.

Ulijaszek SJ, Kerr DA. Anthropometric measurement error and the assessment of nutritional status. Brit J Nutr. 1999;82:165–77. https://doi.org/10.1017/S0007114599001348.

Koo TK, Li MY. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J Chiroprac Med. 2016;15:155–63. https://doi.org/10.1016/j.jcm.2016.02.012.

Gamer M, Lemon J, Fellows I, Singh P. irr: Various Coefficients of Interrater Reliability and Agreement. version 0.84.1; 2019. https://CRAN.R-project.org/package=irr.

Breuckl M, Heuer F. irrNA: Coefficients of Interrater Reliability - Generalized for Randomly Incomplete Datasets. 0.2.3; 2022. https://CRAN.R-project.org/package=irrNA.

Datta D. blandr: a Bland-Altman Method Comparison package for R. 2017. https://doi.org/10.5281/zenodo.824514, https://github.com/deepankardatta/blandr.

Geeta A, Jamaiyah H, Safiza M, Khor G, Kee C, Ahmad A, et al. Reliability, technical error of measurements and validity of instruments for nutritional status assessment of adults in Malaysia. Singap Med J. 2009;50:1013–8. http://www.smj.org.sg/article/reliability-technical-error-measurements-and-validity-instruments-nutritional-status.

National Academies of Sciences, Engineering, and Medicine. Reproducibility and Replicability in Science. Washington, DC: The National Academies Press; 2019. https://doi.org/10.17226/25303.

Acknowledgements

The authors gratefully acknowledge the NDIS-2022 planning team for assistance with technical and logistical arrangements. In particular we value the continued support of Rina Swart from the University of the Western Cape. The commitment of the anthropometry site leads deserves special mention.

Funding

The NDIS-2022 was funded by the South African Department of Health (contract: NDOH 45/2018-2019). No funding was received for the development of the training materials, conducting the training or related to publication. Open access funding provided by University of Pretoria.

Author information

Contributions

FW, LvdB and SN planned and executed the research, JdM performed all statistical analyses, all authors contributed to the initial draft and subsequent revisions.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

The NDIS-2022 received umbrella ethical approval (University of the Western Cape: BM21/4/12). Informed consent/ assent was obtained from all participants (and parents/ guardians, where appropriate).

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nel, S., de Man, J., van den Berg, L. et al. Statistical assessment of reliability of anthropometric measurements in the multi-site South African National Dietary Intake Survey 2022. Eur J Clin Nutr (2024). https://doi.org/10.1038/s41430-024-01449-1

DOI: https://doi.org/10.1038/s41430-024-01449-1