Abstract

In 2006, the WHO published a framework for calculating the desired level of fortification of any micronutrient in any staple food vehicle, to reduce micronutrient malnutrition. This framework set the target median nutrient intake of the population consuming the fortified food at the 97.5th percentile of their nutrient requirement distribution; the Probability of Inadequacy (PIA) of the nutrient would then be 2.5%. We argue here that the targeted median nutrient intake should instead be at the Estimated Average Requirement (EAR; 50th percentile), since, in a population that is in homeostasis, the intake distribution will then overlap the requirement distribution, resulting in a PIA of 50%. This is a critical departure from the WHO framework. It is also important to recognize that setting the target PIA at 2.5% may put a sizable proportion of the population at risk of adverse consequences associated with exceeding the tolerable upper limit (TUL) of intake. For a population with different age- and sex-groups, the pragmatic way to fix the fortification level for a staple food vehicle is to achieve a target PIA of 50% in the most deprived age- or sex-group of that population, subject to the condition that only a very small proportion of intakes exceed the TUL. The methods described here will support precision in public health nutrition by providing a pragmatic way to determine the fortification level of a nutrient in a food vehicle, while balancing the risks of inadequacy and excess intake.


Introduction

Owing to a perception of widespread micronutrient deficiency, the WHO [1] suggested food fortification with micronutrients and, in 2006, published guidelines for a stepwise approach to food fortification. This was considered an attractive strategy, as it was thought to be simple and to avoid the need to engage beneficiaries directly in changing their behaviour [2]. The gap in nutrients could be judged, and nutrients in deficit could be added to commonly consumed foods, in doses designed exclusively by experts from the food industry, scientists, and policymakers [2]. There was already considerable experience with this strategy, particularly for iodine [1].

This perspective paper evaluates the WHO-based method for determining the amount of nutrient to be added to a chosen food vehicle (the nutrient gap), particularly for mass or mandatory fortification. Two critical parameters guide the determination of the optimum quantity of micronutrient in food fortification: a) the maximum permissible proportion of the population at risk of inadequate micronutrient intake after consumption of the fortified food; and b) the maximum permissible proportion of the population at risk of excess micronutrient intake after consumption of the fortified food. The WHO recommendation used several metrics to quantitatively derive these parameters and described the process of deciding on a specific fortification level for a specific nutrient [1]. These included the nutrient requirement, defined as the Estimated Average Requirement (EAR; at the time of publication of the WHO report [1], the EAR was not routinely used as a requirement metric), which is the average (50th percentile) value of the distribution of the nutrient requirement in a population; the Recommended Dietary Allowance or the Reference Nutrient Intake (RDA or RNI), which is the 97.5th percentile of the requirement distribution; and the Tolerable Upper Limit of intake (TUL), which is the intake beyond which the risk of adverse effects begins to increase.

The adequacy of the measured daily dietary intake of a nutrient in a population should ideally be assessed by comparison with the measured daily physiological requirement of the nutrient (which includes a growth function for children). However, there is no easy way of directly measuring the physiological nutrient requirement of a population. What is possible to measure is the risk of inadequacy of intake. Here, the measured distribution of nutrient intakes in a population is compared with a known distribution of requirements to yield a measure of the risk of inadequacy. The question for fortification efforts therefore is, what should be the target for the acceptable risk of intake inadequacy after the introduction of the fortified food? This is discussed below.

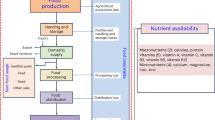

The a priori target of the WHO recommendation [1] for fortification was to reduce the risk of nutrient intake inadequacy to 2–3%. That is, the final intake of the fortified nutrient was to be at least at the EAR level for all except 2–3% of the target population (Fig. 1). This also meant that the median fortified nutrient intake would be at or above the RDA, the 97.5th percentile of the requirement distribution. Dividing the amount of nutrient required to shift the intake distribution to the desired target by the intended intake of the food vehicle would then yield the required food fortification level. Finally, the effect of these micronutrient additions through fortification could be simulated for different age groups. This was all described in an elegant stepwise approach by the WHO [1].

Fig. 1: Intake and requirement distributions of a theoretical nutrient. Under ideal conditions in healthy populations (black line and green dashed line), the intake and requirement distributions overlap, meaning that the proportion of intakes that are less than the estimated average requirement (EAR) will be 50%. The red dashed line is the intake distribution after fortification when the WHO-recommended target, the recommended dietary allowance (RDA), is used. The shaded area is the proportion of the population with fortified intakes that are less than the EAR (2–3%).

However, it is not entirely clear why a 2–3% risk of inadequacy would be chosen a priori as the target for fortification. The fortified nutrient intake might be excessive, pushing the right-hand tail of the intake distribution beyond the TUL, particularly when the latter is relatively low and close to the RDA, as with iron, vitamin A and folic acid. In addition to acute toxic effects, excessive or high intakes may also be associated with risks of later chronic disease. One example is the risk of chronic disease with high ferritin concentrations [3,4,5]. When this occurs, there is not simply a risk of biological harm, but also of economic harm, particularly in Low- and Middle-Income Countries (LMIC) that do not have universal health care systems. This calls for a re-look at the WHO recommendation, including a close examination of the framework and metrics that define the risk of nutrient inadequacy, along with the acceptable target for nutrient fortification, or even supplementation. In this perspective paper, a modified approach to defining nutrient inadequacy and fortification levels is proposed, with a formal derivation provided below, followed by a specific example of iron fortification. This nutrient was chosen because it is particularly challenging to address, as discussed below, and because there is now evidence that high ferritin concentrations are linked to the risk of chronic disease [3,4,5].

The statistical threshold used to define the risk of inadequate dietary intake of a nutrient in a population: The Probability of Inadequacy (PIA)

The population-level risk of an inadequate or adequate nutrient intake is assessed by comparing the distribution of the habitual nutrient intake of the specific population against the requirement distribution in the same population, irrespective of the requirement of any specific individual. This is quantified by evaluating the relative position of the habitual nutrient intake on its requirement scale: the further to the right the intake lies on the requirement scale, the lower the risk of an inadequate habitual nutrient intake in the population.

The risk of an inadequate intake is defined as the probability that the requirement is greater than a given usual intake [6]. If \(Y\) is a random variable denoting the requirement of a nutrient in a given population, then the risk of inadequate intake for a habitual intake \(x\) is defined as [6]

\[ r(x) = P(Y > x) \tag{1} \]

For a sufficiently large representative sample of usual intakes (\(x_i;\ i = 1, \ldots, n\)) of a population, the probability of inadequacy (PIA) in the nutrient intake of the population can be estimated as the average risk of inadequate intake of the individuals in the sample, as follows:

\[ \mathrm{PIA} = \int_0^\infty P(Y > x)\, g(x)\, dx \approx \frac{1}{n}\sum_{i=1}^{n} P(Y > x_i) \tag{2} \]

where \(g(x)\) is the probability density function of a random variable \(X\), which denotes the habitual intake of a nutrient in the population. Although habitual daily intake might reasonably be expected to track the body's normal daily requirement, we assume that the requirement (\(Y\)) and the usual intake (\(X\)) are stochastically independent, as they are estimated from different processes [6]. This assumption allows the PIA to be estimated by Eq. (2), simplifying the general expression

\[ \mathrm{PIA} = P(Y > X) = \int_0^\infty \int_x^\infty f(y, x)\, dy\, dx \tag{3} \]

where \(f(y, x)\) is the joint probability density function of requirement (\(Y\)) and usual intake (\(X\)). Under independence, \(f(y, x) = f_Y(y)\, g(x)\), with \(f_Y\) the probability density function of \(Y\), so the inner integral becomes \(P(Y > x)\) and Eq. (3) reduces to Eq. (2). Thus, the population measure PIA is the arithmetic mean of the risk of inadequate intake across all individuals in the population under consideration. To simplify this further, when the requirement distribution is symmetric (and the variance of requirements is smaller than that of intakes), the PIA can be approximated by the proportion of the population whose intakes are below the median of the requirement distribution, which is the EAR (the EAR cut-point method).
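A minimal sketch of Eq. (2), assuming a normal requirement distribution and using Python's standard-library `statistics.NormalDist`; the function names and the intake and requirement parameters are hypothetical. It contrasts the full probability approach (mean of individual risks) with the EAR cut-point shortcut. With these made-up parameters the requirement variance is not negligible relative to the intake variance, so the two estimates differ slightly.

```python
from statistics import NormalDist


def risk_of_inadequacy(x, requirement):
    """Individual risk r(x) = P(Y > x), Eq. (1), for requirement distribution Y."""
    return 1.0 - requirement.cdf(x)


def pia_probability(intakes, requirement):
    """Eq. (2): PIA as the mean individual risk over a sample of usual intakes."""
    return sum(risk_of_inadequacy(x, requirement) for x in intakes) / len(intakes)


def pia_cut_point(intakes, ear):
    """EAR cut-point shortcut: share of usual intakes below the EAR."""
    return sum(1 for x in intakes if x < ear) / len(intakes)


# Hypothetical nutrient: requirement ~ N(EAR = 15, SD = 3), usual intake ~ N(13, 5), in mg/d.
requirement = NormalDist(mu=15.0, sigma=3.0)
intakes = NormalDist(mu=13.0, sigma=5.0).samples(50_000, seed=1)

print(f"PIA, probability approach: {pia_probability(intakes, requirement):.3f}")
print(f"PIA, EAR cut-point:        {pia_cut_point(intakes, requirement.mean):.3f}")
```

For independent normal distributions, the probability approach converges to \(\Phi\big((\mathrm{EAR} - \mu_X)/\sqrt{\sigma_X^2 + \sigma_R^2}\big) \approx 0.63\) here, while the cut-point converges to \(\Phi\big((\mathrm{EAR} - \mu_X)/\sigma_X\big) \approx 0.66\).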

Interpreting Probability of Inadequacy (PIA) values

The interpretation of PIA, as well as the threshold that should be used to define it, has been a long-standing debate. The PIA measures the risk of inadequacy and much of the confusion around the PIA comes from interpreting this risk as analogous to the presence of definite disease or nutrient deficiency, as happens in classical epidemiology.

In classical epidemiology, individuals are classified as either having or not having a disease/pathology, based on its manifestations, like signs and symptoms. There are, of course, some pathological conditions like anaemia, which are defined solely by a diagnostic cut-off of a blood biomarker like haemoglobin (Hb). That cut-off comes from examining the standard distribution of Hb in an apparently healthy population, selected by stringent inclusion and exclusion criteria. Here, the 5th percentile of the standard distribution is used as the cut-off for diagnosing the presence of anaemia [7].

In the PIA metric, there is some similarity to the classical epidemiological approach. The distribution of the nutrient requirement is equivalent to the standard distribution of the disease biomarker (like Hb above), while the habitual nutrient intake of an individual is equivalent to the measured biomarker concentration in that individual. The similarity ends there. Instead of attempting a diagnosis of a definite disease or nutrient deficiency based on whether the habitual intake is below the 2.5th or 5th percentile of the requirement distribution, the PIA describes the risk of inadequacy. This is the area under the requirement distribution curve that is above the habitual intake of that nutrient. If the habitual nutrient intake is below the 2.5th percentile of the nutrient requirement distribution, then the risk of an inadequate nutrient intake would be more than 97.5%. Only in that case, when the risk of inadequate intake is greater than 97.5%, could a habitual nutrient intake reasonably be considered in the frame of a definitive disease or deficiency/inadequacy metric.

The question could be asked: which metric should be used when evaluating dietary nutrient intakes in a population, the PIA or actual, definitive nutrient intake inadequacy? When everyone's intake and requirement are known (measured), the proportion of definitively deficient individuals can be measured in the population. However, when the requirement is unknown, and only the habitual intake is known along with a distribution of requirements in the population, the PIA metric is superior. It is a truism that a cut-off creates a binary of normal and abnormal that might not actually exist for all practical purposes. Thus, even though all individuals in the neighbourhood of a cut-off may be similar, those on the left of the cut-off are termed abnormal, while those on the right are termed normal. This is despite the fact that, from the clinical perspective, all those in the neighbourhood of the cut-off require equal health attention. The PIA metric is superior because it derives a risk by considering the relative position of a habitual nutrient intake on its requirement scale.

Setting thresholds for adequacy

To understand the ideal ‘threshold of PIA’, assume a hypothetical standard healthy population where the intake of every subject matches their requirement, such that there is no inadequacy. The gap between requirement and habitual intake in the population will be zero. The distribution of habitual intakes must then superimpose on the distribution of requirements, with the critical assumption that the (estimated) requirement distribution truly represents the population requirement. When the PIA metric is applied to derive the individual risk of inadequate nutrient intake, all subjects would exhibit different degrees of risk depending on the location of their habitual intake on the requirement scale, despite there being no intake inadequacy in the population. For example, for an individual whose requirement is at the 2.5th percentile of the requirement distribution, the habitual nutrient intake should also be at the same point, such that there is zero gap between requirement and intake. However, the risk of inadequate intake for that individual would be 97.5%, as the risk is defined as the area under the requirement curve that lies above the habitual intake. Similarly, for an individual from the same hypothetical population whose requirement is at the 97.5th percentile of the requirement distribution, the risk of inadequate intake will be 2.5%, although there is zero gap when that individual's habitual intake matches the 97.5th percentile value. As habitual intakes move upward (toward the right) on the requirement scale, the risk of an inadequate intake decreases. Hence, when the PIA of this hypothetical healthy population, with zero gap between intake and requirement, is derived as the average of all these individual risks, it will be 50% (Fig. 2). Therefore, the threshold of PIA for a population with healthy normal nutrient intakes should be 50%, and the normal healthy condition should be a PIA of ≤50%.

However, it is not advisable to use the PIA metric to assess the habitual intake of an individual. A population with a PIA > 50% will have some degree of risk of nutrient inadequacy, increasing as the PIA increases. Additional terms can be suggested, such as a ‘probability of severe inadequacy’ (PSIA), when the PIA in the population is ≥97.5%, that is, when habitual nutrient intakes are at or below the 2.5th percentile of the requirement scale.
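The 50% figure can also be checked analytically. A short derivation, assuming (as in Fig. 2) that intake \(X\) and requirement \(Y\) are independent and normally distributed; the symbols \(\mu_X, \sigma_X, \mu_Y, \sigma_Y\) are introduced here only for this check:

```latex
\begin{aligned}
Y - X &\sim N\left(\mu_Y - \mu_X,\ \sigma_X^2 + \sigma_Y^2\right), \\
\mathrm{PIA} = P(Y > X) = P(Y - X > 0)
  &= \Phi\left(\frac{\mu_Y - \mu_X}{\sqrt{\sigma_X^2 + \sigma_Y^2}}\right), \\
\text{zero gap } (\mu_X = \mu_Y = \mathrm{EAR},\ \sigma_X = \sigma_Y)
  &\;\Rightarrow\; \mathrm{PIA} = \Phi(0) = 0.5 .
\end{aligned}
```

Normality is not essential: whenever \(X\) and \(Y\) are independent with the same continuous distribution, \(P(Y > X) = 1/2\) by symmetry.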

Fig. 2: Hypothetical symmetric distributions of habitual intake and requirement of a nutrient with zero gap between requirement and intake (overlapping each other). The curve for the individual risk of inadequate intake against habitual intake on the requirement scale is also overlaid. The dotted horizontal line represents the average risk, i.e., the probability of inadequacy (PIA), under this ideal condition of a population.

Which metric should be used as the target nutrient requirement of a population: the EAR or the RDA?

The EAR, which is the 50th percentile, and the RDA, which is the 97.5th percentile of the requirement distribution of a healthy normal population, are the standard metrics used globally. It has been recommended [8] that the RDA should be used for individual dietary recommendations, as the risk of inadequacy for the individual will then be less than 2.5%. The WHO [1] recommends that fortification should increase the habitual intake of the population such that the mean nutrient intake is at or near the RDA, so that the proportion of the population with intakes below the EAR is less than 2.5% (Fig. 1). Thus, based on the PIA, setting the mean population target intake close to the 97.5th percentile of the requirement distribution would mean that, in theory, there will be an excess nutrient intake (whether toxic or not) for 97.5% of the population, which, from a conceptual perspective, may be as unacceptable as having a very low mean population intake. A recent evaluation report on the harmonization of nutrient requirements [9] suggested that the RDA is not a useful metric in public health nutrition and should be dropped from the lexicon of requirements.

The challenge is in balancing the nutrient intake to exactly what is needed (rather than too much), meaning that the intake and requirement distributions should overlap. Thus, efforts to improve nutrient intake, such as diet diversification, fortification, or supplementation, should ideally target a PIA of 50%, as this is the state of a healthy population with no undernutrition, where the intake and requirement distributions overlap. There could be some tolerance on either side of 50%, given uncertainties in defining the EAR and in measuring nutrient intakes. It is important to point out that increasing the population mean nutrient intake beyond the EAR through supplementation or fortification, in the interest of delivering what may be considered a ‘safe intake’ for a population, is not ideal [10]. While intakes beyond the EAR may not result in any immediate dietary toxicity, they are still more than what is required, leading to an imbalance in multiple nutrient intakes, where an excess of one nutrient may in turn require an excess of other nutrients, as can happen for amino acids [11], causing potential metabolic stress and long-term consequences. An imbalance in the dietary zinc:copper ratio (excess zinc) has been reported to aggravate cardiovascular morbidity [12]. These consequences are not trivial, particularly when they relate to non-communicable diseases like diabetes, cardiovascular or liver disease (see below), where the economic consequences are as devastating as the biological effects when out-of-pocket expenses are needed for treatment, as in many LMIC.

The Tolerable Upper Level of intake (TUL) and an Intermediate Upper Level of intake (IUL)

The TUL of a nutrient is derived from toxicological principles, based on the manifestation of toxicity with increasing nutrient intake, to ensure high levels of safety. However, it is dependent on clinical reports of acute toxicity, and is a fragile estimate with high uncertainty. It is also important to point out that many nutrients have pleiotropic effects, and adversity may not be the occurrence of acute organ damage. For example, with iron, an excess could also imply an increased risk of other conditions [3,4,5], which may manifest well before clinical toxicity (see below). This implies that the safety of supplementary efforts to increase population nutrient intakes should not be guided solely by the TUL. This is not to say that the TUL has limited use in nutrition. The TUL could be a therapeutic limit for short-term nutrient supplementation in clinical interventions. However, it perhaps should not be used to signify safety for long-term unsupervised interventions such as food fortification.

It is difficult to define safety, or a TUL, from a perspective that includes all potential direct and indirect adverse outcomes, including the risk for other diseases. Therefore, it might be prudent to propose an alternative metric that does not depend on sporadic reports of individual toxicity. One possibility is to use a value at the extreme right tail of the population nutrient requirement distribution, for example the ‘mean + 3 SD’ value, which could be called an Intermediate Upper Limit (IUL) of intake. The IUL will depend on the requirement distribution, which varies by sex and age. For iron, the IUL is 24 mg/d and 42 mg/d for adult men and women, respectively, based on their requirement distributions. Given different IUL values for different age- and sex-groups, it might be conservative to use the highest IUL value for any given age-group. This is a data-driven suggestion for safety in prolonged and unsupervised public health nutrition programs, but it needs validation.
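A minimal sketch of the ‘mean + 3 SD’ IUL, assuming the requirement SD is recovered from the EAR and RDA as (RDA − EAR)/1.96, the same conversion used for \(\sigma_R\) in the gap calculation below. For a skewed requirement it is assumed, by analogy, that the calculation is done on the log scale. The function names and the EAR and RDA values are hypothetical; they are not the Indian reference values behind the 24 and 42 mg/d figures.

```python
import math

Z_975 = 1.96  # standard normal quantile for the 97.5th percentile (RDA)


def iul_symmetric(ear, rda, k=3.0):
    """IUL = mean + k*SD of a normal requirement distribution whose mean is the EAR."""
    sd = (rda - ear) / Z_975
    return ear + k * sd


def iul_log_scale(ear, rda, k=3.0):
    """Assumed log-scale analogue: exp(log(EAR) + k * log-scale SD)."""
    sigma_log = math.log(rda / ear) / Z_975
    return math.exp(math.log(ear) + k * sigma_log)


# Hypothetical requirement metrics for one age-sex group (mg/d).
ear, rda = 10.0, 16.0
print(f"IUL (symmetric requirement): {iul_symmetric(ear, rda):.1f} mg/d")
print(f"IUL (log-scale requirement): {iul_log_scale(ear, rda):.1f} mg/d")
```

Setting `k=1.96` returns the RDA itself, which is a quick sanity check on the SD conversion.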

A proposed method for defining the ‘nutrient gap’ for targeting through dietary diversification, fortification or supplementation

The first step in deriving the nutrient intake gap, for the purpose of informing programs to improve dietary nutrient intake through diversification, supplementation or fortification, is to estimate the real inadequacy of the nutrient intake in the population. This is estimated as an ‘excess inadequacy’ (EIA) over the normal PIA expected at the population level, which, as explained earlier, should be 50%:

\[ \mathrm{EIA} = \mathrm{PIA} - 50\% \tag{4} \]

For the sake of simplicity, the distribution of the habitual nutrient intake of any population can be assumed to be either a normal probability distribution if it is bell-shaped, or a log-normal distribution if it is positively skewed. A negatively skewed habitual nutrient intake distribution is unlikely. For the purpose of defining the daily dose of supplementation or fortification, the additional amount of nutrient (δ) that should be added to the habitual intake of each person in a population needs to be defined, such that EIA ≤ 0. The ideal target EIA is 0%. The nutrient gap (γ) in the habitual intake, which should be filled by dietary diversification, supplementation or fortification, is then defined by

\[ \gamma = \min\{\delta \ge 0 : \mathrm{EIA}(\delta) \le 0\} \tag{5} \]

where \(\mathrm{EIA}(\delta)\) is the EIA computed for the shifted habitual intake \(X + \delta\).

Computation of γ when the distribution of habitual intake (X) is bell-shaped

If the habitual nutrient intake (\(X\)) has a bell-shaped distribution, it is assumed to be a normal probability distribution, with \(\mu\) as the mean and \(\sigma\) as the standard deviation. The new habitual intake after adding \(\delta\) (i.e., \(X^\ast(\delta) = X + \delta\)) will also be a normal probability distribution, with mean \(\mu + \delta\) and standard deviation \(\sigma\).

The following steps illustrate an iterative approach to deriving a value for γ:

Step 1: Choose a range of values for \(\delta = \{\delta_1 < \delta_2 < \ldots < \delta_m\} \in (0, \mathrm{RDA} - \mu)\)

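Step 2: For each \(\{\delta_k;\ k = 1, 2, \ldots, m\}\), simulate a random sample from the normal distribution of intakes with \((\text{mean} = \mu + \delta_k,\ \text{SD} = \sigma)\), say \(\{X_1^\ast(\delta_k), X_2^\ast(\delta_k), \ldots, X_n^\ast(\delta_k)\}\); \(n\) should be very large, such as \(n = 10^5\). Then, when the requirement distribution is symmetric, the EIA can be derived as

\[ \mathrm{EIA}(\delta_k) = \frac{1}{n}\sum_{i=1}^{n}\left[1 - \Phi\left(\frac{X_i^\ast(\delta_k) - \mu_R}{\sigma_R}\right)\right] - 0.5 \tag{6} \]

where \(\mu_R = \mathrm{EAR}\) and \(\sigma_R = \frac{\mathrm{RDA} - \mathrm{EAR}}{1.96}\), the RDA being the +2 SD (97.5th percentile) value of the requirement distribution. When the requirement distribution is positively skewed, Eq. (6) is modified to

\[ \mathrm{EIA}(\delta_k) = \frac{1}{n}\sum_{i=1}^{n}\left[1 - \Phi\left(\frac{\log X_i^\ast(\delta_k) - \mu_R}{\sigma_R}\right)\right] - 0.5 \tag{7} \]

where \(\mu_R = \log(\mathrm{EAR})\) and \(\sigma_R = \frac{\log(\mathrm{RDA}/\mathrm{EAR})}{1.96}\), and \(\Phi(\cdot)\) is the cumulative distribution function of a standard normal distribution.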

Step 3: Repeat Step 2 for successive \(k\) until \(\mathrm{EIA}(\delta_{k-1}) > 0\) and \(\mathrm{EIA}(\delta_k) \le 0\); then \(\gamma = \delta_k\).
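Steps 1–3 can be sketched in Python as below, for normally distributed habitual intakes and a symmetric requirement distribution (Eq. (6)). This is a sketch under stated assumptions: the grid step, sample size (smaller than the text suggests, to keep it quick), seed and function names are hypothetical choices. One set of standard normal draws is reused for every \(\delta_k\) (common random numbers), which is equivalent to drawing from \(N(\mu + \delta_k, \sigma)\) but keeps successive EIA values directly comparable. The grid also includes \(\delta = 0\), so a group already at EIA ≤ 0 returns a gap of zero.

```python
from statistics import NormalDist

PHI = NormalDist().cdf  # standard normal CDF, Phi(.)


def eia_symmetric(intakes, ear, rda):
    """Eq. (6): mean individual risk minus 0.5, for a normal requirement distribution."""
    mu_r, sigma_r = ear, (rda - ear) / 1.96
    risks = [1.0 - PHI((x - mu_r) / sigma_r) for x in intakes]
    return sum(risks) / len(risks) - 0.5


def gap_normal_intake(mu, sigma, ear, rda, step=0.1, n=20_000, seed=1):
    """Smallest delta on a grid over [0, RDA - mu) with EIA(delta) <= 0, else None."""
    z = NormalDist().samples(n, seed=seed)  # standard normal draws, reused for every delta
    k = 0
    while k * step < rda - mu:
        delta = k * step
        intakes = [mu + delta + sigma * zi for zi in z]  # sample from N(mu + delta, sigma)
        if eia_symmetric(intakes, ear, rda) <= 0.0:
            return delta  # first grid value at which EIA drops to zero or below
        k += 1
    return None  # the gap is not closed within the searched range


# Hypothetical group: habitual intake ~ N(12, 4) mg/d; EAR = 15, RDA = 21 mg/d.
gamma = gap_normal_intake(mu=12.0, sigma=4.0, ear=15.0, rda=21.0)
print(f"gamma ~ {gamma:.1f} mg/d")
```

Because both distributions are normal here, EIA(δ) ≤ 0 exactly when μ + δ ≥ EAR, so the search should return a value close to EAR − μ = 3 mg/d; that closed form is a useful check on the simulation.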

Computation of γ when the distribution of habitual intake (X) is positively skewed

If the habitual intake distribution (\(X\)) is positively skewed, it is assumed to be a log-normal probability distribution, with \(\mu\) as the log-scale mean and \(\sigma\) as the log-scale standard deviation of the habitual intakes of the population. The new habitual intake after adding \(\delta\) (i.e., \(X^\ast(\delta) = X + \delta\)) is, strictly, a shifted log-normal; it is approximated by a log-normal probability distribution with the same mean and variance as \(X + \delta\), that is, with

log-scale mean \(\mu(\delta) = \log\left[E(X) + \delta\right] - \frac{\sigma^2(\delta)}{2}\), and

log-scale standard deviation \(\sigma(\delta) = \sqrt{\log\left[1 + \frac{\mathrm{Var}(X)}{\left(E(X) + \delta\right)^2}\right]}\), where \(E(X) = e^{\mu + \sigma^2/2}\) and \(\mathrm{Var}(X) = (e^{\sigma^2} - 1)\,[E(X)]^2\) are the expectation and variance of a log-normal distribution with log-scale mean \(\mu\) and log-scale standard deviation \(\sigma\).
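A small helper for the moment-matched parameters above (the function name and example values are hypothetical). The checks confirm the two properties the approximation is built on: the matched log-normal has mean \(E(X) + \delta\) and variance \(\mathrm{Var}(X)\), and it reduces to the original \((\mu, \sigma)\) when \(\delta = 0\).

```python
import math


def shifted_lognormal_params(mu, sigma, delta):
    """Log-scale mean and SD of the log-normal matched to the mean and variance of X + delta,
    where X is log-normal with log-scale mean mu and log-scale SD sigma."""
    mean_x = math.exp(mu + sigma ** 2 / 2)             # E(X)
    var_x = (math.exp(sigma ** 2) - 1) * mean_x ** 2   # Var(X)
    sigma_d = math.sqrt(math.log(1 + var_x / (mean_x + delta) ** 2))
    mu_d = math.log(mean_x + delta) - sigma_d ** 2 / 2
    return mu_d, sigma_d


# Hypothetical habitual intake: median 10 mg/d, log-scale SD 0.4; add delta = 5 mg/d.
mu, sigma, delta = math.log(10.0), 0.4, 5.0
mu_d, sigma_d = shifted_lognormal_params(mu, sigma, delta)

# Moments of the original X and of the matched log-normal, for comparison.
mean_x = math.exp(mu + sigma ** 2 / 2)
var_x = (math.exp(sigma ** 2) - 1) * mean_x ** 2
matched_mean = math.exp(mu_d + sigma_d ** 2 / 2)
matched_var = (math.exp(sigma_d ** 2) - 1) * matched_mean ** 2
print(f"mean: {matched_mean:.4f} vs E(X) + delta = {mean_x + delta:.4f}")
print(f"variance: {matched_var:.4f} vs Var(X) = {var_x:.4f}")
```

Note that adding a constant leaves the variance unchanged but shrinks the log-scale SD, so the shifted intake distribution is less skewed than the original.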

The following steps illustrate an iterative approach to deriving a value for γ in this circumstance:

Step 1: Choose a range of values for \(\delta = \{\delta_1 < \delta_2 < \ldots < \delta_m\} \in (0, \mathrm{RDA} - E(X))\)

Step 2: For each \(\{\delta_k;\ k = 1, 2, \ldots, m\}\), simulate a random sample from the log-normal distribution of intakes with \((\text{log-scale mean} = \mu(\delta_k),\ \text{log-scale SD} = \sigma(\delta_k))\), say \(\{X_1^\ast(\delta_k), X_2^\ast(\delta_k), \ldots, X_n^\ast(\delta_k)\}\); \(n\) should be very large, such as \(n = 10^5\). Then \(\mathrm{EIA}(\delta_k)\) can be derived by Eq. (6) if the nutrient requirement distribution is symmetric; otherwise Eq. (7) is used.

Step 3: Repeat Step 2 for successive \(k\) until \(\mathrm{EIA}(\delta_{k-1}) > 0\) and \(\mathrm{EIA}(\delta_k) \le 0\); then \(\gamma = \delta_k\).
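Combining the moment-matched parameters with Eq. (7) gives a sketch of Steps 1–3 for skewed intakes; Eq. (6) is used instead when the requirement is symmetric. As before, the grid step, sample size, seed and function names are hypothetical, the same standard normal draws are reused for every δ, and the upper end of the search range is taken here as RDA − E(X), an assumption made so that both ends of the range are on the intake scale.

```python
import math
from statistics import NormalDist

PHI = NormalDist().cdf  # standard normal CDF


def shifted_lognormal_params(mu, sigma, delta):
    """Moment-matched log-scale mean and SD for X + delta (X log-normal)."""
    mean_x = math.exp(mu + sigma ** 2 / 2)
    var_x = (math.exp(sigma ** 2) - 1) * mean_x ** 2
    sigma_d = math.sqrt(math.log(1 + var_x / (mean_x + delta) ** 2))
    return math.log(mean_x + delta) - sigma_d ** 2 / 2, sigma_d


def eia(intakes, ear, rda, skewed_requirement):
    """Eq. (7) for a log-normal requirement, Eq. (6) for a symmetric one."""
    if skewed_requirement:
        mu_r, sigma_r = math.log(ear), math.log(rda / ear) / 1.96
        risks = [1.0 - PHI((math.log(x) - mu_r) / sigma_r) for x in intakes]
    else:
        mu_r, sigma_r = ear, (rda - ear) / 1.96
        risks = [1.0 - PHI((x - mu_r) / sigma_r) for x in intakes]
    return sum(risks) / len(risks) - 0.5


def gap_lognormal_intake(mu, sigma, ear, rda, skewed_requirement=True,
                         step=0.1, n=20_000, seed=1):
    """Smallest grid delta with EIA(delta) <= 0, searching [0, RDA - E(X)); else None."""
    z = NormalDist().samples(n, seed=seed)       # standard normal draws, reused for every delta
    upper = rda - math.exp(mu + sigma ** 2 / 2)  # assumed upper bound of the search range
    k = 0
    while k * step < upper:
        delta = k * step
        mu_d, sigma_d = shifted_lognormal_params(mu, sigma, delta)
        intakes = [math.exp(mu_d + sigma_d * zi) for zi in z]  # matched log-normal sample
        if eia(intakes, ear, rda, skewed_requirement) <= 0.0:
            return delta
        k += 1
    return None


# Hypothetical group: intake median 10 mg/d (log-scale SD 0.4); EAR = 15, RDA = 25 mg/d.
gamma = gap_lognormal_intake(mu=math.log(10.0), sigma=0.4, ear=15.0, rda=25.0)
print(f"gamma ~ {gamma:.1f} mg/d")
```

With log-normal intakes and a log-normal requirement, EIA(δ) ≤ 0 exactly when μ(δ) ≥ log(EAR), that is, when the matched median intake reaches the EAR; for these made-up numbers that happens near δ ≈ 4.8 mg/d, which the simulated search should reproduce to within about the grid step.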

A proposed method for ‘filling the gap’ by fortification, by setting nutrient fortification levels in the chosen food fortification vehicle

The distribution of nutrient requirements and their habitual intakes varies across age- and sex-specific groups. Therefore, the estimated nutrient gap (γ) in the intake will be different for different age and sex strata. However, the challenge is that the fortification level of any nutrient in any specific food vehicle must be uniform for all age-, sex- and socioeconomic status (SES)-specific groups, for the ease and feasibility of implementing a fortification policy for any population. Therefore, a fortifying ‘amount’ of nutrient must be selected that can reduce the EIA of all age- and sex-specific strata to a value close to zero, and simultaneously ensure that the probability of excess intake with respect to the TUL does not exceed 1% (a subjective threshold that should be set by scientific consensus).

In the following, a possible method is suggested for deriving a single fortification level from the group-specific values of γ, which can apply to all groups in a population. After γ has been derived for all age- and sex-groups, the group with the highest γ value is chosen. To estimate the fortification level of a chosen food vehicle, this highest γ value (gap) is then divided by the median intake of the food vehicle in that group, giving an initial estimate of the desirable nutrient fortification level.

The next step is to multiply this estimate of the fortification level by the median intake of the same food in each of the other population groups. This gives the group-specific amount of nutrient that fortification of that food vehicle at that level will provide. Finally, taking that group-specific amount of nutrient as the δ value, the EIA for each group should be recalculated using Eq. (6) or Eq. (7). The required EIA is ≤0%; as it may not be possible to achieve this, some tolerance should be defined, such as an EIA of ≤5–10%. Thus, the method to fix the fortification level for a staple food vehicle is to choose a level that will achieve a PIA of 50% in the most nutrient-deprived population group, subject to the condition that only a very small proportion of fortified intakes will exceed the TUL, as described below.
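The fortification-level rule can be sketched as a short helper; the function name, group labels and data structure are hypothetical, the gaps would come from the γ search described earlier, and the recalculated EIA and REI checks are left to the equations in the text. Only the 7.5 mg/d gap and 221 g/d cereal intake of 16–17-year-old girls echo the iron example later in this paper; the other groups are invented.

```python
def fortification_level(groups):
    """Return (worst group, level in mg per 100 g of vehicle, group-specific delta in mg/d).

    groups maps a group name to (gap_mg_per_day, median_vehicle_intake_g_per_day).
    """
    worst = max(groups, key=lambda name: groups[name][0])  # group with the highest gap
    gap, vehicle_g = groups[worst]
    level = gap / vehicle_g * 100.0                        # mg of nutrient per 100 g of vehicle
    deltas = {name: level * v / 100.0 for name, (_, v) in groups.items()}
    return worst, level, deltas


# Only the girls' gap and cereal intake echo the worked example; the rest are invented.
groups = {
    "girls 16-17 y": (7.5, 221.0),
    "adult men": (1.0, 420.0),
    "children 4-6 y": (2.0, 160.0),
}
worst, level, deltas = fortification_level(groups)
print(worst, round(level, 2))
for name, delta in deltas.items():
    # each delta would then be fed into Eq. (6)/(7) for the EIA and into the REI check
    print(f"{name}: delta = {delta:.2f} mg/d")
```

Groups with a high vehicle intake but a small gap (here the invented adult men) receive the largest δ, which is exactly where the excess-intake check matters most.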

The burden of excess intake (>TUL) of each group should be assessed after adding the group-specific amount of fortified nutrient to their respective habitual intakes. This could also be defined against the IUL if such a value existed. For a given habitual nutrient intake (\(X\)) of a group, the risk of excess intake (REI) can be defined by

\[ \mathrm{REI} = P(X > \mathrm{TUL}) \tag{8} \]

When \(X\) is normally distributed with mean \(\mu\) and standard deviation \(\sigma\), \(\mathrm{REI} = 1 - \Phi\left(\frac{\mathrm{TUL} - \mu}{\sigma}\right)\); otherwise, if \(X\) is log-normally distributed with log-scale mean \(\mu\) and log-scale standard deviation \(\sigma\), \(\mathrm{REI} = 1 - \Phi\left(\frac{\log(\mathrm{TUL}) - \mu}{\sigma}\right)\). If the fortification nutrient amount \(\delta\) is added to the habitual intake, the REI for a normal distribution will be

\[ \mathrm{REI}(\delta) = 1 - \Phi\left(\frac{\mathrm{TUL} - (\mu + \delta)}{\sigma}\right) \tag{9} \]

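and for a log-normal distribution it will be

\[ \mathrm{REI}(\delta) = 1 - \Phi\left(\frac{\log(\mathrm{TUL}) - \mu(\delta)}{\sigma(\delta)}\right) \tag{10} \]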

where \(\mu(\delta)\), \(\sigma(\delta)\) and \(\Phi(\cdot)\) are as defined above. Here, the fortification level should be adjusted subject to \(\mathrm{REI}(\delta) < 0.005\), or an acceptable value defined a priori.
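The REI expressions above translate directly into code; below is a minimal sketch using the standard-library `statistics.NormalDist` for \(\Phi(\cdot)\). The function names and the example group's numbers are hypothetical, and the log-normal branch relies on the same moment-matching approximation for \(X + \delta\) used in the gap calculation.

```python
import math
from statistics import NormalDist

PHI = NormalDist().cdf  # standard normal CDF


def rei_normal(mu, sigma, tul, delta=0.0):
    """Risk of excess intake P(X + delta > TUL) for X ~ N(mu, sigma)."""
    return 1.0 - PHI((tul - (mu + delta)) / sigma)


def rei_lognormal(mu, sigma, tul, delta=0.0):
    """REI for log-normal X (log-scale mu, sigma), via the moment-matched X + delta."""
    mean_x = math.exp(mu + sigma ** 2 / 2)
    var_x = (math.exp(sigma ** 2) - 1) * mean_x ** 2
    sigma_d = math.sqrt(math.log(1 + var_x / (mean_x + delta) ** 2))
    mu_d = math.log(mean_x + delta) - sigma_d ** 2 / 2
    return 1.0 - PHI((math.log(tul) - mu_d) / sigma_d)


# Hypothetical group: intake median 12 mg/d (log-scale SD 0.35), TUL 40 mg/d,
# with 8 mg/d added by fortification; compared against an a priori limit of 0.005.
rei = rei_lognormal(mu=math.log(12.0), sigma=0.35, tul=40.0, delta=8.0)
print(f"REI = {rei:.4f}; acceptable: {rei < 0.005}")
```

Swapping the TUL for an IUL, where one is defined, needs no change to the code.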

An example: Calculation of the fortification level of a food staple for iron fortification

A practical demonstration of the considerations detailed above is presented with respect to iron nutriture and the possibility of fortifying cereals with iron. It should be noted that this example considers a single source of fortified food, that is, only one staple fortified at any time. This is critical because multiple foods, like rice, wheat, or salt, could be fortified with the same nutrient, creating a danger of ‘layering’, or cumulative addition, of the fortified nutrient intake, with magnified risks of an excess intake [10].

To provide this demonstration, a random dataset of the average daily habitual intakes of iron and cereals across different age and sex groups was generated from the Indian National Nutrition Monitoring Bureau (NNMB 2012) survey [13]. It should be emphasized that this is a dummy dataset, and the results calculated from it do not reflect the true state of dietary iron intake in India.

The first step is identifying country-specific values for the EAR and TUL, which, as defined for India [14], are listed for iron in Table 1 according to age- and sex-group. Next, the distribution of habitual daily iron intake is calculated; this is depicted on a log scale in Supplementary Fig. 1 for age, sex, and SES groups, as it was a skewed distribution (as is expected for iron intake). The PIA is then calculated for each group using the listed EAR and the iron intake distribution, allowing the PIA to be mapped across groups (not shown).

The calculated iron intake gap (γ) values are reported in Table 1. The highest gap, 7.5 mg/d against a requirement (EAR) of 18 mg/d, was estimated for 16–17-year-old girls of medium and low SES. Since the chosen food staple is cereal (rice), the median cereal intake of each group is also provided in Table 1; for 16–17-year-old girls of medium and low SES (the groups with the highest iron intake gap), it was 221 g/d and 229 g/d, respectively. Based on the gap and the food vehicle intake, the fortification level of iron in cereal for these groups was estimated at ~3.5 mg/100 g.

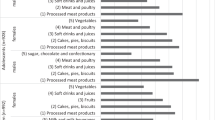

Next, the PIA was recalculated for each group, this time with the increased intake of iron afforded by fortified cereal, based on each group's median cereal intake (Table 1). The PIA of all the groups was close to the acceptable value of 50% (Fig. 3A), with the target EIA near zero. However, the REI (excess intake) crossed 1% among adult males (Fig. 3B). Given that there is some uncertainty in the TUL estimate, and that the REI was only a little over 1%, one could consider ignoring this value; however, this needs to be decided a priori. As a conservative suggestion, it may be desirable to keep safety paramount and not allow the REI to exceed, say, 2.5% in any condition. Thus, a balance between the EIA and the REI should be achieved for all age- and sex-specific groups, with legitimate compromises on either side of the risk, considering the different layers of uncertainty introduced in this computation process.

Fig. 3: A Probability of inadequacy (PIA) across age-, sex- and socioeconomic status (SES) groups after intake of fortified cereal, with a fortification level of 3.5 mg iron/100 g of cereal. Note that all PIA values are in the acceptable range. B Risk of excess intake (REI) across age-, sex- and SES groups after intake of fortified cereal, with a fortification level of 3.5 mg iron/100 g of cereal. The suffixes ‘G’ and ‘B’ on the age groups refer to ‘girls’ and ‘boys’.

Alternatively, one could follow the current WHO recommendation for fortification: that the target PIA should be 2.5% (Fig. 1, red dashed distribution curve), meaning that the median nutrient intake of a population after fortification should equal the +2 SD value (RDA) of the nutrient requirement distribution. This recommendation was therefore also implemented, in a further exercise with the same dataset. Since the target intake was at the +2 SD value of the requirement distribution, the calculated iron intake gaps were, as expected, much higher. The highest gap was 23.5 mg/d, against a target of 32 mg/d (the RDA) for 16–17-year-old girls of medium SES (Fig. 4A). The median cereal intake for this group was 221 g/d (Table 1). Based on these data, the calculated fortification level of iron needed to meet the WHO-recommended target was ~10.5 mg/100 g of cereal. This is so high that universal cereal fortification at this level would expose adolescents and adults to a very high risk of excess intake (REI) of dietary iron, exceeding 50% in adult men (Fig. 4B).
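Repeating the same arithmetic with the RDA-based gap shows how much the WHO target inflates the required level. A sketch, again with a hypothetical helper and only the figures quoted in the text for 16–17-year-old girls of medium SES:

```python
def fortification_level(gap_mg_per_day: float, vehicle_g_per_day: float) -> float:
    """mg of nutrient per 100 g of vehicle that closes the gap at median vehicle intake."""
    return gap_mg_per_day / vehicle_g_per_day * 100.0


cereal = 221  # g/d, median cereal intake (Table 1)
ear_level = fortification_level(7.5, cereal)   # target: median intake at the EAR
rda_level = fortification_level(23.5, cereal)  # target: median intake at the RDA (WHO)
print(round(ear_level, 2), round(rda_level, 2), round(rda_level / ear_level, 2))
```

The RDA-based level (about 10.6 mg/100 g) is roughly 3.1 times the EAR-based level, simply because the gap is 23.5/7.5 times larger at the same cereal intake; every group's intake distribution is shifted up by that much more iron, which is what drives the REI in Fig. 4B.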

Fig. 4. (A) Additional iron intake required (δ) to meet the iron recommended dietary allowance (RDA), or to achieve a probability of inadequacy (PIA) < 2.5%, across age-, sex- and socioeconomic status (SES) groups. Note that this is highest in 16–17-year-old boys. (B) Risk of excess intake (REI) in different age-, sex- and SES groups when supplied with fortified cereal at a fortification level of 10 mg iron/100 g of cereal (see text for details). The suffixes ‘G’ and ‘B’ for the age groups refer to ‘girls’ and ‘boys’.

Concluding comments

Food fortification has now become one of the most popular ways of increasing specific nutrient intakes in a population, owing to claims of its relative ease and unobtrusive nature, requiring no behavioural change on the part of the beneficiary [1]. When applied to deficient populations, it has been shown to have some efficacy [15, 16]. Subsequently, fortification of foods with single ‘deficient’ nutrients became fashionable. But these were single-nutrient approaches, like iodine and iron fortification for the prevention of goitre and anaemia, respectively. While levels of fortification for single nutrients were described for different foods, an altogether unexpected policy development was the simultaneous fortification of multiple food vehicles with the same nutrient, leading to concerns about excess intakes. This has happened in India, for example, with the provision of iron-fortified rice and salt at the same time to some populations [10]. On the other hand, single-nutrient fortification with iodine has been successful in preventing goitre, which has a single-nutrient aetiology (although, even with iodine, there are now indications of excess intake in some Indian states [17]). Success for anaemia will be variable, given its complex aetiology with several possible causes, of which iron deficiency is only one. This has led to attempts at multiple-nutrient food fortification, with additional technical difficulties, as these nutrients can interact with each other. Finally, there is the problem of acceptability: in some instances, beneficiaries have been able to discern that their fortified food is different, leading to poor uptake [18]. Thus, food fortification, contrary to its perception of simplicity, is complex to implement.

A major shift in the definition of nutrient requirements has begun, driven by the need to harmonize these requirements across the world [9]. As international trade increases, and food labelling needs to be uniform across borders, the need to define nutrient requirements uniformly becomes evident. For example, the Codex [19] uses Nutrient Reference Values (NRVs) in the labelling of food products, to help consumers compare them. The NRV for adults is the 98th percentile of the nutrient requirement distribution (Individual Nutrient Level 98, or INL 98; broadly equivalent to the RDA). There was some consideration of using the INL 50 (equivalent to the EAR) as the correct NRV; however, the Codex Committee on Nutrition and Foods for Special Dietary Uses (CCNFSDU) preferred to continue with the INL 98, noting, first, that the INL 50 (EAR) was not as widely published as the INL 98 and, second, while agreeing that the INL 50 was closer to most individuals’ requirements, that the INL 98 was preferable as it was more likely to exceed the nutrient requirements of the vast majority of a population [19]. These concepts, although published in 2019 [19], appear dated, given that the EAR (INL 50) is now widely published for many nutrients. Indeed, in their recent, excellent effort to harmonize nutrient requirement metrics, Allen et al. [9] have clearly shown that the two important metrics are the EAR and the TUL, with no need for the RDA.
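Under a normal requirement distribution, any INL can be read off from the EAR and the coefficient of variation (CV), which makes the relation between the INL 50 (the EAR), the RDA (about the 97.5th percentile) and the INL 98 explicit. A sketch with a hypothetical EAR of 100 units/d and an assumed CV of 10%; the helper `inl` is illustrative, and the formula does not apply as-is to nutrients with skewed requirement distributions, such as iron:

```python
from statistics import NormalDist


def inl(ear: float, cv: float, percentile: float) -> float:
    """Individual Nutrient Level at a percentile of a normal requirement distribution.

    ear: median requirement (equal to the mean when the distribution is normal)
    cv: coefficient of variation of the requirement (SD / EAR)
    percentile: e.g. 50 for INL 50 (the EAR) or 98 for INL 98
    """
    z = NormalDist().inv_cdf(percentile / 100.0)  # standard normal quantile
    return ear * (1.0 + z * cv)


# Hypothetical nutrient: EAR = 100 units/d, CV = 10%
for p in (50, 97.5, 98):
    print(p, round(inl(100.0, 0.10, p), 2))
```

The INL 98 sits about 2.05 SD above the EAR, marginally above the conventional RDA at about 2 SD, which is why the two are treated as roughly equivalent.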

The next step is to consider how nutrient inadequacy is measured in a population. Ideally, this should be done by measuring the nutrient requirement and intake of each member of that population. But measuring the requirement of every member of a population is all but impossible. Indeed, nutrient requirements are usually measured under very controlled conditions, in metabolic laboratories, ensuring that nutrient intakes are reasonable and constant and that non-physiological nutrient demands are absent; from these measurements, the distribution and average requirement are defined. There are two main methods to measure the requirement. The first is actual nutrient balance measurement, which must critically be performed across a reasonable range of habitual diets, so that a dose response can be constructed to define the requirement. However, balance measurements are often simply not possible, and the second way to define the requirement is the factorial method. Here, the requirement is built up from several ‘factors’, the key one being the daily loss of the nutrient. The factorial method then works out how to replace this daily loss, considering other factors such as the efficiency of absorption and utilization. Its foundation is the daily loss of the nutrient (in otherwise healthy conditions), and this loss depends on the amount that is usually eaten. Since an individual’s intake of a nutrient can vary, and can exceed the physiological requirement on some days, the body excretes the excess unless it is stored. It therefore becomes very important to measure the daily nutrient loss at normal or reasonable nutrient intakes; otherwise, one may overestimate the physiological loss at normal intakes. One may ask: what is reasonable or normal? Normal intakes in health differ widely across countries, regions and cultures, and populations can adapt to different intakes while maintaining health.
An example is the demonstration that 24-hour nitrogen excretion in humans depends on habitual protein intake, and that it took about 5–10 days to adapt to a step change in protein or amino acid intake (from high to low, with both intakes greater than the EAR) [20, 21]. This is why nutrient requirements must be measured by harmonized methods that define such intricacies as the habitual intakes at which nutrient losses are measured.
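The factorial logic described above reduces to a simple formula: requirement = (daily losses + any additional needs) / fractional absorption. A minimal sketch with hypothetical values (units per day), showing why losses measured at an unusually high intake, before adaptation, inflate the estimated requirement; `factorial_requirement` and its parameters are illustrative only, not a published method's interface:

```python
def factorial_requirement(daily_losses: float, fractional_absorption: float,
                          extra_needs: float = 0.0) -> float:
    """Factorial estimate of the dietary requirement (same units per day as the losses).

    daily_losses: obligatory losses, ideally measured at habitual intakes
    fractional_absorption: share of dietary intake absorbed and utilized, in (0, 1]
    extra_needs: hypothetical additional demands, e.g. for growth
    """
    if not 0.0 < fractional_absorption <= 1.0:
        raise ValueError("fractional_absorption must be in (0, 1]")
    return (daily_losses + extra_needs) / fractional_absorption


# Hypothetical numbers: losses of 1.0 unit/d and 12.5% absorption
print(factorial_requirement(1.0, 0.125))
# Losses measured after a period of high intake, before adaptation, read 25% higher;
# the requirement estimate is inflated by the same 25%
print(factorial_requirement(1.25, 0.125))
```

Because the losses sit in the numerator, any error in measuring them passes straight through to the requirement, which is the reason for insisting on losses measured at habitual intakes.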

Once the nutrient requirement (and its distribution) is known, using the median, or EAR, to define the adequacy of diets in delivering nutrients is a required step. In this paper, we depart from the WHO recommendation [1], which suggested that adequate nutrient intake would exist when the intake distribution lay above the EAR to the extent that only 2–3% of intakes were less than the EAR. Here, we show that the distributions of intake and requirement will overlap in a normal, healthy population, such that 50% of intakes lie on either side of the EAR. Another way to examine this is through the intakes of exclusively breastfed infants, who, by definition, have an adequate intake. Their protein EAR at 3 months was factorially derived to be 1.13 g/kg/d by the WHO/FAO/UNU in 2007 [22]. In the DARLING study [23], 3-month-old, presumably exclusively breastfed infants, who were growing normally at that time (observed weight gain of 28 ± 7 g/d vs the expected 31 g/d), had an average daily protein intake (excluding urea) of 1.09 g/kg/d. Given that some breast milk urea will also be used as an additional nitrogen source [21], this average protein intake of infants who are not faltering almost overlaps with their protein EAR, meaning that their risk of protein inadequacy will be close to 50%. Thus, based on probability theory and empirical findings, identifying a PIA of 50% as normal is logical.
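The probability argument can be checked numerically. Assuming independent, normally distributed intake and requirement, PIA = P(requirement > intake) is exactly 50% whenever both distributions share the same mean, whatever their spreads. The sketch below also plugs in the DARLING mean intake (1.09 g/kg/d) and the protein EAR (1.13 g/kg/d) quoted above; both SDs are hypothetical assumptions, not values from either source:

```python
from statistics import NormalDist


def pia_probability(intake_mean, intake_sd, ear, req_sd):
    """P(requirement > intake) for independent, normally distributed intake and requirement."""
    diff = NormalDist(ear - intake_mean, (req_sd ** 2 + intake_sd ** 2) ** 0.5)
    return 1.0 - diff.cdf(0.0)


# Intake distribution centred on the EAR: PIA is exactly 0.5
print(pia_probability(1.13, 0.14, 1.13, 0.14))
# DARLING-like case: mean intake 1.09 vs EAR 1.13 g/kg/d (SDs are assumptions)
print(round(pia_probability(1.09, 0.15, 1.13, 0.14), 2))
```

With these assumed SDs the second PIA is about 58%, slightly above 50% because the urea-free intake sits just below the EAR; counting the utilizable urea nitrogen would move it closer to 50%.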

More recently, another concern has arisen: the possibility of excess nutrient intake. This could occur for many reasons, not least the simultaneous fortification of several foods with the same nutrient, or the layering of nutrient supplementation (at quite pharmacological doses) on top of food fortification with the same nutrient [10]. This also becomes quite relevant with mass fortification through mandatory or universal initiatives, where all segments of society receive fortified food, as mandated by law. This may not be good for all: as social inequalities in the distribution of income, wealth, and access to diverse foods have increased, populations in different socioeconomic strata can have very different nutrient intakes. In addition, the universal intake of, for example, iron-fortified foods can pose oxidative risks to those with haemoglobinopathies, even in carriers of the trait [24, 25], or the risk of an adverse microbiome composition in children [26]. In the upper socioeconomic strata, specific nutrient intakes could already be high, and with fortification, intakes may cross into risk-prone zones, with consequences of over-nutrition. This is clearly demonstrated for iron in the worked example above, where adult men and adolescent boys are at risk of excess iron intake after fortified cereal intake. This is not trivial: while the TUL is set on toxicological principles, in turn based on reports of adverse symptoms with high iron intake [27], a newer concept of the TUL is required, one that considers the risk of other conditions.
Iron is inherently a pro-oxidative element [28], and analyses of US National Health and Nutrition Examination Survey (NHANES) data showed that the risk of diabetes was up to 4-fold higher in otherwise normal individuals in the highest quintile of serum ferritin concentration compared with those in the lowest quintile [29]; this was also confirmed by a meta-analysis of 15 studies, which showed that higher ferritin levels were associated with a greater risk of type 2 diabetes [3]. Increased iron status (higher serum ferritin) is also associated with hypertension and dyslipidaemia [4, 30], and these associations have recently been reported in children as well [5].

In summary, this paper describes a framework for deriving fortification levels of nutrients in food vehicles, using the EAR and TUL metrics and acceptable levels of risk of inadequate and excess nutrient intakes. This method can be used with normal or skewed nutrient intake or requirement distributions, and for any nutrient, as long as there is a well-defined, measured distribution of nutrient intakes and defined EAR and TUL values for the population. Future research, in which accurate surveys of nutrient intake are accompanied by measurement of precise biomarkers of deficient and excess nutrient intake, is required to fully validate the concepts presented here. Further, this method needs to be applied more widely across the different nutrients being considered for fortification. In addition, since the TUL is derived from a toxicological framework based on sporadic case descriptions of adverse effects, an alternative and conservative descriptor of high intake, the IUL, based on the nutrient requirement distribution, is also presented for better safety, to obviate the risks of other conditions at higher nutrient intakes: something that will happen as different food vehicles are fortified with the same nutrient in the same population, and when supplements are added on. In the future, the understanding of nutrient requirements will evolve and become more precise, while food intake behaviours will change due to innovations, food availability or global circumstances, presenting challenges that should be considered. By considering both tails of the fortified intake distribution, the method presented here offers not only a balance between benefits and harms in calculating nutrient fortification levels for staple foods, but also precision in public health nutrition as it relates to fortification.

Data availability

This was a secondary data analysis, based on a dummy dataset generated from original data of the National Nutrition Monitoring Bureau (NNMB) 2012. The NNMB data are the property of the Indian Council of Medical Research - National Institute of Nutrition, Hyderabad, India.

References

1. WHO, FAO. Guidelines on Food Fortification with Micronutrients. Geneva: WHO Press; 2006.

2. Kimura AH. Solving hidden hunger with fortified food. In: Hidden Hunger: Gender and the Politics of Smarter Foods. Cornell University Press; 2013. http://www.jstor.org/stable/10.7591/j.ctt1xx5n3.8. Accessed 5 April 2022.

3. Jiang L, Wang K, Lo K, Zhong Y, Yang A, Fang X, et al. Sex-specific association of circulating ferritin level and risk of type 2 diabetes: A dose-response meta-analysis of prospective studies. J Clin Endocrinol Metab. 2019;104:4539–51.

4. Lee DH, Kang SK, Choi WJ, Kwak KM, Kang D, Lee SH, et al. Association between serum ferritin and hypertension according to the working type in Korean men: the fifth Korean National Health and Nutrition Examination Survey 2010–2012. Ann Occup Environ Med. 2018;30:40.

5. Ghosh S, Thomas T, Kurpad AV, Sachdev HS. Is iron status associated with markers of non-communicable disease in Indian children? Res Sq. 2021. https://doi.org/10.21203/rs.3.rs-1136688/v1.

6. Carriquiry AL. Assessing the prevalence of nutrient inadequacy. Public Health Nutr. 1999;2:23–33. https://doi.org/10.1017/s1368980099000038. PMID: 10452728.

7. Sachdev HS, Porwal A, Acharya R, Ashraf S, Ramesh S, Khan N, et al. Haemoglobin thresholds to define anaemia in a national sample of healthy children and adolescents aged 1–19 years in India: A population-based study. Lancet Glob Health. 2021;9:e822–e831.

8. Institute of Medicine. Dietary Reference Intakes: The Essential Guide to Nutrient Requirements. Washington (DC): National Academies Press; 2006.

9. Allen LH, Carriquiry AL, Murphy SP. Perspective: Proposed harmonized nutrient reference values for populations. Adv Nutr. 2020;11:469–83.

10. Kurpad AV, Ghosh S, Thomas T, Bandyopadhyay S, Goswami R, Gupta A, et al. Perspective: When the cure might become the malady: the layering of multiple interventions with mandatory micronutrient fortification of foods in India. Am J Clin Nutr. 2021;114:1261–6.

11. Kurpad AV. Amino acid imbalances: still in the balance. J Nutr. 2018;148:1647–9.

12. Kärberg K, Forbes A, Lember M. Raised dietary Zn:Cu ratio increases the risk of atherosclerosis in type 2 diabetes. Clin Nutr ESPEN. https://doi.org/10.1016/j.clnesp.2022.05.013.

13. National Nutrition Monitoring Bureau (NNMB). Diet and Nutritional Status of Rural Population, Prevalence of Hypertension and Diabetes Among Adults and Infant and Young Child Feeding Practices: Report of Third Repeat Survey. Hyderabad, India: National Institute of Nutrition, Indian Council of Medical Research; 2012.

14. Indian Council of Medical Research / National Institute of Nutrition. Nutrient Requirements for Indians. Department of Health Research, Ministry of Health and Family Welfare, Government of India; 2020.

15. Gera T, Sachdev HS, Boy E. Effect of iron-fortified foods on hematologic and biological outcomes: systematic review of randomized controlled trials. Am J Clin Nutr. 2012;96:309–24.

16. WHO. Guideline: fortification of food-grade salt with iodine for the prevention and control of iodine deficiency disorders. Geneva: World Health Organization; 2014.

17. Ministry of Health and Family Welfare (MoHFW), Government of India, UNICEF and Population Council. Comprehensive National Nutrition Survey (CNNS). National Report 2016–2018. New Delhi, India: MoHFW, Government of India, UNICEF and Population Council; 2019.

18. Cyriac S, Haardörfer R, Neufeld LM, Girard AW, Ramakrishnan U, Martorell R, et al. High coverage and low utilization of the Double Fortified Salt program in Uttar Pradesh, India: Implications for program implementation and evaluation. Curr Dev Nutr. 2020;4:nzaa133. https://doi.org/10.1093/cdn/nzaa133.

19. Lewis J. Codex nutrient reference values. Rome: FAO, WHO; 2019.

20. Quevedo MR, Price GM, Halliday D, Pacy PJ, Millward DJ. Nitrogen homoeostasis in man: diurnal changes in nitrogen excretion, leucine oxidation and whole body leucine kinetics during a reduction from a high to a moderate protein intake. Clin Sci (Lond). 1994;86:185–93.

21. Kurpad AV, Regan MM, Raj T, El-Khoury A, Kuriyan R, Vaz M, et al. Lysine requirements of healthy adult Indian subjects receiving long-term feeding, measured with a 24-h indicator amino acid oxidation and balance technique. Am J Clin Nutr. 2002;76:404–12.

22. WHO, FAO, UNU. Protein and Amino Acid Requirements in Human Nutrition: Report of a Joint WHO/FAO/UNU Expert Consultation. WHO Technical Report Series 935. Geneva, Switzerland: World Health Organization; 2007.

23. Heinig MJ, Nommsen LA, Peerson JM, Lönnerdal B, Dewey KG. Energy and protein intakes of breast-fed and formula-fed infants during the first year of life and their association with growth velocity: The DARLING study. Am J Clin Nutr. 1993;58:152–61.

24. Walter PB, Fung EB, Killilea DW, Jiang Q, Hudes M, Madden J, et al. Oxidative stress and inflammation in iron-overloaded patients with beta-thalassaemia or sickle cell disease. Br J Haematol. 2006;135:254–63.

25. Zimmermann MB, Fucharoen S, Winichagoon P, Sirankapracha P, Zeder C, Gowachirapant S, et al. Iron metabolism in heterozygotes for hemoglobin E (HbE), alpha-thalassemia 1, or beta-thalassemia and in compound heterozygotes for HbE/beta-thalassemia. Am J Clin Nutr. 2008;88:1026–31.

26. Paganini D, Zimmermann MB. The effects of iron fortification and supplementation on the gut microbiome and diarrhea in infants and children: A review. Am J Clin Nutr. 2017;106:1688S–1693S.

27. Institute of Medicine (US) Panel on Micronutrients. Dietary Reference Intakes for Vitamin A, Vitamin K, Arsenic, Boron, Chromium, Copper, Iodine, Iron, Manganese, Molybdenum, Nickel, Silicon, Vanadium, and Zinc. Washington (DC): National Academies Press (US); 2001.

28. Simcox JA, McClain DA. Iron and diabetes risk. Cell Metab. 2013;17:329–41.

29. Ford ES, Cogswell ME. Diabetes and serum ferritin concentration among U.S. adults. Diabetes Care. 1999;22:1978–83.

30. Kim YE, Kim DH, Roh YK, Ju SY, Yoon YJ, Nam GE, et al. Relationship between serum ferritin levels and dyslipidemia in Korean adolescents. PLoS One. 2016;11:e0153167.

Acknowledgements

HSS, TT and AVK are recipients of the Wellcome Trust/Department of Biotechnology India Alliance Clinical/Public Health Research Centre Grant # IA/CRC/19/1/610006.

Author information

Contributions

SG, HSS and AVK conceived the idea; SG and TT conducted all statistical analyses; all authors guided the analyses and were involved in drafting and approving the final manuscript.

Ethics declarations

Competing interests

HSS is a member of the World Health Organization Nutrition Guidance Expert Advisory Subgroup on Diet and Health. HSS and AVK are members of Expert Groups of the Ministry of Health and Family Welfare on Nutrition and Child Health, the National Technical Board on Nutrition of NITI Aayog, Government of India, and the Food Safety and Standards Authority of India (FSSAI). KMN is the Chairperson of the FSSAI Scientific Panel on Labelling, Claims and Advertisements, a member of FSSAI Expert Committee 2 for Approval of Non-specified Food and Food Ingredients, and a member of the FSSAI Scientific Panel on Nutrition and Fortification.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ghosh, S., Thomas, T., Pullakhandam, R. et al. A proposed method for defining the required fortification level of micronutrients in foods: An example using iron. Eur J Clin Nutr 77, 436–446 (2023). https://doi.org/10.1038/s41430-022-01204-4

DOI: https://doi.org/10.1038/s41430-022-01204-4
