Abstract

Effective strategies for early detection of cognitive decline, if deployed on a large scale, would have individual and societal benefits. However, current detection methods are invasive or time-consuming and therefore not suitable for longitudinal monitoring of asymptomatic individuals. For example, biological markers of neuropathology associated with cognitive decline are typically collected via cerebrospinal fluid, cognitive functioning is evaluated in face-to-face assessments by experts, and brain measures are obtained using expensive, non-portable equipment. Here, we describe scalable, repeatable, relatively non-invasive and comparatively inexpensive strategies for detecting the earliest markers of cognitive decline. These approaches are characterized by simple data collection protocols conducted outside the laboratory: measurements are collected passively, by the participants themselves or by non-experts. The analysis of these data, in contrast, is often performed centrally using sophisticated techniques. Recent developments allow neuropathology associated with potential cognitive decline to be accurately detected from peripheral blood samples. Advances in smartphone technology facilitate unobtrusive passive measurements of speech, fine motor movement and gait that can be used to predict cognitive decline. Specific cognitive processes can be assayed using ‘gamified’ versions of standard laboratory cognitive tasks, which keep users engaged across multiple test sessions. High-quality brain data can be obtained regularly at home, collected by users themselves with portable electroencephalography. Although these methods have great potential for addressing an important health challenge, there are barriers to be overcome. Technical obstacles include the need for standardization and interoperability across hardware and software. Societal challenges include ensuring equitable access to new technologies, the cost of implementation and of any follow-up care, and ethical issues.


Introduction

The proportion of the world’s population over the age of 60 will rapidly increase in the coming decades [1]. Cognitive decline is more likely with increasing age, although this decline is primarily due to pathological processes rather than age per se [2, 3]. Many neurodegenerative diseases have a long prodromal phase lasting several years, providing a window of opportunity to identify cognitive decline when impairment is absent or has little impact on daily function [4]. Existing methods for detecting cognitive decline are best suited to scenarios in which symptoms have already manifested (e.g., a referral following subjective cognitive impairment) and are not appropriate when longitudinal monitoring of asymptomatic individuals is required. For example, biomarkers such as hyperphosphorylated tau protein (p-tau) and amyloid-beta (Aβ) can identify neuropathology associated with neurodegenerative diseases such as Alzheimer’s disease (AD) before any cognitive decline. However, these biomarkers are typically obtained invasively from cerebrospinal fluid (CSF) via lumbar puncture. Neuropsychological tests of cognition typically require specialist administration and are insensitive to subtle declines in cognition, and a patient’s performance can vary from day to day. Structural and functional neuroimaging technologies such as magnetic resonance imaging (MRI) and positron emission tomography (PET) can detect and predict cognitive decline years before it becomes detectable with traditional neuropsychological assessment tools [5]. However, obtaining these brain data is costly and requires rigorous protocol standardization to be meaningful [6].

Scalable methods for early detection of cognitive decline would have several advantages. Even in the absence of disease-modifying therapies, which remain largely in development [7], there is a benefit to using scalable measures for screening cognitively unimpaired (CU) individuals. Early detection of cognitive decline may reduce adverse outcomes such as loss of autonomy [8] and may also mitigate the high healthcare costs incurred in the decade before formal diagnosis [9] (e.g., by providing home care to prevent falls, infections [10] or failure to take prescribed medicine). If disease-modifying therapies for neurodegenerative diseases become widely available, healthcare systems will require scalable measures for identifying patients at an early stage of the disease. Using a combination of a cognitive instrument and blood-based biomarker tests to triage patients at the primary care level could eliminate wait lists after the first 3 years and increase correctly identified cases by about 120,000 per year [11], primarily because referrals for PET or CSF-based biomarkers would be restricted to patients for whom disease-modifying treatment is possible (reducing annual health system expenditure in the United States by $400–700 million). In clinical trials, it can be challenging to identify sufficient numbers of participants who meet the inclusion criteria (e.g., increased brain amyloid) [12]. Improved detection could identify those at the earliest stages of the disease process, whose status could then be confirmed by invasive methods. As we discuss below, a further benefit of scalable measures is that clinical trial data can be recorded frequently, potentially detecting treatment effects sooner than when in-clinic assessments are collected only periodically.

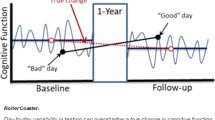

Here, we outline some emerging approaches that can provide scalable, repeatable, relatively non-invasive and comparatively inexpensive strategies for early detection of cognitive decline. These strategies are linked by two common themes. First, the data collection methods are simpler than extant clinical approaches because they do not rely on measurements obtained by professionals in dedicated settings. Rather, data are collected passively, by participants themselves or by non-experts. Second, analysis of the data does require highly sophisticated methods, which are designed and/or performed by specialists. These approaches include new techniques for analyzing blood-based biomarkers that allow neuropathology to be detected from peripheral blood samples with high sensitivity and specificity. Where available, we report metrics incorporating specificity (i.e., the proportion of healthy participants correctly classified) and sensitivity (i.e., the proportion of patients correctly classified). The area under the receiver operating characteristic curve (AROC) is a summary measure incorporating both sensitivity and specificity (AROC = 1 denotes perfect classification; 0.5 denotes chance-level performance). Although AROC values such as 0.9 are sometimes used heuristically as a threshold for clinical tests, the desired sensitivity and specificity are situation-specific (e.g., in population screening, where disease prevalence is low, high specificity is desirable to avoid large numbers of false positives). The sample size required for any analysis also depends on disease prevalence: when prevalence is low (in contrast to case-control designs, which enrich for cases), the sample must be large enough to include sufficient cases to quantify sensitivity and specificity accurately. Passive measurements collected via smartphone can unobtrusively record features relevant to cognition, almost continuously. Specific cognitive processes (e.g., memory) can be monitored regularly using ‘gamified’ cognitive tasks, potentially in combination with at-home recording of electroencephalography (EEG). Frequent repeated assessment promises richer and more reliable data than traditional snapshot assessment in the clinic [13], which is especially relevant because the cognitive performance of older adults is more sensitive to external factors such as time of day [14, 15] or fluctuations in stress [16]. We also describe the practical challenges of translating validated research methodologies into the healthcare pathway. An overview of this topic is given in Fig. 1.
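To make the metrics above concrete, the minimal Python sketch below computes sensitivity, specificity and AROC for a toy set of classifier scores. It also shows why prevalence matters: the positive predictive value (PPV) of the same test falls sharply at low prevalence, and the total sample needed to estimate sensitivity grows as prevalence falls. The scores, the 0.45 decision threshold and the normal-approximation sample-size formula (the one commonly attributed to Buderer) are our illustrative choices, not taken from any cited study.

```python
import math
from typing import Sequence, Tuple


def sensitivity_specificity(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float]:
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP). Label 1 = patient."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def aroc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AROC via its rank interpretation: the probability that a randomly chosen patient
    scores higher than a randomly chosen healthy participant (ties count one half).
    This equals the Mann-Whitney U statistic divided by n_patients * n_healthy."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def positive_predictive_value(sens: float, spec: float, prevalence: float) -> float:
    """Share of positive test results that are true cases (Bayes' rule)."""
    true_pos = sens * prevalence
    false_pos = (1 - spec) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


def total_n_for_sensitivity(sens: float, half_width: float, prevalence: float,
                            z: float = 1.96) -> int:
    """Total sample size whose expected number of cases gives a normal-approximation
    95% CI of +/- half_width around the anticipated sensitivity."""
    n_cases = z ** 2 * sens * (1 - sens) / half_width ** 2
    return math.ceil(n_cases / prevalence)


y_true = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.2]
y_pred = [1 if s >= 0.45 else 0 for s in scores]  # hypothetical decision threshold

print(sensitivity_specificity(y_true, y_pred))  # both 2/3
print(aroc(y_true, scores))                     # 8/9
# The same test (sensitivity = specificity = 0.9) at two prevalences:
print(round(positive_predictive_value(0.9, 0.9, 0.50), 3))  # 0.9
print(round(positive_predictive_value(0.9, 0.9, 0.05), 3))  # 0.321
print(total_n_for_sensitivity(0.9, 0.05, 0.10))             # 1383
```

With sensitivity and specificity both at 0.9, roughly two in three positive results at 5% prevalence would be false positives, which is why high specificity matters for population screening.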

Peripheral-blood-based measures of neuropathology

Typically, biomarkers associated with cognitive decline are measured in CSF, which requires an invasive lumbar puncture with associated risks (e.g., infection). In contrast, blood-based biomarkers can be collected in a wide range of settings, such as tertiary care centers, increasing accessibility. However, detecting biomarkers in blood is challenging, not least because some biomarkers, such as Aβ, are tenfold more concentrated in CSF than in blood [17]. Blood contains many proteins, peptides, nucleic acids, lipids, metabolites, exosomes and cellular components whose concentrations show diurnal variation [18]. Biomarkers may be degraded in the liver or by proteases in the blood, adhere to plasma proteins or blood cells, or be excreted by the kidneys [19]. However, newer platforms can detect biomarkers that are present in blood at very low concentrations after crossing the blood-brain barrier. Luminex xMAP, single-molecule array (SIMOA), immunomagnetic reduction (IMR) and immunoprecipitation mass spectrometry (IP-MS) assays, among others, build on the principle of the enzyme-linked immunosorbent assay (ELISA) and have improved sensitivity versus conventional biomarker assays [18, 20, 21].

Previous studies of blood-based biomarkers for detecting cognitive impairment have focused on mild cognitive impairment (MCI) or AD dementia. Increasing attention is being given to evaluating blood-based biomarkers in CU older adults who are at risk of developing AD, and biomarkers have predicted cognitive decline in a prospective cohort study [22]. In this section, we focus on longitudinal studies of CU participants deemed at risk of developing dementia (e.g., defined by Aβ status and/or genetic risk).

Aβ peptides can aggregate and form oligomers and fibrils, resulting in amyloid plaque deposition, one of AD’s histopathological hallmarks [23]. Aβ peptides range in length from 39 to 43 amino acids; Aβ40 is the most abundant in CSF (about 60% of total Aβ) but is less prone to aggregate. By contrast, Aβ42 has a higher propensity to form toxic oligomers, which are present in CSF decades before AD onset [24, 25]. The Aβ42/40 ratio has been shown to improve diagnostic performance in routine clinical use [26] and is robust to the influence of pre-analytical and analytical factors. An early plasma study showed a reduced Aβ42/40 ratio in AD dementia compared with subjective cognitive decline and MCI, with moderate accuracy (AROC = 0.68) [27]. Subsequently, other sensitive methods have been developed to quantify Aβ in blood plasma [28], and these have shown that the plasma Aβ42/40 ratio is a biomarker of progression to MCI or AD dementia in CU individuals enrolled in large cohort studies [29,30,31,32,33]. Furthermore, combining the plasma Aβ42/40 ratio with age and APOE-ε4 genotype identified amyloid positivity with higher accuracy (AROC = 0.90) [30].
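One reason a ratio can be more robust than either concentration alone is that any factor scaling both peptides by the same amount cancels out. The toy calculation below illustrates this with hypothetical concentrations; it assumes the handling error (here, a dilution error) affects Aβ42 and Aβ40 proportionally, which will not hold for every pre-analytical factor.

```python
def abeta_42_40_ratio(abeta42_pg_ml: float, abeta40_pg_ml: float) -> float:
    """Ratio of plasma Aβ42 to Aβ40 concentrations (the units cancel)."""
    return abeta42_pg_ml / abeta40_pg_ml


# Hypothetical concentrations for one plasma sample (illustrative only).
abeta42, abeta40 = 30.0, 300.0
baseline = abeta_42_40_ratio(abeta42, abeta40)

# A factor that affects both peptides proportionally (e.g. a dilution error that
# lowers both readings by 20%) changes each concentration but cancels in the ratio.
shared_factor = 0.8
after_shared_loss = abeta_42_40_ratio(abeta42 * shared_factor, abeta40 * shared_factor)

# A factor acting on Aβ42 alone (e.g. aggregation of Aβ42 in the tube) does not
# cancel, and biases the ratio downward.
after_abeta42_only_loss = abeta_42_40_ratio(abeta42 * shared_factor, abeta40)

print(f"{baseline:.3f} {after_shared_loss:.3f} {after_abeta42_only_loss:.3f}")
# prints "0.100 0.100 0.080"
```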

In AD and other tauopathies, tau undergoes post-translational modifications that result in aggregation and the formation of neurofibrillary tangles [34]. Together with Aβ plaques, neurofibrillary tangles are a pathological hallmark of AD [35,36,37]. Different isoforms of plasma phosphorylated tau (p-tau) may be early biomarkers of cognitive decline (cf. plasma total tau) [6, 38, 39], and different assay platforms can differentiate AD from CU participants [40, 41]. The most studied isoform is p-tau181, with several studies reporting that higher plasma levels are associated with future cognitive decline in CU individuals [25, 42,43,44,45,46,47,48,49,50]. Plasma p-tau181 had superior accuracy to CSF p-tau181 (AROC = 0.94–0.98 and AROC = 0.87–0.91, respectively) for predicting AD progression in CU individuals over time [46]. Plasma p-tau231 differentiated persons with AD from Aβ− CU older adults and discriminated AD patients from those with non-AD neurodegenerative disorders, as well as from Aβ− MCI patients [51]. Notably, plasma p-tau231 levels increase earlier than p-tau181, before the threshold for Aβ PET positivity has been reached and in response to early brain tau deposition [51]. Therefore, p-tau231 may be a particularly useful biomarker of AD pathology. Plasma p-tau217 is another promising blood-based biomarker that may play a role in the spread of neocortical tangles in AD. The initial increase in plasma p-tau217 appears to be driven by Aβ aggregation and may largely precede the spread of tau tangles beyond the medial temporal lobe [52]. Higher p-tau217 levels have been associated with steeper rates of cognitive decline, a greater risk of converting to AD and morphological brain alterations [1, 53,54,55].

Neurofilament light (NfL) plays a crucial role in the assembly and maintenance of the axonal cytoskeleton. After axonal injury or neuronal degeneration, NfL is released into the interstitial fluid and eventually into CSF and plasma [56, 57]. NfL levels are increased in frontotemporal dementia [58], small vessel disease [59], Parkinson’s disease [60] and AD [61]. The results of cross-sectional studies comparing plasma NfL concentrations and cognitive performance are mixed: some studies have found associations [62], whereas others have not [63, 64]. Longitudinal studies, however, have shown that increasing plasma NfL levels were significantly associated with declines in attention and global cognition, even after a short 15-month follow-up period [64]. In a separate study of CU participants, mean plasma NfL levels increased 3.4 times faster in participants who subsequently developed AD than in those who remained cognitively healthy [65]. In a study of plasma NfL in adults (mean age = 48 years) with a mean follow-up of 4.3 years, baseline NfL levels were associated with a faster decline in normalized mental status scores in White participants and in those older than 50 years [66]. Taken together, these findings suggest that NfL may be a predictive blood-based biomarker of global cognitive impairment.
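Statements such as "increased 3.4 times faster" compare rates of change. A minimal way to estimate such a rate is a per-participant least-squares slope, sketched below with invented NfL values chosen so that the ratio comes out at 3.4. Longitudinal cohorts more commonly fit mixed-effects models, and nothing here reproduces the analysis of [65].

```python
from statistics import mean
from typing import Sequence


def annual_slope(years: Sequence[float], values: Sequence[float]) -> float:
    """Ordinary least-squares slope of biomarker level on time (units per year)."""
    t_bar, y_bar = mean(years), mean(values)
    num = sum((t - t_bar) * (y - y_bar) for t, y in zip(years, values))
    den = sum((t - t_bar) ** 2 for t in years)
    return num / den


# Hypothetical plasma NfL (pg/mL) at four biennial visits; illustrative numbers only.
visits = [0, 2, 4, 6]
later_converter = [20.0, 26.8, 33.6, 40.4]
stayed_healthy = [20.0, 22.0, 24.0, 26.0]

fold = annual_slope(visits, later_converter) / annual_slope(visits, stayed_healthy)
print(round(fold, 2))  # 3.4
```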

Glial fibrillary acidic protein (GFAP) is a type-III intermediate filament component of the cytoskeleton of mature astrocytes and a marker of astroglial activation induced by brain damage, during CNS degeneration or in the aged brain [67]. In CU individuals at risk of developing AD, plasma GFAP predicted Aβ PET positivity (AROC = 0.76), outperforming CSF GFAP (AROC = 0.69) and other glial markers (CSF chitinase-3-like protein 1, YKL-40: AROC = 0.64; triggering receptor expressed on myeloid cells 2: AROC = 0.71). These results were independent of tau-PET burden, suggesting that plasma GFAP is an early marker associated with brain Aβ pathology but not with tau aggregation [68]. Combining plasma GFAP with other information improved the classification of Aβ+ versus Aβ− individuals: combining plasma GFAP with age, sex and APOE-ε4 carriage improved the AROC from 0.78 to 0.91 [69]. In addition, GFAP might be a prognostic biomarker for predicting incident dementia. Higher baseline GFAP levels in CU participants were associated with steeper decline in memory, attention and executive functioning [70]. In MCI participants, plasma GFAP detected AD pathology and predicted conversion to AD dementia (AROC = 0.84); for the latter, adding APOE-ε4 or age to the model did not significantly improve accuracy [71]. However, other studies have reported a link between plasma GFAP level and progression from MCI to dementia [70].

Combining different types of blood-based biomarkers can better predict change over time [72]. Combining p-tau181 and NfL, but not Aβ42/40, produced the most accurate prediction (AROC = 0.88) of 4-year conversion to AD, a result that was validated in a separate cohort. A study with a long follow-up compared baseline blood-based biomarkers (Aβ misfolding, NfL, p-tau181 and GFAP) in 308 participants, 68 of whom developed dementia within 17 years. Among individual measures, Aβ misfolding was the best predictor (AROC = 0.78), followed by GFAP (0.74), NfL (0.68) and p-tau181 (0.61). However, the strongest predictor was a combination of Aβ misfolding, GFAP and APOE status (AROC = 0.83). With respect to preclinical AD, a variety of blood-based biomarkers (p-tau181, p-tau217, p-tau231, GFAP, NfL and Aβ42/40) were compared against Aβ pathology in at-risk individuals divided into two groups (younger and older than 65 years) [73]. In combination with age, sex and APOE-ε4 status, the best predictors of CSF-determined Aβ status were p-tau231 (AROC = 0.81 and 0.83 for the younger and older groups, respectively) and p-tau217 (AROC = 0.76 and 0.89 for the younger and older groups, respectively). Biomarker combinations such as these have great potential as tools for recruitment and as outcome measures in clinical trials.
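The gain from combining markers can be illustrated with a logistic regression fitted by plain gradient descent on two hypothetical markers, comparing the AROC of one marker alone with that of the combined linear score. This is a toy sketch with invented data; it evaluates on the training data, which overstates performance and is why validation in an independent cohort, as in the study above, matters.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(X: Sequence[Sequence[float]], y: Sequence[int],
                 lr: float = 0.5, epochs: int = 2000) -> Tuple[List[float], float]:
    """Batch gradient descent on the mean log-loss; returns (weights, bias)."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) - yi
            grad_w = [g + err * xj for g, xj in zip(grad_w, xi)]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def aroc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random converter outscores a random non-converter."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical standardized values of two markers (marker_a, marker_b); 1 = later converter.
X = [[1.0, 0.2], [0.4, 1.0], [0.9, 0.9],   # converters
     [0.5, 0.1], [0.1, 0.5], [0.2, 0.2]]   # non-converters
y = [1, 1, 1, 0, 0, 0]

single = aroc(y, [x[0] for x in X])  # marker_a alone
w, b = fit_logistic(X, y)
combined = aroc(y, [b + w[0] * x[0] + w[1] * x[1] for x in X])  # fitted linear score
print(round(single, 3), round(combined, 3))
```

On this toy dataset the combined score should rank the groups at least as well as marker_a alone; with real data, the improvement has to be demonstrated out of sample.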

Practical considerations for blood-based biomarkers

The technology for scalable blood-based biomarkers is quite mature. For example, there is already a blood-based test for AD based on the Aβ42/40 ratio (measured by mass spectrometry), age and APOE-ε4 genotype [74], which is concordant with PET imaging scans in 94% of cases (see ref. [75]). A standard operating procedure for plasma handling, produced by the Standardization of Alzheimer’s Blood Biomarkers working group [76], describes best practices for pre-analytical sample handling (collection, preparation, dilution and storage). These recommendations will likely lead to more standardized results and, consequently, more robust comparisons among studies evaluating blood-based biomarkers for cognitive decline. Practical challenges to the widespread implementation of blood-based biomarkers nevertheless remain. Once blood is drawn, cells need to be separated from plasma, which requires several minutes in a centrifuge, followed by aliquoting and freezer storage within 2 h [77]. These steps require both expertise and expensive laboratory equipment (including reliable storage at −80 °C), which are typically not available in primary healthcare settings.
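Handling windows such as the 2 h limit above lend themselves to automated checks in sample-tracking software. The sketch below flags tubes that breach the window or have inconsistent timestamps. The record layout is hypothetical, and we assume the 2 h limit is counted from the blood draw, which should be confirmed against the relevant protocol [76, 77].

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

# Assumption: the 2 h limit in the text is measured from blood draw to freezing.
MAX_DRAW_TO_FREEZE = timedelta(hours=2)


@dataclass
class PlasmaSample:
    """Hypothetical record for one tube; field names are illustrative."""
    sample_id: str
    drawn_at: datetime
    centrifuged_at: datetime
    frozen_at: datetime


def handling_problems(sample: PlasmaSample) -> List[str]:
    """Return a list of pre-analytical handling issues (empty if none found)."""
    problems = []
    if not (sample.drawn_at <= sample.centrifuged_at <= sample.frozen_at):
        problems.append("timestamps out of order")
    if sample.frozen_at - sample.drawn_at > MAX_DRAW_TO_FREEZE:
        problems.append("frozen more than 2 h after draw")
    return problems


draw = datetime(2024, 3, 1, 9, 0)
ok = PlasmaSample("S001", draw, draw + timedelta(minutes=30), draw + timedelta(minutes=105))
late = PlasmaSample("S002", draw, draw + timedelta(minutes=30), draw + timedelta(minutes=150))
print(handling_problems(ok))    # []
print(handling_problems(late))  # ['frozen more than 2 h after draw']
```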

Passive assessment of cognition

Increasing smartphone and tablet usage presents new opportunities for expanding the availability and reducing the cost of cognitive assessment, and for improving the precision and reducing the burden of cognitive testing. For example, in 2021, 61% of U.S. adults aged over 65 years owned a smartphone [78], an almost 5-fold increase since 2012 [79]. Various wearable and in-home sensors have been employed with the aim of detecting cognitive decline (for overviews, see refs. [80, 81]). However, using built-in smartphone sensors, which are already in the pockets of a large proportion of the population, allows passive monitoring to scale up dramatically. Extant smartphone-based research has focused on assessments of movement (e.g., gait, mobility, fine motor skills) and of language and speech problems, all of which have been associated with cognitive decline [82,83,84,85].

Mobile technologies can be used for automatic analysis of speech and language impairments that may signal cognitive decline. For example, automated analysis of linguistic features captured during a tablet-administered picture description task distinguished MCI/AD patients from CU individuals [86]. Moreover, unlike standard neuropsychological test scores, one of these linguistic features, language coherence, declined significantly faster in the MCI/AD group than in the CU group over a 6-month follow-up, suggesting utility for monitoring language abilities over time. In a separate study, spoken answers to cognitive assessments were recorded on a tablet in order to generate a range of features (e.g., number of pauses, verbal fluency). These features were then used to develop models that differentiated among patients with subjective cognitive impairment, MCI, AD and mixed dementia with up to 92% accuracy [87]. Speech and language can also be assessed in less structured, naturalistic settings, such as during phone calls or typing. For example, speech data were passively collected during regular monitoring phone calls in a small sample of older adults with or without AD [88], and natural language processing showed that linguistic features (e.g., atypical repetition) differentiated AD from CU participants (AROC = 0.75–0.91). Beyond spoken language, features derived from touchscreen typing classified older adults with or without MCI (AROC = 0.75) [89]. Relevant features included aspects of fine motor movement (rigidity, bradykinesia, alternate finger tapping) and of language (e.g., lexical richness, grammatical and syntactical complexity).
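As a rough illustration of the kind of features involved, the sketch below derives pause counts, a type-token ratio (a crude measure of lexical richness) and immediate word repetitions from a word-level transcript with timestamps. The input format and the 0.5 s pause threshold are assumptions, and these simple proxies are not the feature sets used in refs. [86,87,88,89].

```python
import re
from typing import Dict, List, Tuple

Word = Tuple[str, float, float]  # (token, start_s, end_s): hypothetical speech-recognizer output


def speech_features(words: List[Word], pause_threshold_s: float = 0.5) -> Dict[str, float]:
    """Simple proxies for pause, lexical-richness and repetition features."""
    tokens = [re.sub(r"[^\w']", "", w.lower()) for w, _, _ in words]
    tokens = [t for t in tokens if t]
    # Silent gaps between consecutive words that reach the (assumed) pause threshold.
    pauses = [
        nxt[1] - prev[2]
        for prev, nxt in zip(words, words[1:])
        if nxt[1] - prev[2] >= pause_threshold_s
    ]
    return {
        "n_words": len(tokens),
        # Type-token ratio: unique words / all words (sensitive to sample length).
        "type_token_ratio": len(set(tokens)) / len(tokens) if tokens else 0.0,
        "n_pauses": len(pauses),
        "mean_pause_s": sum(pauses) / len(pauses) if pauses else 0.0,
        # Immediate word repetitions ("is is"), one simple form of repetition.
        "immediate_repetitions": sum(1 for a, b in zip(tokens, tokens[1:]) if a == b),
    }


transcript = [("The", 0.0, 0.2), ("boy", 0.25, 0.5), ("is", 1.3, 1.4), ("is", 1.45, 1.6),
              ("reaching", 2.4, 2.9), ("for", 2.95, 3.1), ("the", 3.15, 3.25), ("cookie", 3.3, 3.8)]
print(speech_features(transcript))
```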

Although passive measurements of communication bear an obvious relation to cognition, other features, such as gait, may also accurately predict cognitive decline. Assessing gait using smartphone tools alone is currently not specific enough for detecting AD in the general population [80]. Wearable accelerometers, however, can differentiate among dementia subtypes (AD, dementia with Lewy bodies and Parkinson’s disease) based on gait characteristics with up to moderate accuracy (AROC = 0.403–0.799 across the different wearable gait metrics) [90]. Real-life mobility of older adults can also be measured through smartphones using global positioning system (GPS) data. Such passive smartphone measures have been shown to correlate more strongly with cognitive abilities than laboratory indicators of mobility capacity [91].
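Real-life mobility is often summarized from GPS fixes as total distance travelled and radius of gyration (the root-mean-square distance of visited points from their centroid). The sketch below computes both with the haversine great-circle formula. These metrics are common in smartphone-sensing research but are our choice for illustration, not necessarily those used in [91], and the coordinates are hypothetical.

```python
import math
from typing import Dict, List, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def mobility_summary(fixes: List[Tuple[float, float]]) -> Dict[str, float]:
    """Distance travelled and radius of gyration for a sequence of GPS fixes.

    The centroid is a plain average of latitudes and longitudes, which is only
    reasonable over small areas away from the poles and the 180° meridian."""
    distance = sum(haversine_m(*p, *q) for p, q in zip(fixes, fixes[1:]))
    c_lat = sum(lat for lat, _ in fixes) / len(fixes)
    c_lon = sum(lon for _, lon in fixes) / len(fixes)
    rog = math.sqrt(sum(haversine_m(lat, lon, c_lat, c_lon) ** 2 for lat, lon in fixes) / len(fixes))
    return {"distance_m": distance, "radius_of_gyration_m": rog}


# Hypothetical day: home -> shop -> home.
day = [(51.5007, -0.1246), (51.5033, -0.1196), (51.5007, -0.1246)]
print(mobility_summary(day))
```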

Practical considerations for passive assessment

One of the potential benefits of passive assessment lies in using the smart devices that people already own. However, the low cost, high scalability and accessibility of this approach have to be weighed against issues outside researchers’ control. Data can be lost simply because of internet connection problems. Hardware in commercially available devices is heterogeneous (e.g., the quality of motion and acceleration sensors varies widely). The priorities of smartphone developers and researchers also differ: many smart devices automatically shut down background applications to extend battery life, leading to data loss. Finally, data generated by smartphones are formatted for user-friendly dashboards rather than in a form suitable for quantitative analysis by researchers.
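Because data loss from dropped connections or battery-saving shutdowns is routine, a first analysis step is usually to quantify it. The hypothetical helper below reports gaps in a nominally regular sensor stream and the share of expected samples received; the gap threshold (twice the expected interval) is an arbitrary illustrative choice.

```python
from typing import Dict, List, Sequence, Tuple


def stream_completeness(timestamps_s: Sequence[float], expected_interval_s: float,
                        gap_factor: float = 2.0) -> Dict[str, object]:
    """Summarize missing data in a sensor stream sampled at a nominal rate.

    timestamps_s: sorted sample times in seconds (at least one sample).
    A gap is any interval longer than gap_factor * expected_interval_s."""
    gaps: List[Tuple[float, float]] = [
        (a, b) for a, b in zip(timestamps_s, timestamps_s[1:])
        if b - a > gap_factor * expected_interval_s
    ]
    span = timestamps_s[-1] - timestamps_s[0]
    expected_n = span / expected_interval_s + 1
    return {"gaps": gaps, "coverage": len(timestamps_s) / expected_n}


# One sample per minute, but the app was suspended between minutes 2 and 8.
times = [0, 60, 120, 480, 540, 600]
print(stream_completeness(times, expected_interval_s=60))  # one gap, coverage 6/11
```

Coverage here is computed over the span between the first and last samples, so data missing before the first or after the last sample are not counted.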

Data privacy is a major concern for passive data collection. Consent to passive monitoring of cognition via a smartphone application appears higher in participants with more technology experience and lower in healthcare professionals [92]. Worryingly, not all smartphone applications intended for neuropsychiatric conditions have a privacy policy, and where one is available, it is often too complex for lay readers [93]. It is possible, however, to anonymize GPS data effectively to prevent re-identification using ancillary data [94]. Given these concerns, it is important to inform participants about what happens to their data in an accessible, transparent way.

Remote, repeated cognitive assessment

Cognitive assessment remains the most common method for clinical diagnosis of disease-related cognitive decline, despite the recent shift towards biological biomarkers [95, 96]. However, identifying subtle changes in cognition outside the clinic, in cognitively unimpaired individuals, requires valid and reliable tools that can be administered frequently. Recent research has made strides in making cognitive assessment deliverable via desktop computer or smartphone. For example, the Test My Brain Digital Neuropsychology Toolkit [97] was developed and made available rapidly to meet a growing need for remote neuropsychological assessment during the COVID-19 pandemic. Tests in this toolkit probe a range of cognitive abilities, including memory, processing speed and executive function, and all achieved ‘acceptable’ to ‘very good’ reliability despite being self-administered. Other cognitive assessment tools under development include the Boston Remote Assessment for Neurocognitive Health (BRANCH) [98], which is designed to capture the first signs of cognitive decline in preclinical AD using smartphone tests. Validation work has shown that BRANCH has biological relevance: composite BRANCH scores were negatively correlated with amyloid and entorhinal tau levels [98].
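Composite scores of this kind are commonly formed by converting each test score to a z-score against reference norms and averaging. The sketch below does exactly that; the test names and norms are hypothetical, and BRANCH's own compositing procedure may differ.

```python
from typing import Dict, Tuple

# Hypothetical reference norms: test -> (mean, SD, higher_is_better).
NORMS: Dict[str, Tuple[float, float, bool]] = {
    "face_name_recall_prop": (0.70, 0.10, True),
    "digit_symbol_rt_ms": (800.0, 100.0, False),
}


def composite_score(raw: Dict[str, float], norms: Dict[str, Tuple[float, float, bool]]) -> float:
    """Mean z-score across tests, signed so that higher always means better performance."""
    zs = []
    for test, value in raw.items():
        ref_mean, ref_sd, higher_is_better = norms[test]
        z = (value - ref_mean) / ref_sd
        zs.append(z if higher_is_better else -z)
    return sum(zs) / len(zs)


participant = {"face_name_recall_prop": 0.60, "digit_symbol_rt_ms": 900.0}
print(round(composite_score(participant, NORMS), 2))  # -1.0
```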

Remote cognitive assessment facilitates longitudinal data collection. Repeated assessment allows performance to be evaluated relative to an individual’s own baseline, which is important given that short-term fluctuations can account for up to 50% of the variance on some cognitive tests across years [99]. Memory is often the earliest cognitive function to decline noticeably and is well suited to longitudinal assessment [100]. Smartphone assessment of memory is not only more convenient but also allows researchers to vary features of the design across participants to assess what works best. For example, repeated smartphone assessment was used to measure preclinical AD-related changes in long-term associative memory [100] with retention intervals ranging from 1 to 13 days. Retention intervals of at least 3 days were needed to detect differences in recall and recognition performance in adults without diagnosed cognitive impairment.
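The benefit of an individual baseline is easy to see numerically: the same raw score can be a marked drop for one person and typical for another. The sketch below expresses a new session as a z-score relative to the person's own earlier sessions. The data are invented, and a practical implementation would also need to handle practice effects and measurement error (e.g., with a reliable change index).

```python
from statistics import mean, stdev
from typing import Sequence


def z_from_own_baseline(baseline_scores: Sequence[float], new_score: float) -> float:
    """How far a new session lies from the person's own baseline, in baseline SD units."""
    if len(baseline_scores) < 2:
        raise ValueError("need at least two baseline sessions to estimate variability")
    return (new_score - mean(baseline_scores)) / stdev(baseline_scores)


# Two hypothetical participants obtain the same raw score (17) at follow-up.
person_a = [20, 22, 21, 19, 23]   # baseline mean 21
person_b = [16, 18, 17, 15, 19]   # baseline mean 17
print(round(z_from_own_baseline(person_a, 17), 2))  # -2.53: well below own baseline
print(round(z_from_own_baseline(person_b, 17), 2))  # 0.0: typical for this person
```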

Longitudinal assessment using smartphones can also capture periodic fluctuations in cognition. This is important because increased variability in cognitive performance itself predicts cognitive decline in older adults, particularly on speeded [101] or selective attention tasks [102]. An evaluation of the psychometric characteristics of very frequent, brief, repeated smartphone assessment [103] found that cognitive test scores, averaged across 14 days of assessment five times a day, had a between-person reliability of 0.97–0.99. However, the tests still showed sufficient within-person variability to capture cognitive fluctuations between occasions (within-person reliability of 0.41–0.53). Furthermore, brief smartphone-administered cognitive assessments repeated twice a day in multiple short sessions across 12 months could disentangle long- and short-term changes in cognitive performance [104]. Most of the variance in cognitive performance was due to between-person differences and short-term within-person fluctuations. Long-term within-person variability, the metric needed to detect cognitive decline, accounted for only approximately 14% of the variance in cognition. Fluctuations in cognitive performance can also differ by cognitive status. Using a repeated smartphone assessment protocol, diurnal patterns in cognitive performance distinguished individuals at risk of AD from healthy older adults [15]. Specifically, time-of-day effects (lower performance in the afternoon than in the morning) on an associative memory task were stronger in individuals with abnormal levels of AD biomarkers. This type of variability would be very difficult to detect using laboratory-based protocols.
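Between-person reliability of averaged scores can be estimated from a variance decomposition. The sketch below computes one-way random-effects intraclass correlations for a balanced design: `icc_single` for one session and `icc_mean` for each person's mean across sessions, the quantity that rises as sessions are aggregated. It is an illustrative sketch with invented scores; studies such as [103, 104] use multilevel models that also separate short- and long-term within-person variance.

```python
from statistics import mean
from typing import Sequence, Tuple


def icc_oneway(scores_by_person: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """One-way random-effects ICCs for a balanced design (n people x k sessions).

    Returns (icc_single, icc_mean): the reliability of a single session and of
    each person's mean over all k sessions."""
    n, k = len(scores_by_person), len(scores_by_person[0])
    person_means = [mean(p) for p in scores_by_person]
    grand = mean(person_means)  # equals the grand mean because every person has k scores
    ss_between = k * sum((m - grand) ** 2 for m in person_means)
    ss_within = sum((s - m) ** 2 for p, m in zip(scores_by_person, person_means) for s in p)
    ms_between = ss_between / (n - 1)
    ms_within = ss_within / (n * (k - 1))
    icc_single = (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)
    icc_mean = (ms_between - ms_within) / ms_between
    return icc_single, icc_mean


# Hypothetical scores: three participants, two sessions each.
scores = [[10, 12], [20, 22], [30, 32]]
single, averaged = icc_oneway(scores)
print(round(single, 3), round(averaged, 3))  # 0.98 0.99
```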

Although repeated cognitive assessment is desirable, cognitive tasks can be repetitive, boring, too difficult or too easy [105], and may be subject to practice effects that affect their validity [106]. To address these problems, there is growing interest in the use of gamification or “serious games” in cognitive assessment [107,108,109]. Gamified assessment aims to reduce testing anxiety [110] and increase task engagement and enjoyment without affecting performance [108]. Many studies have successfully applied gamified assessment methods in older adults with or without cognitive impairment [111,112,113,114]. Gamified assessment can provide better construct and ecological validity than simple laboratory-based tasks thanks to a richer, more realistic context [115, 116]. Gamification is especially well suited to assessing navigation abilities or spatial memory because games can provide an immersive experience in 3D environments. For example, a smartphone application assessed way-finding in a high-quality 3D environment and showed that spatial navigation ability on the application was more sensitive to genetic risk for AD (APOE-ε4 status) than a classic visual episodic memory test (see Fig. 2) [117].

Adaptive testing, a common feature of gamified tasks, is a procedure in which tasks become more or less challenging to provide a tailored experience for the end-user. This allows cognitive tests to arrive at a reliable estimate of a person’s ability faster and more efficiently, and it avoids ceiling and floor effects that can harm test sensitivity. Adaptive testing also promotes engagement and reduces frustration, making people more likely to play for longer and more frequently [118]. A driving-scenario game took this approach to assess attention and executive function via tablet [119]. The game is a closed-loop system that dynamically adjusts to keep the difficulty at 80% for all players and takes approximately 7 min to complete. In a recent proof-of-concept study, the game distinguished people with multiple sclerosis (MS) who had cognitive impairment from those who did not [119].
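One simple way to build such a closed loop is a weighted up-down staircase (Kaernbach's method), in which the step after an error is larger than the step after a correct response. The sketch below assumes that the game's "80%" refers to a target accuracy and that difficulty is a single numeric level; the game in [119] may use a different algorithm, and the simulated player is hypothetical.

```python
import math
import random
from typing import Callable, List


def weighted_staircase(respond: Callable[[float], bool], n_trials: int = 200,
                       start: float = 5.0, step_harder: float = 0.25, target: float = 0.8,
                       lowest: float = 0.0, highest: float = 10.0) -> List[float]:
    """Weighted up-down staircase; returns the difficulty level presented on each trial.

    After a correct response difficulty rises by step_harder; after an error it falls
    by step_harder * target / (1 - target). The expected change per trial,
    p * up - (1 - p) * down, is zero when p == target, so the track hovers around
    the level at which the player is correct about `target` of the time."""
    step_easier = step_harder * target / (1 - target)
    level, levels = start, []
    for _ in range(n_trials):
        levels.append(level)
        if respond(level):
            level = min(highest, level + step_harder)
        else:
            level = max(lowest, level - step_easier)
    return levels


# Simulated player: P(correct) falls logistically with difficulty and equals 0.8
# at level 6 - ln(4), about 4.61.
rng = random.Random(1)


def simulated_player(level: float) -> bool:
    return rng.random() < 1 / (1 + math.exp(level - 6))


track = weighted_staircase(simulated_player)
print(round(sum(track[-100:]) / 100, 2))  # should hover near 4.6; exact value depends on the seed
```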

Practical considerations for remote cognitive assessment

Ideally, metrics of cognitive functioning should be interoperable (i.e., easily exchanged and interpreted across systems), although this can be difficult to achieve in practice. For example, differences in the technical parameters of smartphones affect the accuracy of task presentation and measurement, particularly for timed tasks that involve very rapid stimulus presentation or tasks whose difficulty depends on screen size [120]. These differences are not random: cognitive task performance varies systematically by device type and operating system, partly explained by demographic characteristics such as education, age and gender, suggesting that different people use different devices [121]. Interoperability is also hindered because researchers in this area tend to develop new assessment tools rather than validate and generalize existing ones [121] (cf. the Open Digital Health initiative, https://opendigitalhealth.org).
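One concrete source of device dependence is display refresh rate: a stimulus can only appear or disappear at a screen refresh, so a requested duration is rounded to whole frames. The sketch below assumes rounding to the nearest frame; actual behaviour depends on the software and hardware, and touch and rendering latency add further variation.

```python
def displayed_duration_ms(requested_ms: float, refresh_hz: float) -> float:
    """Duration actually shown if the stimulus can only change at screen refreshes.

    Assumes the software holds the stimulus for the nearest whole number of frames
    (at least one); Python's round() breaks exact .5 ties towards the even count."""
    frame_ms = 1000.0 / refresh_hz
    n_frames = max(1, round(requested_ms / frame_ms))
    return n_frames * frame_ms


for hz in (60, 90, 120):
    print(hz, "Hz:", round(displayed_duration_ms(40, hz), 1), "ms")
# 60 Hz: 33.3 ms, 90 Hz: 44.4 ms, 120 Hz: 41.7 ms for the same 40 ms request
```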

There is less experimental control over distractions and identity verification for remotely collected data, but this is counterbalanced by greater precision and standardization of the stimuli compared with pen-and-paper tasks [120, 121]. Moreover, assessment in real-life conditions can be more ecologically valid than single laboratory assessments in carefully controlled conditions (e.g., for tasks that examine memory retention over several days). A further concern is that tasks ideal for repeated assessment need to be short to remain engaging, which means fewer trials and therefore less reliable measures. Repeated administration can improve reliability by aggregating across multiple timepoints.
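The gain from aggregation can be approximated with the Spearman-Brown formula, which predicts the reliability of the mean of k sessions from the reliability of a single session. The sketch below assumes sessions are parallel measures (equal reliability, no practice effects), an assumption that repeated testing may violate.

```python
import math


def spearman_brown(single_session_reliability: float, k: float) -> float:
    """Predicted reliability of the mean of k parallel sessions."""
    r = single_session_reliability
    return k * r / (1 + (k - 1) * r)


def sessions_needed(single_session_reliability: float, target: float) -> int:
    """Smallest whole number of sessions whose mean reaches the target reliability.

    Solves target = k r / (1 + (k - 1) r) for k; the small tolerance stops
    floating-point noise (e.g. 9.000000000000002) from adding a whole session."""
    r = single_session_reliability
    k = target * (1 - r) / (r * (1 - target))
    return math.ceil(k - 1e-9)


print(spearman_brown(0.5, 4))     # 0.8
print(sessions_needed(0.5, 0.9))  # 9
```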

Repeatable, remote brain assessment

Electroencephalography (EEG) is a non-invasive technique that records, from the scalp, the synchronous activity of cortical pyramidal neurons. It can be used to measure spontaneous, large-scale oscillatory activity of neural populations during quiet wakefulness, or it can be time-locked to an event. In contrast to PET and MRI, EEG does not involve exposure to radioactive isotopes or strong magnetic fields. A growing body of evidence shows that EEG is sensitive to cognitive decline, at least. As we discuss later, EEG can also potentially allow cognitive decline to be monitored via at-home recording of brain activity because the technology can be miniaturized and made portable [122].

Resting-state EEG (rsEEG) may be particularly useful as a scalable method because the participant does not have to engage in a specific task, yet rsEEG appears sensitive to cognitive decline over time, albeit in groups with mild symptoms at baseline (rather than prospectively in a healthy cohort). For example, 54 MCI patients, 50 mild AD patients and 45 CU older adults each had EEG recorded twice, 1 year apart [123]. At baseline, alpha-band power was lowest in the mild AD group and highest in the CU group, with the MCI group showing intermediate values. At follow-up, the MCI group’s alpha power had decreased further, suggesting that rsEEG could be sensitive to disease progression. Similarly, in a separate study [124], neuropsychological test performance did not change over a 2-year follow-up in either Aβ-positive or Aβ-negative participants. However, rsEEG, specifically the ratio of θ:α power, changed significantly over time in Aβ+ participants. A comparison of 88 older adults with mild AD and 35 CU older adults across 1 year reported increased widespread delta power and decreased widespread alpha and posterior beta power [125]. Furthermore, the topography of the rsEEG power spectrum appears to be sensitive to disease stage: differences in rsEEG power spectral density between mild AD patients and CU controls were largest over temporal regions, whereas differences between advanced AD patients and controls were largest over frontal regions [126, 127]. A review of 14 studies that applied machine learning to rsEEG data reported accuracies for classifying CU individuals versus MCI patients of 77–98%, with sensitivity of 75–100% and specificity of 75–97% [128]. A large study of 496 healthy older adults reported that resting-state prefrontal biomarkers could predict global cognition (Mini-Mental State Examination score) with moderate accuracy (maximum intraclass correlation = 0.76) [129].
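Band-power measures such as alpha power or the θ:α ratio summarize how much of the signal's power falls in each frequency band. The sketch below computes them from a synthetic signal with a naive discrete Fourier transform. The band edges (4–8 Hz theta, 8–13 Hz alpha) are a common convention that varies between studies, and real pipelines add artifact rejection, windowing and averaged spectra (e.g., Welch's method).

```python
import cmath
import math
from typing import Sequence


def band_power(signal: Sequence[float], fs: float, f_lo: float, f_hi: float) -> float:
    """Summed squared DFT magnitude over bins with f_lo <= frequency < f_hi.

    Uses a naive O(n^2) DFT over positive frequencies (DC and Nyquist excluded)
    for clarity rather than speed."""
    n = len(signal)
    total = 0.0
    for k in range(1, n // 2):
        freq = k * fs / n
        if f_lo <= freq < f_hi:
            coef = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
            total += abs(coef) ** 2
    return total


# Synthetic 2 s "recording" at 128 Hz: 6 Hz (theta) at amplitude 1, 10 Hz (alpha) at amplitude 2.
fs, n = 128, 256
eeg = [math.sin(2 * math.pi * 6 * t / fs) + 2 * math.sin(2 * math.pi * 10 * t / fs) for t in range(n)]
theta = band_power(eeg, fs, 4, 8)
alpha = band_power(eeg, fs, 8, 13)
print(round(theta / alpha, 3))  # 0.25: power scales with amplitude squared
```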

When time-locked to an event (e.g., a visual stimulus or a motor response), the electrical potentials recorded via EEG are called event-related potentials (ERPs). ERPs can reveal, with millisecond temporal resolution, the neural correlates of early sensory processes and of higher cognitive functions such as decision-making. The P300 ERP is often evoked using oddball tasks, in which participants attend to the presence of an infrequent stimulus [130], and is characterized by a positive deflection from approximately 300 ms after the infrequent stimulus is presented. The P300 is thought to reflect decision-making and context-updating processes [131] and is particularly promising for detecting cognitive decline [126, 127, 132], with the benefit that it seems generally robust to gender and education [133] (but see ref. [134]). P300 latency correlates with the degree of cognitive deficit in AD [123, 127, 135,136,137], including impairment measured by the Mini-Mental State Examination [138] and the Alzheimer’s Disease Assessment Scale–Cognitive Subscale [139]. Latency is also longer in AD than in MCI, and longer in MCI than in age-matched controls [126, 127]. Finally, using EEG phase-amplitude coupling measures extracted from an oddball task, 15 CU participants were distinguished from 25 participants with MCI with an accuracy of 95%, a sensitivity of 96% and a specificity of 93% [140].
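The ERP logic described above (time-lock to stimulus onsets, average across trials, then read out a peak latency) can be sketched as follows. This is a hypothetical single-channel example that assumes stimulus onsets are already available as sample indices; the epoch limits (−200 to 800 ms), the 250–500 ms search window for the P300 peak and the function names are illustrative assumptions, not a published protocol.

```python
import numpy as np


def epoch(eeg, events, fs, tmin=-0.2, tmax=0.8):
    """Cut epochs around event sample indices and baseline-correct each one
    by subtracting its mean pre-stimulus voltage."""
    start, stop = int(round(tmin * fs)), int(round(tmax * fs))
    assert start < 0, "a pre-stimulus baseline interval is required"
    eeg = np.asarray(eeg, dtype=float)
    epochs = []
    for ev in events:
        if ev + start < 0 or ev + stop > len(eeg):
            continue  # skip events too close to either end of the recording
        seg = eeg[ev + start: ev + stop]
        epochs.append(seg - seg[:-start].mean())  # first -start samples precede onset
    times = np.arange(start, stop) / fs
    return times, np.array(epochs)


def p300_peak(times, erp, window=(0.25, 0.50)):
    """Latency (s) and amplitude of the most positive point of the averaged ERP
    within `window`, i.e. a simple peak-picking rule."""
    mask = (times >= window[0]) & (times <= window[1])
    i = int(np.argmax(erp[mask]))
    return float(times[mask][i]), float(erp[mask][i])


# Usage: a synthetic recording with a positive bump 360 ms after each target onset.
fs = 250
eeg = np.zeros(10_000)
targets = [1000, 3000, 5000, 7000]
bump = 5.0 * np.exp(-((np.arange(200) - 90) / 10.0) ** 2)  # peak at sample 90 = 360 ms
for ev in targets:
    eeg[ev:ev + 200] += bump
times, epochs = epoch(eeg, targets, fs)
erp = epochs.mean(axis=0)  # averaging across trials is what reveals the ERP
latency, amplitude = p300_peak(times, erp)
```

Here the recovered latency is 0.36 s with amplitude 5.0. With real data, single-trial noise is much larger than the ERP, which is why many target trials are averaged.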

In contrast to the P300, which requires the participant’s attention, the mismatch negativity (MMN) ERP is produced by passive auditory oddball paradigms in which a train of frequent tones is interspersed with rare (‘deviant’) tones differing in duration or frequency [141]. The MMN is thought to reflect automatic sensory processing and to act as a perceptual prediction-error signal; it occurs 100–200 ms after deviant stimulus onset, with maximal amplitude at frontocentral sites [142]. The MMN can distinguish MCI from AD patients [143] and amnestic MCI from healthy controls [142, 144]. In AD, MMN amplitude appears to decrease for interstimulus intervals longer than 3 seconds, suggesting that sensory memory traces decay faster in AD patients than in healthy controls [144]. Demonstrating the prognostic utility of ERPs, age and education did not predict episodic memory or attention/executive functions at 5-year follow-up, whereas MMN metrics explained an additional 36% of the variance in episodic memory performance [144].
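The MMN is conventionally read from a difference wave (the average response to deviants minus the average response to standards). The sketch below illustrates that computation for one channel, assuming epochs have already been cut and baseline-corrected; the 100–200 ms search window follows the latency range given in the text, while the function name and the synthetic data are hypothetical.

```python
import numpy as np


def mmn_peak(times, standard_epochs, deviant_epochs, window=(0.10, 0.20)):
    """Deviant-minus-standard difference wave and the latency/amplitude of its
    most negative point within `window` (seconds)."""
    diff = np.mean(deviant_epochs, axis=0) - np.mean(standard_epochs, axis=0)
    mask = (times >= window[0]) & (times <= window[1])
    i = int(np.argmin(diff[mask]))
    return diff, float(times[mask][i]), float(diff[mask][i])


# Usage with synthetic, already baseline-corrected epochs from one frontocentral channel.
fs = 500
times = np.arange(-50, 250) / fs                     # -100 ms to 498 ms
standard = np.zeros((40, times.size))                # flat response to frequent tones
deviant = np.tile(-3.0 * np.exp(-((times - 0.15) / 0.02) ** 2), (10, 1))
diff, latency, amplitude = mmn_peak(times, standard, deviant)
```

In this toy example the difference wave reaches its minimum of −3.0 at 150 ms. Because standards vastly outnumber deviants in a real paradigm, the deviant average is the noisier of the two.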

Given that laboratory-based EEG appears suitable for detecting cognitive decline, scalability can be achieved by recording EEG outside the laboratory (i.e., remotely). Furthermore, remote EEG can be conducted by unsupervised non-expert users [122, 145,146,147,148,149]. Remote EEG platforms vary on a range of parameters, such as electrode type (i.e., whether or not a conductive medium is required), number and placement of electrodes, portability, user-friendliness, and whether the signals are transmitted wirelessly [122]. Precise synchronization between the EEG recording device and the presentation of exogenous stimuli can be achieved by pairing the EEG headset with a handheld tablet [147, 148] or a laptop [147].
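Synchronization matters because ERPs are defined relative to stimulus onset. The sketch below shows one generic way to map stimulus times logged on a tablet's clock onto EEG sample indices, assuming paired synchronization markers are available on both clocks and that the offset between clocks is constant (no drift). It does not describe how any specific platform cited here works; the function names and marker scheme are hypothetical, and clock drift would require fitting a line rather than a single offset.

```python
import numpy as np


def estimate_offset(tablet_sync_s, eeg_sync_s):
    """Constant clock offset (EEG clock minus tablet clock), taken as the median
    over paired synchronization markers to resist occasional transmission delays."""
    return float(np.median(np.asarray(eeg_sync_s, float) - np.asarray(tablet_sync_s, float)))


def onsets_to_samples(stim_onsets_s, offset_s, eeg_start_s, fs):
    """Map stimulus onsets logged on the tablet clock to EEG sample indices."""
    on_eeg_clock = np.asarray(stim_onsets_s, float) + offset_s
    return np.rint((on_eeg_clock - eeg_start_s) * fs).astype(int)


# Usage: three paired sync markers, then two stimulus onsets
# (EEG sampled at 500 Hz, recording started at 10.0 s on the EEG clock).
offset = estimate_offset([1.0, 2.0, 3.0], [11.002, 12.001, 13.003])  # about 10.002 s
samples = onsets_to_samples([1.5, 2.25], offset, eeg_start_s=10.0, fs=500)  # [751, 1126]
```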

The nomenclature for remote EEG reflects the variety of possible configurations. ‘Portable’ refers to systems designed for use outside the laboratory. ‘Mobile’ refers specifically to technology that can be used in motion, such as while walking [149]. ‘Wet’ and ‘dry’ refer to the type of electrode used to conduct the signal from the scalp: the former requires a conductive medium (electrolytic gel or water), whereas the latter relies on mechanical pressure against the scalp to ensure contact. To ensure standardized electrode placement on the scalp, rigid headsets are preferred for non-expert use [149]. Portable, dry EEG devices can yield data similar in quality to those obtained with wet, laboratory-based EEG systems [150,151,152,153]: ERP amplitudes and latencies are comparable between wet and dry EEG [154]; wet and dry recordings show significant positive correlations (r = 0.54–0.89) for both spectral components and ERPs [155]; and dry EEG yields intraclass correlations of 0.76–0.85 across three separate testing time points [156]. Figure 3 shows an example of a portable EEG system and associated remotely collected data.
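The agreement statistics quoted above can be computed as follows. The cited studies may have used different ICC forms; here we assume ICC(2,1) in the Shrout and Fleiss scheme (two-way random effects, absolute agreement, single measurement), which penalizes systematic shifts between sessions as well as inconsistent rankings. The function names and toy data are our own.

```python
import numpy as np


def icc_2_1(data):
    """ICC(2,1) of Shrout and Fleiss: two-way random effects, absolute agreement,
    single measurement. `data` is an (n_participants, k_sessions) array."""
    x = np.asarray(data, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_rows = k * np.sum((x.mean(axis=1) - grand) ** 2)     # between participants
    ss_cols = n * np.sum((x.mean(axis=0) - grand) ** 2)     # between sessions
    ss_err = np.sum((x - grand) ** 2) - ss_rows - ss_cols   # residual
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return float((ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n))


def pearson_r(a, b):
    """Pearson correlation, e.g. between wet- and dry-electrode measurements."""
    return float(np.corrcoef(a, b)[0, 1])


# Usage: 3 participants x 3 sessions.
stable = [[1, 1, 1], [2, 2, 2], [3, 3, 3]]    # identical across sessions: ICC = 1.0
drifting = [[1, 2, 3], [2, 3, 4], [3, 4, 5]]  # same ranking, shifting session means: ICC = 0.5
```

The `drifting` case shows why absolute-agreement ICC is stricter than a correlation: every participant's ranking is preserved (so Pearson r between any two sessions is 1), yet the session-to-session shift halves the ICC.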

Figure 3. Upper left panel: the platform (Cumulus Neuroscience) consists of a wireless 16-channel dry-sensor EEG headset linked to an Android tablet for task presentation. Upper right panel: flexible Ag/AgCl-coated dry EEG electrodes. Lower left panel: weekly adherence of a cohort of 50 healthy older adults (aged 55+ years) to a 6-week at-home EEG recording protocol; adapted with permission from ref. [155]. Lower right panel: averaged event-related potentials from target trials of a gamified oddball task, collected remotely by participants themselves; adapted with permission from ref. [153].

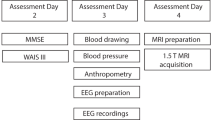

Most usability studies of portable EEG have been conducted in laboratories and/or overseen by trained technicians [149, 151, 155, 157]. Limited data are available regarding the usability of such technology when participants self-administer EEG longitudinally at home. However, one study [148] reported data from 89 CU adults (age range 40–80 years) who were asked to complete at-home recordings with a portable, wireless, dry EEG platform 5 times per week for 3 months. Each session lasted approximately 30 min and consisted of rsEEG plus gamified versions of tasks commonly administered during EEG (two-stimulus oddball, flanker, delayed match-to-sample and N-back). Participants were not compensated, yet mean adherence was 4.1 sessions per week and attrition was low (11 of 89 participants); neither adherence nor attrition was related to age. A high percentage of recordings (96% of 3,603 sessions) contained usable EEG data. These results suggest that it is feasible for participants to collect longitudinal brain data at home, which is essential if EEG is to be used for detecting or monitoring cognitive decline.
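Adherence and attrition figures like these are typically derived from session logs. The sketch below is one hypothetical way to summarize such a log; the log format, the function name and, in particular, the attrition proxy (no session in the final week) are our assumptions rather than the definitions used in [148].

```python
from datetime import date, timedelta


def adherence_summary(sessions, start, n_weeks, target_per_week=5):
    """Summarize at-home adherence.

    sessions: dict mapping participant ID -> list of datetime.date session dates.
    Returns (mean sessions per week, that mean as a fraction of the target,
    fraction of participants with no session in the final week). The last value
    is a simple, hypothetical proxy for attrition, not a published definition.
    """
    end = start + timedelta(weeks=n_weeks)
    final_week_start = end - timedelta(weeks=1)
    rates, dropped = [], 0
    for dates in sessions.values():
        in_window = [d for d in dates if start <= d < end]
        rates.append(len(in_window) / n_weeks)
        if not any(d >= final_week_start for d in in_window):
            dropped += 1
    mean_rate = sum(rates) / len(rates)
    return mean_rate, mean_rate / target_per_week, dropped / len(sessions)


# Usage with two hypothetical participants over a 2-week protocol.
log = {
    "p01": [date(2024, 1, d) for d in (1, 2, 3, 4, 5, 8, 9, 10, 11, 12)],  # 5 per week
    "p02": [date(2024, 1, 2), date(2024, 1, 3)],                            # stopped in week 1
}
summary = adherence_summary(log, start=date(2024, 1, 1), n_weeks=2)  # (3.0, 0.6, 0.5)
```

Applied to the figures reported above, a mean of 4.1 sessions per week against a target of 5 corresponds to 82% of scheduled sessions, and 11 of 89 participants corresponds to roughly 12% attrition.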

Practical considerations for remote EEG assessment

Integrating measures of cognitive decline into a healthcare system requires standardized metrics, yet subtle differences in task design (e.g., the frequency of stimulus presentation) can change the nature of EEG results [144]. Unlike psychometric tests, cognitive ERPs have no established norms for amplitude and latency, although the development of standardized metrics may address this issue [158, 159]. Self-administered EEG may not be feasible for people with motor impairment (e.g., Parkinson’s disease), because putting on the headset and adjusting the electrodes requires some manual dexterity; these participants may need assistance. Finally, many remote technologies rely on a good WiFi connection to download tasks and upload recorded data.
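To illustrate what established norms would enable, the sketch below converts an individual's P300 latency into a z-score and percentile relative to an age-band reference distribution. Every reference value in it is a hypothetical placeholder (no such norms exist yet, as noted above), and the assumption that latencies are normally distributed within each band is ours.

```python
from statistics import NormalDist

# HYPOTHETICAL placeholder norms (mean, SD) for P300 latency in ms, by age band.
# These are NOT real reference data; no established ERP norms exist yet.
HYPOTHETICAL_P300_NORMS_MS = {
    (55, 64): (330.0, 25.0),
    (65, 74): (345.0, 30.0),
}


def latency_z(latency_ms, age, norms=HYPOTHETICAL_P300_NORMS_MS):
    """z-score and percentile of an individual's P300 latency relative to the
    reference distribution for their age band (assumed normal)."""
    for (low, high), (mean, sd) in norms.items():
        if low <= age <= high:
            z = (latency_ms - mean) / sd
            return z, NormalDist().cdf(z)
    raise ValueError(f"no reference band covers age {age}")


# Usage: a 60-year-old with a 380 ms latency sits 2 SD above the placeholder mean,
# i.e. a longer latency than roughly 98% of that hypothetical reference band.
z, percentile = latency_z(380.0, age=60)
```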

Pathway to healthcare practice: opportunities and challenges

The approaches described above (peripheral blood-based markers associated with neuropathology, passive monitoring of cognition and remote brain assessment) offer potential strategies for detecting cognitive decline. However, deploying these strategies at scale in diverse real-world healthcare settings is subject to several scientific, societal and ethical considerations. In this section, we discuss these challenges and some potential solutions.

Early diagnosis raises complex ethical issues, including the right (not) to know and the challenge of communicating the probabilistic nature of any assessment [160]. Although the smartphone and EEG tools described here can measure cognitive function accurately, they are not yet comparable with established clinical tools. In particular, specificity (the proportion of negative cases correctly identified as negative) needs to be high before a tool for identifying cognitive decline is clinically useful. Identifying cases with few or no symptoms may cause individual distress and unduly burden health systems by creating the ‘worried well’, particularly when treatment options for diseases that cause cognitive decline are limited [161]. The cost of technologies such as dry EEG and plasma biomarker platforms may also impose an economic burden, although these costs should fall if the technologies are manufactured at scale. Many methods have been developed for Western populations: for example, of the studies published between 2000 and 2019 that used automated speech and language processing for AD monitoring, 41% were conducted in English, with most others focusing on Western European languages [84]. It will be important to ensure that these approaches are suitable for low- and middle-income countries, particularly because cognitive decline is becoming a growing problem in those regions [162, 163]. At a more fundamental level, some methods lack any evidence base: a review of 83 available smartphone apps related to the most disabling neuropsychiatric conditions found that only 18% appeared to be evidence-based [93].
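Why specificity matters so much for screening follows from Bayes' theorem: when few of the people tested actually have the condition, even a small false-positive rate produces many false alarms. The sketch below computes the standard metrics from confusion-matrix counts and the positive predictive value (PPV) for a given prevalence. The 5% prevalence and the test characteristics in the usage lines are hypothetical values chosen for illustration.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Accuracy, sensitivity and specificity from confusion-matrix counts."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),  # proportion of true cases detected
        "specificity": tn / (tn + fp),  # proportion of negative cases correctly identified
    }


def positive_predictive_value(sensitivity, specificity, prevalence):
    """Probability that a positive result is a true case (Bayes' theorem)."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


# Hypothetical screening scenario with 5% prevalence among those tested.
ppv_90 = positive_predictive_value(0.90, 0.90, 0.05)  # about 0.32
ppv_99 = positive_predictive_value(0.90, 0.99, 0.05)  # about 0.83
```

With 90% specificity, roughly two of every three positive results in this scenario would be false alarms; raising specificity to 99% reverses that balance, which is the practical sense in which specificity must be high before screening the asymptomatic population.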

An important stepping-stone to real-world implementation is the deployment of scalable methods and tools in large, prospective cohorts of CU participants, and some such studies are already underway. For example, the Early Detection of Neurodegenerative diseases (EDoN) project [164] aims to collect data from passive sensors and easily obtained clinical measures to detect the earliest signatures of dementia. EDoN’s ultimate goal is to develop a digital toolkit that can be deployed at population level for people over age 40. Initially, a range of digital metrics will be recorded; subsequently, a data-driven approach (machine learning) will identify the subset of measures that, in combination, are most predictive. Combining a variety of tools in this way may improve specificity. A Swedish prospective study, BioFINDER (Biomarkers For Identifying Neurodegenerative Disorders Early and Reliably; https://biofinder.se/), seeks to validate blood-based biomarkers for the diagnosis of AD and Parkinson’s disease in primary care settings. Participants in BioFINDER complete a wide range of specialized, gold-standard measures (e.g., neuroimaging) against which scalable methods can be compared.
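A simple way to see how combining tools can raise specificity is the classic rule for two tests applied together. Assuming the tests are conditionally independent given true status (an assumption that rarely holds exactly), calling a case positive only when both tests are positive multiplies the sensitivities and the false-positive rates, whereas calling it positive when either test is positive does the reverse. This is an illustration of the principle only, not EDoN's machine-learning approach; the function names and test characteristics are hypothetical.

```python
def combine_and(se1, sp1, se2, sp2):
    """'Both positive' rule: positive only if both tests are positive.
    Assumes conditional independence of the two tests."""
    return se1 * se2, 1 - (1 - sp1) * (1 - sp2)


def combine_or(se1, sp1, se2, sp2):
    """'Either positive' rule: positive if at least one test is positive.
    Assumes conditional independence of the two tests."""
    return 1 - (1 - se1) * (1 - se2), sp1 * sp2


# Two hypothetical tools, each with sensitivity 0.85 and specificity 0.80.
and_rule = combine_and(0.85, 0.80, 0.85, 0.80)  # about (0.7225, 0.96): specificity rises
or_rule = combine_or(0.85, 0.80, 0.85, 0.80)    # about (0.9775, 0.64): sensitivity rises
```

The trade-off is explicit: the gain in specificity under the 'both positive' rule is paid for with lower sensitivity, which is one reason data-driven weighting of many measures can be preferable to fixed rules.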

Conclusion

In this review, we described approaches for detecting cognitive decline at scale, which vary in their stage of development. Standardization and interoperability need to improve before tools designed to assay specific cognitive functions can be widely deployed. However, blood plasma measures of neuropathology, passive smartphone data collection and resting-state EEG could plausibly be implemented at scale in the near future. It may be possible to provide a direct-to-consumer test using p-tau as a blood-based biomarker to identify those most at risk of cognitive decline [165]. Alternatively, or in addition, passive “digital biomarkers” could be used to monitor cognitive functioning and detect early signs of disease [166]. The methods described here can also be used to identify people at risk of cognitive impairment for inclusion in clinical trials, to monitor the progression of cognitive decline and to assay treatment responses over time. In clinical trials, the ability to monitor cognitive and brain responses frequently (perhaps even daily) would allow researchers to map the evolution of any treatment response over time and potentially to identify individual differences associated with effective medication response. Obtaining behavioral and brain data outside the laboratory may radically change approaches for detecting cognitive decline.
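Frequent measurement makes it possible to estimate each person's own trajectory rather than only group averages. As a minimal sketch, assuming a linear trend is adequate over the monitoring window, the code below fits an ordinary least-squares slope per participant; the data, identifiers and function name are hypothetical, and mixed-effects models would be a more typical choice for real trial data.

```python
import numpy as np


def individual_slopes(series):
    """Ordinary least-squares slope (change per day) for each participant.

    series: dict mapping participant ID -> (days, values) sequences.
    """
    slopes = {}
    for pid, (days, values) in series.items():
        slope, _intercept = np.polyfit(np.asarray(days, float), np.asarray(values, float), 1)
        slopes[pid] = float(slope)
    return slopes


# Usage with two hypothetical participants measured daily for 10 days.
days = list(range(10))
series = {
    "responder": (days, [1.0 + 0.5 * d for d in days]),  # steady improvement
    "non_responder": (days, [4.0] * 10),                 # no change
}
slopes = individual_slopes(series)  # about 0.5 and 0.0 per day
```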

References

Bloom DE, Canning D, Lubet A. Global Population Aging: Facts, Challenges, Solutions & Perspectives. Daedalus. 2015. https://doi.org/10.1162/DAED_a_00332

Wilson RS, Wang T, Yu L, Bennett DA, Boyle PA. Normative cognitive decline in old age. Ann Neurol. 2020;87:816–29. https://doi.org/10.1002/ana.25711

Buckley R, Pascual-Leone A. Age-related cognitive decline is indicative of neuropathology. Ann Neurol. 2020;87:813–15. https://doi.org/10.1002/ana.25733

McDade E, Bednar MM, Brashear HR, Miller DS, Maruff P, Randolph C, et al. The pathway to secondary prevention of Alzheimer’s disease. Alzheimer’s Dement: Transl Res Clin Interventions. 2020;6:12069. https://doi.org/10.1002/trc2.12069

McConathy J, Sheline YI. Imaging biomarkers associated with cognitive decline: a review. Biol Psychiatry. 2015;77:685–92. https://doi.org/10.1016/j.biopsych.2014.08.024

Kirkpatrick RH, Munoz DP, Khalid-Khan S, Booij L. Methodological and clinical challenges associated with biomarkers for psychiatric disease: A scoping review. J Psychiatr Res. 2021;143:572–579. https://doi.org/10.1016/j.jpsychires.2020.11.023

Cummings J, Lee G, Nahed P, Kambar MEZN, Zhong K, Fonseca J, et al. Alzheimer’s disease drug development pipeline: 2022. Alzheimer’s Dement: Transl Res Clin Interventions. 2022;8:e12295.

Hodes JF, Oakley CI, O’Keefe JH, Lu P, Galvin JE, Saif N, et al. Alzheimer’s “prevention” vs. “risk reduction”: transcending semantics for clinical practice. Front Neurol. 2019;9:1179. https://doi.org/10.3389/fneur.2018.01179

Persson S, Saha S, Gerdtham U-G, Toresson H, Trépel D, Jarl J. Healthcare costs of dementia diseases before, during and after diagnosis: Longitudinal analysis of 17 years of Swedish register data. Alzheimer’s Dement. https://doi.org/10.1002/alz.12619

Bynum JP, Rabins PV, Weller W, Niefeld M, Anderson GF, Wu AW. The relationship between a dementia diagnosis, chronic illness, medicare expenditures, and hospital use. J Am Geriatr Soc. 2004;52:187–94. https://doi.org/10.1111/j.1532-5415.2004.52054.x

Mattke S, Cho SK, Bittner T, Hlavka J, Hanson M. Blood-based biomarkers for Alzheimer’s pathology and the diagnostic process for a disease-modifying treatment: Projecting the impact on the cost and wait times. Alzheimers Dement. 2020;12:12081. https://doi.org/10.1002/dad2.12081

Hansson O, Edelmayer RM, Boxer AL, Carrillo MC, Mielke MM, Rabinovici GD et al. The Alzheimer’s Association appropriate use recommendations for blood biomarkers in Alzheimer’s disease. Alzheimers Dement. 2022. https://doi.org/10.1002/alz.12756

McKinney TL, Euler MJ, Butner JE. It’s about time: The role of temporal variability in improving assessment of executive functioning. Clin Neuropsychol. 2020;34:619–642. https://doi.org/10.1080/13854046.2019.1704434

West R, Murphy KJ, Armilio ML, Craik FIM, Stuss DT. Effects of time of day on age differences in working memory. J Gerontology: Ser B. 2002;57:3–10. https://doi.org/10.1093/geronb/57.1.P3

Wilks H, Aschenbrenner AJ, Gordon BA, Balota DA, Fagan AM, Musiek E, et al. Sharper in the morning: Cognitive time of day effects revealed with high-frequency smartphone testing. J Clin Exp Neuropsychol. 2021;43:825–837. https://doi.org/10.1080/13803395.2021.2009447

Sliwinski MJ, Smyth JM, Hofer SM, Stawski RS. Intraindividual coupling of daily stress and cognition. Psychol Aging. 2006;21:545–57. https://doi.org/10.1037/0882-7974.21.3.545

Lewczuk P, Esselmann H, Bibl M, Paul S, Svitek J, Miertschischk J, et al. Electrophoretic separation of amyloid beta peptides in plasma. Electrophoresis. 2004;25:3336–43. https://doi.org/10.1002/elps.200406068

Hampel H, O’Bryant SE, Molinuevo JL, Zetterberg H, Masters CL, Lista S, et al. Blood-based biomarkers for Alzheimer disease: mapping the road to the clinic. Nat Rev Neurol. 2018;14:639–652. https://doi.org/10.1038/s41582-018-0079-7

Henriksen K, O’Bryant SE, Hampel H, Trojanowski JQ, Montine TJ, Jeromin A, et al. The future of blood-based biomarkers for Alzheimer’s disease. Alzheimers Dement. 2014;10:115–31. https://doi.org/10.1016/j.jalz.2013.01.013

Leuzy A, Mattsson-Carlgren N, Palmqvist S, Janelidze S, Dage JL, Hansson O. Blood-based biomarkers for Alzheimer’s disease. EMBO Mol Med. 2022;14:14408. https://doi.org/10.15252/emmm.202114408

Teunissen CE, Verberk IMW, Thijssen EH, Vermunt L, Hansson O, Zetterberg H, et al. Blood-based biomarkers for Alzheimer’s disease: towards clinical implementation. Lancet Neurol. 2022;21:66–77. https://doi.org/10.1016/S1474-4422(21)00361-6

Cullen NC, Leuzy A, Janelidze S, Palmqvist S, Svenningsson AL, Stomrud E, et al. Plasma biomarkers of Alzheimer’s disease improve prediction of cognitive decline in cognitively unimpaired elderly populations. Nat Commun. 2021;12:3555. https://doi.org/10.1038/s41467-021-23746-0

Roda AR, Serra-Mir G, Montoliu-Gaya L, Tiessler L, Villegas S. Amyloid-beta peptide and tau protein crosstalk in Alzheimer’s disease. Neural Regen Res. 2022;17:1666–1674. https://doi.org/10.4103/1673-5374.332127

Vandersteen A, Hubin E, Sarroukh R, De Baets G, Schymkowitz J, Rousseau F, et al. A comparative analysis of the aggregation behavior of amyloid-beta peptide variants. FEBS Lett. 2012;586:4088–93. https://doi.org/10.1016/j.febslet.2012.10.022

Lloret A, Esteve D, Lloret MA, Cervera-Ferri A, Lopez B, Nepomuceno M, et al. When does Alzheimer’s disease really start? The Role of Biomarkers. Int J Mol Sci. 2019. https://doi.org/10.3390/ijms20225536

Lehmann S, Delaby C, Boursier G, Catteau C, Ginestet N, Tiers L, et al. Relevance of Abeta42/40 ratio for detection of Alzheimer disease pathology in clinical routine: the PLMR scale. Front Aging Neurosci. 2018;10:138. https://doi.org/10.3389/fnagi.2018.00138

Janelidze S, Stomrud E, Palmqvist S, Zetterberg H, van Westen D, Jeromin A, et al. Plasma beta-amyloid in Alzheimer’s disease and vascular disease. Sci Rep. 2016;6:26801. https://doi.org/10.1038/srep26801

Nakamura A, Kaneko N, Villemagne VL, Kato T, Doecke J, Dore V, et al. High performance plasma amyloid-beta biomarkers for Alzheimer’s disease. Nature. 2018;554:249–254. https://doi.org/10.1038/nature25456

Lu WH, Giudici KV, Rolland Y, Guyonnet S, Li Y, Bateman RJ, et al. Prospective associations between plasma amyloid-beta 42/40 and frailty in community-dwelling older adults. J Prev Alzheimers Dis. 2021;8:41–47. https://doi.org/10.14283/jpad.2020.60

West T, Kirmess KM, Meyer MR, Holubasch MS, Knapik SS, Hu Y, et al. A blood-based diagnostic test incorporating plasma Abeta42/40 ratio, ApoE proteotype, and age accurately identifies brain amyloid status: findings from a multi-cohort validity analysis. Mol Neurodegener. 2021;16:30. https://doi.org/10.1186/s13024-021-00451-6

Giudici KV, de Souto Barreto P, Guyonnet S, Li Y, Bateman RJ, Vellas B, et al. Assessment of Plasma Amyloid-beta42/40 and Cognitive Decline Among Community-Dwelling Older Adults. JAMA Netw Open. 2020;3:e2028634.

Verberk IMW, Thijssen E, Koelewijn J, Mauroo K, Vanbrabant J, de Wilde A, et al. Combination of plasma amyloid beta(1-42/1-40) and glial fibrillary acidic protein strongly associates with cerebral amyloid pathology. Alzheimers Res Ther. 2020;12:118.

Doecke JD, Perez-Grijalba V, Fandos N, Fowler C, Villemagne VL, Masters CL, et al. Total Abeta42/Abeta40 ratio in plasma predicts amyloid-PET status, independent of clinical AD diagnosis. Neurology. 2020;94:e1580–e91.

Sexton C, Snyder H, Beher D, Boxer AL, Brannelly P, Brion JP, et al. Current directions in tau research: Highlights from Tau 2020. Alzheimers Dement. 2021;18:988–1007. https://doi.org/10.1002/alz.12452

Selkoe DJ. The molecular pathology of Alzheimer’s disease. Neuron. 1991;6:487–98. https://doi.org/10.1016/0896-6273(91)90052-2

Selkoe DJ, Hardy J. The amyloid hypothesis of Alzheimer’s disease at 25 years. EMBO Mol Med. 2016;8:595–608. https://doi.org/10.15252/emmm.201606210

Alonso A, Zaidi T, Novak M, Grundke-Iqbal I, Iqbal K. Hyperphosphorylation induces self-assembly of tau into tangles of paired helical filaments/straight filaments. Proc Natl Acad Sci USA. 2001;98:6923–8. https://doi.org/10.1073/pnas.121119298

Illan-Gala I, Lleo A, Karydas A, Staffaroni AM, Zetterberg H, Sivasankaran R, et al. Plasma Tau and neurofilament light in frontotemporal lobar degeneration and Alzheimer disease. Neurology. 2021;96:671. https://doi.org/10.1212/WNL.0000000000011226

Mattsson N, Zetterberg H, Janelidze S, Insel PS, Andreasson U, Stomrud E, et al. Plasma tau in Alzheimer disease. Neurology. 2016;87:1827–1835. https://doi.org/10.1212/WNL.0000000000003246

Bayoumy S, Verberk IMW, den Dulk B, Hussainali Z, Zwan M, van der Flier WM, et al. Clinical and analytical comparison of six Simoa assays for plasma P-tau isoforms P-tau181, P-tau217, and P-tau231. Alzheimers Res Ther. 2021;13:198. https://doi.org/10.1186/s13195-021-00939-9

Suarez-Calvet M, Karikari TK, Ashton NJ, Lantero Rodriguez J, Mila-Aloma M, Gispert JD, et al. Novel tau biomarkers phosphorylated at T181, T217 or T231 rise in the initial stages of the preclinical Alzheimer’s continuum when only subtle changes in Abeta pathology are detected. EMBO Mol Med. 2020;12:12921. https://doi.org/10.15252/emmm.202012921

Moscoso A, Grothe MJ, Ashton NJ, Karikari TK, Lantero Rodriguez J, Snellman A, et al. Longitudinal associations of blood phosphorylated Tau181 and neurofilament light chain with neurodegeneration in Alzheimer disease. JAMA Neurol. 2021;78:396–406. https://doi.org/10.1001/jamaneurol.2020.4986

Lantero Rodriguez J, Karikari TK, Suarez-Calvet M, Troakes C, King A, Emersic A, et al. Plasma p-tau181 accurately predicts Alzheimer’s disease pathology at least 8 years prior to post-mortem and improves the clinical characterisation of cognitive decline. Acta Neuropathol. 2020;140:267–278. https://doi.org/10.1007/s00401-020-02195-x

Karikari TK, Benedet AL, Ashton NJ, Lantero Rodriguez J, Snellman A, Suarez-Calvet M, et al. Diagnostic performance and prediction of clinical progression of plasma phospho-tau181 in the Alzheimer’s Disease Neuroimaging Initiative. Mol Psychiatry. 2021;26:429–442. https://doi.org/10.1038/s41380-020-00923-z

Palmqvist S, Tideman P, Cullen N, Zetterberg H, Blennow K, Alzheimer’s Disease Neuroimaging Initiative, et al. Prediction of future Alzheimer’s disease dementia using plasma phospho-tau combined with other accessible measures. Nat Med. 2021;27:1034–1042. https://doi.org/10.1038/s41591-021-01348-z

Janelidze S, Mattsson N, Palmqvist S, Smith R, Beach TG, Serrano GE, et al. Plasma P-tau181 in Alzheimer’s disease: relationship to other biomarkers, differential diagnosis, neuropathology and longitudinal progression to Alzheimer’s dementia. Nat Med. 2020;26:379–386. https://doi.org/10.1038/s41591-020-0755-1

Chen SD, Huang YY, Shen XN, Guo Y, Tan L, Dong Q, et al. Longitudinal plasma phosphorylated tau 181 tracks disease progression in Alzheimer’s disease. Transl Psychiatry. 2021;11:356. https://doi.org/10.1038/s41398-021-01476-7

Simren J, Leuzy A, Karikari TK, Hye A, Benedet AL, Lantero-Rodriguez J, et al. The diagnostic and prognostic capabilities of plasma biomarkers in Alzheimer’s disease. Alzheimers Dement. 2021;17:1145–1156. https://doi.org/10.1002/alz.12283

Salami A, Adolfsson R, Andersson M, Blennow K, Lundquist A, Adolfsson AN, et al. Association of APOE ε4 and Plasma p-tau181 with Preclinical Alzheimer’s Disease and Longitudinal Change in Hippocampus Function. J Alzheimers Dis. 2022;85:1309–1320. https://doi.org/10.3233/JAD-210673

Therriault J, Benedet AL, Pascoal TA, Lussier FZ, Tissot C, Karikari TK, et al. Association of plasma P-tau181 with memory decline in non-demented adults. Brain Commun. 2021;3:136. https://doi.org/10.1093/braincomms/fcab136

Ashton NJ, Pascoal TA, Karikari TK, Benedet AL, Lantero-Rodriguez J, Brinkmalm G, et al. Plasma p-tau231: a new biomarker for incipient Alzheimer’s disease pathology. Acta Neuropathol. 2021;141:709–724. https://doi.org/10.1007/s00401-021-02275-6

Mattsson-Carlgren N, Janelidze S, Bateman RJ, Smith R, Stomrud E, Serrano GE, et al. Soluble P-tau217 reflects amyloid and tau pathology and mediates the association of amyloid with tau. EMBO Mol Med. 2021;13:14022. https://doi.org/10.15252/emmm.202114022

Mattsson N, Cullen NC, Andreasson U, Zetterberg H, Blennow K. Association between longitudinal plasma neurofilament light and neurodegeneration in patients with Alzheimer disease. JAMA Neurol. 2019;76:791–799. https://doi.org/10.1001/jamaneurol.2019.0765

Leuzy A, Smith R, Cullen NC, Strandberg O, Vogel JW, Binette AP, et al. Biomarker-based prediction of longitudinal tau positron emission tomography in Alzheimer disease. JAMA Neurol. 2021;79:149–158. https://doi.org/10.1001/jamaneurol.2021.4654

Janelidze S, Berron D, Smith R, Strandberg O, Proctor NK, Dage JL, et al. Associations of plasma phospho-Tau217 levels with Tau positron emission tomography in early Alzheimer disease. JAMA Neurol. 2021;78:149–156. https://doi.org/10.1001/jamaneurol.2020.4201

Petzold A. Neurofilament phosphoforms: surrogate markers for axonal injury, degeneration and loss. J Neurol Sci. 2005;233:183–98. https://doi.org/10.1016/j.jns.2005.03.015

Gaiottino J, Norgren N, Dobson R, Topping J, Nissim A, Malaspina A, et al. Increased neurofilament light chain blood levels in neurodegenerative neurological diseases. PLoS One. 2013;8:75091. https://doi.org/10.1371/journal.pone.0075091

Silva-Spinola A, Lima M, Leitao MJ, Duraes J, Tabuas-Pereira M, Almeida MR, et al. Serum neurofilament light chain as a surrogate of cognitive decline in sporadic and familial frontotemporal dementia. Eur J Neurol. 2022;29:36–46. https://doi.org/10.1111/ene.15058

Egle M, Loubiere L, Maceski A, Kuhle J, Peters N, Markus HS. Neurofilament light chain predicts future dementia risk in cerebral small vessel disease. J Neurol Neurosurg Psychiatry. 2021. https://doi.org/10.1136/jnnp-2020-325681

Huang Y, Huang C, Zhang Q, Shen T, Sun J. Serum NFL discriminates Parkinson disease from essential tremor and reflect motor and cognition severity. BMC Neurol. 2022;22:39. https://doi.org/10.1186/s12883-022-02558-9

Zhao Y, Xin Y, Meng S, He Z, Hu W. Neurofilament light chain protein in neurodegenerative dementia: a systematic review and network meta-analysis. Neurosci Biobehav Rev. 2019;102:123–138. https://doi.org/10.1016/j.neubiorev.2019.04.014

Hall JR, Johnson LA, Peterson M, Julovich D, Como T, O’Bryant SE. Relationship of Neurofilament Light (NfL) and cognitive performance in a sample of Mexican Americans with normal cognition, mild cognitive impairment and dementia. Curr Alzheimer Res. 2020;17:1214–1220. https://doi.org/10.2174/1567205018666210219105949

Delaby C, Julian A, Page G, Ragot S, Lehmann S, Paccalin M. NFL strongly correlates with TNF-R1 in the plasma of AD patients, but not with cognitive decline. Sci Rep. 2021;11:10283. https://doi.org/10.1038/s41598-021-89749-5

Mielke MM, Syrjanen JA, Blennow K, Zetterberg H, Vemuri P, Skoog I, et al. Plasma and CSF neurofilament light: relation to longitudinal neuroimaging and cognitive measures. Neurology. 2019;93:252. https://doi.org/10.1212/WNL.0000000000007767

de Wolf F, Ghanbari M, Licher S, McRae-McKee K, Gras L, Weverling GJ, et al. Plasma tau, neurofilament light chain and amyloid-beta levels and risk of dementia; a population-based cohort study. Brain. 2020;143:1220–1232. https://doi.org/10.1093/brain/awaa054

Beydoun MA, Noren Hooten N, Beydoun HA, Maldonado AI, Weiss J, Evans MK, et al. Plasma neurofilament light as a potential biomarker for cognitive decline in a longitudinal study of middle-aged urban adults. Transl Psychiatry. 2021;11:436. https://doi.org/10.1038/s41398-021-01563-9

Middeldorp J, Hol EM. GFAP in health and disease. Prog Neurobiol. 2011;93:421–43. https://doi.org/10.1016/j.pneurobio.2011.01.005

Pereira JB, Janelidze S, Smith R, Mattsson-Carlgren N, Palmqvist S, Teunissen CE, et al. Plasma GFAP is an early marker of amyloid-beta but not tau pathology in Alzheimer’s disease. Brain. 2021;144:3505–3516. https://doi.org/10.1093/brain/awab223

Chatterjee P, Pedrini S, Stoops E, Goozee K, Villemagne VL, Asih PR, et al. Plasma glial fibrillary acidic protein is elevated in cognitively normal older adults at risk of Alzheimer’s disease. Transl Psychiatry. 2021;11:27. https://doi.org/10.1038/s41398-020-01137-1

Verberk IMW, Laarhuis MB, van den Bosch KA, Ebenau JL, van Leeuwenstijn M, Prins ND, et al. Serum markers glial fibrillary acidic protein and neurofilament light for prognosis and monitoring in cognitively normal older people: a prospective memory clinic-based cohort study. Lancet Healthy Longev. 2021;2:87. https://doi.org/10.1016/s2666-7568(20)30061-1

Cicognola C, Janelidze S, Hertze J, Zetterberg H, Blennow K, Mattsson-Carlgren N, et al. Plasma glial fibrillary acidic protein detects Alzheimer pathology and predicts future conversion to Alzheimer dementia in patients with mild cognitive impairment. Alzheimers Res Ther. 2021;13:68. https://doi.org/10.1186/s13195-021-00804-9

Cullen NC, Leuzy A, Palmqvist S, Janelidze S, Stomrud E, Pesini P, et al. Individualized prognosis of cognitive decline and dementia in mild cognitive impairment based on plasma biomarker combinations. Nat Aging. 2021;1:114–123. https://doi.org/10.1038/s43587-020-00003-5

Milà-Alomà M, Ashton NJ, Shekari M, Salvadó G, Ortiz-Romero P, Montoliu-Gaya L, et al. Plasma p-tau231 and p-tau217 as state markers of amyloid-β pathology in preclinical Alzheimer’s disease. Nat Med. 2022;28:1797–1801. https://doi.org/10.1038/s41591-022-01925-w

Schindler SE, Bollinger JG, Ovod V, Mawuenyega KG, Li Y, Gordon BA, et al. High-precision plasma β-amyloid 42/40 predicts current and future brain amyloidosis. Neurology. 2019;93:1647. https://doi.org/10.1212/WNL.0000000000008081

Servick K. Alzheimer’s drug approval spotlights blood tests. Science. 2021;373:373–374. https://doi.org/10.1126/science.373.6553.373

Verberk IMW, Misdorp EO, Koelewijn J, Ball AJ, Blennow K, Dage JL, et al. Characterization of pre-analytical sample handling effects on a panel of Alzheimer’s disease–related blood-based biomarkers: Results from the Standardization of Alzheimer’s Blood Biomarkers (SABB) working group. Alzheimers Dement. 2022;18:1484–1497. https://doi.org/10.1002/alz.12510

O’Bryant SE, Gupta V, Henriksen K, Edwards M, Jeromin A, Lista S, et al. Guidelines for the standardization of preanalytic variables for blood-based biomarker studies in Alzheimer’s disease research. Alzheimers Dement. 2015;11:549–60.

Pew Research Center. Mobile fact sheet. 2022. https://www.pewresearch.org/internet/fact-sheet/mobile/

Faverio M. Share of those 65 and older who are tech users has grown in the past decade. Pew Research Center. 2022. https://www.pewresearch.org/fact-tank/2022/01/13/share-of-those-65-and-older-who-are-tech-users-has-grown-in-the-past-decade/

Kourtis LC, Regele OB, Wright JM, Jones GB. Digital biomarkers for Alzheimer’s disease: The mobile/wearable devices opportunity. npj Digital Med. 2019;2:1–9.

Piau A, Wild K, Mattek N, Kaye J. Current State of Digital Biomarker Technologies for Real-Life, Home-Based Monitoring of Cognitive Function for Mild Cognitive Impairment to Mild Alzheimer Disease and Implications for Clinical Care: Systematic Review. J Med Internet Res. 2019;21:e12785.

Aggarwal NT, Wilson RS, Beck TL, Bienias JL, Bennett DA. Motor Dysfunction in Mild Cognitive Impairment and the Risk of Incident Alzheimer Disease. Arch Neurol. 2006;63:1763–1769.

Buracchio T, Dodge HH, Howieson D, Wasserman D, Kaye J. The Trajectory of Gait Speed Preceding Mild Cognitive Impairment. Arch Neurol. 2010;67:980–986.

de la Fuente Garcia S, Ritchie CW, Luz S. Artificial intelligence, speech, and language processing approaches to monitoring Alzheimer’s disease: a systematic review. J Alzheimers Dis. 2020;78:1547–1574.

Rycroft SS, Quach LT, Ward RE, Pedersen MM, Grande L, Bean JF. The relationship between cognitive impairment and upper extremity function in older primary care patients. J Gerontol A Biol Sci Med Sci. 2019;74:568–574.

Robin J, Xu M, Kaufman LD, Simpson W. Using digital speech assessments to detect early signs of cognitive impairment. Front Digit Health. 2021;3:749758.

Konig A, Satt A, Sorin A, Hoory R, Derreumaux A, David R, et al. Use of speech analyses within a mobile application for the assessment of cognitive impairment in elderly people. Curr Alzheimer Res. 2018;15:120–129.

Yamada Y, Shinkawa K, Shimmei K. Atypical repetition in daily conversation on different days for detecting Alzheimer disease: evaluation of phone-call data from a regular monitoring service. JMIR Ment Health. 2020;7:e16790.

Ntracha A, Iakovakis D, Hadjidimitriou S, Charisis VS, Tsolaki M, Hadjileontiadis LJ. Detection of mild cognitive impairment through natural language and touchscreen typing processing. Front Digit Health. 2020;2:567158.

Mc Ardle R, Del Din S, Galna B, Thomas A, Rochester L. Differentiating dementia disease subtypes with gait analysis: feasibility of wearable sensors? Gait Posture. 2020;76:372–376.

Giannouli E, Bock O, Zijlstra W. Cognitive functioning is more closely related to real-life mobility than to laboratory-based mobility parameters. Eur J Ageing. 2018;15:57–65.

Josephy-Hernandez S, Norise C, Han JY, Smith KM. Survey on acceptance of passive technology monitoring for early detection of cognitive impairment. Digit Biomark. 2021;5:9–15.

Minen MT, Gopal A, Sahyoun G, Stieglitz E, Torous J. The functionality, evidence, and privacy issues around smartphone apps for the top neuropsychiatric conditions. J Neuropsychiatry Clin Neurosci. 2021;33:72–79.

Sila-Nowicka K, Thakuriah P. The trade-off between privacy and geographic data resolution. A case of GPS trajectories combined with the social survey results. Int Arch Photogramm Remote Sens Spat Inf Sci. 2016;XLI-B2:535–542.

Dubois B, Villain N, Frisoni G, Rabinovici G, Sabbagh M, Cappa S, et al. Clinical diagnosis of Alzheimer’s disease: recommendations of the International Working Group. Lancet Neurol. 2021;20:484–496.

Glymour MM, Brickman AM, Kivimaki M, Mayeda ER, Chêne G, Dufouil C, et al. Will biomarker-based diagnosis of Alzheimer’s disease maximize scientific progress? Evaluating proposed diagnostic criteria. Eur J Epidemiol. 2018;33:607–612.

Singh S, Strong RW, Jung L, Li FH, Grinspoon L, Scheuer LS, et al. The TestMyBrain digital neuropsychology toolkit: development and psychometric characteristics. J Clin Exp Neuropsychol. 2021;43:786–795.

Papp KV, Samaroo A, Chou HC, Buckley R, Schneider OR, Hsieh S, et al. Unsupervised mobile cognitive testing for use in preclinical Alzheimer’s disease. Alzheimers Dement (Amst). 2021;13:e12243.

Rast P, MacDonald SWS, Hofer SM. Intensive measurement designs for research on aging. GeroPsych. 2012;25:45–55.

Lancaster C, Koychev I, Blane J, Chinner A, Chatham C, Taylor K, et al. Gallery Game: Smartphone-based assessment of long-term memory in adults at risk of Alzheimer’s disease. J Clin Exp Neuropsychol. 2020;42:329–343.

Bielak AAM, Hultsch DF, Strauss E, MacDonald SWS, Hunter MA. Intraindividual variability in reaction time predicts cognitive outcomes 5 years later. Neuropsychology. 2010;24:731–741.

Duchek JM, Balota DA, Tse CS, Holtzman DM, Fagan AM, Goate AM, et al. The utility of intraindividual variability in selective attention tasks as an early marker for Alzheimer’s disease. Neuropsychology. 2009;23:746–758.

Sliwinski MJ, Mogle JA, Hyun J, Munoz E, Smyth JM, Lipton RB. Reliability and validity of ambulatory cognitive assessments. Assessment. 2018;25:14–30.

Brewster PWH, Rush J, Ozen L, Vendittelli R, Hofer SM. Feasibility and Psychometric Integrity of Mobile Phone-Based Intensive Measurement of Cognition in Older Adults. Exp Aging Res. 2021;47:303–321.

Germine L, Strong RW, Singh S, Sliwinski MJ. Toward dynamic phenotypes and the scalable measurement of human behavior. Neuropsychopharmacol. 2021;46:209–216.

Scharfen J, Peters JM, Holling H. Retest effects in cognitive ability tests: A meta-analysis. Intelligence. 2018;67:44–66.

Gillan CM, Rutledge RB. Smartphones and the Neuroscience of Mental Health. Annu Rev Neurosci. 2021;44:129–151.

Lumsden J, Skinner A, Woods AT, Lawrence NS, Munafò M. The effects of gamelike features and test location on cognitive test performance and participant enjoyment. PeerJ. 2016;4:e2184.

Valladares-Rodríguez S, Pérez-Rodríguez R, Anido-Rifón L, Fernández-Iglesias M. Trends on the application of serious games to neuropsychological evaluation: A scoping review. J Biomed Inform. 2016;64:296–319.

Leduc-McNiven K, White B, Zheng H, McLeod DR, Friesen RM. Serious games to assess mild cognitive impairment: ‘The game is the assessment’. Res Rev Insights. 2018;2.

Isernia S, Cabinio M, Di Tella S, Pazzi S, Vannetti F, Gerli F, et al. Diagnostic validity of the smart aging serious game: an innovative tool for digital phenotyping of mild neurocognitive disorder. J Alzheimers Dis. 2021;83:1789–1801.

Tong T, Chignell M, Tierney MC, Lee J. A serious game for clinical assessment of cognitive status: validation study. JMIR Serious Games. 2016;4:e7.

Valladares-Rodriguez S, Fernández-Iglesias MJ, Anido-Rifón L, Facal D, Pérez-Rodríguez R. Episodix: a serious game to detect cognitive impairment in senior adults. A psychometric study. PeerJ. 2018;6:e5478.

Zygouris S, Giakoumis D, Votis K, Doumpoulakis S, Ntovas K, Segkouli S, et al. Can a virtual reality cognitive training application fulfill a dual role? Using the virtual supermarket cognitive training application as a screening tool for mild cognitive impairment. J Alzheimers Dis. 2015;44:1333–1347.

Pedersen MK, Díaz CMC, Alba-Marrugo MA, Amidi A, Basaiawmoit RV, Bergenholtz C, et al. Cognitive abilities in the wild: Population-scale game-based cognitive assessment. arXiv preprint. 2021. http://arxiv.org/abs/2009.05274

Shute VJ, Wang L, Greiff S, Zhao W, Moore G. Measuring problem solving skills via stealth assessment in an engaging video game. Comput Hum Behav. 2016;63:106–117.

Coughlan G, Coutrot A, Khondoker M, Minihane AM, Spiers H, Hornberger M. Toward personalized cognitive diagnostics of at-genetic-risk Alzheimer’s disease. PNAS. 2019;116:9285–9292.

Mishra J, Anguera JA, Gazzaley A. Video Games for Neuro-Cognitive Optimization. Neuron. 2016;90:214–218.

Hsu W-Y, Rowles W, Anguera JA, Zhao C, Anderson A, Alexander A, et al. Application of an adaptive, digital, game-based approach for cognitive assessment in multiple sclerosis: observational study. J Med Internet Res. 2021;23:e24356.

Germine L, Reinecke K, Chaytor NS. Digital neuropsychology: challenges and opportunities at the intersection of science and software. Clin Neuropsychol. 2019;33:271–286.

Van Patten R. Introduction to the special issue. Neuropsychology from a distance: Psychometric properties and clinical utility of remote neurocognitive tests. J Clin Exp Neuropsychol. 2021;43:767–77.

Lau-Zhu A, Lau MPH, McLoughlin G. Mobile EEG in research on neurodevelopmental disorders: opportunities and challenges. Dev Cogn Neurosci. 2019;36:100635. https://doi.org/10.1016/j.dcn.2019.100635

Babiloni C, Del Percio C, Lizio R, Marzano N, Infarinato F, Soricelli A, et al. Cortical sources of resting state electroencephalographic alpha rhythms deteriorate across time in subjects with amnesic mild cognitive impairment. Neurobiol Aging. 2014;35:130–42. https://doi.org/10.1016/j.neurobiolaging.2013.06.019

Dubois B, Epelbaum S, Nyasse F, Bakardjian H, Gagliardi G, Uspenskaya O, et al. Cognitive and neuroimaging features and brain β-amyloidosis in individuals at risk of Alzheimer’s disease (INSIGHT-preAD): a longitudinal observational study. Lancet Neurol. 2018;17:335–346. https://doi.org/10.1016/S1474-4422(18)30029-2

Babiloni C, Lizio R, Del Percio C, Marzano N, Soricelli A, Salvatore E, et al. Cortical sources of resting state EEG rhythms are sensitive to the progression of early stage Alzheimer’s disease. J Alzheimers Dis. 2013;34:1015–35. https://doi.org/10.3233/JAD-121750

Babiloni C, Blinowska K, Bonanni L, Cichocki A, De Haan W, Del Percio C, et al. What electrophysiology tells us about Alzheimer’s disease: a window into the synchronization and connectivity of brain neurons. Neurobiol Aging. 2020;85:58–73. https://doi.org/10.1016/j.neurobiolaging.2019.09.008

Horvath A, Szucs A, Csukly G, Sakovics A, Stefanics G, Kamondi A. EEG and ERP biomarkers of Alzheimer’s disease: a critical review. Front Biosci (Landmark Ed). 2018;23:183–220. https://doi.org/10.2741/4587

Rodrigues PM, Bispo BC, Garrett C, Alves D, Teixeira JP, Freitas D. Lacsogram: A new EEG tool to diagnose Alzheimer’s disease. IEEE J Biomed Health Inform. 2021;25:3384–3395. https://doi.org/10.1109/JBHI.2021.3069789

Choi J, Ku B, You YG, et al. Resting-state prefrontal EEG biomarkers in correlation with MMSE scores in elderly individuals. Sci Rep. 2019;9:10468. https://doi.org/10.1038/s41598-019-46789-2

van Dinteren R, Arns M, Jongsma MLA, Kessels RPC. P300 development across the lifespan: a systematic review and meta-analysis. PLoS One. 2014;9:e87347. https://doi.org/10.1371/journal.pone.0087347

Twomey DM, Murphy PR, Kelly SP, O’Connell RG. The classic P300 encodes a build-to-threshold decision variable. Eur J Neurosci. 2015;42:1636–43. https://doi.org/10.1111/ejn.12936

Paitel ER, Samii MR, Nielson KA. A systematic review of cognitive event-related potentials in mild cognitive impairment and Alzheimer’s disease. Behav Brain Res. 2021;396:112904. https://doi.org/10.1016/j.bbr.2020.112904

Pavarini SCI, Brigola AG, Luchesi BM, Souza ÉN, Rossetti ES, Fraga FJ, et al. O uso do P300 como ferramenta para avaliação do processamento cognitivo em envelhecimento saudável [The use of P300 as a tool for assessing cognitive processing in healthy aging]. Dement Neuropsychol. 2018;12:1–11. https://doi.org/10.1590/1980-57642018dn12-010001

Melynyte S, Wang GY, Griskova-Bulanova I. Gender effects on auditory P300: A systematic review. Int J Psychophysiol. 2018. https://doi.org/10.1016/j.ijpsycho.2018.08.009

Fruehwirt W, Dorffner G, Roberts S, Gerstgrasser M, Grossegger D, Schmidt R, et al. Associations of event-related brain potentials and Alzheimer’s disease severity: a longitudinal study. Prog Neuro-Psychopharmacol Biol Psychiatry. 2019;92:31–38. https://doi.org/10.1016/j.pnpbp.2018.12.013

Cintra MTG, Ávila RT, Soares TO, Cunha LCM, Silveira KD, de Moraes EN, et al. Increased N200 and P300 latencies in cognitively impaired elderly carrying ApoE ε-4 allele. Int J Geriatr Psychiatry. 2018.

Porcaro C, Balsters JH, Mantini D, Robertson IH, Wenderoth N. P3b amplitude as a signature of cognitive decline in the older population: An EEG study enhanced by Functional Source Separation. Neuroimage. 2019;184:535–546. https://doi.org/10.1016/j.neuroimage.2018.09.057

Lee MS, Lee SH, Moon EO, Moon YJ, Kim S, Kim SH, et al. Neuropsychological correlates of the P300 in patients with Alzheimer’s disease. Prog Neuro-Psychopharmacol Biol Psychiatry. 2013;40:62–9. https://doi.org/10.1016/j.pnpbp.2012.08.009

Bonanni L, Franciotti R, Onofrj V, Anzellotti F, Mancino E, Monaco D, et al. Revisiting P300 cognitive studies for dementia diagnosis: early dementia with Lewy bodies (DLB) and Alzheimer disease (AD). Neurophysiol Clin. 2010;40:255–65. https://doi.org/10.1016/j.neucli.2010.08.001

Dimitriadis SI, Laskaris NA, Bitzidou MP, Tarnanas I, Tsolaki MN. A novel biomarker of amnestic MCI based on dynamic cross-frequency coupling patterns during cognitive brain responses. Front Neurosci. 2015;9:350. https://doi.org/10.3389/fnins.2015.00350

Näätänen R, Kujala T, Kreegipuu K, Carlson S, Escera C, Baldeweg T, et al. The mismatch negativity: An index of cognitive decline in neuropsychiatric and neurological diseases and in ageing. Brain. 2011;134:3435–53. https://doi.org/10.1093/brain/awr064

Lindín M, Correa K, Zurrón M, Díaz F. Mismatch negativity (MMN) amplitude as a biomarker of sensory memory deficit in amnestic mild cognitive impairment. Front Aging Neurosci. 2013;5:79. https://doi.org/10.3389/fnagi.2013.00079

Ruzzoli M, Pirulli C, Mazza V, Miniussi C, Brignani D. The mismatch negativity as an index of cognitive decline for the early detection of Alzheimer’s disease. Sci Rep. 2016;6:33167. https://doi.org/10.1038/srep33167

Laptinskaya D, Thurm F, Küster OC, Fissler P, Schlee W, Kolassa S, et al. Auditory memory decay as reflected by a new mismatch negativity score is associated with episodic memory in older adults at risk of dementia. Front Aging Neurosci. 2018. https://doi.org/10.3389/fnagi.2018.00005

Maskeliunas R, Damasevicius R, Martisius I, Vasiljevas M. Consumer-grade EEG devices: are they usable for control tasks? PeerJ. 2016. https://doi.org/10.7717/peerj.1746

Krigolson OE, Williams CC, Norton A, Hassall CD, Colino FL. Choosing MUSE: Validation of a low-cost, portable EEG system for ERP research. Front Neurosci. 2017;11:109. https://doi.org/10.3389/fnins.2017.00109

Kuziek JWP, Shienh A, Mathewson KE. Transitioning EEG experiments away from the laboratory using a Raspberry Pi 2. J Neurosci Methods. 2017;277:75–82. https://doi.org/10.1016/j.jneumeth.2016.11.013

McWilliams EC, Barbey FM, Dyer JF, Islam MN, McGuinness B, Murphy B, et al. Feasibility of Repeated Assessment of Cognitive Function in Older Adults Using a Wireless, Mobile, Dry-EEG Headset and Tablet-Based Games. Front Psychiatry. 2021;12:574482. https://doi.org/10.3389/fpsyt.2021.574482

Cruz-Garza JG, Brantley JA, Nakagome S, Kontson K, Megjhani M, Robleto D, et al. Deployment of mobile EEG technology in an art museum setting: Evaluation of signal quality and usability. Front Hum Neurosci. 2017. https://doi.org/10.3389/fnhum.2017.00527