Abstract

Aperiodic sinusoidal patterns that are cast by a GOBO (GOes Before Optics) projector are a powerful tool for optically measuring the surface topography of moving or deforming objects with very high speed and accuracy. We optimised the first experimental setup that we were able to measure inflating car airbags at frame rates of more than 50 kHz while achieving a 3D point standard deviation of ~500 µm. Here, we theoretically investigate the method of GOBO projection of aperiodic sinusoidal fringes. In a simulation-based performance analysis, we examine the parameters that influence the accuracy of the measurement result and identify an optimal pattern design that yields the highest measurement accuracy. We compare the results with those that were obtained via GOBO projection of phase-shifted sinusoidal fringes. Finally, we experimentally verify the theoretical findings. We show that the proposed technique has several advantages over conventional fringe projection techniques, as the easy-to-build and cost-effective GOBO projector can provide a high radiant flux, allows high frame rates, and can be used over a wide spectral range.

Similar content being viewed by others

Introduction

Measuring the three-dimensional (3D) topography of macroscopic objects by using structured light requires

-

(i)

the (sequential) projection of N ≥ 1 pattern(s) onto the object and

-

(ii)

the simultaneous recording of the pattern(s) that are modulated by the object topography.

Years of research and development have shown that the accuracy that can be achieved by such pattern projection systems depends directly on the number N of projected patterns1,2,3. Along with the increased demands on measurement accuracy, in recent years, requirements on measurement speed have also risen, which necessitate high-speed pattern projection and recording and fast computation and evaluation. In particular, dynamically moving or deforming objects are to be measured.

In general, well-known algorithms for determining 3D object coordinates by evaluating projected patterns are based on detecting two-dimensional (2D) point correspondences between two cameras or between one camera and the projector4,5,6,7. Corresponding points are defined as 2D sensor points that are images of the same 3D object point. When using a series of N patterns, triangulation algorithms require temporal consistency of these point correspondences during the period NT = N/f, where f = T−1 is the projection and recording frame rate. In dynamic measurement situations, i.e., if the measurement object and the sensor system move relative to each other, this rigid assignment will not be satisfied.

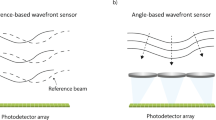

To re-establish a quasi-static measurement scenario, two approaches are possible: first, methodological modifications could be made, e.g., reducing the number of patterns N and, therefore, the period NT; projecting alternative patterns; or compensating for the relative movement. In particular, single-shot techniques (N = 1), such as Fourier transform profilometry8,9, multi-line triangulation10, and wave grid-based active stereo11, are popular and efficient ways to minimise the measurement time. Second, the projector and camera frame rate could be significantly increased. The minimum of the two frame rates limits the measurement speed. In contrast to cameras with high sensitivity, frame rate, and resolution (several kHz at megapixel resolution), which have been commercially available for some time, conventional projectors are limited in terms of speed (especially in 8-bit greyscale mode), radiant flux, and applicable light spectral range12,13,14. Thus, the focus is on improving traditional projection systems, along with making potentially necessary changes in the design of the projected patterns.

In addition to the well-known and extensively studied phase-shifting fringe projection and phase value calculation15,16,17, 3D object coordinates can be determined by evaluating a pattern sequence via temporal correlation18,19. At each image point (x(1),y(1)) in camera 1, a temporal grey value sequence \(I_1^{\left( 1 \right)}, \ldots ,I_N^{\left( 1 \right)}\) is measured and correlated with the grey value stack \(I_1^{\left( 2 \right)}, \ldots ,I_N^{\left( 2 \right)}\) of each pixel (x(2),y(2)) in camera 2 according to the normalised cross-correlation:

where \(\overline {I^{\left( j \right)}}\) is the arithmetic mean of the grey value sequence in camera j. Corresponding points are obtained by maximising the correlation coefficient ρ. If the system parameters are calibrated, 3D object coordinates can be calculated via triangulation of corresponding points20,21.

In contrast to phase-shifting methods, determining corresponding points via normalised cross-correlation does not require any knowledge of the pattern design or the variation between successive patterns. To be suitable for (dynamic) 3D measurements, the only prerequisites are a significant temporal variation of the intensity distribution and spatial frequencies that match the other system parameters. The camera resolution, magnitude of the pattern variation, and spatial frequencies of the patterns should be fine-tuned to minimise disturbing effects and obtain the optimum reconstruction accuracy.

Aperiodic sinusoidal fringes are a special type of temporal pattern coding22. In contrast to two-dimensionally varying patterns, such as speckle patterns23,24, aperiodic sinusoidal fringes vary solely in one dimension:

with spatially and temporally varying offset ai(x), amplitude bi(x), period length 2π/ci(x), and phase shift di(x). The loss of coding information in one dimension is compensated for by making use of the sensor geometry. Based on the extrinsic and intrinsic camera parameters, the search space for point correspondences can be reduced to so-called epipolar lines20,21. Then, an intensity variation along these lines is sufficient, i.e., the aperiodic sinusoidal fringes should be approximately perpendicular to the epipolar lines.

One novel approach to high-speed pattern projection is the GOBO projection of aperiodic sinusoidal fringes25. In general, GOBO projectors consist of a light source, a light collector, a slide (GOBO = GOes Before Optics), and imaging optics. Changing the projected patterns can be realised by moving the GOBO, e.g., by rotating a GOBO wheel. To project aperiodic sinusoidal fringes, the GOBO wheel is equipped with aperiodic binary fringes, the projected image is slightly defocused, and the GOBO wheel is revolving during the camera exposure time. In this way, the wheel can be rotated continuously instead of in start/stop operation. Furthermore, the projector does not need to be synchronised with the cameras.

When using an appropriate light source, a GOBO projector can provide a radiant flux of several 100 W, thereby allowing for extremely low camera exposure times in the range of a few microseconds. With a 3D sensor that comprises a GOBO projector and two high-speed cameras, we were able to three-dimensionally capture highly dynamic processes, such as a soccer ball kick. The system enabled us to reconstruct 1300 independent point clouds per second at a resolution of 1 megapixel25. Higher frame rates of more than 50 kHz can be achieved when reducing the camera resolution and adjusting the rotational speed of the GOBO wheel accordingly.

After demonstrating the suitability of a GOBO projector for high-speed 3D measurements via mainly qualitative studies, quantitative investigations are necessary. In this paper, we theoretically study the dependency of the 3D reconstruction quality on various parameters of the GOBO projection-based system, e.g., the GOBO wheel’s rotational speed and the cameras’ exposure time.

Results

The quality of a 3D point cloud can be characterised by two crucial indicators: accuracy and completeness. When measuring an object with a GOBO projection-based sensor, occlusions might restrict the surface area that is covered by both the projector and the cameras, which limits the maximum number of points that can be reconstructed. The completeness p of a 3D point cloud specifies how many of these points have been correctly determined. The accuracy can be described by the standard deviation σ3D of non-outlier points from the known surface. Naturally, the completeness should be as high as possible, i.e., p = 100%, and the standard deviation should be as low as possible. Therefore, the parameters of a GOBO projection-based 3D sensor, such as the distance between the cameras, the working distance, the number of strips and slits in the GOBO wheel, and its rotational speed, must be carefully designed to match one another.

Table 1 summarises the parameters of a GOBO projection-based 3D sensor, which affect the accuracy and the completeness of the 3D reconstruction. Some of these variables are related to each other, e.g., the number of strips and slits in the GOBO wheel and the average angle that is covered by one strip or slit. The third column lists values that are realised in the current setups. To facilitate understanding of the parameters, Fig. 1 illustrates some of the variables that are shown in Table 1. Figure 1a shows a schematic top view of the camera-projector-camera arrangement. The projection centre, which is denoted by P, should be midway between the two camera centres, which are denoted as C1 and C2. The principal rays of the cameras and the projector intersect at the centre of the cuboid measurement volume. Figure 1b shows an exemplary GOBO wheel with 36 aperiodic binary fringes. Only a square area of size a × a is illuminated and imaged into the measurement volume. According to their frame rate f = T−1, both cameras start acquiring an image at any time t0 + kT, \(k \in {\Bbb N}\). Throughout the exposure time texp, the GOBO wheel is continuously rotating through an angle of texpω.

Schematic illustration of a GOBO projection-based 3D sensor. Definitions of some of the variables that are listed in Table 1 by means of a a top view of the camera-projector-camera arrangement and b an exemplary GOBO wheel with aperiodic binary fringes

Aperiodic sinusoidal patterns

Using the simulation framework that is presented in the Methods section, we generated several thousand GOBO wheels with random parameters c (the ratio of the maximum and minimum strip or slit widths), σblur (the degree of defocusing of the GOBO wheel), and n (the number of illuminated strips + the number of illuminated slits). Then, we rendered camera images according to random parameters ω (the rotational speed of the GOBO wheel) and e (the ratio of camera exposure time texp and period T), added noise that corresponded to four signal-to-noise ratios (SNRs), and calculated 3D point clouds. For the exclusive presence of quantisation noise (SNR ≈ 29 dB), Fig. 2a shows the results that have standard deviations of σ3D = 50 µm or lower, which are represented in parallel coordinates. Parameter sets that lead to a completeness of p = 100% are coloured according to the standard deviation. Parameter sets that result in a completeness of p < 100% are shown in black (background). For some of the parameters, the green curves, which correspond to small standard deviations, are concentrated around certain values (see the orange rectangles).

a, d p and σ3D as functions of randomly varied parameters c, σblur, n, ω, and e. b The minimum standard deviation σ3D, which is shown for each of the five parameters. c, e A camera image of the projection of the optimal GOBO pattern onto a plane. f The 3D point standard deviation σ3D as a function of the number of aperiodic sinusoidal patterns (solid lines) and phase-shifted sine-like patterns (dashed lines) that are used for reconstruction

For each of five parameters, namely, c, σblur, n, ω, and e, Fig. 2b shows the minimum standard deviation σ3D of the point clouds with a completeness p = 100% that have been reconstructed during the simulation. In this representation, the optimal ranges become apparent, which are indicated by orange rectangles. They enable the derivation of general guidelines for designing an optimum GOBO wheel for a specified sensor:

-

Based on the optimum range of the number n of illuminated strips and slits, the average fringe pitch should be approximately 22 px in the camera images. A larger fringe width results in a reduced measurement accuracy, while a smaller fringe width reduces the uniqueness of the sequence, thereby leading to a lower completeness.

-

The exposure time proportion, which is expressed as \(e = \frac{{t_{{\rm{exp}}}}}{T}\), does not exhibit a distinct minimum, i.e., there are accurate data sets for each value of e. For that reason, and since an exposure time proportion that is as large as possible is desired for high-speed measurements, it can be fixed to a reasonable value of e = 0.95, which takes the short time of 0.05T for data readout into account.

-

The rotational speed ω of the GOBO wheel should be such that the pattern is rotated by nearly half the average fringe pitch between each image acquisition. A higher rotational speed would ensure that very different areas of the GOBO wheel are illuminated successively so that the temporal intensity values are independent of each other. However, this would lead to substantial blurring of the fringes during the exposure time and, thus, to an undesired low grey value modulation.

-

The defocusing of the imaging lens should be adjusted such that the rotating pattern neither contains intensity plateaus nor has a poor modulation. If the projection is too sharp, the integration of the rotating pattern over the exposure time results in triangular or trapezoidal patterns instead of aperiodic sinusoidal patterns. If the projection is too blurry, the grey values of adjacent camera pixels do not differ substantially.

-

The ratio c of the maximum and minimum angles that are covered by one strip or slit should be between 2 and 2.5. The closer the ratio is to 1, the more periodic and, therefore, ambiguous the patterns become, such that completeness of 100% cannot be achieved. The larger the ratio, the more inhomogeneously the fringes are blurred. Either narrow fringes with very low modulation or broad fringes with unwanted intensity plateaus are obtained.

The randomness of the fringe generation can lead to different standard deviations σ3D and completeness values p for the same parameter set, which made it impossible for us to carry out any direct optimisation. Even if, e.g., we used the average or median of many results for each parameter set, optimisation algorithms became trapped in different local extrema each time, despite unchanged initial values. Therefore, within the restricted parameter ranges (see the orange rectangles in Fig. 2a, b; e = 0.95), we have generated several thousand additional random GOBO patterns and picked the one with p = 100% completeness that yielded the lowest standard deviation σ3D. The optimal result was the one that is shown in Table 2 for SNR ≈ 29 dB with a completeness of p = 100% and a standard deviation of σ3D ≈ 11.4 µm (which is equal to a relative standard deviation \(\sigma _{{\rm{3D}}}^{{\rm{rel}}} = \sigma _{{\rm{3D}}}/\sqrt {2s^2 + d^2} \approx 2.2 \times 10^{ - 5}\) with respect to the measurement volume diagonal). Figure 2c shows one of the corresponding camera images and a profile along the central line. Based on this optimal projected pattern, the full GOBO wheel can be constructed via repetition of this section so that the results remain approximately unchanged for all rotation angles of the GOBO wheel.

The randomly obtained optimum agrees very well with the results of previous theoretical and experimental investigations on aperiodic sinusoidal fringes26,27. In these investigations, we used the same sensor setup and obtained an optimum of 90 fringes within the measurement field. For GOBO-projected aperiodic sinusoidal patterns, the number of projected fringes inherently varies vertically due to the wheel layout. Therefore, the number of illuminated strips and slits, namely, n = 120, that was obtained for the parameter set in Table 2 is only realised in the upper part of the camera image. In the centre, the number of fringes is n ≈ 90 (see Fig. 2c), which corresponds very well to the values that were obtained in previous examinations.

Up to this point, we have taken into account spatial integration over the camera pixels and 8-bit quantisation in our simulation. However, cameras of real 3D sensors will exhibit two additional types of noise, which negatively affect the accuracy that can be achieved with the optimised patterns: shot noise and dark noise. We simulated the signal-dependent shot noise ne (Poisson distribution with expected value μe and standard deviation \(\sigma _e = \sqrt {\mu _e}\)) and the signal-independent dark noise nd (normal distribution with standard deviation σd) according to EMVA standard 128828 as described in the Methods section. We considered three levels of noise that correspond to signal-to-noise ratios of SNR ≈ 19 dB, 17 dB, and 15 dB. The following conclusions are drawn from the simulation results.

-

The lower the SNR, the lower the 3D point accuracy and the fewer pattern sets result in 100% point cloud completeness. High noise leads to a high standard deviation σ3D. However, noisy data can also cause the temporal grey value sequences of non-corresponding points to have a higher correlation coefficient than the actual homologous points. For this reason, for an SNR of 15 dB, 10 patterns are insufficient for achieving 100% completeness.

-

The lower the SNR, the higher the number of fringes that are necessary to obtain high accuracy, which is caused by the counteraction of two effects: For broad fringes, the integrated intensity over the finite area of a pixel approximates very well the projected intensity in the centre of the pixel. For narrow fringes, the grey values of adjacent pixels differ significantly, thereby making subpixel interpolation more reliable. The higher the noise, the more essential it is to have a large difference between adjacent grey values.

Table 2 shows the optimum GOBO parameters that we obtained for SNR ≈ 19 dB, 17 dB, and 15 dB. Although the span of the standard deviation and completeness increases with decreasing signal-to-noise ratio, it is still possible to generate patterns that yield 100% completeness. For SNR ≈ 15 dB, the 3D point standard deviation is ~4.8 times higher than for 29 dB.

Phase-shifted sine-like patterns

According to the discussed studies on aperiodic sinusoidal fringes26,27, phase-shifted sinusoidal fringes are the limiting case of aperiodic sinusoidal fringes in terms of accuracy. Therefore, it is reasonable to investigate whether this is also the case with GOBO projection because the GOBO projector can be used to project phase-shifted sine-like patterns when synchronised with the cameras29,30. In the presented simulation framework (see Methods section), this can be carried out by setting c = 1 (the ratio of the maximum and minimum fringe widths) and ω = 2ϕavg/NT (the rotational speed of the GOBO wheel). Random generation of the remaining parameters, namely, n, σblur, and e, leads to the diagram that is shown in Fig. 2d for SNR ≈ 29 dB.

The comparatively poor completeness of the reconstructed point clouds is noticeable. Due to the periodicity of the sinusoidal patterns, the temporal grey value sequences are ambiguous and applying Eq. 1 yields many false correspondences, as the number of maxima of the correlation coefficient equals the number of fringe pairs within the disparity search range of ≈ ±110 px. These ambiguities must be eliminated via phase unwrapping31,32. However, conventional methods that are based on the evaluation of the phase difference between adjacent pixels fail on objects with sharp edges or large depth. Therefore, a variety of techniques have been developed for localising corresponding fringe periods.

The most robust phase unwrapping method is the projection of a sequence of Gray code patterns33,34,35, which leads to a significant increase in the total length of the pattern sequence N. Moreover, it is not feasible to project Gray code patterns using the GOBO projection principle. The projection of phase-shifted sinusoidal fringes with different period lengths36,37,38,39,40,41 is also impracticable because this would require divergent rotational speeds of the GOBO wheel. Instead, an additional pattern must be embedded in the sinusoidal pattern, e.g., a one-dimensional binary De Bruijn sequence42 or a band-limited 1/f noise pattern43,44, which can be achieved, for example, by placing an additional (static) slide in front of the GOBO wheel29,30.

An embedded pattern reduces the signal-to-noise ratio of the phase values and, thus, the measurement accuracy. In addition, such an approach requires a spatial correlation that acts as a spatial frequency low-pass filter so that high-frequency components of the object surface are not taken into account. Therefore, in all studies of GOBO-projected phase-shifted sinusoidal patterns, we have considered only pure sinusoidal patterns. In the general case of an arbitrarily complex measurement object, these patterns lead to very low completeness. However, they yield high accuracy, as shown by the large number of yellow curves, which correspond to small standard deviations σ3D, in Fig. 2d. The optimum values of the parameters σblur, n, and ω are indicated by orange crosses. The exposure ratio e can again be chosen almost arbitrarily; it is set to 0.95.

In contrast to aperiodic sinusoidal patterns, the remaining independent parameters, namely, σblur and n, uniquely specify a phase-shifted sine-like pattern. Therefore, they can be easily optimised, e.g., by the downhill simplex method45. The results are listed in Table 2. As expected, phase-shifted sine-like patterns yield the lowest 3D point standard deviation. The achievable standard deviation is between 1.3 and 1.6 times smaller than with aperiodic sinusoidal patterns. However, if there are no special constraints on the measurement object, the periodic pattern cannot be used due to its ambiguities, which lead to poor completeness.

Figure 2e shows the optimum sine-like pattern for SNR ≈ 29 dB. The determined number of fringes is consistent with the findings of previous studies26,27, in which an optimal number of 100 fringes was obtained. In the centre of the optimised GOBO-projected phase-shifted pattern, 102 fringes are projected, which demonstrates the high degree of agreement between the investigations.

Naturally, the achievable 3D accuracy depends directly on the number of patterns that are used for the reconstruction. Figure 2f shows this dependence for both phase-shifted and aperiodic sinusoidal patterns. For example, with 10 aperiodic sinusoidal patterns, the same standard deviation is obtained as with 5–6 phase-shifted sinusoidal patterns, which is in line with the results of previous investigations of the measurement technique26,27. However, aperiodic sinusoidal patterns yield a significantly higher completeness of p = 100%, thereby making resolving ambiguities by projection of additional patterns unnecessary. Therefore, GOBO projection of aperiodic sinusoidal fringes is an excellent alternative to the established phase-shifting technique. In addition, it offers the potential for an extremely fast and bright projection compared to conventional projectors and it can easily be used in a wide spectral range25.

Experimental evaluation

It is useful to experimentally verify the simulation results. However, the diagram that is shown in Fig. 2a suggests that it is not practical to experimentally vary all five parameters that have been considered so far: c, σblur, n, ω, and e. Manufacturing many GOBO wheels to vary the number of fringes n and the fringe width ratio c would be very cumbersome and expensive. Therefore, we decided to use one of our existing sensors (see Fig. 3a)46,47 and varied the projection blur (in the form of the standard deviation σblur of a Gaussian blur) and the rotational speed ω = ϕ/T of the GOBO wheel.

a A photograph of the sensor that is used. b, c The measured and e, f simulated dependency of the 3D point standard deviation σ3D and point cloud completeness p on the degree of projector defocusing, which is approximated by a Gaussian blur with standard deviation σblur, and the rotational speed ω of the GOBO wheel. d A detailed view of the GOBO wheel of the sensor that is shown in a. Undesired opaque spots, which may negatively affect the measurement accuracy, are clearly recognisable

For each combination of σblur and ω, we recorded 1000 images of the aperiodic sinusoidal fringes that were projected onto a granite slab. We used 10 images to calculate each 3D point cloud, thereby obtaining 100 independent data sets per parameter combination. Figure 3b, c shows the resulting average 3D standard deviation σ3D and average point cloud completeness p, respectively, as functions of σblur and ω. The lowest achievable degree of defocus leads to the highest accuracy. Moreover, the standard deviation and completeness show an opposite trend within the scanned parameter range: whereas for a high measurement accuracy, the rotational speed should be comparatively low, the completeness of the calculated point clouds increases with increasing rotational speed. A suitable parameter combination is, e.g., σblur = 15 µm and ω = 0.15°/T, which results in a 3D standard deviation of 150 µm and a completeness of 100%.

To determine whether the experimental measurement data match the theoretically expected results, we simulated the 3D data generation of the NIR scanner in the same way as described in the Methods section, but with modified parameters that took the deviating sensor geometry into account (see Table 3). The first step (the random generation of the GOBO wheel) was obsolete, as the design of the wheel was known. For the dark and shot noise that we added to the rendered camera images, we assumed values that corresponded to a signal-to-noise ratio of SNR ≈ 17 dB. In the simulation, we were able to vary the two parameters, namely, σblur and ω, more finely and within a larger range of values than in the experiment. The resulting diagrams for the standard deviation and completeness are shown in Fig. 3e, f, respectively. The optimum results are achieved for σblur = 7.5 µm and ω = 0.17°/T. For this parameter combination, the 3D standard deviation is σ3D ≈ 130 µm and the completeness is p = 100%.

Even if the simulated values very well agree with the experimental results, it is likely that the comparatively high assumed camera noise (SNR ≈ 17 dB) is lower in practice and the 3D accuracy is negatively affected by another effect. There are many indications that the GOBO wheel itself plays a major role since its production quality is not optimal. According to Fig. 3d, especially in the magnified view, the fringes on the GOBO wheel are strongly frayed and there are many dark spots in areas that should be transparent. The GOBO wheel that is shown here and was used in the NIR scanner was manufactured by applying an aluminium layer onto a 1.1 mm thick substrate of borosilicate glass, which was partially removed by a laser beam. Due to the recognisable artefacts, we will evaluate alternative fabrication methods. In the near future, we are planning to produce GOBO wheels via electron-beam lithography.

Discussion

We have studied the performance of the novel principle of 3D shape measurement using GOBO-projected aperiodic sinusoidal patterns25. For this purpose, we have varied five key parameters that influence the quality of the measurement result (e.g., the number of fringes and the rotational speed of the GOBO wheel) in an extensive rendering simulation of the measurement of a plane object. Two main conclusions are drawn from the results of our investigations. First, when setting up a GOBO projection-based 3D sensor, the parameters must be tuned carefully, as small variations of parameters can lead to substantially different 3D results. Second, it is possible to identify parameters of a GOBO-projected pattern that ensure a small standard deviation and high completeness of the 3D point cloud. In this way, e.g., 10 GOBO-projected aperiodic sinusoidal patterns can lead to the same measurement accuracy as 5 to 6 GOBO-projected phase-shifted sine-like patterns, but without requiring phase unwrapping. This result confirms the findings of previous investigations of the principle of projecting aperiodic sinusoidal patterns26,27.

Since corresponding points are detected solely via temporal correlation, the results that are obtained for the plane measurement object can be generalised to arbitrary objects (see Supplementary Video S1), demonstrating the excellent suitability of GOBO projection of aperiodic sinusoidal patterns for high-speed 3D shape measurement, which in addition to the generation of point clouds with high accuracy and completeness, is characterised by the potential for very fast pattern variation and high radiant flux. The results of the exemplary measurement that is shown in Fig. 4 demonstrate this, as we were able realise a 3D rate of 5.5 kHz, which corresponds to a temporal resolution of just over 180 µs (see also Supplementary Video S2). Moreover, due to the pattern generation via a metal mask, a GOBO projector is suitable for application in a wide spectral range that is beyond the visible light range.

High-speed 3D measurement of the impact of a 40-bar nitrogen jet on a 400 × 400 mm2 pillow that is attached to a polystyrene plate. a Snapshots of the recorded process at five points in time. b Camera images of the GOBO-projected aperiodic sinusoidal fringes, which are recorded with a resolution of 512 × 408 px at a frame rate of 55.2 kHz. c Reconstructed point clouds at a 3D rate of 5.5 kHz (see also Supplementary Video S2)

Because of its benefits, the principle of GOBO projection should be further investigated. Future research should focus on studying the dependence of the 3D measurement quality on the number of projected patterns and on the motion of the measurement object. The projection of a smaller number of patterns generally results in lower measurement accuracy, point cloud completeness, and/or density. However, it might be reasonable to use fewer patterns for 3D reconstruction of fast moving objects to minimise the acquisition time. Therefore, it is of particular interest to examine up to which object speeds the GOBO projection of a series of aperiodic sinusoidal patterns is superior to common single-shot methods.

Materials and methods

Simulation framework

To identify an optimal set of GOBO wheel parameters within a simulation framework, not all of the parameters that are shown in Table 1 should be freely varied because the solution space would be inconveniently large and some of the parameters are not independent. For instance, equally scaling the GOBO wheel and the size of the illuminated area by a specified factor will lead to the same results, as the cameras observe the same patterns. Therefore, we simulate a typical sensor setup and set some of the parameters, e.g., a distance of 200 mm between the cameras, a working distance of 1 m, and a measurement volume of 300 × 300 × 300 mm3 (see the last column of Table 1). Hence, the optimisation problem is reduced to a five-dimensional problem with the following variables:

-

c = 1…10 (the ratio of the maximum and minimum strip or slit widths),

-

σblur = 0…50 µm (the degree of defocusing of the GOBO wheel),

-

n = 20…400 (the number of illuminated strips + the number of illuminated slits),

-

ω = 0.001°/T…2.5°/T (the rotational speed of the GOBO wheel), and

-

e = 0.5…1 (the ratio of the camera exposure time texp and period T).

Figure 5 shows a block diagram of the simulation framework. The procedure can be divided into the five steps explained in next sections.

According to the parameters c and n, a part of a GOBO wheel is generated (1). Based on the parameters σblur, ω, and e, subpatterns of the rotating GOBO wheel are determined (2). The subpatterns that are related to a pattern are projected onto a plane and the corresponding camera images are rendered (3). After computing the disparity map (4) and reconstructing the 3D point cloud (5), the standard deviation σ3D and completeness p are estimated

Generation of the GOBO wheel

First, n random values Xi are generated, each between 1 and c. Then, the values

represent the angles of change between transparent and opaque fringes in the illuminated part of the GOBO wheel (0 < φj ≤ δ). The resulting GOBO mask contains n strips and slits, which each cover an angle between φmin and φmax = cφmin ≥ φmin.

Generation of the projected patterns

The GOBO wheel is continuously rotating at a speed ω = ϕ/T, i.e., between successive image acquisitions, the GOBO wheel has been rotated by an angle of ϕ. However, to take the movement during the exposure time and the ratio of the exposure time texp and period T into account, the wheel is rotated by subangles ϕi. We opted for 50 subpatterns: ϕi = iϕe/50. For each of the 50 subpositions, a square of size a × a = 10 × 10 mm2 with a distance of r = 25 mm from the GOBO wheel centre is cut out. The defocusing of the imaging lens is approximated by a Gaussian blur with standard deviation σblur.

Rendering of the camera images

The generated GOBO subpatterns are projected onto a plane that is parallel to the GOBO wheel at a working distance of 1 m. The corresponding camera images with a resolution of 1024 × 1024 px are rendered using a ray-tracer on the basis of a physically based rendering system, namely, “PBRT”48. Each set of 50 subimages is averaged to form the camera image, thus keeping motion blur in mind. Altogether, N = 10 images per camera are generated, which are rectified according to the camera-projector-camera arrangement21,49. In the rectified images with coordinates \(\left( x{\prime}_{\hskip-3pt1} ,y\prime_{\hskip-3pt1} \right)\) and \(\left( {x\prime_{\hskip-3pt2} ,y\prime_{\hskip-3pt2} } \right)\), corresponding points lie on the same horizontal line, i.e., \(y\prime_{\hskip-3pt1} = y\prime_{\hskip-3pt2}\).

Computation of the disparity map

The rectified images are used to calculate the coefficient ρ of the normalised cross-correlation according to Eq. 1 between each pixel in camera 1 and pixels on the same horizontal line in camera 2. The search area is limited by the measurement volume, as the distance of w ± d/2 = (1 ± 0.15) m from the sensor corresponds to a disparity search range of ≈ ± 110 px. The global maximum of the correlation coefficient within this disparity search range is considered to occur at the corresponding point. Subpixel accuracy is achieved via linear grey value interpolation between adjacent pixels in each rectified image of camera 2, with the aim of maximising ρ.

Reconstruction of the 3D point cloud

Based on the disparity map, for each pair of corresponding points, namely, \(\left( {x\prime_{\hskip-3pt1} ,y\prime } \right)\) and \(\left( {x\prime_{\hskip-3pt2} ,y\prime } \right) = \left( {x\prime_{\hskip-3pt1} - {{disp}},y\prime } \right)\), a point with homogeneous coordinates

can be calculated, where κ is the camera constant of the rectified system (in pixel units), \(c\prime_{\hskip-3ptx1}\) and \(c\prime_{\hskip-3pty1}\) are the coordinates of the (rectified) principal point in camera 1, and \(c\prime_{\hskip-3ptx2}\) is the x-coordinate of the (rectified) principal point in camera 2. The resulting point cloud is compared with the known plane so that outliers can be identified. The point cloud completeness p is given by the ratio of the number of correct points mcorrect and the maximum possible number of points mmax:

After removing the mfalse outliers, the standard deviation σ3D of the remaining 3D points from the plane is calculated.

To take the various types of noise of real cameras into account, step 3 (i.e., Rendering of the camera images) can be extended by following EMVA standard 128828. Let the output of the ray-tracer be the number of photons μp that impinge on each camera pixel. Depending on the total quantum efficiency η(λ), they generate a number of electrons, which is expressed as μe = η(λ)μp. Without loss of generality, we set η(λ) = 1. Then, the number of electrons μe = μp fluctuates with a signal-dependent shot noise ne (Poisson distribution with standard deviation \(\sigma _e = \sqrt {\mu _e}\)) and a signal-independent dark noise nd (normal distribution with standard deviation σd). The noisy number of electrons

is converted to a grey value \(g^{{\rm{noisy}}} = K\mu _e^{{\rm{noisy}}}\) according to the overall system gain K. After clipping the grey value to the dynamic range of the camera, namely, 0…2b−1, it is rounded to the nearest integer to take analogue-to-digital conversion into account. The signal-to-noise ratio (SNR) can be expressed as

with the variance \(\sigma _q^2 = 1/12\,{\rm{DN}}\) of the uniformly distributed quantisation noise. In addition to the absence of shot and dark noise, we have decided to simulate three reasonable levels of noise, which are described as follows:

-

K = 1/25 DN/e−, σd = 0.5/K (low noise),

-

K = 1/10 DN/e−, σd = 1/K (medium noise), and

-

K = 1/5 DN/e−, σd = 2/K (high noise).

For a grey value of g = 255, these levels correspond to signal-to-noise ratios of SNR ≈ 19 dB, 17 dB, and 15 dB. When only considering quantisation noise (i.e., K→∞ and σd→0), the signal-to-noise ratio is SNR ≈ 29 dB.

In this way, we generated several thousand random parameter combinations and evaluated the resulting point clouds with respect to the standard deviation σ3D and completeness p. Then, we compared the results with those that were obtained from GOBO-projected phase-shifted sine-like patterns, as phase-shifting fringe projection is considered the gold standard for structured light-based 3D measurement. The simulation framework can easily be used to carry this out by setting c = 1 (the ratio of the maximum and minimum fringe widths) and ω = 2φavg/NT (the rotational speed of the GOBO wheel).

Experimental setup

To experimentally verify the simulation results, we used one of our existing GOBO projection-based sensors (see Fig. 3a)46,47. Since this 3D scanner is primarily intended for the irritation-free measurement of human faces, the aperiodic sinusoidal patterns are projected and detected in the near infrared (NIR) region at a wavelength of 850 nm. With the help of an additional RGB camera, colour information can be acquired simultaneously with the 3D measurement.

For our measurements, we used two Basler “acA2040-180kmNIR” measurement cameras at a resolution of 1024 × 1024 px and a frame rate of f = T−1 = 50 Hz. By using an exposure time of texp = 19 ms, we set the exposure time ratio to e = texp/T = 0.95. The cameras’ projection centres were of distance l = 0.23 m from each other and they observed a measurement field of size s × s = 0.5 × 0.5 m2 at a working distance of w = 1.5 m. The GOBO projector contained a GOBO wheel with a diameter of 66 mm. The GOBO wheel comprised ntot = 946 strips and slits of various widths with a known distribution, ~94 of which were illuminated at any point in time. All relevant parameters are summarised in Table 3. The grey highlighted cells indicate the two parameters that we have varied in the experiment: the projection blur (in the form of the standard deviation σblur of a Gaussian blur) and the rotational speed ω = ϕ/T of the GOBO wheel.

To determine the standard deviation σblur that corresponds to a specified level of projector defocusing, we placed a matte white sprayed planar granite slab with a calibrated peak-to-valley height of 4.55 µm parallel to the sensor at the working distance and illuminated it with the pattern of the stationary GOBO wheel. The resulting camera image was compared with differently blurred camera images of (hypothetical) binary fringes. The value σblur for which the sum of the squared deviations was minimal was assigned to the respective defocusing setting. Due to the inherent slight lens blur, a value of σblur = 0 could not be realised. In addition, excessively high values could not be achieved because defocusing settings that exceed a specified level produce additional effects that cannot be approximated by a Gaussian blur. Overall, we have realised values between σblur ≈ 15 µm and 60 µm. The rotational speed of the GOBO wheel was varied such that the wheel was rotated between 0.05° and 0.50° between two consecutive acquisition trigger signals.

References

Schaffer, M., Große, M., Harendt, B. & Kowarschik, R. Coherent two-beam interference fringe projection for highspeed three-dimensional shape measurements. Appl. Opt. 52, 2306–2311 (2013).

Große, M., Schaffer, M., Harendt, B. & Kowarschik, R. Fast data acquisition for three-dimensional shape measurement using fixed-pattern projection and temporal coding. Opt. Eng. 50, 100503 (2011).

Lutzke, P., Schaffer, M., Kühmstedt, P., Kowarschik, R. & Notni, G. Experimental comparison of phase-shifting fringe projection and statistical pattern projection for active triangulation systems. In Proceedings of SPIE 8788, Optical Measurement Systems for Industrial Inspection VIII. p. 878813 (SPIE, Munich, Germany, 2013).

Pagès, J., Salvi, J., García, R. & Matabosch, C. Overview of coded light projection techniques for automatic 3D profiling. In Proceedings of 2003 IEEE International Conference on Robotics and Automation pp. 133–138 (IEEE, Taipei, Taiwan, China, 2003).

Salvi, J., Pagès, J. & Batlle, J. Pattern codification strategies in structured light systems. Pattern Recognit. 37, 827–849 (2004).

Salvi, J., Fernandez, S., Pribanic, T. & Llado, X. A state of the art in structured light patterns for surface profilometry. Pattern Recognit. 43, 2666–2680 (2010).

Geng, J. Structured-light 3D surface imaging: a tutorial. Adv. Opt. Photon 3, 128–160 (2011).

Takeda, M. & Mutoh, K. Fourier transform profilometry for the automatic measurement of 3-D object shapes. Appl. Opt. 22, 3977–3982 (1983).

Takeda, M., Gu, Q., Kinoshita, M., Takai, H. & Takahashi, Y. Frequency-multiplex Fourier-transform profilometry: a single-shot three-dimensional shape measurement of objects with large height discontinuities and/or surface isolations. Appl. Opt. 36, 5347–5354 (1997).

Willomitzer, F. & Häusler, G. Single-shot 3D motion picture camera with a dense point cloud. Opt. Express 25, 23451–23464 (2017).

Kasuya, N., Sagawa, R., Kawasaki, H. & Furukawa, R. Robust and accurate one-shot 3D reconstruction by 2C1P system with wave grid pattern. In Proceedings of 2013 International Conference on 3D Vision pp. 247–254 (IEEE, Washington, 2013).

Huang, P. S., Zhang, C. P. & Chiang, F. P. High-speed 3-D shape measurement based on digital fringe projection. Opt. Eng. 42, 163–168 (2003).

Watanabe, Y., et al. High-speed 8-bit image projector at 1,000 fps with 3 ms delay. In Proceedings of 2015 International Display Workshops pp. 1064–1065 (IDW, Shiga, Japan, 2015).

Texas Instruments. DLP7000 DLP® 0.7 XGA 2x LVDS type A DMD (2017). www.ti.com/lit/gpn/dlp7000.

Zhang, S. Recent progresses on real-time 3D shape measurement using digital fringe projection techniques. Opt. Lasers Eng. 48, 149–158 (2010).

Gorthi, S. S. & Rastogi, P. Fringe projection techniques: whither we are? Opt. Lasers Eng. 48, 133–140 (2010).

Batlle, J., Mouaddib, E. & Salvi, J. Recent progress in coded structured light as a technique to solve the correspondence problem: a survey. Pattern Recognit. 31, 963–982 (1998).

Albrecht, P. & Michaelis, B. Stereo photogrammetry with improved spatial resolution. In Proceedings of the 14th International Conference on Pattern Recognition pp 845–849 (IEEE, Brisbane, Queensland, 1998).

Wiegmann, A., Wagner, H. & Kowarschik, R. Human face measurement by projecting bandlimited random patterns. Opt. Express 14, 7692–7698 (2006).

Hartley R., Zisserman A. Multiple View Geometry in Computer Vision. 2nd edn (Cambridge University Press, New York, 2004).

Luhmann T., Robson S., Kyle S., Boehm J. Close-Range Photogrammetry and 3D Imaging. 2nd edn (De Gruyter, Berlin, 2014).

Heist, S., Mann, A., Kühmstedt, P., Schreiber, P. & Notni, G. Array projection of aperiodic sinusoidal fringes for high-speed three-dimensional shape measurement. Opt. Eng. 53, 112208 (2014).

Schaffer, M., Grosse, M. & Kowarschik, R. High-speed pattern projection for three-dimensional shape measurement using laser speckles. Appl. Opt. 49, 3622–3629 (2010).

Schaffer, M., Grosse, M., Harendt, B. & Kowarschik, R. High-speed three-dimensional shape measurements of objects with laser speckles and acousto-optical deflection. Opt. Lett. 36, 3097–3099 (2011).

Heist, S. et al. High-speed three-dimensional shape measurement using GOBO projection. Opt. Lasers Eng. 87, 90–96 (2016).

Heist, S., Kühmstedt, P., Tünnermann, A. & Notni, G. Theoretical considerations on aperiodic sinusoidal fringes in comparison to phase-shifted sinusoidal fringes for high-speed three-dimensional shape measurement. Appl. Opt. 54, 10541–10551 (2015).

Heist, S., Kühmstedt, P., Tünnermann, A. & Notni, G. Experimental comparison of aperiodic sinusoidal fringes and phase-shifted sinusoidal fringes for high-speed three-dimensional shape measurement. Opt. Eng. 55, 024105 (2016).

EMVA 1288 Standard for Characterization of Image Sensors and Cameras, R3.1. European Machine Vision Association, 2016.

Hyun, J. S., Chiu, G. T. C. & Zhang, S. High-speed and high-accuracy 3D surface measurement using a mechanical projector. Opt. Express 26, 1474–1487 (2018).

Hyun, J. S. & Zhang, S. High-speed 3D surface measurement with mechanical projector. In Proceedings of SPIE 10220, Dimensional Optical Metrology and Inspection for Practical Applications VI p. 1022004 (SPIE, Anaheim, CA, 2017).

Judge, T. R. & Bryanston-Cross, P. J. A review of phase unwrapping techniques in fringe analysis. Opt. Lasers Eng. 21, 199–239 (1994).

Ghiglia, D. C. & Pritt, M. D. Two-Dimensional Phase Unwrapping: Theory, Algorithms, and Software (Wiley, New York, 1998).

Zhang, Q. C., Su, X. Y., Xiang, L. Q. & Sun, X. Z. 3-D shape measurement based on complementary Gray-code light. Opt. Lasers Eng. 50, 574–579 (2012).

Sansoni, G., Carocci, M. & Rodella, R. Three-dimensional vision based on a combination of gray-code and phase-shift light projection: analysis and compensation of the systematic errors. Appl. Opt. 38, 6565–6573 (1999).

Bräuer-Burchardt, C. et al. On the accuracy of point correspondence methods in three-dimensional measurement systems using fringe projection. Opt. Eng. 52, 063601 (2013).

Song, L. M., Dong, X. X., Xi, J. T., Yu, Y. G. & Yang, C. K. A new phase unwrapping algorithm based on three wavelength phase shift profilometry method. Opt. Laser Technol. 45, 319–329 (2013).

Song, L. M. et al. Phase unwrapping method based on multiple fringe patterns without use of equivalent wavelengths. Opt. Commun. 355, 213–224 (2015).

Towers, C. E., Towers, D. P. & Jones, J. D. C. Absolute fringe order calculation using optimised multi-frequency selection in full-field profilometry. Opt. Lasers Eng. 43, 788–800 (2005).

Saldner, H. O. & Huntley, J. M. Temporal phase unwrapping: application to surface profiling of discontinuous objects. Appl. Opt. 36, 2770–2775 (1997).

Zhao, H., Chen, W. Y. & Tan, Y. S. Phase-unwrapping algorithm for the measurement of three-dimensional object shapes. Appl. Opt. 33, 4497–4500 (1994).

Li, J. L., Hassebrook, L. G. & Guan, C. Optimized two-frequency phase-measuring-profilometry light-sensor temporal-noise sensitivity. J. Opt. Soc. Am. A 20, 106–115 (2003).

Wissmann, P., Schmitt, R. & Forster, F. Fast and accurate 3D scanning using coded phase shifting and high speed pattern projection. In Proceedings of 2011 International Conference on 3D Imaging, Modeling, Processing, Visualization and Transmission pp 108–115(IEEE, Hangzhou, 2011).

Lohry, W., Chen, V. & Zhang, S. Absolute three-dimensional shape measurement using coded fringe patterns without phase unwrapping or projector calibration. Opt. Express 22, 1287–1301 (2014).

Lohry, W. & Zhang, S. High-speed absolute three-dimensional shape measurement using three binary dithered patterns. Opt. Express 22, 26752–26762 (2014).

Nelder, J. A. & Mead, R. A simplex method for function minimization. Comput. J. 7, 308–313 (1965).

Bräuer-Burchardt, C., et al. Accurate 3D face and body scanning using an irritation-free pattern projection system. In Proceedings of the 5th International Symposium on Sensor Science p. 765 (MDPI, Barcelona, 2017).

Brahm, A., et al. Fast 3D NIR systems for facial measurement and lip-reading. In Proceedings of SPIE 10220, Dimensional Optical Metrology and Inspection for Practical Applications VI p. 102200P (SPIE, Anaheim, CA, 2017).

Pharr, M. & Humphreys, G. Physically Based Rendering: from Theory to Implementation (Elsevier, Amsterdam, 2010).

Hartley, R. I. Theory and practice of projective rectification. Int J. Comput. Vis. 35, 115–127 (1999).

Acknowledgements

This project was supported by the German Federal Ministry of Education and Research (BMBF) under project number 03ZZ0436.

Author information

Authors and Affiliations

Contributions

S.H. conceived and implemented the simulation framework. P.D. programmed the software for camera calibration and 3D reconstruction. S.H. and M.L. conducted the experiments. P.K. and G.N. participated in the analysis of the data and detailed discussions. A.T. supervised the work. S.H. wrote the manuscript based on the input from all authors.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Article accepted preview

Accepted article preview online: 14 September 2018

Electronic supplementary material

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Heist, S., Dietrich, P., Landmann, M. et al. GOBO projection for 3D measurements at highest frame rates: a performance analysis. Light Sci Appl 7, 71 (2018). https://doi.org/10.1038/s41377-018-0072-3

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41377-018-0072-3

This article is cited by

-

A primary sensory cortical interareal feedforward inhibitory circuit for tacto-visual integration

Nature Communications (2024)

-

Speckle-based high-resolution multimodal soft sensing

Scientific Reports (2022)

-

Single-shot detection of 8 unique monochrome fringe patterns representing 4 distinct directions via multispectral fringe projection profilometry

Scientific Reports (2021)