Abstract

Conventional temperature measurements rely on material responses to heat, which can be detected visually. When Galileo developed an air-expansion-based device to detect temperature changes, Santorio, a contemporary physician, added a scale to create the first thermometer. With this instrument, patients' temperatures could be measured, recorded, and related to changing health conditions. Today, advances in materials science and bioengineering provide new ways to report temperature at the molecular level in real time. In this review, the scientific foundations and history of thermometry underpin a discussion of the discoveries emerging from the field of molecular thermometry. Intracellular nanogels and heat-sensing biomolecules have been shown to accurately report temperature changes at the nanoscale. Various systems will soon make it possible to measure temperature changes accurately at the tissue, cellular, and even subcellular levels, allowing very small changes in local temperature to be detected and monitored. In the clinic, this will lead to enhanced detection of tumors and localized infections and to accurate, precise monitoring of hyperthermia-based therapies. Some nanomaterial systems have even demonstrated a theranostic capacity for heat-sensitive, local delivery of chemotherapeutics. Just as early thermometry rapidly moved into the clinic, so too will these molecular thermometers.


Main

Temperature is a master environmental variable that affects virtually all natural and engineered systems, from the molecular through the “systems” level. Conventional temperature measurements rely on repeatable physical manifestations of molecular effects, which report changes in material heat content on a macroscale. The expansion of mercury, the ductility and conductivity changes of metals, and shifts in infrared emission are calibrated to associate molecular-level changes with easily interpretable scales, which we use (and take for granted) in everyday life. As more reliable and economically feasible methods for directly examining material changes at near-molecular dimensions in real time have emerged, molecular thermometry has become an obvious outcome. In this review, we define molecular thermometry as a direct measurement of molecules or cohorts of molecules that respond distinctly to heat transfer on a nanoscale, either alone or through the behavior of like-scale reporters that can be associated with the physical/mathematical benchmarks we already use to describe the heat content of a given substance. Although these concepts are the traditional realm of thermodynamics, the biologic context offers a fundamental path toward such local temperature measurements at the cellular and, likely, even the subcellular level.

In the clinic, molecular thermometry could be applied to image and possibly treat tumors and localized infections. Tumors and infections release heat locally, producing significant temperature gradients that have long been known (1–3), and molecular thermometry could aid their detection in a manner similar to conventional contrast agents. With increasing concern about emerging infections and multidrug-resistant microorganisms, especially those of nosocomial origin, the ability to image or opsonize localized infections would be of obvious benefit (2). Further, as the field of theranostics evolves, nanoscale biosensors that sense differential heat content and respond with local, temperature-sensitive delivery of pharmaceuticals, including chemotherapeutics and antimicrobials, may become an indispensable facet of its application (4).

Because the medical applications of bioelectromagnetics and the directed interaction of electromagnetic fields (EMF) with biologic systems are becoming mainstream, the field is rapidly yielding clinical devices and applications based on sound scientific understanding of molecular level effects. Many biologic applications of EMF purposely deliver energy to rapidly change the temperature of local targets in tissues (lasers, cauterizations); however, there are other therapeutic applications facilitated by directed EMFs with controlled heat transfer. Nanopulse EMFs have been calculated to increase temperature at the subcellular level, heating the membrane without raising the intracellular temperature (De Vita A et al., Anomalous heating in a living cell with dispersive membrane capacitance under pulsed electromagnetic fields. Bioelectromagnetics Society Meetings, June 14–19, 2009, Davos, Switzerland, Abstract P-205). Other EMF-based therapies that have shown promise rely on an increase in cellular or tissue temperature; induced hyperthermia has been shown to enhance the effects of gamma irradiation (5,6), and a recent study has shown hyperthermia can lead to tumor clearance, including metastases that were not directly treated, likely through an immune-mediated mechanism (Ito A., Hyperthermia using functional magnetite nanoparticles, Bioelectromagnetics Society Meetings, June 14–19, 2009, Davos, Switzerland, Abstract Plenary 3-2). The reporting and control of local tissue temperatures will be critical for the success of these and other emerging therapeutic and surgical methods, and as such, in situ systems engineered to accurately monitor temperature at a tissue and cellular level will become essential.

History of Thermometry

The earliest assessment of temperature was likely based on sensation or observation: hot versus cold weather; the vaporization of water as it boils; or, from a physician's viewpoint, how the bodies of ill patients are often notably warmer than those of their healthy counterparts. These early temperature associations lacked any basic understanding of material heat content, heat transfer processes, or empiric methods for their measurement. A physical understanding of temperature did not begin to arise until around 100 BC, when Hero of Alexandria observed that air volumes vary significantly with temperature; however, this observation had to wait over 1700 years to be used in a measurement device (7). In the late 1500s, Galileo and a group of Venetian scientists and physicians developed the thermoscope and, ultimately, the first thermometer (8).

Galileo had read of Hero's experiments and repeated the same observations using a tube containing air trapped above a column of water: when the tube was warmed by his hands, the water column dropped as the air expanded, and as the air cooled again, the water rose (7). Galileo had invented the precursor of the modern thermometer, many versions of which still rely on the expansion and contraction of a liquid within a tube. Although this device responded consistently and repeatably, it lacked a critical standard: a scale that would permit air volume changes to be related to heat content through simple mathematical means and that would, in turn, enable a standardized record that could be compared over time.

The addition of a scale to the thermoscope, creating a calibrated thermometer, is commonly attributed to Santorio Santorio, a Venetian physician and contemporary of Galileo. Santorio devoted great effort to measurements of the human body, both his own and his patients'. He used a pendulum (another of Galileo's inventions) to measure pulse accurately, and he regularly weighed himself, his food and beverage intake, and his wastes, determining that much of his intake was systematically lost through “insensible perspiration” (7,9). It is of great significance to the birth of thermodynamics that Santorio was among the first to recognize that heat transfer occurs continuously and proceeds through both “sensible” and “insensible” mechanisms: the heat content of a material changes in a sensible relationship to its temperature while it remains in a single phase (e.g. liquid), and in an “insensible” relationship when it changes phase (e.g. evaporation). The latter entity, “insensible heat,” is the early thermodynamic concept of latent heat, the energy required for a substance to change phase, either from solid to liquid or from liquid to gas (10). Phase changes occur at constant temperature while significant amounts of thermal energy are concomitantly transferred to the environment (as in perspiration and the welcome effect of evaporative cooling) (9). Latent heat transfer was termed “nonsensible” or “insensible” because no temperature change could be observed during this energy-intensive process (9).
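In modern notation, the sensible/insensible distinction corresponds to sensible heat, Q = m·c·ΔT, which changes temperature, and latent heat, Q = m·L, which is absorbed at constant temperature during a phase change. The Python sketch below compares the two for evaporative cooling. It is illustrative only: the property values are approximate textbook figures, and the 70 kg body mass and the body specific heat of about 3.5 kJ/(kg·K) are assumptions, not data from the studies cited here.

```python
"""Sensible versus latent heat for evaporative cooling (illustrative values)."""

C_WATER = 4186.0      # J/(kg*K): specific heat of liquid water (approximate)
C_BODY = 3500.0       # J/(kg*K): assumed average specific heat of the human body
L_VAP_SKIN = 2.42e6   # J/kg: assumed latent heat of vaporization near skin temperature


def sensible_heat(mass_kg: float, delta_t_k: float, c: float = C_WATER) -> float:
    """Heat (J) that changes the temperature of a single-phase mass: Q = m*c*dT."""
    return mass_kg * c * delta_t_k


def latent_heat(mass_kg: float, latent: float = L_VAP_SKIN) -> float:
    """Heat (J) absorbed during a phase change at constant temperature: Q = m*L."""
    return mass_kg * latent


def body_cooling_k(sweat_kg: float, body_mass_kg: float = 70.0) -> float:
    """Temperature drop (K) if the latent heat of the evaporated sweat were drawn
    entirely from the body's sensible heat (no other heat exchange assumed)."""
    return latent_heat(sweat_kg) / (body_mass_kg * C_BODY)


if __name__ == "__main__":
    print(f"Warming 1 kg of water by 1 K: {sensible_heat(1.0, 1.0):.0f} J")
    print(f"Evaporating 0.1 kg of sweat: {latent_heat(0.1) / 1e3:.0f} kJ")
    print(f"Equivalent cooling of a 70 kg body: {body_cooling_k(0.1):.2f} K")
```

Under these assumptions, evaporating 100 g of sweat absorbs roughly 242 kJ, enough to cool a 70 kg body by about 1 K, even though the evaporation itself proceeds without a change in temperature.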

Santorio also identified that heat transfer processes could be quantified and scaled in a range that was germane to biologic functions. Thus, it is no surprise that this fastidious “measurer” would adapt the thermoscope to systematically record human temperatures on a consistent scale. This adaptation was critical to the development of modern thermometry. Indeed, Santorio proved that by capturing the heat liberated from a living being in an adjacent but confined material, the heat transferred could manifest in a macroscale reporting system (the density change of water and gases), such that he could record and compare cohorts of patients' temperatures through changing health conditions (7,8).

As such observations came to support the empirical science of thermodynamics, the understanding of temperature moved from the simple concept of sensible heat to more advanced perspectives on specific heat, latent heat, and how thermal energy moves transiently through biologic systems and the environment at large. It was later realized that thermoscopes and thermometers leverage intrinsic material properties that isolate heat content as a variable. Eventually, the sealed bulb-and-stem alcohol thermometer invented by Ferdinando II de' Medici measured temperature independently of barometric pressure, unlike the open thermoscope and thermometer of Galileo and Santorio (11). A similar device using mercury, designed by Daniel Gabriel Fahrenheit, gave us the common glass-tube thermometer calibrated on the Fahrenheit scale (°F) (11). Improved understanding of sensible heat and material heat content led to the continued development of temperature scales that were increasingly precise and molecularly relevant.

Thermodynamics and Thermometry

Classical thermodynamics hosts the convention for the temperature scales used in contemporary medicine, engineering, and bioengineering. The salient theme through which thermometry has evolved continues to be defined by thermodynamic thresholds associated with material phase transitions. These transitions are visible on the macroscale, where correlations between temperature and the behavior of ordinary substances, such as the boiling of water or the expansion of mercury, are commonplace. They extend to widespread engineering applications in which repeatable changes in material properties arise from density changes. The bimetallic strip used in many thermal control systems, for example, bends because its two bonded metals expand by different amounts as heat content changes, whereas the ubiquitous thermocouple is calibrated to report temperature from the thermoelectric (Seebeck) voltage that develops at the junction of two dissimilar metals. We now have analytical tools that confidently report physical conformation on subcellular scales, where we can literally watch engineered and biologic polymers undergo structural transitions that are as predictable as the expansion of mercury and the melting of ice. Phase transitions are the common denominator of thermometry, and the prospects for (bio)polymer-based temperature reporting are rich in power and in situ functional potential.

Throughout history, physicists, chemists, and more recently bioengineers have pursued thermometry on an absolute temperature scale that is independent of the properties of individual substances and thus reports true heat content (10). Such attempts are manifest in the modern Rankine (R) scale of the English system and the Kelvin (K) scale of the SI system (in 1967 the kelvin officially abandoned the degree designation, e.g. °K, to reflect its physical basis in absolute zero, and the Rankine unit is often written without it by analogy) (10). A scale that is thermodynamically identical to these absolute scales is based on the behavior of ideal gases, for which temperature is defined as zero when the pressure the gas exerts is zero (10). On this basis, the range and accuracy of direct temperature measurements have improved vastly over the last generation, in large part through nanomaterial science that can produce, contain, and control unique states of matter [e.g. plasma, Bose-Einstein condensates (12–14)], as well as through a multitude of systems that deliver, detect, and record different forms of electromagnetic radiation [e.g. lasers and infrared lenses (15)]. The International Temperature Scale of 1990 (ITS-90) reflects how recent advances in nanoscale science translate into improved applications (16). ITS-90 is anchored to a set of defining fixed points, among them the triple point of water (273.16 K, or 0.01°C), the thermodynamic condition (absolute pressure and temperature) at which liquid water, water vapor, and solid ice coexist in equilibrium.
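The relationships among the absolute and relative scales, and the ideal-gas definition of zero temperature, reduce to a few one-line conversions. This is a minimal Python sketch; the function names are ours, and the gas thermometer is idealized (a real gas thermometer approaches this behavior only in the limit of low gas pressure).

```python
"""Temperature-scale conversions and an idealized constant-volume gas thermometer."""

T_TRIPLE_POINT_WATER_K = 273.16  # K: triple point of water


def kelvin_to_celsius(t_k: float) -> float:
    """Celsius is the Kelvin scale offset by 273.15."""
    return t_k - 273.15


def kelvin_to_rankine(t_k: float) -> float:
    """Rankine uses Fahrenheit-sized degrees counted from absolute zero."""
    return t_k * 9.0 / 5.0


def rankine_to_fahrenheit(t_r: float) -> float:
    """Fahrenheit is the Rankine scale offset by 459.67."""
    return t_r - 459.67


def ideal_gas_temperature_k(pressure: float, pressure_at_triple_point: float) -> float:
    """At constant volume an ideal gas has P proportional to T, so T = 0 exactly
    when P = 0; the single reference point is the triple point of water."""
    if pressure_at_triple_point <= 0:
        raise ValueError("reference pressure must be positive")
    return T_TRIPLE_POINT_WATER_K * pressure / pressure_at_triple_point


if __name__ == "__main__":
    print(f"{kelvin_to_celsius(300.0):.2f} C")
    print(f"{rankine_to_fahrenheit(kelvin_to_rankine(0.0)):.2f} F at absolute zero")
```

For example, kelvin_to_celsius(85.0) and kelvin_to_celsius(294.0) give −188.15 and 20.85 (up to floating-point rounding), the Celsius equivalents of the GFP temperature range discussed later in this review.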

Although absolute scales have improved thermometry, they are typically used in science and engineering outside the ranges germane to biologic systems, except when supporting the development of localized destructive freezing practices for surgery and of long-term cryogenic storage (17,18). The resolution and reporting of temperature are largely idiosyncratic to application, and often intrusive; nowhere is this more obvious than in medical settings. With the notable exception of infrared thermography, which is local and superficial (2), temperature measurements within biologic systems are notoriously difficult from a bioengineering perspective because of the range of their scale(s) and the fact that they are not in thermodynamic equilibrium.

Thermodynamics teaches us that fixed-point calibration brings tremendous leverage to thermometry on an absolute basis, regardless of the reporting mechanism and how it is used in a thermometer: if the ice point and the steam point of water are assigned values of 0 and 100, respectively, the resulting temperature scale corresponds to the Celsius scale. Any scale can thus be “embedded” in another, provided the fixed points defining the desired range are also based on a thermodynamic phase transition. Thermometry can therefore be extended to any material that undergoes a structural transition, provided the time frame of the transition is germane to the system of concern, including, but not limited to, tissues, cells, and the biopolymers they contain.
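The fixed-point argument amounts to linear interpolation between the readings a reporter gives at two phase transitions. A minimal Python sketch, assuming the reporter responds linearly between the fixed points; the liquid-column readings in the example are hypothetical.

```python
"""Two-point (fixed-point) calibration: embed a raw reporter reading in a scale."""


def two_point_scale(reading: float, reading_low: float, reading_high: float,
                    value_low: float = 0.0, value_high: float = 100.0) -> float:
    """Map a raw reading onto the scale that assigns value_low and value_high
    to the readings observed at the two fixed points (linear response assumed)."""
    if reading_high == reading_low:
        raise ValueError("the two fixed points must give distinct readings")
    fraction = (reading - reading_low) / (reading_high - reading_low)
    return value_low + fraction * (value_high - value_low)


if __name__ == "__main__":
    # Hypothetical liquid column: 20 mm in melting ice, 220 mm in boiling water.
    height_mm = 94.0
    print(two_point_scale(height_mm, 20.0, 220.0))               # 0-100 (Celsius-like)
    print(two_point_scale(height_mm, 20.0, 220.0, 32.0, 212.0))  # 32-212 (Fahrenheit-like)
```

The same 94 mm reading maps to about 37 on the 0–100 scale and about 98.6 on the 32–212 scale, which is what embedding one scale in another means in practice.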

Nanomaterial-Based Thermometry

Just as the first thermometer was used for medicine, new thermometric technologies are adapted for medicine and biomedical research as they are developed, although not always with the success Santorio enjoyed. An early example of a micro- or nanoscale approach to thermometry that uses embedded scales and is relevant to medical settings comes from a patent filed in the late 1970s (19). This patent relies on changes in the magnetic characteristics of ferromagnetic, paramagnetic, and diamagnetic materials that correspond to changes in temperature. Using micron-sized particles injected i.v., the patent suggests that certain cells will absorb particles according to an equilibrium distribution that depends on intracellular temperature. With calibration to known temperatures, a sensitive three-dimensional (3D) scanner could then detect changes in the magnetic properties of these intracellular particles. Although the underlying physics is sound (20,21), there are no published examples of this technology being used. This is likely due to problems achieving broad cellular uptake, issues with biocompatibility, and a lack (at that time) of the viable 3D imaging technologies required to make such a method practical.
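The patent's exact readout is not described here, but for the paramagnetic case the textbook relation is Curie's law, χ = C/T, under which a single calibration at a known temperature is sufficient in principle. A minimal Python sketch under that assumption (an ideal paramagnet, susceptibility in arbitrary but consistent units); ferromagnetic and diamagnetic particles follow different temperature dependences and are not modeled.

```python
"""Hypothetical magnetic thermometry for an ideal paramagnet (Curie's law)."""


def curie_constant(chi_ref: float, t_ref_k: float) -> float:
    """Calibrate C = chi * T from one measurement at a known temperature."""
    return chi_ref * t_ref_k


def temperature_from_susceptibility(chi: float, curie_c: float) -> float:
    """Invert Curie's law, chi = C / T, to estimate temperature in kelvin."""
    if chi <= 0:
        raise ValueError("a paramagnet has positive susceptibility")
    return curie_c / chi


if __name__ == "__main__":
    c = curie_constant(chi_ref=1.0, t_ref_k=310.15)  # calibrated at 37 C
    # A 1% drop in susceptibility corresponds to warming by roughly 3 K.
    print(temperature_from_susceptibility(0.99, c))
```

The example also hints at why sensitivity is a practical challenge: near body temperature, a 1 K change alters the susceptibility of an ideal paramagnet by only about 0.3%.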

With the creation of nanomaterials, this same theory has been applied to develop a more practical cellular thermometry. The origins of this technology lie in the development of fluorescent quantum dots (QDs), also known as semiconductor nanocrystals. QDs have found many in vitro uses as fluorescent labels because of their size-dependent emission wavelengths, bright fluorescence, and resistance to photobleaching (22). However, the main effect of temperature on QDs in the physiologic range is to shorten their radiative lifetimes as temperature increases through 300 K (26.85°C) (22–24). Although QDs respond to temperature itself only to a limited extent, the intensity of the fluorescence they emit is significantly affected by the makeup of their immediate surroundings (22). Thus, QDs can provide a simple, fluorescence-based detection system that is sensitive to environmental conditions.

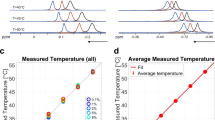

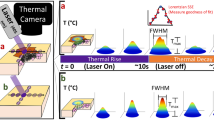

Materials scientists have taken advantage of the environmental sensitivity of QDs to develop nanogels that change structure significantly in response to temperature. These nanomaterial gels expand or contract with temperature changes, and this conformational change at the molecular level excludes ions and even water molecules (25). When QDs are placed in or conjugated to these nanogels, changes in temperature alter the local environment such that the QDs significantly change the intensity of the fluorescence they emit (25). These technologies have been used successfully to measure relevant temperatures in physiologic buffers (25).

Recent advances in this nanogel technology no longer rely on QDs for reporting but instead use chemical modifications that give the nanogel itself environmentally sensitive fluorescence. This type of engineered nanogel behaves similarly to QD-containing nanogels: it changes conformation in response to temperature changes, altering the molecular environment and thus changing the emission of the integrated fluorescent moieties (26). This fluorescent nanogel thermometer has been successfully microinjected into COS-7 cells, where it showed temperature-dependent fluorescence with a resolution of 0.31°C at ∼28°C (26).
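Reading a fluorescent thermometer of this kind requires a calibration curve measured at known temperatures, which is then inverted. The Python sketch below uses piecewise-linear interpolation and the common estimate of resolution as signal noise divided by sensitivity. It is not the procedure of ref. 26, and the calibration values are hypothetical, chosen only to show the mechanics; a curve that decreases with temperature would need its order reversed first.

```python
"""Hypothetical calibration and readout of a fluorescent nanogel thermometer."""

from bisect import bisect_left


def invert_calibration(intensity, cal_temps, cal_intensities):
    """Estimate temperature by piecewise-linear interpolation of a calibration
    curve whose intensities increase strictly with temperature."""
    if len(cal_temps) != len(cal_intensities) or len(cal_temps) < 2:
        raise ValueError("need at least two paired calibration points")
    if any(b <= a for a, b in zip(cal_intensities, cal_intensities[1:])):
        raise ValueError("calibration intensities must increase strictly")
    if not cal_intensities[0] <= intensity <= cal_intensities[-1]:
        raise ValueError("intensity outside the calibrated range")
    i = max(bisect_left(cal_intensities, intensity), 1)  # upper end of the bracketing segment
    i0, i1 = cal_intensities[i - 1], cal_intensities[i]
    t0, t1 = cal_temps[i - 1], cal_temps[i]
    return t0 + (intensity - i0) * (t1 - t0) / (i1 - i0)


def temperature_resolution(intensity_noise, slope_per_degree):
    """Smallest resolvable temperature change: noise divided by |dI/dT|."""
    return intensity_noise / abs(slope_per_degree)


if __name__ == "__main__":
    temps = [26.0, 28.0, 30.0, 32.0]        # hypothetical calibration temperatures (C)
    intensities = [1.00, 1.20, 1.50, 1.90]  # hypothetical normalized intensities
    print(invert_calibration(1.35, temps, intensities))
    print(temperature_resolution(0.01, (1.50 - 1.20) / 2.0))
```

The resolution estimate makes the design trade-off explicit: a steeper calibration curve or a quieter detector both improve the smallest temperature change that can be resolved.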

One of the largest concerns about the use of nanomaterials in the biologic arena, and particularly in medicine, is biocompatibility. Early studies of nanomaterials in biologic systems have shown that many nanomaterials are unpredictably reactive and can produce reactive oxygen species, radicals, and a host of other by-products as a result of their highly ordered structures (27,28). Although engineered nanomaterials that could serve as in situ thermometric systems have been tested extensively for biocompatibility (25,26), the complexity of biologic systems means that minor but significant effects on cellular systems may exist that have not yet been observed. Further, much of the biocompatibility testing has been performed over short time scales. Longer-term interactions must also be studied, because the biomolecules used for targeting are likely to be degraded over time, exposing the QD cores; such systems have been examined in animal models with few observed effects, but long-term effects have not been examined beyond noting tissue-specific accumulation (22). This lack of data poses significant problems for long-term in vivo and, ultimately, clinical applications of this technology.

Biomolecular-Based Molecular Thermometry

To circumvent the biocompatibility question raised by engineered nanomaterials, other systems must be investigated. Perhaps the most biocompatible nanomaterials are biologic macromolecules themselves. Biologic molecules are naturally occurring nanomaterials: highly ordered molecular structures in the size range characteristic of engineered nanomaterials, less than 100 nanometers (nm) in any dimension (Fig. 1). Moreover, because of biologic diversity, biologic macromolecules function across a wide range of temperatures. This eliminates the problem of a relevant scale that arises when QDs are used for temperature sensing without nanogels. In addition, the engineering of specific biomolecules has become routine; we can readily design and even evolve bionanomaterials to perform specific functions [e.g. aptamers (29)]. The next step, designing biologic molecules for intracellular thermometry, is a relatively small one.

Some synthetic polymer gels share physical/chemical, and thus structural, features with the contents of living cells. Like their synthetic counterparts, hydrogels constructed in whole or in part of natural biopolymers are gaining increased attention for thermometric applications. From a physical perspective, such pseudo-natural gels mimic the nanoscale framework of actual intracellular matrices such as the cytoplasm, a unique and dynamic hydrogel created by short and long polymers that associate biochemically to give gelatinous qualities (30). Much as water freezes to ice, the biopolymers that constitute some hydrogels can undergo significant physical transformations at temperature thresholds (31), which makes them useful for thermometry. In this context, hydrogel transformations have been termed “phase transitions” by a growing cohort of biophysicists (32); such transitions were first predicted through thermodynamic calculation by polymer scientists (33) and subsequently modeled by experiment (34,35). These transitions, characterized by massive conformational changes such as protein denaturation or the melting of hybridized nucleotide complexes, are typically triggered at thresholds by small environmental shifts, much like the freezing and boiling of liquids. The denaturation of protein in a cooking egg provides an excellent example. The biopolymer interactions that maintain the character of hydrogels are pseudo-stable at a given heat content, pH, and ionic strength through a conglomerate of weak intermolecular interactions; by their nature, these interactions become unstable at physical/chemical thresholds. Although the temperature-induced shift of a selected biopolymer (e.g. a specific protein) from one folded state to another is well recognized, the accompanying shift in the stability of the gel-like plasma in which proteins reside is less acknowledged. Yasunaga et al. attempted to model this plasma-like gelling behavior with hydrogels of a common polymer, poly(N-isopropylacrylamide) (PNIPAM) (36,37). Using nuclear magnetic resonance, they demonstrated that as the temperature of a PNIPAM-containing solution was progressively increased, a surrogate metric for the motional freedom of associated water (the relaxation time T2, in seconds) also increased, up to a marked threshold. Beyond a critical temperature, termed the “gelation temperature,” water motion became markedly restricted as the system gelled. An analogous thermodynamic motif emerged that parallels the freezing of pure substances: temperature thresholds that predictably coincide with profound structural changes can be leveraged for thermometry (Fig. 2).
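In practice, a threshold such as a gelation temperature is often located where the measured quantity changes most steeply with temperature. A minimal Python sketch of that finite-difference estimate; the T2 values in the example are hypothetical and merely mimic the rise-then-collapse shape described above, not the data of Yasunaga et al.

```python
"""Locate a transition threshold as the steepest segment of a measured curve."""


def steepest_transition(temps, values):
    """Return the midpoint of the temperature interval with the largest
    absolute change per degree (a simple finite-difference estimate)."""
    if len(temps) != len(values) or len(temps) < 2:
        raise ValueError("need at least two paired measurements")
    best_slope, best_t = -1.0, None
    for t0, t1, v0, v1 in zip(temps, temps[1:], values, values[1:]):
        if t1 <= t0:
            raise ValueError("temperatures must increase strictly")
        slope = abs(v1 - v0) / (t1 - t0)
        if slope > best_slope:
            best_slope, best_t = slope, 0.5 * (t0 + t1)
    return best_t


if __name__ == "__main__":
    temps_c = [26.0, 28.0, 30.0, 32.0, 34.0, 36.0]
    t2_s = [1.00, 1.10, 1.20, 1.30, 0.40, 0.30]  # hypothetical T2 values (s)
    print(steepest_transition(temps_c, t2_s))
```

With only a handful of measurements the estimate can be no finer than the temperature step, which is one reason dense sampling around a threshold matters.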

Fig. 2. Generalized structural motif representing how some engineered polymeric hydrogels respond to temperature shifts, as judged by water exclusion. The fluidity of hydrogels is governed by weak electrostatic interactions between water and the polymers comprising the gel. When temperature reaches a “gel” threshold, water and polymer order undergo marked structural rearrangement. This behavior parallels the thresholds at which pure substances undergo conventional phase changes.

Some of the earliest studies of genetic mutation took advantage of these biopolymer transitions in response to changes in temperature (38,39). The use of temperature sensitive (ts) mutants, or wild-type (wt) biomolecules for that matter, is typically considered only in the context of their biologic function; however, they can also provide information about their environment. These mutants (both heat and cold sensitive) essentially function as biologic thermometers; they have a detection system (i.e. the function of the biomolecule typically evidenced through a detectable phenotype) and a relevant scale can be readily defined (i.e. the biomolecule functions at 35°C but not at 42°C). Although much of the literature surrounding ts mutants focuses on function as a measure of denaturation or structural change in an on/off manner, some studies have examined function through a range of temperatures.

Chao et al. developed a series of lacI-ts mutants to eliminate the need for biochemical analogs to activate lactose-triggered expression systems (40). Instead of using expensive reagents to induce the desired expression of a critical gene cassette, they engineered the key regulatory protein (LacI) to denature thermally at predetermined temperatures and thereby expose the transcription factor-binding site (the T7A1 promoter with synthetic lacO sites), which controlled the downstream production of a valuable pharmaceutically active protein. In steps of 1–3°C, they examined expression of the reporter protein LacZ from 30 to 40°C and demonstrated a significant response in LacZ activity corresponding to stepwise temperature increases through this physiologically relevant range (40). In developing a temperature-based expression system to reduce the cost of pharmaceutical production, this group has laid the groundwork for a ts mutant-based intracellular thermometer. Further work characterizing these vectors, perhaps with different reporter constructs or in different cell systems, will be required to confirm the clinical applicability of such a system as a biomolecular thermometer.

Even simpler systems for a biomolecular thermometer can be imagined. One report used loss of enhanced green fluorescent protein (EGFP) fluorescence as a marker for cellular heating, assuming that heat denaturation caused this loss of fluorescence (Wood W et al., The use of fluorescent dyes and other markers to measure elevated temperature in cells during RF exposure, Bioelectromagnetics Society Meetings, June 14–19, 2009, Davos, Switzerland, Abstract P-63). However, this group observed a sharp reduction in fluorescence across a narrow temperature threshold, limiting the sensitivity and applicable range of this EGFP-based system. This approach can likely be applied to other reporter proteins, wt or engineered. Because of their individual structural features and stabilities, other proteins would likely behave differently, denaturing at different temperatures or perhaps responding over broader temperature ranges. Several reporters could be used in concert to achieve a broad-range thermometric system in a cell using native wt proteins.
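If each reporter in such a panel behaved as an idealized switch, intact below its denaturation threshold and dark above it, the pattern of lost and retained signals would bracket the temperature. A minimal Python sketch; the thresholds are hypothetical, and real reporters denature over a range rather than at a single point.

```python
"""Bracket temperature from a panel of reporters with different denaturation thresholds."""

import math


def bracket_temperature(thresholds_c, lost_signal):
    """Return (lower, upper) bounds consistent with which reporters lost their
    signal, treating each reporter as an ideal on/off switch at its threshold."""
    if len(thresholds_c) != len(lost_signal):
        raise ValueError("one readout per reporter is required")
    lower, upper = -math.inf, math.inf
    for threshold, lost in zip(thresholds_c, lost_signal):
        if lost:
            lower = max(lower, threshold)  # temperature reached this threshold
        else:
            upper = min(upper, threshold)  # temperature stayed below this threshold
    if lower > upper:
        raise ValueError("readout is inconsistent with ideal switches")
    return lower, upper


if __name__ == "__main__":
    thresholds = [37.0, 39.0, 41.0, 43.0]  # hypothetical denaturation thresholds (C)
    readout = [True, True, False, False]   # the first two reporters have gone dark
    print(bracket_temperature(thresholds, readout))
```

Adding reporters with more closely spaced thresholds narrows the bracket, which is the sense in which several reporters used together can extend both range and resolution.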

Another approach to an EGFP-based thermometric system would be to examine not the loss of fluorescence, but rather changes in the emission spectrum of a fluorescent protein. The various EGFP derivatives with different excitation and emission spectra represent minor structural changes to the molecule. Much as ts mutations alter the sensitivity of a protein to temperature, similarly subtle shifts in excitation and emission could be produced by changes in temperature. A recent biophysics article has in fact demonstrated changes in the emission wavelengths of wt green fluorescent protein with increasing temperature (41). The temperatures tested ranged from 85 K to 294 K (−188.15 to +20.85°C) (41). Although this is just shy of a clinically relevant range, the trend would likely continue and could be seen in EGFP and other protein-based fluorophores. Perhaps most importantly, changes in emission are quite easy to detect with high sensitivity.
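If the emission peak shifted approximately linearly over the range of interest, calibration would reduce to fitting a least-squares line to peak wavelengths measured at known temperatures and then inverting it. A minimal Python sketch under that linearity assumption; the peak positions are hypothetical and do not reproduce the magnitude or direction of the shift reported in ref. 41, and extrapolating the line beyond the calibrated range would remain unverified.

```python
"""Hypothetical linear calibration of an emission-peak shift against temperature."""


def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired points")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("temperatures must not all be equal")
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def temperature_from_peak(peak_nm, slope, intercept):
    """Invert the calibration line to estimate temperature from a peak position."""
    if slope == 0:
        raise ValueError("a flat calibration cannot be inverted")
    return (peak_nm - intercept) / slope


if __name__ == "__main__":
    temps_c = [20.0, 25.0, 30.0, 35.0]       # hypothetical calibration temperatures
    peaks_nm = [508.0, 508.5, 509.0, 509.5]  # hypothetical emission maxima
    slope, intercept = fit_line(temps_c, peaks_nm)
    print(slope, intercept)
    print(temperature_from_peak(508.7, slope, intercept))
```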

Aside from proteins, there are a variety of molecular mechanisms by which temperature is sensed in the cell; heat-shock-induced expression changes provide an RNA-based example (42). In one system, temperature-dependent control of heat-shock gene expression relies on the ROSE RNA element (43). The ROSE sequence is found in the mRNA of a heat-shock transcript and regulates translation of that mRNA. At 30°C, a ribosomal binding sequence is occluded in the ROSE stem-loop structure; however, as the cell heats to 42°C, the hydrogen bonds forming the stem-loop become unstable, or “melt,” exposing the ribosomal binding sequence and allowing translation (43). Thus, this study demonstrated that the 3D structure of a biomolecule can act as a thermometer in vivo.

The melting curves of the ROSE element and a mutant were measured from 0 to 100°C at various ion concentrations. The curves manifested as distinct distributions, suggesting that the stem-loop structure did not melt in all molecules simultaneously (43). This population distribution of melted and intact stem-loop structures at any given temperature indicates that a standard could be determined to relate temperature to different families of reporter systems. Incorporating the ROSE element into a marker transcript (EGFP, LacZ, etc.) would provide such a detection system and lead to RNA-based thermometry within a cell.
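A standard way to turn such a population distribution into a scale is the two-state (van 't Hoff) model, in which the fraction of melted molecules is f = K/(1 + K) with K = exp[−(ΔH/R)(1/T − 1/Tm)]; inverting it recovers temperature from a measured fraction. The Python sketch below assumes two-state behavior, which a multi-domain RNA structure may not obey, and uses a hypothetical melting temperature and enthalpy rather than values for the ROSE element.

```python
"""Two-state (van 't Hoff) melting model and its inversion."""

import math

R_GAS = 8.314  # J/(mol*K): gas constant


def fraction_melted(t_k, tm_k, dh_j_per_mol):
    """Fraction of molecules melted at temperature T for a two-state transition
    with melting temperature Tm (where half are melted) and enthalpy dH > 0."""
    x = (dh_j_per_mol / R_GAS) * (1.0 / t_k - 1.0 / tm_k)  # x = -ln K
    if x > 700.0:  # far below Tm: avoid overflow in exp()
        return 0.0
    return 1.0 / (1.0 + math.exp(x))


def temperature_from_fraction(f, tm_k, dh_j_per_mol):
    """Invert the two-state model: temperature (K) at which a fraction f is melted."""
    if not 0.0 < f < 1.0:
        raise ValueError("fraction must lie strictly between 0 and 1")
    k = f / (1.0 - f)  # equilibrium constant, melted : intact
    return 1.0 / (1.0 / tm_k - (R_GAS / dh_j_per_mol) * math.log(k))


if __name__ == "__main__":
    tm, dh = 310.15, 250e3  # hypothetical: Tm = 37 C, dH = 250 kJ/mol
    for t_c in (30.0, 37.0, 42.0):
        print(t_c, round(fraction_melted(t_c + 273.15, tm, dh), 3))
```

Under these hypothetical parameters, roughly 10% of molecules are melted at 30°C and roughly 80% at 42°C, so a fractional readout varies smoothly across the interval instead of switching once.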

Just as melting of the ROSE stem-loop occurs as a distribution across a temperature range, small DNA hairpins would likely behave in a similar manner. Hairpin probes designed for quantitative polymerase chain reaction (qPCR) carry a fluorophore at one end of the strand and a quencher at the other: fluorescence is quenched while the probe is folded into a hairpin but is restored when the hairpin melts and binds the target amplicon. Much as heat “melts” a ROSE element and exposes a ribosomal binding sequence, a DNA hairpin tagged with a quenched fluorophore would fluoresce beyond a denaturing temperature threshold. The base order, AT/GC content, and hairpin length of a family of such tagged oligonucleotides could be engineered to give desired melting temperatures, so that individual hairpins cover different parts of the relevant temperature range. The relative fluorescence could then be calibrated against known temperatures to provide relevant scales.
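One way to picture the calibration of such a hairpin family is to model each hairpin as a sigmoidal switch centered on its own melting temperature and to sum their normalized signals: staggered melting temperatures then yield a single response that rises steadily across a broad range and can be inverted numerically. A minimal Python sketch; the logistic shape, the 1.5°C transition width, and the melting temperatures are all hypothetical assumptions, not designed sequences.

```python
"""Hypothetical hairpin family: summed signal versus temperature, inverted by bisection."""

import math


def family_signal(t_c, melting_temps_c, width_c=1.5):
    """Sum of normalized fluorescence from hairpins modeled as logistic
    switches, each half-melted at its own melting temperature."""
    return sum(1.0 / (1.0 + math.exp(-(t_c - tm) / width_c)) for tm in melting_temps_c)


def temperature_from_signal(signal, melting_temps_c, width_c=1.5,
                            lo=0.0, hi=100.0, tol=1e-6):
    """Invert the strictly increasing summed signal on [lo, hi] by bisection."""
    s_lo = family_signal(lo, melting_temps_c, width_c)
    s_hi = family_signal(hi, melting_temps_c, width_c)
    if not s_lo <= signal <= s_hi:
        raise ValueError("signal outside the range covered by [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if family_signal(mid, melting_temps_c, width_c) < signal:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    tms = [34.0, 37.0, 40.0, 43.0]  # hypothetical melting temperatures (C)
    reading = family_signal(38.2, tms)
    print(round(temperature_from_signal(reading, tms), 3))
```

Because each added hairpin contributes its steepest response near its own melting temperature, spacing the melting temperatures a few degrees apart keeps the summed signal sensitive across the whole span rather than only at one threshold.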

Summary

In this review, we have presented hallmarks in the development and application of modern thermometry in biologic applications, as well as some of the historical path by which thermometry emerged and progressed into contemporary thermodynamics and physics. We close by acknowledging the unfortunate overlapping usage of the term “scales” in this context: one refers to the scale of the systems we desire to measure (organism, tissue, or cell); another to the scale of the system reporting local heat content (a thermocouple, a contained thermometer, or an intracellular biopolymer); and a third to the various mathematical scales benchmarked to unique material behaviors (the freezing of water or the denaturing of proteins) that thermometric systems use to report heat content. This multiple usage mirrors the overlap of the many fields, including thermodynamics, molecular biology, materials science, and physics, that have created the potential for molecular thermometry to serve important functions in medical applications.

The art and science of thermometry have experienced unprecedented acceleration over the past decade and, fortunately, have followed technological trends of miniaturization into the micro- and even nanoscale. At the intracellular scale, a variety of molecular thermometers have emerged with markedly different physical bases: some are based on magnetic behaviors, others on cutting-edge nanomaterials, and yet others on biopolymer behaviors, both native and engineered. All of these thermometric systems share two things with conventional thermometry: a method of detecting temperature changes (i.e. the loss or gain of energy through heat transfer) and a relevant scale so that temperature readings can be normalized and compared. These systems span a tremendous range of complexity, from individual molecules or particles, to hydrogels, to transcription control systems. Advances in nanomaterials and bioengineering will no doubt lead to thermometry improvements that we cannot yet envision.

It is important to note that many of the molecular thermometers presented herein are hypothetical and have yet to mature into reliable temperature-reporting systems; this is particularly true of those based on biomolecular functions. In this realm, the only system actually serving as a functional thermometer beyond the context of immediate biologic function is the one incorporating EGFP denaturation. Although biomolecular thermometers may seem likely to be lost in the complexity of cells, far more complicated biologic systems have been used as thermometers for over one hundred years. It had long been recognized that the activity of common crickets varies with temperature in temperate climates, but in 1897, Dolbear reported that the rate of audible cricket chirps closely correlates with temperature. His observations showed that the temperature in °F equals 50 plus the number of chirps per minute minus 40, divided by 4, that is, T = 50 + (N - 40)/4, where N is the number of chirps per minute. This relation provided the relevant scale for a macro-level observation, turning a cricket into a thermometer (44). Likewise, the further evolution of thermometry will require classical thermodynamics to embrace other disciplines, and it is an exciting time for those collaborating at this unique cusp.
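Dolbear's relation is a compact example of a reporter signal (chirps counted in one minute, N) mapped onto an established temperature scale. A minimal Python sketch follows, with a Fahrenheit-to-Celsius conversion added so the cricket reading can be compared on another scale. The function names are our own, and the relation is an empirical fit that should not be trusted outside the range of temperatures over which crickets actually chirp.

```python
def dolbear_fahrenheit(chirps_per_minute: float) -> float:
    """Temperature in °F from a cricket chirp rate, via T = 50 + (N - 40) / 4."""
    if chirps_per_minute < 0:
        raise ValueError("chirp rate cannot be negative")
    return 50.0 + (chirps_per_minute - 40.0) / 4.0


def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert °F to °C so the cricket reading can be compared on another scale."""
    return (temp_f - 32.0) * 5.0 / 9.0


if __name__ == "__main__":
    # Example: 120 chirps counted in one minute.
    t_f = dolbear_fahrenheit(120)
    print(t_f)  # 70.0
    print(round(fahrenheit_to_celsius(t_f), 1))  # 21.1
```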

Abbreviations

- EGFP: enhanced green fluorescent protein
- EMF: electromagnetic fields
- QD: quantum dot
- ts: temperature sensitive

References

Donovan JA, Jochimsen PR, Folk GE Jr, Loh PM 1985 Temperature over tumors in hairless mice. Cryobiology 22: 499–502

Saxena AK, Willital GH 2008 Infrared thermography: experience from a decade of pediatric imaging. Eur J Pediatr 167: 757–764

Sterns EE, Zee B 1991 Thermography as a predictor of prognosis in cancer of the breast. Cancer 67: 1678–1680

Kettering M, Zorn H, Bremer-Streck S, Oehring H, Zeisberger M, Bergemann C, Hergt R, Halbhuber KJ, Kaiser WA, Hilger I 2009 Characterization of iron oxide nanoparticles adsorbed with cisplatin for biomedical applications. Phys Med Biol 54: 5109–5121

Latorre M, Rinaldi C 2009 Applications of magnetic nanoparticles in medicine: magnetic fluid hyperthermia. P R Health Sci J 28: 227–238

Tong L, Wei Q, Wei A, Cheng JX 2009 Gold nanorods as contrast agents for biological imaging: optical properties, surface conjugation and photothermal effects. Photochem Photobiol 85: 21–32

Mulcahy R 1996 Medical Technology: Inventing the Instruments. Oliver Press, Minneapolis, pp 17–24

Bolton HC 2007 Evolution of the Thermometer, 1592–1743. Kessinger Publishing, LLC, Whitefish, pp 7–27

Cena K, Clark JA (eds) 1981 Bioengineering, Thermal Physiology and Comfort. Elsevier Science, New York, pp 82–85

Çengel YA, Turner RH, Cimbala JM 2008 Fundamentals of Thermal-Fluid Sciences. McGraw-Hill, New York

Spink WW 1978 Infectious Diseases: Prevention and Treatment in the Nineteenth and Twentieth Centuries. University of Minnesota Press, Minneapolis

Lewandowski HJ, Harber DM, Whitaker DL, Cornell EA 2003 Simplified system for creating a Bose-Einstein condensate. J Low Temp Phys 132: 309–367

Donley EA, Claussen NR, Cornish SL, Roberts JL, Cornell EA, Wieman CE 2001 Dynamics of collapsing and exploding Bose-Einstein condensates. Nature 412: 295–299

Roberts JL, Claussen NR, Cornish SL, Donley EA, Cornell EA, Wieman CE 2001 Controlled collapse of a Bose-Einstein condensate. Phys Rev Lett 86: 4211–4214

Childs PR, Greenwood JR, Long CA 2000 Review of temperature measurement. Rev Sci Instrum 71: 2959–2978

Preston-Thomas H 1990 The international temperature scale of 1990 (ITS-90). Metrologia 27: 3–10

Lustgarten DL, Keane D, Ruskin J 1999 Cryothermal ablation: mechanism of tissue injury and current experience in the treatment of tachyarrhythmias. Prog Cardiovasc Dis 41: 481–498

Spurr EE, Wiggins NE, Marsden KA, Lowenthal RM, Ragg SJ 2002 Cryopreserved human haematopoietic stem cells retain engraftment potential after extended (5–14 years) cryostorage. Cryobiology 44: 210–217

Gordon RT 1979 Intracellular temperature measurement. US Patent No. 4,136,683. Available at: http://patft.uspto.gov. Accessed January 28, 2010

Gilbert W 1991 De magnete. Dover Publications, Mineola

Uyeda C, Tanaka K, Takashima R, Sakakibara M 2003 Characteristics of paramagnetic and diamagnetic anisotropy which induce magnetic alignment of micron-sized non-ferromagnetic particles. Mater Trans JIM 44: 2594–2598

Alivisatos AP, Gu W, Larabell C 2005 Quantum dots as cellular probes. Annu Rev Biomed Eng 7: 55–76

Borri P, Langbein W, Schneider S, Woggon U, Sellin RL, Ouyang D, Bimberg D 2001 Ultralong dephasing time in InGaAs quantum dots. Phys Rev Lett 87: 157401

XB Zhang RD 2004 Thermal effects on the luminescent properties of quantum dots. In: Schwartz JA, Contescu CI, Putyera K (eds) Dekker Encyclopedia of Nanoscience and Nanotechnology. Marcel Dekker, Inc, New York, pp 3873–3881

Hardison D, Deepthike HU, Senevirathna W, Pathirathne T, Wells M 2008 Temperature-sensitive microcapsules with variable optical signatures based on incorporation of quantum dots into a highly biocompatible hydrogel. J Mater Chem 18: 5368–5375

Gota C, Okabe K, Funatsu T, Harada Y, Uchiyama S 2009 Hydrophilic fluorescent nanogel thermometer for intracellular thermometry. J Am Chem Soc 131: 2766–2767

Park EJ, Yi J, Chung KH, Ryu DY, Choi J, Park K 2008 Oxidative stress and apoptosis induced by titanium dioxide nanoparticles in cultured BEAS-2B cells. Toxicol Lett 180: 222–229

Xia T, Kovochich M, Brant J, Hotze M, Sempf J, Oberley T, Sioutas C, Yeh JI, Wiesner MR, Nel AE 2006 Comparison of the abilities of ambient and manufactured nanoparticles to induce cellular toxicity according to an oxidative stress paradigm. Nano Lett 6: 1794–1807

Nimjee SM, Rusconi CP, Sullenger BA 2005 Aptamers: an emerging class of therapeutics. Annu Rev Med 56: 555–583

Porter KR, Beckerle M, McNiven M 1983 The cytoplasmic matrix. Mod Cell Biol 2: 259–302

Hoffman A 1991 Conventional and environmentally sensitive hydrogels for medical and industrial uses: a review paper. Polym Gels 268: 82–87

Pollack GH 2001 Cells, Gels and the Engines of Life. Ebner and Sons Publishing, Seattle

Dusek K, Patterson D 1968 A transition in swollen polymer networks induced by intramolecular condensation. J Polym Sci Polym Chem 6: 1209–1216

Tanaka T 1981 Gels. Sci Am 244: 124–136, 138

Tanaka T, Annaka M, Ilmain F, Ishii K, Kokufuta E, Suzuki A, Tokita M 1992 Phase transitions of gels. In: Karalis TK (ed) Mechanics of Swelling: From Clays to Living Cells and Tissues. Springer-Verlag, Inc, Berlin, pp 683–703

Yasunaga H, Ando I 1993 Effect of cross-linking on the molecular motion of water in polymer gel as studied by pulse ¹H NMR and PGSE ¹H NMR. Polym Gels Netw 1: 267–274

Yasunaga H, Kobayashi M, Matsukawa S, Kurosu H, Ando I 1997 Structure and dynamics of polymer gel systems viewed from NMR spectroscopy. Annu Rep NMR Spectrosc 34: 39–104

Chakshusmathi G, Mondal K, Lakshmi GS, Singh G, Roy A, Ch RB, Madhusudhanan S, Varadarajan R 2004 Design of temperature-sensitive mutants solely from amino acid sequence. Proc Natl Acad Sci USA 101: 7925–7930

Fried M 1965 Cell-transforming ability of a temperature-sensitive mutant of polyoma virus. Proc Natl Acad Sci USA 53: 486–491

Chao YP, Chern JT, Wen CS, Fu H 2002 Construction and characterization of thermo-inducible vectors derived from heat-sensitive lacI genes in combination with the T7 A1 promoter. Biotechnol Bioeng 79: 1–8

Leiderman P, Huppert D, Agmon N 2006 Transition in the temperature-dependence of GFP fluorescence: from proton wires to proton exit. Biophys J 90: 1009–1018

Narberhaus F, Waldminghaus T, Chowdhury S 2006 RNA thermometers. FEMS Microbiol Rev 30: 3–16

Chowdhury S, Maris C, Allain FH, Narberhaus F 2006 Molecular basis for temperature sensing by an RNA thermometer. EMBO J 25: 2487–2497

Eaton ER, Kaufman K 2007 Kaufman Field Guide to Insects of North America. Houghton Mifflin Harcourt, Geneva, pp 82

Additional information

Supported by the State of Colorado and NIH Grant #1R01 EB 009115-01 A1.

About this article

Cite this article

McCabe, K., Hernandez, M. Molecular Thermometry. Pediatr Res 67, 469–475 (2010). https://doi.org/10.1203/PDR.0b013e3181d68cef

DOI: https://doi.org/10.1203/PDR.0b013e3181d68cef
