Abstract

The mechanism by which heart rate variability (HRV) changes during neonatal illness is not known. One possibility is that reduced HRV is merely a diminished or scaled-down version of normal. Another possibility is that there is a fundamental change in the mechanism underlying HRV, resulting in a change in the ordering of RR intervals. We investigated the nature and extents of order in RR interval time series from 25 Neonatal Intensive Care Unit patients with a spectrum of clinical illness severity and HRV. We measured predictability (deviation of predicted intervals from observed), and regularity (measured as approximate entropy) of RR interval time series showing different degrees of HRV. In RR interval time series where the effects of scaling were removed, we found 1) records showing normal HRV had more order than those showing low HRV; 2) the nature of the order was more like that of a periodic process with frequencies over a large range(time series whose log-log power spectrum had a 1/f distribution) than that of chaotic one (logistic map); and 3) the nature of order did not change greatly as HRV fell. We conclude that neonatal RR interval time series are ordered by periodic processes with frequencies over a large range, and that the extent of order is less during illness when HRV is low.

Similar content being viewed by others

Main

In healthy newborn infants, time series of heart period (or RR intervals, the time between successive heartbeats) show obvious variability. This phenomenon, termed HRV, arises from the interplay of the sympathetic and parasympathetic arms of the autonomic nervous system, which act, respectively, to speed or slow the heart rate. HRV is reduced, however, in at-risk infants or during severe neonatal illness(1–6). Mathematical analyses of heart rate variability(7) have included use of descriptive statistics and other time-domain calculations(8–13), frequency domain calculations(14–17), and nonlinear dynamical analyses(18–21). These measures have been used most often to distinguish abnormal HRV from normal and to estimate parasympathetic tone. In addition, these kinds of analyses have been used to address two kinds of mechanisms by which HRV might fall during illness.

The first possible mechanism is a simple one-the physiologic events that generate normal HRV are unchanged during illness, but cellular dysfunction limits the dynamic range of heart rate. In this case, mathematical analyses would show similar fundamental characteristics of abnormal and normal HRV, with a difference only in scaling. Specifically, measures such as the interbeat increments-the difference between successive RR intervals-would be lower during illness. Consider this example. Suppose you are to construct a time series of RR intervals beginning at a given initial value, and you are given a list of interbeat increments. You proceed by adding the first increment to the initial value, then you add the second increment to that sum, and so forth. Suppose you now construct a second time series using interbeat increments scaled down to one-tenth the size of the original. The two resulting time series would look very different-the second would appear much less variable-and that difference could be quantified by measures of scaling.

The second possible mechanism, on the other hand, calls for a fundamental difference in the physiologic processes involved and results in differences in the order of the time series. Consider this example. Suppose you are again to construct RR interval time series from a list of interbeat increments. One way to proceed would be to select the increments at random. Another way would be to put the increments in order, say from largest to smallest, and then to use them in that order. The two resulting time series would look very different-the second would appear much less variable-and that difference could be quantified by measures of order.

The differences in scaling and order in normal and abnormal HRV in adults and in newborn infants have been addressed. A seminal study of adult HRV demonstrated more order in normal HRV thatn in abnormal, and found no significant difference in scaling(22). Our similar analysis in newborn infants, however, had different results. We found little change in order during severe cardiorespiratory illness, and found larger changes in scaling(23), as the amplitude of the interbeat increments changed 2-3-fold(4). These findings suggested that the physiologic mechanisms that underlie normal HRV are fundamentally unchanged during illness, although they may be dampened. That analysis was limited in several ways. We investigated infants only at the extremes of illness and health, and did not allow for the possibility that more than one kind of order might be present.

To investigate further the issue of order in neonatal RR interval time series, we have used measures of order based on predictability and regularity. The goal was to quantify order in RR interval time series using new measures in a new and larger data set representing a broader range of neonatal illness severity. We hypothesized that this more comprehensive analysis might better reveal changes in order in normal compared with abnormal neonatal HRV. We also addressed the issue of the nature of the order in neonatal RR interval time series, distinguishing between the kinds of order found in periodic as opposed to chaotic time series.

METHODS

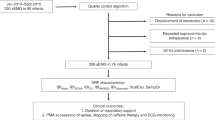

Data collection. RR interval time series data were collected in the Neonatal Intensive Care Unit of the University of Virginia Hospital. The University of Virginia Human Investigations Committee approved the protocol. The continuous analog output of a bedside ECG monitor was input to an 80486-based microcomputer equipped with a digital signal processor board(National Instruments AT-2200DSP) and sampled at 4 kHz. QRS complexes were identified using amplitude and duration criteria. We recorded the beat-to-beat(RR) time intervals in 0.25 msec increments and created data files of 4096 consecutive RR intervals. We arbitrarily selected 175 RR interval data files representing a wide range of heart rate variability from 25 patients. The RR interval data files were categorized based on the CV, a descriptive statistical measure defined as SD divided by mean (σ/μ) and expressed as a percentage, of the RR interval time series. We defined three groups: CV< 3.0 (group I), 3.0 ≤ CV < 6.0 (group II), and CV ≥ 6.0 (group III). These groups represented low HRV, and two degrees of normal HRV, respectively. The threshold values for classification were arbitrary. Group I included 50 files, group II included 76 files, and group III included 49 files.

Prediction error. Prediction of individual RR intervals was performed using a technique adapted from the work of Sauer(24, 25), and an example is shown in Figure 1. The goal of the process is to predict the next point in the time series of heart rate. This predicted value is then compared with the observed value. The process is repeated, point by point, for long epochs of data. If the differences between the predicted and observed values are small, then we conclude that the time series has a high degree of order. The prediction process consists of: 1) noting the two values before the point to be predicted; 2) searching the previous values for two that most closely match these two prior values; 3) noting the value that follows the past, similar pair of values; 4) adjusting this third value to allow for absolute differences in the past and present pairs of values; and 5) using this adjusted value as the predicted point.

Prediction algorithm. Prediction algorithm demonstrated for normalized RR interval data with scanning region size (h) = 60, window size (y) = 3, and the last point in the scanning region(n) = 60. To predict the 61st interval (n + 1), the scanning window is first positioned to include intervals 1, 2, and 3. The absolute differences of the slopes within this window (the first difference of intervals 1 and 2, and the first difference of intervals 2 and 3) and the corresponding slopes within the reference window (the first difference of intervals 58 and 59, and the first difference of intervals 59 and 60, respectively) are then summed to give the absolute error. The scanning window is then moved forward one point at a time through the entire scanning region, and the scanning window with the least absolute error is marked as the best match window. The best match window in this example includes intervals 13, 14 and 15. Thus, the first difference of intervals 15 and 16 (z and z+ 1, respectively) is added to interval 60 (n) to give the predicted 61st (n + 1) interval. The predicted value in this example, therefore, is: 1.318 + (0.82 - 1.242) = 0.896. The actual 61st interval in this data set is 1.062. Thus, the prediction error in this example is: 1.062 - 0.896 = 0.166.

We first removed the effects of scaling by normalizing the RR intervals to have mean (μ) = 0.0 and SD (σ) = 1.0. To predict the n + 1st RR interval (in Fig. 1, a question mark), we searched the preceding h RR intervals (scanning region; 60 points in Fig. 1) for the y intervals (scan window;y = 3 in Fig. 1) ending at an interval z (best match window) whose first differences most closely matched those of the intervals n-y + 1 to n (reference window; also y = 3 in Fig. 1). The predicted n + 1st interval was then taken to be a + b, where a is the nth interval and b is the first difference of the zth and z + 1st interval. We then calculated the error by subtracting the predicted value from the actual n + 1st RR interval. The adjustable parameters in the algorithm were h, y, and n, representing scanning region size, window size, and the last point in the scanning region, respectively. In this work, the scanning region was 1500 points, and the scan and reference windows were 4 points. We predicted RR intervals n + 1 = 1501 to n + 1 = 1600 in each of 175 data files. Predictability of data within a single data file was then quantified by calculating normalized mean-squared error (NMSE): Equation where N is the number of RR intervals predicted (100, in our case), σ2 is the variance of the RR intervals (1.0, after the normalization), RRactual is the observed value of the n + 1st RR interval, and RRpredicted is a + b, the predicted value. As predictability increases, NMSE (which we will refer to as the prediction error) decreases. Therefore, highly ordered (and thus predictable) time series generate low prediction errors. By definition, setting RRpredicted equal to the mean RR interval results in an prediction error of 1.0, and perfect prediction results in an error of 0.0. There is no upper bound on the value of the prediction error; the mean for our random number data sets was 2.28.

Approximate entropy. We implemented the algorithm developed by Pincus(26–28) (kindly supplied by Dr. Pincus). This statistic estimates regularity of time series, and is calculated as the negative logarithm of the likelihood that patterned runs of data points remain similar. For example, consider a run of any m + 1 consecutive numbers of the time series (we used m = 2). Suppose that the first m numbers recur (within a tolerance r, for which we used 20% of the SD) elsewhere in the time series. We calculate the probability that the third (m + 1st) numbers of both runs also lie within the tolerance r of one another. ApEn is the negative logarithm of that probability. For repeating data sets, that probability of recurrence is 1 and ApEn is 0. For nonrepeating data sets, the probability of recurrence is 0, and ApEn is infinitely large. The precise values of ApEn depend entirely on record length and other parameters of the data set and algorithm, and ApEn can be used only for comparative purposes. In this context, low values of ApEn connote more order in the RR interval time series.

Prediction error and ApEn are related notions. Although calculation of prediction error uses the single best matching pattern within the scanning region, ApEn yields a probabilistic answer by weighing the predictions from all the patterns matching within a tolerance in the scanning region.

Experimental strategy. One of our goals was to quantify the extent of order in the RR interval time series. We approached this goal by performing numerical experiments using both the clinically observed RR interval time series and other time series of simulated RR intervals that we designed with specific statistical and mathematical features in mind. We have called these simulated RR interval time series numerical data sets(NDS). Each consisted of 4096 normally distributed data points with mean = 0 and SD = 1.0. Figure 2 shows segments of these simulated RR interval time series, their power spectra, and their frequency histograms. In particular, we were interested in the extent and nature of the order in these time series.

Numerical data sets. Three 4096 point data sets were constructed to perturb RR interval time series. For each, 100 points of the series (top panels), the power spectrum (middle panels), and frequency histogram (bottom panels) are shown. Power spectra are the average of spectra of windows of 512 points overlapping by 50%. The ordinate scales are logarithmic, as is the abscissa in the middle panel. The smooth lines in the frequency histograms are Gaussian functions with μ = 0 and σ = 1.

The first NDS contained random numbers(29), and we refer to this set as NDS(random). It contained no order at all.

The second NDS was the sum of 1000 sine waves of frequency 0.001-1.000 Hz whose log-log power spectrum had a 1/f distribution. This frequency content is similar to that of time series of RR intervals(30, 31), and we refer to this set as NDS(1/f). It was perfectly ordered, and the nature of the order was that of a periodic process with many frequencies.

The third NDS was a series derived from the logistic map, a nonlinear system that displays period-doubling bifurcations and regions of deterministic chaos depending on the value of a parameter λ. The logistic map is:Equation Setting λ = 4.0 and x0 = 0.1 resulted in a chaotic series, although the values do not have a normal distribution. We therefore mapped the normally distributed NDS(random) to the logistic time series. To do so, we assigned a rank order to each value in the 4096 point logistic time series that we created. For example, the largest value in the time series was assigned rank order 1, and the smallest was assigned rank order 4096. We then rearranged NDS(random) by these ranks so, for example, the largest and smallest values of NDS(random) appeared in the same position as the largest and smallest values of the logistic time series. The result was a data set that had both the deterministic order of the logistic time series as well as the normal distribution of NDS(random). We refer to this set as NDS(logistic). Like NDS(1/f), this time series was perfectly ordered, but the nature of the order was that of deterministic chaos.

With these well defined numerical data sets in hand, we set out to estimate the extent and the nature of the order in the clinically observed RR interval time series. The overall strategy was to prepare many new time series by combining the simulated and observed RR interval time series in known proportions, and measuring the extent of order in these new time series using prediction error and approximate entropy.

Data and statistical analysis. For data simulation and curvefitting, we used Origin (Microcal), SigmaPlot (Jandel), and our own FORTRAN programs. Correlations were tested using the Spearman rank order test(SigmaStat, Jandel). Differences between groups were tested for significance using the t test and ANOVA. When data sets failed tests for normality and equal variance, nonparametric tests were used (Kruskal-Wallis ANOVA on ranks; Dunn's method for all pairwise multiple comparison procedures)(32). Values of p less than 0.05 were taken to be significant.

RESULTS

Order of the numerical data sets. The prediction error and ApEn for NDS(random), NDS(1/f), and NDS(logistic) were 2.280 and 2.305; 0.002 and 1.412; and 0.000 and 0.652, respectively. The very low prediction error for NDS(logistic) confirms that the order of the logistic time series was preserved in the reordering of NDS(random). ApEn scores for the ordered number sets were higher than the prediction errors, indicating the probabilistic nature of this measure.

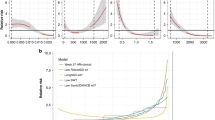

We performed numerical control experiments by combining these numerical data sets with one another. We first measured the effect of incrementally substituting NDS(random) for NDS(logistic), that is:Equation

This would have the effect of obscuring the order of NDS(logistic) as the proportion of NDS(random) increased, and the expected result is an increase in prediction error and ApEn. As shown in Figure 3A, the prediction error of NDS(logistic) is 0, validating the prediction algorithm. Substituting NDS(random) in a proportion of about 0.4 or more completely obscured the order of NDS(logistic), resulting in a prediction error indistinguishable from NDS(random).

Order of mixed numerical data sets. Prediction errors change as data sets are mixed. The leftmost and rightmost points in each plot are derived from unmixed data sets, as labeled. A and B demonstrate increasing prediction error as random numbers replace the deterministic number sets NDS(logistic) and NDS(1/f). The smooth lines in A and B are fits of Boltzmann functions to the data with w(0.5) and k of 0.2382 and 0.057 for A and 0.4413 and 0.134 for B. C and D demonstrate that mixed data sets have higher prediction errors whether the nature of order is different (C) or the same (D). In D, the averaged results of four and six separate realizations of NDS(1/f) and NDS(logistic) are plotted.

Next, we measured the effect of incrementally substituting NDS(random) for NDS(1/f), an ordered data set with frequency-domain characteristics more similar to RR interval time series. Generally, the results were similar to the results obtained by adding NDS(random) to NDS(logistic), but a larger proportion of NDS(random) was necessary to obscure the order of NDS(1/f)(Fig. 3B).

Next, we measured the effect of incrementally combining data sets with different kinds of order-here, substituting NDS(1/f) for NDS(logistic). This perturbation would have the effect of replacing the order of NDS(logistic) with another kind of order. The expected result is low prediction error at either extreme, where NDS(1/f) or NDS(logistic) predominate, and much higher prediction errors for mixed data sets. Figure 3C shows this result, with a large relative increase in prediction error for intermediate data sets. Note that the prediction errors of both NDS(1/f) and NDS(logistic) were near 0, confirming the ability of the prediction algorithm to detect order of more than one kind.

Finally, Figure 3D shows the result of substituting data sets with different extents of the same kind of order. The left-most points are the prediction errors for NDS(1/f) or NDS(logistic) replaced by NDS(random) in proportion 0.2. The rightmost points are the prediction errors for other realizations of NDS(1/f) and NDS(logistic) replaced by NDS(random) in proportion 0.8. In each case there is no large increase in prediction error for intermediate sets, such as that seen when one kind or order replaces another as in Figure 3C.

Order of the RR interval time series. We next measured the prediction error and ApEn in the 175 normalized RR interval time series. The mean (± SD) prediction errors were 1.40 ± 1.04 (group I), 0.88± 0.59 (group II), and 0.57 ± 0.53 (group III). The mean ApEn values were 1.40 ± 0.31, 1.02 ± 0.25, and 0.90 ± 0.34, respectively. All of these values are significantly lower than those of NDS(random) (2.280 and 2.305), demonstrating the presence of order in the RR interval time series.

Figure 4 shows the correlation of prediction error(A) and ApEn (B) with HRV. Both estimates of order demonstrated modest inverse correlations, with correlation coefficients of-0.4 and -0.6, respectively. This suggests that the order of the time series of RR intervals falls as HRV falls, in keeping with our findings(23) and with those of Goldberger and co-workers(22). The same data are shown as box plots in Fig. panels C and D. The differences between prediction errors for any two groups were significant, and the differences in ApEn between groups II and I and between groups I and III were significant. This demonstrates that the extent of order is higher in RR interval time series with normal HRV.

Order correlates with heart rate variability.(A) Prediction errors and (B) approximate entropy of the 175 RR interval time series plotted as a function of heart rate variability. Spearman correlation coefficients are -0.41 (A) and -0.58(B), p < 10-6. (C and D) box plots of the same data, categorized by level of heart rate variability.

Substituting observed data with random numbers. As shown in Figure 5, substituting NDS(random) obscured the order of all groups of RR interval time series. This was evidenced by an increase in prediction error and ApEn with increased proportion of NDS(random) for all three groups. We examined whether a larger proportion of NDS(random) was necessary to obscure the order of the apparently more ordered data in groups II and III compared with group I. Our method was to ascertain the prediction error when NDS(random) was substituted in a proportion of 0.4. For group I, the result was 2.18 ± 0.64; for group II, the result was 2.00 ± 0.64, and for group III, the result was 1.92 ± 0.67. The difference between groups I and III was significant (p = 0.05, t test). The same analysis of ApEn showed significant differences between any two of the groups (ANOVA on ranks). Because the addition of the same proportion of random noise obscured the order of RR interval time series with normal HRV to a lesser extent, this analysis confirms that there was a significantly larger degree of order in the original data.

Order as a function of perturbation of heart rate variability data with random noise. Box plots of (A) prediction error and (B) approximate entropy of data files as a function of added NDS(random). The top row of plots represents group I data files, the middle row group II data files, and the bottom row group III files. In the box plot symbol, the horizontal line is the median; the box encloses 50 of the data points, and the hatches enclose 80%.

Substituting observed data with ordered numbers.Figures 6 and 7 show the results of substituting RR interval files with NDS(1/f) and NDS(logistic). Data across the range of HRV responded very similarly to the incremental substitution of either. In both cases, intermediate data sets had increased prediction errors, as expected from the results shown in Figure 3,C and D. We note, however, that the relative increase in prediction error is much smaller for the intermediate data sets combining NDS(1/f) with RR interval time series (Fig. 6) than for those combining NDS(logistic) with RR interval time series (Fig. 7). This is consistent with the idea that RR interval time series are more similar to NDS(1/f) than to NDS(logistic). This is explored further in the next section.

Order as a function of perturbation of heart rate variability data with NDS(1/f). Box plots of prediction error and approximate entropy of data files as a function of substituted NDS(1/f). Note that intermediate data sets have only a small increase in prediction error, suggesting that the order in the RR interval time series is similar to that of NDS(1/f).

Order as a function of perturbation of heart rate variability data with NDS(logistic). Box plots of prediction error and approximate entropy of data files as a function of added NDS(logistic). The intermediate data sets have relatively higher prediction error, suggesting that one kind of order is replaced with another, and that the order of RR interval time series is not similar to NDS(logistic).

What is the extent and nature of the order in neonatal RR interval time series? We examined whether NDS(1/f), a data set constructed to mimic the frequency content of RR interval time series, was a better model of the observed data than was the chaotic NDS(logistic). This requires estimates of the extent of order in RR interval time series, for which we used a scheme based on the data in Figure 3,A and B. We fit the data to Boltzmann functions of the form: Equation where w is the proportion of randomness (that is, the proportion of NDS(random) substituted), w0.5 is the proportion of NDS(random) which elevates the prediction error to half its maximum value, and k is a slope factor. This gives us a way to relate the measured prediction error to the relative extents of randomness and order in an RR interval time series. Low prediction errors lead to low values of w, which correspond to larger extents of order. We then calculated the value of the proportion of added NDS(random) that yielded the observed mean prediction errors for groups of RR interval clinical data, and used the result to estimate the extent of order present in the original RR interval time series. For example, using the model for NDS(1/f) we estimated that the approximate proportion of order in group I was 0.54, in group II 0.65, and in group III 0.72. This is equivalent to saying that RR interval time series can be modeled as the weighted sum of either NDS(1/f) (or NDS(logistic) and NDS(random), and that time series with normal HRV differ from those with low HRV by an increased weighting of the ordered component.

We first examined the suitability of NDS(1/f) as a model for neonatal RR interval time series. If indeed RR interval time series can be modeled as NDS(1/f) with a random component, then we should be able to predict how much additional random component must be substituted to elevate the prediction error to its maximum in any group of RR interval time series of known HRV. We begin by representing RR interval time series asEquation

We want to test the idea that p, the proportion of ordered component, is 0.54, 0.65, and 0.72 for the three groups of data. We can represent the data sets obtained by substituting RR interval time series with NDS(random) by: Equation

To allow for the random component already present in RR interval time series, we substitute [p·ordered + (1 - p)·random] for the RR interval time series term: Equation

Collecting like terms yields: Equation

So the total random component in a mixed data set is [w + (1 -w)·(1 - p)], or 1 - p + wp. This is comprised in part of 1 - p, the extent of randomness inherent in the data, and in part of w, the proportion of NDS(random) that is substituted. Inspection of Figure 3B shows that prediction error rises to its maximum of 2.28 when the random component is 0.7 of the total. Thus w, the proportion of additional random noise necressary to achieve a total proportion of 0.7, comes from the solution of 0.7 = 1 - p + wp and is 1 - (0.3/p). Substituting our estimates of p-0.54, 0.65, and 0.72-results in w values of 0.44, 0.54, and 0.58 for the three groups. That is to say, we would predict that addition of random noise in proportion of 0.44 will give the maximum prediction error of 2.28 when we add NDS(random) to RR interval time series of low HRV (group I).

The prediction can be checked using the data in Figure 5. The mean prediction errors when NDS(random) is substituted in group I data with proportions of 0.4 and 0.5 are 2.18 and 2.46. Hence, the prediction is roughly correct. For group II, where we predict the additional proportion of random noise necessary will be 0.54, the mean prediction error is between 2.27 and 2.39. Our prediction is again quite close. For group III, where we predict the additional proportion of random noise necessary will be 0.58, the mean prediction error is between 2.14 and 2.34, which is again very close to the expected value of 2.28. From this analysis, we conclude that the use of NDS(1/f) as a model for ordered RR interval time series is a reasonable approximation.

These results can be compared with a similar exercise testing the hypothesis that NDS(logistic) is a good model for neonatal RR interval time series. Here, the approximate proportions of order are 0.75, 0.80, and 0.83. The prediction error in this model rises to its maximum when the random component is 0.4 of the total. The predicted proportions of NDS(random) necessary to maximize prediction error [1 - (0.6/p)] are 0.20, 0.25, and 0.27. The resulting prediction errors are about 2.0, 1.5, and 1.5. These are obviously much less than the expected value of 2.28.

This analysis suggests two conclusions. First, the RR interval time series are more similar to NDS(1/f) than NDS(logistic). This is not surprising, as NDS(1/f) was constructed to contain the same frequencies as RR interval time series, and there is no physical basis for suggesting that RR interval time series should mimic the logistic map. Second, because NDS(1/f) is a reasonable model over the entire range of HRV tested, we find no evidence for a large change in the kind of order as HRV falls. This idea is tested further in the next section.

Is the order in RR interval time series showing low HRV different from that in normal HRV? Substituting one ordered data set with another leads to intermediate data sets with less order than either unmixed data set. To investigate further whether the order in low HRV RR interval time series is different from that in RR interval time series showing normal HRV, we measured the order in mixed time series with components of low and normal HRV. If the order when HRV is low is of a different type than that when HRV is normal, then mixed sets should have a relatively large increase in prediction error.Figure 8 shows the results of mixing 100 randomly selected RR interval time series showing low HRV (group I) with 100 randomly selected RR interval time series showing normal HRV (group III). There is no large increase in the prediction error of intermediate data sets. This supports the idea that the order present in RR interval time series is not greatly changed regardless of the HRV.

The order in RR interval time series with low and normal heart rate variability is similar. Box plots of prediction error as RR interval time series showing low heart rate variability are incrementally substituted for RR interval time series showing normal heart rate variability. There is no large increase in prediction error for intermediate data sets.

DISCUSSION

We used numerical methods to evaluate the mathematical differences between neonatal RR interval time series across a wide spectrum of clinical illness severity and HRV. Our most important findings are 1) there is order present in neonatal RR interval time series, 2) the nature of the order is similar to periodic processes with a wide range of frequencies,3) the extent of order is higher when HRV is normal, and 4) there is no large change in the nature of the order across a wide range of HRV.

Why does heart rate variability change during illness? We consider three possibilities, each of which makes predictions about the mathematical characteristics of RR interval time series showing normal and low HRV.

The first mechanism is a reduction of parasympathetic tone. Here, sympathetic control of the heart rate would be unopposed, and less variability would be present. Cohen and co-workers(14) performed frequency domain analysis of RR interval time series in dogs and showed that parasympathetic nervous system activity accounted for all the high frequency elements. This kind of analysis of adult, pediatric, and neonatal RR interval time series has been used frequently since, and has shown that reduced high frequency content is an important predictor of poor clinical outcome. Although this hypothesis may be true, it cannot explain the phenomenon entirely. Multiple discrepancies between clinical findings and this hypothesis have been presented(33). For example, unopposed sympathetic control of heart rate would lead to a less variable rate, but that rate should be very high. A fall in neonatal HRV, though, is not matched with a proportionate rise in heart rate(4).

A second mechanism centers on the general notion that normal physiology is more complex than abnormal, hence heart rhythm is more irregular during health. Goldberger has pioneered the use of nonlinear dynamical analysis of RR interval time series, and has interpreted the findings in light of system complexity and deterministic chaos(31, 34–37).

Based on our observation of the importance of scaling of RR interval time series, we here suggest a third possible and nonexclusive mechanism: reduced responsiveness of the sinus node to catecholamines and acetylcholine during illness. This point of view holds that the normal physiologic mechanisms of HRV persist, but the dynamic range of the output is reduced.

At least three kinds of ion channels contribute to the rate at which sinus node cells spontaneously reach threshold and initiate heartbeats. Opening of hyperpolarization-activated nonselective monovalent cation channels(If)(38) or L-type calcium channels (ICa, L) depolarizes cells and increases heart rate, whereas opening of acetylcholine-dependent potassium channels(IK(ACh)) hyperpolarizes cells and reduces heart rate. Signal transduction processes regulate the activity of each. Sympathetic stimulation activates Gs, which speeds heart rate by directly opening If channels(39) and by activating adenylylcyclase-the resulting increase in cAMP shifts the voltage-dependence of If to more positive potentials(40), and leads to phosphorylation (and increased probability of opening) of L-type Ca2+ channels by cAMP-dependent protein kinase(41). The increase in these two inward currents leads to cell depolarization and thus to action potentials. Parasympathetic stimulation reduces both these depolarizing currents, and also leads to opening of the hyperpolarizing IK(ACh) by the actions of subunits of Gi(42). Other ionic channels and mechanisms, also under the control of signal transduction processes, no doubt contribute. The precisely adaptive control of heart rate required in a changing environment arises from this complex system with many regulated steps, which need produce only small changes in membrane currents to effect the necessary changes in heart rate.

Many sinus node cellular processes must operate and cooperate optimally to produce normal heart rate variability. We suggest that human illness that perturb cellular function result in reduced heart rate variability by constricting the dynamic range of the sinus node cellular responses to sympathetic and parasympathetic stimuli. Possible mechanisms include cell hypoxia, reduced intracellular pH or [ATP], and reduced protein kinase activity. The expected result of a mathematical analysis of heart rate during such an illness would be a reduction in the scaling of interbeat increments with no fundamental change in order. This kind of mechanism seems more likely to be at play in an acute infectious illness such as neonatal sepsis, where circulating bacterial toxins disrupt cellular function. Other mechanisms are more likely in chronic illnesses where new patterns of autonomic nervous system firing may arise as compensation for chronic organ failure. The expected result of a mathematical analysis in this situation would be a change in the order of interbeat increments. Thus, the study of Peng et al.(22) of adults with chronic heart failure found much more prominent effects on order of heart rate time series.

In summary, we find that there are at least two kinds of mathematical differences in neonatal RR interval time series with a range of HRV. First, time series showing low HRV have reduced scaling, manifest as lower amplitudes of differences between one RR interval and the next(4, 23). Second, time series with normal HRV have more order manifest by decreased prediction error and approximate entropy. These results are unlikely to share one mechanism. Although we view the difference in scaling in terms of intracellular signal transduction, the difference in order defies simple explanation, and may reflect deterministic dynamics of the heartbeat.

Abbreviations

- HRV:

-

heart rate variability

- CV:

-

coefficient of variation

- ApEn:

-

approximate entropy

- NDS:

-

numerical data set

References

Burnard ED 1959 Changes in heart size in the dyspnoeic newborn infant. BMJ 1: 1495–1500.

Rudolph AJ, Vallbona C, Desmond MM 1965 Cardiodynamic studies in the newborn. III. Heart rate patterns in infants with idiopathic respiratory distress syndrome. Pediatrics 36: 551–559.

Cabal LA, Siassi B, Zanini B, Hodgman JE, Hon EE 1980 Factors affecting heart rate variability in preterm infants. Pediatrics 65: 50–56.

Griffin MP, Scollan DF, Moorman JR 1994 The dynamic range of neonatal heart rate variability. J Cardiovasc Electrophysiol 5: 112–124.

Rother M, Zwiener U, Eiselt M, Witte H, Zwacka G, Frenzel J 1987 Differentiation of healthy newborns and newborns-at-risk by spectal analysis of heart rate fluctuations and respiratory movements. Early Hum Dev 15: 349–363.

Schechtman VL, Raetz SL, Harper RK, Garfinkel A, Wilson AJ, Southall DP, Harper RM 1992 Dynamic analysis of cardiac R-R intervals in normal infants and in infants who subsequently succumbed to the sudden infant death syndrome. Pediatr Res 31: 606–612.

Task Force of the European Society of Cardiology and the North American Society of Pacing and Electrophysiology 1996 Heart rate variability: standards of measurement, physiological interpretation, and clinical use. Circulation 93: 1043–1065.

Porges SW, Bohrer RE, Cheung MN, Drasgow F, McCabe PM, Keren G 1980 New time-series statistic for detecting rhythmic co-occurrence in the frequency domain: the weighted coherence and its application to psychophysiological research. Psychol Bull 88: 580–587.

Kleiger RE, Miller JP, Bigger JT, Moss AJ Jr, Multicenter Post-Infarction Research Group 1987 Decreased heart rate variability and its association with increased mortality after acute myocardial infarction. Am J Cardiol 59: 256–262.

McAreavey D, Neilson JMM, Ewing DJ, Russell DC 1989 Cardiac parasympathetic activity during the early hours of acute myocardial infarction. Br Heart J 62: 165–170.

Schechtman VL, Kluge KA, Harper RM 1988 Time domain system for assessing variations in heart rate. Med Biol Eng Comput 26: 367–373.

Schechtman VL, Harper RM, Kluge KA 1989 Development of heart rate variation over the first six months of life in normal infants. Pediatr Res 26: 343–346.

Kleiger RE, Stein PK, Bosner MS, Rottman JN 1992 Time domain measurements of heart rate variability. Cardiol Clin 10: 487–498.

Akselrod S, Gordon D, Ubel FA, Shannon DC, Barger AC, Cohen RJ 1981 Power spectrum analysis of heart rate fluctuation: a quantitative probe of beat-to-beat cardiovascular control. Science 213: 220–222.

Malliani A, Pagani M, Lombardi F, Cerutti S 1991 Cardiovascular neural regulation explored in the frequency domain. Circulation 84: 482–492.

Baldzer K, Dykes FD, Jones SA, Brogan M, Carrigan TA, Giddens DP 1989 Heart rate variability analysis in full-term infants: spectral indices for study of neonatal cardiorespiratory control. Pediatr Res 26: 188–195.

Ori Z, Monir G, Weiss J, Sayhouni X, Singer DH 1992 Heart rate variability: frequency domain analysis. Cardiol Clin 10: 499–533.

Kanters JK, Holstein-Rathlou N-H, Agner E 1994 Lack of evidence for lowdimensional chaos in heart rate variability. J Cardiovasc Electrophysiol 5: 591–601.

Guzzetti S, Signorini MG, Cogliati C, Mezzetti S, Porta A, Cerutti S, Malliani A 1996 Non-linear dynamics and chaotic indices in heart rate variability of normal subjects and heart transplanted patients. Cardiovasc Res 31: 441–446.

Mansier P, Clairambault J, Charlotte N, Medigue C, Vermeiren C, LePape G, Carre F, Gounaropoulou A, Swynghedauw B 1996 Linear and non-linear analyses of heart rate variability: a minireview. Cardiovasc Res 31: 371–379.

Mrowka R, Patzak A, Schubert E, Persson PB 1996 Linear and non-linear properties of heart rate in postnatal maturation. Cardiovasc Res 31: 447–454.

Peng C-K, Mietus J, Hausdorff JM, Havlin S, Stanley HE, Goldberger AL 1993 Long-range anticorrelations and non-Gaussian behavior of the heartbeat. Phys Rev Lett 70: 1343–1346.

Aghili AA, Rizwan-uddin, Griffin MP, Moorman JR 1995 Scaling and ordering of neonatal heart rate variability. Phys Rev Lett 74: 1254–1257.

Sauer T 1992 A noise reduction method for signals from nonlinear systems. Physica D 58: 193–201.

Sauer, T. 1994 Time series prediction by using delay coordinate embedding. In: Weigend AS, Gershenfield NA (eds) Time Series Prediction: Forecasting the Future and Understanding the Past. Addison-Wesley, Reading, MA, pp 175–194.

Pincus SM 1991 Approximate entropy as a measure of system complexity. Proc Natl Acad Sci USA 88: 2297–2301.

Pincus SM, Goldberger AL 1994 Physiological time-series analysis: what does regularity quantify?. Am J Physiol 266:H1643–H1656.

Pincus SM 1995 Approximate entropy (ApEn) as a complexity measure. Chaos 5: 110–117.

Press, WH, Teukolsky SA, Vetterling WT, Flannery BP 1994 Numerical Recipes in FORTRAN. The Art of Scientific Computing. Cambridge University Press, New York

Kobayashi M, Musha T 1982 1/f fluctuation of heartbeat period. IEEE Trans Biomed Eng 29: 456–457.

Goldberger AL, Bhargava V, West BJ, Mandell AJ 1985 On a mechanism of cardiac electrical stability: the fractal hypothesis. Biophys J 48: 525–528.

Glantz SA 1981 Primer of Biostatistics. McGraw-Hill, New York

Malik M, Camm AJ 1993 Heart rate variability: from facts to fancies. J Am Coll Cardiol 22: 566–568.

Goldberger AL, West BJ 1987 Chaos in physiology: health or disease? In: Degn H, Holden AV, Olsen LF (eds) Chaos in Biological Systems. Plenum Press, New York, pp 1–4.

Goldberger AL, West BJ 1987 Applications of nonlinear dynamics to clinical cardiology. Ann NY Acad Sci 504: 195–213.

Goldberger, A. 1990 Fractal electrodynamics of the heartbeat. In: Jalife J (ed) Mathematical Approaches to Cardiac Arrhythmias. New York Academy of Sciences. New York, pp 402–409.

Goldberger AL, Rigney DR, West BJ 1990 Chaos and fractals in human physiology. Sci Am 262: 42–46.

DiFrancesco D 1995 The onset and autonomic regulation of cardiac pacemaker activity: relevance of the f current. Cardiovasc Res 29: 449–456.

Yatani A, Okabe K, Codina J, Birnbaumer L, Brown AM 1990 Heart rate regulation by G proteins acting on the cardiac pacemaker channel. Science 249: 1163–1166.

DiFrancesco D, Tortora P 1991 Direct activation of cardiac pacemaker channels by intracellular cyclic AMP. Nature 351: 145–147.

Yue DT, Herzig S, Marban E 1990 -Adrenergic stimulation of calcium channels occurs by potentiation of high-activity gating modes. Proc Natl Acad Sci USA 87: 753–757.

Wickman K, Clapham DE 1995 Ion channel regulation by G proteins. Physiol Rev 75: 865–885.

Author information

Authors and Affiliations

Rights and permissions

About this article

Cite this article

Nelson, J., Rizwan-Uddin, Griffin, M. et al. Probing the Order within Neonatal Heart Rate Variability. Pediatr Res 43, 823–831 (1998). https://doi.org/10.1203/00006450-199806000-00017

Received:

Accepted:

Issue Date:

DOI: https://doi.org/10.1203/00006450-199806000-00017

This article is cited by

-

The principles of whole-hospital predictive analytics monitoring for clinical medicine originated in the neonatal ICU

npj Digital Medicine (2022)

-

Diagnostics for neonatal sepsis: current approaches and future directions

Pediatric Research (2017)

-

Complex signals bioinformatics: evaluation of heart rate characteristics monitoring as a novel risk marker for neonatal sepsis

Journal of Clinical Monitoring and Computing (2014)