Abstract

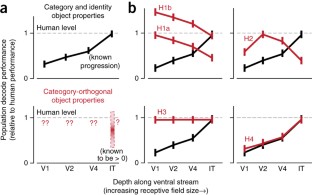

Extensive research has revealed that the ventral visual stream hierarchically builds a robust representation for supporting visual object categorization tasks. We systematically explored the ability of multiple ventral visual areas to support a variety of 'category-orthogonal' object properties such as position, size and pose. For complex naturalistic stimuli, we found that the inferior temporal (IT) population encodes all measured category-orthogonal object properties, including those properties often considered to be low-level features (for example, position), more explicitly than earlier ventral stream areas. We also found that the IT population better predicts human performance patterns across properties. A hierarchical neural network model based on simple computational principles generates these same cross-area patterns of information. Taken together, our empirical results support the hypothesis that all behaviorally relevant object properties are extracted in concert up the ventral visual hierarchy, and our computational model explains how that hierarchy might be built.

References

DiCarlo, J.J., Zoccolan, D. & Rust, N.C. How does the brain solve visual object recognition? Neuron 73, 415–434 (2012).

DiCarlo, J.J. & Cox, D.D. Untangling invariant object recognition. Trends Cogn. Sci. 11, 333–341 (2007).

Felleman, D.J. & Van Essen, D.C. Distributed hierarchical processing in the primate cerebral cortex. Cereb. Cortex 1, 1–47 (1991).

Tanaka, K. Inferotemporal cortex and object vision. Annu. Rev. Neurosci. 19, 109–139 (1996).

Logothetis, N.K. & Sheinberg, D.L. Visual object recognition. Annu. Rev. Neurosci. 19, 577–621 (1996).

Vogels, R. & Orban, G.A. Activity of inferior temporal neurons during orientation discrimination with successively presented gratings. J. Neurophysiol. 71, 1428–1451 (1994).

Carandini, M. et al. Do we know what the early visual system does? J. Neurosci. 25, 10577–10597 (2005).

Majaj, N.J., Hong, H., Solomon, E.A. & DiCarlo, J.J. Simple learned weighted sums of inferior temporal neuronal firing rates accurately predict human core object recognition performance. J. Neurosci. 35, 13402–13418 (2015).

Hung, C.P., Kreiman, G., Poggio, T. & DiCarlo, J.J. Fast readout of object identity from macaque inferior temporal cortex. Science 310, 863–866 (2005).

Rust, N.C. & DiCarlo, J.J. Selectivity and tolerance (“invariance”) both increase as visual information propagates from cortical area V4 to IT. J. Neurosci. 30, 12978–12995 (2010).

Movshon, J.A., Thompson, I.D. & Tolhurst, D.J. Spatial summation in the receptive fields of simple cells in the cat's striate cortex. J. Physiol. (Lond.) 283, 53–77 (1978).

Gochin, P.M. The representation of shape in the temporal lobe. Behav. Brain Res. 76, 99–116 (1996).

Ito, M., Tamura, H., Fujita, I. & Tanaka, K. Size and position invariance of neuronal responses in monkey inferotemporal cortex. J. Neurophysiol. 73, 218–226 (1995).

Goodale, M.A. & Milner, A.D. Separate visual pathways for perception and action. Trends Neurosci. 15, 20–25 (1992).

Ungerleider, L.G. & Haxby, J.V. 'What' and 'where' in the human brain. Curr. Opin. Neurobiol. 4, 157–165 (1994).

Bosking, W.H., Crowley, J.C. & Fitzpatrick, D. Spatial coding of position and orientation in primary visual cortex. Nat. Neurosci. 5, 874–882 (2002).

Zhou, H., Friedman, H.S. & von der Heydt, R. Coding of border ownership in monkey visual cortex. J. Neurosci. 20, 6594–6611 (2000).

Li, N., Cox, D.D., Zoccolan, D. & DiCarlo, J.J. What response properties do individual neurons need to underlie position and clutter “invariant” object recognition? J. Neurophysiol. 102, 360–376 (2009).

DiCarlo, J.J. & Maunsell, J.H. Anterior inferotemporal neurons of monkeys engaged in object recognition can be highly sensitive to object retinal position. J. Neurophysiol. 89, 3264–3278 (2003).

Logothetis, N.K., Pauls, J. & Poggio, T. Shape representation in the inferior temporal cortex of monkeys. Curr. Biol. 5, 552–563 (1995).

MacEvoy, S.P. & Yang, Z. Joint neuronal tuning for object form and position in the human lateral occipital complex. Neuroimage 63, 1901–1908 (2012).

Nishio, A., Shimokawa, T., Goda, N. & Komatsu, H. Perceptual gloss parameters are encoded by population responses in the monkey inferior temporal cortex. J. Neurosci. 34, 11143–11151 (2014).

Sayres, R. & Grill-Spector, K. Relating retinotopic and object-selective responses in human lateral occipital cortex. J. Neurophysiol. 100, 249–267 (2008).

Sereno, A.B., Sereno, M.E. & Lehky, S.R. Recovering stimulus locations using populations of eye-position modulated neurons in dorsal and ventral visual streams of non-human primates. Front. Integr. Neurosci. 8, 28 (2014).

Edelman, S. & Intrator, N. Towards structural systematicity in distributed, statically bound visual representations. Cogn. Sci. 27, 73–109 (2003).

Snippe, H.P. & Koenderink, J.J. Discrimination thresholds for channel-coded systems. Biol. Cybern. 66, 543–551 (1992).

Hinton, G., McClelland, J. & Rumelhart, D. Distributed representations. in Parallel Distributed Processing, Vol 1 (eds. Rumelhart, D. & McClelland, J.) 77–109 (MIT Press, 1986).

Eurich, C.W. & Schwegler, H. Coarse coding: calculation of the resolution achieved by a population of large receptive field neurons. Biol. Cybern. 76, 357–363 (1997).

Treisman, A. The binding problem. Curr. Opin. Neurobiol. 6, 171–178 (1996).

Yamins, D.L. et al. Performance-optimized hierarchical models predict neural responses in higher visual cortex. Proc. Natl. Acad. Sci. USA 111, 8619–8624 (2014).

Khaligh-Razavi, S.M. & Kriegeskorte, N. Deep supervised, but not unsupervised, models may explain IT cortical representation. PLoS Comput. Biol. 10, e1003915 (2014).

Pinto, N., Cox, D.D. & DiCarlo, J.J. Why is real-world visual object recognition hard? PLoS Comput. Biol. 4, e27 (2008).

Rajalingham, R., Schmidt, K. & DiCarlo, J.J. Comparison of object recognition behavior in human and monkey. J. Neurosci. 35, 12127–12136 (2015).

Tsao, D.Y. & Livingstone, M.S. Mechanisms of face perception. Annu. Rev. Neurosci. 31, 411–437 (2008).

LeCun, Y. & Bengio, Y. Convolutional networks for images, speech, and time series. in The Handbook of Brain Theory and Neural Networks (ed. Arbib, M.A.) 255–258 (MIT Press, 1995).

Deng, J. et al. ImageNet: a large-scale hierarchical image database. Proc. IEEE Conf. Comput. Vis. Pattern Recognit. 248–255 (2009).

Chen, Y., Geisler, W.S. & Seidemann, E. Optimal decoding of correlated neural population responses in the primate visual cortex. Nat. Neurosci. 9, 1412–1420 (2006).

Mishkin, M., Ungerleider, L.G. & Macko, K.A. Object vision and spatial vision: two cortical pathways. Trends Neurosci. 6, 414–417 (1983).

Zoccolan, D., Kouh, M., Poggio, T. & DiCarlo, J.J. Trade-off between object selectivity and tolerance in monkey inferotemporal cortex. J. Neurosci. 27, 12292–12307 (2007).

Serre, T. et al. A quantitative theory of immediate visual recognition. Prog. Brain Res. 165, 33–56 (2007).

Nienborg, H. & Cumming, B.G. Decision-related activity in sensory neurons may depend on the columnar architecture of cerebral cortex. J. Neurosci. 34, 3579–3585 (2014).

Chikkerur, S., Serre, T., Tan, C. & Poggio, T. What and where: a Bayesian inference theory of attention. Vision Res. 50, 2233–2247 (2010).

Milner, P.M. A model for visual shape recognition. Psychol. Rev. 81, 521–535 (1974).

Yildirim, I., Kulkarni, T.D., Freiwald, W.A. & Tenenbaum, J.B. Efficient analysis-by-synthesis in vision: a computational framework, behavioral tests, and modeling neuronal representations. Proc. Annu. Conf. Cogn. Sci. Soc. 471 (2015).

Kersten, D., Mamassian, P. & Yuille, A. Object perception as Bayesian inference. Annu. Rev. Psychol. 55, 271–304 (2004).

Tanaka, K. Columns for complex visual object features in the inferotemporal cortex: clustering of cells with similar but slightly different stimulus selectivities. Cereb. Cortex 13, 90–99 (2003).

Brown, L.E., Halpert, B.A. & Goodale, M.A. Peripheral vision for perception and action. Exp. Brain Res. 165, 97–106 (2005).

Sereno, A.B. & Lehky, S.R. Population coding of visual space: comparison of spatial representations in dorsal and ventral pathways. Front. Comput. Neurosci. 4, 159 (2011).

Rishel, C.A., Huang, G. & Freedman, D.J. Independent category and spatial encoding in parietal cortex. Neuron 77, 969–979 (2013).

Swaminathan, S.K. & Freedman, D.J. Preferential encoding of visual categories in parietal cortex compared with prefrontal cortex. Nat. Neurosci. 15, 315–320 (2012).

Rosch, E., Mervis, C.B., Gray, W.D. & Johnson, D.M. Basic objects in natural categories. Cognit. Psychol. 8, 382–439 (1976).

DiCarlo, J.J. & Maunsell, J.H.R. Inferotemporal representations underlying object recognition in the free viewing monkey. Soc. Neurosci. Abstr. 498.2 (2000).

Frey, B.J. & Dueck, D. Clustering by passing messages between data points. Science 315, 972–976 (2007).

Quiroga, R.Q., Nadasdy, Z. & Ben-Shaul, Y. Unsupervised spike detection and sorting with wavelets and superparamagnetic clustering. Neural Comput. 16, 1661–1687 (2004).

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Rust, N.C., Mante, V., Simoncelli, E.P. & Movshon, J.A. How MT cells analyze the motion of visual patterns. Nat. Neurosci. 9, 1421–1431 (2006).

Jones, E. et al. SciPy: open source scientific tools for Python (2001–) http://www.scipy.org/ (15 July 2015).

Efron, B. & Tibshirani, R.J. An Introduction to the Bootstrap (CRC Press, 1994).

Kanwisher, N., McDermott, J. & Chun, M.M. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 17, 4302–4311 (1997).

Krizhevsky, A., Sutskever, I. & Hinton, G. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25, 1106–1114 (2012).

Bergstra, J., Yamins, D. & Cox, D. Making a science of model search: hyperparameter optimization in hundreds of dimensions for vision architectures. Proc. Int. Conf. Mach. Learn. 115–123 (2013).

Acknowledgements

We are grateful to K. Schmidt and C. Stawarz for key technical support, and to D. Ardila, R. Rajalingham, J. Tenenbaum, S. Gershman, C. Jennings and J. Fan for useful suggestions. The infrastructure needed for this work was supported by DARPA (Neovision2) and the NSF (IIS-0964269). The bulk of the work presented in this manuscript was supported by the US National Institutes of Health (NEI-R01 EY014970), with partial support from the Simons Center for the Global Brain and the Office of Naval Research (MURI). H.H. was supported by a fellowship from the Samsung Scholarship. We thank NVIDIA for a grant of GPU hardware and Amazon for an education grant supporting computational and psychophysical work. Additional computational infrastructure support was provided by the McGovern Institute for Brain Research (OpenMind).

Author information

Contributions

H.H., N.J.M. and J.J.D. designed the neurophysiological experiments. H.H. and N.J.M. performed the neurophysiology experiments. D.L.K.Y., H.H. and J.J.D. designed the human psychophysical experiments. D.L.K.Y. performed the human psychophysical experiments. D.L.K.Y. and H.H. performed data analysis. D.L.K.Y. and H.H. performed computational modeling. D.L.K.Y., J.J.D., H.H. and N.J.M. wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Integrated supplementary information

Supplementary Figure 1 Image sets.

(a) Typical examples drawn from the complex high-variability testing image set on which we collected neural data and evaluated models. The image set contained 5760 images of 64 three-dimensional objects in 8 common categories: animals, boats, cars, chairs, faces, fruits, planes and tables. The overall image set comprised three subsets, with object view parameters (position, size and pose) chosen randomly from within low-, medium- and high-variation ranges, respectively. Object images were then superimposed on complex real-world background photographs, which were chosen randomly to ensure there were no correlations between background content and object category identity. This dataset supported a wide range of categorical and non-categorical tasks (Fig. 2), on which we evaluated population performance of V4 and IT neural populations (Figs. 3–5 and 7c) as well as computational models (Figs. 6, 7c, and Supplementary Figs. 7d–10). (b) Low-variation simpler examples drawn from the set of simple grating stimuli. This stimulus set supported three simple tasks: horizontal and vertical position estimation of the center of the grating object, as well as grating orientation (see Fig. 7a,b).

Supplementary Figure 2 Characterization of IT and V4 responses.

Single sites with high selectivity (panels a–c). (a) Category selectivity heatmaps of the single sites in our IT sample that are best at decoding each of the eight categories present in our stimulus set. Each colored bar represents the response of the indicated site relative to that site’s baseline (blue = low, red = high). The colored bars represent responses averaged over images of each of the eight object exemplars in the indicated category (vertical axis), further broken down into three increasing levels of parameter variation (horizontal axis, see Methods). (b) Position selectivity response heatmaps for the best single sites for object position estimation. Each colored square in each heatmap represents the average of the indicated site’s activity over all images in which the object’s center is located in that square’s position. (c) Size selectivity response profiles for the best single sites for object size estimation. The x-axis represents the object diameter in degrees as seen by the animal. The y-axis represents the response relative to baseline of the indicated site, averaged over all images whose size falls in the indicated diameter bin. Error bars are standard errors of the mean over images within each bin. Simple image-level metrics (panels d–f). (d) Histogram of per-site reliabilities for V4 sites (left panel) and IT sites (right panel), as measured by trial-vs-trial correlation of each site’s responses across images in the high-variation image set (Supplementary Fig. 1a). (e) Histogram of per-site selectivity for V4 sites (left panel) and IT sites (right panel), defined for each site as the d-prime of separating that site’s responses for its best (most highly response-driving) stimulus versus its worst (least response-driving) stimulus. (f) Histogram of population separability for the V4 population (left panel) and IT population (right panel) for 5000 image pairs, measured as the d-prime of population readout for separating the two images in the pair. For both V4 and IT, 126 units were used to build population decodes: for V4, all 126 measured units were included, whereas for IT, for each image pair we randomly sampled a different subset of 126 units on which to build the decoder, to equalize with the V4 population.
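The per-site metrics in panels d and e can be illustrated with a minimal sketch. The caption does not give the exact formulas, so the code below makes assumptions: d-prime is taken as the mean difference divided by the root of the average variance, and reliability as the Pearson correlation between the means of even- and odd-numbered trials. The function names and the synthetic site are hypothetical and are not taken from the paper.

```python
import random
import statistics


def d_prime(a, b):
    """d' between two response samples (assumed form: mean difference
    divided by the square root of the average of the two variances)."""
    pooled_var = (statistics.variance(a) + statistics.variance(b)) / 2.0
    return (statistics.mean(a) - statistics.mean(b)) / pooled_var ** 0.5


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.mean(x), statistics.mean(y)
    cov = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sx = sum((xi - mx) ** 2 for xi in x) ** 0.5
    sy = sum((yi - my) ** 2 for yi in y) ** 0.5
    return cov / (sx * sy)


def split_half_reliability(trials):
    """trials[i] holds the repeated responses of one site to image i.
    Correlates even-trial means with odd-trial means across images
    (an assumed stand-in for the trial-vs-trial correlation)."""
    half_a = [statistics.mean(t[0::2]) for t in trials]
    half_b = [statistics.mean(t[1::2]) for t in trials]
    return pearson_r(half_a, half_b)


# Synthetic site: 200 images, 10 repeats each (illustrative numbers only).
rng = random.Random(0)
tuning = [rng.gauss(0.0, 1.0) for _ in range(200)]
trials = [[mu + rng.gauss(0.0, 0.5) for _ in range(10)] for mu in tuning]

# Selectivity: d' between the most and least response-driving images.
mean_resp = [statistics.mean(t) for t in trials]
best = max(range(len(trials)), key=lambda i: mean_resp[i])
worst = min(range(len(trials)), key=lambda i: mean_resp[i])
selectivity = d_prime(trials[best], trials[worst])
reliability = split_half_reliability(trials)
```

Choosing the best and worst images from the same trials that are then used to compute d-prime inflates the estimate; a held-out split would avoid this, and the caption does not say which procedure was used.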

Supplementary Figure 3 Performance training curves.

For each task, performance of population decodes as a function of the number of training examples used to train linear classifiers and regressors. Error bars are over samples of units and image splits. Blue lines show the IT neural population, green lines the V4 neural population, gray lines the V1-like model population, and black lines the pixel control.
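A training curve of this kind can be sketched as follows, purely for illustration. The readout here is a ridge-regularized least-squares linear classifier, which is an assumption standing in for the classifiers described in Methods, and the synthetic population, the ridge strength and all function names are hypothetical.

```python
import numpy as np


def fit_linear_classifier(X, y, ridge=10.0):
    """Ridge-regularized least-squares readout onto +/-1 targets
    (an assumed stand-in for the linear classifiers in Methods)."""
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])  # append bias column
    A = Xb.T @ Xb + ridge * np.eye(Xb.shape[1])
    return np.linalg.solve(A, Xb.T @ (2.0 * y - 1.0))


def accuracy(w, X, y):
    """Fraction of images whose readout sign matches the 0/1 label."""
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    return float(np.mean((Xb @ w > 0) == (y == 1)))


def training_curve(X, y, train_sizes, n_splits=20, n_test=200, seed=0):
    """Mean and s.d. of held-out accuracy for each training-set size,
    taken over random train/test splits of the images."""
    rng = np.random.default_rng(seed)
    curve = {}
    for n in train_sizes:
        scores = []
        for _ in range(n_splits):
            order = rng.permutation(len(y))
            test, train = order[:n_test], order[n_test:n_test + n]
            w = fit_linear_classifier(X[train], y[train])
            scores.append(accuracy(w, X[test], y[test]))
        curve[n] = (float(np.mean(scores)), float(np.std(scores)))
    return curve


# Synthetic population of 126 units with a weak distributed category signal.
rng = np.random.default_rng(1)
n_images, n_units = 1000, 126
y = rng.integers(0, 2, n_images)
signal = rng.normal(0.0, 0.15, n_units)
X = rng.normal(0.0, 1.0, (n_images, n_units)) + np.outer(2 * y - 1, signal)

curve = training_curve(X, y, train_sizes=[10, 40, 160, 640])
```

The spread here covers image splits only; the caption's error bars also include variation over samples of units, which would need an additional resampling loop over columns of `X`.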

Supplementary Figure 5 Task performance distributions for single IT sites.

(a) Representative examples of distributions of classifier weights across neural sites for several tasks. The x-axis represents normalized decoder weight, and the y-axis is site bin count. Top row shows distributions for weights determined via linear SVM (as used in Fig. 5); bottom row shows distributions for weights determined on a single-site basis. (b,c) Sparsity and imbalance measures for single-site weights (compare Fig. 5a–c). (d) Scatter plots of single-site weights versus weights on the same units from SVM classifiers/regressors, for selected tasks. Each point is a distinct IT site. (e) Quantification of correlations of plots in panel d, for all tasks. The x-axis measures Pearson’s R correlation between single-site and population decoding weights for each individual task.

Supplementary Figure 6 Comparison of task overlap to random and minimal control models.

(a) Comparison of weight overlap for categorical vs non-categorical tasks, relative to random overlap (top) and minimal overlap (bottom) models. Color represents the t-statistic of separation of each pairwise overlap from the indicated control model (random in the top panel, minimal overlap in the bottom panel), with red representing more overlap than the control model and blue representing less. (b) Average overlap for (i) (non-face) categorical tasks, (ii) faces-vs-non-face categorical tasks, (iii) non-face categorical tasks vs non-categorical tasks and (iv) faces vs non-categorical tasks. Shown are actual neural overlap (blue bars) in comparison to random overlap (gray) and minimal overlap (orange) models. Error bars for neural data overlap are due to variation in unit sampling and classifier training split. Error bars in model overlaps are due to variation of model input data (per-task and per-unit weight constraints) arising from unit sampling and classifier training split, as well as random initial conditions of model weights.

Supplementary Figure 7 Computational modeling approach.

(a) In previous work (Yamins, D., and Hong, H. et al. (2014) Proc Natl Acad Sci USA 111, 8619–24), we developed a model of areas in higher ventral cortex. Our approach was based on optimizing model parameters to maximize performance of the model’s output layer on a challenging object recognition task involving real-world images in a large number of semantically distinct categories. (Note: The cat-vs-dog task shown in the figure is just for illustration purposes; the actual task on which the model was optimized contained no animal images.) This model was then used to predict neural responses in macaque V4 and IT cortex, recorded on the images shown in Supplementary Fig. 1. (b) The top layer of the performance-optimized model generalizes from the photographic training set (red bar), and significantly outperforms control models (gray bars) and the V4 neural population on the 8-way object categorization task (Animals vs Boats vs Cars vs Chairs vs Faces vs Fruits vs Planes vs Tables) in the images shown in Supplementary Fig. 1a. Model performance is comparable to that of the IT neural population (blue bar) and to human performance measured via psychophysical experiments (black bar). (c) The performance-optimized model is then used to predict neural responses in IT cortex (top panel) and V4 cortex (bottom panel). Ability to predict IT neural patterns improves with each subsequent model layer, peaking at the top layer (red bars), whereas ability to predict V4 neurons peaks in the middle layers. For both V4 and IT, the performance-optimized model’s most predictive layer is significantly better than other control models, including ideal observers that perform perfectly on categorization tasks (purple bars) as well as control models that are also in the general class of neural networks (gray bars). All panels in this figure are related to previously published results (Yamins, D., and Hong, H., et al. (2014) Proc Natl Acad Sci USA 111, 8619–24). (d) Computational model training set performance time courses. The x-axis represents the amount of training data during model training, for the computational model used in Fig. 6 and Supplementary Figs. 8, 9 and 10. The y-axis represents error rate, measured in percent incorrect. The gray line is performance on a held-out subset of the images in the overall training image set; the black line is performance on the actual subset of images on which the model was trained. (See Methods section for details of training.)

Supplementary Figure 8 Performance time-courses for computational model on test set tasks.

The y-axis represents performance of linear regressors and classifiers trained on the top hidden layer of the computational model, on each task defined on the testing set (see Supplementary Fig. 1a). The x-axis represents timepoints taken during training for categorization on the ImageNet dataset (as described in Methods). Performance was estimated by building top-level regularized classifiers and regressors (as described in Methods) separately at each timepoint. Note that the x-axis is the same for all panels, representing the same training trajectory; the various y-axis panels are all based on the single feature set produced by the categorization training. The first two panels, with gray backgrounds, indicate categorical tasks (8-way basic categorization and subordinate category identifications); the remaining white-background panels indicate non-categorical tasks.

Supplementary Figure 10 Computational model categorization performance vs non-categorical task performance.

The y-axes in each panel are the same as in Supplementary Fig. 8. The x-axis is performance on the test set categorization task (e.g., the y-axis of the upper-left-most panel in Supplementary Fig. 8). Each dot represents a distinct timepoint as shown on the x-axis in Supplementary Fig. 8. Performance is shown for the top hidden layer of the model, which in every task achieved the highest performance level.

Supplementary Figure 11 Performance of populations on simple grating stimuli, using RBF classifier.

As in Fig. 7a, this figure shows population decoding results for position and orientation tasks defined on a simpler stimulus set consisting of grating patches placed on gray backgrounds. However, these results were evaluated using a non-linear Radial Basis Function (RBF) classifier with Gaussian kernels, in place of the simpler linear classifier used in Fig. 7a. As discussed in the text, the classifier parameters, including regularization constant C and kernel size σ, were determined using standard cross-validation procedures. The y-axis, bar colors, and error bars are as in Fig. 7a.
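One common way to select C and σ by cross-validation is a grid search, sketched below with scikit-learn (which the reference list cites). This is a minimal illustration under stated assumptions, not the authors' procedure: the grids, the number of folds, the toy two-class data and the helper name `fit_rbf_classifier` are all hypothetical. scikit-learn writes the Gaussian kernel as exp(-gamma * ||x - x'||^2), so a kernel size σ is mapped to gamma = 1 / (2σ²).

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC


def fit_rbf_classifier(X, y, C_grid, sigma_grid, n_folds=5):
    """Grid-search C and kernel size sigma for an RBF-kernel SVM.
    sigma is converted to scikit-learn's gamma = 1 / (2 * sigma**2)."""
    param_grid = {
        "C": list(C_grid),
        "gamma": [1.0 / (2.0 * s ** 2) for s in sigma_grid],
    }
    search = GridSearchCV(SVC(kernel="rbf"), param_grid, cv=n_folds)
    search.fit(X, y)
    return search


# Toy data (assumption): an inner cluster surrounded by a ring, which a
# linear classifier cannot separate but a Gaussian-kernel classifier can.
rng = np.random.default_rng(0)
n = 200
radius = np.concatenate([rng.uniform(0.0, 1.0, n), rng.uniform(2.0, 3.0, n)])
angle = rng.uniform(0.0, 2.0 * np.pi, 2 * n)
X = np.column_stack([radius * np.cos(angle), radius * np.sin(angle)])
y = np.concatenate([np.zeros(n, dtype=int), np.ones(n, dtype=int)])

C_grid = [0.1, 1.0, 10.0]
sigma_grid = [0.3, 1.0, 3.0]
search = fit_rbf_classifier(X, y, C_grid, sigma_grid)

best_C = search.best_params_["C"]
best_sigma = (1.0 / (2.0 * search.best_params_["gamma"])) ** 0.5
cv_accuracy = search.best_score_
```

Because `best_score_` comes from the same folds that were used to pick the parameters, it is optimistically biased; an unbiased estimate would need an outer held-out split.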

Supplementary Figure 12 Performance of neural populations as a function of increasing rotational variation.

This figure shows the same metrics as in main text Fig. 7c, for all tasks in the high-variation dataset task battery.

Supplementary Figure 13 Alternative computational model trained with lower-variation training data.

(a) Training performance curve for an alternative computational model, trained using a dataset containing a large number of categories but less overall background and object pose variation than the original model shown in Supplementary Fig. 7 (see Online Methods for more information). Axes and labels are as in Supplementary Fig. 7d. (b) Performance of the alternative model on the high-variation testing set on which neural data were collected, for the categorization task (left panel) and horizontal position estimation task (right panel). Performance for layers 1, 3, 4 and 6 is shown. Axes are as in Supplementary Fig. 8. (c) Correlation between performance on testing-set categorization and horizontal position estimation tasks, for each of the four model layers shown in panel b. The y-axis and error bars are as in Fig. 6e.

Supplementary Figure 14 Alternative computational model performance by layer and task.

For the alternative computational model trained using less object pose variation, this figure shows performance on the testing set (as in Supplementary Fig. 13) as a function of model layer and task. Axes, error bars, and metrics are as in Supplementary Fig. 9.

Supplementary information

Supplementary Text and Figures

Supplementary Figures 1–14 and Supplementary Table 1 (PDF 3329 kb)

About this article

Cite this article

Hong, H., Yamins, D., Majaj, N. et al. Explicit information for category-orthogonal object properties increases along the ventral stream. Nat Neurosci 19, 613–622 (2016). https://doi.org/10.1038/nn.4247

DOI: https://doi.org/10.1038/nn.4247

This article is cited by

- Curiosity: primate neural circuits for novelty and information seeking. Nature Reviews Neuroscience (2024)

- Neural mechanisms underlying the hierarchical construction of perceived aesthetic value. Nature Communications (2023)

- Model metamers reveal divergent invariances between biological and artificial neural networks. Nature Neuroscience (2023)

- Perception of 3D shape integrates intuitive physics and analysis-by-synthesis. Nature Human Behaviour (2023)

- The spatiotemporal neural dynamics of object location representations in the human brain. Nature Human Behaviour (2022)