Beavers have unique patterns of scales on their tails that work much like human fingerprints. An AI system can be trained to recognise individual beavers from these patterns. (blickwinkel/Alamy)

Algorithm identifies beavers’ ‘tailprints’

A pattern-learning AI system can tell Eurasian beavers (Castor fiber) apart by the pattern of scales on their tails. After training on hundreds of photos from 100 beavers that had previously been hunted, the algorithm could identify individuals with almost 96% accuracy. The method could make it faster and easier to track beaver populations, a task that usually involves capturing individual animals and fitting them with ear tags or radio collars.

Reference: Ecology and Evolution paper

Satellite to map methane sources with AI

Data from a soon-to-be-launched satellite will help to create the most comprehensive global map of methane pollution. The project will use Google’s image detection algorithms to identify infrastructure such as pump jacks and storage tanks, where methane leaks tend to occur. Information on where oil and gas facilities are located is usually tightly guarded. It’s uncertain whether the information will translate into reduced emissions of the potent greenhouse gas. “I’m not confident that simply having this information will mean that companies and countries will switch off methane leaks like a light switch,” says Earth-science scholar Rob Jackson.

MIT Technology Review | 5 min read

Sora creates most realistic videos yet

OpenAI, creator of ChatGPT, has unveiled Sora, a system that can generate highly realistic videos from text prompts. “This technology, if combined with AI-powered voice cloning, could open up an entirely new front when it comes to creating deepfakes of people saying and doing things they never did,” says digital forensics researcher Hany Farid. Sora’s mistakes, such as swapping a walking person’s left and right legs, make it possible to detect its output — for now. In the long run “we will need to find other ways to adapt as a society”, says computer scientist Arvind Narayanan. This could, for example, include implementing watermarks for AI-generated videos.

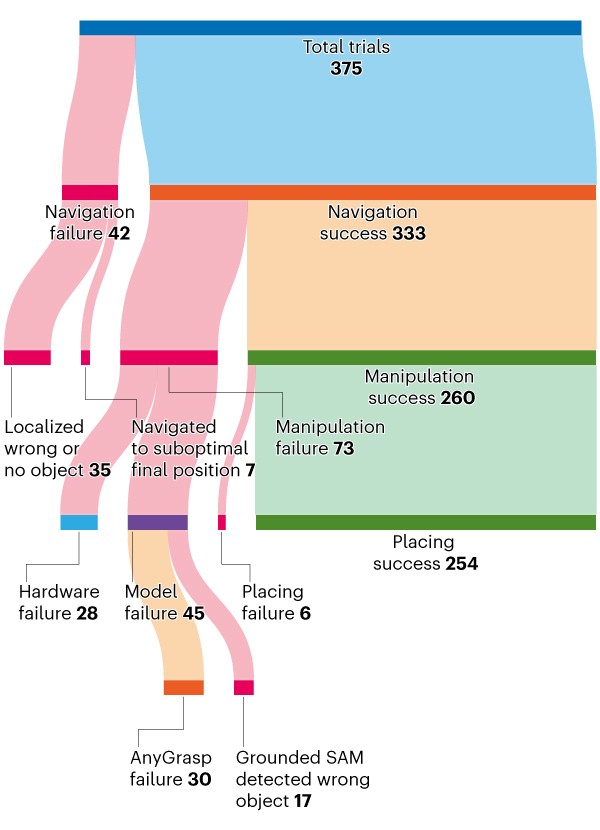

Infographic of the week

Liu, P. et al./arXiv preprint

For robots, tidying a room is a complex task — this graphic shows some of the many things that can go wrong when a system called OK-Robot is instructed to move objects around a room it has never before encountered. OK-Robot has a wheeled base, a tall pole and a retractable arm with a gripper, and is run entirely on off-the-shelf, open-source AI models. When given tasks such as “move the soda can to the box”, it managed to complete them almost 60% of the time, and more than 80% of the time in less cluttered rooms. (MIT Technology Review | 4 min read)

Reference: arXiv preprint (not peer reviewed)

Features & opinion

What the EU’s AI law means for science

The European Union’s new AI Act will put its toughest rules on the riskiest algorithms, but will exempt models developed purely for research. “They really don’t want to cut off innovation, so I’d be astounded if this is going to be a problem,” says technology ethics researcher Joanna Bryson. Some scientists suggest that the act could bolster open-source models, while others worry that it could hinder small companies that drive research. Powerful general-purpose models, such as the one behind ChatGPT, will face separate and stringent checks. Critics say that regulating AI models on the basis of their capability, rather than use, has no scientific basis. “Smarter and more capable does not mean more harm,” says AI researcher Jenia Jitsev.

AI tools combat image manipulation

Scientific publishers have started to use AI tools such as ImageTwin, ImaCheck and Proofig to help them to detect questionable images. The tools make it faster and easier to find rotated, stretched, cropped, spliced or duplicated images. But they are less adept at spotting more complex manipulations or AI-generated fakery. “The existing tools are at best showing the tip of an iceberg that may grow dramatically, and current approaches will soon be largely obsolete,” says Bernd Pulverer, chief editor of EMBO Reports.

How AI could help to reduce animal testing

An AI system built by the start-up Quantiphi tests drugs by analysing their impact on donated human cells. According to the company’s co-founder Asif Hasan, the method can achieve almost the same results as animal tests while reducing the time and cost of pre-clinical drug development by 45%. Another start-up, VeriSIM Life, creates AI simulations to predict how a drug would react in the body. Decreasing the pharmaceutical industry’s reliance on animal experiments could help alleviate ethical concerns as well as risks to humans. “Animals do not resemble human physiology,” says Quantiphi scientist Rahul Ramchandra Ganar. “And hence, when you go for clinical trials, there are many new adverse events or toxicology that get discovered.”