Abstract

Background:

Neutropenia is a common adverse reaction to chemotherapy. We assessed whether chemotherapy-induced neutropenia could be a predictor of survival for patients with non-small-cell lung cancer (NSCLC).

Methods:

A total of 387 chemotherapy-naïve patients who received chemotherapy (vinorelbine and gemcitabine followed by docetaxel, or paclitaxel and carboplatin) in a randomised controlled trial were evaluated. The proportional-hazards regression model was used to examine the effects of chemotherapy-induced neutropenia and tumour response on overall survival. Landmark analysis was used to lessen the bias of more severe neutropenia resulting from more treatment cycles allowed by longer survival, whereby patients who died within 126 days of starting chemotherapy were excluded.

Results:

The adjusted hazard ratios for patients with grade-1 to 2 neutropenia or grade-3 to 4 neutropenia compared with no neutropenia were 0.59 (95% confidence interval (CI), 0.36–0.97) and 0.71 (95% CI, 0.49–1.03), respectively. The hazard ratios did not differ significantly between the patients who developed neutropenia with stable disease (SD), and those who lacked neutropenia with partial response (PR).

Conclusion:

Chemotherapy-induced neutropenia is a predictor of better survival for patients with advanced NSCLC. Prospective randomised trials of early-dose increases guided by chemotherapy-induced toxicities are warranted.

Main

Chemotherapy is the standard remedy for patients with advanced cancer and neutropenia is an important dose-limiting toxicity of anticancer agents. Several studies since the late 1990s have reported that neutropenia (or leukopenia) that occurs during chemotherapy is a predictor of significantly longer survival for patients with breast cancer (Saarto et al, 1997; Cameron et al, 2003). A recent study by Di Maio et al (2005) confirmed the positive correlation between chemotherapy-induced neutropenia and increased survival in a pooled analysis of three randomised trials, which included 1265 patients with advanced non-small-cell lung cancer (NSCLC). Pallis et al (2008) have also shown the association between chemotherapy-induced neutropenia and better clinical outcome for patients with NSCLC. In a prospective survey of oral fluoropyrimidine S-1 in 1055 patients with advanced gastric cancer, Yamanaka et al (2007) reported that patients with moderate (grade-2) neutropenia had the longest survival.

In light of these reports, we have analysed the associations between the extent of chemotherapy-induced neutropenia, overall survival and tumour response by reviewing data from a clinical trial of patients with advanced NSCLC.

Materials and methods

Patients and treatment

A total of 401 chemotherapy-naïve patients with NSCLC stage IIIB (positive pleural effusion) or stage IV (no brain metastases), who had Eastern Cooperative Oncology Group (ECOG) performance status of 0 or 1, were enrolled in this randomised controlled trial (Japan Multinational Trial Organization LC00-03) between March 2001 and April 2005. Of 393 eligible patients, information regarding chemotherapy-induced neutropenia was not available for six patients. Thus, data from 387 patients were included in this analysis. These participants were divided into two groups by treatment. The experimental group (VGD arm, n=192) received three cycles of intravenous vinorelbine (25 mg/m²) and gemcitabine (1000 mg/m²) administered on days 1 and 8 of each 21-day cycle, followed by three cycles of single-agent intravenous docetaxel (60 mg/m²) administered on day 1 of each 21-day cycle. The standard regimen (PC arm, n=195) consisted of six cycles of intravenous paclitaxel (225 mg/m²) plus carboplatin (area under curve = 6) infused on day 1 of each 21-day cycle. Details of dose modifications and reductions have been described previously (Kubota et al, 2008). The protocol permitted use of granulocyte-colony-stimulating factor (G-CSF) for patients with grade-3 neutropenia with fever or grade-4 leukopenia or neutropenia, but did not permit prophylactic use.

Statistical analysis

Neutrophil counts were recorded on days 1, 8 and 15 of each treatment cycle for all patients, and neutropenia was categorised using the National Cancer Institute common terminology criteria for adverse events (CTCAE, version 2.0). Tumour response was assessed by the Response Evaluation Criteria in Solid Tumors (RECIST) Group criteria. Overall survival was defined as time from randomisation until death from any cause. To evaluate the prognostic impact of chemotherapy-induced neutropenia, we first identified the worst grade of neutropenia during treatment for each patient. Then, using the proportional-hazards regression model, we estimated hazard ratios for overall survival according to the worst grade of neutropenia, after adjustment for covariates.
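The worst-grade step can be illustrated with a minimal sketch. The grade cut-offs below (absolute neutrophil count in units of 10^9/L) are an assumption made for illustration and are not quoted from the trial protocol; the function names are hypothetical.

```python
from typing import Iterable


def neutropenia_grade(anc: float) -> int:
    """Map an absolute neutrophil count (x10^9/L) to a toxicity grade 0-4.

    The cut-offs are assumed for illustration; a real analysis should use
    the thresholds defined in the protocol's toxicity criteria.
    """
    if anc < 0.5:
        return 4
    if anc < 1.0:
        return 3
    if anc < 1.5:
        return 2
    if anc < 2.0:
        return 1
    return 0


def worst_grade(counts: Iterable[float]) -> int:
    """Highest grade over all recorded counts; 0 if nothing was recorded."""
    return max((neutropenia_grade(c) for c in counts), default=0)


# Example: counts recorded on days 1, 8 and 15 of two cycles for one patient.
patient_counts = [3.1, 1.2, 0.8, 2.9, 1.6, 1.1]
print(worst_grade(patient_counts))
```

Taking the maximum over all cycles means that a patient who receives more cycles has more opportunities to record a high grade, which is the source of the bias discussed in the next paragraph.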

The participants in the trial had advanced NSCLC and a considerable number of patients died during the treatment period. This can lead to serious bias and result in a false-positive association between chemotherapy-induced neutropenia and longer survival, because patients who die during treatment receive fewer cycles of chemotherapy and, therefore, have less chance of developing more severe neutropenia. To lessen this bias, we used landmark analysis, whereby patients who died within 126 days (i.e., six 21-day cycles) of starting chemotherapy were excluded.
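As a rough sketch of the landmark restriction, the following code keeps only patients whose follow-up reaches the landmark. The `Patient` record and its field names are hypothetical, and the handling of a patient whose follow-up ends exactly on day 126 is an assumption, since the text does not specify it.

```python
from dataclasses import dataclass
from typing import List

LANDMARK_DAYS = 6 * 21  # six 21-day cycles = 126 days


@dataclass
class Patient:
    patient_id: str
    followup_days: int  # days from start of chemotherapy to death or last contact
    died: bool          # True if follow-up ended in death


def landmark_cohort(patients: List[Patient],
                    landmark: int = LANDMARK_DAYS) -> List[Patient]:
    """Keep patients still under observation at the landmark.

    Anyone whose follow-up ends before the landmark is dropped, whether the
    reason is death or loss to follow-up.
    """
    return [p for p in patients if p.followup_days >= landmark]


cohort = [
    Patient("A", 60, True),     # died before the landmark: excluded
    Patient("B", 100, False),   # lost to follow-up before the landmark: excluded
    Patient("C", 400, True),    # died after the landmark: kept
    Patient("D", 900, False),   # alive at last contact: kept
]
print([p.patient_id for p in landmark_cohort(cohort)])
```

Restricting the cohort in this way means every retained patient had the opportunity to receive all six planned cycles, so early death can no longer produce an artificially low worst grade.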

Survival curves were estimated using the Kaplan–Meier method. All reported p values are two-tailed; a value below 0.05 was considered statistically significant. All analyses were performed using SAS version 9.1 (SAS Institute, Cary, NC, USA).
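For readers unfamiliar with the Kaplan–Meier method, a minimal product-limit estimator is sketched below. It is an illustration in plain Python, not the SAS procedure used in the study, and the toy data are invented.

```python
from collections import Counter
from typing import List, Optional, Sequence, Tuple


def kaplan_meier(times: Sequence[float],
                 events: Sequence[bool]) -> List[Tuple[float, float]]:
    """Return [(t, S(t))] at each distinct death time.

    times  -- follow-up time for each patient
    events -- True if the patient died at that time, False if censored
    """
    deaths = Counter(t for t, e in zip(times, events) if e)
    leaving = Counter(times)  # each patient leaves the risk set at their own time
    at_risk = len(times)
    survival = 1.0
    curve = []
    for t in sorted(leaving):
        d = deaths.get(t, 0)
        if d:
            # Product-limit step: multiply by the conditional survival at t.
            survival *= 1.0 - d / at_risk
            curve.append((t, survival))
        at_risk -= leaving[t]
    return curve


def median_survival(curve: List[Tuple[float, float]]) -> Optional[float]:
    """Smallest time at which estimated survival is 0.5 or lower."""
    for t, s in curve:
        if s <= 0.5:
            return t
    return None  # median not reached


# Toy data (invented): follow-up in months, True = death, False = censored.
curve = kaplan_meier([2, 3, 3, 5, 8], [True, True, False, True, False])
print(curve, median_survival(curve))
```

Censored patients contribute to the risk set up to their last contact but never trigger a drop in the curve, which is why the estimator remains valid when many patients are still alive at the end of follow-up.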

Results

Incidence of neutropenia

Table 1 shows the grade of neutropenia according to treatment cycle of chemotherapy. A total of 275 of the 387 patients died. The median follow-up time for all patients was 393 days (range 19–1711). One hundred and fifty-five patients (40%) completed the planned six cycles of treatment and 308 patients (80%) had chemotherapy-induced neutropenia: 20 patients (5%) had grade 1, 38 (10%) had grade 2, 97 (25%) had grade 3 and 153 (40%) had grade 4 as the worst grade.

G-CSF use

Table 2 shows the use of G-CSF according to the worst grade of neutropenia. Prophylactic use was not permitted. Nevertheless, G-CSF was administered to 15 patients who did not have grade-3 or greater neutropenia, or grade-4 leukopenia, so these patients were excluded from the analysis.

Association between survival and chemotherapy-induced neutropenia

First, the association between the worst grade of neutropenia and the number of treatment cycles was evaluated. Patients who experienced more severe neutropenia received more cycles of chemotherapy (Table 3).

We then examined the causes of deaths that occurred within 126 days of the initiation of chemotherapy. Thirty-three patients died and lung cancer was the cause of death for 26 patients. Pneumonia, myocardial infarction, neutropenic sepsis and interstitial pneumonia resulting from previous radiation accounted for one death each. The causes of three deaths were unknown. Only one patient died from neutropenic sepsis during this clinical trial.

These data indicate that patients who had better outcomes could receive more cycles of treatment, resulting in higher incidence of chemotherapy-induced neutropenia. To lessen this bias, we used a landmark analysis, excluding the 33 patients who died and two patients who were lost to follow-up within 126 days of the initiation of chemotherapy. Together with the exclusion of the 15 patients who received G-CSF outside the protocol criteria, this left data from 337 of the 387 patients for analysis: 162 patients in the VGD arm and 175 patients in the PC arm. Since the mean number of treatment cycles for patients who developed chemotherapy-induced neutropenia was still higher than that for patients who had no neutropenia (Table 3), we included the number of treatment cycles as a covariate in the multivariate analysis. Given the size of this trial, the patients were distributed into three categories according to the worst grade of neutropenia: absent (grade 0), mild (grades 1 and 2) and severe (grades 3 and 4).

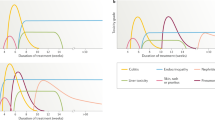

The median survival time was 10.5 months (95% confidence interval (CI) 8.2–12.4) for the grade-0 group (n=55), 16.6 months (95% CI 13.8–20.7) for the grade-1 to 2 group (n=46) and 17.8 months (95% CI 15.0–20.3) for the grade-3 to 4 group (n=236) (Figure 1). The baseline patient characteristics for the different groups are shown in Table 4. Using the proportional-hazards regression model to adjust for the imbalance of patient characteristics among groups, we estimated hazard ratios for overall survival according to the worst grade of neutropenia after adjustment for covariates (sex, smoking history, stage, ECOG performance status, weight loss, serum lactate dehydrogenase level, presence of bone, liver or skin metastases, pretreatment absolute neutrophil count and number of the treatment cycles as the known prognostic factors) (Paesmans et al, 1995; Pfister et al, 2004; Teramukai et al, 2009). Patients who had chemotherapy-induced neutropenia had lower risk of death than those who did not, although the difference between no neutropenia and grade-3 to 4 neutropenia was not significant. The adjusted hazard ratio compared with the grade-0 group was 0.59 (95% CI 0.36–0.97; P=0.036) for the grade-1 to 2 group, and that for the grade-3 to 4 group was 0.71 (95% CI 0.49–1.03; P=0.072) (Table 5). In both treatment arms, the proportion of patients who moved on from VGD or PC to second-line chemotherapy (e.g., because of progressive disease (PD)) was almost equal among the groups distributed by the grade of neutropenia.
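The reported hazard ratios, confidence intervals and P values can be checked against one another with a short calculation. The sketch below assumes that the intervals are Wald intervals, symmetric on the log scale, which is the usual output of a proportional-hazards fit but is not stated explicitly in the text. The function name is hypothetical.

```python
import math


def wald_from_ci(hr: float, lower: float, upper: float, z_crit: float = 1.96):
    """Back-calculate the log-scale standard error, z statistic and two-sided
    P value from a hazard ratio and its 95% confidence interval."""
    se = (math.log(upper) - math.log(lower)) / (2.0 * z_crit)
    z = math.log(hr) / se
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal tail area
    return se, z, p


for label, hr, lo, hi in [
    ("grade 1-2 vs grade 0", 0.59, 0.36, 0.97),
    ("grade 3-4 vs grade 0", 0.71, 0.49, 1.03),
]:
    se, z, p = wald_from_ci(hr, lo, hi)
    print(f"{label}: SE(log HR)={se:.3f}, z={z:.2f}, P={p:.3f}")
```

Under this assumption the back-calculated P values come out at approximately 0.04 and 0.07, in line with the reported values of 0.036 and 0.072; the small differences are attributable to rounding of the published estimates.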

We also estimated the hazard ratios for overall survival according to the combination of worst grade of neutropenia and best tumour response, after adjustment for the covariates listed above (Table 6). As a preliminary step, hazard ratios according to the best tumour response alone were calculated. The adjusted hazard ratio for stable disease (SD) compared with partial response (PR) as the best tumour response was 1.93 (95% CI, 1.39–2.67) and that for PD compared with PR was 3.31 (95% CI, 1.89–5.79). The adjusted hazard ratio compared with no neutropenia with PR was 0.29 (95% CI 0.11–0.80) for grade-1 to 2 neutropenia with PR; 0.44 (95% CI 0.21–0.92) for grade-3 to 4 neutropenia with PR; 0.78 (95% CI 0.33–1.87) for grade-1 to 2 neutropenia with SD and 0.80 (95% CI 0.38–1.70) for grade-3 to 4 neutropenia with SD. The hazard ratios did not differ significantly between the patients who developed neutropenia with SD and those who lacked neutropenia with PR.

Discussion

It has been reported that haematological toxicity could be a measure of the biological activities of cytotoxic drugs. Many of us believe that administration of larger doses of chemotherapeutic agents over a defined period is more likely to result in success – the patient will have more chances to go into complete or partial remission, and this will improve survival (Luciani et al, 2009). However, several studies in the last decade have reported that larger doses of chemotherapy do not always improve prognosis (Stadtmauer et al, 2000; Möbus et al, 2007). Using a unique time-dependent approach to analyse data from a prospective survey of patients with advanced gastric cancer treated with oral fluoropyrimidine S-1, Yamanaka et al (2007) reported that survival was longest in patients who experienced grade-2 neutropenia as the worst grade.

Here we review data from a clinical trial of patients with advanced NSCLC. Patients who developed neutropenia showed longer survival than those who had no neutropenia. Furthermore, severe neutropenia (grade 3–4) was no better than mild neutropenia (grade 1–2) for prediction of overall survival. As a whole, these results are consistent with previous reports of the chemotherapy of NSCLC and gastric cancer (Di Maio et al, 2005; Yamanaka et al, 2007; Pallis et al, 2008), and strongly suggest that neutropenia per se is not what matters; rather, neutropenia serves as an indicator that an adequate dose has been given.

The dose of chemotherapeutic agents is usually determined on the basis of body surface area (BSA) or creatinine clearance; however, elimination of the agents will vary from patient to patient because of a variety of factors such as pharmacogenetic background (Friedman et al, 1999) and drug interactions (Relling et al, 2000). Variation in drug elimination may explain why some patients in this clinical trial experienced severe toxicities or inadequate antitumour effects. Absence of neutropenia may mean that the doses of chemotherapeutic agents administered were not enough to produce the full antitumour effect. Gurney (2002) pointed out a poor correlation between BSA and the pharmacokinetics of anticancer agents (Newell, 2002).

From this perspective, this association also suggests that neutropenia or other toxicities induced by chemotherapy can be used as an indicator for planning regimens tailored to individual patients. When we administer chemotherapy to patients, we prepare a schedule for administration of each agent. Then, after initiation of chemotherapy, we often reduce the planned doses of agents in the event of severe neutropenia or other toxicities, whereas we seldom increase the dose if a patient lacks such toxicities. However, increasing the doses of agents to induce mild or moderate neutropenia may be of benefit for patients who do not show haematological or major non-haematological toxicities in the first or second cycle of treatment.

We have previously confirmed that increased pretreatment neutrophil count is an independent negative prognostic factor (Teramukai et al, 2009), and we included it as one of the covariates in the present study. Tumour-related leukocytosis (neutrophilia) is encountered occasionally in patients with NSCLC and has recently been demonstrated to be an important negative prognostic factor for overall survival and time to progression in patients with NSCLC (Mandrekar et al, 2006). Although autonomous production of G-CSF and granulocyte–macrophage-colony-stimulating factor (GM-CSF) by tumour has been identified in some cases, leukocytosis (neutrophilia) in NSCLC patients is not fully understood and is likely to be caused by a combination of factors. Considering the negative prognostic value of leukocytosis (neutrophilia), it can be hypothesised that a proportion of the patients who do not develop neutropenia during treatment may have a poorer prognosis because they may be potentially affected by tumour-related leukocytosis (neutrophilia) and protected from chemotherapy-induced neutropenia (Maione et al, 2009). However, the results of our analysis suggest that chemotherapy-induced neutropenia is a predictor independent of NSCLC-related leukocytosis, since the risk of death estimated by the proportional-hazards regression model was significantly lower in patients who had grade-1 to 2 chemotherapy-induced neutropenia after adjustment for covariates, including pretreatment neutrophil count.

We estimated hazard ratios for the overall survival for subgroups assigned by the combination of the worst grade of neutropenia and the best tumour response. Patients who experienced neutropenia with PR as the best tumour response showed lower risk of death than those with PR who lacked neutropenia. The hazard ratios did not differ significantly between the patients who developed mild or severe neutropenia with SD and those with PR who lacked neutropenia. There are some limitations to the assessment of tumour size using the RECIST method or other widely used methods of assessing tumour response to anticancer therapy. Lara et al (2008) reported the importance of how to interpret SD and introduced the concept of disease control rate. Results from the randomised trial (JMTO LC00-03) and this study add further evidence that the association between the RECIST response and overall survival may depend on the grade of neutropenia and that the RECIST response may not be a surrogate endpoint for overall survival of advanced NSCLC in the chemotherapy setting (Kubota et al, 2008). Further investigation into this association in a large-scale meta-analysis would be helpful to resolve the important question of whether tumour response to anticancer agents could be used as a surrogate for overall survival in patients with advanced cancer (Ichikawa and Sasaki, 2006).

In conclusion, we confirm that chemotherapy-induced neutropenia can predict survival for patients with advanced NSCLC. This association also suggests the possibility that neutropenia, or other chemotherapy-induced toxicities, can be used as indicators in setting up dosage regimens that are tailored for individual patients. Categorisation of patients according to drug elimination capacity may be useful in determining initial dosage regimens, with subsequent fine-tuning depending on the presence or absence of haematological and non-haematological toxicities during early cycles. Prospective randomised trials of early-dose increases guided by chemotherapy-induced toxicities are, therefore, warranted.

Change history

16 November 2011

This paper was modified 12 months after initial publication to switch to Creative Commons licence terms, as noted at publication

References

Cameron DA, Massie C, Kerr G, Leonard RC (2003) Moderate neutropenia with adjuvant CMF confers improved survival in early breast cancer. Br J Cancer 89: 1837–1842

Di Maio M, Gridelli C, Gallo C, Shepherd F, Piantedosi FV, Cigolari S, Manzione L, Illiano A, Barbera S, Robbiati SF, Frontini L, Piazza E, Ianniello GP, Veltri E, Castiglione F, Rosetti F, Gebbia V, Seymour L, Chiodini P, Perrone F (2005) Chemotherapy-induced neutropenia and treatment efficacy in advanced non-small-cell lung cancer: a pooled analysis of three randomised trials. Lancet Oncol 6: 669–677

Friedman HS, Petros WP, Friedman AH, Schaaf LJ, Kerby T, Lawyer J, Parry M, Houghton PJ, Lovell S, Rasheed K, Cloughsey T, Stewart ES, Colvin OM, Provenzale JM, McLendon RE, Bigner DD, Cokgor I, Haglund M, Rich J, Ashley D, Malczyn J, Elfring GL, Miller LL (1999) Irinotecan therapy in adults with recurrent or progressive malignant glioma. J Clin Oncol 17: 1516–1525

Gurney H (2002) How to calculate the dose of chemotherapy. Br J Cancer 86: 1297–1302

Ichikawa W, Sasaki Y (2006) Correlation between tumor response to first-line chemotherapy and prognosis in advanced gastric cancer patients. Ann Oncol 17: 1665–1672

Kubota K, Kawahara M, Ogawara M, Nishiwaki Y, Komuta K, Minato K, Fujita Y, Teramukai S, Fukushima M, Furuse K (2008) Vinorelbine plus gemcitabine followed by docetaxel versus carboplatin plus paclitaxel in patients with advanced non-small-cell lung cancer: a randomised, open-label, phase III study. Lancet Oncol 9: 1135–1142

Lara Jr PN, Redman MW, Kelly K, Edelman MJ, Williamson SK, Crowley JJ, Gandara DR (2008) Disease control rate at 8 weeks predicts clinical benefit in advanced non-small-cell lung cancer: results from Southwest Oncology Group randomized trials. J Clin Oncol 26: 463–467

Luciani A, Bertuzzi C, Ascione G, Di Gennaro E, Bozzoni S, Zonato S, Ferrari D, Foa P (2009) Dose intensity correlates with survival in elderly patients treated with chemotherapy for advanced non-small cell lung cancer. Lung Cancer 66: 94–96

Maione P, Rossi A, Di Maio M, Gridelli C (2009) Tumor-related leucocytosis and chemotherapy-induced neutropenia: linked or independent prognostic factors for advanced non-small cell lung cancer? Lung Cancer 66: 8–14

Mandrekar SJ, Schild SE, Hillman SL, Allen KL, Marks RS, Mailliard JA, Krook JE, Maksymiuk AW, Chansky K, Kelly K, Adjei AA, Jett JR (2006) A prognostic model for advanced stage nonsmall cell lung cancer. Pooled analysis of North Central Cancer Treatment Group trials. Cancer 107: 781–792

Möbus V, Wandt H, Frickhofen N, Bengala C, Champion K, Kimmig R, Ostermann H, Hinke A, Ledermann JA (2007) Phase III trial of high-dose sequential chemotherapy with peripheral blood stem cell support compared with standard dose chemotherapy for first-line treatment of advanced ovarian cancer: intergroup trial of the AGO-Ovar/AIO and EBMT. J Clin Oncol 25: 4187–4193

Newell DR (2002) Getting the right dose in cancer chemotherapy – time to stop using surface area? Br J Cancer 86: 1207–1208

Pallis AG, Agelaki S, Kakolyris S, Kotsakis A, Kalykaki A, Vardakis N, Papakotoulas P, Agelidou A, Geroyianni A, Agelidou M, Hatzidaki D, Mavroudis D, Georgoulias V (2008) Chemotherapy-induced neutropenia as a prognostic factor in patients with advanced non-small cell lung cancer treated with front-line docetaxel–gemcitabine chemotherapy. Lung Cancer 62: 356–363

Paesmans M, Sculier JP, Libert P, Bureau G, Dabouis G, Thiriaux J, Michel J, Cutsem OV, Sergysels R, Mommen P, Klastersky J (1995) Prognostic factors for survival in advanced non-small-cell lung cancer: univariate and multivariate analyses including recursive partitioning and amalgamation algorithms in 1,052 patients. J Clin Oncol 13: 1221–1230

Pfister DG, Johnson DH, Azzoli CG, Sause W, Smith TJ, Baker Jr S, Olak J, Stover D, Strawn JR, Turrisi AT, Somerfield MR (2004) American Society of Clinical Oncology treatment of unresectable non-small-cell lung cancer guideline: update 2003. J Clin Oncol 22: 330–353

Relling MV, Pui CH, Sandlund JT, Rivera GK, Hancock ML, Boyett JM, Schuetz EG, Evans WE (2000) Adverse effect of anticonvulsants on efficacy of chemotherapy for acute lymphoblastic leukaemia. Lancet 356: 285–290

Saarto T, Blomqvist C, Rissanen P, Auvinen A, Elomaa I (1997) Haematological toxicity: a marker of adjuvant chemotherapy efficacy in stage II and III breast cancer. Br J Cancer 80: 1763–1766

Stadtmauer EA, O’Neill A, Goldstein LJ, Crilley PA, Mangan KF, Ingle JN, Brodsky I, Martino S, Lazarus HM, Erban JK, Sickles C, Glick JH (2000) Conventional-dose chemotherapy compared with high-dose chemotherapy plus autologous hematopoietic stem-cell transplantation for metastatic breast cancer. Philadelphia Bone Marrow Transplant Group. N Engl J Med 342: 1069–1076

Teramukai S, Kitano T, Kishida Y, Kawahara M, Kubota K, Komuta K, Minato K, Mio T, Fujita Y, Yonei T, Nakano K, Tsuboi M, Shibata K, Furuse K, Fukushima M (2009) Pretreatment neutrophil count as an independent prognostic factor in advanced non-small-cell lung cancer: an analysis of Japan Multinational Trial Organisation LC00-03. Eur J Cancer 45: 1950–1958

Yamanaka T, Matsumoto S, Teramukai S, Ishiwata R, Nagai Y, Fukushima M (2007) Predictive value of chemotherapy-induced neutropenia for the efficacy of oral fluoropyrimidine S-1 in advanced gastric carcinoma. Br J Cancer 97: 37–42

Acknowledgements

This study was sponsored by the Japan Multinational Trial Organization. We thank the Translational Research Informatics Center, Kobe, Japan, for data management.

Rights and permissions

From twelve months after its original publication, this work is licensed under the Creative Commons Attribution-NonCommercial-Share Alike 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/3.0/

About this article

Cite this article

Kishida, Y., Kawahara, M., Teramukai, S. et al. Chemotherapy-induced neutropenia as a prognostic factor in advanced non-small-cell lung cancer: results from Japan Multinational Trial Organization LC00-03. Br J Cancer 101, 1537–1542 (2009). https://doi.org/10.1038/sj.bjc.6605348
