Key Points

- Stresses the importance of testing the validity and reliability of patient survey instruments so that dental teams can be confident that their results can accurately inform their quality development.

- Explains straightforward methods which can be used to assess the validity and reliability of patient survey instruments.

Abstract

Aim To consider the extent to which the validity and reliability of the Denplan Excel Patient Survey (DEPS) have been confirmed during its development and by its use in general dental practice, and to explore methods by which any survey instrument used in general dental practice might be validated and tested for reliability.

Methods DEPS seeks to measure perceived practice performance on those issues considered to be of greatest importance to patients. Content validity was developed by a literature review and tested in a pilot study. Criterion validity was tested by comparing patient retention in a payment plan for practices achieving the highest DEPS scores with those attaining the lowest scores over a two year period (surveys completed between 2010 and 2012). Reliability was assessed using the test/re-test method for 23 practices with approximately a three year time interval between tests. Internal consistency was tested by comparing Net Promoter Scores (NPS – which is measured in DEPS) attained by practices with their Patient Perception Index (PPI) as measured by the ten core questions in DEPS.

Results Practices in the pilot study strongly endorsed the content validity of DEPS. The 12 practices with the highest scores in the DEPS slightly increased their number of patients registered in Denplan payment plans during a two year period. The 12 lowest scoring practices saw 7% of their patients de-register during the same period. The 23 practices selected for the test/re-test study averaged more than 250 responses for both the test and re-test phases. The magnitude and pattern of their results were similar in both phases, while, on average, a modest improvement in results was observed. Internal consistency was confirmed as NPS results in DEPS closely mapped PPI results. The higher the measurement of perceived quality (PPI) the more likely patients were to recommend the practice (NPS).

Conclusion Both through its development and its use over the last four years, DEPS has demonstrated good validity and reliability. The authors conclude that this level of validity and reliability is adequate for the clinical/general care audit purpose of DEPS and that it is therefore likely to reliably inform practices on where further development is indicated. It is important, and quite straightforward, both to validate and to check the reliability of patient surveys used in general dental practice so that dental teams can be confident in the instrument they are using.

Background

The Concise Oxford Dictionary1 defines 'valid' as 'sound, defensible and well-grounded'. 'Rely' is defined as 'depend with confidence or assurance'.

It is obviously important that patient survey instruments should be developed with these twin qualities of validity and reliability if they are to be of value to practices in accurately understanding the perceptions of their patients. Dental teams need to have confidence in the instruments they are using for this purpose. The scientific literature has elaborated on these simple definitions of validity and reliability when applied to survey questionnaires.

However, in respect of validity, Walonick2 states: 'validity refers to the accuracy or truthfulness of a measurement. Are we measuring what we think we are? This is a simple concept, but in reality, it is extremely difficult to determine if a measure is valid. Generally, validity is based solely on the judgement of the researcher.' He goes on to assert that 'validity' is therefore an opinion and that there is no statistical test for it.

Despite Walonick's view, there is copious literature on testing the validity of survey instruments. The literature is, however, far from definitive and consistent, as might be expected with this subject, which is perhaps not a precise science. Nevertheless, the authors summarise, from a review of this literature, three elements commonly described as part of validity testing: (NB. The term 'construct' will be used in this paper as shorthand for 'the theme/subject matter being measured by a survey instrument'.)

Content validity

Are the questions selected for use in the survey adequate to assess the construct being tested? Has previous evidence been reviewed for factors which might need inclusion in the survey in order to cover the scope of the construct? Many workers suggest that a panel of experts familiar with the construct, and a field test of the instrument, can support the testing of the content validity of a survey questionnaire.

Criterion validity

Do the results obtained from the instrument successfully predict 'real life' outcomes? If a survey claims to measure patient satisfaction with a service, do those practices with the best scores retain and recruit more patients than practices with lower scores for example?

Construct validity

Is the complete survey actually measuring the construct that it claims to measure? One way of testing this is to compare results from two different groups who might be expected to produce very different results. For example, if a survey was designed to measure anxiety, Mertens3 suggests that it could be tested on a group of students on a Caribbean island and their results compared to a group of patients who had been hospitalised with anxiety. An instrument with construct validity would be expected to measure significantly higher levels of anxiety in the latter group!

Walonick2 describes three statistical methods for estimating reliability:

The 'test-re-test' method

The survey is conducted at two different points in time using the same group of patients and the same instrument. Unless the perceptions of the group have changed in the interim, the results should be very similar.

The 'equivalent form' method

A second, and different, instrument is used to measure the same patient perceptions. The degree to which the results using the two different instruments correlate gives a measure of reliability.

The 'internal consistency' method

Many survey instruments are designed to have more than one question seeking to measure a particular aspect of patient perception. The degree to which the results from similar questions correlate is a popular method for testing reliability.
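All three reliability methods above rest on checking how closely two sets of results correlate. As a minimal sketch, assuming Pearson's correlation coefficient is an acceptable measure (the sources cited here do not prescribe a particular statistic), the comparison could be computed as follows. The scores are hypothetical and are not DEPS data.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least two values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / sqrt(var_x * var_y)


# Hypothetical practice-level scores from two survey rounds (illustration only).
first_round = [82.0, 75.0, 90.0, 68.0, 85.0]
second_round = [84.0, 78.0, 91.0, 72.0, 86.0]

r = pearson_r(first_round, second_round)
print(f"correlation between rounds: {r:.3f}")
```

A coefficient close to 1 would indicate that the two sets of results rank and space the practices in much the same way, which is the pattern a reliable instrument would be expected to produce.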

It would be prudent to apply at least some of these validity and reliability tests to any patient survey instrument being used in general dental practice. There is a danger otherwise that dental teams will not trust the feedback they are receiving.

The current version of DEPS has been in use now for more than four years without any change to the questions or the grading of patient responses offered. More than 500 practices in the voluntary practice certification scheme, Denplan Excel, have now used the instrument and more than 100,000 patient responses have been received in total. Practices are now in their second round of using the survey, as it is operated on a three year cycle. Busby et al.4 describe the development of the instrument and the protocol used in conducting the survey, which has remained unchanged during the four year period. Busby5 had previously described the development of the ten core questions in DEPS and a pilot study involving seven dental practices (20 dentists). A copy of the survey instrument is reproduced in Figure 1. Furthermore, Busby et al.6 have also described the relationship of the results for the ten core questions in the survey with the Reichheld7 NPS, which is also included in the instrument. Busby et al. (2012)4 describe how the PPI in DEPS summarises the overall result for a practice on the ten core questions with a percentage score.

Busby et al. (2012)4 state that DEPS was designed to 'inform practice development on those issues of greatest importance to patients.'

The construct which DEPS seeks to measure is perceived performance on those issues of greatest importance to patients. This construct could perhaps be summarised as perceived quality.

The aim of this paper is to consider the extent to which the validity and reliability of DEPS have been confirmed during its development and by its use in general dental practice.

Methods

Content validity

The thesis5 which led to the development of DEPS was revisited and scrutinised for evidence of content validity. As the construct being measured is 'perceived performance on those issues of greatest importance to patients', the thesis was reviewed to confirm that the literature on 'the issues of greatest importance to patients' did confirm the question range employed in DEPS. Busby5 describes a pilot study involving seven practices (25 dentists). At the end of the trial a representative from each practice was asked to give feedback about their experience with the survey in a standardised telephone interview. The interviewer asked 'Please score each of the following statements out of ten where ten means that you totally agree with the statement and zero that you totally disagree.' Statement three was, 'the patient survey was based on issues which are most important to patients.'

Criterion validity

The records at Denplan Ltd were analysed to investigate the relationship between overall scoring in DEPS (PPI) and patient retention and recruitment in Denplan payment plans to assess criterion validity. The top 12 scoring practices were compared with the lowest scoring 12 practices between 2010 and 2012. The total number of patients registered in Denplan patient payment plans with each of these groups was totalled for January 2011 and for January 2013.
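The comparison described above reduces to a percentage change in total registrations for each group between the two dates. A minimal sketch of that arithmetic follows. The totals are hypothetical placeholders chosen for illustration and are not the figures reported in Table 1.

```python
def percent_change(start_total, end_total):
    """Percentage change in registered patients between two dates."""
    if start_total <= 0:
        raise ValueError("starting total must be positive")
    return 100.0 * (end_total - start_total) / start_total


# Hypothetical group totals for January 2011 and January 2013 (not Table 1 data).
groups = {
    "highest scoring 12": (10000, 10050),
    "lowest scoring 12": (10000, 9300),
}

for name, (jan_2011, jan_2013) in groups.items():
    change = percent_change(jan_2011, jan_2013)
    print(f"{name}: {change:+.1f}% change in registrations")
```

Criterion validity would be supported if the change for the highest scoring group were clearly more favourable than the change for the lowest scoring group.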

Reliability: test and re-test

Twenty-three practices were identified from those participating in DEPS in 2014 which had also participated in a previous round of surveys (mostly in 2010 and 2011 referred to below as the 2010 round) and had received more than 100 responses, both in 2010 and 2014. Their second phase results (2014) were compared with their first phase results (2010).
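The selection rule and comparison above can be sketched as follows, assuming each practice is held as a simple record. The field names and values are hypothetical and introduced only for illustration; the only detail taken from the text is the threshold of more than 100 responses in both rounds.

```python
from statistics import mean

MIN_RESPONSES = 100  # inclusion threshold stated in the text (more than 100)


def eligible(practice):
    """True if the practice received more than 100 responses in both rounds."""
    return (practice["responses_2010"] > MIN_RESPONSES
            and practice["responses_2014"] > MIN_RESPONSES)


def mean_ppi_change(practices):
    """Average change in PPI (re-test minus test) across eligible practices."""
    selected = [p for p in practices if eligible(p)]
    if not selected:
        raise ValueError("no practice meets the inclusion threshold")
    return mean(p["ppi_2014"] - p["ppi_2010"] for p in selected)


# Hypothetical records (not DEPS data); practice B is excluded by the threshold.
sample = [
    {"name": "A", "responses_2010": 250, "responses_2014": 300,
     "ppi_2010": 70.0, "ppi_2014": 74.0},
    {"name": "B", "responses_2010": 120, "responses_2014": 90,
     "ppi_2010": 80.0, "ppi_2014": 60.0},
    {"name": "C", "responses_2010": 400, "responses_2014": 350,
     "ppi_2010": 85.0, "ppi_2014": 86.0},
]

print(f"mean PPI change: {mean_ppi_change(sample):+.1f} points")
```

A small average change with a similar pattern of results in both rounds would be consistent with a reliable instrument, allowing for any genuine improvement made by the practices in the interval.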

Reliability: internal consistency

The results from the Busby et al.6 paper on the relationship of the NPS and the PPI were re-scrutinised as a form of internal consistency test for reliability. Reference to Figure 1 will show that DEPS comprises ten core questions which are about different issues held to be of greatest importance to patients. No two questions ask for feedback on the same issue. However, in a separate part of the survey the Reichheld NPS question is asked, 'How likely is it that you would recommend your dental practice to a friend or colleague? Please give a score out of 10, where 0 = Not at all likely and 10 = extremely likely.'
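This paper does not spell out how the NPS is calculated from the 0 to 10 answers. The sketch below assumes the conventional Reichheld calculation, in which the percentage of detractors (scores of 0 to 6) is subtracted from the percentage of promoters (scores of 9 or 10). The ratings shown are hypothetical.

```python
def net_promoter_score(ratings):
    """Conventional NPS: % promoters (9-10) minus % detractors (0-6).

    Assumes the standard Reichheld cut-offs, which this paper does not restate.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must lie between 0 and 10")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)


# Hypothetical answers from ten patients (illustration only).
answers = [10, 10, 9, 9, 9, 9, 9, 9, 8, 3]
print(net_promoter_score(answers))  # 8 promoters, 1 detractor out of 10 -> 70.0
```

On this calculation the score can range from -100 (all detractors) to +100 (all promoters), and patients answering 7 or 8 count towards the total but towards neither group.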

In the first six months of 2014, 64 practices completed their DEPS and achieved more than 50 responses. Practices with fewer than 50 responses were not included in the study. A total of 10,810 patients responded. These data were analysed by grouping the 64 practices as follows:

Group 1: Practices receiving an NPS of less than 80. This group represents practices scoring statistically significantly (tested to 90% confidence) below the mean NPS.

Group 2: Practices receiving an NPS of 80-89. This group represents practices scoring close to the mean NPS.

Group 3: Practices receiving an NPS of greater than 89. This group represents practices scoring significantly (tested to 90% confidence) above the mean NPS.
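The grouping above, and the mean PPI per group that it is used to compare, can be sketched as follows. The sketch assumes that NPS values are reported as whole numbers, so that the three bands do not overlap. The practice results are hypothetical and are not the values in Table 3.

```python
from collections import defaultdict
from statistics import mean


def nps_group(nps):
    """Assign a practice to group 1, 2 or 3 using the NPS bands in the text.

    Assumes whole-number NPS values; a fractional value above 89 would
    fall into group 3 under this rule.
    """
    if nps < 80:
        return 1
    if nps <= 89:
        return 2
    return 3


def mean_ppi_by_group(practices):
    """Mean PPI for each NPS group, given (nps, ppi) pairs per practice."""
    grouped = defaultdict(list)
    for nps, ppi in practices:
        grouped[nps_group(nps)].append(ppi)
    return {group: mean(values) for group, values in sorted(grouped.items())}


# Hypothetical (NPS, PPI) results for five practices (illustration only).
results = [(75, 70.0), (78, 72.0), (85, 80.0), (92, 88.0), (95, 90.0)]
print(mean_ppi_by_group(results))
```

If PPI and NPS measure a similar overall construct, the mean PPI would be expected to rise from group 1 to group 3.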

Results

Content validity

Busby5 (2011), in a literature review, cited 12 studies suggesting that competence, communication and cleanliness were the most important issues to patients. The thesis also explored the central importance of favourable patient perceptions about their own oral health (comfort, function and appearance). Furthermore, this work endorsed the importance of patient perceptions on 'value for money' with regard to any service which they pay for directly, as do the majority of patients using UK dental services. This literature review therefore appeared to accurately inform the ten questions developed to measure the construct.

The pilot study statement, 'the patient survey was based on issues which are most important to patients', received a score average of 8.3 from the six practices who responded, suggesting strong agreement with the statement.

Criterion validity

Table 1 shows patient recruitment and retention in Denplan payment plans for the top 12 scoring practices in DEPS 2011-2013 compared to the lowest scoring practices in that period. No practice was included unless they received more than 50 patient responses to their DEPS. The average number of responses for these 24 practices was 161.

Test and re-test reliability testing

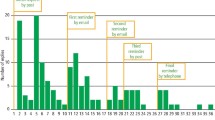

Table 2 shows the PPIs for 23 practices and the comparison between 2014 and 2010. The average 'ideal' percentage scores for the ten core questions for the same 23 practices are displayed in Figure 2, and again a comparison between 2010 and 2014 is shown.

Internal consistency reliability testing

Table 3 provides the mean PPI for each of the groups 1–3 on an NRS range.

Discussion

Survey science is somewhat controversial, as is briefly discussed in the 'Background' section. Nevertheless, the authors believe that valid and reliable patient surveys may provide broad but valuable insights into patient perceptions. They can therefore offer useful information in designing practice development plans designed to maintain success and quality.

Chisholm and Askham8, when reviewing the validity and reliability of patient feedback questionnaires used in medical practice, state, 'few survey questionnaires will carry out the whole range of tests for validity and reliability.'

Indeed, of the ten different questionnaires they reviewed, only one carried out all five of their favoured tests for validity and reliability. The authors would suggest that, by reporting five methods of testing DEPS above, a significant effort has been made to test the validity and reliability of this instrument. The authors hope that this paper has described how many of these tests for validity and reliability are quite straightforward to implement. It seems reasonable to expect that patient feedback instruments used in general dental practice should be subjected to at least some of these tests so that dental teams can be confident in interpreting their results.

Content validity was supported fully by both the literature review5 and the pilot testing of DEPS.5

Criterion validity seems well supported by the data in Table 1. As DEPS claims to measure issues of greatest importance to patients, it would be expected that practices with the least favourable patient perceptions on these issues would find it more difficult to recruit and retain patients than practices with the most favourable perceptions on these issues. It seems clear from Table 1 that this is the case. The lowest scoring practices in DEPS have lost more than 7% of their registered patients in this two year period while the highest scorers have made a very slight gain in patient numbers registered. Patient recruitment and retention in payment plans is obviously a multi-factorial phenomenon, as Lucarotti and Burke9 have recently shown for practice retention of patients generally. These data only account for the registration of patients in Denplan Ltd payment plans. Practices may choose to change the type of contract they have with patients and vice versa, so not all of the patients leaving a specific payment plan are necessarily lost to the practice. Nevertheless, there does not seem to be a logical reason why this 'churning' of patient contracts within a practice should be higher in one group compared to another. The authors believe that the data in Table 1 strongly support criterion validity.

The authors did consider testing DEPS on patients making a complaint to a third party about their dentist as a form of construct validity test. Clearly it would be expected that significantly lower scores would be observed in this group in comparison with the NRS, if the construct was being successfully measured. Furthermore, this work might have cast light on which aspects of care were most commonly poorly perceived in patients making complaints. However, it was decided that, on balance, the harm that this might do to the primary objective of resolving the complaint could outweigh any benefit from this study.

Table 2 and Figure 2 show the results from the second use of DEPS (in 2014) with an identical questionnaire and protocol for 23 practices compared to their first results (2010). For the majority of these practices approximately three years had elapsed between the first test and the re-test. The primary objective of DEPS is to encourage practices to develop, particularly in their relatively low scoring aspects. The average PPI for all 23 practices did improve between tests by 2.3 points, which is statistically significant at the 95% confidence level. Busby et al.10 observed that there is a welcome tendency for the practices with the lowest scores in 2010 to achieve the most significant improvements. Table 2 gives further weight to this observation. Furthermore, Table 2 confirms that these 23 practices were achieving more than 250 responses on average in each phase. Figure 2 shows how similar the results are in each phase, for each aspect measured by DEPS through the ten core questions, notwithstanding the modest improvement observed above. Both the pattern and magnitude of the results for both phases are similar. There is no dramatic difference or great inconsistency, simply, as expected, a modest improvement on average. Ideally a re-test performed simply to check reliability would be carried out shortly after the first test, so that there was no time for improvements to be made. It was felt that this would have caused significant and unnecessary inconvenience to volunteer practices, particularly as the authors believe that this delayed re-test still provides notable evidence of reliability.

With regard to internal reliability testing, Table 3 demonstrates that NPS scores tend to map PPI scores. Generally, the higher a practice scores on the ten core questions of DEPS, the more likely it is that patients will recommend the practice to friends and colleagues. The authors would suggest that this is a strong indication of reliability because this is precisely what would be expected. This is a variation on the type of internal reliability test commonly described in the literature, because DEPS is deliberately a concise survey which specifically avoids question 'duplication'. Nevertheless, PPI and NPS are intended to measure a similar overall construct, if not one specific patient perception.

Conclusion

Both through its development and its use over the last four years, DEPS has demonstrated good validity and reliability. The authors conclude that this level of validity and reliability is adequate for the clinical/general care audit purpose of DEPS and that it is therefore likely to reliably inform practices on where development is indicated. It is important, and quite straightforward, both to validate and to check the reliability of patient surveys used in general dental practice so that dental teams can be confident in the instrument they are using.

Commentary

Information from patients on aspects of their oral health and the care provided by the dental team is an essential component of improving services. Denplan has been a long-standing presence in providing capitation schemes and has always placed an emphasis on quality.

The study involves the review of a questionnaire for patients with the primary purpose of gathering information about Denplan Excel, which is a 'comprehensive clinical governance programme'.1 The authors have produced a scientific study to test both the validity and reliability of the Denplan Excel Patient Survey (DEPS). The essential aim was to review the content of the patient questionnaire to determine a) whether the correct questions were being asked of patients and b) whether there was any link between the scores from the questionnaires and whether a practice grew or shrank in terms of patient registrations (the latter was used to determine criterion validity). The analysis included comparison of data from the same 23 practices in 2011 and 2013.

Practices which fared poorly in the DEPS lost patients whilst the highest scorers made gains in patient registrations. However, these results should be treated with some caution as the number of practices in each group was small and the minimum number of patient responses for inclusion of a practice in the study was 50. In addition, the 'gains' or 'losses' in patient registrations were modest: the practices with poor scores lost over 7% of their registrations whilst those with good scores remained virtually the same (The authors describe this as 'a very slight gain' – it is very, very slight!).

It is difficult to see from the data a strong linkage between the scores from the patient questionnaires and practice registrations, which raises some question as to whether criterion validity was clearly demonstrated. However, the fundamental point must be that feedback from patients is essential and gives providers the opportunity to make changes and developments in response to structured information from their patients. The results from this study support the inference that this questionnaire helped to improve patient satisfaction as measured by the questions asked. The outcomes for all 23 practices improved over the 2-3 year interval between analyses, and it was noted that the lowest scoring practices improved more.

Professor Richard Ibbetson Director of Dentistry University of Aberdeen

Author questions and answers

1. Why did you undertake this research?

The importance of patient survey instruments as a metric for patient-perceived quality is increasing. The NHS and our regulators are insisting on their use. We believe that the greatest strength of patient survey instruments is to inform dental teams of development needs so that they can continue their success. Practices need to have confidence that the feedback they are receiving is accurate. Therefore, we considered it timely to use the data we have accumulated over the last four years to test the validity and reliability of DEPS.

2. What would you like to do next in this area to follow on from this work?

We would like to investigate further which particular aspect of patient perceived quality is most important to continued practice success. We would also like to investigate further the extent to which a below average result in this survey motivates practice teams to develop.

References

Sykes J B. The Concise Oxford Dictionary. 6th ed. Oxford: Oxford University Press, 1976.

Walonick D. Survival Statistics Edition. 6th ed. StatPac Incorporated, 2013.

Mertens D. Research and Evaluation in Education and Psychology. Sage, 2015.

Busby M C, Burke F J T, Matthews R, Cytra J, Mullins A. The development of a concise questionnaire designed to measure perceived outcomes on the issues of greatest importance to patients. Br Dent J 2012; 212: 382–383.

Busby M C. Measuring success in dental practice using patient feedback. Birmingham: University of Birmingham, 2011. Available online at http://etheses.bham.ac.uk/1288/1/Busby_MPhil_11.pdf (accessed September 2015).

Busby M C, Matthews R, Burke F J T, Mullins A, Shumacher K. Is any particular aspect of perceived quality associated with patients tending to promote a dental practice to their friends and colleagues? Br Dent J 2015; 218: 346–347.

Reichheld F. The Ultimate Question. Boston: Harvard Business School Publishing Corporation, 2006.

Chisholm A, Askham J. What do you think of your doctor? A Review of questionnaires for gathering patients' feedback on their doctor. Oxford: Picker Institute Europe, 2006.

Lucarotti P S K, Burke F J T. Factors influencing patients' continuing attendance at a given dentist. Br Dent J 2015; 218: 348–349.

Busby M C, Burke F J T, Matthews R, Cytra J, Mullins A. Can a concise, benchmarked patient survey help to stimulate improvements in perceived quality of care? Dent Update 2014; 41: 816–822.

Denplan Excel and DEPPA. Available online at http://www.denplan.co.uk/dentists/denplan-excel (accessed October 2015).

Additional information

Refereed Paper

Cite this article

Busby, M., Matthews, R., Burke, F. et al. Long-term validity and reliability of a patient survey instrument designed for general dental practice. Br Dent J 219, 337–342 (2015). https://doi.org/10.1038/sj.bdj.2015.749

DOI: https://doi.org/10.1038/sj.bdj.2015.749