Abstract

There is a significant gap between researchers’ production of evidence and its use by policymakers. Several knowledge transfer strategies have emerged in the past years to promote the use of research. One of those strategies is the policy brief; a short document synthesizing the results of one or multiple studies. This scoping study aims to identify the use and effectiveness of policy briefs as a knowledge transfer strategy. Twenty-two empirical articles were identified, spanning 35 countries. Results show that policy briefs are considered generally useful, credible and easy to understand. The type of audience is an essential component to consider when writing a policy brief. Introducing a policy brief sooner rather than later might have a bigger impact since it is more effective in creating a belief rather than changing one. The credibility of the policy brief’s author is also a factor taken into consideration by decision-makers. Further research needs to be done to evaluate the various forms of uses of policy briefs by decision-makers.

Introduction

Improving well-being and reducing health-related inequalities is a challenging endeavor for public policymakers. They must consider the nature and significance of the issue, the proposed interventions and their pros and cons such as their impact and acceptability (Lavis et al., 2012; Mays et al., 2005). Policymakers are members of a government department, legislature or other organization responsible for devising new regulations and laws (Cambridge University Press, 2019). They face the challenge of finding the best solutions to multiple health-related crises while being as time- and cost-effective as possible. Limited by time and smothered by an overwhelming amount of information, some policymakers are likely to use cognitive shortcuts by selecting the “evidence” most appropriate to their political leanings (Baekgaard et al., 2019; Cairney et al., 2019; Oliver and Cairney, 2019).

To prevent snap decisions in policymaking, it is essential to develop tools to facilitate the dissemination and use of empirical research. Evidence-informed solutions might be an effective way to address these complicated questions since they derive knowledge from accurate and robust evidence instead of beliefs and provide a more holistic view of a problem. Although it may be possible for different stakeholders to agree on certain matters, a consensus is uncommon (Nutley et al., 2013). Using research evidence allows policymakers to decrease their bias towards an intervention’s perceived effectiveness. This leads to more confidence among policymakers on what to expect from an intervention as their decisions are guided by evidence (Lavis et al., 2004). However, trying to integrate research findings into the policy-making process comes with a whole new set of challenges, both for researchers and policymakers.

Barriers to evidence-informed policy

Barriers to evidence-informed policy can be grouped into three categories: the research evidence is not available in an accessible format for the policymaker, the evidence is disregarded for political or ideological reasons, and the evidence is not applicable to the political context (Hawkins and Parkhurst, 2016; Uzochukwu et al., 2016).

The first category of barriers refers to the availability and the type of evidence. The vast amount of information policymakers need in order to keep up to date in specific fields is a particular challenge, and policymakers are frequently overwhelmed by the amount of information they need to go through for each case (Orandi and Locke, 2020). Decision-makers have reported a lack of competencies in finding, evaluating, interpreting or using certain evidence such as systematic reviews in their decision-making, leading to difficulty in accessing these reviews and identifying the key messages quickly (Tricco et al., 2015). Although policymakers use a broader variety of forms of evidence than previously examined in the literature, scholars have rarely been consulted and research evidence has rarely been seen as directly applicable (Oliver and de Vocht, 2017). The lack of awareness of the importance of research evidence and of the ways to access these resources also contributes to the gap between research and policy (Oliver et al., 2014; van de Goor et al., 2017). Other frequently reported barriers to evidence use in policymaking were poor access to timely, quality and relevant research evidence as well as limited collaboration between policymakers and researchers (Oliver et al., 2014; Uzochukwu et al., 2016; van de Goor et al., 2017). Given that research is only one of the many inputs that policymakers must consider in their decisions, it is no surprise that policymakers may disregard research evidence in favor of other sources of information (Uzochukwu et al., 2016).

The second category of barriers refers to policymakers’ ideology regarding research evidence and the presence of biases. Resistance to change and a lack of willingness by some policymakers to use research are two factors present when attempting to bridge the gap. This could be explained by certain sub-cultures of policymaking that grant little importance to evidence-informed solutions or by certain policymakers prioritizing their own opinion when research findings go against their expectations or against current policy (Koon et al., 2013; Uzochukwu et al., 2016). Policymakers tend to interpret new information based on their past attitudes and beliefs, much like the general population (Baekgaard et al., 2019). New information also does not seem to persuade policymakers whose beliefs are opposed to the evidence; rather, it increases the effect of attitudes on the interpretation of information by policymakers (Baekgaard et al., 2019). This highlights the importance of finding methods to disseminate tailored evidence in a way that policymakers will be open to receiving and considering (Cairney and Kwiatkowski, 2017).

The third category of barriers refers to the evidence produced not always being tailor-made for application in different contexts (Uzochukwu et al., 2016; WHO, 2004). Indeed, the political context is an undeniable factor in the use of evidence in policymaking. Political and institutional factors such as the level of state centralization and democratization, the influence of external organizations and donors, the organization of bureaucracies and the social norms and values can all affect the use of evidence in policy (Liverani et al., 2013). The elaboration of new policies implies making choices between different priorities while taking into consideration the limited resources available (Hawkins and Parkhurst, 2016). Research evidence can always be contested or balanced against the potential negative consequences of the intervention in another domain, such as a health-care intervention having larger consequences on the economy. Even if the effectiveness of an intervention can be proved beyond doubt, the issue might not be a priority for decision-makers, or it might involve unrealistic resources that decision-makers would rather grant to other issues. Policymakers need to stay aware of the political priorities identified and of the citizens to whom they need to justify their decisions. In this sense, politics and institutions are not a barrier to the use of research but rather the context to which evidence must respond (Cairney and Kwiatkowski, 2017; Hawkins and Parkhurst, 2016).

Summaries to prevent information overload

A great deal of research evidence has been developed but not enough of it is being disseminated in effective ways (Oliver and Boaz, 2019). Offering a summary of research results in an accessible format could facilitate policy discussion and ultimately improve the use of research and help policymakers with their decisions (Arcury et al., 2017; Cairney and Kwiatkowski, 2017). In this age of information overload, when too much information is provided, one can have trouble discerning what is most important and making a decision. It is not unlikely that policymakers will, after a brief glance, discard a large amount of the information given to them (Beynon et al., 2012; Yang et al., 2003). Decision-makers often criticize the length and overly dense contents of research documents (Dagenais and Ridde, 2018; Oliver et al., 2014). Hence, summaries of research results could increase the odds of decision-makers reading and therefore using the evidence proposed by researchers.

There are different methods to summarize research findings to provide facts and more detail for those involved in decision-making. For example, an infographic is an effective visual representation that explains information simply and quickly by using a combination of text and graphical symbols (Huang and Tan, 2007). Another type of research summary is the rapid review, a form of knowledge synthesis tailored and targeted to answer specific questions arising in “real world” policy or program environments (Moore et al., 2016; Wilson et al., 2015). They are oftentimes commissioned by people who would need scientific results to back up a decision. To produce the information in a timely manner, certain components of the systematic review process need to be simplified or omitted (Khangura et al., 2012). One study examining the use of 139 rapid reviews found that 89% of them had been used by commissioning agencies, on average up to three uses per review. Policymakers used those rapid reviews mostly to determine the details of a policy, to identify priorities and solutions for future action and communicate the information to stakeholders. However, rapid reviews might be susceptible to bias as a consequence of streamlining the systematic review process (Tricco et al., 2015). Also, policymakers may not always be able to commission a rapid review due to financial constraints.

Policy briefs as a knowledge transfer tool

Another approach to summarizing research, which is more focused on summarizing results for the use of policymakers, is the policy brief. There are multiple definitions of the policy brief (Dagenais and Ridde, 2018). However, in this article it will refer to a short document that uses graphics and text to summarize the key elements of one or multiple studies and provides a succinct explanation of a policy issue or problem, together with options and specific recommendations for addressing that issue or problem (Arcury et al., 2017; Keepnews, 2016).

The objective of a policy brief is to inform policymakers’ decisions or motivate action (Keepnews, 2016; Wong et al., 2017). Policy briefs can be placed on a continuum going from “neutral”, meaning objective and nuanced information, to “interventionist”, which puts forward solutions to the stated problem (Dagenais and Ridde, 2018). However, a policy brief is neither an advocacy statement nor an opinion piece. It is analytic in nature and aims to remain objective and fact-based, even if the evidence is persuasive (Wong et al., 2017). A policy brief should include contextual and structural factors as a way to apply locally what was initially more general evidence (Rajabi, 2012).

What is known about format preferences

The format of policy briefs is just as important as the content when it comes to evidence use by policymakers. Decision-makers like concise documents that can be quickly examined and interpreted (Rajabi, 2012). Evidence should be understandable and user-friendly, as well as visually appealing and easy to access (Beynon et al., 2012; Marquez et al., 2018; Oliver et al., 2014). Tailoring the message to the targeted audience and ensuring the timing is appropriate are also two important factors in research communication. Indeed, the wording and contextualization of findings can have a noticeable impact on the use of those results (Langer et al., 2016). Policymakers also prefer documents written by experts that are both simple and clear. Such documents must be restricted to the information of interest and propose recommendations for action (Dagenais and Ridde, 2018; Cairney and Oliver, 2020).

In the case of a workshop, sending the policy brief in advance facilitates the use of its information (Dagenais and Ridde, 2018). The results tend to be considered further since the information will already have been acknowledged prior to the workshop, leaving enough time during the workshop for it to be discussed with other stakeholders. These findings are in line with the report by Langer et al. (2016), which suggested that interventions using a combination of evidence use mechanisms, such as communication of the evidence and interactions between stakeholders, are associated with an increased probability of being successful.

Why policy briefs were chosen

In the interest of sharing key lessons from research more effectively, it is essential to improve communication tools aimed at decision-making environments (Oliver and Boaz, 2019). In recent years, policy briefs have seen an increase in use as a way to inform or influence decision-making (Tessier et al., 2019). The policy brief was the chosen scope in this study as it is the most commonly used term referring to information-packaging documents. Indeed, a study of the nomenclature used in information-packaging efforts to support evidence-informed policymaking in low to middle income countries determined that “policy brief” was the most frequently used label (39%) to describe such a document (Adam et al., 2014). However, there are many different terms related to such a synthesized document, including the technical note, policy note, evidence brief, evidence summary, research snapshot, etc. (Dagenais and Ridde, 2018). Although these different terms were searched, the term “policy briefs” will be used in this paper.

Furthermore, policy briefs are postulated as a less intimidating form of research synthesis for policymakers, as opposed to systematic reviews. They offer key information on a given subject based on a systematic yet limited search of the literature for the most important elements. The policy brief is a first step into evidence, leading to further questioning and reading rather than providing a definitive report of what works (Nutley et al., 2013).

How should evidence use be measured?

The idea that evidence should be used to inform decision-making, rather than to determine what should be done, leads to questioning the way that evidence use should be measured (Hawkins and Parkhurst, 2016). Good use of evidence does not necessarily lead to the recommendations being applied. A policymaker might read the evidence but ultimately decide not to apply the recommendations after taking into consideration a series of other factors such as the interests of other stakeholders and the limited resources available (Oliver and Boaz, 2019).

While the evidence may not have been used in decision-making, it was still used to inform (Hawkins and Parkhurst, 2016). The term evidence-based policy, implying that decision-making should depend on the body of research found, has been transitioning in the last few years to evidence-informed policy (Oxman et al., 2009; Nutley et al., 2019). This change reflects a new perspective that looks at research communication processes rather than solely at the results and impact of evidence use on decision-making. It sheds light on the current issues characterizing the know-do gap while also recognizing the political nature of the decision-making process.

Therefore, as a guide to evaluating the use of evidence by decision-makers, the instrumental, conceptual and persuasive use of policy briefs by decision-makers will be used. This approach allows for a more holistic view of evidence use and makes it possible to determine more specifically in which ways policymakers use research evidence.

The instrumental use refers to the direct use of the policy brief in the decision-making process. The conceptual use refers to the use of the policy brief to better understand a problem or a situation. The symbolic, or persuasive, use refers to the use of the policy brief to confirm or justify a decision or a choice that has already been made (Anderson et al., 1999). This framework is based on the idea that good use of evidence should not be judged solely on the decisions subsequently taken by policymakers, but also on the manner in which these decisions were taken and how the evidence was identified, interpreted and considered to better inform the parties involved (Hawkins and Parkhurst, 2016).

In policy contexts, instrumental use of research is relatively rare while conceptual and strategic use tend to be more common (Boaz et al., 2018). However, evidence on the use and effectiveness of policy briefs more specifically as a knowledge transfer tool remains unclear. Previous reviews, such as Petkovic et al. (2016), have examined the use of systematic review summaries in decision-making and policymakers’ perspectives on the summaries in terms of understanding, knowledge and beliefs. Other articles have studied the barriers and facilitators to policymakers using systematic review summaries (Oliver et al., 2014; Tricco et al., 2015). It remains unknown under which circumstances a policy brief elicits changes in attitude, knowledge and intention to use. Hence, this study will report what is known about whether policy briefs are considered effective by decision-makers, how policy briefs are used by decision-makers and which components of policy briefs were considered useful.

Therefore, the objectives of this study were to (1) identify evidence about the use of policy briefs and (2) identify which elements of content made for an effective policy brief. The first objective includes the perceived appreciation and the different types of use (instrumental, conceptual, persuasive) and the factors linked to use. The second objective includes the format, the context and the quality of the evidence.

Methods

Protocol

This study used the scoping review method by Arksey and O’Malley (2005). A scoping study is a synthesis and analysis of a broad range of research material aimed at quickly mapping the key concepts underpinning a wider research area that has not been reviewed comprehensively before and where several different study designs might be relevant (Arksey and O’Malley, 2005; Mays et al., 2001). This makes it possible to provide greater conceptual clarity on a specific topic (Davis et al., 2009). A scoping study, as opposed to other kinds of systematic reviews, is less likely to address a specific research question or to assess the quality of included studies. Scoping studies tend to address broader topics where many different study designs might be applicable (Arksey and O’Malley, 2005). They do not reject studies based on their research designs.

This method was chosen to assess the breadth of knowledge available on the topic of short documents synthesizing research results and their usage by policymakers. Scoping reviews allow a greater assessment of the extent of the current research literature since the inclusion and exclusion criteria are not exhaustive.

Inclusion and exclusion criteria

Population

The policy brief must have been presented to the target users: policymakers. A policymaker refers to a person responsible for devising regulations and laws. In this paper, the term policymaker will be used along with the term decision-maker, which is characterized more broadly as any entity, such as health system managers, that could benefit from empirical research to make a decision. For this paper, we did not differentiate between types or levels of policymakers. Stakeholders involved in the decision-making process related to a large jurisdiction or organization for which policy briefs were provided were included. For example, papers were rejected if the participants were making decisions for an individual person or a patient. Articles were accepted if other user types were included as participants, as long as policymakers or decision-makers were included as users. This was decided because many papers included a variety of participants, and it was assumed that if the feedback given by policymakers had differed from that of other decision-makers, this would have been explained in the article.

Type of document

Articles were included when decision-makers had to assess a short document synthesizing research results. Given that many different terms are used to describe short research syntheses, the articles were identified using terms such as policy briefs, evidence summaries, evidence briefs and plain language summaries. The full list can be found in Table 1.

Evaluations of systematic reviews were rejected as they are often written using technical language and can be lengthy (Moat et al., 2014). Furthermore, past research has evaluated the use and effectiveness of systematic reviews in policy. Given that this paper sought to evaluate short synthesized documents as a technical tool for knowledge transfer, any form of lengthy reports or reviews were excluded.

Rapid reviews were rejected due to their commissioned nature and the large breadth of literature available on their subject. Rapid reviews and commissioned research were excluded because they are different in a fundamental aspect: they are made as a direct response to a request from decision-makers. Since these papers are commissioned, there is already an intended use of these papers by decision-makers, as opposed to the use of non-commissioned papers. The expectations and motivations of these decision-makers in using these research results will be different. For these reasons, rapid reviews and commissioned research were excluded.

Articles were mostly excluded for being only examples of policy briefs, for not testing empirically the effectiveness of a policy brief, for testing another type of knowledge transfer tool (e.g., deliberative dialogs) or for not having decision-makers as participants.

Type of study

All empirical studies were included, whether qualitative, quantitative or mixed-methods. Any type of literature review, such as a systematic review, was excluded to avoid duplication of studies and to allow an equal representation of all included studies. This avoided comparing the results of a systematic review with the results of a single case study. Systematic reviews were, however, scanned for any study meeting the criteria to be added to the scoping review.

Outcomes

Empirical studies were eligible based on the implementation of a policy brief and the assessment of its use by decision-makers. Outcomes of interest were the use and effectiveness of policy briefs according to decision-makers, as well as the preferred type of content and format of such documents. These results were either reported directly by the decision-makers or through observations by the researchers. Articles were included if the policy brief was reviewed in any sort of way, whether through the participants giving their opinion on the policy brief or any commentary on the way the policy brief had been acknowledged. Articles were not excluded for not assessing a specific type of use. Examples of policy briefs and articles limited to the creation process of a policy brief and articles without any evaluations of the use of policy brief were not included, as no empirical evidence was used to back up what the authors considered made a policy brief effective.

Search strategy

To identify potentially eligible studies, literature searches were conducted using PsycNET, PubMed, Web of Science and Embase from February 2018 to May 2019 in an iterative process. The search strategy was conceived in collaboration with a specialist in knowledge and information management. The scoping review’s objectives were discussed until four main concepts were identified. Words related to the four main concepts of the scoping study were searched with the APA Thesaurus, these concepts being: (1) policy brief, (2) use, (3) knowledge transfer and (4) policymaker. The first term was used to find articles about the kind of summarized paper being evaluated. The second term was used to find articles discussing the ways these papers were used or discussing their effectiveness. Without this search term, many of the articles retrieved simply mentioned policy briefs without evaluating them. The third term referred to the policy brief’s intent and to the large domain of knowledge transfer, to obtain more precise search results in this field. The fourth search term allowed for the inclusion of the desired participants.

Different keywords for the concept of policy brief (any short document summarizing research results) were found through the literature and were also created using combinations of multiple keywords (e.g., research brief and evidence summary were combined to create research summary). The different concepts were then combined in the databases’ search engines until a point of saturation was reached and no new pertinent articles were found.
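As an illustration only, the combination of the four concepts could be sketched as a Boolean query builder. The sketch assumes that synonyms within a concept are joined with OR and that the concepts are joined with AND, which the text does not state explicitly. The function name `build_query` is hypothetical, and the synonym lists are a small illustrative subset drawn from terms mentioned in this article, not the full list in Table 1.

```python
def build_query(concepts):
    """Join synonyms with OR inside a concept, and concepts with AND (assumed logic)."""
    groups = []
    for terms in concepts.values():
        # Quote multi-word terms so they are treated as phrases.
        quoted = [f'"{t}"' if " " in t else t for t in terms]
        groups.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(groups)


# Illustrative subset of keywords only; the actual lists are in Table 1.
concepts = {
    "policy brief": ["policy brief", "evidence summary", "evidence brief",
                     "plain language summary"],
    "use": ["use", "effectiveness"],
    "knowledge transfer": ["knowledge transfer"],
    "policymaker": ["policymaker", "decision-maker"],
}

query = build_query(concepts)
print(query)
```

Each database has its own field tags and truncation syntax, so a string like this would need to be adapted before being run in PsycNET, PubMed, Web of Science or Embase.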

Study selection

Following the removal of duplicates, the articles were selected by analyzing the titles. If they seemed pertinent, the abstracts were then read. The remaining articles were verified by two authors to assess their eligibility, were read in their entirety and possibly eliminated if they did not respect the established criteria.

Data extraction

Once the articles were selected, summary sheets were created to extract data systematically. The factors recorded were the intended audience of the paper, the journal of publication, the objectives of the research, the research questions, a summary of the introduction, the variables researched, the type of research synthesis used in the study and a description of the document, information on sampling (size, response rate, type of participants, participants’ country, sampling method), the type of users reading the document (e.g., practitioners, policymakers, consumers), a description of the experimentation, the research design, the main results found and the limits identified in the study.
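The summary sheet described above can be thought of as a fixed record with one entry per included article. The following is a minimal sketch under that assumption; the class name, field names and the `missing_fields` helper are all hypothetical and do not reproduce the authors' actual extraction form. The example values at the end are placeholders, not data from any included study.

```python
from dataclasses import dataclass, field, fields
from typing import List, Optional


@dataclass
class SummarySheet:
    """One extraction record per included article (hypothetical field names)."""
    intended_audience: str = ""
    journal: str = ""
    objectives: str = ""
    research_questions: str = ""
    introduction_summary: str = ""
    variables: str = ""
    synthesis_type: str = ""
    document_description: str = ""
    sample_size: Optional[int] = None
    response_rate: Optional[float] = None
    participant_types: List[str] = field(default_factory=list)
    countries: List[str] = field(default_factory=list)
    sampling_method: str = ""
    user_types: List[str] = field(default_factory=list)
    procedure: str = ""
    research_design: str = ""
    main_results: str = ""
    limitations: str = ""


def missing_fields(sheet: SummarySheet) -> List[str]:
    """Return the names of fields that have not been filled in yet."""
    empty = (None, "", [])
    return [f.name for f in fields(sheet) if getattr(sheet, f.name) in empty]


# Placeholder values for illustration only.
sheet = SummarySheet(research_design="case study", user_types=["policymakers"])
print(missing_fields(sheet))
```

Keeping every sheet in the same structure makes it straightforward to check for gaps before the analysis step and to compare studies field by field.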

Data analyses

The data compiled in the summary sheets were separated according to the two objectives of this study, which are (1) the evidence of policy brief use and (2) the elements of content that contributed to their effectiveness. Further themes were outlined based on the results, which formed the main findings. When more than one study had the same finding, the additional sources were indicated. Similarly, any contradicting findings were also noted.

Results

Literature search

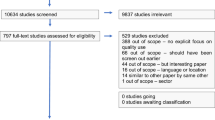

A total of 4904 unique records were retrieved, of which 215 were screened on full text. In total, 22 articles were included in this scoping study. The number of studies at each step of the literature review process is shown in Fig. 1.

Study characteristics

The year of publication ranged from 2007 to 2018, with 50% of the articles having been published in the last 5 years.

The studies spanned 35 countries. Studies were most commonly conducted in Canada (n = 5), followed by Burkina Faso (n = 2), the United States (n = 2), the Netherlands (n = 1), Wales (n = 1), Thailand (n = 1), Nigeria (n = 1), Uganda (n = 1), Kenya (n = 1) and Israel (n = 1). Six studies were conducted in multiple countries (see Footnote 1). Of the included studies, 12 took place in a total of 23 low to middle income countries, according to the World Bank Classification (2019).

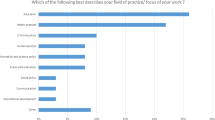

Case studies were the most common design (59%), followed by descriptive studies (27%) and randomized controlled trials (14%). Five studies used quantitative research methods, eight used qualitative methods and nine used mixed methods. For more details, Table 2 presents an overview of the characteristics of the selected studies.

Primary objective: use of policy briefs

Appreciation of policy briefs

The perception of decision-makers regarding policy briefs is a starting point for evaluating whether more work should be put into their format to meet the needs of decision-makers or whether it should go into communicating the importance of evidence-informed methods to decision-makers.

Of all the eligible studies, 19 (86%) reported that decision-makers found policy briefs useful or generally appreciated them as a tool for knowledge transfer. Two studies (Kilpatrick et al., 2015; Orem et al., 2012) did not report on the perceived usefulness or appreciation of such a document, and in one study (de Goede et al., 2012) policy actors declared that they found the document of no importance and neglected it during the policy process. Many participants reported throughout the various studies that taking into consideration the available evidence would help improve decision-making (El-Jardali et al., 2014; Marquez et al., 2018; Vogel et al., 2013).

Types of use of policy briefs

The use of the policy brief in the decision-making process was assessed through its instrumental use, conceptual use and persuasive use.

In regard to instrumental use, many policymakers claimed to have used evidence to inform their decision-making, sometimes even going as far as actively seeking out policy briefs and improving their ability to assess and use research evidence (El-Jardali et al., 2014; Jones and Walsh, 2008). Policy briefs often seem to be used as a starting point for deliberations on policies and to facilitate discussions with policy actors on definitions and solutions to multiple problems (Ellen et al., 2016; de Goede et al., 2012; Jones and Walsh, 2008; Suter and Armitage, 2011; Ti et al., 2017). Although policy briefs have helped in identifying problems and solutions in their communities, policymakers reported also relying on other sources of information, such as other literature, colleagues and their own knowledge (de Goede et al., 2012; Suter and Armitage, 2011). When it comes to putting recommendations into action, policymakers may be more inclined to report intentions to take into consideration and apply the recommendations when the solutions offered require little effort or co-operation from others (Beynon et al., 2012).

Policy briefs are most commonly used conceptually, which is no surprise given that it is the type of use requiring the least commitment. They allow decision-makers to better understand the different facets of a situation, to inform policymaking and to raise awareness on certain issues (Campbell et al., 2009; El-Jardali et al., 2014; Ellen et al., 2016; de Goede et al., 2012; Suter and Armitage, 2011). A better comprehension of a situation can also lead to a change of beliefs in certain circumstances. Beynon et al. (2012) found that reading a policy brief led to creating evidence-accurate beliefs more commonly amongst those with no prior opinion. The policy brief was not as effective in changing the beliefs of respondents who had an opinion on the issue before reading the brief.

Few studies reported the persuasive use of policy briefs. One study reported policy briefs being used to support prior beliefs such as good timing for specific policies and to allow the progression of information before publication in order to make sure it is aligned with national health policies (de Goede et al., 2012). Policy briefs can be seen as an effective tool for advocacy when the objective is to convince other stakeholders of a position using evidence-based research (Ti et al., 2017). However, one study had policymakers claim that although research needs to be used more, rarely will they use research to inform policy agendas or to evaluate the impacts of a policy (Campbell et al., 2009). Thus, it remains unclear whether policy briefs are often used in a persuasive way.

Factors linked to use

Decision-makers are more inclined to report intentions and actual follow-up actions that require little effort or co-operation from others although globally, women are less likely to claim that they will do follow-up actions than men (Beynon et al., 2012). The same study reported that a higher level of self-perceived influence predicts a higher level of influence and those readers are more inclined to act. Furthermore, decision-makers were most likely to use policy briefs if they were directly targeted by the subject of the evidence (Brownson et al., 2011).

Dissemination strategies are specific methods of distributing information to key parties with the intention of having the reader process that information. A policy brief could be very well written and have all the necessary information but if it is not properly shared with the intended audience, it might not be read. One effective dissemination strategy appreciated by policymakers is to send the policy briefs a few weeks before a workshop (Mc Sween-Cadieux et al., 2018) as well as an individualized email in advance of the policy brief (Ellen et al., 2016; Kilpatrick et al., 2015). Asking policymakers to be a part of the presentation of the briefs and to arrange a follow-up meeting to receive feedback on the documents was also viewed favorably (Kilpatrick et al., 2015).

Secondary objective: elements of content contributing to the effectiveness of policy briefs

Format

Decision-makers often report that the language of researchers is too complex, inaccessible, lacking in clarity and full of overly technical terms (Marquez et al., 2018; Mc Sween-Cadieux et al., 2017; Rosenbaum et al., 2011). They prefer the use of simple and jargon-free language in clear, short sentences (Ellen et al., 2014; Jones and Walsh, 2008; Kilpatrick et al., 2015; Schmidt et al., 2014; Vogel et al., 2013). Some decision-makers have reported having difficulty understanding the objectives in the policy brief and finding the document too long (Jones and Walsh, 2008; Marquez et al., 2018; Mc Sween-Cadieux et al., 2017). They appreciate when the emphasis is on the advantages of the policy brief and when it is constructed around a key message that draws the reader in and conveys the critical details. Multiple articles recommended that policy briefs not exceed one to two pages, with references to more detailed findings so the reader can investigate further (Dobbins et al., 2007; Ellen et al., 2014; Kilpatrick et al., 2015; Marquez et al., 2018; Suter and Armitage, 2011).

Furthermore, policy briefs need to be visually engaging. Since policymakers spend on average 30 to 60 min reading information about a particular issue, it is a challenge to present the information in such a way that they choose to read the policy brief (Jones and Walsh, 2008). Information can be displayed in different ways to be more memorable such as charts, bullets, graphs and photos (Ellen et al., 2014; Marquez et al., 2018; Mc Sween-Cadieux et al., 2018). One study reported that an overly esthetic document may seem expensive to produce, which can lead policymakers to wonder why funding was diverted from programs to the production of policy briefs (Schmidt et al., 2014). Another study found that “graded-entry” formats, meaning a short interpretation of the main findings and conclusions, combined with a short and contextually framed narrative report, followed by the full systematic review, were associated with a higher score for clarity and accessibility of information compared to systematic reviews alone (Opiyo et al., 2013). However, the exact format of the document does not seem to be as important for policymakers as its clarity. Indeed, policymakers do not appear to have a preference between electronic and hard copy formats (Dobbins et al., 2007; Kilpatrick et al., 2015; Marquez et al., 2018). This is also shown by another case study, where policymakers preferred the longest version of a policy brief, one that was easier to scan. This suggests that a longer text does not necessarily doom a policy brief, as long as it is written in an easily scannable way with small chunks of information dispersed through the document (Ellen et al., 2014).

Context-related

There is a preference for local information over global information by decision-makers (Brownson et al., 2011; Jones and Walsh, 2008; Orem et al., 2012). It allows for local council members to identify relevant issues in their communities as well as responses tailored to the socio-political nature of the issue, such as cultural values, historical-political sensitivities and election timing (de Goede et al., 2012; Jones and Walsh, 2008). Authors of policy briefs, depending on the study, must consider the latest insights as well as the complex power relations underpinning the policy process when writing their recommendations. The issue of the policy brief has a significant impact on whether it can influence the views of decision-makers. To have a better grasp on the relevance of the topic, policymakers want to have the data put into context instead of simply being presented with facts and statistics (Schmidt et al., 2014). Furthermore, such research needs to be transmitted in a time-sensitive manner to remain relevant (Ellen et al., 2016; Marquez et al., 2018; Orem et al., 2012; Rosenbaum et al., 2011; Uneke et al., 2015).

Given the time pressures on policymakers to make rapid and impactful decisions, the use of actionable, evidence-informed recommendations acknowledging the specific situation is much appreciated by policymakers. Decision-makers wish for recommendations that are realistic on an economic and strategic level. They dislike a policy brief that is too general and without any propositions of concrete action (Mc Sween-Cadieux et al., 2017; de Goede et al., 2012). Indeed, many policymakers claim that not concluding with recommendations is the least helpful feature of policy briefs (Moat et al., 2014). They prefer that the document provide more guidance on which actions should be taken and the steps to take as well as the possible implementations (Marquez et al., 2018; Mc Sween-Cadieux et al., 2017). However, it can also be a barrier to use if the content of the policy brief is not in line with the policymaker’s belief system (de Goede et al., 2012).

Quality evidence

Quality, compelling evidence must be provided to facilitate the use of policy briefs by decision-makers (Jones and Walsh, 2008). Therefore, it is necessary to know what kind of arguments are needed to promote research in the decision-making process. Although information about the situation and its context is appreciated, policymakers prefer having some guidance on what to do with such information afterwards. Some policymakers have reported a lack of details on the strategies to adopt, the tools to use and the processes required that would otherwise lead to a successful integration of the ideas proposed in the policy brief (Marquez et al., 2018; Suter and Armitage, 2011). There is a particular interest in detailed information about local applicability or costs, outcome measurements, broader framing of the research (Ellen et al., 2014; Rosenbaum et al., 2011), clear statements of the implication for practice from health service researchers (Dobbins et al., 2007), and information about patient safety, effectiveness and cost savings (Kilpatrick et al., 2015).

On the other hand, less emphasis should be put on information steering away from important results. One study showed that researchers should more often than not forego acknowledgements, forest plot diagrams, conflicts of interest, methods, risk of bias, study characteristics, interventions that showed no significant effect and statistical information (e.g., confidence interval) (Marquez et al., 2018). Surprisingly, policymakers tend to prefer data-centered arguments rather than story-based arguments, the former containing data percentages and the latter containing personal stories (Brownson et al., 2011; Schmidt et al., 2014), hinting that the use of emotions might not be the most effective method in convincing policymakers to adopt research into their decision-making. However, a certain subjectivity is appreciated. Indeed, policymakers value researchers’ opinions about the policy implications of their findings (Jones and Walsh, 2008). Beynon et al. (2012) found that policy briefs that include an opinion piece acquire significance over time, possibly indicating that the effect of the opinion piece trickles in slowly.

Legitimacy, however, does not emerge solely from good evidence and arguments, but also from the source of those arguments, more specifically the authors involved. Policymakers specified that they pay attention to the authors of policy briefs and that it influences their acceptance of the evidence and arguments presented (Jones and Walsh, 2008). Authoritative messages were considered a key element of an effective policy brief. This is confirmed by Beynon et al. (2012), who found a clear authority effect on readers’ intentions to send the policy brief to someone else. Readers were more likely to share briefs with a recommendation from an authoritative figure rather than a recommendation from an unnamed researcher. It can be considered an obstacle to the use of the document if the latter is not perceived as coming from a credible source (de Goede et al., 2012). Authoritative institutions, research groups and experts have been identified as the best mediators between researchers and decision-makers (Jones and Walsh, 2008).

Discussion

The objectives of this study were to identify what the literature has concluded about the use of policy briefs and which elements made for an effective one.

The results showed that policy briefs were considered generally useful, easy to understand and credible, regardless of the group, the issue, the features of the brief or the country tested. Different types of use were assessed, notably the instrumental, conceptual and persuasive use. Many policymakers claimed to use the evidence given in their decision-making process, some even reporting an increased demand for knowledge transfer products by policymakers. This fact and the surge of knowledge transfer literature in the past few years might suggest that policy briefs and other short summaries of research could become a more commonly used tool in the next years for the decision-making process in policy. Given that policymakers oftentimes rely on multiple sources of information and that policy briefs facilitated discussions between different actors, future interventions should aim to combine a policy brief with other mechanisms of evidence use (Langer et al., 2016).

One factor linked to greater use of policy briefs was the dissemination strategy. Arranging a meeting with policymakers following the reading of the document to receive feedback is a good strategy to get the policymakers to read attentively and consider the content of the policy brief (Kilpatrick et al., 2015). Greater involvement of policymakers seems to encourage the use of the policy brief. This supports the findings of Langer et al. (2016) concerning interaction as a mechanism to promote evidence use. Indeed, improved attitudes towards evidence were found after holding joint discussions with other decision-makers who were motivated to apply the evidence. Increasing motivation to use research evidence through different techniques such as the framing and tailoring of the evidence, the development of policymakers’ skills in interpreting evidence and better access to the evidence could lead to an increase in evidence-informed decision-making (Langer et al., 2016). Instead of working independently, it has often been proposed that researchers and policymakers should work in collaboration to increase the pertinence and promote the use of evidence (Gagliardi et al., 2015; Langer et al., 2016). The collaboration between policymakers and researchers would allow researchers to better understand policymakers’ needs and the contexts in which the evidence is used, thus providing a well-tailored version of the document for a greater use for those in need of evidence-informed results (Boaz et al., 2018; Langer et al., 2016). However, multiple barriers to collaboration between researchers and decision-makers are present, such as differing needs and priorities, a lack of skill or understanding of the process and attitudes towards research (Gagliardi et al., 2015). Furthermore, different dilemmas come into play when considering how much academics should engage in policymaking. Although recommendations are often made for researchers to invest time into building alliances with policymakers and getting to know the political context, there is no guarantee that these efforts will lead to the expected results. Influencing policy through evidence advocacy requires engaging in different networks and seeing windows of opportunity, which may blur the line between scientists and policymakers (Cairney and Oliver, 2020). To remain neutral, researchers should aim to listen to the needs of policymakers and inform them of new evidence, rather than striving to have policymakers use the evidence in a specific way.

When policymakers considered the policy brief of little importance for their decision-making, it could be partially explained by the fact that the document shared was not aligned with the groups’ belief systems (de Goede et al., 2012). Similarly, Beynon et al. (2012) found that policy briefs are less effective in changing the opinions of respondents who held previous beliefs than in forging an opinion on a new topic. Being presented with information opposite to one’s belief can be uncomfortable. This cognitive dissonance can influence the level of acceptance of new information, which can affect its use. To return to a feeling of consistency with their own thoughts, policymakers could easily discard a policy brief opposing their beliefs. The use of policy briefs is, therefore, determined largely by the type of audience and whether they agree with the content. To improve acceptance, the policy brief should strive to be aligned with the needs of policymakers. This implies that when creating and disseminating the evidence, researchers must consider their audience. Therefore, there is no “one-size-fits-all” and a better solution to improve the use of research is to communicate information based on the type of policymaker (Brownson et al., 2011; Jones and Walsh, 2008).

These results should lead researchers to first determine who the target audience is and how the format of the policy brief can be made attractive to them. Different versions of policy briefs can be made according to the different needs, priorities and positions of varying policy actors (Jones and Walsh, 2008). Furthermore, people directly targeted by the content of the evidence are more likely to read the policy brief. In the knowledge to action cycle, it seems essential to have a clear picture of who will be reading the policy brief and what kind of information to provide as a way to better reach them.

The lack of recommendations was cited as being the least helpful feature of evidence briefs (Moat et al., 2014). This, along with other studies claiming the importance of clear recommendations, could suggest that policymakers prefer an advocacy brief rather than a neutral brief (de Goede et al., 2012; Marquez et al., 2018; Mc Sween-Cadieux et al., 2017). However, this raises the question of impartiality in research (Cairney and Oliver, 2017). The purpose of policy briefs and generally of knowledge transfer is to gather the best evidence and to disseminate it in a way that ensures it has an impact. Science is seen as neutral and providing only the facts, yet policymakers ask for precise recommendations and opinions. This seeming contradiction raises the question of whether researchers should offer their opinion and how much co-production with policymakers they should engage in to align the results with the policymakers’ agenda (Cairney and Oliver, 2017).

The credibility of the messenger is also an important factor in the decision-maker’s use of the document. Briefs were more likely to be shared when associated with an authoritative figure than with an unnamed research fellow. This authority effect may be due to the brief becoming more memorable when associated with an authoritative figure, which leads to a greater likelihood for the policymakers to share that message with other people (Beynon et al., 2012). Another possible explanation is the trust associated with authority. The results have shown that policymakers tend to forego the information about conflicts of interest, methodology, risks of bias and statistics, in other words, the details that would show the legitimacy of the data. Instead, they prefer going straight to the results and recommendations. This could suggest that policymakers would prefer to read a paper coming from a reputable source that they can already trust, so they can focus on analyzing the content rather than its legitimacy. Thus, the partnering between authoritative institutions, researchers and policymakers could help not only to better target the needs of policymakers but also to improve the legitimacy of the message communicated through the brief, in an effort to help policymakers focus more on the information being shared (Jones and Walsh, 2008).

Strengths and limitations

The use of all the similar terms related to policy briefs in the search strategy allowed for a wide search net during the literature search process, leading to finding more studies. Another strength was the framework assessing both the types of use and the format of the policy brief preferred by policymakers, which allowed a better understanding of the place policy briefs currently have in policymaking as well as an explanation of different content factors related to its use. Although knowledge transfer is becoming a pillar in organizations across the globe, there remains a gap in the use of research in decision-making. This review will enable researchers to better adapt the content of their research to their audience when writing a policy brief by adjusting the type of information that should be included in the document. One limitation of the present scoping study is its susceptibility to sampling bias. Although the articles assessed for eligibility were verified by two authors, the initial identification of records through database searching was carried out by a single author. The references of the selected articles were not searched systematically to find additional articles. This scoping study also does not assess the quality of the selected studies and evaluations since its objective is to map the current literature on a given subject.

Although the quality of the chosen articles was not assessed, it is possible to notice a few limitations in their methods, which can be found in Table 2. There is also something to be said about publication bias, meaning that papers with positive results tend to be published in greater proportion than papers failing to prove their hypotheses.

Furthermore, few studies determined the actual use or effect of the policy brief in decision-making but instead assessed self-reported use of the policy brief or other outcomes, such as perceived credibility or relevance of those briefs, since these may affect the likelihood of research use in decision-making. Few studies reported the persuasive use of policy briefs. This could be explained by the reticence of participants to report such information due to the implication that they would use research results only to further their agenda rather than using them to make better decisions, or simply because researchers did not question the participants on such matters. Although the inclusion criteria of this study were fairly broad, it is worth noting that the number of selected articles was fairly low, with only 22 studies included. Further research on persuasive use would need to assess researchers’ observations rather than self-reported use by policymakers. Since the current research has shown that policy briefs could be more useful in creating or reinforcing a belief, future studies could assess the actual use of policy briefs in decision-making.

Conclusion

The findings indicate that while policy briefs are generally valued by decision-makers, it is still necessary for these documents to be written with the end reader in mind to meet their needs. Indeed, an appreciation for having a synthesized research document does not necessarily translate into its use, although it is a good first step given that it shows an openness among decision-makers to being informed by research. Decision-making is a complex process, of which the policy brief can be one step to better inform the decision-makers on the matter at hand. A policy brief is not a one-size-fits-all solution to all policy-making processes. Evidence can be used to inform but it might not be able to, on its own, fix conflicts between the varying interests, ideas and values circulating in the process of policymaking (Hawkins and Parkhurst, 2016). Since credibility is an important factor for decision-makers, researchers will have to take into consideration the context, the authors associated with writing policy briefs and the actors that will play a lead role in promoting better communication between the different stakeholders.

Given that the current literature on the use of policy briefs is not extensive, more research needs to be done on the use of such documents by policymakers. Future studies should look into the ways researchers can take the context into consideration when writing a policy brief. It would also be interesting to investigate whether different formats are preferred by policymakers intending to use evidence in different ways. Furthermore, there are other types of summarized documents that were excluded from this scoping review such as rapid reviews, or even different formats such as infographics. The use of commissioned summaries could be an interesting avenue to explore, as the demand for these types of documents from policymakers would ensure their use in a significant manner.

Data availability

All data analyzed in this study are cited in this article and available in the public domain.

Notes

The studies were conducted in Zambia (n = 3), Uganda (n = 3), South Africa (n = 2), Argentina (n = 2), China (n = 2), Cameroon (n = 2), Cambodia (n = 1), Norway (n = 2), Ethiopia (n = 2), India (n = 1), Ghana (n = 1), Nicaragua (n = 1), Bolivia (n = 1), Brazil (n = 1), England (n = 1), Wales (n = 1), Finland (n = 1), Germany (n = 1), Burkina Faso (n = 1), Italy (n = 1), Scotland (n = 1), Spain (n = 1), Mozambique (n = 1), Bangladesh (n = 1), Nigeria (n = 1), Central African Republic (n = 1), Sudan (n = 1), Colombia (n = 1) and Australia (n = 1).

References

Adam T, Moat KA, Ghaffar A, Lavis JN (2014) Towards a better understanding of the nomenclature used in information-packaging efforts to support evidence-informed policymaking in low-and middle-income countries. Implement Sci 9(1):67

Anderson M, Cosby J, Swan B, Moore H, Broekhoven M (1999) The use of research in local health service agencies. Soc Sci Med 49(8):1007–1019

Arcury TA, Wiggins MF, Brooke C, Jensen A, Summers P, Mora DC, Quandt SA (2017) Using “policy briefs” to present scientific results of CBPR: farmworkers in North Carolina. Prog Commun Health Partnerships: Res Educ Action 11(2):137

Arksey H, O’Malley L (2005) Scoping studies: towards a methodological framework. Int J Soc Res Methodol 8(1):19–32

Baekgaard M, Christensen J, Dahlmann CM, Mathiasen A, Petersen NBG (2019) The role of evidence in politics: motivated reasoning and persuasion among politicians. Br J Polit Sci 49(3):1117–1140

Beynon P, Chapoy C, Gaarder M, Masset E (2012) What difference does a policy brief make? Full report of an IDS, 3ie, Norad study: Institute of Development Studies and the International Initiative for Impact Evaluation (3ie)

Boaz A, Hanney S, Borst R, O’Shea A, Kok M (2018) How to engage stakeholders in research: design principles to support improvement. Health Res Policy Syst 16(1):60

Brownson RC et al. (2011) Communicating evidence-based information on cancer prevention to state-level policy makers. J Natl Cancer Inst 103(4):306–316

Cairney P, Heikkila T, Wood M (2019) Making policy in a complex world (1st edn.). Cambridge University Press

Cairney P, Kwiatkowski R (2017) How to communicate effectively with policymakers: Combine insights from psychology and policy studies. Palgrave Commun 3(1):37

Cairney P, Oliver K (2017) Evidence-based policymaking is not like evidence-based medicine, so how far should you go to bridge the divide between evidence and policy? Health Res Policy Syst 15:1

Cairney P, Oliver K (2020) How should academics engage in policymaking to achieve impact? Polit Stud Rev 18(2):228–244

Cambridge University Press (2019) Definition of a policymaker. https://dictionary.cambridge.org. Accessed 2019

Campbell DM et al. (2009) Increasing the use of evidence in health policy: Practice and views of policy makers and researchers. Aust N Z Health Policy 6(21):1–11

Dagenais C, Ridde V (2018) Policy brief (PB) as a knowledge transfer tool: To “make a splash”, your PB must first be read. Gaceta Sanitaria

Davis K, Drey N, Gould D (2009) What are scoping studies? A review of the nursing literature. Int J Nurs Stud 46(10):1386–1400

Dobbins M, Jack S, Thomas H, Kothari A (2007) Public Health Decision-Makers’ informational needs and preferences for receiving research evidence. Worldview Evid-Based Nurs 4(3):156–163

El-Jardali F, Lavis J, Moat K, Pantoja T, Ataya N (2014) Capturing lessons learned from evidence-to-policy initiatives through structured reflection. Health Res Policy Syst 12(1):1–15

Ellen M et al. (2014) Health system decision makers’ feedback on summaries and tools supporting the use of systematic reviews: A qualitative study. Evid Policy 10(3):337–359

Ellen ME, Horowitz E, Vaknin S, Lavis JN (2016) Views of health system policymakers on the role of research in health policymaking in Israel. Israel J Health Policy Res 5:24

de Goede J, Putters K, Van Oers H (2012) Utilization of epidemiological research for the development of local public health policy in the Netherlands: a case study approach. Soc Sci Med 74(5):707–714

Gagliardi AR, Berta W, Kothari A, Boyko J, Urquhart R (2015) Integrated knowledge translation (IKT) in health care: a scoping review. Implement Sci 11(1):38

Hawkins B, Parkhurst J (2016) The ‘good governance’ of evidence in health policy. Evid Policy 12(4):575–592

Huang W, Tan CL (2007) A system for understanding imaged infographics and its applications. In Proceedings of the 2007 ACM symposium on Document engineering, Association for Computing Machinery, Seoul, pp 9–18

Jones N, Walsh C (2008) Policy briefs as a communication tool for development research. ODI Background Note. ODI, London

Keepnews DM (2016) Developing a policy brief. Policy Polit Nurs Pract 17(2):61–65

Khangura S, Konnyu K, Cushman R, Grimshaw J, Moher D (2012) Evidence summaries: the evolution of a rapid review approach. Syst Rev 1:10

Kilpatrick K et al. (2015) The development of evidence briefs to transfer knowledge about advanced practice nursing roles to providers, policymakers and administrators. Nurs Leadership (Toronto, Ont.) 28(1):11–23

Koon AD, Rao KD, Tran NT, Abdul G (2013) Embedding health policy and systems research into decision-making processes in low- and middle-income countries. Health Res Policy Syst 18:9

Langer L, Tripney J, Gough D (2016) The science of using science: researching the use of research evidence in decision-making. Social Science Research Unit, & Evidence for Policy and Practice Information and Co-ordinating Centre

Lavis JN, Posada FB, Haines A, Osei E (2004) Use of research to inform public policymaking. Lancet 364(9445):1615–1621

Lavis JN et al. (2012) Guidance for evidence-informed policies about health systems: linking guidance development to policy development. PLoS Med 9:3

Liverani M, Hawkins B, Parkhurst JO (2013) Political and institutional influences on the use of evidence in public health policy. A systematic review. PLoS ONE 8(10):e77404

Marquez C, Johnson AM, Jassemi S, Park J, Moore JE, Blaine C, Straus SE (2018) Enhancing the uptake of systematic reviews of effects: What is the best format for health care managers and policy-makers? A mixed-methods study. Implement Sci 13(1):84

Mays N, Roberts E, Popay J (2001) Synthesising research evidence. In: Fulop N, Allen P, Clarke A, Black N (eds) Studying the organisation and delivery of health services: research methods. Routledge, London, pp. 188–220

Mays N, Pope C, Popay J (2005) Systematically reviewing qualitative and quantitative evidence to inform management and policy-making in the health field. J Health Serv Res Policy 10(Suppl 1):6–20

Mc Sween-Cadieux E, Dagenais C, Somé PA, Ridde V (2017) Research dissemination workshops: Observations and implications based on an experience in Burkina Faso. Health Res Policy Syst 15(1):1–12

Mc Sween-Cadieux E, Dagenais C, Ridde V (2018) A deliberative dialogue as a knowledge translation strategy on road traffic injuries in Burkina Faso: a mixed-method evaluation. Health Res Policy Syst 16(1):1–13

Moat KA, Lavis JN, Clancy SJ, El-Jardali F, Pantoja T (2014) Evidence briefs and deliberative dialogues: perceptions and intentions to act on what was learnt. Bull World Health Organiz 20:8

Moore GM, Redman S, Turner T, Haines M (2016) Rapid reviews in health policy: a study of intended use in the New South Wales’ Evidence Check programme. Evid Policy 12(46):505–519

Nutley S, Boaz A, Davies H, Fraser A (2019) New development: What works now? Continuity and change in the use of evidence to improve public policy and service delivery. Public Money Manage 39(4):310–6

Nutley S, Powell AE, Davies HTO (2013) What counts as good evidence, Alliance for Useful Evidence, London

Oliver K, Boaz A (2019) Transforming evidence for policy and practice: creating space for new conversations. Palgrave Commun 5(1):1

Oliver K, Cairney P (2019) The dos and don’ts of influencing policy: a systematic review of advice to academics. Palgrave Commun 5(1):21

Oliver KA, de Vocht F (2017) Defining ‘evidence’ in public health: a survey of policymakers’ uses and preferences. Eur J Public Health 1(27 May):112–7

Oliver K, Innvar S, Lorenc T, Woodman J, Thomas J (2014) A systematic review of barriers to and facilitators of the use of evidence by policymakers. BMC Health Serv Res 14(1):2

Opiyo N, Shepperd S, Musila N, Allen E, Nyamai R, Fretheim A, English M (2013) Comparison of alternative evidence summary and presentation formats in clinical guideline development: a mixed-method study. PLoS ONE 8:1

Orandi BJ, Locke JE (2020) Engaging policymakers to disseminate research. In: Dimick J., Lubitz C. (eds) Health Serv Res. Springer, Cham, pp. 283–288

Orem JN, Mafigiri DK, Marchal B, Ssengooba F, Macq J, Criel B (2012) Research, evidence and policymaking: The perspectives of policy actors on improving uptake of evidence in health policy development and implementation in Uganda. BMC Public Health 12(1):109

Oxman AD, Lavis JN, Lewin S, Fretheim A (2009) SUPPORT Tools for evidence informed health policymaking (STP) 1: What is evidence-informed policymaking? Health Res Policy Syst 7:S1

Petkovic J, Welch V, Jacob MH, Yoganathan M, Ayala AP, Cunningham H, Tugwell P (2016) The effectiveness of evidence summaries on health policymakers and health system managers use of evidence from systematic reviews: a systematic review. Implement Sci 11(1):1–14

Rajabi F (2012) Evidence-informed Health Policy Making: the role of policy brief. Int J Prevent Med 3(9):596–598

Rosenbaum SE, Glenton C, Wiysonge CS, Abalos E, Mignini L, Young T, Oxman AD (2011) Evidence summaries tailored to health policy-makers in low- and middle-income countries. Bull World Health Organ 89(1):54–61

Schmidt AM, Ranney LM, Goldstein AO (2014) Communicating program outcomes to encourage policymaker support for evidence-based state tobacco control. Int J Environ Res Public Health 11(12):12562–12574

Suter E, Armitage GD (2011) Use of a knowledge synthesis by decision makers and planners to facilitate system level integration in a large Canadian provincial health authority. Int J Integr Care, 11. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3107086. Accessed 2019

Tessier C, Centre de collaboration nationale sur les politiques publiques et la santé (2019) The policy brief: a tool for knowledge transfer. http://collections.banq.qc.ca/ark:/52327/4002463. Accessed 2020

Ti L, Hayashi K, Ti L, Kaplan K, Suwannawong P, Kerr T (2017) Knowledge translation to advance evidence-based health policy in Thailand. Evid Policy 13(4):723–731

Tricco AC, Antony J, Straus SE (2015) Systematic reviews vs. rapid reviews: What’s the difference? CADTH Rapid Review Summit

Uneke CJ, Ebeh Ezeoha A, Uro-Chukwu H, Ezeonu CT, Ogbu O, Onwe F, Edoga C (2015) Promoting evidence to policy link on the control of infectious diseases of poverty in Nigeria: outcome of a multi-stakeholders policy dialogue. Health Promot Perspect 5(2):104–115

Uzochukwu B, Onwujekwe O, Mbachu C, Okwuosa C, Etiaba E, Nyström ME, Gilson L (2016) The challenge of bridging the gap between researchers and policy makers: experiences of a Health Policy Research Group in engaging policy makers to support evidence informed policy making in Nigeria. Glob Health 12(1):1–15

van de Goor I, Hämäläinen RM, Syed A, Lau CJ, Sandu P, Spitters H, Karlsson LE, Dulf D, Valente A, Castellani T, Aro AR (2017) Determinants of evidence use in public health policy making: Results from a study across six EU countries. Health Policy 121(3):273–281

Vogel JP, Oxman AD, Glenton C, Rosenbaum S, Lewin S, Gülmezoglu AM, Souza JP (2013) Policymakers' and other stakeholders' perceptions of key considerations for health system decisions and the presentation of evidence to inform those considerations: an international survey. Health Res Policy Syst 11(1):1–9

Wilson MG, Lavis JN, Gauvin FP (2015) Developing a rapid-response program for health system decision-makers in Canada: findings from an issue brief and stakeholder dialogue. Syst Rev 4(25):1–11

Wong SL, Green LA, Bazemore AW, Miller BF (2017) How to write a health policy brief. Fam Syst Health 35(1):21–24

World Bank (2019) Classification of low- and middle-income countries. https://data.worldbank.org/income-level/low-and-middle-income. Accessed 2020

World Health Organization (2004) Knowledge for better health: strengthening health systems. Ministerial Summit on Health Research:16-20

Yang CC, Chen H, Hong K (2003) Visualization of large category map for internet browsing. Decis Support Syst 35(1):89–102

Acknowledgements

We thank Julie Desnoyers for her collaboration on developing the search strategy, Stéphanie Lebel for extracting data from the selected articles and Valéry Ridde for peer-reviewing the article. This study was conducted as part of the first author’s doctoral training in industrial-organizational psychology. The candidate received financial support from Équipe RENARD, a research team studying knowledge transfer that is led by Christian Dagenais and funded by the FRQSC.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Arnautu, D., Dagenais, C. Use and effectiveness of policy briefs as a knowledge transfer tool: a scoping review. Humanit Soc Sci Commun 8, 211 (2021). https://doi.org/10.1057/s41599-021-00885-9
