Abstract

Purpose

With the popularization of ChatGPT (Open AI, San Francisco, California, United States) in recent months, understanding the potential of artificial intelligence (AI) chatbots in a medical context is important. Our study aims to evaluate Google Gemini and Bard’s (Google, Mountain View, California, United States) knowledge in ophthalmology.

Methods

In this study, we evaluated Google Gemini and Bard’s performance on EyeQuiz, a platform containing ophthalmology board certification examination practice questions, when used from the United States (US). Accuracy, response length, response time, and provision of explanations were evaluated. Subspecialty-specific performance was noted. A secondary analysis was conducted using Bard from Vietnam, and Gemini from Vietnam, Brazil, and the Netherlands.

Results

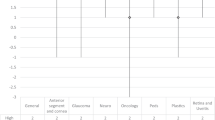

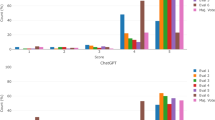

Overall, Google Gemini and Bard both had accuracies of 71% across 150 text-based multiple-choice questions. The secondary analysis revealed an accuracy of 67% using Bard from Vietnam, with 32 questions (21%) answered differently than when using Bard from the US. Moreover, the Vietnam version of Gemini achieved an accuracy of 74%, with 23 (15%) answered differently than the US version of Gemini. While the Brazil (68%) and Netherlands (65%) versions of Gemini performed slightly worse than the US version, differences in performance across the various country-specific versions of Bard and Gemini were not statistically significant.

Conclusion

Google Gemini and Bard had an acceptable performance in responding to ophthalmology board examination practice questions. Subtle variability was noted in the performance of the chatbots across different countries. The chatbots also tended to provide a confident explanation even when providing an incorrect answer.

This is a preview of subscription content, access via your institution

Access options

Subscribe to this journal

Receive 18 print issues and online access

$259.00 per year

only $14.39 per issue

Buy this article

- Purchase on Springer Link

- Instant access to full article PDF

Prices may be subject to local taxes which are calculated during checkout

Similar content being viewed by others

Data availability

All data generated or analysed during this study are included in this published article and its supplementary information files. Further enquiries can be directed to the corresponding author.

References

Chow JCL, Sanders L, Li K. Impact of ChatGPT on medical chatbots as a disruptive technology. Front Artif Intell. 2023;6:60.

Azaria A ChatGPT Usage and Limitations.

Fatani B ChatGPT for Future Medical and Dental Research. Cureus. 15. Available at: /pmc/articles/PMC10165936/ [Accessed May 31, 2023] (2023).

Gilson A, Safranek C, Huang T, Socrates V, Chi L, Taylor RA, et al. How Does ChatGPT Perform on the Medical Licensing Exams? The Implications of Large Language Models for Medical Education and Knowledge Assessment. medRxiv. 2022.12.23.22283901. Available at: https://www.medrxiv.org/content/10.1101/2022.12.23.22283901v1 [Accessed May 30, 2023] (2022).

Shay D, Kumar B, Bellamy D, Palepu A, Dershwitz M, Walz JM, et al. Assessment of ChatGPT success with specialty medical knowledge using anaesthesiology board examination practice questions. Br J Anaesth. Available at: https://pubmed.ncbi.nlm.nih.gov/37210278/ [Accessed May 31, 2023] (2023).

Bhayana R, Krishna S, Bleakney RR Performance of ChatGPT on a Radiology Board-style Examination: Insights into Current Strengths and Limitations. Radiology. Available at: https://pubmed.ncbi.nlm.nih.gov/37191485/ [Accessed May 31, 2023] 2023).

Lum ZC, Collins D, Dennison S, Guntupalli L, Choudhary S, Saiz AM, et al. Can Artificial Intelligence Pass the American Board of Orthopaedic Surgery? An Analysis of 3900 Questions. Available at: https://papers.ssrn.com/abstract=4439147 [Accessed May 31, 2023].

Mihalache A, Huang RS, Popovic MM, Muni RH ChatGPT-4: An assessment of an upgraded artificial intelligence chatbot in the United States Medical Licensing Examination. Med Teach. Available at: https://doi.org/10.1080/0142159X.2023.2249588 [Accessed January 15, 2024] (2023).

Google. Bard updates from Google I/O 2023: Images, new features. Available at: https://blog.google/technology/ai/google-bard-updates-io-2023/ [Accessed June 2, 2023].

Anon. Introducing Gemini: Google’s most capable AI model yet. Available at: https://blog.google/technology/ai/google-gemini-ai/#sundar-note [Accessed January 15, 2024].

Anon. Eye Quiz. Available at: http://eyequiz.com/ [Accessed January 15, 2024].

Anon. MedCalc’s Comparison of proportions calculator. Available at: https://www.medcalc.org/calc/comparison_of_proportions.php [Accessed January 15, 2024].

Anon. Where you can use Bard - Bard Help. Available at: https://support.google.com/bard/answer/13575153?hl=en [Accessed January 15, 2024].

Raimondi R, Tzoumas N, Salisbury T, Di Simplicio S, Romano MR Comparative analysis of large language models in the Royal College of Ophthalmologists fellowship exams. Available at: https://doi.org/10.1038/s41433-023-02563-3. [Accessed January 15, 2024].

Waisberg E, Ong J, Masalkhi M, Zaman N, Sarker P, Lee AG, et al. Google’s AI chatbot “Bard”: a side-by-side comparison with ChatGPT and its utilization in ophthalmology. Eye. 2023;2023:1–4. https://www.nature.com/articles/s41433-023-02760-0.

Mihalache A, Huang RS, Popovic MM, Muni RH. Performance of an upgraded artificial intelligence chatbot for ophthalmic knowledge assessment. JAMA Ophthalmol. 2023;141:798–800. https://jamanetwork.com/journals/jamaophthalmology/fullarticle/2807120.

Mihalache A, Popovic MM, Muni RH. Performance of an artificial intelligence chatbot in ophthalmic knowledge assessment. JAMA Ophthalmol. 2023;141:589–97. https://jamanetwork.com/journals/jamaophthalmology/fullarticle/2804364.

Cai LZ, Shaheen A, Jin A, Fukui R, Yi JS, Yannuzzi N, et al. Performance of generative large language models on ophthalmology board–style questions. Am J Ophthalmol. 2023;254:141–9.

Mihalache A, Huang RS, Popovic MM, Patil NS, Shor R, Pandya BU, et al. Accuracy of an Artificial Intelligence Chatbot’s Interpretation of Clinical Ophthalmic Images. JAMA Ophthalmol. 2024. https://doi.org/10.1001/jamaophthalmol.2024.0017.

Acknowledgements

RHM research is supported by the Silber TARGET Fund.

Author information

Authors and Affiliations

Contributions

AM was responsible for analysing data, interpreting results, and writing the manuscript. JG was responsible for prompting the chatbot and extracting data. NSP was responsible for the conception of the study’s design and revision of the manuscript. RSH was responsible for figure creation, analysing data, and revision of the manuscript. MMP was responsible for the conception of the study’s design and revision of the manuscript. AM was responsible for supervision of the study. PJK was responsible for supervision of the study. RHM was responsible for revision of the manuscript and supervision of the study. All authors fulfill ICMJE Criteria for Authorship.

Corresponding author

Ethics declarations

Competing interests

AM: None; JG: None; NSP: None; RSH: None; MMP: Financial support (to institution)—PSI Foundation, Fighting Blindness Canada; AM: None. PJK: Honoraria: Novartis, Bayer, Roche, Boehringer Ingelheim, RegenxBio, Apellis; Advisory board—Novartis, Bayer, Roche, Apellis, Novelty Nobility, Viatris, Biogen; Financial support (to institution)—Roche, Novartis, Bayer, RegenxBio; RHM: Consultant—Alcon, Apellis, AbbVie, Bayer, Bausch Health, Roche; Financial Support (to institution)- Alcon, AbbVie, Bayer, Novartis, Roche.

Ethics

An ethics statement was not required for this study type, no human or animal subjects or materials were used.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Mihalache, A., Grad, J., Patil, N.S. et al. Google Gemini and Bard artificial intelligence chatbot performance in ophthalmology knowledge assessment. Eye (2024). https://doi.org/10.1038/s41433-024-03067-4

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41433-024-03067-4