Abstract

Background/Objectives

Experimental investigation. Bing Chat (Microsoft) integration with ChatGPT-4 (OpenAI) integration has conferred the capability of accessing online data past 2021. We investigate its performance against ChatGPT-3.5 on a multiple-choice question ophthalmology exam.

Subjects/Methods

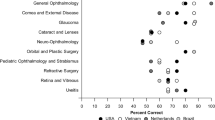

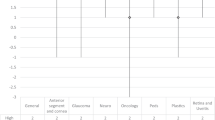

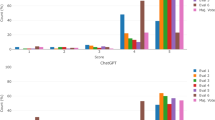

In August 2023, ChatGPT-3.5 and Bing Chat were evaluated against 913 questions derived from the Academy’s Basic and Clinical Science Collection collection. For each response, the sub-topic, performance, Simple Measure of Gobbledygook readability score (measuring years of required education to understand a given passage), and cited resources were collected. The primary outcomes were the comparative scores between models, and qualitatively, the resources referenced by Bing Chat. Secondary outcomes included performance stratified by response readability, question type (explicit or situational), and BCSC sub-topic.

Results

Across 913 questions, ChatGPT-3.5 scored 59.69% [95% CI 56.45,62.94] while Bing Chat scored 73.60% [95% CI 70.69,76.52]. Both models performed significantly better in explicit than clinical reasoning questions. Both models performed best on general medicine questions than ophthalmology subsections. Bing Chat referenced 927 online entities and provided at-least one citation to 836 of the 913 questions. The use of more reliable (peer-reviewed) sources was associated with higher likelihood of correct response. The most-cited resources were eyewiki.aao.org, aao.org, wikipedia.org, and ncbi.nlm.nih.gov. Bing Chat showed significantly better readability than ChatGPT-3.5, averaging a reading level of grade 11.4 [95% CI 7.14, 15.7] versus 12.4 [95% CI 8.77, 16.1], respectively (p-value < 0.0001, ρ = 0.25).

Conclusions

The online access, improved readability, and citation feature of Bing Chat confers additional utility for ophthalmology learners. We recommend critical appraisal of cited sources during response interpretation.

This is a preview of subscription content, access via your institution

Access options

Subscribe to this journal

Receive 18 print issues and online access

$259.00 per year

only $14.39 per issue

Buy this article

- Purchase on Springer Link

- Instant access to full article PDF

Prices may be subject to local taxes which are calculated during checkout

Similar content being viewed by others

Data availability

Available upon reasonable request to the corresponding author.

References

Honavar SG. Artificial intelligence in ophthalmology - Machines think! Indian J Ophthalmol. 2022;70:1075–9.

Abràmoff MD, Lou Y, Erginay A, Clarida W, Amelon R, Folk JC, et al. Improved automated detection of diabetic retinopathy on a publicly available dataset through integration of deep learning. Investig Ophthalmol Vis Sci. 2016;57:5200–6.

Gargeya R, Leng T. Automated identification of diabetic retinopathy using deep learning. Ophthalmology. 2017;124:962–9.

Ting DSW, Cheung CY-L, Lim G, Tan GSW, Quang ND, Gan A, et al. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA. 2017;318:2211–23.

Grassmann F, Mengelkamp J, Brandl C, Harsch S, Zimmermann ME, Linkohr B, et al. A deep learning algorithm for prediction of age-related eye disease study severity scale for age-related macular degeneration from color fundus photography. Ophthalmology. 2018;125:1410–20.

Burlina PM, Joshi N, Pekala M, Pacheco KD, Freund DE, Bressler NM. Automated grading of age-related macular degeneration from color fundus images using deep convolutional neural networks. JAMA Ophthalmol. 2017;135:1170–6.

Ting DSW, Pasquale LR, Peng L, Campbell JP, Lee AY, Raman R, et al. Artificial intelligence and deep learning in ophthalmology. Br J Ophthalmol. 2019;103:167.

Singh S, Djalilian A, Ali MJ. ChatGPT and ophthalmology: exploring its potential with discharge summaries and operative notes. Semin Ophthalmol. 2023;38:503–7.

ChatGPT. OpenAI. https://openai.com/chatgpt. Accessed 30 Jul 2023.

Ting DSJ, Tan TF, Ting DSW. ChatGPT in ophthalmology: the dawn of a new era? Eye. 2023.

Thirunavukarasu AJ, Ting DSJ, Elangovan K, Gutierrez L, Tan TF, Ting DSW. Large language models in medicine. Nat Med. 2023;29:1930–40.

Dave T, Athaluri SA, Singh S. ChatGPT in medicine: an overview of its applications, advantages, limitations, future prospects, and ethical considerations. Front Artif Intell. 2023;6:1169595.

Models. OpenAI. https://platform.openai.com/docs/models/overview. Accessed 30 Jul 2023

Sallam M. ChatGPT utility in healthcare education, research, and practice: systematic review on the promising perspectives and valid concerns. Healthcare. 2023;11:887.

Mihalache A, Popovic MM, Muni RH. Performance of an artificial intelligence chatbot in ophthalmic knowledge assessment. JAMA Ophthalmol. 2023;141:589–97.

Mihalache A, Huang RS, Popovic MM, Muni RH. Performance of an upgraded artificial intelligence chatbot for ophthalmic knowledge assessment. JAMA Ophthalmol. 2023;141:798–800.

Bing Chat. Microsoft. https://www.microsoft.com/en-us/edge/features/bing-chat?form=MT00D8. Accessed 30 Jul 2023.

Responsible and trusted AI. Microsoft. https://learn.microsoft.com/en-us/azure/cloud-adoption-framework/innovate/best-practices/trusted-ai. Accessed 30 Jul 2023.

Cai LZ, Shaheen A, Jin A, Fukui R, Yi JS, Yannuzzi N, et al. Performance of generative large language models on ophthalmology board style questions. Am J Ophthalmol. 2023;254:141–9.

Kleebayoon A, Wiwanitkit V. Comment on performance of generative large language models on ophthalmology board style questions. Am J Ophthalmol. 2023;256:200.

Kung TH, Cheatham M, Medenilla A, Sillos C, De Leon L, Elepaño C, et al. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLoS Digit Health. 2023;2:e0000198.

McLaughlin GH. SMOG grading: a new readability formula. J Read. 1969;12:639–46.

Ishak NM, Bakar AYA. Qualitative data management and analysis using NVivo:An approach used to examine leadership qualitiesamong student leaders. Educ Res J. 2012;2:94–103.

Basic and clinical science course residency set. American Academy of Ophthalmology. https://store.aao.org/basic-and-clinical-science-course-residency-set.html. Accessed 30 Jul 2023.

Mehdi Y. Reinventing search with a new AI-powered Microsoft Bing and Edge, your copilot for the web. Microsoft. https://blogs.microsoft.com/blog/2023/02/07/reinventing-search-with-a-new-ai-powered-microsoft-bing-and-edge-your-copilot-for-the-web/. Accessed 3 Aug 2023.

Lee H. The rise of ChatGPT: exploring its potential in medical education. Anat Sci Educ. 2023;00:1–6.

Hasan MR, Khan B. An AI-based intervention for improving undergraduate STEM learning. PLoS ONE. 2023;18:e0288844.

Lam T, Cheung M, Munro Y, Lim K, Shung D, Sung J. Randomized controlled trials of artificial intelligence in clinical practice: systematic review. J Med Internet Res. 2022;24:e37188.

Grabeel K, Russomanno J, Oelschlegel S, Tester E, Heidel R. Computerized versus hand-scored health literacy tools: a comparison of Simple Measure of Gobbledygook (SMOG) and Flesch-Kincaid in printed patient education materials. J Med Library Assoc. 2018;106:38–45.

Taloni A, Borselli M, Scarsi V, Rossi C, Coco G, Scorcia V, et al. Comparative performance of humans versus GPT-4.0 and GPT-3.5 in the self-assessment program of American Academy of Ophthalmology. Sci Rep. 2023;13:18562.

Funding

BT is funded by the Eye Foundation of Canada. No other financial support.

Author information

Authors and Affiliations

Contributions

Conception/Design/Acquisition/Analysis/Interpretation (BT, JAM), Acquisition (BT, NH, JM), Drafting/Revision (all authors), Final approval (all authors), Agreement of accountability (all authors).

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval

Under article 2.4 of the Tri-Council Policy Statement, this study was exempt from institutional review board approval since all data was gathered from publicly accessible published works. No identifiable forms of information were generated in this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Tao, B.KL., Hua, N., Milkovich, J. et al. ChatGPT-3.5 and Bing Chat in ophthalmology: an updated evaluation of performance, readability, and informative sources. Eye (2024). https://doi.org/10.1038/s41433-024-03037-w

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41433-024-03037-w