Abstract

Background

The proportion of health-related searches on the internet is continuously growing. ChatGPT, a natural language processing (NLP) tool created by OpenAI, has been gaining increasing user attention and can potentially be used as a source of information on health concerns. This study aims to analyze the quality and appropriateness of ChatGPT’s responses to urology case studies compared with those of a urologist.

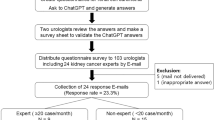

Methods

Data from 100 patient case studies, comprising patient demographics, medical history, and urologic complaints, were entered into ChatGPT one at a time. For each case, ChatGPT was asked for the most likely diagnosis, suggested examinations, and treatment options. The responses generated by ChatGPT were then compared with those provided by a board-certified urologist who was blinded to ChatGPT’s responses, and were graded on a 5-point Likert scale using accuracy, comprehensiveness, and clarity as criteria for appropriateness. The quality of information was graded using section 2 of the DISCERN tool, and readability was assessed using the Flesch Reading Ease (FRE) and Flesch-Kincaid Reading Grade Level (FKGL) formulas.
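For readers unfamiliar with the two readability measures, the sketch below shows the standard published FRE and FKGL formulas. It is an illustration only, not the authors' tooling: the function names are hypothetical, and the word, sentence, and syllable counts are assumed to have been obtained beforehand, since counting syllables reliably needs a dictionary or a heuristic that is not shown here.

```python
def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Flesch Reading Ease: higher scores mean easier text."""
    if words <= 0 or sentences <= 0:
        raise ValueError("words and sentences must be positive")
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid Grade Level: approximate US school grade needed."""
    if words <= 0 or sentences <= 0:
        raise ValueError("words and sentences must be positive")
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


if __name__ == "__main__":
    # Hypothetical counts for a single response (not data from the study).
    words, sentences, syllables = 100, 5, 150
    print(round(flesch_reading_ease(words, sentences, syllables), 3))
    print(round(flesch_kincaid_grade(words, sentences, syllables), 2))
```

Both formulas depend only on average sentence length and average syllables per word, so long sentences and polysyllabic medical terms push FRE down and FKGL up.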

Results

Overall, 52% of responses were deemed appropriate. ChatGPT provided more appropriate responses for non-oncology conditions (58.5%) than for oncology (52.6%) and emergency urology cases (11.1%) (p = 0.03). The median DISCERN score was 15 (IQR = 5.3), corresponding to a quality rating of poor. The ChatGPT responses demonstrated a college graduate reading level, as indicated by the median FRE score of 18 (IQR = 21) and the median FKGL score of 15.8 (IQR = 3).
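As a rough guide to how an FRE score maps to a reading level, the sketch below encodes the conventional Flesch interpretation bands. The function name is hypothetical, and the treatment of scores exactly on a band boundary is an assumption, because published tables differ on which band a boundary value belongs to.

```python
def fre_reading_level(score: float) -> str:
    """Map a Flesch Reading Ease score to its conventional reading level."""
    # (lower bound, label) pairs; a score falls in the first band whose
    # lower bound it meets. Boundary handling is an assumption.
    bands = [
        (90.0, "5th grade"),
        (80.0, "6th grade"),
        (70.0, "7th grade"),
        (60.0, "8th to 9th grade"),
        (50.0, "10th to 12th grade"),
        (30.0, "college"),
    ]
    for lower_bound, label in bands:
        if score >= lower_bound:
            return label
    return "college graduate"


if __name__ == "__main__":
    # The median FRE reported above is 18.
    print(fre_reading_level(18))
```

Under these bands, any score below 30 is read as college graduate level, which is consistent with the interpretation given for the median FRE of 18.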

Conclusions

ChatGPT serves as an interactive tool for providing medical information online, offering the possibility of enhancing health outcomes and patient satisfaction. Nevertheless, the insufficient appropriateness and poor quality of its responses to urology cases emphasize the importance of thoroughly evaluating NLP-generated outputs, and of using them with care, when addressing health-related concerns.

Data availability

The datasets analyzed during the current study are available from the corresponding author on reasonable request.

Author information

Contributions

AC: Protocol/project development, Data collection and management, Data analysis, Manuscript writing/editing. MP: Data collection and management. MLR: Data collection and management. GIR: Manuscript writing/editing. MGA: Manuscript writing/editing. MF: Manuscript writing/editing. GC: Data analysis, Manuscript writing/editing. SC: Manuscript writing/editing. AM: Data collection and management, Manuscript writing/editing. ED: Protocol/project development, Data collection and management, Data analysis, Manuscript writing/editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval

The data were treated anonymously, and local ethical approval was not required. The study was performed in accordance with the Declaration of Helsinki.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Cocci, A., Pezzoli, M., Lo Re, M. et al. Quality of information and appropriateness of ChatGPT outputs for urology patients. Prostate Cancer Prostatic Dis 27, 103–108 (2024). https://doi.org/10.1038/s41391-023-00705-y

DOI: https://doi.org/10.1038/s41391-023-00705-y
