Abstract

Study design: Systematic review of abstracts of published papers presumed to contain information on chronic pain in persons with spinal cord injury (SCI).

Objectives: To determine to what degree papers on SCI are abstracted in such a way that they can be retrieved, and evaluated as to the paper's applicability to a reader's questions.

Setting: US - academic department of rehabilitation medicine.

Methods: 868 abstracts published in Medline were independently examined by two out of 13 screeners, who answered four questions on the subjects and nature of the paper with ‘Yes’, ‘No’ or ‘insufficient information’. Frequency of ratings ‘insufficient information’, and screener agreement were evaluated as affected by screener and abstract/paper characteristics.

Results: Screeners could not determine whether the paper dealt with persons with traumatic SCI for 37% of abstracts; whether chronic pain was a topic could not be determined in 18%. Physicians were less willing than other disciplines to assign ‘insufficient information’. Screener agreement was better than chance, but not at the level suggested for quality measurement. Screener discipline and task experience did not make a difference, nor did abstract length, structure, or decade of publication of the paper.

Conclusion: Authors need to improve the quality of abstracts to make retrieval and screening of relevant papers more effective and efficient.

Sponsorship: National Institute on Disability and Rehabilitation Research.

Introduction

Medical journals started printing abstracts to accompany research and other papers in the 1950s. JAMA published the first ones in 1956,1 and others followed suit. These abstracts replaced or supplemented the ‘summary’ that prior to that time ended many papers. The purpose of the abstract, formatted as one or more free-text paragraphs and printed at the very front, was to give the reader some sense of direction as to what was to follow. Abstracts should (and sometimes do) give readers enough information to assess if the paper is relevant to their interests, offers information that might affect the way they practice medicine, and (if a report of empirical research) uses methods that meet basic standards of validity.2

These traditional narrative abstracts have been criticized for a number of reasons, including conclusions that do not follow from the study findings and an overemphasis on positive conclusions.3,4,5 They often did not convey the information readers would expect.6 In the late 1980s proposals for ‘more informative abstracts’ were made,7 which eventually resulted in what are now known as structured abstracts, which first appeared in the Annals of Internal Medicine in 1987. Many prestigious journals followed this lead, including Spinal Cord.8 It was expected that structured abstracts would provide more and more relevant information, and allow readers to quickly assess reliability and applicability of clinical reports. In addition, structured abstracts were expected to facilitate peer review of manuscripts, and help in the accurate indexing and retrieval of reports.7,9,10 Structured abstracts have indeed been found to be more informative;11,12,13 to a degree this is due to the fact that they are longer (on average) than unstructured ones.11,14 However, they also may be more readable,11,15 and produce more indexing terms, which makes it easier to retrieve them from electronic data bases such as Medline and Embase.14 Only a few journals have not hopped on the bandwagon, citing issues of restricting creativity of authors.16

Structured abstracts are not without shortcomings, however.12,17 While studies that compared them to non-structured abstracts declared them improved,12,14 many lack a description of the study population,10,17,18 or are missing other information considered essential.13 Others do not evidence correspondence between the abstract and the paper, in that the data reported are different, or there are data in the abstract entirely lacking from the paper.2,19

However, informative and correct abstracts are of extreme importance: they are the component of papers most likely to be read,1,19,20 and sometimes they are the only part read.21,22 Not infrequently, clinicians make decisions based on nothing more than a perusal of the abstract.23 The purpose of this paper is to evaluate to what degree papers on persons with spinal cord injury (SCI) contain informative abstracts, that is abstracts that can be successfully retrieved and scanned as to their applicability for particular questions. The effect of certain abstract and abstract reader characteristics on judgments of informativeness is also evaluated.

Method

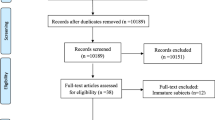

The data presented here are a ‘by-product’ of an ongoing project to conduct meta-analyses of the literature on chronic pain in persons with traumatic SCI. The purpose of the meta-analyses is to quantitatively summarize information on the incidence, prevalence and risk factors for chronic pain; assessment and diagnosis of chronic pain; impact of pain on functioning and quality of life; and chronic pain treatment outcomes, side effects, and costs. In order to perform these analyses, we needed to identify published and unpublished papers on any of these topics, based on original data reported for individuals with traumatic spinal cord injury. We sought potential papers in various ways, including systematic searches of Medline, CINAHL, Embase, PsycINFO, the Cochrane collaboration and other databases. The search criteria for Medline were the Medical Subject Headings (MeSH) terms spinal cord injury, paraplegia, quadriplegia, tetraplegia, pain (all exploded), and the parallel text words, as well as such words as ache, spinal lesion, etc. The search terms for other databases were similar. In addition, we performed hand searches of journals most likely to contain pertinent information (Spinal Cord, Journal of Spinal Cord Medicine, etc.), and of various co-investigators' own files. The reference lists of all papers, books and other documents identified in this way were scrutinized (ancestry search), and likely publications added to the list of references to be reviewed. We also performed a descendant search, searching the Web of Science database for any ‘offspring’ of (key) papers: later articles that quoted one or more of the papers identified as appropriate. This method was abandoned fairly quickly as not cost-efficient.

The number of titles mushroomed quickly to over 2000. The main reason was that the Medline MeSH term ‘spinal cord injury’ retrieves papers on low back pain, other diseases affecting the spinal column or cord without causing paralysis, and non-traumatic causes of paralysis such as cerebral palsy, metastatic cancer, etc. In fact, the term ‘spinal cord injury’ is found at three places in the MeSH tree: as a subcategory of ‘spinal cord diseases’; as a subcategory of ‘trauma, nervous system’; and once more, as a grouping under ‘wounds and injuries’. A second reason is that often papers are referenced in an article without it being clear what specific information they contain; in order not to miss any relevant information, we included rather than excluded these references. Obtaining copies of more than 2000 papers and books would have been excessively time-consuming and expensive. Because for many papers (especially the more recent ones) abstracts are available in the databases, we decided to use abstract-based screening to identify those papers that were potentially appropriate for the meta-analyses. Abstracts were printed, and provided to two screeners who completed a screening form guided by a 3-page syllabus.

The screeners were 13 individuals, including four rehabilitation physicians, three physical therapists, four occupational therapists, and two others. All had at least several years of clinical and/or research experience with SCI, and were familiar with the literature in their field of expertise. The screeners were to answer four key questions:

- Does the paper abstracted deal with humans (rather than animals, SCI units, etc.)?

- Are these individuals with traumatic SCI (rather than spinal injury from other origin, or other, non-spinal, disorders)?

- Is information provided on chronic pain experienced by these persons?

- Does the paper report original data (rather than constituting a review, theoretical treatise, etc.)?

For each question, the reviewers could answer ‘Yes’, ‘No’ or ‘Insufficient information in the abstract’. ‘Insufficient information’ indicates lack of informativeness of abstracts, and possible lack of perceptiveness of the screener. Screeners were instructed to err on the conservative side, and mark ‘??’ (insufficient information) rather than ‘No’ if there was doubt in their mind. Once the four questions had been answered, assignment of the paper was automatic: four times ‘Yes’ meant it was to be included in the meta-analysis; one ‘No’ excluded it, and a combination of ‘yes’ and ‘??’ answers meant that a decision could not be based on the abstract alone.
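The automatic assignment described above can be sketched in a few lines. This is only an illustration of the stated rule; the function name `assign_paper` and the result labels are hypothetical and do not come from the screening form.

```python
def assign_paper(answers):
    """Derive one screener's overall assignment from the four answers.

    `answers` holds four strings, each 'Yes', 'No' or '??'
    ('??' stands for 'insufficient information in the abstract').
    The labels returned here are illustrative names, not the form's wording.
    """
    if len(answers) != 4:
        raise ValueError("exactly four answers are expected")
    if any(a not in ("Yes", "No", "??") for a in answers):
        raise ValueError("answers must be 'Yes', 'No' or '??'")
    if "No" in answers:
        # A single 'No' is enough to exclude the paper.
        return "exclude"
    if all(a == "Yes" for a in answers):
        # Four times 'Yes' means the paper is to be included.
        return "include"
    # Any remaining mix of 'Yes' and '??' cannot be decided from the abstract.
    return "indeterminate"
```

For example, `assign_paper(["Yes", "??", "Yes", "Yes"])` falls in the indeterminate group, because one question could not be answered from the abstract.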

We decided to have each abstract screened by two independent reviewers, in order to minimize chances that a paper would be selected out even though it was appropriate, or at least should be classified as such based on the abstract information. Only if both reviewers declared a paper not relevant, and for the same reason, was it discarded. Disagreement between the two independent screeners reflects lack of precision of the abstract language, or possibly screener shortcomings or different tendencies to interpret terms in a certain way. An answer of ‘Yes’ on all four questions by both screeners was required for a paper to be a candidate for the meta-analyses. An answer of ‘No’ by both for the same question meant that the paper was no longer considered. Any other combination of answers necessitated obtaining the full text of the paper in order to answer the questions based on complete information.
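A minimal sketch of how the two screeners' answers combine, under the rules stated in this paragraph. The question keys, the function name `combine_screeners` and the result labels are hypothetical names chosen for illustration.

```python
# Hypothetical short keys for the four screening questions.
QUESTIONS = ("human", "traumatic_sci", "chronic_pain", "original_data")


def combine_screeners(first, second):
    """Combine two screeners' answers (dicts: question -> 'Yes'/'No'/'??')."""
    # Candidate only if both screeners answered 'Yes' on all four questions.
    if all(first[q] == "Yes" and second[q] == "Yes" for q in QUESTIONS):
        return "candidate"
    # Discarded only if both answered 'No' on the same question.
    if any(first[q] == "No" and second[q] == "No" for q in QUESTIONS):
        return "discard"
    # Every other combination requires the full text of the paper.
    return "obtain full text"
```

Note that two screeners who each reject a paper, but on different questions, do not trigger a discard under this rule; the paper goes to full-text review.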

In addition to the two screeners' judgments on the four key items, the following information was collected on each paper or abstract:

- Journal in which published

- Year of publication

- Original language of paper (classified as English or any other; all abstracts were in English, whatever the language of the paper)

- Whether the abstract was structured or not

- Whether the abstract as published in the database was truncated. (Medline truncates non-structured abstracts at 250 (if the paper is less than 10 pages) or 400 words, but never truncates structured abstracts)

- Abstract length in lines

Because abstract length was measured as the number of lines in the Medline printout, this analysis is limited to abstracts that were available in Medline, whatever the method or source that originally generated the paper as a possible data source for meta-analysis. The standard Medline Text printout, which provides the entire abstract as a single continuous paragraph (whether or not the original published in the journal had a different format), allows for easy counting of the number of lines. Partial last lines were counted as one. The average Medline Text line has 10.7 words (as counted by the ‘Word count’ utility in Microsoft Word), so a short 4-line abstract has about 42 words, while a lengthy 50-line one has in excess of 500.
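The conversion from line counts to approximate word counts is simple multiplication; the sketch below only restates that arithmetic. The function name `estimate_words` is a hypothetical helper, and the 10.7 words-per-line figure is the average reported above.

```python
WORDS_PER_LINE = 10.7  # average for a Medline Text line, as reported in the text


def estimate_words(lines):
    """Rough word count for an abstract printed on `lines` Medline Text lines."""
    if lines < 1:
        raise ValueError("an abstract has at least one line")
    return lines * WORDS_PER_LINE


short_abstract = estimate_words(4)   # a short 4-line abstract
long_abstract = estimate_words(50)   # a lengthy 50-line abstract
```

Because a partial last line is counted as a full line, the result is best read as an approximation rather than an exact count.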

The analysis focuses on the informativeness of abstracts, specifically the ability of screeners to determine the answer to the four questions, and the factors (year of publication, abstract length) that affect informativeness. Agreement between screeners is also analyzed, and factors affecting agreement. The percentage of time screeners agreed is reported, as well as weighted and unweighted kappa. Kappa quantifies agreement adjusted for chance agreement between judges. Weighted kappa does the same, but gives partial credit for similar (but not identical) answers. For instance, ‘Yes’ and ‘??’ are not the same, but are somewhat similar, compared to ‘Yes’ and ‘No’ answers. Kappa and weighted kappa range from 0.00 (agreement is not better than chance) to 1.00 (perfect agreement).
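For readers who want to see the computation, the following is a minimal sketch of unweighted and weighted kappa for two screeners. It is not the analysis code used in this study. The weighting scheme is an assumption: linear weights with ‘??’ placed midway between ‘Yes’ and ‘No’, so that a ‘Yes’/‘??’ pair earns half credit. The function name and category ordering are likewise illustrative.

```python
from collections import Counter

# Assumed ordering: '??' sits between the two firm answers.
CATEGORIES = ["Yes", "??", "No"]


def cohen_kappa(ratings_a, ratings_b, weighted=False):
    """Chance-corrected agreement between two screeners on the same abstracts."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two equally long, non-empty rating lists")
    n = len(ratings_a)
    k = len(CATEGORIES)
    index = {c: i for i, c in enumerate(CATEGORIES)}

    def credit(i, j):
        # Unweighted: full credit only for identical answers.
        # Weighted (assumed linear scheme): partial credit for adjacent answers.
        if weighted:
            return 1.0 - abs(i - j) / (k - 1)
        return 1.0 if i == j else 0.0

    pairs = Counter((index[a], index[b]) for a, b in zip(ratings_a, ratings_b))
    totals_a = Counter(index[a] for a in ratings_a)
    totals_b = Counter(index[b] for b in ratings_b)

    observed = sum(credit(i, j) * c for (i, j), c in pairs.items()) / n
    expected = sum(
        credit(i, j) * totals_a[i] * totals_b[j]
        for i in range(k) for j in range(k)
    ) / n ** 2
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement is complete")
    return (observed - expected) / (1.0 - expected)
```

With this definition, identical rating lists give 1.00 and agreement at chance level gives 0.00. A value below zero is arithmetically possible when observed agreement is worse than chance.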

Statistical testing is used, but readers should be aware of the limitations of the generalizations that seem possible. For instance, it is not known whether any conclusions can be generalized to non-Medline journals, or diagnostic groups other than traumatic SCI. However, it is likely that our findings would be applicable to a search for literature on eg SCI and spasticity.

Results

The total number of abstracts included in this analysis is 868, covering the years 1975 (the first year for which Medline started printing abstracts, rather than just titles) through 2001. The number increased from 60 for the 1975–79 5-year period to 322 for the 1995–99 period. The year 2000 produced 69, and 2001 (an incomplete year) 10. These abstracts had been published in 286 different journals, indicating the range of journals publishing on SCI or closely related topics (Table 1). Most journals produced only one or two papers on (presumed) SCI pain during the entire period (or during the time they were published or covered in Medline), and Paraplegia/Spinal Cord was the only one with over 60 papers. Papers were concentrated, in that 11 journals (3.8% of the total) published 40.8% of the abstracts; Table 1 provides further information on the concentration in a limited number of journals of papers presumed to be on chronic pain in SCI.

Most papers (741 or 85.4%) were in English. Only a few (21 or 2.4%) abstracts had been truncated because of excessive length. A total of 117 (13.5%) had a structured format, all published in 1991 or later years. While a few papers had three-line abstracts (5 or 0.6%), most had more, with the longest having 51 lines. The average was 15.9, with a standard deviation of 7.5. The average for truncated abstracts was 25.7 (std dev 7.9), and the average for structured abstracts was 23.2 (std dev 7.3). English-language papers had abstracts that were marginally longer (16.1, with std dev of 7.5) than foreign-language papers (14.6±7.2).

The screeners each reviewed between 36 and 311 abstracts. Assignments came in batches of about 50, and there was no selection to match abstract topics or characteristics to screener. The pairing of screeners assigned to the same abstract was more or less random. Table 2 reflects the judgments of individual reviewers, and those of the disciplines and the group as a whole. (Because each abstract was screened independently by two screeners, the number of judgments is twice as large as the number of abstracts). It is clear that all screeners had trouble making a final decision on the four characteristics to be judged, resulting in a large number of ‘insufficient information’ judgments. A final assignment of a paper depended on a ‘Yes’ answer on all four issues, or a ‘No’ on at least one of them; all other abstracts were put in the category ‘indeterminate’ (??) for the overall decision.

The discipline subtotals indicate that physicians were more likely to assign a ‘Yes’ or a ‘No’ answer. Within each disciplinary group, there were screeners whose decisions seemed to differ from those of the others (eg physician 3 for ‘human or not’; physical therapist 2 for ‘traumatic SCI or not’); however, it should be remembered that all screeners did not receive the same package of abstracts, and that the ones assigned were not randomly sampled from the pool. Especially for screeners reviewing only a small number of abstracts (eg physical therapist 2), ‘aberrant’ profiles are quite possible. Experience in the task did not seem to make much of a difference in the ability or willingness to make a decision whether an abstract satisfied a particular criterion: for all screeners combined, the correlation between number of abstracts screened previously and assignment of a ‘not enough information’ was very weak and positive for the issues of yes/no traumatic SCI, yes/no chronic pain, and final assignment (0.08 or 0.09), but negative for yes/no human (−0.08), and only 0.03 for yes/no original. Two screeners improved in making all four decisions, and one deteriorated in all four. Logistic regression analysis confirmed these findings.

Several characteristics of the papers included and their abstracts do not appear to systematically affect informativeness: year of publication and language of the paper, the length of the abstract, and whether the abstract was structured or not (Table 3). For every finding indicating that a particular characteristic makes decisions more feasible (eg length of abstract and yes/no original data decision), there is an opposing trend (eg length and yes/no traumatic SCI). These characteristics are not independent from one another: over time, abstracts have grown in length; structured abstracts are longer (and never truncated), etc. Logistic regression suggested no findings other than the bivariate relationships shown in Table 3.

The agreement between the screeners on the four decisions is displayed in Table 4. (The assignment of the paper, as determined by the four decisions, is shown too, but it should be remembered that two screeners for a particular abstract may both have decided on a ‘not suitable for the meta-analysis’, but for different reasons. For instance, screener 1 may have decided that no chronic pain data were reported, [while screener 2 thought there were such data, or was not sure], and screener 2 decided that there were no original data [while screener 1 was of the opposite opinion]). Unweighted kappas are around 0.30; weighted kappas (which give partial credit in cases where one screener decides ‘Yes’ or ‘No’, the other ‘??’) are slightly higher. However, because of the implications of the decisions (our goal was to select papers, not to rate them on an ordinal scale), the unweighted kappas are presumably the more useful indicators of the agreement between screeners. The data in Table 5 indicate that characteristics of the papers or abstracts do not appear to affect the ability of screeners to agree in their judgments. Again, whatever trends there appear to be (eg number of lines and ‘human’?) are contradicted by findings for other decisions. This is not surprising given the results in Table 3, which indicated that paper/abstract attributes do not affect the ability of individual screeners to exclude ‘insufficient information to make a decision’.

Discussion

Abstracts are provided in order to allow readers to quickly scan papers for applicability to their own situation. For clinical applications where the clinician has a treatment dilemma, the abstract should offer the following information: the nature of the patients in the study reported; the specific problem(s) treated; the treatment provided; the results of that treatment; and recommendations. For other applications (eg preparation of a research project) similar information is needed. Our data suggest that the abstracts published in the spinal cord injury literature (or the broader literature of papers that potentially deal with spinal cord injury) fail to provide the information necessary to perform such screening efficiently. It seems that the introduction of longer abstracts and of structured abstracts has not made a difference, as the data in Table 3 indicate. All of the improvements in research reporting since the early 1970s taken together appear to have increased the informativeness of abstracts minimally if at all.

As indicated in Table 2, often it is not feasible to determine from the abstract whether the subjects involved were persons with traumatic SCI, and whether data on chronic pain were reported. Given the fact that all 13 screeners had this problem to more or less the same degree, it is unlikely that this can be blamed on the performance of the screeners. There was a tendency for physicians to be more decisive, which may be related to their better knowledge of the literature involved (most of which was published in medical rather than allied health journals). However, it may just reflect physicians' training to be decisive, rather than wait and see. It is possible that the physicians less often specified ‘??’, but that their decisions as to a ‘Yes’ or ‘No’ were wrong more often. A separate analysis would be needed to determine whether physicians agreed with physicians more often than non-physicians agreed with non-physicians; that would give a preliminary indication of the physiatrists' superior knowledge as opposed to a tendency to select ‘Yes’ or ‘No’, and avoid ‘dithering’.

The data in Tables 4 and 5 indicate that even when the number of ‘maybe’ answers to the screening questions is small (as it is for ‘yes/no human’), the answers of the screeners do not necessarily agree: kappa, which indicates how much better agreement is than chance, is far from the level traditionally used as a cut-off for adequate interrater reliability (0.60 or 0.80). It is known that when the table's marginal totals are substantially unbalanced, kappa is low in spite of high agreement between raters.24 The recommendation has been made to either report proportionate agreement with respect to a positive (‘Yes’) decision and with respect to a negative (‘No’) decision,25 or kappa and percentage agreement.26 As is clear from Table 4, except for the decision whether the paper deals with human subjects, the per cent agreement was also low; therefore, kappa was used in Table 5.
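The effect of unbalanced marginal totals can be made concrete with a small calculation. The sketch below simplifies to a 2×2 table (‘Yes’ versus ‘No’ only, whereas the screening decisions in this study had three categories) and uses invented counts, not data from Table 4. It reports per cent agreement, kappa, and the proportionate agreement for positive and negative decisions; the function name `agreement_summary` is hypothetical.

```python
def agreement_summary(a, b, c, d):
    """Agreement statistics for two raters making Yes/No decisions.

    a = both 'Yes'; b = rater 1 'Yes', rater 2 'No';
    c = rater 1 'No', rater 2 'Yes'; d = both 'No'.
    """
    n = a + b + c + d
    if n == 0:
        raise ValueError("the table is empty")
    observed = (a + d) / n
    # Chance agreement from the marginal totals of the two raters.
    expected = ((a + b) * (a + c) + (c + d) * (b + d)) / n ** 2
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement is complete")
    if 2 * a + b + c == 0 or 2 * d + b + c == 0:
        raise ValueError("proportionate agreement is undefined for this table")
    return {
        "percent_agreement": observed,
        "kappa": (observed - expected) / (1.0 - expected),
        "p_pos": 2 * a / (2 * a + b + c),  # agreement on 'Yes' decisions
        "p_neg": 2 * d / (2 * d + b + c),  # agreement on 'No' decisions
    }


# Invented, strongly unbalanced table: 'Yes' dominates for both raters.
example = agreement_summary(a=85, b=5, c=5, d=5)
```

In this invented table the raters agree on 90 of 100 abstracts, yet kappa is well below 0.60, and the agreement on ‘No’ decisions is much weaker than on ‘Yes’ decisions. This is why reporting kappa together with per cent agreement, or the two proportionate agreements, has been recommended.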

The suggestion was made that the blame for the poor consensus lies with the abstracts, providing insufficient or ambiguous information. Is it likely that the problem is with the instructions the screeners received, or with the conscientiousness with which they followed these instructions? That is possibly part of the explanation – but one would assume that most searches for literature are done with a similar level of preparation and precision, and most with less. Our study indicates the upper level of performance that can be achieved in routine literature searches; in most situations, especially clinical ones, the level will be less: in searches done in preparation for patient care, education or research, there is no syllabus with unchanging instructions, explicit criteria, and a requirement to state an explicit judgment on four elements. Thus, many searches will bypass papers that are relevant, because the abstract fails to mention the pertinent information, or provides it in a way that is ambiguous. These searches also will lead to perusal of the full text of many papers, which will result in a rejection because the ultimate judgment based on full text information is ‘not relevant’.

Many studies have addressed the issue of finding published research using bibliographic data bases, especially finding randomized clinical trials (RCTs) needed for clinical applications or systematic reviews.27,28,29,30 The deficiencies noted (published RCTs not indexed at all; failure by Medline staff or other database indexers to index the fact that randomization was used, etc.) have led to hand searching of journals, and development of trials data bases, eg the Cochrane collection. The problem addressed in this paper was not the difficulty of finding papers, but deciding on applicability once they are found. The issues are related, however, and can be compared to the issues of sensitivity and specificity in diagnostic screening tests. Inability to find the existing studies that are (potentially) applicable to one's question is the problem of false negatives – these studies should have shown up as relevant, but did not. Inclusion of many studies that according to our search strategy had relevant information, but did not upon review of the abstract, is the problem of false positives. The latter problem is compounded by the fact that in many instances, the abstract was not sufficient to decide whether the paper in question was applicable to our research question (a true positive) or not (a true negative). In these cases the abstract was insufficient as a screening test, and a review of the full text of the paper was necessary to decide whether it was a ‘case’ – comparable to the need for a full battery of tests to diagnose a disease.

With the easy availability of abstracts in electronic data bases, the search for papers to answer clinical or other questions will likely more and more be based on a computer search, based on text words included in the abstract, or the index terms assigned to the paper by the abstracters. Because index term assignments may be based on the abstract only,31 completeness and accuracy of abstracts assumes increased importance. Several journals have developed guidelines that are disseminated to potential authors to help them summarize in the abstract all the relevant information in their papers, for instance JAMA.1 The structured abstract instructions offer a template of what is to be included, and where (ie under what heading) – although both sets of instructions may be disregarded.13 Word limitations on abstracts may make it nearly impossible to satisfy all criteria, while maintaining a minimal level of readability.

Taddio et al. mention the paradox of abstracts: the better (longer, more informative) they are, the less likely it is that a reader may want to read the entire paper.12 Longer, better abstracts may contain so much information that the reader may base clinical and other decisions on the abstract alone. However, doing so has been disapproved of unanimously – if for no other reason than that authors may put less of a gloss on their findings in the details of the ‘Discussion’ section than they do in the short sentence that summarizes the conclusion in the abstract. As Haynes et al. stated: structured abstracts have both been complimented and criticized for making reading the rest of an article superfluous.9

It has been claimed that with the full-text availability of journals (resulting from larger and cheaper storage capability of computers), full-text searching of text, rather than abstract searching, is feasible. It may be attractive to rely on the full text of papers to search for those applicable to a particular clinical or research question. However, more complete retrieval based on full text also brings with it the problem of more false positives – eg papers that to a search engine appear to concern both concept X and clinical population Y, but that only happen to mention X as not found in the population Y, which population the authors studied because of problem Z. We had this problem in selecting papers based on abstracts, and it is reasonable to expect it to be worse if the full text of papers is the basis for searching. Abstracts, if well written, have the advantage of containing all the key lexical elements of the full paper, without the ‘accidental’ ones. One study comparing abstract and full text lexical elements for 1103 papers published in four major medical journals concluded that ‘the abstracts do reflect the language of the article and thus are lexical, as well as intellectual, surrogates of the articles they describe’.32 The problem when it comes to expeditiously identifying literature may not be one of easier access to full texts, but abstracts that do a better job of summarizing the crucial substantive and methodological information on the study described.

Three recommendations can be made: Authors of papers should pay attention to the abstract, and make sure they make clear the nature of the subject or patient population studied, and the specific clinical problem or issue evaluated, diagnosed or treated. Journal editors and manuscript reviewers must insist that authors include the information necessary for efficient screening in the abstract. Readers should be aware of the pitfalls involved in identifying literature. They may want to work with a librarian to formulate a search strategy that optimizes the yield of their database searches.

References

1. Winker MA. The need for concrete improvement in abstract quality. JAMA 1999; 281: 1129–1130.

2. Estrada CA et al. Reporting and concordance of methodologic criteria between abstracts and articles in diagnostic test studies. J Gen Intern Med 2000; 15: 183–187.

3. Evans M & Pollock AV. Trials on trial. Review of trials of antibiotic prophylaxis. Arch Surg 1984; 119: 109–113.

4. Pocock SJ, Hughes MD & Lee RJ. Statistical problems in the reporting of clinical trials. A survey of three medical journals. N Engl J Med 1987; 317: 426–432.

5. Gotzsche PC. Methodology and overt and hidden bias in reports of 196 double-blind trials of nonsteroidal antiinflammatory drugs in rheumatoid arthritis. Control Clin Trials 1989; 10: 31–56.

6. Narine L, Yee DS, Einarson TR & Ilersich AL. Quality of abstracts of original research articles in CMAJ in 1989. CMAJ 1991; 144: 449–453.

7. Ad Hoc Working Group for Critical Appraisal of the Medical Literature. A proposal for more informative abstracts of clinical articles. Ann Intern Med 1987; 106: 598–604.

8. Harris P. A structured form of abstracts for Spinal Cord. Spinal Cord 1997; 35: 865.

9. Haynes RB et al. More informative abstracts revisited. Ann Intern Med 1990; 113: 69–76.

10. Froom P & Froom J. Deficiencies in structured medical abstracts. J Clin Epidemiol 1993; 46: 591–594.

11. Hartley J & Benjamin M. An evaluation of structured abstracts in journals published by the British Psychological Society. Br J Educ Psychol 1998; 68: 443–456.

12. Taddio A et al. Quality of nonstructured and structured abstracts of original research articles in the British Medical Journal, the Canadian Medical Association Journal and the Journal of the American Medical Association. CMAJ 1994; 150: 1611–1615.

13. Trakas K et al. Quality assessment of pharmacoeconomic abstracts of original research articles in selected journals. Ann Pharmacother 1997; 31: 423–428.

14. Harbourt AM, Knecht LS & Humphreys BL. Structured abstracts in MEDLINE, 1989–1991. Bull Med Libr Assoc 1995; 83: 190–195.

15. Hartley J & Sydes M. Are structured abstracts easier to read than traditional ones? J Res Reading 1997; 20: 122–136.

16. Spitzer WO. The structured sonnet. J Clin Epidemiol 1991; 44: 729.

17. Sanchez-Thorin JC, Cortes MC, Montenegro M & Villate N. The quality of reporting of randomized clinical trials published in Ophthalmology. Ophthalmology 2001; 108: 410–415.

18. Scherer RW & Crawley B. Reporting of randomized clinical trial descriptors and use of structured abstracts. JAMA 1998; 280: 269–272.

19. Pitkin RM, Branagan MA & Burmeister LF. Accuracy of data in abstracts of published research articles. JAMA 1999; 281: 1110–1111.

20. Pitkin RM & Branagan MA. Can the accuracy of abstracts be improved by providing specific instructions? A randomized controlled trial. JAMA 1998; 280: 267–269.

21. Haynes RB et al. Online access to MEDLINE in clinical settings. A study of use and usefulness. Ann Intern Med 1990; 112: 78–84.

22. Saint S et al. Journal reading habits of internists. J Gen Intern Med 2000; 15: 881–884.

23. Barry HC et al. Family physicians' use of medical abstracts to guide decision making: style or substance? J Am Board Fam Pract 2001; 14: 437–442.

24. Feinstein AR & Cicchetti DV. High agreement but low kappa: I. The problems of two paradoxes. J Clin Epidemiol 1990; 43: 543–549.

25. Cicchetti DV & Feinstein AR. High agreement but low kappa: II. Resolving the paradoxes. J Clin Epidemiol 1990; 43: 551–558.

26. Steinijans VW et al. Interobserver agreement: Cohen's kappa coefficient does not necessarily reflect the percentage of patients with congruent classifications. Int J Clin Pharmacol Ther 1997; 35: 93–95.

27. Derry S, Kong Loke Y & Aronson JK. Incomplete evidence: the inadequacy of databases in tracing published adverse drug reactions in clinical trials. BMC Med Res Methodol 2001; 1: 7.

28. Watson RJ & Richardson PH. Identifying randomized controlled trials of cognitive therapy for depression: comparing the efficiency of Embase, Medline and PsycINFO bibliographic databases. Br J Med Psychol 1999; 72: 535–542.

29. Dickersin K, Scherer R & Lefebvre C. Identifying relevant studies for systematic reviews. BMJ 1994; 309: 1286–1291.

30. Avenell A, Handoll HH & Grant AM. Lessons for search strategies from a systematic review, in The Cochrane Library, of nutritional supplementation trials in patients after hip fracture. Am J Clin Nutr 2001; 73: 505–510.

31. Lodge H. Improving the accuracy of abstracts in scientific articles. JAMA 1998; 280: 2071.

32. Ries JE et al. Comparing frequency of content-bearing words in abstracts and texts in articles from four medical journals: an exploratory study. Medinfo 2001; 10: 381–384.

Acknowledgements

Thanks to the abstract screeners: Thomas Bryce MD, Stacy Glass PT, Allison Gurwitz OT, Ralph Marino MD, Heather Muterspaw OT, Kate Parkin PT, Kristjan Ragnarsson MD, Carrie Schauer PT, Audrey Schmerzler RN, Mary Shea OT, Adam Stein MD and Sarah Zimmerman OT. Venecia Toribio, Adejoke Akimlamilo, Salma Akter and Jessie Gonzales printed abstracts, counted lines and/or entered data with great diligence. Dr Marino provided feedback on an earlier version of this paper. Drs Bryce, Marino, Ragnarsson and Stein are Co-investigators on the meta-analyses which produced some of the data. This work was supported in part by a grant from the National Institute on Disability and Rehabilitation Research (NIDRR), Office of Special Education Services, US Department of Education to Mount Sinai School of Medicine (H133N000027). The conclusions of this paper are not necessarily endorsed by the Co-investigators or NIDRR.

Cite this article

Dijkers, M. Searching the literature for information on traumatic spinal cord injury: the usefulness of abstracts. Spinal Cord 41, 76–84 (2003). https://doi.org/10.1038/sj.sc.3101414

DOI: https://doi.org/10.1038/sj.sc.3101414